Abstract

In this paper, a quantitative measure of partial observability is defined for PDEs. The quantity is proved to be consistent if the PDE is approximated using well-posed approximation schemes. A first order approximation of the unobservability index using an empirical Gramian is introduced. Several examples are presented to illustrate the concept of partial observability. The theory is developed for the estimation of initial values; however, the concept can be extended to the observability of state variables at the final time or at any fixed time.


1 Introduction

Observability is a fundamental property of dynamical systems [1, 2], and the literature on the subject is extensive. It can be regarded as a measure of the well-posedness of estimating system states from sensor information together with additional user knowledge about the system. We do not review this large literature here. Some interesting work can be found in [3–5] for nonlinear systems, [6] for partial differential equations (PDEs), [7] for stochastic systems, and [8] for observability normal forms. Other related work includes [9–11].

For some models of high dimensional systems, the traditional concept of observability is not applicable. For instance, models used in numerical weather prediction have millions of state variables. Some variables are strongly observable, while others are extremely weakly observable, and it is known that a sparse sensor network cannot make the entire system uniformly observable. Problems of this type call for a partial observability analysis, in which we study the observability of finitely many modes (or state variables) that are important for weather prediction and ignore the less important ones. In addition, it is desirable to measure observability quantitatively: it is not enough to determine whether a set of variables is observable; it is important to know how strong or weak the observability is. Of the large amount of data collected for weather prediction, only \(5\,\%\) or less is actually useful for each prediction. Finding a high-value dataset that improves the level of observability requires a quantitative measure of observability. When moving sensors are used, a quantitative measure of observability is fundamental for finding optimal sensor locations. Another issue with models of high dimensional systems is how to verify their observability in practice when the model is given as a numerical input-output function, such as a computer code, rather than as a set of differential equations. The definition of observability should therefore be computational, so that the concept can be verified numerically.

In [12, 13], a definition of partial observability is introduced using dynamic optimization. The concept was developed in a project on finding the best sensor locations for data assimilation, a computational technique widely used in numerical weather prediction. Unlike traditional definitions of observability, the one in [12, 13] addresses several issues collectively in a unified framework, including a quantitative measure of observability, partial observability, and the improvement of observability by using user knowledge. Moreover, computational methods of dynamic optimization provide practical tools for numerically approximating the observability of complicated systems that cannot be treated analytically.

In this paper, we extend a simplified version of the observability defined in [12, 13] to systems defined by PDEs. A quantitative measure of partial observability is natural for infinite dimensional systems such as PDEs. However, its computation is carried out using finite dimensional approximations. It is known that an approximation of a PDE by ordinary differential equations (ODEs) may not preserve observability, even if the approximation scheme is convergent and stable [6, 14]. Therefore, to develop the concept of partial observability for PDEs, it is important to understand its consistency under ODE approximations. In Sect. 2, some examples from the literature are introduced to illustrate the issues addressed in this paper. Observability is defined for PDEs in Sect. 3. In Sect. 4, a theorem on the consistency of observability is proved. Section 5 addresses the relationship between the unobservability index and an empirical Gramian, which provides a first order approximation of the observability. The theory is illustrated using examples in Sect. 6.

2 Some issues on observability for PDEs

Partial observability was introduced in [12, 13] for finite dimensional systems. In [15] the concept was applied to optimal sensor placement for numerical weather prediction. The idea was extended to systems defined by linear PDEs in [16]. In this paper, a simplified version of the partial observability defined in [12, 13] is adopted and generalized to some distributed parameter systems.

Before we introduce the concept and theorems, we first use a few simple examples to illustrate some issues to be addressed. Consider the initial value problem of a heat equation

Suppose the measured output is

for some \(x_0\in [0,L]\). In this example, we assume \(L=2\pi \), \(T=10\), and \(x_0=0.5\). The solution and its output have the following form

where the Fourier coefficients satisfy an ODE

Define

An \(N\)th order approximation of the original initial value problem with output is defined by a system of ODEs

A Gramian matrix [2] can be used to measure the observability of \(u^N_0\). More specifically, given \(N>0\) the observability Gramian is

Its smallest eigenvalue, \(\sigma ^N_{min}\), measures the observability of \(u^N_0\). A small value of \(\sigma ^N_{min}\) implies weak observability. If the maximum sensor error is \(\epsilon \), then the worst estimation error of \(u^N_0\) is bounded by

The system has infinitely many modes in its Fourier expansion but only a single output. The computation shows that this output makes the first mode observable; however, the observability decreases rapidly as the number of modes increases. From Fig. 1, for \(N=1\) we have \(\sigma ^N_{min}=1.216\times 10^{-1}\), which implies that \(\bar{u}_1(0)\) is reasonably observable. For \(N=8\), \(\sigma ^N_{min}\) is almost zero, i.e.

is extremely weakly observable, or practically unobservable. According to (2), a small sensor error then results in a large estimation error. For this problem, it is not important to achieve observability of the entire system; all we need is partial observability of the critical modes.
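The Gramian computation above can be sketched in Python with NumPy. The displayed model is not reproduced in this text, so the setup is an assumption: homogeneous Dirichlet conditions on \([0,L]\), the expansion \(u(x,t)=\sum _k \bar{u}_k(t)\sin (k\pi x/L)\) with \(\dot{\bar{u}}_k=-(k\pi /L)^2\bar{u}_k\), and the point sensor \(y(t)=u(x_0,t)\). With \(L=2\pi \), \(T=10\) and \(x_0=0.5\), this assumed setup gives \(\sigma ^1_{min}\approx 1.216\times 10^{-1}\), the value quoted above. The function names are illustrative.

```python
import numpy as np


def heat_gramian(n_modes, L=2 * np.pi, T=10.0, x0=0.5):
    """Observability Gramian of the first n_modes Fourier modes of u_t = u_xx.

    Assumed model: Dirichlet conditions on [0, L], modes sin(k*pi*x/L),
    point sensor y(t) = u(x0, t).
    """
    k = np.arange(1, n_modes + 1)
    lam = (k * np.pi / L) ** 2          # mode k decays like exp(-lam_k t)
    c = np.sin(k * np.pi * x0 / L)      # sensor weight of mode k
    s = lam[:, None] + lam[None, :]     # lam_j + lam_k
    # G_jk = c_j c_k * int_0^T exp(-(lam_j + lam_k) t) dt, evaluated exactly
    return np.outer(c, c) * (-np.expm1(-s * T)) / s


def sigma_min(n_modes, **kwargs):
    # eigvalsh returns the eigenvalues of a symmetric matrix in ascending order
    return np.linalg.eigvalsh(heat_gramian(n_modes, **kwargs))[0]


for n in (1, 2, 4, 8):
    sig = sigma_min(n)
    print(f"N={n}: sigma_min={sig:.3e}, 1/sqrt(sigma_min)={1 / np.sqrt(sig):.3e}")
```

Since \(||y||^2=\xi ^TG\xi \ge \sigma _{min}||\xi ||^2\) for an initial-value difference \(\xi \), the printed factor \(1/\sqrt{\sigma _{min}}\) is the worst-case ratio of estimation error to output error; in this sketch it grows by orders of magnitude between \(N=1\) and \(N=8\).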

For a simple system like the heat equation, we already know that the high modes decay rapidly and have little effect on the solution, so their observability is not important. One may choose a discretization scheme based on the important modes only, so that observability is achieved with a simplified model. However, for large and highly nonlinear systems with tens of thousands or even millions of state variables, such as those in numerical weather prediction or power network control, model reduction or a change of discretization scheme is almost impossible, because the models are already packaged as software and copyright issues are likely to be involved. For these types of applications, a quantitative measure of partial observability is useful for several reasons. If a finite number of modes suffices for an accurate approximation of the state, guaranteeing the observability of these modes is a practical solution. In large-scale networked systems of very high dimension, operators may focus on a local area during a given period of time. In this case, the observability of the entire system is irrelevant, and it suffices to achieve partial observability of the states directly related to the area of focus. For moving sensors, a quantitative measure of observability can be used as a cost function in finding optimal sensor locations at which the observability is maximized.

Another issue to be addressed in this paper is consistency. In general, the observability of a PDE is computed numerically using a system of ODEs as an approximation. However, it is not automatically guaranteed that the observability of the ODEs is consistent with that of the original PDE. In fact, a convergent discretization of a PDE may not preserve its observability. Take the following wave equation as an example

It is known that the total energy of the system can be estimated by using the energy concentrated on the boundary. However, in [6] it is proved that the discretized ODEs do not have the same observability. The energy of solutions is given by

This quantity is conserved along time. It is known that, when \(T>2L\), the total energy of solutions can be estimated uniformly by means of the energy concentrated on the boundary \(x=L\). More precisely, there exists \(C(T)>0\) such that

Now consider the discretized system using a finite difference method,

The total energy of the ODEs is given by

This quantity is conserved along the trajectories of the ODEs. The energy on the boundary is defined by

Because the solutions of (5) converge to the solutions of (3), we expect that the total energy of (5) can be uniformly estimated using the energy concentrated along the boundary, i.e. that the following inequality, similar to (4), holds for some \(C(T)\) uniformly in \(h\),

However, it is proved in [6] that this inequality is not true. In fact, the ratio between the total energy and the energy along the boundary is unbounded as \(h\rightarrow 0\). To summarize, the observability of a PDE is not necessarily preserved in its discretizations.
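The loss of uniform observability can be reproduced with a short NumPy computation. This is a sketch under assumptions not taken from the text: the domain is rescaled to \((0,1)\), \(T=2.5>2\), the semi-discrete scheme is the standard three-point finite difference \(u_j''=(u_{j+1}-2u_j+u_{j-1})/h^2\) with \(u_0=u_{N+1}=0\), the discrete energy is \(E_h=\frac{h}{2}\sum _{j=0}^{N}\left[ |u_j'|^2+|(u_{j+1}-u_j)/h|^2\right] \), and the boundary term is \(\int _0^T|u_N(t)/h|^2dt\), in the spirit of [6]. Each discrete eigenvector gives an exact solution \(\cos (\omega _kt)\varphi _k\), so the ratio of total energy to boundary energy can be evaluated in closed form.

```python
import numpy as np


def energy_ratio(N, k, T=2.5):
    """Total energy / boundary observation for the k-th discrete eigen-solution.

    Assumed scheme: u_j'' = (u_{j+1} - 2u_j + u_{j-1}) / h^2, j = 1..N,
    on (0, 1) with u_0 = u_{N+1} = 0 and h = 1 / (N + 1).
    """
    h = 1.0 / (N + 1)
    j = np.arange(1, N + 1)
    phi = np.sin(k * np.pi * j * h)                  # discrete eigenvector
    omega = (2.0 / h) * np.sin(k * np.pi * h / 2)    # discrete frequency
    # u_j(t) = cos(omega t) phi_j solves the scheme; the energy is conserved,
    # so evaluate it at t = 0, where it is purely potential.
    padded = np.concatenate(([0.0], phi, [0.0]))
    energy = 0.5 * h * np.sum((np.diff(padded) / h) ** 2)
    # int_0^T |u_N(t) / h|^2 dt, using int_0^T cos^2(omega t) dt in closed form
    time_integral = T / 2 + np.sin(2 * omega * T) / (4 * omega)
    boundary = (phi[-1] / h) ** 2 * time_integral
    return energy / boundary


for N in (10, 20, 40, 80, 160):
    low, high = energy_ratio(N, 1), energy_ratio(N, N)
    print(f"N={N:4d}: lowest mode {low:.3f}, highest mode {high:.1f}")
```

In this sketch the lowest-mode ratio stays near 0.2, while the highest-mode ratio grows roughly like \(h^{-2}\), so no constant \(C(T)\) independent of \(h\) can bound the total energy by the boundary energy for all solutions.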

In this paper, we introduce a quantitative measure of partial observability for PDEs. Sufficient conditions are proved for the consistency of the observability for well-posed discretization schemes.

3 Partial observability

Consider a nonlinear initial value problem

where \(\Omega \) is an open set in \({\mathbb {R}}^n\) and \(F\) is a continuous function of \(t\), \(u\), and the derivatives of \(u\) with respect to \(x\). Let \(X\) be a Banach space of functions defined on \(\Omega \). The initial condition, \(u_0\), lies in a subspace, \( V_0\), of \(X\). In the following, \(u(t)\) represents \(u(\cdot ,t)\). A solution \(u(t)\) of (6), in either the strict or the weak sense, is an \(X\)-valued function in a subspace \(V\) of \(C^0([0,T],X)\). If additional boundary conditions are required, we assume that all functions in \(V\) satisfy them. We assume that (6) is locally well-posed in the Hadamard sense ([17, 18]) around a nominal trajectory. More specifically, let \(u(t)\) be a nominal trajectory. We assume that there is an open neighborhood \(D_0\subset V_0\) that contains \(u(0)\) such that

- For any \(u_0\in D_0\), (6) has a solution.
- The solution is unique.
- The solution depends continuously on its initial value.

Proving the well-posedness of nonlinear PDEs is not easy in general. Nevertheless, for well-posed problems approximated by well-posed schemes, the consistency of observability is guaranteed (Theorem 1).

In (6), \(\mathcal{H}(u(\cdot ,t))\), or in short \(y_u(t)\), represents the output of the system associated with the solution \(u(x,t)\), where \(\mathcal{H}\) is a mapping, linear or nonlinear, from \(X\) to \({\mathbb {R}}^p\). We assume that \(y_u(\cdot )\) lies in a normed space of functions from \([t_0,t_1]\) to \({\mathbb {R}}^p\), whose norm is denoted by \(|| \cdot ||_Y\). We say that \(\mathcal{H}\) is continuous in a subset of \(C^0([0,T],X)\) if, for any sequence \(\{u_k(t)\}_{k=k_0}^\infty \) in the subset and any function \(u(t)\in V\),

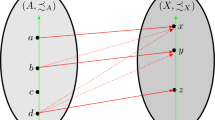

Instead of the entire state space, the observability is defined in a finite dimensional subspace. Let

be a subspace of \(V_0\) generated by a basis \(\{ e_1,e_2,\ldots ,e_s\}\). In the following, we analyze the observability of a component of \(u(0)\) using estimates from \(W\). Therefore \(W\) is called the space for estimation.

Let \(u(t)\) be a nominal trajectory satisfying (6). Suppose \(W\bigcap D_0\ne \emptyset \) and \(u(0)\) has a best estimate in \(W\bigcap D_0\), denoted by \(u_w(0)\), in the sense that \(u_w(t)\) minimizes the following output error,

Let \(u_r(t)=u(t)-u_w(t)\) be the remainder, then

If the output \(y_u(t)\) represents a sensor measurement, then it contains noise. The data used in an estimation process have the following form

where \(d(t)\) is the measurement error. The observability addressed in this paper is a quantity that describes the sensitivity of the estimation error to \(d(t)\). From (9), the best estimate \(u_w(t)\) has an error equal to the remainder, \(||u_r(0)||_X\). This error is not caused by \(d(t)\), because the remainder cannot be reduced however accurately the output is measured; it is due to the choice of \(W\), not to the observability of \(W\). Therefore, observability is defined for \(u_w(0)\) only, hence the term partial observability. Even if \(u_w(0)\) is strongly observable, its estimate may not be close to \(u(0)\) when the remainder \(u_r(0)\) is large. If the goal is to estimate \(u(0)\), an observable \(u_w(0)\) is useful only if \(u_r(0)\) is either known or small. In the rest of the paper, we assume \(u_r(0)=0\), i.e. \(u(0)\in W\). In applications, the concept is applicable to \(u(0)\) with a small \(u_r(0)\) (see, for instance, [15]).

Definition 1

Let \(u\) be a nominal trajectory of (6). Suppose

For a given \(\rho >0\), suppose the sphere in \(W\) of radius \(\rho \) centered at \(u(0)\) is contained in \(D_0\). We define

where \(\hat{u}\) satisfies

Then \(\rho /\epsilon \) is called the unobservability index of \(u(0)\) along the trajectory \(u(t)\) at the level of \(\rho \).

Remark

The ratio \(\rho /\epsilon \) can be interpreted as follows: if the maximum error of the measured output, or sensor error, is \(\epsilon \), then the worst estimation error of \(u(0)\) is \(\rho \). Therefore, a small value of \(\rho /\epsilon \) implies strong observability of \(u(0)\). Unlike most traditional definitions of observability, Definition 1 uses a subspace \(W\) of the state space. In the special case in which the state space is finite dimensional, \(W\) equals the entire state space, and the system is linear, \(\epsilon ^2/\rho ^2\) equals the smallest eigenvalue of the observability Gramian (see Sect. 5).
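For a finite dimensional linear system, \(\epsilon \) in Definition 1 can be computed by brute force and compared with the Gramian characterization in the Remark. The sketch makes several assumptions not in the text: a hypothetical two-state system \(\dot{x}=Ax\), \(y=Cx\); \(W\) equal to the whole state space with the Euclidean norm; an \(L^2(0,T)\) output norm approximated by the trapezoidal rule; and a dense sampling of the circle \(||\hat{x}(0)-x(0)||=\rho \) in place of a proper optimizer. Because the system is linear, the output difference depends only on \(\hat{x}(0)-x(0)\), so the nominal trajectory drops out.

```python
import numpy as np
from scipy.linalg import expm

# Hypothetical example system (not from the text)
A = np.array([[-0.5, 1.0], [0.0, -1.0]])
C = np.array([[1.0, 0.0]])
T, n_t, rho = 10.0, 2001, 0.1

t = np.linspace(0.0, T, n_t)
w = np.full(n_t, t[1] - t[0])            # trapezoidal weights
w[0] = w[-1] = 0.5 * (t[1] - t[0])
Y = np.vstack([C @ expm(A * ti) for ti in t])   # rows C exp(A t_i), shape (n_t, 2)


def output_error(delta):
    """||y_hat - y||_Y for an initial-state difference delta (linear system)."""
    y = Y @ delta
    return np.sqrt(np.sum(w * y**2))


# epsilon in Definition 1: minimum output error over the sphere of radius rho
theta = np.linspace(0.0, 2 * np.pi, 3600, endpoint=False)
eps_brute = min(output_error(rho * np.array([np.cos(a), np.sin(a)])) for a in theta)

# Gramian with the same quadrature: G = sum_i w_i (C e^{A t_i})^T (C e^{A t_i})
G = Y.T @ (w[:, None] * Y)
eps_gram = rho * np.sqrt(np.linalg.eigvalsh(G)[0])

print(f"epsilon (brute force) = {eps_brute:.6f}")
print(f"epsilon (Gramian)     = {eps_gram:.6f}")
print(f"unobservability index rho/epsilon = {rho / eps_gram:.3f}")
```

The two values of \(\epsilon \) agree closely. For a nonlinear system, the same minimization would be carried out over simulated trajectories, which is the dynamic-optimization form of Definition 1.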

Remark

The effectiveness of this concept is verified in [15] using Burgers’ equation. A 4D-Var data assimilation, a method of state estimation in weather prediction, is applied to several data sets from different sensor locations. The results show that data from sensor locations with a smaller unobservability index yield more accurate estimates than data from sensor locations with higher unobservability indices.

To numerically compute a system’s observability, (6) is approximated using ODEs. In this paper, we consider a general approximation scheme using a sequence of ODEs,

and two sequences of linear mappings

The norm in \({\mathbb {R}}^N\) is represented by \(||\cdot ||_N\). We assume that the approximation scheme is well-posed, more specifically

- For any bounded set \(B\) in \(D_0\), there exist \(M>0\) and \(\alpha >0\) so that any solution of (6) with \(u(0)\in B\) satisfies

$$\begin{aligned} ||u(t)-\Phi ^N(u^N(t))||_X \le \displaystyle \frac{M}{N^\alpha } \end{aligned}$$(14)

uniformly in \([0, T]\), where \(P^N(u(0))=u^N(0)\).

- For any \(u\in W\),

$$\begin{aligned}&||u||_X=||P^N(u)||_N+a_N||P^N(u)||_N\nonumber \\&\displaystyle \lim _{N\rightarrow \infty }a_N=0 \end{aligned}$$(15)

Given the space for estimation \(W\), we define a sequence of subspaces, \(W^N \subseteq {\mathbb {R}}^N\), by

They are used as the space for estimation in \({\mathbb {R}}^N\). If \(\{e_1,e_2,\ldots ,e_s\}\) is a basis of \(W\), then their projections to \(W^N\) are denoted by

So \(W^N=\text{ span }\{ e_1^N, e_2^N,\ldots ,e_s^N\}\).

Example

For a spectral method, approximate solutions can be expressed in terms of an orthonormal basis

For any function,

one can define

Obviously, \(\Phi ^N\) is defined by

If the \(l^2\)-norm is used in every \({\mathbb {R}}^N\), then \(||\cdot ||_N\) is consistent with \(||\cdot ||_X\) for \(X=L^2(\Omega )\). Typically, the space for estimation consists of finitely many modes

\(\square \)
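The spectral maps can be sketched as follows, assuming \(\Omega =(0,\pi )\), the orthonormal basis \(\phi _k(x)=\sqrt{2/\pi }\sin (kx)\) of \(L^2(0,\pi )\), \(P^N(u)=(<u,\phi _1>,\ldots ,<u,\phi _N>)\), \(\Phi ^N(v)=\sum _k v_k\phi _k\), and the \(l^2\) norm on \({\mathbb {R}}^N\). The test function \(u(x)=x(\pi -x)\), with \(||u||_{L^2}=\sqrt{\pi ^5/30}\), and the Gauss-Legendre quadrature are illustrative choices. By Bessel's inequality and Parseval's identity, \(||P^N(u)||_N\) increases to \(||u||_{L^2}\), and for \(u\) in a space of finitely many modes the norms agree exactly once \(N\) covers those modes.

```python
import numpy as np

# Gauss-Legendre quadrature on (0, pi) for the L^2 inner product
nodes, weights = np.polynomial.legendre.leggauss(400)
x = 0.5 * np.pi * (nodes + 1.0)
w = 0.5 * np.pi * weights


def phi(k):
    """Orthonormal sine basis of L^2(0, pi) (assumed choice of basis)."""
    return np.sqrt(2.0 / np.pi) * np.sin(k * x)


def P(u_vals, N):
    """P^N: the first N expansion coefficients <u, phi_k>."""
    return np.array([np.sum(w * u_vals * phi(k)) for k in range(1, N + 1)])


def Phi(v):
    """Phi^N: synthesize a function (on the quadrature nodes) from coefficients."""
    return sum(vk * phi(k) for k, vk in enumerate(v, start=1))


def l2_norm(u_vals):
    return np.sqrt(np.sum(w * u_vals**2))


u = x * (np.pi - x)                      # illustrative test function
exact = np.sqrt(np.pi**5 / 30)           # ||u|| in L^2(0, pi)
for N in (1, 5, 20, 80):
    print(N, np.linalg.norm(P(u, N)), exact)

# For u_w in span{phi_1, phi_2, phi_3}, Phi^N(P^N(u_w)) = u_w once N >= 3
u_w = 2.0 * phi(1) - phi(3)
print(np.linalg.norm(P(u_w, 10)), l2_norm(Phi(P(u_w, 10))), l2_norm(u_w))
```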

Example

Some approximation methods, such as finite difference and finite element, are based on a grid defined by a set of points in space,

and a basis \(\{ q_k^N \}\) satisfying

In this case, the mappings in the approximation scheme are defined as follows

The inner product in \({\mathbb {R}}^N\) can be induced from \(L^2(\Omega )\), i.e. for \(u, v\in {\mathbb {R}}^N\),

If

then the norms \(||\cdot ||_N\) and \(||\cdot ||_X\) satisfy the consistency assumption (15). \(\square \)
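For the grid-based case, a minimal sketch assuming piecewise-linear hat functions \(q_k^N\) on a uniform grid of \((0,\pi )\) with zero boundary values, \(P^N(v)=(v(x_1),\ldots ,v(x_N))\) (nodal sampling) and \(\Phi ^N(u)=\sum _k u_kq_k^N\). Inducing the inner product from \(L^2\) gives \(<u,v>_N=u^TMv\) with the tridiagonal mass matrix \(M_{kl}=\int q_kq_l\,dx\), whose rows are \((h/6)(1,4,1)\). The hat basis and the test function \(\sin x\) are assumptions made for illustration.

```python
import numpy as np


def mass_matrix(N, length=np.pi):
    """L^2 Gram matrix of the N interior hat functions on a uniform grid."""
    h = length / (N + 1)
    main = np.full(N, 4.0 * h / 6.0)     # int q_k^2 dx = 2h/3
    off = np.full(N - 1, h / 6.0)        # int q_k q_{k+1} dx = h/6
    return np.diag(main) + np.diag(off, 1) + np.diag(off, -1)


def induced_norm(u, length=np.pi):
    """||u||_N = sqrt(u^T M u), i.e. the L^2 norm of Phi^N(u)."""
    M = mass_matrix(len(u), length)
    return np.sqrt(u @ M @ u)


# P^N(v): sample v = sin at the interior grid points of (0, pi)
for N in (10, 50, 200):
    grid = np.linspace(0.0, np.pi, N + 2)[1:-1]
    print(N, induced_norm(np.sin(grid)), np.sqrt(np.pi / 2))
```

In this sketch \(||P^N(v)||_N\) approaches \(||v||_{L^2}=\sqrt{\pi /2}\), with an error that decreases roughly like \(h^2\).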

Following [12], we define the observability for ODE systems.

Definition 2

Let \(\rho >0\) and let \(u^N(t)\) be a trajectory of (12) with \(u^N(0)\in W^N\). Denote \(\hat{y}^N(t)=\mathcal{H}\circ \Phi ^N(\hat{u}^N(t))\) and \(y^N(t)=\mathcal{H}\circ \Phi ^N(u^N(t))\). Let

where \(\hat{u}^N\) satisfies

Then \(\rho /\epsilon ^N\) is called the unobservability index of \(u^N(0)\) in the space \(W^N\).

4 The consistency of observability

In this section, we prove the consistency of observability.

Theorem 1

(Consistency) Suppose the initial value problem (6) and its approximation scheme (12)–(13) are well-posed. Suppose \(\mathcal{H}\) satisfies the continuity assumption (7) in \(C^0([0,T],\bigcup _{N=N_0}^\infty \Phi ^N({\mathbb {R}}^N))\) for some integer \(N_0>0\). Consider a nominal trajectory \(u(t)\) of (6) and its corresponding trajectory \(u^N(t)\) of (12) with an initial value \(u^N(0)=P^N(u(0))\). Assume that the sphere in \(W^N\) centered at \(u^N(0)\) with radius \(\rho \) is contained in \(P^N(D_0)\). Then,

To prove this theorem we need two lemmas.

Lemma 1

Let \(\{ v^N(t)\}_{N=N_0}^\infty \) be a sequence in which each \(v^N(t)\) is a solution of (12) with \(v^N(0)\in P^N(D_0)\bigcap W^N\), where \(N_0>0\) is an integer. If \(\{ ||v^N(0)||_N\}_{N_0}^\infty \) is bounded and \(\Phi ^N(v^N(0))\) converges to \(v(0)\in D_0\), where \(v(t)\) is a solution of (6), then \(\Phi ^N(v^N(t))\) converges to \(v(t)\) uniformly for \(t\in [0,T]\).

Proof

Let \(\tilde{v}_N(t)\) be the solution of the PDE (6) such that \(\tilde{v}_N(0) \in D_0\bigcap W\) and \(P^N(\tilde{v}_N(0))= v^N(0)\). Note that we do not assume \(\Phi ^N(P^N(\tilde{v}_N(0)))=\tilde{v}_N(0)\), although this holds in many approximation schemes. The set \(\{ \tilde{v}_N(0)\}\) must be bounded in \(X\) because of (15) and the assumption that \(\{ ||v^N(0)||_N\}_{N_0}^\infty \) is bounded. Therefore, (14) implies

converges uniformly. In particular,

Because \(\Phi ^N(v^N(0))\) converges to \(v(0)\in D_0\), we know that \(\tilde{v}_N(0)\) converges to \(v(0)\). Because the solutions of the PDE depend continuously on their initial values (well-posedness),

uniformly in \(t\). Equations (23), (24) and the triangle inequality

imply

uniformly in \(t\). \(\square \)

Lemma 2

Let \(\hat{u}^{N}(t)\), \(N\ge N_0\), be a sequence satisfying (21). Then there exists a subsequence \(\hat{u}^{N_k}(t)\) such that \(\{ \Phi ^{N_k}(\hat{u}^{N_k}(t))\}_{k=1}^\infty \) converges uniformly to a solution of (11) as \(N_k\rightarrow \infty \).

Proof

For each \(\hat{u}^N(t)\), there exists a trajectory \(\hat{u}_N(t)\) of the PDE (6) satisfying \(\hat{u}_N(0)\in D_0\bigcap W\) and \(P^N(\hat{u}_N(0))=\hat{u}^N(0)\). From the consistency of norms, (15), and the fact \(||\hat{u}^N(0)||_N=\rho \), we know that \(\{\hat{u}_N(t)\}_{N_0}^\infty \) is a bounded set in \(W\). The convergence assumption (14) implies

As a bounded set in the finite dimensional space \(W\), \(\{\hat{u}_N(t)\}_{N_0}^\infty \) has a convergent subsequence \(\{\hat{u}_{N_k}(t)\}_{k=1}^\infty \). Let \(\hat{u}(0)\) be its limit and \(\hat{u}(t)\) be the corresponding trajectory of (6). From (25), we know

From Lemma 1, \(\{ \Phi ^{N_k}(\hat{u}^{N_k})\}_{k=1}^\infty \) converges to \(\hat{u}(t)\) uniformly in \([0,T]\). Because of (15),

Therefore, \(\hat{u}(t)\) satisfies (11) and \(\{ \Phi ^{N_k}(\hat{u}^{N_k})\}_{k=1}^\infty \) converges uniformly to a solution of (11). \(\square \)

Proof of Theorem 1

First, we prove

Suppose this is not true; then \(\liminf \epsilon ^N < \epsilon \), and there exist \(\alpha >0\) and a subsequence \(N_k\rightarrow \infty \) so that

for all \(N_k\). From the definition of \(\epsilon ^N\), there exist \(\hat{u}^{N_k}(t)\) satisfying (21) such that

where

From Lemma 2, we can assume (passing to a further subsequence if necessary) that \(\Phi ^{N_k}(\hat{u}^{N_k}(t))\) converges to \(\hat{u}(t)\) uniformly, where \(\hat{u}(t)\) satisfies (11). From the continuity of \(\mathcal{H}\) as defined in (7),

However, from the definition of \(\epsilon \), we know

A contradiction is found. Therefore, (26) must hold.

In the next step, we prove

It suffices to prove the following statement: for any \(\alpha >0\), there exists \(N_1>0\) so that

for all \(N\ge N_1\). From the definition of \(\epsilon \), there exists \(\hat{u}\) satisfying (11) so that

Let \(\hat{u}^N\) be a solution of the ODE

with an initial value

Then the following limit converges uniformly

A problem with \(\hat{u}^N(0)\) is that its distance to \(u^N(0)\) may not be \(\rho \), which is required by (21). Let \(\bar{u}(t)\) be a solution of (12) with an initial value

Obviously

and \(\bar{u}(t)\) satisfies (21). Due to the consistency of the norms and the fact \(||\hat{u}(0)-u(0)||_X=\rho \), we know

By Lemma 1, the limit

converges uniformly on the interval \(t \in [0,T]\). Let \(\bar{y}^N(t)=\mathcal{H}(\Phi ^N(\bar{u}^N(t)))\) and \( y^N(t)=\mathcal{H}(\Phi ^N(u^N(t)))\). Then the output continuity assumption (7) implies

There exists \(N_1>0\) so that

for all \(N\ge N_1\). From the definition of \(\epsilon ^N\), we know

for all \(N\ge N_1\). Therefore, (27) holds.

To summarize, the inequalities (26) and (27) imply

\(\square \)

5 The empirical Gramian

In this section, we assume that \(W^N\) and the space of \(y(t)\) are both Hilbert spaces with inner products \(<\cdot ,\cdot >_N\) and \(<\cdot ,\cdot >_Y\), respectively. The squared ratio, \((\epsilon ^N/\rho )^2\), is approximately equal to the smallest eigenvalue of a Gramian matrix. More specifically, let

be an orthonormal basis of \(W^N\). Let \(u^{+}_i(t)\) and \(u^{-}_i(t)\) be solutions of (12) satisfying

Define

The empirical Gramian is defined by

For a small value of \(\rho \), the unobservability index is approximated by

where \(\sigma _{min}\) is the smallest eigenvalue of \(G\). For linear ODEs, this approximation is accurate because it can be shown that

Compared with linear control theory, \(G\) coincides with the observability Gramian if \(W^N\) is the entire space and \(y(t)\) lies in an \(L^2\)-space.
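The empirical Gramian can be sketched for a generic simulator with SciPy's `solve_ivp`. The entry formula used here, \(G_{ij}=<\Delta y_i,\Delta y_j>_Y/(4\rho ^2)\) with \(\Delta y_i=y(u^+_i)-y(u^-_i)\) and \(u^{\pm }_i(0)=u^N(0)\pm \rho e^N_i\), is an assumed normalization chosen to match the Remark in Sect. 3 (so that \(\rho /\epsilon \approx 1/\sqrt{\sigma _{min}}\)). The trapezoidal \(L^2\) output norm, the test systems and all function names are hypothetical. For a linear system the central differences are exact, so the result should agree with the observability Gramian evaluated with the same quadrature.

```python
import numpy as np
from scipy.integrate import solve_ivp
from scipy.linalg import expm


def trapezoid_weights(t):
    """Quadrature weights so that sum(w * f) approximates int f dt."""
    w = np.zeros_like(t)
    dt = np.diff(t)
    w[:-1] += dt / 2
    w[1:] += dt / 2
    return w


def empirical_gramian(rhs, output, u0, basis, rho, t):
    """Empirical Gramian on span(basis) (orthonormal columns) around u0.

    G_ij = <dy_i, dy_j>_Y / (4 rho^2), dy_i = y(u0 + rho e_i) - y(u0 - rho e_i).
    """
    w = trapezoid_weights(t)

    def outputs(x0):
        sol = solve_ivp(rhs, (t[0], t[-1]), x0, t_eval=t, rtol=1e-10, atol=1e-12)
        return np.array([output(x) for x in sol.y.T])   # shape (len(t), p)

    dy = [outputs(u0 + rho * e) - outputs(u0 - rho * e) for e in basis.T]
    s = len(dy)
    G = np.empty((s, s))
    for i in range(s):
        for j in range(s):
            G[i, j] = np.sum(w[:, None] * dy[i] * dy[j]) / (4 * rho**2)
    return G


# Linear check with a hypothetical system x' = A x, y = C x
A = np.array([[-0.5, 1.0], [0.0, -1.0]])
C = np.array([[1.0, 0.0]])
t = np.linspace(0.0, 10.0, 501)
G_emp = empirical_gramian(lambda _, x: A @ x, lambda x: C @ x,
                          np.array([1.0, -1.0]), np.eye(2), 0.05, t)
Y = np.vstack([C @ expm(A * ti) for ti in t])            # rows C e^{A t_i}
G_lin = Y.T @ (trapezoid_weights(t)[:, None] * Y)
print(np.round(G_emp, 6))
print(np.round(G_lin, 6))

# Nonlinear use: hypothetical pendulum x1' = x2, x2' = -sin(x1), angle measured
G_pend = empirical_gramian(lambda _, x: np.array([x[1], -np.sin(x[0])]),
                           lambda x: x[:1], np.array([0.5, 0.0]), np.eye(2), 1e-3, t)
print("approx. unobservability index:", 1 / np.sqrt(np.linalg.eigvalsh(G_pend)[0]))
```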

If \(\{e_1^N,e_2^N,\ldots ,e_s^N\}\) is not an orthonormal basis, we can modify the approximation as follows. Define

Let \(\sigma _{min}\) be the smallest eigenvalue of \(G\) relative to \(S\), i.e.

for some nonzero \(\xi \in {\mathbb {R}}^s\). Then

The approximation is accurate for linear systems.
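When the basis is not orthonormal, finding the smallest eigenvalue of \(G\) relative to \(S\) is a symmetric-definite generalized eigenproblem, which `scipy.linalg.eigh` solves when given both matrices. The sketch assumes \(S_{ij}=<e_i^N,e_j^N>_N\) with the Euclidean inner product and uses a hypothetical quadratic form \(Q\) in place of a simulated system (for a linear system, the empirical Gramian on the basis is \(G=E^TQE\)). It also checks that orthonormalizing the basis by a QR factorization gives the same \(\sigma _{min}\).

```python
import numpy as np
from scipy.linalg import eigh

rng = np.random.default_rng(0)
n, s = 6, 3

# Hypothetical quadratic form: for a linear system, ||y_hat - y||_Y^2 = d^T Q d
B = rng.standard_normal((n, n))
Q = B.T @ B + 0.1 * np.eye(n)

# Non-orthonormal basis e_1^N, ..., e_s^N of W^N (columns of E)
E = rng.standard_normal((n, s))
G = E.T @ Q @ E          # what the empirical Gramian returns for a linear system
S = E.T @ E              # S_ij = <e_i^N, e_j^N>_N (Euclidean inner product)

# Smallest sigma with G xi = sigma S xi (eigenvalues are returned in ascending order)
sigma_gen = eigh(G, S, eigvals_only=True)[0]

# Same value after orthonormalizing the basis with a QR factorization
U, _ = np.linalg.qr(E)
sigma_orth = np.linalg.eigvalsh(U.T @ Q @ U)[0]

print(sigma_gen, sigma_orth, "approx. index:", 1 / np.sqrt(sigma_gen))
```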

For the heat equation approximated using (1), the associated mappings can be defined by

If we want to find the observability of the first \(s\) modes, Definition 2 is equivalent to the analysis using the traditional observability Gramian for \(N=s\). In fact, for all \(N\ge s\), \(G\) is a constant matrix and

Therefore, \(\epsilon ^N=\epsilon ^s\) for all \(N\ge s\), and \(\epsilon ^N\) is consistent.
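A short derivation shows why \(\epsilon ^N\) does not depend on \(N\) once \(N\ge s\). Since the displayed model is not reproduced here, it assumes that the \(N\)-mode system is diagonal, \(\dot{\bar{u}}_k=-\lambda _k\bar{u}_k\), with output \(y=\sum _{k=1}^N c_k\bar{u}_k\) (for Dirichlet conditions on \([0,2\pi ]\) and a point sensor at \(x_0\), this would mean \(\lambda _k=k^2/4\) and \(c_k=\sin (kx_0/2)\)), that \(W^N\) is spanned by the first \(s\) unit vectors, and that the output norm is the \(L^2(0,T)\) norm. Perturbing the initial value by \(\pm \rho \) along the \(i\)th unit vector changes only mode \(i\), so all modes \(k\ne i\), including those with \(k>s\), cancel in \(\Delta y_i\):

$$\begin{aligned} \Delta y_i(t)&=y(u_i^{+}(t))-y(u_i^{-}(t))=2\rho \,c_i\,e^{-\lambda _i t},\qquad i=1,\ldots ,s,\\ G_{ij}&=\frac{1}{4\rho ^2}\int _0^T\Delta y_i(t)\,\Delta y_j(t)\,dt=c_ic_j\,\frac{1-e^{-(\lambda _i+\lambda _j)T}}{\lambda _i+\lambda _j},\qquad 1\le i,j\le s. \end{aligned}$$

Neither expression involves \(N\), and since the system is linear, the Gramian approximation of the unobservability index is exact in this case.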

6 Examples

In this section, some examples are used to illustrate Definition 1. In the literature, a measure of controllability for linear systems is defined through controllability radii, i.e. the distance from a system to the set of uncontrollable systems [19–21]. By duality, we can define the observability radius, \(\gamma _o\), as the distance between the system and the set of unobservable systems. This radius is defined primarily as a measure of the robustness of observability, although it agrees with the partial observability defined above in some filtering problems. Using the following example, we illustrate the similarities and differences between \(\gamma _o\) and the unobservability index.

Example 1

Consider a linear system

where \(\delta \) is a constant. In [19], it is proved that, using the Euclidean norm, we have

For a small \(\delta \), the observability of the system can be qualitatively changed by a small perturbation, i.e. the observability is not robust. This makes sense because, when \(\delta =0\), the system is unobservable. Consider the observability of \(x(T)\) for some fixed time \(T\). The solution of (34) is

It is straightforward to derive the norm of \(y\)

where

Therefore, the unobservability index of \(x(T)\) is

where \(\sigma ^2_{min}\) is the smallest eigenvalue of the matrix in (35). It can be shown that

If \(T\) is large, then

Because \(\gamma _o=\delta \), (36) implies that the observability of \(x(T)\) defined in this paper coincides with the radius of observability \(\gamma _o\).

Although \(\gamma _o\) reflects the observability of the states at \(t=T\), \(\rho /\epsilon \) and \(\gamma _o\) have some fundamental differences. The value of \(\gamma _o\) is the radius of observability, which is a measure of the robustness of observability. It does not reveal the observability of \(x(t)\) for \(t\ne T\). In fact, for a small value of \(\delta \), the initial state \(x(0)\) can still be strongly observable if \(T\) is large, assuming that \(\delta \) is a known constant. Another difference is that \(\rho /\epsilon \) is defined for the partial observability of a large system, which is not reflected by \(\gamma _o\). \(\square \)

In the following example, we use Burgers’ equation to illustrate partial observability in a subspace based on the Fourier expansion.

Example 2

Consider the following Burgers’ equation

where \(L=2\pi \), \(T=5\), and \(\kappa =0.14\). The output space consists of functions representing data from three sensors that measure the value of \(u(x,t)\) at fixed locations

where \(\Delta t=T/N_t\), \(N_t=20\). Figure 2 shows a solution with discrete sensor measurements marked by the stars. In the output space

The approximation scheme is based on equally spaced grid-points

where

System (37) is discretized using a central difference method

where \(u^N_0=u^N_{N}=0\). For any \(v(x)\in C([0,L])\), we define

For any \(v^N\in {\mathbb {R}}^{N-1}\), let

be the unique cubic spline determined by \(v^N\) and \((x_0,x_1,\ldots ,x_N)\) that satisfies \(v(0)=v(L)=0\). We adopt the \(L^2\)-norm in \(C[0, L]\). For any vector \(v^N \in {\mathbb {R}}^{N-1}\), its norm is defined as

The space for estimation is defined to be

In this example, \(K_F=2\), which means that we want to find the observability of the first five modes in the Fourier expansion of \(u(0)\) or, equivalently, the observability of

Define

then

In this example, the nominal trajectory has the following initial value

Its solution is shown in Fig. 2. To approximate its observability, we apply the empirical Gramian method to (39) in the space \(W^N\). The ratio \(\rho /\epsilon ^N\) is approximated for \(N=4k\), \(5\le k \le 21\). The unobservability index approaches (Fig. 3)

\(\square \)
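A sketch of the kind of computation used in Example 2, with several hypothetical ingredients labeled as such: the form \(u_t+uu_x=\kappa u_{xx}\) with \(u(0,t)=u(L,t)=0\) (an assumption, since (37) is not reproduced here), the three sensor locations, the nominal initial value, a stand-in space for estimation spanned by the five sine modes \(\sin (kx/2)\), \(k=1,\ldots ,5\), the grid inner product \(<u,v>_N=h\,u^Tv\) in place of the spline-based norm, and a plain Euclidean norm on the sampled sensor values. Only \(L=2\pi \), \(T=5\), \(\kappa =0.14\), the sampling times \(t=i\Delta t\) with \(\Delta t=T/20\), and \(N=64=4\cdot 16\) (within the range \(N=4k\), \(5\le k\le 21\)) follow the text. The printed index is therefore not the value reported in Fig. 3.

```python
import numpy as np
from scipy.integrate import solve_ivp

L, T, kappa = 2 * np.pi, 5.0, 0.14       # values from the text
N = 64                                    # number of grid intervals
h = L / N
x = np.linspace(0.0, L, N + 1)[1:-1]      # interior points x_1 .. x_{N-1}
t_obs = np.linspace(0.0, T, 21)           # t = i * dt, dt = T / 20
sensor_x = [1.0, 2.5, 4.0]                # hypothetical sensor positions
sensor_idx = [int(np.argmin(np.abs(x - xs))) for xs in sensor_x]


def burgers_rhs(_, u):
    """Central differences for u_t + u u_x = kappa u_xx, u = 0 at both ends (assumed form)."""
    up = np.concatenate(([0.0], u, [0.0]))
    ux = (up[2:] - up[:-2]) / (2 * h)
    uxx = (up[2:] - 2 * up[1:-1] + up[:-2]) / h**2
    return -u * ux + kappa * uxx


def sensor_data(u0):
    sol = solve_ivp(burgers_rhs, (0.0, T), u0, t_eval=t_obs, rtol=1e-8, atol=1e-10)
    return sol.y[sensor_idx, :]            # shape (3, 21)


# Stand-in space for estimation: five sine modes, orthonormal for <u, v> = h u.v
basis = np.array([np.sin(k * x / 2) for k in range(1, 6)]).T / np.sqrt(np.pi)
u0 = np.sin(x / 2) + 0.5 * np.sin(x)      # hypothetical nominal initial value
rho = 1e-3

# Empirical Gramian from central differences of the sensor data
dy = [sensor_data(u0 + rho * e) - sensor_data(u0 - rho * e) for e in basis.T]
G = np.array([[np.sum(a * b) for b in dy] for a in dy]) / (4 * rho**2)
sigma_min = np.linalg.eigvalsh(G)[0]
print("approx. unobservability index rho/eps:", 1 / np.sqrt(sigma_min))
```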

7 Conclusions

A definition of partial observability based on dynamic optimization is introduced for PDEs. The advantage of this definition is that it addresses several issues and concerns about observability in a unified framework. More specifically, the concept provides a quantitative measure of partial observability for PDEs, and this measure can be computed numerically using well-posed approximation schemes, which is a practical feature for infinite dimensional systems. It is mathematically proved that the approximated partial observability is consistent with that of the original PDE. A first order approximation is derived using empirical Gramian matrices. The concept is illustrated using several examples.

References

[1] Isidori A (1995) Nonlinear control systems. Springer, London

[2] Kailath T (1980) Linear systems. Prentice-Hall, Englewood Cliffs

[3] Gauthier JP, Kupka IAK (1994) Observability and observers for nonlinear systems. SIAM J Control Optim 32:975–994

[4] Hermann R, Krener AJ (1977) Nonlinear controllability and observability. IEEE Trans Autom Control 22:728–740

[5] Xia X, Gao W (1989) Nonlinear observer design by observer error linearization. SIAM J Control Optim 27:199–216

[6] Infante JA, Zuazua E (1999) Boundary observability for the space semi-discretizations of the 1-d wave equation. ESAIM Math Model Numer Anal (M2AN) 33(2):407–438

[7] Mohler RR, Huang CS (1988) Nonlinear data observability and information. J Frankl Inst 325:443–464

[8] Zheng G, Boutat D, Barbot JP (2007) Single output dependent observability normal form. SIAM J Control Optim 46(6):2242–2255

[9] El Jai A, Simon MC, Zerrik E (1993) Regional observability and sensor structures. Sens Actuators A 39(2):95–102

[10] Krener AJ, Ide K (2009) Measures of unobservability. In: Proceedings of the IEEE conference on decision and control, Shanghai, China, December 2009

[11] Tricaud C, Chen Y (2012) Optimal mobile sensing and actuation policies in cyber-physical systems. Springer, London

[12] Kang W, Xu L (2009) Computational analysis of control systems using dynamic optimization. arXiv:0906.0215v2

[13] Kang W, Xu L (2009) A quantitative measure of observability and controllability. In: Proceedings of the IEEE conference on decision and control, Shanghai, China, 2009

[14] Cohn SE, Dee DP (1988) Observability of discretized partial differential equations. SIAM J Numer Anal 25(3):586–617

[15] Kang W, Xu L (2012) Optimal placement of mobile sensors for data assimilations. Tellus A 64:17133

[16] Kang W, Xu L (2013) Partial observability and its consistency for linear PDEs. In: IFAC proceedings of NOLCOS, Toulouse, September 2013

[17] Hille E, Phillips RS (1957) Functional analysis and semi-groups. American Mathematical Society, Providence

[18] Richtmyer RD (1978) Principles of advanced mathematical physics, vol 1. Springer, New York

[19] Burns J, Peichl GH (2006) Control system radii and robustness under approximation. In: Kurdila AJ, Pardalos PM, Zabarankin M (eds) Robust optimization-directed design. Springer, Berlin

[20] Eising R (1984) Between controllable and uncontrollable. Syst Control Lett 4:263–264

[21] Kenney C, Laub AJ (1988) Controllability and stability radii for companion form systems. Math Control Signals Syst 1:239–256

Acknowledgments

The authors would like to express their gratitude to Professor Arthur J. Krener for his comments and discussions on the observability Gramian and the unobservability index. We would like to extend our thanks and appreciation to Professor Tong Yang for his suggestions on some basic assumptions made for the PDE and its solutions. This work was supported in part by NRL and AFOSR.


Cite this article

Kang, W., Xu, L. Partial observability for some distributed parameter systems. Int. J. Dynam. Control 2, 587–596 (2014). https://doi.org/10.1007/s40435-014-0087-4
