Abstract

This work presents a novel formulation for the numerical solution of optimal control problems governed by systems of nonlinear Volterra fractional integral equations. A spectral approach is implemented based on a new family of polynomials, known as Chelyshkov polynomials. First, the properties of these polynomials needed to solve the aforementioned problems are studied. Operational matrices and the Galerkin method are then used to discretize the continuous optimal control problems. Thereafter, some necessary conditions are established under which the optimal solutions of the discrete problems converge to the optimal solution of the continuous ones. The applicability of the proposed approach is illustrated through several examples. In addition, a comparison with other methods shows that the proposed approach is more accurate and also more efficient.


1 Introduction

Optimal control problems (OCPs) are widely used in several fields, ranging from science and engineering to economics and biomedicine. They essentially consist of identifying the state trajectories of a dynamical system over a time interval that optimize a specific performance index, achieving the best possible outcome through the endogenous control of a parameter within a mathematical model of the system. The associated problem is characterized by a cost (objective) function, depending on both the state and control variables, and by a set of constraints. There are two important types of OCPs: those subject to differential equations and those subject to integral equations. Classical optimal control theory was originally conceived for systems of controlled ordinary differential equations, i.e., for the first type, but the second type of OCPs has recently gained significant attention for handling a broad class of phenomena and mathematical models, for example, technological, physical, economic, biological, and network control problems, as reported in Fig. 1.

OCPs are typically nonlinear and, in general, do not admit analytical solutions, especially when they are governed by Volterra integral or Volterra integro-differential systems (second type). To overcome this difficulty, several authors have proposed techniques that provide numerical solutions.

Belbas described iterative methods, together with their convergence under suitable conditions on the kernel of the integral equations involved, for solving optimal control problems of nonlinear Volterra integral equations (VIEs) Belbas (1999). He also proposed a technique for OCPs governed by VIEs based on approximating the controlled VIEs by systems of controlled ordinary differential equations Belbas (2007, 2008). The existence and uniqueness of solutions for OCPs governed by VIEs are discussed in Angell (1976).

In addition, orthogonal functions have been used to solve OCPs for VIEs. An iterative numerical method for such optimal control problems using triangular functions is described in Maleknejad and Almasieh (2011). Maleknejad and Ebrahimzadeh introduced a collocation approach based on rationalized Legendre wavelets to approximate the optimal control and state variables in Maleknejad and Ebrahimzadeh (2014). In Tohidi and Samadi (2013), Tohidi and Samadi investigated the use of Legendre polynomials for OCPs governed by VIEs and analyzed the convergence of their approach, which is particularly efficient for problems with smooth solutions. A hybrid-function approach, based on block-pulse functions and Bernoulli polynomials, for OCPs described by integro-differential equations was investigated by Mashayekhi et al. (2013). In Peyghami et al. (2012), the authors proposed hybrid approaches combining the steepest descent and two-step Newton methods to compute the optimal control together with the associated optimal state. Other methods are described in El-Kady and Moussa (2013); Li (2010); Maleknejad et al. (2012).

In a recent paper, Khanduzi et al. [22] proposed a modified teaching–learning-based optimization method (MTLBO) to obtain an approximate solution of OCPs subject to nonlinear Volterra integro-differential systems.

As mentioned, OCPs, i.e., the minimization of a performance index subject to a dynamical system, are among the most practical subjects in science and engineering. As a generalization of classical optimal control problems, fractional optimal control problems (FOCPs) involve the minimization of a performance index subject to dynamical systems in which fractional derivatives or integrals are used (see Moradi and Mohammadi (2019) and references therein). Although fractional calculus is almost as old as integer-order calculus, its applications in various fields of science have received increasing attention only in the last three decades. In the related literature, considerable attention has been paid to fractional calculus because it provides a better description of the behavior of natural processes Baleanu et al. (2016); Oldham and Spanier (1974); Samko et al. (1993); Srivastava et al. (2017).

Building on the approaches reported in [22] and Maleknejad and Ebrahimzadeh (2014), and considering the growing interest in fractional calculus over the past few years, the main aim of this work is to establish a new computational method for solving OCPs governed by nonlinear Volterra integro-fractional differential systems (NVIFs), that is, to minimize the performance index (1.1)

subject to the NVIF system (1.2).

Here, y(t) and u(t) are the state and control functions, respectively, \({\mathfrak {D}^\alpha y(t)}\) denotes the Caputo fractional derivative of y(t) of order \(\alpha \), a(t), b(t), and c(t) are known functions, and \(\varphi \) is a linear or nonlinear function. Moreover, \(\mathcal {L}\) and \(\mathcal {G}\) are continuously differentiable operators.

In this investigation, a family of orthogonal polynomials first described by Chelyshkov is considered. First, \(\mathfrak {D}^{\alpha }y\) is expanded in terms of the Chelyshkov polynomial vector with unknown coefficients. Then, the fractional integral operational matrix is employed to find the approximate solution of the OCP (1.1) subject to the dynamic system (1.2). The accuracy of the numerical results improves as the number of basis functions increases.

The novelty of this work is that the dynamic system (1.2) is of fractional order, whereas in the previously reported works (see Khanduzi et al. (2020), [22], Maleknejad and Ebrahimzadeh (2014), and references therein) the order of the dynamic system is \(\alpha =1\). In this sense, we propose a new formulation for OCPs subject to nonlinear Volterra integral equations. A major advantage of this approach is that, by setting \(\alpha =1\), our scheme can easily be applied to the OCPs for NVIFs considered, for example, by Khanduzi et al. [22] and Maleknejad and Ebrahimzadeh (2014), and to other similar problems. To verify this, the new technique is compared, for \(\alpha =1\), with MTLBO, teaching–learning-based optimization (TLBO), Legendre wavelet methods, the grey wolf optimizer (GWO), and local methods (Khanduzi et al. (2020), [22], Maleknejad and Ebrahimzadeh (2014)). This comparison with the relevant results available in the literature shows that the proposed formulation performs better than these previous approaches.

The rest of the paper is structured as follows:

Section 2 recalls the essential definitions and properties of the Riemann–Liouville and Caputo fractional operators and describes the Chelyshkov polynomials and function approximation based on them. Section 3 derives the fractional integration and product operational matrices of these polynomials. Section 4 presents the proposed computational approach for OCPs governed by nonlinear Volterra integro-fractional differential systems. Section 5 gives three numerical examples illustrating the accuracy of the proposed method. Finally, the concluding remarks are outlined in Section 6.

2 Notations and definitions

In this section, we briefly recall basic definitions and some properties of the fractional differentiation and integration operators. Although a broad variety of problems can be modeled by fractional-order operators, there is no unique definition of the fractional derivative. The Riemann–Liouville and Caputo definitions, recalled below, are the most widely used ones for fractional integrals and derivatives. For more information on fractional derivatives and integrals, please refer to Baleanu et al. (2016); Oldham and Spanier (1974); Samko et al. (1993); Srivastava et al. (2017).

Definition 1

Let \(\mathfrak {f}(t)\), \(t>0\), be a real function. Then \(\mathfrak {f} \in C_{\mu }[0,\infty ), \mu \in \mathbb {R}\), if it can be written as \(\mathfrak {f}(t)=t^{p} \mathfrak {f}_{1}(t)\), \(t>0\), with \(p \in \mathbb {R}\), \(p >\mu \), and \(\mathfrak {f}_{1}\in C[0,\infty )\).

Moreover, \(\mathfrak {f} \in C_{\mu }^{n}[0,\infty ),~ n \in \mathbb {N}\) if its nth derivative \(\mathfrak {f} ^{(n)} \in C_{\mu }[0,\infty )\).

Definition 2

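Let \(\mathfrak {f} \in C_{-1}^{n}[0,\infty )\), \(n\in \mathbb {N}\). The Caputo fractional derivative of the function \(\mathfrak {f}\), of order \(\alpha >0\) with \(n-1<\alpha <n\), is

\(\mathfrak {D}^{\alpha }\mathfrak {f}(t)=\frac{1}{\varGamma (n-\alpha )}\int _{0}^{t}(t-s)^{n-\alpha -1}\mathfrak {f}^{(n)}(s)\,ds,\quad t>0,\)

and \(\mathfrak {D}^{n}\mathfrak {f}(t)=\mathfrak {f}^{(n)}(t)\) for \(\alpha =n\).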

Definition 3

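Let \(\mathfrak {f}\in C_{\mu }\), \(\mu \ge -1\). The Riemann–Liouville fractional integral of \(\mathfrak {f}\), of order \(\alpha \ge 0\), is

\(\mathcal {I}^{\alpha }\mathfrak {f}(t)=\frac{1}{\varGamma (\alpha )}\int _{0}^{t}(t-s)^{\alpha -1}\mathfrak {f}(s)\,ds,\quad \alpha >0,\ t>0,\qquad \mathcal {I}^{0}\mathfrak {f}(t)=\mathfrak {f}(t).\)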

Some useful properties of the Riemann–Liouville fractional integral operator \(\mathcal {I}^\alpha \) and the Caputo fractional derivative operator \(\mathfrak {D}^{\alpha }\) are given by the following expressions:

-

\({\mathcal {I}^{\alpha _1} \left( \mathcal {I}^{\alpha _2} \mathfrak {f}(t) \right) = \mathcal {I}^{\alpha _2} \left( \mathcal {I}^{\alpha _1} \mathfrak {f}(t) \right) ,~~\alpha _1, \alpha _2 \ge 0,}\)

-

\(\mathcal {I}^{\alpha _1} \left( \mathcal {I}^{\alpha _2} \mathfrak {f}(t) \right) = \mathcal {I}^{\alpha _1 + \alpha _2} \mathfrak {f}(t),~~\alpha _1, \alpha _2 \ge 0,\)

-

\(\mathcal {I}^\alpha t^\lambda = \frac{\varGamma \left( \lambda + 1 \right) }{\varGamma \left( \lambda + \alpha + 1 \right) }t^{\alpha + \lambda },~ \alpha \ge 0, \lambda >-1,\)

-

\(\mathfrak {D}^{\alpha } \mathcal {I}^{\alpha } \mathfrak {f}(t) = \mathfrak {f}(t),\)

-

\(\mathfrak {D}^{\alpha } t^{\lambda } = \left\{ \begin{array}{ll} 0 &{} , \ for~\lambda \in \mathbb {N}_{0}~ and~ \lambda < \alpha , \\ \displaystyle \frac{{\varGamma (\lambda + 1)}}{{\varGamma (\lambda - \alpha + 1)}}t^{\lambda - \alpha } \ &{} , \ otherwise, \\ \end{array} \right. \)

Here, \(\mathbb {N}_{0}=\lbrace 0, 1, 2, \ldots \rbrace \), \(\mathfrak {f} \in C_{\mu }\) with \(\mu \ge -1\), \(\lambda >-1\), and \(n-1<\alpha \le n\).
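The power rule above can be checked numerically. The following Python sketch (standard library only; the function names and the resolution `n` are illustrative choices, not part of the method) evaluates \(\mathcal {I}^{\alpha }\mathfrak {f}(t)=\frac{1}{\varGamma (\alpha )}\int _{0}^{t}(t-s)^{\alpha -1}\mathfrak {f}(s)\,ds\) after the substitution \(u=(t-s)^{\alpha }\), which removes the weak singularity of the kernel, and compares the result with the closed form \(\frac{\varGamma (\lambda +1)}{\varGamma (\lambda +\alpha +1)}t^{\alpha +\lambda }\).

```python
import math


def rl_integral(f, t, alpha, n=4000):
    """Riemann-Liouville integral I^alpha f(t) for alpha > 0 and t > 0.

    With u = (t - s)**alpha, the definition
        (1 / Gamma(alpha)) * int_0^t (t - s)**(alpha - 1) * f(s) ds
    becomes
        (1 / Gamma(alpha + 1)) * int_0^(t**alpha) f(t - u**(1 / alpha)) du,
    whose integrand is no longer singular; it is evaluated with the
    composite midpoint rule on n subintervals.
    """
    upper = t ** alpha
    h = upper / n
    total = sum(f(t - ((k + 0.5) * h) ** (1.0 / alpha)) for k in range(n))
    return total * h / math.gamma(alpha + 1)


def rl_power(lam, alpha, t):
    """Closed form I^alpha t**lam = Gamma(lam+1) / Gamma(lam+alpha+1) * t**(lam+alpha)."""
    return math.gamma(lam + 1) / math.gamma(lam + alpha + 1) * t ** (lam + alpha)


# Compare quadrature and closed form for a few (alpha, lambda) pairs at t = 0.7.
for alpha, lam in [(0.5, 2.0), (0.75, 1.5), (1.0, 3.0)]:
    numeric = rl_integral(lambda s: s ** lam, 0.7, alpha)
    print(alpha, lam, abs(numeric - rl_power(lam, alpha, 0.7)))
```

The substitution is only a convenience for this check; any quadrature that handles the endpoint singularity of the kernel would serve equally well.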

2.1 Chelyshkov polynomials

In this subsection, we report the definition and some properties of Chelyshkov polynomials, which were introduced in 2006 by Chelyshkov (2006). They constitute a family of orthogonal polynomials on [0, 1] defined by

\(\chi _{i}(t)=\sum _{j=i}^{N}\gamma _{i,j}\,t^{j},\quad i=0,1,\ldots ,N,\)

in which

\(\gamma _{i,j}=(-1)^{j-i}\binom{N-i}{j-i}\binom{N+j+1}{N-i}.\)

Moreover, the orthogonality condition for these polynomials reads

\(\int _{0}^{1}\chi _{p}(t)\,\chi _{q}(t)\,dt=\frac{\delta _{pq}}{p+q+1},\)

where \(\delta _{pq}\) is the Kronecker delta.

Remark 1

From the definition of the Chelyshkov polynomials, we note the main difference between these polynomials and other orthogonal polynomials on the interval [0, 1]: for the latter, the nth polynomial has degree n, whereas every Chelyshkov polynomial \(\chi _{i}\), \(i=0,1,\ldots ,N\), has exact degree N.
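Assuming the standard coefficients of Chelyshkov (2006), \(\chi _{i}(t)=\sum _{j=i}^{N}\gamma _{i,j}t^{j}\) with \(\gamma _{i,j}=(-1)^{j-i}\binom{N-i}{j-i}\binom{N+j+1}{N-i}\), both the orthogonality condition and the fact that every \(\chi _{i}\) has exact degree N can be verified in exact rational arithmetic. The Python sketch below is illustrative; `chelyshkov_coeffs` and `inner` are hypothetical helper names.

```python
from fractions import Fraction
from math import comb


def chelyshkov_coeffs(N):
    """coef[i][j]: coefficient of t**j in chi_i(t), for i, j = 0..N.

    gamma_{i,j} = (-1)**(j-i) * C(N-i, j-i) * C(N+j+1, N-i) for j >= i, else 0.
    """
    coef = [[0] * (N + 1) for _ in range(N + 1)]
    for i in range(N + 1):
        for j in range(i, N + 1):
            coef[i][j] = (-1) ** (j - i) * comb(N - i, j - i) * comb(N + j + 1, N - i)
    return coef


def inner(p, q):
    """Exact value of int_0^1 p(t) q(t) dt for power-basis coefficient lists."""
    return sum(Fraction(a * b, j + k + 1)
               for j, a in enumerate(p) for k, b in enumerate(q))


N = 5
coef = chelyshkov_coeffs(N)
gram = [[inner(coef[p], coef[q]) for q in range(N + 1)] for p in range(N + 1)]

print(chelyshkov_coeffs(1))                 # [[2, -3], [0, 1]], i.e. chi_0 = 2 - 3t, chi_1 = t
print([gram[p][p] for p in range(N + 1)])   # diagonal entries 1/(2p+1)
```

Exact fractions are used because the coefficients grow combinatorially with N, so a floating-point Gram matrix would show round-off rather than exact zeros.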

2.2 Function approximation

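Any function \(\mathfrak {f}(t)\) that is square integrable on [0, 1] can be approximated by the Chelyshkov polynomials as

\(\mathfrak {f}(t)\simeq \sum _{i=0}^{N}c_{i}\chi _{i}(t)=C^{T}\varUpsilon (t),\)

where \(\varUpsilon (t)\) and C are the \((N+1)\)-dimensional vectors

\(\varUpsilon (t)=[\chi _{0}(t),\chi _{1}(t),\ldots ,\chi _{N}(t)]^{T},\qquad C=[c_{0},c_{1},\ldots ,c_{N}]^{T},\)

and, by the orthogonality condition, the coefficients \(c_{i}, i=0,1,\ldots ,N\), are given by

\(c_{i}=(2i+1)\int _{0}^{1}\mathfrak {f}(t)\,\chi _{i}(t)\,dt.\)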
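The coefficient formula can be evaluated with Gauss–Legendre quadrature mapped to [0, 1]. The Python/NumPy sketch below assumes \(c_{i}=(2i+1)\int _{0}^{1}\mathfrak {f}(t)\chi _{i}(t)\,dt\), which follows from the orthogonality relation \(\int _{0}^{1}\chi _{p}\chi _{q}\,dt=\delta _{pq}/(p+q+1)\); the helper names and the default of 2N + 2 quadrature nodes are illustrative assumptions.

```python
import numpy as np
from math import comb


def chelyshkov_matrix(N):
    """G[i, j] = coefficient of t**j in chi_i(t) (power basis, floating point)."""
    G = np.zeros((N + 1, N + 1))
    for i in range(N + 1):
        for j in range(i, N + 1):
            G[i, j] = (-1) ** (j - i) * comb(N - i, j - i) * comb(N + j + 1, N - i)
    return G


def upsilon(G, t):
    """Row k of the result is Upsilon(t_k)^T = [chi_0(t_k), ..., chi_N(t_k)]."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    return (t[:, None] ** np.arange(G.shape[1])) @ G.T


def chelyshkov_coefficients(f, N, M=None):
    """c_i = (2i + 1) * int_0^1 f(t) chi_i(t) dt via M-point Gauss-Legendre on [0, 1]."""
    M = M if M is not None else 2 * N + 2
    x, w = np.polynomial.legendre.leggauss(M)
    t, w = (x + 1) / 2, w / 2               # map nodes and weights from [-1, 1] to [0, 1]
    G = chelyshkov_matrix(N)
    return (2 * np.arange(N + 1) + 1) * ((w * f(t)) @ upsilon(G, t))


ts = np.linspace(0.0, 1.0, 201)
for N in (4, 6, 8):
    C = chelyshkov_coefficients(np.exp, N)
    approx = upsilon(chelyshkov_matrix(N), ts) @ C    # C^T Upsilon(t) on a grid
    print(N, np.max(np.abs(approx - np.exp(ts))))
```

Because the polynomials are evaluated here in the power basis, whose coefficients grow quickly with N, round-off eventually limits the attainable accuracy in double precision; moderate values of N keep this effect small.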

3 Operational matrices

This section derives operational matrices for the Chelyshkov polynomial vector \(\varUpsilon (t)\). In the following, explicit formulations are given for the fractional integration operational matrix in the Riemann–Liouville sense and for the product operational matrix of the Chelyshkov polynomial vector.

Theorem 1

The fractional integral of order \(\alpha \) of the Chelyshkov polynomial vector can be obtained as

where \(\varUpsilon (t)\) is the \((N+1)\)-dimensional Chelyshkov polynomial vector, \( \varOmega ^{(\alpha )}\in \mathbb {R}^{(N+1)\times (N+1)}\) is the fractional integration operational matrix of \(\varUpsilon (t)\), and each element of this matrix can be computed as

Proof

Let us consider the ith element of the vector \(\varUpsilon (t)\), namely \(\chi _{i-1}(t)\). Its fractional integral of order \(\alpha \) can be obtained as

Expanding \(t^{\alpha +r +i-1 }\) in terms of the Chelyshkov polynomials, we have

where \(\theta _{r, j}\) can be obtained as

Now, substituting (3.3) and (3.4) into (3.2), we have

This yields the desired result. \(\square \)
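The construction in the proof can be sketched in a few lines, assuming the operational relation has the form \(\mathcal {I}^{\alpha }\varUpsilon (t)\approx \varOmega ^{(\alpha )}\varUpsilon (t)\) and using 0-based indices (row i corresponds to \(\chi _{i}\) rather than \(\chi _{i-1}\)). Each monomial \(t^{r}\) of \(\chi _{i}\) is integrated with the power rule of Section 2, and the resulting \(t^{r+\alpha }\) is projected onto the basis with \(\theta _{r,j}=(2j+1)\int _{0}^{1}t^{r+\alpha }\chi _{j}(t)\,dt\). The Python/NumPy code and its helper names are illustrative, not the authors' implementation.

```python
import numpy as np
from math import comb, gamma


def chelyshkov_matrix(N):
    """G[i, j] = coefficient of t**j in chi_i(t)."""
    G = np.zeros((N + 1, N + 1))
    for i in range(N + 1):
        for j in range(i, N + 1):
            G[i, j] = (-1) ** (j - i) * comb(N - i, j - i) * comb(N + j + 1, N - i)
    return G


def upsilon(G, t):
    """Row k is Upsilon(t_k)^T."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    return (t[:, None] ** np.arange(G.shape[1])) @ G.T


def fractional_integration_matrix(N, alpha):
    """Omega with I^alpha Upsilon(t) ~ Omega @ Upsilon(t) (row i approximates I^alpha chi_i).

    I^alpha chi_i(t) = sum_r G[i, r] * Gamma(r+1)/Gamma(r+alpha+1) * t**(r+alpha), and
    theta[r, j] = (2j+1) * int_0^1 t**(r+alpha) chi_j dt = (2j+1) * sum_k G[j, k]/(r+alpha+k+1).
    """
    G = chelyshkov_matrix(N)
    n = np.arange(N + 1)
    ratio = np.array([gamma(r + 1) / gamma(r + alpha + 1) for r in n])
    moments = 1.0 / (n[:, None] + alpha + n[None, :] + 1)   # int_0^1 t**(r+alpha+k) dt
    theta = (moments @ G.T) * (2 * n + 1)                   # theta[r, j]
    return (G * ratio) @ theta                              # Omega[i, j]


def monomial_coeffs(G, m):
    """Chelyshkov coefficients of t**m: c_i = (2i+1) * sum_k G[i, k] / (m + k + 1)."""
    n = np.arange(G.shape[0])
    return (2 * n + 1) * (G @ (1.0 / (m + n + 1)))


N, ts = 4, np.linspace(0.0, 1.0, 11)
G = chelyshkov_matrix(N)
C = monomial_coeffs(G, 2)                                    # t**2 = C^T Upsilon(t)
for alpha in (0.5, 1.0):
    Om = fractional_integration_matrix(N, alpha)
    approx = upsilon(G, ts) @ (Om.T @ C)                     # C^T Omega Upsilon(t)
    exact = 2.0 / gamma(3 + alpha) * ts ** (2 + alpha)       # I^alpha t**2
    print(alpha, np.max(np.abs(approx - exact)))
```

For \(\alpha =1\) the test function \(t^{2}\) is integrated exactly once \(N\ge 3\), while for non-integer \(\alpha \) the result \(t^{2+\alpha }\) is only approximated, so the error there reflects the projection onto polynomials of degree N.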

Theorem 2

Let \(Y\in \mathbb {R}^{(N+1) \times 1}\) be an arbitrary vector

where \(\varUpsilon (t)\in \mathbb {R}^{N + 1}\) is the Chelyshkov polynomial vector introduced in (2.5) and the (i, j)th element of the product operational matrix \(\tilde{Y}\) can be obtained as

Proof

Consider two Chelyshkov polynomial vectors \(\varUpsilon (t)\) and \(\varUpsilon ^{T}(t)\). The product of these two vectors is a matrix described as follows:

Consequently, relation (3.5) can be written as

Multiplying both sides of relation (3.6) by \(\chi _{j}(t)\) and integrating over [0, 1], we have

Finally, the (i, j)th element of the product operational matrix \(\tilde{Y}\) is given by

\(\square \)
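Assuming the product relation takes the usual form \(\varUpsilon (t)\varUpsilon ^{T}(t)Y\approx \tilde{Y}\varUpsilon (t)\), the derivation gives \(\tilde{Y}_{ij}=(2j+1)\sum _{k}y_{k}\int _{0}^{1}\chi _{i}\chi _{j}\chi _{k}\,dt\) (0-based indices). The Python/NumPy sketch below evaluates these triple-product integrals exactly with Gauss–Legendre quadrature; all names are hypothetical. It checks that the product \(t^{2}\cdot t^{3}\) is recovered and that the constant function 1 yields the identity matrix.

```python
import numpy as np
from math import comb


def chelyshkov_matrix(N):
    """G[i, j] = coefficient of t**j in chi_i(t)."""
    G = np.zeros((N + 1, N + 1))
    for i in range(N + 1):
        for j in range(i, N + 1):
            G[i, j] = (-1) ** (j - i) * comb(N - i, j - i) * comb(N + j + 1, N - i)
    return G


def upsilon(G, t):
    """Row k is Upsilon(t_k)^T."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    return (t[:, None] ** np.arange(G.shape[1])) @ G.T


def gauss_legendre_01(M):
    """M-point Gauss-Legendre nodes and weights on [0, 1]."""
    x, w = np.polynomial.legendre.leggauss(M)
    return (x + 1) / 2, w / 2


def product_matrix(Y):
    """Ytilde with Upsilon Upsilon^T Y ~ Ytilde Upsilon.

    The integrands chi_i chi_j chi_k have degree 3N, so floor(3N/2) + 1
    Gauss-Legendre nodes integrate them exactly.
    """
    N = len(Y) - 1
    G = chelyshkov_matrix(N)
    t, w = gauss_legendre_01(3 * N // 2 + 1)
    U = upsilon(G, t)                               # U[m, i] = chi_i(t_m)
    weighted = w * (U @ Y)                          # w_m * y(t_m)
    return (U.T @ (weighted[:, None] * U)) * (2 * np.arange(N + 1) + 1)


def coefficients(f, N):
    """c_i = (2i+1) * int_0^1 f chi_i dt (exact for polynomial f of degree <= N)."""
    G = chelyshkov_matrix(N)
    t, w = gauss_legendre_01(N + 1)
    return (2 * np.arange(N + 1) + 1) * ((w * f(t)) @ upsilon(G, t))


N = 6
G = chelyshkov_matrix(N)
F = coefficients(lambda t: t ** 2, N)
Y = coefficients(lambda t: t ** 3, N)
ts = np.linspace(0.0, 1.0, 5)
print(upsilon(G, ts) @ (product_matrix(Y).T @ F) - ts ** 5)   # F^T Ytilde Upsilon(t) - t**5
```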

4 Description of the proposed numerical method

Consider the OCP (1.1) subject to the NVIF system (1.2). First, all functions involved in the NVIFs are approximated as follows:

where \(\varUpsilon (t)\) is the vector defined in relation (2.5). Moreover, O, A, B, and C are known coefficient vectors, determined as described in (2.4), and Y is the unknown vector to be determined. By (2.1), we have

Moreover, from Eq. (3.1) along with Eq. (4.1), we also have

where \({\mathcal {Q}^\alpha }\) is the fractional derivative operational matrix.
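The step that recovers the state from its fractional derivative rests on a standard identity linking the two operators of Section 2. As a sketch, assuming \(0<\alpha \le 1\), \(\mathfrak {D}^{\alpha }y(t)\approx Y^{T}\varUpsilon (t)\), and an operational relation of the form \(\mathcal {I}^{\alpha }\varUpsilon (t)\approx \varOmega ^{(\alpha )}\varUpsilon (t)\), it reads:

```latex
\begin{aligned}
\mathcal{I}^{\alpha}\,\mathfrak{D}^{\alpha}y(t) &= y(t)-\sum_{k=0}^{n-1}y^{(k)}(0^{+})\,\frac{t^{k}}{k!},
  \qquad n-1<\alpha\le n,\\
y(t) &= \mathcal{I}^{\alpha}\,\mathfrak{D}^{\alpha}y(t)+y(0)
  \approx Y^{T}\,\mathcal{I}^{\alpha}\varUpsilon(t)+y(0)
  \approx Y^{T}\varOmega^{(\alpha)}\varUpsilon(t)+y(0),
  \qquad 0<\alpha\le 1.
\end{aligned}
```

Only the linearity of \(\mathcal {I}^{\alpha }\) is used in the middle step, so the approximate state depends linearly on the unknown vector Y.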

By virtue of Eqs. (4.4)–(4.5), we get

Substituting Eqs. (4.1), (4.2), (4.3), and (4.6) into relation (1.2), we have:

Next, using the Gauss–Legendre quadrature formula on [0, 1], u(t) can be rewritten as follows:

where \(s_{k}\) and \(w_{k}\) are the Gauss–Legendre quadrature nodes and weights, respectively.
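Both quadrature steps use Gauss–Legendre nodes mapped from [-1, 1] to [0, 1]. For a Volterra term \(\int _{0}^{t}\), one common arrangement, assumed here because the formula itself is not reproduced, is the change of variable \(s=t\tau \), which moves the integral to [0, 1] so that the same nodes \(s_{k}\) and weights \(w_{k}\) can be reused. The Python/NumPy sketch and its function names are illustrative.

```python
import numpy as np


def gauss_legendre_01(M):
    """M-point Gauss-Legendre nodes s_k and weights w_k mapped to [0, 1]."""
    x, w = np.polynomial.legendre.leggauss(M)
    return (x + 1) / 2, w / 2


def integrate_01(g, M):
    """int_0^1 g(s) ds ~ sum_k w_k g(s_k); exact for polynomials of degree <= 2M - 1."""
    s, w = gauss_legendre_01(M)
    return float(np.sum(w * g(s)))


def volterra_term(kernel, t, M):
    """int_0^t kernel(t, s) ds = t * int_0^1 kernel(t, t * tau) dtau, using the [0, 1] rule."""
    tau, w = gauss_legendre_01(M)
    return float(t * np.sum(w * kernel(t, t * tau)))


print(integrate_01(lambda s: s ** 3, 2))                        # 0.25 up to rounding
print(volterra_term(lambda t, s: np.exp(s), 0.8, 10), np.exp(0.8) - 1)
```

Because the nodes are fixed on [0, 1], the same arrays serve both the cost functional and, after scaling by t, every Volterra integral evaluated at a collocation time t.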

Therefore, the performance index (1.1) is approximated as follows:

where

Additionally, the performance indicator \({\mathcal {J}}\left[ {y_{0}},{y_{1}},...,{y_{N}}\right] \) can be approximated by applying the Gauss–Legendre quadrature formula on [0, 1] as follows:

where \(\mathbf {w}_{k}\) and \(t_{k}\) are the Gauss–Legendre quadrature weights and nodes, respectively. Finally, the necessary conditions for the optimality of the performance indicator are

Solving this system of algebraic equations for the unknown vector Y gives the optimal coefficients \(y_{i}\), \(i=0,1,\ldots ,N\); Newton's iterative method is used for this purpose. Once Y has been determined, substituting it into Eqs. (4.6) and (4.7) yields approximations of the state function y(t) and the control function u(t), respectively.
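To illustrate how the steps of this section fit together, the Python/NumPy sketch below applies them to a hypothetical toy problem chosen only because its optimum is known for \(\alpha =1\) (it is not one of the examples of Section 5): minimize \(\int _{0}^{1}[(y-t^{2})^{2}+(u-2t+t^{3}/3)^{2}]\,dt\) subject to \(\mathfrak {D}^{\alpha }y(t)=u(t)+\int _{0}^{t}y(s)\,ds\), \(y(0)=0\), with \(0<\alpha \le 1\). It expands \(\mathfrak {D}^{\alpha }y\approx Y^{T}\varUpsilon (t)\), recovers y with the fractional integration matrix, obtains u from the dynamics, approximates J with \(M=N+2\) Gauss–Legendre nodes, and solves the necessary conditions \(\partial J/\partial y_{i}=0\) with Newton's method. Because J is quadratic in Y for this toy problem, one Newton step reaches the stationary point; a nonlinear \(\varphi \) or cost functional would require iterating. All names are illustrative.

```python
import numpy as np
from math import comb, gamma


def chelyshkov_matrix(N):
    """G[i, j] = coefficient of t**j in chi_i(t)."""
    G = np.zeros((N + 1, N + 1))
    for i in range(N + 1):
        for j in range(i, N + 1):
            G[i, j] = (-1) ** (j - i) * comb(N - i, j - i) * comb(N + j + 1, N - i)
    return G


def omega(G, alpha):
    """Fractional integration operational matrix: I^alpha Upsilon ~ Omega @ Upsilon."""
    n = np.arange(G.shape[0])
    ratio = np.array([gamma(r + 1) / gamma(r + alpha + 1) for r in n])
    theta = (1.0 / (n[:, None] + alpha + n[None, :] + 1)) @ G.T * (2 * n + 1)
    return (G * ratio) @ theta


def solve_toy_ocp(alpha, N=4, y0=0.0):
    """Hypothetical toy OCP (not from the paper):
        minimize  J = int_0^1 (y - t^2)^2 + (u - 2t + t^3/3)^2 dt
        s.t.      D^alpha y(t) = u(t) + int_0^t y(s) ds,   y(0) = y0.
    For alpha = 1 and y0 = 0 the optimum is y = t^2, u = 2t - t^3/3, J = 0.
    """
    G = chelyshkov_matrix(N)
    M = N + 2                                       # Gauss-Legendre nodes
    x, w = np.polynomial.legendre.leggauss(M)
    t, w = (x + 1) / 2, w / 2                       # nodes and weights on [0, 1]
    U = (t[:, None] ** np.arange(N + 1)) @ G.T      # U[k, i] = chi_i(t_k)
    Om_a, Om_1 = omega(G, alpha), omega(G, 1.0)
    Phi_y = U @ Om_a.T                              # y(t_k) = Phi_y @ Y + y0
    Phi_int = U @ (Om_a @ Om_1).T                   # int_0^t y ds, minus the y0 * t part
    Phi_u = U - Phi_int                             # u = D^alpha y - int_0^t y ds
    Phi = np.vstack([Phi_y, Phi_u])
    d = np.concatenate([t ** 2 - y0, 2 * t - t ** 3 / 3 + y0 * t])
    W = np.concatenate([w, w])
    # Quadrature form J(Y) ~ sum W * (Phi @ Y - d)**2, quadratic in Y.
    Y = np.zeros(N + 1)
    grad = 2 * Phi.T @ (W * (Phi @ Y - d))          # dJ/dy_i
    hess = 2 * Phi.T @ (W[:, None] * Phi)
    Y = Y - np.linalg.solve(hess, grad)             # one Newton step (J is quadratic)
    J = float(np.sum(W * (Phi @ Y - d) ** 2))

    def state(s):
        """Approximate y(s) = Y^T Omega_alpha Upsilon(s) + y0."""
        s = np.atleast_1d(np.asarray(s, dtype=float))
        return (s[:, None] ** np.arange(N + 1)) @ G.T @ Om_a.T @ Y + y0

    return Y, J, state


for alpha in (0.8, 0.9, 1.0):
    _, J, y = solve_toy_ocp(alpha)
    print(alpha, J, y(0.5)[0])
```

For \(\alpha =1\) the returned J is zero up to round-off and y(0.5) is close to 0.25; for \(\alpha <1\) the same target is generally not attainable exactly, so J is positive.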

5 Selected numerical examples and comparisons

In this section, three examples are presented to investigate the effectiveness of the proposed method. In these examples, the numerical solutions are compared with the exact ones. Moreover, the obtained results are compared with those of the methods proposed in Khanduzi et al. (2020), [22], and Maleknejad and Ebrahimzadeh (2014). All algorithms were implemented in Maple 17 with 16 digits; M denotes the number of Gauss–Legendre quadrature nodes (and weights).

Example 1

Consider the following NVIFs: minimize the performance indicator

subject to the dynamical system

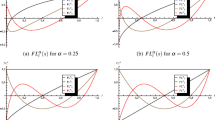

For \(\alpha =1\), the exact solutions are \(\tilde{y}(t) = e^{t^2 }\) and \(\tilde{u}(t) = 1 + 2t\). These NVIFs have been solved with the presented Chelyshkov polynomial approach for various values of N and \(\alpha \). Figure 2 shows the approximate solutions for \(N=8\), \(M=N+2\), and \(\alpha =0.45,0.55,0.65,0.75,0.85,0.95,1\), while Fig. 3 shows the absolute errors of the numerical solutions y(t) and u(t) for \(\alpha =1\) and \(N=10\).

Table 1 summarizes the results obtained with the presented method and those reported in [22] and Maleknejad and Ebrahimzadeh (2014) for various values of N, with \(\alpha =1\) and \(M=N+2\). In addition, as \(\alpha \) approaches 1, the numerical solutions approach the exact solution and agree well with it; correspondingly, the optimal performance indicator J approaches the optimal value \(J = 0\) of the integer-order case \(\alpha = 1\). Based on these results, the approach solves the above problem successfully and outperforms the other techniques considered.

Numerical results for various values of \(\alpha \) and \(N = 8\) for y(t) and u(t) in Example 1

The absolute errors of numerical results for y(t) and u(t) for \(\alpha =1, N=10\) in Example 1

Example 2

Consider the following NVIFs: minimize the performance indicator:

subject to the dynamical system

where, for \(\alpha =1\), the exact solutions are \(\tilde{y}(t) = t\) and \(\tilde{u}(t) =1 - te^{t^2 }\). Figure 4 shows the approximate state and control functions for \(N=8\), \(M=N+2\), and \(\alpha =0.45,0.55,0.65,0.75,0.85,0.95,1\), whereas Fig. 5 plots the absolute errors for \(\alpha =1\) and \(N=10\). These NVIFs have been solved with the presented Chelyshkov polynomial approach for various values of N and \(\alpha \); Table 2 compares the optimal performance indicator J obtained with the presented method and with the methods in [22] and Maleknejad and Ebrahimzadeh (2014) for different values of N, with \(\alpha =1\) and \(M=N+2\).

These numerical results confirm the utility of the method for solving NVIFs: compared with the other approaches, the Chelyshkov polynomial implementation is effective and accurate. In addition, as \(\alpha \) approaches 1, the numerical solutions approach the exact solution and agree well with it, and the optimal performance indicator J approaches the optimal value \(J = 0\) of the integer-order case \(\alpha = 1\). This experiment therefore also gives good results.

Numerical results for various values of \(\alpha \) and \(N = 8\) for y(t) and u(t) in Example 2

The absolute errors of numerical results for y(t) and u(t) for \(\alpha =1, N=10\) in Example 2

Example 3

Now, consider the following NVIFs: minimize the performance indicator:

subject to the dynamical system

For \(\alpha =1\), the exact solutions are \(\tilde{y}(t) = e^{t}\) and \(\tilde{u}(t) = e^{3t}\). The approximate state and control functions for \(N=6\), \(M=N+2\), and \(\alpha =0.45,0.55,0.65,0.75,0.85,0.95,1\) are shown in Fig. 6, and the absolute errors of the numerical solutions y(t) and u(t) for \(\alpha =1\) and \(N=10\) are shown in Fig. 7.

These NVIFs have been solved with the suggested Chelyshkov polynomial approach for various values of N and \(\alpha \). Table 3 compares the optimal performance indicator J obtained with the suggested method and with the methods reported in [22] and Maleknejad and Ebrahimzadeh (2014) for different values of N, with \(\alpha =1\) and \(M=N+2\).

These results again confirm that, compared with the other approaches, the Chelyshkov polynomial implementation is efficient and accurate for solving NVIFs. As \(\alpha \) approaches 1, the numerical solutions approach the exact solution and agree well with it, and the optimal performance indicator J approaches the optimal value \(J = 0\) of the integer-order case \(\alpha = 1\). These findings support the accuracy and effectiveness of the method.

Finally, Table 4 compares the optimal performance indicator J obtained with the suggested method and the results reported in Khanduzi et al. (2020) for \(N=7\), \(\alpha =1\), and \(M=N+2\), for Examples 1, 2, and 3. The comparison indicates that the Chelyshkov polynomial approach is efficient and accurate for solving NVIFs.

Numerical results for various values of \(\alpha \) and \(N = 8\) for y(t) and u(t) in Example 3

The absolute errors of numerical results for y(t) and u(t) for \(\alpha =1, N=10\) in Example 3

6 Conclusion

In this paper, an effective approach was introduced to approximate the solutions of optimal control problems governed by systems of nonlinear Volterra fractional integral equations. The proposed method is based on a new family of polynomials, the Chelyshkov polynomials, and on their fractional operational matrix, which reduce the problem to a system of algebraic equations whose solution yields the approximate solution. Three examples illustrating the usefulness and precision of the suggested method have been presented. In addition, a comparison of our numerical results with those obtained by other methods shows that the Chelyshkov polynomial approach is more accurate than the other approaches for the examples considered. The results obtained with the proposed approach indicate that:

-

The main contribution is the application of a new type of polynomials to obtain numerical solutions.

-

Chelyshkov polynomials are efficient and successful for solving NVIFs.

-

The Chelyshkov polynomial approach is accurate, and, as \(\alpha \) approaches 1, its results compare favorably with other reported results.

-

The proposed strategy gave good results in all the examples considered.

References

Angell TS (1976) On the optimal control of systems governed by nonlinear Volterra equations. J Optim Theory Appl 19:29–45

Baleanu D, Diethelm K, Scalas E, Trujillo JJ (2016) Fractional calculus: models and numerical methods. World Scientific, Singapore

Belbas SA (1999) Iterative schemes for optimal control of Volterra integral equations. Nonlinear Anal 37:57–79

Belbas SA (2007) A new method for optimal control of Volterra integral equations. Appl Math Comput 189(2):1902–1915

Belbas SA (2008) A reduction method for optimal control of Volterra integral equations. Appl Math Comput 197(2):880–890

Bhrawy AH, Doha EH, Baleanu D, Ezz-Eldien SS (2015) An accurate numerical technique for solving fractional optimal control problems. Differ Equ 15:23

Burrage K, Cardone A, D’Ambrosio R, Paternoster B (2017) Numerical solution of time fractional diffusion systems. Appl Numer Math 116:82–94

Cardone A, Conte D, Paternoster B (2018) Two-step collocation methods for fractional differential equations. Discrete Contin Dyn Syst Ser B 23(7):2709–2725

Cardone A, D’Ambrosio R, Paternoster B (2019) A spectral method for stochastic fractional differential equations. Appl Numer Math 139:115–119

Cardone A, Conte D, Paternoster B (2009) A family of multistep collocation methods for Volterra integro-differential equations. AIP Conf Proc 1168(1):358–361

Capobianco G, Conte D, Paternoster B (2017) Construction and implementation of two-step continuous methods for Volterra Integral Equations. Appl Numer Math 119:239–247

Chelyshkov VS (2006) Alternative orthogonal polynomials and quadratures. Electron Trans Numer Anal 25(7):17–26

Conte D, Cardone A (submitted) Stability analysis of spline collocation methods for fractional differential equations

Conte D, D’Ambrosio R, Paternoster B (2018) On the stability of \(\theta \)-methods for stochastic Volterra integral equations. Discrete Contin Dyn Syst Ser B 23(7):2695–2708

Conte D, Califano G (2018) Optimal Schwarz waveform relaxation for fractional diffusion-wave equations. Appl Numer Math 127:125–141

Conte D, Paternoster B (2017) Parallel methods for weakly singular Volterra integral equations on GPUs. Appl Numer Math 114:30–37

Conte D, Shahmorad S, Talaei Y (2020) New fractional Lanczos vector polynomials and their application to system of Abel-Volterra integral equations and fractional differential equations. J Comput Appl Math 366:112409

Ejlali N, Hosseini SM (2016) A pseudospectral method for fractional optimal control problems. J Optim Theory Appl 1–25

El-Kady M, Moussa H (2013) Monic Chebyshev approximations for solving optimal control problem with Volterra integro differential equations. Gen Math Notes 14(2):23–36

Jafari H, Ghasempour S, Baleanu D (2016) On comparison between iterative methods for solving nonlinear optimal control problems. J Vib Control 22(9):2281–2287

Khanduzi R, Ebrahimzadeh A, Panjeh S, Beikc Ali (2020) Optimal control of fractional integro-differential systems based on a spectral method and grey wolf optimizer. Int J Opt Control 10(1):55–65

Khanduzi R, Ebrahimzadeh A, Peyghami MR A modified teaching–learning-based optimization for optimal control of Volterra integral systems. Soft Comput. https://doi.org/10.1007/s00500-017-2933-8

Li Y (2010) Solving a nonlinear fractional differential equation using Chebyshev wavelets. Commun Nonlinear Sci Numer Simul 15(9):2284–2292

Maleknejad K, Hashemizadeh E, Ezzati R (2011) A new approach to the numerical solution of Volterra integral equations by using Bernstein's approximation. Commun Nonlinear Sci Numer Simulat 16:647–655

Maleknejad K, Almasieh H (2011) Optimal control of Volterra integral equations via triangular functions. Math Comput Modelling 53:1902–1909

Maleknejad K, Ebrahimzadeh A (2014) Optimal control of Volterra integro-differential systems based on Legendre wavelets and collocation method. Int J Math Comput Sci 1(7):50–54

Maleknejad K, Nosrati Sahlan M, Ebrahimizadeh A (2012) Wavelet Galerkin method for the solution of nonlinear Klein–Gordon equations by using B-spline wavelets, Int Conf Scientific Comput, Las Vegas, Nevada

Mashayekhi S, Ordokhani Y, Razzaghi M (2013) Hybrid functions approach for optimal control of systems described by integro differential equations. Appl Math Model 37(5):3355–3368

Moradi L, Mohammadi F (2019) A comparative approach for time-delay fractional optimal control problems: discrete versus continuous Chebyshev polynomials. Asian J Control 21(6):1–13

Oldham KB, Spanier J (1974) The Fractional Calculus. Academic Press, New York

Peyghami MR, Hadizadeh M, Ebrahimzadeh A (2012) Some explicit class of hybrid methods for optimal control of Volterra Integral Equations. J Inform Comput Sci 7(4):253–266

Riordan J (1980) An Introduction to Combinatorial Analysis. Wiley, New York

Razzaghi M, Yousefi S (2001) Legendre wavelet method for the solution of nonlinear problems in the calculus of variations. Math Comput Model 34(1–2):45–54

Samko SG, Kilbas AA, Marichev OI (1993) Fractional integrals and derivatives: theory and applications. Gordon and Breach, Langhorne

Saadatmandi A, Dehghan M (2010) A new operational matrix for solving fractional-order differential equations. Comput Math Appl 59(3):1326–1336

Srivastava MH, Kumar D, Singh H (2017) An efficient analytical technique for fractional model of vibration equation. Appl Math Model 45:192–204

Tohidi E, Samadi ORN (2013) Optimal control of nonlinear Volterra integral equations via Legendre polynomials. IMA J Math Control Inform 30:67–83

Acknowledgements

The authors would like to thank the anonymous referee, whose useful and detailed comments helped improve the quality of the publication.

Funding

Open access funding provided by Università degli Studi di Salerno within the CRUI-CARE Agreement.


Additional information

Communicated by José Tenreiro Machado.


This work is supported by GNCS-INDAM project and by PRIN2017-MIUR project. The authors Dajana Conte, Leila Moradi, and Beatrice Paternoster are GNCS-INDAM members.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Moradi, L., Conte, D., Farsimadan, E. et al. Optimal control of system governed by nonlinear volterra integral and fractional derivative equations. Comp. Appl. Math. 40, 157 (2021). https://doi.org/10.1007/s40314-021-01541-3


DOI: https://doi.org/10.1007/s40314-021-01541-3

Keywords

- Riemann–Liouville integral

- Chelyshkov polynomials

- Volterra fractional integral equations

- Fractional calculus