Abstract

This study aims to develop a new matrix method as an alternative numerical method for solving high-order linear Fredholm integro-differential-difference equations with variable coefficients. The method is based on orthogonal Jacobi polynomials and collocation points. The improved Jacobi polynomial solution is obtained by adding the error estimation function to the basic Jacobi polynomial solution. A comparison of the results shows that the improved Jacobi polynomial solution is more accurate than the direct Jacobi polynomial solution and than some other known methods. The advantage of this method is that the Jacobi polynomials include the Legendre, Chebyshev, and Gegenbauer polynomials as special cases, so it is a comprehensive polynomial solution technique.

Introduction

Orthogonal Jacobi polynomials

Systems of polynomials remain a very active research area in mathematics, physics, engineering, and other applied sciences, and orthogonal polynomials are among the most thoroughly studied and widely applied of these systems [1–3]. Three of these systems, namely Hermite, Laguerre, and Jacobi, are collectively called the classical orthogonal polynomials [4]. There is an extensive literature on these polynomials, and the most comprehensive single account of the classical polynomials is found in the classical treatise of Szegö [5].

Jacobi polynomials are the common set of orthogonal polynomials defined by the formula [4]

Here, \(\alpha\) and \(\beta\) are parameters that, for integrability purposes, are restricted to \(\alpha > - 1, \beta > - 1\). However, many of the identities and other formal properties of these polynomials remain valid under the less restrictive condition that neither \(\alpha\) nor \(\beta\) is a negative integer. Among the many special cases, the following are the most important [4]:

(a) The Legendre polynomials \(\left( {\alpha = \beta = 0} \right)\)

(b) The Chebyshev polynomials \(\left( {\alpha = \beta = - 1/2} \right)\)

(c) The Gegenbauer (or ultraspherical) polynomials \(\left( {\alpha = \beta } \right)\)
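The special cases listed above can be checked numerically. The following is a minimal sketch, assuming the standard expansion of \(P_{n}^{(\alpha ,\beta )}(x)\) in powers of \((x - 1)/2\) with generalized binomial coefficients; this is a textbook identity and is not necessarily the exact form of the relation used later in the paper. The helper names `gen_binom` and `jacobi` are hypothetical.

```python
def gen_binom(z, k):
    """Generalized binomial coefficient C(z, k) for real z and integer k >= 0."""
    result = 1.0
    for i in range(1, k + 1):
        result *= (z - k + i) / i
    return result


def jacobi(n, alpha, beta, x):
    """Evaluate P_n^(alpha, beta)(x) from its expansion in powers of (x - 1)/2."""
    return sum(
        gen_binom(n + alpha, n - k)
        * gen_binom(n + alpha + beta + k, k)
        * ((x - 1) / 2) ** k
        for k in range(n + 1)
    )


x = 0.5
legendre_2 = (3 * x**2 - 1) / 2      # Legendre P_2(x)
chebyshev_2 = 2 * x**2 - 1           # Chebyshev T_2(x)

# alpha = beta = 0 reproduces the Legendre polynomial.
print(jacobi(2, 0.0, 0.0, x), legendre_2)
# alpha = beta = -1/2 reproduces T_2 up to the constant factor C(n - 1/2, n).
print(jacobi(2, -0.5, -0.5, x), gen_binom(1.5, 2) * chebyshev_2)
```

Each printed pair should agree to rounding error. The Chebyshev case matches only up to a normalization constant, since the Jacobi polynomials are normalized so that \(P_{n}^{(\alpha ,\beta )}(1) = \binom{n + \alpha }{n}\).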

The Jacobi polynomials \(P_{n}^{{\left( {\alpha ,\beta } \right)}} \left( x \right)\) are defined [6, 7] with respect to the weight function \(\omega^{\alpha ,\beta } \left( x \right) = \left( {1 - x} \right)^{\alpha } \left( {1 + x} \right)^{\beta } (\alpha > - 1,\beta > - 1)\) on \(\left( { - 1,1} \right)\). It is proved that the Jacobi polynomials satisfy the following relation [7]:

where

These polynomials play a role in rotation matrices [8], in the trigonometric Rosen–Morse potential [9], and in a few exactly solvable cases in quantum mechanics [10, 11].

In recent years, several researchers have developed new numerical algorithms based on Jacobi polynomials. Eslahchi et al. [12] gave a numerical solution for some nonlinear ordinary differential equations using the spectral method. Bojdi et al. [13] proposed a Jacobi matrix method for differential-difference equations with variable coefficients. Kazem [14] used the Tau method for solving fractional-order differential equations by means of Jacobi polynomials.

Recently, Bhrawy et al. [15–22] have used Jacobi polynomials in both the operational matrix method and the spectral collocation method for solving several classes of fractional differential equations, for instance, nonlinear sub-diffusion equations, delay fractional optimal control problems, time-fractional KdV equations, Caputo fractional diffusion-wave equations, the fractional nonlinear cable equation, and fractional differential equations.

Integro-differential-difference equations

Fredholm integro-differential-difference equations (FIDDEs) are encountered in many model problems in biology, physics, and engineering, and they have been investigated using a variety of methods [23–28]. Various numerical schemes for solving a partial integro-differential equation are presented by Dehghan [29].

In this study, we generate a procedure to find a Jacobi polynomial solution for the nth order linear FIDDE with variable coefficients

under mixed conditions

where \(P_{i} \left( x \right)\), \(Q_{j} \left( x \right)\), \(K\left( {x,t} \right)\), and \(g\left( x \right)\) are known functions and \(\alpha_{ki}\), \(\beta_{ki}\), \(\gamma_{ki}\), and \(\mu_{k}\) are appropriate constants, while \(y\left( x \right)\) is the unknown function. Note that \(\eta\), with \(a \le \eta \le b\), is a given point in the spatial domain of the problem.
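For orientation, the governing equation (4) can be pieced together from the differential-difference part and the Fredholm integral part quoted in the later subsections. The block below is a reconstruction under that assumption, not a verbatim copy of the original display; in particular, placing the integral on the right-hand side with a plus sign is a choice that happens to be consistent with the data of Example 1, whose stated exact solution \(y = 3x + 4\) satisfies it.

```latex
\sum_{i=0}^{n} P_{i}(x)\, y^{(i)}(x)
  + \sum_{j=0}^{m} Q_{j}(x)\, y^{(j)}(x-\tau)
  = g(x) + \int_{a}^{b} K(x,t)\, y(t-\tau)\, \mathrm{d}t ,
  \qquad a \le x \le b .
```

The mixed conditions (5) combine values of \(y^{(i)}\) at \(a\), \(b\), and \(\eta\) with the constants \(\alpha_{ki}\), \(\beta_{ki}\), \(\gamma_{ki}\) and right-hand sides \(\mu_{k}\); their exact layout is not reproduced here.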

The main aim of our study is to provide, using orthogonal Jacobi polynomials, an approximate solution of the problem (4, 5), for which analytical solutions are usually hard to find.

We assume a solution expressed as the truncated series of orthogonal Jacobi polynomials defined by

where \(P_{n}^{{\left( {\alpha ,\beta } \right)}} \left( x \right)\), \(n = 0, 1, \ldots , N\), denote the orthogonal Jacobi polynomials defined by (2, 3); \(N\) is chosen so that it is at least the order of the equation, and \(a_{n} , n = 0, 1, \ldots , N\), are the unknown coefficients to be determined. Note that \(\alpha\) and \(\beta\) are arbitrary parameters satisfying \(\alpha > - 1,\beta > - 1\).

Fundamental matrix relations

We can transform the orthogonal Jacobi polynomials \(P_{n}^{{\left( {\alpha ,\beta } \right)}} \left( x \right)\) from algebraic form into matrix form as follows:

where

and

such that

We write the solution \(y\left( x \right)\), defined by the truncated orthogonal Jacobi series (6), in matrix form as follows:

where

By substituting the matrix forms (7)–(11) of the Jacobi polynomials into (4, 5), we obtain the fundamental matrix equation for the approximate solution of the unknown function as

Matrix representation of differential-difference part of problem

The differential-difference part of the problem is \(\mathop \sum \nolimits_{i = 0}^{n} P_{i} \left( x \right)y^{(i)} \left( x \right) + \mathop \sum \nolimits_{j = 0}^{m} Q_{j} \left( x \right)y^{(j)} \left( {x - \tau } \right).\) First, to relate the matrix form of the unknown function to the matrix form of its derivatives \(y^{(i)} \left( x \right)\), we note that the relation between \({\mathbf{X}}\left( x \right)\) and its derivatives \({\mathbf{X}}^{\left( i \right)} \left( x \right)\) can be expressed as

where

Then, using (13) and (14), we may write

Similarly, the relation between the matrix form of the unknown function and the matrix form of the derivatives of its delayed argument, \(y^{(j)} \left( {x - \tau } \right)\), can be expressed as

where

Thus, it is seen that

Using (16) and (19), the matrix form of the differential-difference part of Eq. (4) becomes
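The two ingredients of this subsection, a matrix that differentiates the basis row vector and a matrix that shifts its argument by \(\tau\), can be illustrated with a small sketch. It assumes a plain power basis \({\mathbf{X}}(x) = [1, x, \ldots , x^{N}]\); the paper's own \({\mathbf{X}}(x)\) and its matrices are not reproduced here and may use a different basis (for example powers of \(x - 1\)). The names `diff_matrix`, `shift_matrix`, and `row_times` are hypothetical.

```python
from math import comb


def power_row(x, N):
    """Row vector X(x) = [1, x, ..., x^N] (assumed basis)."""
    return [x**k for k in range(N + 1)]


def diff_matrix(N):
    """Matrix B with X'(x) = X(x) B; the only nonzero entries are B[j-1][j] = j."""
    B = [[0] * (N + 1) for _ in range(N + 1)]
    for j in range(1, N + 1):
        B[j - 1][j] = j
    return B


def shift_matrix(tau, N):
    """Matrix S with X(x - tau) = X(x) S, from the binomial theorem."""
    return [
        [comb(j, i) * (-tau) ** (j - i) if i <= j else 0 for j in range(N + 1)]
        for i in range(N + 1)
    ]


def row_times(row, M):
    """Product of a row vector with a matrix."""
    return [sum(row[i] * M[i][j] for i in range(len(row))) for j in range(len(M[0]))]


N, x, tau = 3, 2, 1
B, S = diff_matrix(N), shift_matrix(tau, N)

print(row_times(power_row(x, N), B))                # X'(2)     -> [0, 1, 4, 12]
print(row_times(power_row(x, N), S))                # X(2 - 1)  -> [1, 1, 1, 1]
print(row_times(row_times(power_row(x, N), S), B))  # X'(2 - 1) -> [0, 1, 2, 3]
```

In this basis a delayed derivative is obtained by applying the shift first and the differentiation matrix afterwards, which is the same composition the text describes for \(y^{(j)}(x - \tau)\).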

Matrix representation of integral part of problem

The Fredholm integral part of the problem is \(\mathop \int \nolimits_{a}^{b} K\left( {x,t} \right)y\left( {t - \tau } \right){\text{d}}t\), where \(K\left( {x,t} \right)\) is the kernel function. This function can be written using the truncated Taylor series [30] and the truncated orthogonal Jacobi series, respectively, as

and

where

is the Taylor coefficient and \(k_{mn}^{J}\) is the Jacobi coefficient. The expressions (21) and (22) can be written using matrix forms of the Jacobi polynomials, respectively, as

The following relation can be obtained from Eqs. (7), (23), and (24),

By substituting Eqs. (19) and (25) into \(\mathop \int \nolimits_{a}^{b} K\left( {x,t} \right)y\left( {t - \tau } \right){\text{d}}t\), we derive the matrix relation

such that

where

Finally, substituting the form (7) into expression (26) yields the matrix relation

Matrix representation of conditions

In this section, we write the mixed conditions of the problem given in Eq. (5) in matrix form, using the matrix relation (16), as

Method of solution

We substitute the matrix relations (20) and (27) obtained in the previous subsections into the problem to build its fundamental matrix equation. For this purpose, we define the collocation points as follows:

As can be observed, standard collocation points dividing the domain interval \([a,b]\) of the problem into \(N\) equal parts are employed.
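As a small illustration of such a grid, the sketch below generates \(N + 1\) equally spaced points on \([a, b]\). The formula \(x_{s} = a + (b - a)s/N\) with \(s = 0, 1, \ldots , N\) is an assumption inferred from the description above (the original display is not reproduced here), and the function name `collocation_points` is hypothetical.

```python
from fractions import Fraction


def collocation_points(a, b, N):
    """Equally spaced points x_s = a + (b - a) * s / N for s = 0, ..., N."""
    a, b = Fraction(a), Fraction(b)
    return [a + (b - a) * s / N for s in range(N + 1)]


# Interval and N as in Example 1: a = -1, b = 1, N = 2.
print(collocation_points(-1, 1, 2))
```

Exact rational arithmetic is used only so that the points carry no rounding error; floating-point values would serve equally well in practice.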

Accordingly, we obtain the system of matrix equations

The fundamental matrix equation becomes

where

Equation (29), which is the matrix representation of Eq. (4), corresponds to a system of \(N + 1\) algebraic equations in the \(N + 1\) unknown coefficients \(a_{0} , a_{1} , a_{2} , \ldots , a_{N}\). Briefly, if we define

then, with this definition of \({\mathbf{W}}\), Eq. (29) takes the augmented matrix form

Similarly, from (28), the matrix form of mixed conditions can be obtained briefly as

such that

Consequently, to find the Jacobi polynomial solution of Eq. (4) under the mixed conditions (5), we replace the last \(n\) rows of the augmented matrix (30) by the row matrices (31), which yields the new matrix equation

If \({\text{rank}}\;{\tilde{\mathbf{W}}} = {\text{rank}}\left[ {{\tilde{\mathbf{W}}};{\tilde{\mathbf{G}}}} \right] = N + 1\), then we can find the matrix of unknown coefficients of the Jacobi series via \({\mathbf{A}} = \left( {{\tilde{\mathbf{W}}}} \right)^{ - 1} {\tilde{\mathbf{G}}}\). Note that the matrix \({\mathbf{A}}\) (and thereby the coefficients \(a_{0} , a_{1} , a_{2} , \ldots , a_{N}\)) is uniquely determined [22]. Equation (4) then also has a unique solution under the conditions (5). Thus, we get the Jacobi polynomial solution for arbitrary parameters \(\alpha\) and \(\beta\):

Error analysis

In this part of the study, we give a useful error estimation procedure for the orthogonal Jacobi polynomial solution of the problem. This procedure is also used to obtain an improved solution of the problem (4, 5) from the direct Jacobi polynomial solution. For this purpose, we use the residual correction technique [31, 32] and error estimation by the known Tau method [33, 34].

Recently, Yüzbaşı and Sezer [35] solved a class of Lane–Emden equations using the improved BCM with a residual error function. Yüzbaşı et al. [36] proposed an improved Legendre method to obtain approximate solutions of a class of integro-differential equations. Wei and Chen [37] presented spectral methods for classes of Volterra-type integro-differential equations with weakly singular kernels and smooth solutions.

To calculate the corrected solution, we now define the residual function using the Jacobi polynomial solution obtained by our method as

where \(y_{N}^{{\left( {\alpha ,\beta } \right)}} \left( x \right)\) is the approximate solution of Eqs. (4, 5) for arbitrary parameters \(\alpha\) and \(\beta\). Hence, \(y_{N}^{{\left( {\alpha ,\beta } \right)}} \left( x \right)\) satisfies the problem

The error function \(e_{N} \left( x \right)\) can also be defined as

where \(y\left( x \right)\) is the exact solution of Eqs. (4, 5). Substituting (35) into (4, 5) and also using (33) and (34), we derive the error differential equation with homogeneous conditions:

By solving the problem (36) using the method given in the previous section, we obtain the estimate \(e_{N,M}^{{\left( {\alpha ,\beta } \right)}} \left( x \right)\) of the error function \(e_{N}^{{\left( {\alpha ,\beta } \right)}} \left( x \right)\). Note that \(M\) must be greater than \(N\) and that the error estimate is found using the residual function \(R_{N} \left( x \right)\). Consequently, from the Jacobi polynomial solution \(y_{N}^{{\left( {\alpha ,\beta } \right)}} \left( x \right)\) and the error estimate \(e_{N,M}^{{\left( {\alpha ,\beta } \right)}} \left( x \right)\), we obtain the corrected Jacobi solution

Finally, we construct the Jacobi error function \(e_{N}^{{\left( {\alpha ,\beta } \right)}} \left( x \right)\) and the corrected Jacobi error function \(E_{N,M}^{(\alpha ,\beta )} \left( x \right)\)
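The chain of definitions in this section can be summarized in a few lines. The block below is a sketch that relies only on the linearity of the problem; the operator symbol \(L\) is introduced here for brevity, and the sign convention chosen for the residual \(R_{N}\) is an assumption, since the original displays (33)–(39) are not reproduced here.

```latex
\begin{aligned}
L[y](x) &:= \sum_{i=0}^{n} P_{i}(x)\,y^{(i)}(x)
          + \sum_{j=0}^{m} Q_{j}(x)\,y^{(j)}(x-\tau)
          - \int_{a}^{b} K(x,t)\,y(t-\tau)\,\mathrm{d}t,
          \qquad L[y] = g, \\
R_{N}(x) &:= L\big[y_{N}^{(\alpha,\beta)}\big](x) - g(x), \\
e_{N}^{(\alpha,\beta)}(x) &:= y(x) - y_{N}^{(\alpha,\beta)}(x)
  \;\Longrightarrow\;
  L\big[e_{N}^{(\alpha,\beta)}\big](x) = -R_{N}(x)
  \quad\text{with homogeneous conditions}, \\
y_{N,M}^{(\alpha,\beta)}(x) &:= y_{N}^{(\alpha,\beta)}(x) + e_{N,M}^{(\alpha,\beta)}(x), \\
E_{N,M}^{(\alpha,\beta)}(x) &:= y(x) - y_{N,M}^{(\alpha,\beta)}(x)
  = e_{N}^{(\alpha,\beta)}(x) - e_{N,M}^{(\alpha,\beta)}(x).
\end{aligned}
```

The last line shows why the correction helps when \(e_{N,M}^{(\alpha,\beta)}\) approximates \(e_{N}^{(\alpha,\beta)}\) well: the corrected error is exactly the error of the error estimate.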

Illustrative examples

We apply the Jacobi matrix method to five examples via the symbolic computation program Maple [38]. In these examples, \(\left| {e_{N}^{{\left( {\alpha ,\beta } \right)}} \left( x \right)} \right|\) represents the absolute error function and \(\left| {E_{N,M}^{{\left( {\alpha ,\beta } \right)}} \left( x \right)} \right|\) represents the absolute error function of the corrected Jacobi polynomial solution.

Example 1 As the first example, we consider the FIDDE [23, 24]

with initial condition

Here, \(m = 1, \tau = 1, P_{1} \left( x \right) = 1, P_{0} \left( x \right) = - 1, g\left( x \right) = x - 2, Q_{1} \left( x \right) = x, Q_{0} \left( x \right) = 1, K\left( {x,t} \right) = x + t, a = - 1, b = 1, \eta = 0, \alpha_{00} = 1, \beta_{00} = - 2,\gamma_{00} = 1, \mu_{0} = 0\). We assume that Eq. (40) has a Jacobi polynomial solution of the following form,

where \(N = 2\) and \(\left( {\alpha ,\beta } \right) = \left( {0.5, - 0.5} \right)\), which are chosen arbitrarily; then, according to (8)

The collocation points are computed as

and from Eq. (30), the matrix equation of the Eq. (40) is

where

Hence, we obtain the matrix \({\mathbf{W}}\) as follows

Using Eq. (31), we can write the matrix equation of the condition of the problem as

Consequently, to find the Jacobi polynomial solution of the problem under the mixed conditions, we replace the last row of the matrix \(\left[ {{\mathbf{W}};{\mathbf{G}}} \right]\) by the row matrix \(\left[ {{\mathbf{U}};\lambda } \right]\) and obtain the matrix \(\left[ {{\tilde{\mathbf{W}}};{\tilde{\mathbf{G}}}} \right]\) as

Solving the new augmented matrix \(\left[ {{\tilde{\mathbf{W}}};{\tilde{\mathbf{G}}}} \right]\), we obtain the Jacobi polynomial coefficient matrix

From Eq. (11), the Jacobi polynomial solution of the problem is \(y_{2}^{{\left( {0.5, - 0.5} \right)}} \left( x \right) = 3x + 4\), which is the exact solution of the problem. Furthermore, we can obtain the exact solution of the problem for any value of \(N\) and corresponding suitable values of \(\left( {\alpha ,\beta } \right)\).
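The result of Example 1 can be cross-checked independently with a short collocation sketch. It is not the paper's Maple computation: it uses the power basis \(1, x, x^{2}\) instead of Jacobi polynomials (for \(N = 2\) both span the same polynomial space), it assumes that Eq. (40) has the form \(y'(x) - y(x) + x\,y'(x - 1) + y(x - 1) = x - 2 + \int_{-1}^{1} (x + t)\,y(t - 1)\,{\text{d}}t\) implied by the parameter list above, and it assumes the condition \(y(-1) - 2y(0) + y(1) = 0\), which is one reading of the constants \(1, -2, 1\) that the stated exact solution satisfies. All function names are hypothetical.

```python
from fractions import Fraction as F

# Data read off the parameter list of Example 1 (equation form and condition are assumptions).
N = 2
a, b, tau, eta = F(-1), F(1), F(1), F(0)


def dpow(n, x):
    """Derivative of x**n, evaluated at x."""
    return F(0) if n == 0 else n * x ** (n - 1)


def kernel_integral(n, x):
    """Exact integral over [a, b] of (x + t) * (t - tau)**n dt, using u = t - tau."""
    lo, hi = a - tau, b - tau
    m0 = (hi ** (n + 1) - lo ** (n + 1)) / (n + 1)   # integral of u**n
    m1 = (hi ** (n + 2) - lo ** (n + 2)) / (n + 2)   # integral of u**(n+1)
    return (x + tau) * m0 + m1


def row(x):
    """Left side minus integral term, applied to each basis function x**n at x."""
    return [dpow(n, x) - x ** n                        # y'(x) - y(x)
            + x * dpow(n, x - tau) + (x - tau) ** n    # x*y'(x - 1) + y(x - 1)
            - kernel_integral(n, x)                    # minus the Fredholm term
            for n in range(N + 1)]


def solve(M, rhs):
    """Gauss-Jordan elimination in exact rational arithmetic."""
    size = len(M)
    A = [r[:] + [v] for r, v in zip(M, rhs)]
    for col in range(size):
        piv = next(r for r in range(col, size) if A[r][col] != 0)
        A[col], A[piv] = A[piv], A[col]
        A[col] = [v / A[col][col] for v in A[col]]
        for r in range(size):
            if r != col:
                factor = A[r][col]
                A[r] = [vr - factor * vc for vr, vc in zip(A[r], A[col])]
    return [A[r][size] for r in range(size)]


points = [a + (b - a) * s / N for s in range(N + 1)]   # -1, 0, 1
W = [row(x) for x in points]
G = [x - 2 for x in points]                            # g(x) = x - 2

# Replace the last row by the assumed condition y(-1) - 2*y(0) + y(1) = 0.
W[-1] = [a ** n - 2 * eta ** n + b ** n for n in range(N + 1)]
G[-1] = F(0)

coeffs = solve(W, G)   # coefficients of 1, x, x**2
print(coeffs)
```

Under these assumptions the coefficients come out as 4, 3, 0, that is \(y(x) = 3x + 4\), in agreement with the exact solution reported above.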

Example 2 We consider a third-order FIDDE with variable coefficients

with the initial conditions \(y\left( 0 \right) = 0, y^{'} \left( 0 \right) = 1, y^{''} \left( 0 \right) = 0\), where \(g\left( x \right) = - \left( {x + 1} \right)\left( {\sin \left( {x - 1} \right) + \cos \left( x \right)} \right) - \cos \left( 2 \right) + 1\). The exact solution of the problem is \(y\left( x \right) = \sin \left( x \right).\)

After several trials, it was found that \(\left( {\alpha ,\beta } \right) = \left( { - 0.4, 0.5} \right)\) gives the most accurate result; the approximate solution of the third-order FIDDE was therefore derived with these values as \(y_{6}^{{\left( { - 0.4,0.5} \right)}} \left( x \right) = 0.2993926085 + 0.5377164192x - 0.4041110104\left( {x - 1} \right)^{2} - 0.6837651331 \times 10^{ - 1} \left( {x - 1} \right)^{3} + 0.4006530189 \times 10^{ - 1} \left( {x - 1} \right)^{4} + 0.2888171229 \times 10^{ - 2} \left( {x - 1} \right)^{5} - 0.8352418987 \times 10^{ - 3} \left( {x - 1} \right)^{6}\), and the estimated error function is \(e_{6,7}^{{\left( { - 0.4,0.5} \right)}} \left( x \right) = 0.585645023 \times 10^{ - 3} + 0.460274411 \times 10^{ - 2} x - 0.1546818100 \times 10^{ - 1} \left( {x - 1} \right)^{2} - 0.2185948038 \times 10^{ - 1} \left( {x - 1} \right)^{3} - 0.4716294762 \times 10^{ - 2} \left( {x - 1} \right)^{4} + 0.2241198695 \times 10^{ - 2} \left( {x - 1} \right)^{5} - 0.1679942991 \times 10^{ - 3} \left( {x - 1} \right)^{6} - 0.1485433260 \times 10^{ - 3} \left( {x - 1} \right)^{7}\). Then, we calculate the corrected solution simply as the sum of the approximate solution and the estimated error function

Table 1 shows the absolute error function \(\left| {e_{6}^{{\left( { - 0.4,0.5} \right)}} \left( x \right)} \right|\) and the corrected absolute error function \(\left| {E_{6,7}^{{\left( { - 0.4,0.5} \right)}} \left( x \right)} \right|\) for this example.

Now, we determine the maximum error for \(y_{N}^{{\left( {\alpha ,\beta } \right)}} \left( x \right)\) as,

The maximum errors \(E_{N}^{{\left( {\alpha ,\beta } \right)}}\) for different values of \(N\) are given in Table 2, and it is seen that the error decreases continually as \(N\) increases.

The maximum error for the corrected Jacobi polynomial solution (37) is calculated in a similar way,

and the results are shown in Table 3 for various values of \(N\) and \(M\). The maximum error clearly decreases as \(M\) increases.
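The two maximum errors can be approximated by sampling. The sketch below evaluates the polynomials \(y_{6}^{(-0.4,0.5)}\) and \(e_{6,7}^{(-0.4,0.5)}\) reported above against the exact solution \(\sin (x)\) on a uniform grid. The interval \([-1, 1]\) is an assumption (it is suggested by the term \(-\cos (2) + 1\) in \(g(x)\), but the problem statement is not reproduced here), a grid maximum only approximates the true maximum, and the helper `max_abs_error` is hypothetical.

```python
import math


def y6(x):
    """Reported approximate solution y_6 for (alpha, beta) = (-0.4, 0.5)."""
    u = x - 1.0
    return (0.2993926085 + 0.5377164192 * x - 0.4041110104 * u**2
            - 0.6837651331e-1 * u**3 + 0.4006530189e-1 * u**4
            + 0.2888171229e-2 * u**5 - 0.8352418987e-3 * u**6)


def e67(x):
    """Reported estimated error function e_{6,7}."""
    u = x - 1.0
    return (0.585645023e-3 + 0.460274411e-2 * x - 0.1546818100e-1 * u**2
            - 0.2185948038e-1 * u**3 - 0.4716294762e-2 * u**4
            + 0.2241198695e-2 * u**5 - 0.1679942991e-3 * u**6
            - 0.1485433260e-3 * u**7)


def corrected(x):
    """Corrected solution: approximate solution plus estimated error."""
    return y6(x) + e67(x)


def max_abs_error(approx, exact, a, b, samples=201):
    """Largest |exact - approx| over a uniform grid on [a, b]."""
    grid = [a + (b - a) * k / (samples - 1) for k in range(samples)]
    return max(abs(exact(x) - approx(x)) for x in grid)


E_direct = max_abs_error(y6, math.sin, -1.0, 1.0)            # assumed interval
E_corrected = max_abs_error(corrected, math.sin, -1.0, 1.0)  # assumed interval
print(E_direct, E_corrected)
```

Comparing the two printed values gives a quick sanity check of the correction step without access to the tables.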

Finally, the third-order FIDDE has also been solved using Legendre, Gegenbauer (also Chebyshev), and Jacobi polynomials, for comparison purposes. The maximum error values are given in Table 4, and it is seen that Jacobi-based solution gives slightly better results.

Example 3 The third example is a second-order FIDE [24–26] with variable coefficients

under the boundary conditions

Here, \(P_{2} \left( x \right) = 1, P_{1} \left( x \right) = 4x, P_{0} \left( x \right) = 0, Q_{0} \left( x \right) = 0, g\left( x \right) = - 8x^{4} /\left( {x^{2} + 1} \right)^{3} , \alpha = 0, \beta = 0, K\left( {x,t} \right) = - 2\left( {t^{2} + 1} \right)/\left( {x^{2} + 1} \right)^{2} , \tau = 0, a = 0\).

The exact solution of this problem is \(\left( {x^{2} + 1} \right)^{ - 1}\).

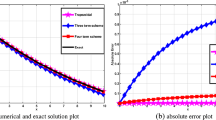

Figure 1 compares the Jacobi polynomial solution \(y_{N}^{{\left( {0,0} \right)}} \left( x \right)\) and the corrected Jacobi polynomial solution \(y_{N,M}^{{\left( {0,0} \right)}} \left( x \right)\), for \(\left( {N,M} \right) = \left( {5,6} \right)\) and \((\alpha = \beta = 0)\), with the exact solution \(y(x)\). It is clearly seen that the corrected Jacobi polynomial solution almost coincides with the exact solution.

Table 5 and Fig. 2 compare the absolute error with the corrected absolute errors, for \(N = 5, 8\) and \(M = 6, 7, 8,9\). The parameters are taken as \((\alpha = \beta = 0)\). The corrected solutions are then corrected once more, and the last two columns show the resulting errors. Successive corrections tend to decrease the absolute error.

Example 4 [25, 26] The last example is the second-order Fredholm integro-differential equation

with conditions

The exact solution of problem is

Taking \((\alpha = 0.4, \beta = 0.5)\), the absolute errors of Jacobi polynomial solution for \(N = 7\) and the absolute errors of the improved Jacobi polynomial solution for \(N = 7, M = 8\) are compared with those of the wavelet Galerkin, the wavelet collocation, and the Chebyshev finite difference (ChFD) methods [25, 26], in Table 6. Considering the errors of the different methods, it is observed that the smallest errors are obtained using the improved Jacobi polynomial solution.

Example 5 Consider the first-order linear FIDDE [39]

We assume that the problem has a Jacobi polynomial solution in the form

where \(N = 3\) and \(\left( {\alpha ,\beta } \right) = \left( {0.2, - 0.3} \right)\), which are chosen arbitrarily. Using the method described above, the Jacobi polynomial solution of the problem is obtained as \(y_{3}^{{\left( {0.2, - 0.3} \right)}} \left( x \right) = x - x^{3}\), which is the exact solution of the problem [39]. Furthermore, we can obtain the exact solution of the problem for any value of \(N \ge 3\) and corresponding suitable values of \(\left( {\alpha ,\beta } \right)\).

Conclusions

A new matrix method based on Jacobi polynomials and collocation points has been introduced to solve high-order linear FIDDE with variable coefficients. Jacobi polynomials are the common set of orthogonal polynomials, which are the most extensively studied and widely applied systems. The solution of the FIDDE is expressed as a truncated series of orthogonal Jacobi polynomials, which is then transformed from algebraic form into matrix form. The problem and the mixed conditions are also represented in matrix form. Finally, the solution is obtained as a truncated Jacobi series written in matrix form using collocation points. A new error estimation procedure for polynomial solution and a technique to find a high accuracy solution are developed.

Most of the previous studies dealt with solutions using Legendre, Chebyshev, and Gegenbauer polynomials. In this study, however, we have proposed a Jacobi polynomial solution that comprises all of these polynomial solutions.

The new Jacobi matrix method has been applied to five illustrative examples. These examples show that the method yields either the exact solution or a highly accurate approximate solution for delay integro-differential equation problems. The accuracy of the approximate solution can be increased using the proposed error analysis technique based on the residual function.

References

1. Coolen-Schrijner, P., Van Doorn, E.A.: Analysis of random walks using orthogonal polynomials. J. Comput. Appl. Math. 99, 387–399 (1998)

2. Fischer, B., Prestin, J.: Wavelets based on orthogonal polynomials. Math. Comp. 66, 1593–1618 (1997)

3. El-Mikkawy, M.E.A., Cheon, G.S.: Combinatorial and hypergeometric identities via the Legendre polynomials—a computational approach. Appl. Math. Comput. 166, 181–195 (2005)

4. Chihara, T.S.: An Introduction to Orthogonal Polynomials. Gordon and Breach, Philadelphia (1978)

5. Szegö, G.: Orthogonal Polynomials, vol. 23. American Mathematical Society, Colloquium Publications, New York (1939)

6. Grünbaum, F.A.: Matrix valued Jacobi polynomials. Bull. Sci. Math. 127, 207–214 (2003)

7. Eslahchi, M.R., Dehghan, M.: Application of Taylor series in obtaining the orthogonal operational matrix. Comput. Math. Appl. 61, 2596–2604 (2011)

8. Rose, M.E.: Elementary Theory of Angular Momentum. Wiley, Oxford (1957)

9. Bijker, R., et al.: Latin-American School of Physics: XXXV ELAF: Supersymmetry and Its Applications in Physics. AIP Conf. Series 744 (2004)

10. Weber, H.J.: A simple approach to Jacobi polynomials. Integr. Transf. Spec. Funct. 18, 217–221 (2007)

11. De, R., Dutt, R., Sukhatme, U.: Mapping of shape invariant potentials under point canonical transformations. J. Phys. A Math. Gen. 25, 843–850 (1992)

12. Eslahchi, M.R., Dehghan, M., Ahmadi-Asl, S.: The general Jacobi matrix method for solving some nonlinear ordinary differential equations. Appl. Math. Model. 36, 3387–3398 (2012)

13. Kalateh Bojdi, Z., Ahmadi-Asl, S., Aminataei, A.: The general shifted Jacobi matrix method for solving the general high order linear differential-difference equations with variable coefficients. J. Math. Res. Appl. 1, 10–23 (2013)

14. Kazem, S.: An integral operational matrix based on Jacobi polynomials for solving fractional-order differential equations. Appl. Math. Model. 37, 1126–1136 (2013)

15. Bhrawy, A.H., Taha, M., José, A.T.M.: A review of operational matrices and spectral techniques for fractional calculus. Nonlinear Dyn. 81(3), 1023–1052 (2015)

16. Bhrawy, A.H.: A Jacobi spectral collocation method for solving multi-dimensional nonlinear fractional sub-diffusion equations. Numer. Algorithms 1–23 (2015). doi:10.1007/s11075-015-0087-2

17. Bhrawy, A.H., Alofi, A.S.: The operational matrix of fractional integration for shifted Chebyshev polynomials. Appl. Math. Lett. 26(1), 25–31 (2013)

18. Bhrawy, A.H., Ezz-Eldien, S.S.: A new Legendre operational technique for delay fractional optimal control problems. Calcolo 1–23 (2015). doi:10.1007/s10092-015-0160-1

19. Bhrawy, A.H., et al.: A numerical technique based on the shifted Legendre polynomials for solving the time-fractional coupled KdV equations. Calcolo 1–17 (2015). doi:10.1007/s10092-014-0132-x

20. Bhrawy, A.H., Zaky, M.A., Van Gorder, R.A.: A space-time Legendre spectral tau method for the two-sided space-time Caputo fractional diffusion-wave equation. Numer. Algorithms 71(1), 151–180 (2016)

21. Bhrawy, A.H., Zaky, M.A.: Numerical simulation for two-dimensional variable-order fractional nonlinear cable equation. Nonlinear Dyn. 80(1-2), 101–116 (2015)

22. Doha, E.H., Bhrawy, A.H., Ezz-Eldien, S.S.: A new Jacobi operational matrix: an application for solving fractional differential equations. Appl. Math. Model. 36(10), 4931–4943 (2012)

23. Gülsu, M., Sezer, M.: Approximations to the solution of linear Fredholm integro differential-difference equation of high order. J. Franklin Inst. 343, 720–737 (2006)

24. Saadatmandi, A., Dehghan, M.: Numerical solution of the higher-order linear Fredholm integro-differential-difference equation with variable coefficients. Comput. Math. Appl. 59, 2996–3004 (2010)

25. Dehghan, M., Saadatmandi, A.: Chebyshev finite difference method for Fredholm integro-differential equations. Int. J. Comput. Math. 85, 123–130 (2008)

26. Behiry, S.H., Hashish, H.: Wavelet methods for the numerical solution of Fredholm integro-differential equations. Int. J. Appl. Math. 11, 27–36 (2002)

27. Şahin, N., Yüzbaşi, Ş., Sezer, M.: A Bessel polynomial approach for solving general linear Fredholm integro-differential-difference equations. Int. J. Comput. Math. 88, 3093–3111 (2011)

28. Kurt, A., Yalçinbaş, S., Sezer, M.: Fibonacci collocation method for solving high-order linear Fredholm integro-differential-difference equations. Int. J. Math. Math. Sci. 1–9 (2013). doi:10.1155/2013/486013

29. Dehghan, M.: Solution of a partial integro-differential equation arising from viscoelasticity. Int. J. Comput. Math. 83, 123–129 (2006)

30. Kurt, N., Sezer, M.: Polynomial solution of high-order linear Fredholm integro-differential equations with constant coefficients. J. Franklin Inst. 345, 839–850 (2008)

31. Oliveira, F.A.: Collocation and residual correction. Numer. Math. 36, 27–31 (1980)

32. Çelik, İ.: Collocation method and residual correction using Chebyshev series. Appl. Math. Comput. 174, 910–920 (2006)

33. Pour-Mahmoud, J., Rahimi-Ardabili, M.Y., Shahmorad, S.: Numerical solution of the system of Fredholm integro-differential equations by the Tau method. Appl. Math. Comput. 168, 465–478 (2005)

34. Shahmorad, S.: Numerical solution of the general form linear Fredholm-Volterra integro-differential equations by the Tau method with an error estimation. Appl. Math. Comput. 167, 1418–1429 (2005)

35. Yüzbaşi, Ş., Sezer, M.: An improved Bessel collocation method with a residual error function to solve a class of Lane-Emden differential equations. Math. Comput. Model. 57, 1298–1311 (2013)

36. Yüzbaşi, Ş., Sezer, M., Kemanci, B.: Numerical solutions of integro-differential equations and application of a population model with an improved Legendre method. Appl. Math. Model. 37, 2086–2101 (2013)

37. Wei, Y., Chen, Y.: Convergence analysis of the spectral methods for weakly singular Volterra integro-differential equations with smooth solutions. Adv. Appl. Math. Mech. 4, 1–20 (2012)

38. Maple 11 User Manual, Waterloo Maple Inc. http://www.maplesoft.com/view.aspx?sl=5883 (2007). Accessed 1 Jun 2016

39. Abdelkawy, M.A., Mohamed, A., Ezz-Eldien, S.S., Ahmad, Z.M.A.: A Jacobi spectral collocation scheme for solving Abel’s integral equations. Progr. Fract. Differ. Appl. 1(3), 1–14 (2015)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Bahşı, M.M., Kurt Bahşı, A., Çevik, M. et al. Improved Jacobi matrix method for the numerical solution of Fredholm integro-differential-difference equations. Math Sci 10, 83–93 (2016). https://doi.org/10.1007/s40096-016-0181-1

DOI: https://doi.org/10.1007/s40096-016-0181-1