Abstract

In this article we present a way of treating stochastic partial differential equations with multiplicative noise by rewriting them as stochastically perturbed evolutionary equations in the sense of Picard and McGhee (Partial differential equations: a unified Hilbert space approach, DeGruyter, Berlin, 2011), where a general solution theory for deterministic evolutionary equations has been developed. This allows us to present a unified solution theory for a general class of stochastic partial differential equations (SPDEs), which we believe has great potential for further generalizations. We show that many standard stochastic PDEs fit into this class, as well as many other SPDEs such as the stochastic Maxwell equation and time-fractional stochastic PDEs with multiplicative noise on sub-domains of \({\mathbb {R}^d}\). The approach is similar in spirit to the approach in Da Prato and Zabczyk (Stochastic equations in infinite dimensions, Cambridge University Press, Cambridge, 2008), but complements it in the sense that it does not involve semi-group theory and allows for an effective treatment of coupled systems of SPDEs. In particular, the existence of a (regular) fundamental solution or Green’s function is not required.


1 Introduction

The study of stochastic partial differential equations (SPDEs) has attracted a lot of interest in recent years, and a wide range of equations has already been investigated. A common theme in the study of these equations is to attack the problem of existence and uniqueness of solutions to SPDEs by taking solution approaches from the deterministic setting of PDEs and applying them to a setting that involves a stochastic perturbation. Examples of this are the random-field approach, which uses the fundamental solution of the associated PDE [5, 7, 39]; the semi-group approach, which treats evolution equations in Hilbert/Banach spaces via the semi-group generated by the differential operator of the associated PDE, see [8] or [20, 21] for a treatise; and the variational approach, which evaluates the SPDE against test functions and corresponds to the concept of weak solutions of PDEs, see [32, 34, 36].

In this article we aim to transfer yet another solution concept for PDEs to the case where the right-hand side of the PDE is perturbed by a stochastic noise term. This solution concept, see [26] for a comprehensive study and [28, 37, 42] for possible generalizations, is operator-theoretic in nature and takes place in an abstract Hilbert space setting. Its key features are the realization of the time-derivative operator as a normal, continuously invertible operator on an appropriate Hilbert space and a positive definiteness constraint on the partial differential operator of the PDE (realized as an operator in space–time). In fact, this solution theory is a general recipe for solving first-order (in time and space) systems of coupled equations; a higher-order (S)PDE is first reduced to such a first-order system. In this sense, the solution theory we apply is similar in spirit to the treatment of hyperbolic equations in [13, 19], see also [2] for an application to SPDEs.

We now describe the class of SPDEs we investigate with this approach. Throughout this article, let H be a Hilbert space, which we think of as the underlying space of our investigation. We assume A to be a skew-self-adjoint, unbounded linear operator on H (i.e. \(\mathrm {i}A\) is a self-adjoint operator on H), which is thought of as containing the spatial derivatives. Furthermore, we denote by \(\partial _0\) the time-derivative operator, which will be constructed as a normal and continuously invertible operator in Sect. 2.1. In particular, it can be shown that the spectrum of \(\partial _0^{-1}\) is contained in a ball in the right half plane touching \(0\in {\mathbb {C}}\). For some \(r>0\), let \(M:B(r,r)\rightarrow L(H)\) be an analytic function, where B(r, r) is the open ball in \({\mathbb {C}}\) with radius r centered at r, and L(H) denotes the set of bounded linear operators on H. Then one can define, via a functional calculus specified below, the linear operator \(M(\partial _0^{-1})\) as a function of the inverse operator \(\partial _0^{-1}\). The idea is to use the Fourier–Laplace transform as an explicit spectral representation of \(\partial _0\) as a multiplication operator, which yields a functional calculus for both \(\partial _0\) and its inverse. The operator \(M(\partial _0^{-1})\) couples the equations in the first-order system; in applications, it also contains the information about the ‘constitutive relations’ or the ‘material law’.

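Throughout this article we consider the following (formal) system of coupled SPDEs

$$\bigl(\partial _0M(\partial _0^{-1})+A\bigr)u=B(u)+\int _0^{t}\sigma (u(s))\,\mathrm {d}W(s) \tag{1.1}$$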
subject to suitable initial conditions, where u(t) takes values in a Hilbert space H, \(\sigma \) and B are Lipschitz-continuous (in suitable norms) functions and W is a cylindrical G-valued Wiener process for some separable Hilbert space G (possibly different from H). Although (1.1) seems to represent first-order equations only, it can also handle, for instance, the wave and heat equations, see below. Moreover, note that \(M(\partial _0^{-1})\) is an operator acting in space–time and \(\partial _0M(\partial _0^{-1})\) is the composition of time differentiation and the application of the operator \(M(\partial _0^{-1})\).

We emphasize that in the formulation (1.1), A does not take the form usual for stochastic evolution equations as, for instance, in [8]. Furthermore, (1.1) should not be thought of as having a structure similar to the problems discussed in [11, 22]. In fact, the coercivity is encoded in \(M(\partial _0^{-1})\) rather than in A.

In Eq. (1.1) possible boundary conditions are encoded in the domain of the (partial differential) operator A. The way of dealing with this issue will also be further specified below. The main achievement of this article is the development of a suitable functional analytic setting for the class of equations (1.1), which allows us to discuss well-posedness issues of this class of equations, that is, existence, uniqueness and continuous dependence of solutions on the input data.

We now comment on an unfamiliar piece of notation in Eq. (1.1). In (1.1), a stochastic integral appears on the right-hand side of the equation instead of the more familiar formal product \(\sigma (u(t))\dot{W}(t)\). We stress that we are not aiming at solving a different class of equations; in fact, we deal with a more general formulation of the common way of writing an SPDE. Let us illustrate this point with two common examples, the stochastic heat equation and the stochastic wave equation. The former is usually expressed in the classical formulation as

$$\mathrm {d}u(t)=\bigl(\Delta u(t)+b(u(t))\bigr)\,\mathrm {d}t+\sigma (u(t))\,\mathrm {d}W(t)$$

or, formally dividing by dt,

$$\partial _0u=\Delta u+b(u)+\sigma (u)\dot{W},$$
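where \(\Delta =\mathrm {div}{{\mathrm{grad}}}\) is the Laplacian on the Euclidean space \(\mathbb {R}^d\) with \(d\in \mathbb {N}\), and \(b,\sigma \) are linear or nonlinear mappings on some Hilbert space, for instance some \(L^2\)-space over \(\mathbb {R}^d\). See [8, Chap. 7] for more details on this formulation. We can reformulate this equation as a first-order system using the formal definition \(v:=-{{\mathrm{grad}}}\partial _0^{-1}u\), where \(\partial _0^{-1}\) denotes the inverse of the time derivative operator briefly mentioned above. Then the stochastic heat equation becomes

$$\left( \partial _0\begin{pmatrix}0&0\\0&1\end{pmatrix}+\begin{pmatrix}1&0\\0&0\end{pmatrix}+\begin{pmatrix}0&\mathrm {div}\\{{\mathrm{grad}}}&0\end{pmatrix}\right) \begin{pmatrix}u\\v\end{pmatrix}=\begin{pmatrix}\partial _0^{-1}b(u)+\partial _0^{-1}\bigl(\sigma (u)\dot{W}\bigr)\\0\end{pmatrix}.$$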
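Thus, with \((\partial _0^{-1}b(u),0)=B(u)\) and if we interpret the term \(\partial _0^{-1}(\sigma (u)\dot{W})\) as a stochastic integral, we immediately arrive at (1.1). So the operator-valued function M and the operator A in (1.1) are, respectively,

$$M(\partial _0^{-1})=\begin{pmatrix}0&0\\0&1\end{pmatrix}+\partial _0^{-1}\begin{pmatrix}1&0\\0&0\end{pmatrix},\qquad A=\begin{pmatrix}0&\mathrm {div}\\{{\mathrm{grad}}}&0\end{pmatrix}.$$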
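Indeed, with these settings, we get

$$\partial _0M(\partial _0^{-1})+A=\begin{pmatrix}1&\mathrm {div}\\{{\mathrm{grad}}}&\partial _0\end{pmatrix}.$$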
In particular, \(H=L^2(\lambda _{{\mathbb {R}}^d} )^{d+1}\), where \(\lambda _{{\mathbb {R}}^d} \) denotes the Lebesgue measure on \({\mathbb {R}}^d\).
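To see why this block operator fits the standing assumption that A is skew-self-adjoint, one can look at a discrete analogue. The following sketch is our own illustration, not part of the article, and rests on several assumptions: d = 1, a periodic grid (so that no boundary terms arise), and a forward-difference matrix `G` standing in for grad. The discrete divergence is then defined as `-G^T`, mirroring the relation div = -grad^* in \(L^2\). The assembled matrix [[0, div], [grad, 0]] is real and skew-symmetric, which is the finite-dimensional counterpart of \(\mathrm {i}A\) being self-adjoint.

```python
def grad_matrix(n, h):
    """Periodic forward difference: (G u)[j] = (u[j+1] - u[j]) / h."""
    G = [[0.0] * n for _ in range(n)]
    for j in range(n):
        G[j][j] = -1.0 / h
        G[j][(j + 1) % n] = 1.0 / h
    return G


def transpose(M):
    return [list(row) for row in zip(*M)]


def block_operator(n, h):
    """Assemble the 2n x 2n matrix [[0, div], [grad, 0]] with div := -grad^T."""
    G = grad_matrix(n, h)
    D = [[-entry for entry in row] for row in transpose(G)]
    A = [[0.0] * (2 * n) for _ in range(2 * n)]
    for i in range(n):
        for j in range(n):
            A[i][n + j] = D[i][j]  # upper-right block acts on v: div
            A[n + i][j] = G[i][j]  # lower-left block acts on u: grad
    return A


def is_skew_symmetric(A, tol=1e-12):
    size = len(A)
    return all(abs(A[i][j] + A[j][i]) <= tol for i in range(size) for j in range(size))


A = block_operator(n=8, h=0.125)
print(is_skew_symmetric(A))  # True
```

Choosing div as the negative transpose of the discrete gradient is what makes the skew-symmetry exact; any other pairing of difference quotients would break it.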

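In a similar fashion, one can reformulate the stochastic wave equation, which in the classical formulation reads (formally)

$$\partial _0^2u=\Delta u+b(u)+\sigma (u)\dot{W},$$

by using \(v=-{{\mathrm{grad}}}\partial _0^{-1}u\) as

$$\left( \partial _0\begin{pmatrix}1&0\\0&1\end{pmatrix}+\begin{pmatrix}0&\mathrm {div}\\{{\mathrm{grad}}}&0\end{pmatrix}\right) \begin{pmatrix}u\\v\end{pmatrix}=\begin{pmatrix}\partial _0^{-1}b(u)+\partial _0^{-1}\bigl(\sigma (u)\dot{W}\bigr)\\0\end{pmatrix},$$

where, in this case,

$$M(\partial _0^{-1})=\begin{pmatrix}1&0\\0&1\end{pmatrix},\qquad A=\begin{pmatrix}0&\mathrm {div}\\{{\mathrm{grad}}}&0\end{pmatrix}.$$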
In contrast to the stochastic heat equation, this first-order formulation of the stochastic wave equation is already well known and heavily used. The main advantage of the formulation (1.1) is that many more examples of PDEs in mathematical physics can be written in this form, see [25]. The hand-waving arguments handling \(\partial _0^{-1}\) that we have used in the reduction to first-order systems will be made rigorous in Sect. 2.

This paper is structured as follows. In Sect. 2 we give a brief overview of the solution theory for PDEs used in this article; in particular, we explain the construction of the time-derivative operator and the concept of so-called Sobolev chains. We state the results, sketch the respective proofs (referring to [26] for the details) and highlight some further generalizations. In Sect. 3 we show how the solution theory for deterministic PDEs carries over to SPDEs, which we think of as random perturbations of PDEs. We clarify how to interpret the stochastic integral and then present a solution theory for SPDEs with additive and multiplicative noise. In Sect. 4 we use concrete examples to show how this solution theory can be applied to concrete SPDEs, some of which, to the best of our knowledge, have not yet been solved at this level of generality. We conclude Sect. 4 with an SPDE of mixed type, that is, an equation which is hyperbolic, parabolic and elliptic on different space–time regions. This demonstrates the versatility of the approach: the semi-group method, for instance, fails in this example, since there is no semi-group with which to formulate the (non-homogeneous) Cauchy problem in the first place. Furthermore, we provide some connections between this new solution concept and known approaches to SPDEs. More precisely, we relate variational solutions of the heat equation to the solutions obtained here. Further, for the stochastic wave equation, we show that the mild solution derived via the semi-group method coincides with the solution constructed in this exposition. We summarize our findings in Sect. 5.

In this article we denote the identity operator by 1 or by \(1_H\) and indicator functions by \(\chi _K\) for some set K. The Lebesgue measure on a measurable subset \({D} \subseteq {\mathbb {R}}^d\) for some \(d\in {\mathbb {N}}\) will be denoted by \(\lambda _{D}\). All Hilbert spaces in this article are endowed with \({\mathbb {C}}\) as underlying scalar field. \(L^2\)-spaces of (equivalence classes of) scalar-valued square integrable functions over a measure space \((\Omega ,\mathcal {A},\mu )\) are denoted by \(L^2(\mu )\). The corresponding space of Hilbert space H-valued \(L^2\)-functions will be denoted by \(L^2(\mu ;H)\). \({\mathbb {P}}\) will always denote a probability measure.

2 The deterministic solution theory

In this section we review the solution theory for a class of linear partial differential equations developed in [26, Chap. 6] or [25]. This solution theory relies on two main ingredients: (1) the realization of the time-derivative operator as a normal and continuously invertible operator on an appropriate Hilbert space and (2) a positive definiteness constraint on the partial differential operators, realized as operators in space–time.

2.1 Functional analytic ingredients

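Throughout this article, let \(\nu >0\). This is a free parameter which controls the growth of solutions to PDEs for large times. Consider the space

$$H_{\nu ,0}(\mathbb {R}):=L^2\bigl(\exp (-2\nu (\cdot ))\lambda _\mathbb {R}\bigr)$$

of \(L^2\)-functions with respect to the exponentially weighted Lebesgue measure \(\exp (-2\nu (\cdot ))\lambda _\mathbb {R}\). This space is a Hilbert space when endowed with the scalar product

$$\langle f,g\rangle _{\nu ,0}:=\int _{\mathbb {R}}f(t)^*g(t)\exp (-2\nu t)\,\mathrm {d}t\quad (f,g\in H_{\nu ,0}(\mathbb {R})),$$

where \(^*\) denotes complex conjugation. Note that the operator \(\exp (-\nu m)\) given by

$$\exp (-\nu m):H_{\nu ,0}({\mathbb {R}})\rightarrow H_{0,0}({\mathbb {R}}),\quad f\mapsto \bigl(t\mapsto e^{-\nu t}f(t)\bigr), \tag{2.1}$$

that is, multiplication by the function \(t\mapsto e^{-\nu t}\), is unitary from \(H_{\nu ,0}({\mathbb {R}})\) to \(H_{0,0}({\mathbb {R}})(=L^2(\lambda _{\mathbb {R}}))\).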

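Define \(\mathrm{dom}(\partial _{0,\nu }):= \{ f\in H_{\nu ,0}(\mathbb {R}); f'\in H_{\nu ,0}(\mathbb {R})\}\), where \(f'\) is the distributional derivative of \(f\in L^1_{{\mathrm{loc}}}(\lambda _\mathbb {R})\), and

$$\partial _{0,\nu }:\mathrm{dom}(\partial _{0,\nu })\subseteq H_{\nu ,0}(\mathbb {R})\rightarrow H_{\nu ,0}(\mathbb {R}),\quad f\mapsto f'. \tag{2.2}$$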
Then this operator has the following properties, see also [16, Corollary 2.5].

Lemma 2.1

\(\partial _{0,\nu }\) is a continuously invertible linear operator with \(||\partial _{0,\nu }^{-1}||\leqslant \frac{1}{\nu }\) and \(\mathfrak {R}\partial _{0,\nu }=\nu \).

Proof

Recall that \(\exp (-\nu m)\) from (2.1) is unitary. By the product rule we deduce the equality

$$\partial _{0,\nu }=\exp (-\nu m)^{-1}(\partial +\nu )\exp (-\nu m), \tag{2.3}$$
where \(\partial :H^1(\mathbb {R})\subseteq L^2(\lambda _\mathbb {R})\rightarrow L^2(\lambda _\mathbb {R})\) is the (usual) distributional derivative operator realized in \(L^2(\lambda _\mathbb {R})\). Indeed, for a smooth compactly supported function \(\phi \), we observe that

$$\exp (-\nu m)^{-1}(\partial +\nu )\exp (-\nu m)\phi =e^{\nu m}\bigl(e^{-\nu m}\phi '-\nu e^{-\nu m}\phi +\nu e^{-\nu m}\phi \bigr)=\phi '=\partial _{0,\nu }\phi .$$

Since \(\partial \) is skew-self-adjoint in \(L^2(\lambda _\mathbb {R})\) [17, Chap. V, Example 3.14], the spectrum of \(\partial \) lies on the imaginary axis. Hence, as \(\partial \) is normal, the operator \(\partial +\nu \) is continuously invertible with \(\Vert (\partial +\nu )^{-1}\Vert \leqslant 1/\nu \). By (2.3), the operators \(\partial +\nu \) and \(\partial _{0,\nu }\) are unitarily equivalent. Thus, the operator \(\partial _{0,\nu }\) is continuously invertible as well, and the norm estimate follows from (2.3). So does the formula \(\mathfrak {R}\partial _{0,\nu }=\nu \), since \(\mathfrak {I}(\partial _{0,\nu })=\exp (-\nu m)^{-1}((-\mathrm {i})\partial )\exp (-\nu m)\) by the skew-self-adjointness of \(\partial \). \(\square \)
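Combining (2.3) with the Fourier representation of \(\partial \) described later in this section, \(\partial _{0,\nu }^{-1}\) acts in Fourier–Laplace space as multiplication by \(x\mapsto 1/(\mathrm {i}x+\nu )\). The following sketch (our illustration; the sample grid and tolerance are arbitrary choices) checks numerically the three facts behind Lemma 2.1 and the remark in the introduction: the multiplier is bounded by \(1/\nu \), its values lie on the circle of radius \(1/(2\nu )\) centred at \(1/(2\nu )\) (a ball in the right half plane touching 0), and the real part of \(\mathrm {i}x+\nu \) is \(\nu \).

```python
def inverse_symbol(x, nu):
    """Multiplier of the inverse time derivative in Fourier-Laplace space: 1/(i x + nu)."""
    return 1.0 / complex(nu, x)


def check_symbol(nu, xs, tol=1e-12):
    """Check |1/(ix+nu)| <= 1/nu, the circle property and Re(ix+nu) = nu."""
    centre = 1.0 / (2.0 * nu)
    for x in xs:
        z = inverse_symbol(x, nu)
        if abs(z) > 1.0 / nu + tol:                 # norm bound of Lemma 2.1
            return False
        if abs(abs(z - centre) - centre) > tol:     # |z - 1/(2 nu)| = 1/(2 nu)
            return False
        if abs((1.0 / z).real - nu) > tol:          # real part of the derivative's symbol
            return False
    return True


xs = [0.25 * k for k in range(-200, 201)]
print(all(check_symbol(nu, xs) for nu in (0.5, 1.0, 3.0)))  # True
```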

Remark 2.2

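By [16, Corollary 2.5 (d)], we have

$$\bigl(\partial _{0,\nu }^{-1}f\bigr)(t)=\int _{-\infty }^{t}f(s)\,\mathrm {d}s\quad (t\in \mathbb {R})$$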
for all \(f\in H_{\nu ,0}({\mathbb {R}})\).
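As a plausibility check of this integral formula together with the bound \(\Vert \partial _{0,\nu }^{-1}\Vert \leqslant 1/\nu \) from Lemma 2.1, the sketch below uses the (arbitrarily chosen) function \(f=\chi _{[0,\infty )}e^{-(\cdot )}\), for which \((\partial _{0,\nu }^{-1}f)(t)=1-e^{-t}\) for \(t\geqslant 0\) and 0 otherwise, and compares weighted norms computed with the trapezoidal rule. Truncating the time axis to [0, T] is an assumption of the sketch; the neglected tail is of order \(e^{-2\nu T}\).

```python
import math


def weighted_norm(g, nu, T=40.0, n=40000):
    """Trapezoidal approximation of the H_{nu,0}-norm of g, for g vanishing on (-inf, 0).

    The integral over (0, inf) is truncated to (0, T).
    """
    h = T / n
    total = 0.0
    for k in range(n + 1):
        t = k * h
        weight = 0.5 if k in (0, n) else 1.0
        total += weight * abs(g(t)) ** 2 * math.exp(-2.0 * nu * t)
    return math.sqrt(total * h)


def f(t):
    return math.exp(-t) if t >= 0 else 0.0


def inv_dt_f(t):
    # int_{-inf}^t f(s) ds, computed by hand for this particular f
    return 1.0 - math.exp(-t) if t >= 0 else 0.0


for nu in (0.5, 1.0, 4.0):
    lhs = weighted_norm(inv_dt_f, nu)
    rhs = weighted_norm(f, nu) / nu
    print(nu, lhs, rhs, lhs <= rhs)
```

For \(\nu =1\) the exact values are \(\Vert f\Vert _{\nu ,0}=1/2\) and \(\Vert \partial _{0,\nu }^{-1}f\Vert _{\nu ,0}=1/\sqrt{12}\), well below the bound \(1/2\).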

Note that for a Hilbert space H, there exists a canonical extension of \(\partial _{0,\nu }\) to the space \(H_{\nu ,0}(\mathbb {R};H)\) of corresponding H-valued functions by identifying \(H_{\nu ,0}(\mathbb {R};H)\) with \(H_{\nu ,0}(\mathbb {R})\otimes H\) and the extension of \(\partial _{0,\nu }\) by \(\partial _{0,\nu }\otimes 1_H\).

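An important tool in this article is the (Hilbert space valued) Fourier transformation

$$\mathcal {F}:L^2(\lambda _\mathbb {R};H)\rightarrow L^2(\lambda _\mathbb {R};H)$$

defined by the unitary extension of

$$C_c(\mathbb {R};H)\ni \phi \mapsto \Bigl(x\mapsto \frac{1}{\sqrt{2\pi }}\int _{\mathbb {R}}e^{-\mathrm {i}xt}\phi (t)\,\mathrm {d}t\Bigr)$$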
to \(L^2(\lambda _\mathbb {R};H)\). In fact, the norm preservation is the same as saying that Plancherel’s theorem also holds for the Hilbert space valued case. Recall that the inverse Fourier transform satisfies \((\mathcal {F}^{-1}\phi )(x)=(\mathcal {F}^*\phi )(x) = (\mathcal {F}\phi )(-x)\).

Next, recall [1, Volume 1, pp. 161–163] that for the derivative \(\partial :H^1(\mathbb {R})\subseteq L^2(\lambda _\mathbb {R})\rightarrow L^2(\lambda _\mathbb {R})\), the Fourier transformation realizes an explicit spectral representation for \(\partial \) as multiplication operator in the Fourier space:

$$\partial =\mathcal {F}^*\,\mathrm {i}m\,\mathcal {F},$$
where \((mf)(x):= xf(x)\) denotes the multiplication-by-argument-operator in \(L^2(\lambda _\mathbb {R};H)\).

We define the Fourier–Laplace transformation \(\mathcal {L}_\nu := \mathcal {F}\exp (-\nu m)\) with \(\exp (-\nu m)\) given in (2.1). Then, \(\mathcal {L}_\nu \) defines a spectral representation for \(\partial _{0,\nu }\) given in (2.2) (and hence also for \(\partial _{0,\nu }^{-1}\)). Indeed, we get \(\partial _{0,\nu } = \mathcal {L}_\nu ^* (\mathrm {i}m+\nu )\mathcal {L}_\nu \) and

$$\partial _{0,\nu }^{-1}=\mathcal {L}_\nu ^*\frac{1}{\mathrm {i}m+\nu }\mathcal {L}_\nu .$$

The latter formula carries over to (operator-valued) functions of \(\partial _{0,\nu }^{-1}\), that is, we set up a functional calculus for \(\partial _{0,\nu }^{-1}\). We define

$$M(\partial _{0,\nu }^{-1}):=\mathcal {L}_\nu ^*M\Bigl(\frac{1}{\mathrm {i}m+\nu }\Bigr)\mathcal {L}_\nu , \tag{2.4}$$

where \(M:B(r,r)\rightarrow L(H)\) is analytic and bounded with \(r>\frac{1}{2\nu }\), and where, for all \(x\in \mathbb {R}\) and \(\phi \in C_c(\mathbb {R};H)\),

$$\Bigl(M\Bigl(\frac{1}{\mathrm {i}m+\nu }\Bigr)\phi \Bigr)(x):=M\Bigl(\frac{1}{\mathrm {i}x+\nu }\Bigr)\phi (x).$$
Note that the right hand side is the application of the bounded linear operator \(M\left( \frac{1}{\mathrm {i}x+\nu }\right) \in L(H)\) to the Hilbert space element \(\phi (x)\in H\).
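To make the functional calculus concrete, consider a material law that is affine in z, say \(M(z)=M_0+zM_1\) with \(M_0,M_1\in L(H)\); this is the form used below for initial value problems. Since \(M_0\) and \(M_1\) act in H only, they commute with \(\mathcal {L}_\nu \), and the calculus simply replaces z by \(\partial _{0,\nu }^{-1}\):

$$\begin{aligned} M(\partial _{0,\nu }^{-1})&=\mathcal {L}_\nu ^*\Bigl(M_0+\frac{1}{\mathrm {i}m+\nu }M_1\Bigr)\mathcal {L}_\nu =M_0+\partial _{0,\nu }^{-1}M_1,\\ \partial _{0,\nu }M(\partial _{0,\nu }^{-1})&=\partial _{0,\nu }M_0+M_1\quad \text {on }\mathrm{dom}(\partial _{0,\nu }). \end{aligned}$$

If, moreover, \(M_0\) is self-adjoint, then for every \(x\in \mathbb {R}\) the pointwise multiplier satisfies \(\mathfrak {R}\bigl((\mathrm {i}x+\nu )M_0+M_1\bigr)=\nu M_0+\mathfrak {R}M_1\), because \(\mathrm {i}xM_0\) is skew-self-adjoint. This is why a condition of the form \(\nu M_0+\mathfrak {R}M_1\geqslant c\) is the natural positivity requirement for such material laws.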

In principle, it would suffice for the (operator-valued) function M to be defined on \(\partial B(r',r'){\setminus }\{0\}\) with \(r':= 1/(2\nu )\) only; definition (2.4) would still make sense. However, in the solution theory developed in the next section, we want to establish causality of the solution operator, that is, the solution vanishes up to time t if the data do (see below for the details). But vanishing up to time 0 is intimately related to analyticity:

We denote the open complex right half plane by \(\mathbb {C}_{>0}=\{ \mathrm {i}t+\nu ; t\in {\mathbb {R}}, \nu >0\}\).

Theorem 2.3

(Paley–Wiener, cf. [35, Chap. 19] and [25, Corollary 2.7]). Let H be a Hilbert space, \(u\in L^2(\lambda _{\mathbb {R}};H)\). Then the following properties are equivalent:

1. \(\mathbb {C}_{>0} \ni \mathrm {i}t+\nu \mapsto (\mathcal {L}_{\nu } u)(t) \in H\) belongs to the Hardy–Lebesgue space

$$\begin{aligned} \mathcal {H}^2(H):= & {} \{ f:\mathbb {C}_{>0}\rightarrow H; f~\text {analytic,}\\&f(\mathrm {i}\cdot +\nu )\in L^2(\lambda _{\mathbb {R}};H)\, (\nu>0), \sup _{\nu >0}\Vert f(\mathrm {i}\cdot +\nu )\Vert _{L^2}<\infty \} \end{aligned}$$

2. \(u=0\) on \((-\infty ,0)\).

We now introduce Sobolev chains, which will be needed in the later investigation, see [26, Chap. 2] or [24]. They are the natural generalization of Gelfand triples to an infinite chain of rigged Hilbert spaces. We also refer to similar concepts developed in [10, 18] or, more recently, [9].

Definition 2.4

Let \(C:\mathrm{dom}(C)\subseteq H\rightarrow H\) be densely defined and closed. If C is continuously invertible, then we define \(H_k(C)\) to be the completion of \((\mathrm{dom}(C^{|k|}),||C^{k}\cdot ||_{H})\) for all \(k\in \mathbb {Z}\). The sequence \((H_k(C))_k\) is called Sobolev chain associated with C.

Obviously, \(H_k(C)\) is a Hilbert space for each \(k\in \mathbb {Z}\). Moreover, C extends to a unitary operator from \(H_k(C)\) to \(H_{k-1}(C)\); we use these extensions throughout and denote them by the same symbol. It can be shown that \(H_{k}(C^*)^*\) can be identified with \(H_{-k}(C)\) via the dual pairing

for all \(k\in \mathbb {Z}\), where we identify H with its dual space. Moreover, \(H_k(C)\hookrightarrow H_m(C)\) whenever \(k\geqslant m\); hence the name “chain”.

Example 2.5

(a) A particular example of such an operator C is the time derivative \(\partial _{0,\nu }\). We denote \(H_{\nu ,k}(\mathbb {R}):= H_k(\partial _{0,\nu })\) for all \(k\in \mathbb {Z}\), and correspondingly in the Hilbert space valued case.

(b) A second important example, to be used later on, is the case of a skew-self-adjoint operator A in some Hilbert space H. We build the Sobolev chain associated with \(C=A+1\), which is continuously invertible since the spectrum of A lies on the imaginary axis.

2.2 The solution theory

The solution theory we apply covers a large class of partial differential equations in mathematical physics. We summarize it in this section and, for convenience, also outline the proofs. For the complete arguments, the reader is referred to [26] and [28, 42]. The following observation, a variant of coercivity, provides the functional analytic foundation.

Lemma 2.6

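Let G be a Hilbert space, \(B:\mathrm{dom}(B)\subseteq G\rightarrow G\) a densely defined, closed, linear operator. Assume there exists \(c>0\) with the property that

$$\mathfrak {R}\langle B\phi ,\phi \rangle \geqslant c\langle \phi ,\phi \rangle \tag{2.5}$$

and

$$\mathfrak {R}\langle B^*\psi ,\psi \rangle \geqslant c\langle \psi ,\psi \rangle \tag{2.6}$$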
for all \(\phi \in \mathrm{dom}(B)\) and \(\psi \in \mathrm{dom}(B^*)\). Then \(B^{-1}\) exists as an element of L(G), the space of bounded linear operators on G and \(\Vert B^{-1}\Vert \leqslant 1/c\).

Proof

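Using the Cauchy–Schwarz inequality, we can read off from the first inequality (2.5) that B is one-to-one. More precisely, we have for all \(\phi \in \mathrm{dom}(B)\)

$$c\Vert \phi \Vert ^2\leqslant \mathfrak {R}\langle B\phi ,\phi \rangle \leqslant \Vert B\phi \Vert \Vert \phi \Vert ,\quad \text {and hence}\quad c\Vert \phi \Vert \leqslant \Vert B\phi \Vert . \tag{2.7}$$

Thus, \(B^{-1}\) is well-defined on \(\mathrm{ran}(B)\), and \(\mathrm{ran}(B)\) is a closed subset of G. Indeed, take \((\psi _n)_n\) in \(\mathrm{ran}(B)\) converging to some \(\psi \in G\). We find \((\phi _n)_n\) in \(\mathrm{dom}(B)\) with \(B\phi _n=\psi _n\). Then, again relying on the inequality involving B, we get

$$c\Vert \phi _n-\phi _m\Vert \leqslant \Vert B\phi _n-B\phi _m\Vert =\Vert \psi _n-\psi _m\Vert \quad (n,m\in \mathbb {N}),$$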
which shows that \((\phi _n)_n\) is a Cauchy-sequence in G, and, thus, convergent to some \(\phi \in G\). The closedness of B gives that \(\phi \in \mathrm{dom}(B)\) and \(B\phi =\psi \in \mathrm{ran}(B)\) as desired.

Next, again by the Cauchy–Schwarz inequality, we deduce that \(B^*\) is one-to-one as well, or, expressed differently, \(\mathrm{ker}(B^*)=\{0\}\). Thus, by the projection theorem, \(G=\mathrm{ker}(B^*)\oplus \overline{\mathrm{ran}(B)}=\{0\}\oplus \mathrm{ran}(B)\), yielding that B is onto. The inequality for the norm of \(B^{-1}\) can be read off from (2.7) by setting \(\phi := B^{-1}g\) for any \(g\in \mathrm{ran}(B)=G\):

$$\Vert B^{-1}g\Vert \leqslant \frac{1}{c}\Vert g\Vert .$$
This finishes the proof. \(\square \)
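Lemma 2.6 can be observed already in \(G=\mathbb {C}^2\). The sketch below is a hypothetical example of ours: the matrix B is chosen so that its real part \((B+B^*)/2=\mathrm {diag}(2,1)\), hence (2.5) and (2.6) hold with c = 1, and we check on a grid of unit vectors that \(\Vert B^{-1}x\Vert \leqslant \Vert x\Vert /c\). The scalar product is taken conjugate-linear in the first argument, and the grid resolution is an arbitrary choice.

```python
import math


def apply(B, x):
    return [sum(B[i][j] * x[j] for j in range(len(x))) for i in range(len(B))]


def inner(x, y):
    """<x, y> = sum conj(x_k) y_k (conjugate-linear in the first argument)."""
    return sum(a.conjugate() * b for a, b in zip(x, y))


def norm(x):
    return math.sqrt(inner(x, x).real)


def inverse_2x2(B):
    (a, b), (c, d) = B
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


# Hypothetical example: Re B = (B + B^*)/2 = diag(2, 1), so (2.5) and (2.6) hold with c = 1.
B = [[2 + 1j, 3 + 0j], [-3 + 0j, 1 + 0.5j]]
c = 1.0
B_inv = inverse_2x2(B)

worst_ratio = 0.0
for p in range(60):
    for q in range(60):
        theta, phi = math.pi * p / 59, 2 * math.pi * q / 60
        x = [complex(math.cos(theta)), math.sin(theta) * complex(math.cos(phi), math.sin(phi))]
        assert inner(apply(B, x), x).real >= c * norm(x) ** 2 - 1e-12   # coercivity (2.5)
        worst_ratio = max(worst_ratio, norm(apply(B_inv, x)) / norm(x))
print(worst_ratio <= 1 / c)  # True
```

The skew part of B (the off-diagonal entries 3 and -3 and the imaginary diagonal parts) plays the role of A in Theorem 2.8: it does not affect the real part and hence does not spoil the bound.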

Remark 2.7

Given a densely defined closed linear operator \(A_0:\mathrm{dom}(A_0)\subseteq H\rightarrow H\), there exists a closed, densely defined (canonical) extension A to \(H_{\nu ,0}(\mathbb {R};H)\) such that \((Au)(t):= A_0u(t)\) for \(t\in \mathbb {R}\) and \(u\in C_c (\mathbb {R};\mathrm{dom}(A_0))\). Indeed, the construction can be done as for the time derivative by setting \(A:= 1_{H_{\nu ,0}(\mathbb {R})}\otimes A_0\). If \(A_0\) is continuously invertible, then so is A, and the adjoint of A is the extension of the adjoint of \(A_0\). Due to these similarities, there is little use in distinguishing \(A_0\) notationally from its extension A; hence, we use the same notation for both throughout.

The next result is the main existence and uniqueness theorem in the deterministic setting.

Theorem 2.8

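[26, Theorem 6.2.5],[25, Solution Theory]. Let H be a Hilbert space, \(A:\mathrm{dom}(A)\subseteq H\rightarrow H\) a skew-self-adjoint linear operator. For some \(r>0\), let \(M:B(r,r)\rightarrow L(H)\) be a bounded and analytic mapping. Assume that there exists \(c>0\) such that

$$\mathfrak {R}z^{-1}M(z)\geqslant c\quad (z\in B(r,r)). \tag{2.8}$$

Then for all \(\nu > 1/(2r)\) the operator

$$\partial _{0,\nu }M(\partial _{0,\nu }^{-1})+A:\mathrm{dom}(\partial _{0,\nu })\cap \mathrm{dom}(A)\subseteq H_{\nu ,0}(\mathbb {R};H)\rightarrow H_{\nu ,0}(\mathbb {R};H)$$

is closable with continuously invertible closure. Denoting by \(S_\nu \) the inverse of the closure,

$$S_\nu :=\overline{\bigl(\partial _{0,\nu }M(\partial _{0,\nu }^{-1})+A\bigr)}^{-1}\in L(H_{\nu ,0}(\mathbb {R};H)),$$

we get that \(S_\nu \) is causal, that is, for \(a\in \mathbb {R}\) and \(f,g\in H_{\nu ,0}(\mathbb {R};H)\) the implication

$$\chi _{(-\infty ,a]}f=\chi _{(-\infty ,a]}g\ \Longrightarrow \ \chi _{(-\infty ,a]}S_\nu f=\chi _{(-\infty ,a]}S_\nu g \tag{2.9}$$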
holds true. Moreover, \(\Vert S_\nu \Vert \leqslant c^{-1}\).

Proof

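First, we show the existence and uniqueness of solutions to the equation

$$\bigl(\partial _{0,\nu }M(\partial _{0,\nu }^{-1})+A\bigr)u=f$$

for given \(f\in H_{\nu ,0}({\mathbb {R}};H)\), which boils down to (closability and) continuous invertibility of the (closure of the) partial differential operator \(B_0 := \partial _{0,\nu }M(\partial _{0,\nu }^{-1})+A\).

We first observe that \(B_0\) with \(\mathrm{dom}(B_0)=\mathrm{dom}(A)\cap \mathrm{dom}(\partial _{0,\nu })\) is closable. Indeed, it is easy to check that \(\partial _{0,\nu }^*M(\partial _{0,\nu }^{-1})^*-A\) with dense domain \(\mathrm{dom}(B_0)\) is a formal adjoint. Hence, \(B_0\) is closable. For the proof of the continuous invertibility of \(B:= \overline{B_0}\), we apply Lemma 2.6 with \(G:= H_{\nu ,0}(\mathbb {R};H)\). To this end, take \(\phi \in \mathrm{dom}(B_0)\) and compute

$$\begin{aligned} \mathfrak {R}\langle B_0\phi ,\phi \rangle _{H_{\nu ,0}(\mathbb {R};H)}&=\mathfrak {R}\langle \partial _{0,\nu }M(\partial _{0,\nu }^{-1})\phi ,\phi \rangle _{H_{\nu ,0}(\mathbb {R};H)}\\&=\mathfrak {R}\Bigl\langle (\mathrm {i}m+\nu )M\Bigl(\frac{1}{\mathrm {i}m+\nu }\Bigr)\mathcal {L}_\nu \phi ,\mathcal {L}_\nu \phi \Bigr\rangle _{L^2(\lambda _\mathbb {R};H)}\\&\geqslant c\langle \mathcal {L}_\nu \phi ,\mathcal {L}_\nu \phi \rangle _{L^2(\lambda _\mathbb {R};H)}=c\langle \phi ,\phi \rangle _{H_{\nu ,0}(\mathbb {R};H)}, \end{aligned}$$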
where we have used that A is skew-self-adjoint (hence, \(\mathfrak {R}\langle A\phi ,\phi \rangle =-\mathfrak {R}\langle \phi ,A\phi \rangle =-\mathfrak {R}\langle A\phi ,\phi \rangle \)), (2.4), (2.8) as well as Plancherel’s identity, that is, the unitarity of \(\mathcal {L}_\nu \). This inequality carries over to all \(\phi \in \mathrm{dom}(B)\).

In order to use Lemma 2.6, we need to compute the adjoint of B. For this, note that \((1+\epsilon \partial _{0,\nu }^*)^{-1}\) converges strongly to the identity as \(\epsilon \rightarrow 0\). So, fix \(f\in \mathrm{dom}(B^*)\) and \(\epsilon >0\). Observe that \((1+\epsilon \partial _{0,\nu })^{-1}\) commutes with \(B_0\) and leaves the space \(\mathrm{dom}(B_0)\) invariant. Then we compute for \(\phi \in \mathrm{dom}(B_0)\)

Hence, as \(\mathrm{dom}(B_0)\) is a core for A, we infer that \((1+\epsilon \partial _{0,\nu }^*)^{-1}f\in \mathrm{dom}(A^*)\) and that

or, equivalently,

Note that also \(\mathrm{dom}(B_0) = \mathrm{dom}(\partial _{0,\nu }^*)\cap \mathrm{dom}(A)\), since \(\mathrm{dom}(\partial _{0,\nu })=\mathrm{dom}(\partial _{0,\nu }^*)\). Letting \(\epsilon \rightarrow 0\) in the last equality, we infer that

But as \(\mathfrak {R}\langle (\partial _{0,\nu }^*M(\partial _{0,\nu }^{-1})^*-A)\psi ,\psi \rangle \geqslant c\langle \psi ,\psi \rangle \) for all \(\psi \in \mathrm{dom}(B_0)\), we conclude that for all \(\psi \in \mathrm{dom}(B^*)\)

$$\mathfrak {R}\langle B^*\psi ,\psi \rangle \geqslant c\langle \psi ,\psi \rangle .$$
Hence, Lemma 2.6 implies that B is continuously invertible, and we denote \(S_\nu := B^{-1}\). The norm estimate for \(\Vert S_{\nu }\Vert \) follows from Lemma 2.6.

The next step is to show causality; here we only sketch the arguments and refer to [25, Sect. 2.2, Theorem 2.10] for the details. First of all, note that B commutes with the time translation \(\tau _hf:= f(\cdot +h)\), since \(\tau _h\) is itself a function of \(\partial _{0,\nu }\) and A acts in space only. In fact, one has \(\tau _h =\mathcal {L}_\nu ^* e^{(\mathrm {i}m +\nu )h}\mathcal {L}_\nu \). Hence, causality only needs to be checked for \(a=0\) in (2.9). Moreover, by the linearity of \(S_\nu \), it suffices to verify the implication in (2.9) for \(g=0\). So, take \(f\in H_{\nu ,0}(\mathbb {R};H)\) vanishing on \((-\infty ,0]\). We have to show that \(S_\nu f\) also vanishes on \((-\infty ,0]\). Observe that \(e^{-\nu m}f\in L^2(\lambda _{[0,\infty )};H)\). Hence, by the Paley–Wiener theorem (Theorem 2.3), \(\mathcal {L}_\nu f =\mathcal {F}e^{-\nu m} f\) belongs to the Hardy–Lebesgue space of analytic functions on the right half plane that are uniformly bounded in \(L^2(\lambda _\mathbb {R};H)\) along all lines parallel to the imaginary axis.

Next, \(\overline{((\mathrm {i}m +\nu )M(\frac{1}{\mathrm {i}m +\nu })+A)}^{-1}\), as a multiplication operator on the Hardy–Lebesgue space, leaves the Hardy–Lebesgue space invariant, by the boundedness of the inverse and the analyticity of both the resolvent map and the mapping M. Thus, \(\overline{((\mathrm {i}m +\nu )M(\frac{1}{\mathrm {i}m +\nu })+A)}^{-1}\mathcal {L}_\nu f\) belongs to the Hardy–Lebesgue space. Consequently, \(\mathcal {F}^*\overline{((\mathrm {i}m +\nu )M(\frac{1}{\mathrm {i}m +\nu })+A)}^{-1}\mathcal {L}_\nu f\) is supported on \([0,\infty )\), by the Paley–Wiener theorem. Hence,

$$S_\nu f=\mathcal {L}_\nu ^*\overline{\Bigl((\mathrm {i}m +\nu )M\Bigl(\frac{1}{\mathrm {i}m +\nu }\Bigr)+A\Bigr)}^{-1}\mathcal {L}_\nu f=\exp (-\nu m)^{-1}\mathcal {F}^*\overline{\Bigl((\mathrm {i}m +\nu )M\Bigl(\frac{1}{\mathrm {i}m +\nu }\Bigr)+A\Bigr)}^{-1}\mathcal {L}_\nu f$$
is also supported on \([0,\infty )\) only, yielding the assertion. \(\square \)
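Condition (2.8) can be verified by hand for the two material laws of the introduction. Assuming the first-order reformulations there, the heat equation corresponds to \(M(z)=\mathrm {diag}(z,1)\) (acting on the scalar component u and the vector component v) and the wave equation to \(M(z)=1\), so that \(z^{-1}M(z)=\mathrm {diag}(1,z^{-1})\) and \(z^{-1}M(z)=z^{-1}\), respectively. Since \(|z-r|<r\) is equivalent to \(\mathfrak {R}z^{-1}>1/(2r)\), (2.8) holds with \(c=\min \{1,1/(2r)\}\) and \(c=1/(2r)\). The sketch below merely samples this inequality on a polar grid inside B(r, r); the grid and the value r = 2 are our choices.

```python
import cmath


def ball_samples(r, n_radii=50, n_angles=72):
    """Points z = r + rho * r * e^{i theta} with 0 < rho < 1, i.e. inside B(r, r)."""
    return [r + (k / n_radii) * 0.999 * r * cmath.exp(1j * 2 * cmath.pi * j / n_angles)
            for k in range(1, n_radii + 1) for j in range(n_angles)]


def lowest_real_part_heat(z):
    """Smallest eigenvalue of Re(z^{-1} M(z)) for M(z) = diag(z, 1): min(1, Re(1/z))."""
    return min(1.0, (1 / z).real)


def lowest_real_part_wave(z):
    """Re(z^{-1} M(z)) for M(z) = 1."""
    return (1 / z).real


r = 2.0
samples = ball_samples(r)
c_heat, c_wave = min(1.0, 1 / (2 * r)), 1 / (2 * r)
print(min(lowest_real_part_heat(z) for z in samples) >= c_heat)  # True
print(min(lowest_real_part_wave(z) for z in samples) >= c_wave)  # True
```

The constant for the heat equation degenerates like \(1/(2r)\) as r grows, which corresponds to choosing \(\nu \) close to 0 in Theorem 2.8.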

The operator \(S_\nu \) defined in the previous theorem is also called the solution operator of the PDE. Causality is an action–reaction principle: the solution can become non-zero only once there is some non-zero action on the right-hand side of the equation.
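The action–reaction principle can be observed in the simplest possible setting. The following sketch is a hypothetical scalar example, not taken from the article: H = ℂ, A = i·a with real a (multiplication by an imaginary number is skew-self-adjoint on ℂ) and \(M(z)=1+zm_1\), so that the equation reads \((\partial _{0,\nu }+m_1+\mathrm {i}a)u=f\) with solution \(u(t)=\int _{-\infty }^te^{-(m_1+\mathrm {i}a)(t-s)}f(s)\,\mathrm {d}s\). Two right-hand sides that coincide on \((-\infty ,1]\) produce discrete solutions that coincide there as well. The time stepping (exact decay of the homogeneous part plus trapezoidal quadrature of the source) is our choice.

```python
import cmath
import math


def solve_scalar(f, k, t0, t1, n):
    """Approximate u(t) = int_{t0}^t exp(-k (t - s)) f(s) ds on a uniform grid.

    This solves u' + k u = f with u = 0 before t0 (f is assumed to vanish there).
    """
    h = (t1 - t0) / n
    ts = [t0 + j * h for j in range(n + 1)]
    u = [0j] * (n + 1)
    decay = cmath.exp(-k * h)
    for j in range(n):
        # exact decay over one step + trapezoidal rule for the source term
        u[j + 1] = decay * u[j] + 0.5 * h * (decay * f(ts[j]) + f(ts[j + 1]))
    return ts, u


m1, a = 1.0, 3.0
k = m1 + 1j * a                                   # k = M_1 + A in the scalar model


def f(s):
    return math.sin(s) if s >= 0 else 0.0


def g(s):
    return f(s) + (5.0 if s > 1 else 0.0)         # differs from f only after time 1


ts, u_f = solve_scalar(f, k, -5.0, 5.0, 2000)
_, u_g = solve_scalar(g, k, -5.0, 5.0, 2000)
same_up_to_1 = all(x == y for t, x, y in zip(ts, u_f, u_g) if t <= 1.0)
zero_before_0 = all(x == 0 for t, x in zip(ts, u_f) if t <= 0.0)
print(same_up_to_1, zero_before_0, abs(u_f[-1] - u_g[-1]) > 0.1)  # True True True
```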

Remark 2.9

(a) As pointed out in [26, p. 494], we can work freely with \(\partial _{0,\nu }\) in the PDE, so that instead of solving \((\partial _{0,\nu }M(\partial _{0,\nu }^{-1})+A)u=f\), we could also solve

$$\bigl(\partial _{0,\nu }M(\partial _{0,\nu }^{-1})+A\bigr)v=\partial _{0,\nu }^{-1}f$$
and obtain the original solution \(u=\partial _{0,\nu } v\). This will be advantageous when dealing with irregular right-hand sides, especially stochastic ones. In particular, \(\partial _{0,\nu }^{-1}\) (and scalar functions thereof) commute with the solution operator \(S_\nu \) given in Theorem 2.8. Thus (see also [26, Theorem 6.2.5]), the solution theory obtained in Theorem 2.8 carries over to \(H_{\nu ,k}({\mathbb {R}};H)\), that is, the solution operator \(S_\nu \) admits a continuous linear extension to all \(H_{\nu ,k}\)-spaces:

$$S_\nu :H_{\nu ,k}(\mathbb {R};H)\rightarrow H_{\nu ,k}(\mathbb {R};H)\quad (k\in \mathbb {Z}).$$
(b) It can be shown that for all \(\varepsilon >0\) and \(u\in \mathrm{dom}(\overline{\partial _{0,\nu } M(\partial _{0,\nu }^{-1})+A})\) we have that \((1+\varepsilon \partial _{0,\nu })^{-1}u\in \mathrm{dom}(\partial _{0,\nu })\cap \mathrm{dom}(A)\), see [26, Theorem 6.2.5] or [42, Lemma 5.2].

(c) With the notion of Sobolev chains as introduced in the previous section, we may neglect the closure bar in

Indeed, the latter equation holds in \(H_{\nu ,0}({\mathbb {R}};H)\), but, since

we obtain equality (2.11) also in the space \(H_{\nu ,-1}({\mathbb {R}};H)\cap H_{\nu ,0}({\mathbb {R}};H_{-1}(A+1))\). Moreover, for \(u\in H_{\nu ,0}({\mathbb {R}};H)\), we have \(\partial _{0,\nu }M(\partial _{0,\nu }^{-1})u\in H_{\nu ,-1}({\mathbb {R}};H)\) and \(Au\in H_{\nu ,0}({\mathbb {R}};H_{-1}(A+1))\). Thus,

In fact, in the proof of Theorem 2.8 we have shown that \(\mathrm{dom}(\partial _{0,\nu })\cap \mathrm{dom}(A)\) is dense in

with respect to the graph norm of \(\overline{(\partial _{0,\nu }M(\partial _{0,\nu }^{-1})+A)}\). But, a sequence \((u_n)_n\) converging to u in \(\mathrm{dom}(\overline{(\partial _{0,\nu }M(\partial _{0,\nu }^{-1})+A)})\) converges to u particularly in \(H_{\nu ,0}({\mathbb {R}};H)\). So, since \(\partial _{0,\nu }:H_{\nu ,0}({\mathbb {R}};H)\rightarrow H_{\nu ,-1}({\mathbb {R}};H)\) and \(A:H_{\nu ,0}({\mathbb {R}};H)\rightarrow H_{\nu ,0}({\mathbb {R}};H_{-1}(A+1))\) are continuous, we obtain

with limits computed in \(H_{\nu ,-1}({\mathbb {R}};H)\cap H_{\nu ,0}({\mathbb {R}};H_{-1}(A+1))\).

We shall now sketch how to deal with initial value problems. Until now we have only considered equations like

$$\bigl(\partial _{0,\nu }M(\partial _{0,\nu }^{-1})+A\bigr)u=f,$$

that is, equations with a source term on the right-hand side and some boundary conditions encoded in the domain of the (partial differential) operator A, but without initial conditions. We now show how to incorporate initial conditions into the right-hand side of the PDE. For a simple case, we rephrase the arguments in [26, Sect. 6.2.5, Theorem 6.2.9].

Take \(u_0\in \mathrm{dom}(A)\), and \(f\in H_{\nu ,0}(\mathbb {R};H)\) with f vanishing on \((-\infty ,0]\). Then, our formulation for initial value problems is as follows. For the sake of presentation, we let \(M(\partial _{0,\nu }^{-1})=M_0+\partial _{0,\nu }^{-1}M_1\) for some self-adjoint, non-negative \(M_0\in L(H)\) and some \(M_1\in L(H)\) satisfying \(\nu M_0+ \mathfrak {R}M_1\geqslant c\). An example is \(M_0=1_H\) and \(M_1=0\). Consider

$$(\partial _{0,\nu }M_0+M_1+A)v=f-\chi _{[0,\infty )}M_1u_0-\chi _{[0,\infty )}Au_0.$$
Note that due to the exponential weight, we have \(\chi _{[0,\infty )}M_1 u_0 + \chi _{[0,\infty )}Au_0\in H_{\nu ,0}(\mathbb {R};H)\). The solution theory in Theorem 2.8 gives us a unique solution \(v\in H_{\nu ,0}(\mathbb {R};H)\). Moreover, v is supported on \([0,\infty )\), due to causality.

Lemma 2.10

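With the notation above, \(u:= v+\chi _{[0,\infty )}u_0\) solves the initial value problem

$$\begin{aligned} (\partial _{0,\nu }M_0+M_1+A)u&=f\quad \text {on }(0,\infty ),\\ (M_0u)(0+)&=M_0u_0. \end{aligned}$$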
Proof

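Note that on \((0,\infty )\) we get

$$\begin{aligned} (\partial _{0,\nu }M_0+M_1+A)u&=(\partial _{0,\nu }M_0+M_1+A)v+\partial _{0,\nu }M_0\chi _{[0,\infty )}u_0+\chi _{[0,\infty )}M_1u_0+\chi _{[0,\infty )}Au_0\\&=f+\partial _{0,\nu }M_0\chi _{[0,\infty )}u_0, \end{aligned}$$

where these equalities hold in \(H_{\nu ,-1}(\mathbb {R};H)\cap H_{\nu ,0}(\mathbb {R};H_{-1}(A+1))\). Hence, as \(\partial _{0,\nu }M_0\chi _{[0,\infty )}u_0\) vanishes on \((0,\infty )\), we arrive at

$$(\partial _{0,\nu }M_0+M_1+A)u=f\quad \text {on }(0,\infty ).$$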
It remains to check whether the initial datum is attained. From the equation

$$\partial _{0,\nu }M_0v=f-\chi _{[0,\infty )}M_1u_0-\chi _{[0,\infty )}Au_0-M_1v-Av,$$

we see that \(\partial _{0,\nu }M_0v\in H_{\nu ,0}( \mathbb {R};H_{-1}(A+1))\). Thus, \(M_0v\in H_{\nu ,1}( \mathbb {R};H_{-1}(A+1))\). By the Sobolev embedding theorem (see e.g. [16, Lemma 5.2]), we infer \(M_0v \in C(\mathbb {R};H_{-1}(A+1))\). In particular, we get

$$(M_0v)(0+)=(M_0v)(0-)$$

with limits in \(H_{-1}(A+1)\). By causality, \(M_0v(0-)=0\) and, thus, we arrive at

$$(M_0v)(0+)=0,$$
which gives \((M_0u)(0+)=M_0u_0\), that is, the initial value is attained in \(H_{-1}(A+1)\). \(\square \)
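Lemma 2.10 can likewise be tested in a hypothetical scalar model (our choice, not the article's): H = ℂ, \(M_0=1\), \(M_1=m_1>0\), A = i·a with real a, f = 0 and \(u_0\in \mathbb {C}\). Then the auxiliary problem for v reads \((\partial _{0,\nu }+k)v=-\chi _{[0,\infty )}ku_0\) with \(k=m_1+\mathrm {i}a\), and the lemma predicts that \(u=v+\chi _{[0,\infty )}u_0\) coincides on \((0,\infty )\) with the classical solution \(u_0e^{-kt}\) of \(u'+ku=0\), \(u(0)=u_0\). The sketch computes v with an exponential-trapezoidal time stepping and compares.

```python
import cmath


def solve_scalar(source, k, t1, n):
    """Approximate v(t) = int_0^t exp(-k (t - s)) source(s) ds on [0, t1]
    (v vanishes on (-inf, 0] by causality, since the source does)."""
    h = t1 / n
    ts = [j * h for j in range(n + 1)]
    v = [0j] * (n + 1)
    decay = cmath.exp(-k * h)
    for j in range(n):
        v[j + 1] = decay * v[j] + 0.5 * h * (decay * source(ts[j]) + source(ts[j + 1]))
    return ts, v


m1, a = 1.0, 3.0
k = m1 + 1j * a            # M_1 + A acting on the scalar u_0
u0 = 2.0 - 1.0j


def source(s):
    # f - chi_[0,inf) M_1 u0 - chi_[0,inf) A u0 with f = 0
    return -k * u0 if s >= 0 else 0j


ts, v = solve_scalar(source, k, t1=5.0, n=5000)
u = [vj + u0 for vj in v]                          # u = v + chi_[0,inf) u0 on [0, t1]
max_err = max(abs(uj - u0 * cmath.exp(-k * t)) for t, uj in zip(ts, u))
print(u[0] == u0, max_err < 1e-4)                  # True True
```

The initial value enters only through the source term; v itself starts at 0, exactly as causality demands.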

The results above enable us to solve linear partial differential equations with initial conditions just by looking at non-homogeneous problems with \(H_{\nu ,0}\) right-hand sides. A few comments are in order.

Remark 2.11

-

(a)

The solution operator in Theorem 2.8 is independent of \(\nu \), in the following sense: let \(\nu ,\mu \) be sufficiently large and denote the corresponding solution operators by \(S_\nu \) and \(S_\mu \) respectively. Then for \(f\in H_{\nu ,0}(\mathbb {R};H)\cap H_{\mu ,0}(\mathbb {R};H)\) we have \(S_\nu f= S_\mu f\), see e.g. [26, Theorem 6.1.4] or [38, Lemma 3.6] for a detailed proof. Therefore we shall occasionally drop the index \(\nu \) in the time-derivative or the solution operator if there is no risk of confusion.

-

(b)

For the sake of presentation, we state the above treatment of deterministic PDEs in a rather restricted form. In fact, the solution theory of Theorem 2.8 can be generalized to maximal monotone relations A, see e.g. [37], or to non-autonomous coefficients, see [28, 42]. For our purpose of investigating random right-hand sides, however, Theorem 2.8 suffices.

Now we present the last ingredient before turning to stochastic PDEs. We shall present a perturbation result which will help us to deduce well-posedness of SPDEs, where we interpret the stochastic part as a nonlinear perturbation on the right-hand side of the PDE. In order to do so, we give a definition of so-called evolutionary mappings, which is a slight variant of the notions presented in [40, Definition 2.1] and [16, Definition 4.7].

Definition 2.12

Let H, G be Hilbert spaces and \(\nu _0>0\). Let

where \(\mathrm{dom}(F)\) is supposed to be a vector space. We call F evolutionary \((at~\nu _0)\) if, for all \(\nu \geqslant \nu _0\), F satisfies the following properties:

(i) F is Lipschitz-continuous as a mapping

$$\begin{aligned} F_{0,\nu } :\mathrm{dom}(F)\subseteq H_{\nu ,0}(\mathbb {R};H)\rightarrow H_{\nu ,0}(\mathbb {R};G),\,\phi \mapsto F(\phi ), \end{aligned}$$

(ii) \(\Vert F\Vert _{\mathrm{ev},\mathrm{Lip}}:= \limsup _{\nu \rightarrow \infty } \Vert F_\nu \Vert _{\mathrm{Lip}}<\infty \), with \(F_\nu := \overline{F_{0,\nu }}\).

The non-negative number \(\Vert F\Vert _{\mathrm{ev},{\mathrm{Lip}}}\) is called the eventual Lipschitz constant of F.

If, in addition, \(F_\nu \) leaves \(\mathrm{dom}(F_\nu )=\overline{\mathrm{dom}(F)}^{H_{\nu ,0}}\) invariant, then we call F invariant evolutionary \((at~\nu _0)\).

Similar to the solution operator \(S_\nu \) to certain partial differential equations (see Remark 2.11), evolutionary mappings are independent of \(\nu \) in the following sense:

Lemma 2.13

Let F be evolutionary at \(\nu _0>0\). Assume that multiplication by the cut-off function \(\chi _{(-\infty ,a]}\) leaves the space \(\mathrm{dom}(F)\) invariant for all \(a\in \mathbb {R}\), that is, for all \(a\in {\mathbb {R}}\), \(\phi \in \mathrm{dom}(F)\)

Then \(F_\nu |_{\mathrm{dom}(F_\nu )\cap \mathrm{dom}(F_\mu )}=F_\mu |_{\mathrm{dom}(F_\nu )\cap \mathrm{dom}(F_\mu )}\) for all \(\nu \geqslant \mu \geqslant \nu _0\).

Proof

Take \(u\in \mathrm{dom}(F_\nu )\cap \mathrm{dom}(F_\mu )\) and assume, as a first step, that \(\chi _{(-\infty ,a]}u=0\) for some \(a\in \mathbb {R}\). By definition, there exists a sequence \((\phi _n)_n\) in \(\mathrm{dom}(F)\) such that \(\phi _n\rightarrow u\) in \(H_{\mu ,0}(\mathbb {R};H)\). Since \(\mathrm{dom}(F)\) is a vector space that is left invariant under multiplication by the cut-off function, we also have \(\psi _n:=\chi _{(a,\infty )}\phi _n=\phi _n-\chi _{(-\infty ,a]}\phi _n\in \mathrm{dom}(F)\) as well as \(\psi _n\rightarrow u\) in \(H_{\mu ,0}(\mathbb {R};H)\). As the functions \(\psi _n-u\) vanish on \((-\infty ,a]\) and \(e^{-2\nu t}\leqslant e^{-2(\nu -\mu )a}e^{-2\mu t}\) for \(t>a\) and \(\nu \geqslant \mu \), we infer that \(\psi _n\rightarrow u\) in \(H_{\nu ,0}(\mathbb {R};H)\) as well. Hence, as \(H_{\nu ,0}(\mathbb {R};G)\) and \(H_{\mu ,0}(\mathbb {R};G)\) are continuously embedded in \(L^2_{{\mathrm{loc}}}(\lambda _\mathbb {R};G)\),

For general \(u\in \mathrm{dom}(F_\nu )\cap \mathrm{dom}(F_\mu )\), note that the sequence \((u_n)_{n\in \mathbb {N}}:= (\chi _{[-n,\infty )}u)_{n\in \mathbb {N}}\) converges to u in both spaces \(H_{\nu ,0}(\mathbb {R};H)\) and \(H_{\mu ,0}(\mathbb {R};H)\) by dominated convergence. The continuity of \(F_\nu \) and \(F_\mu \) implies convergence of \((F_\nu (u_n))_{n\in \mathbb {N}}\) and \((F_\mu (u_n))_{n\in \mathbb {N}}\) in \(H_{\nu ,0}(\mathbb {R};G)\) and \(H_{\mu ,0}(\mathbb {R};G)\), respectively. By the first step of this proof, \(F_\nu (u_n)=F_\mu (u_n)\) for all \(n\in \mathbb {N}\); hence, the respective limits coincide, again because both spaces \(H_{\nu ,0}(\mathbb {R};G)\) and \(H_{\mu ,0}(\mathbb {R};G)\) are continuously embedded in \(L^2_{{\mathrm{loc}}}(\lambda _\mathbb {R};G)\). \(\square \)

Remark 2.14

In both articles [40, Definition 2.1] and [16, Definition 4.7], where the notion of evolutionary mappings was used, we assumed that the mappings under consideration are densely defined (and linear). Hence, the invariance condition was superfluous there. In the context of SPDEs, however, one should think of F as a stochastic integral. This is a Lipschitz continuous mapping only if the integrands are adapted to the filtration given by the integrating process. The adapted processes form a closed subspace of all stochastic processes, and they will play the role of \(\mathrm{dom}(F_\nu )\). This will be made precise in the next section.

As the final statement of this section, we provide the perturbation result which is applicable to SPDEs.

Corollary 2.15

Let H be a Hilbert space, \(\nu _0>0\). Assume that F is invariant evolutionary (at \(\nu _0\)) as in Definition 2.12 for \(G=H\). Let \(r>\frac{1}{2\nu _0}\), and suppose that \(M:B(r,r)\rightarrow L(H)\) is analytic and bounded, satisfying

for all \(z\in B(r,r)\), all \(\phi \in H\) and some \(c>0\). Assume that \(\Vert F\Vert _{\mathrm{ev},\mathrm{Lip}}< c\) and that \(F_\nu \) is causal for all \(\nu >\nu _0\). Furthermore, suppose that for all \(\nu >\nu _0\) we have \(S_\nu \phi \in \mathrm{dom}(F_\nu )\) for all \(\phi \in \mathrm{dom}(F_\nu )\), with \(S_\nu \) from Theorem 2.8.

Then the mapping

with domain

admits a Lipschitz-continuous inverse mapping defined on the whole of \(\mathrm{dom}(F_\nu )\) for all \(\nu >\nu _0\) large enough. Moreover, \(\Phi _\nu ^{-1}\) is causal.

Proof

Choose \(\nu >\nu _0\) so large that \(\Vert F_\nu \Vert _{\text {Lip}}<c\) and let \(f\in \mathrm{dom}(F_\nu )\). Now, \(u\in \mathrm{dom}(\Phi _\nu )\) satisfies

if and only if u is a fixed point of the mapping

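Note that, since \(\mathrm{dom}(F_\nu )\) is a vector space and \(S_\nu \) maps \(\mathrm{dom}(F_\nu )\) into itself, \(\Psi \) is in fact well-defined as a mapping from \(\mathrm{dom}(F_\nu )\) into itself. Moreover, \(\Psi \) is a contraction by the choice of \(\nu \). Indeed, for \(u,v\in \mathrm{dom}(F_\nu )\) we have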

so \(\Psi \) is strictly contractive as \(\Vert F_\nu \Vert _{\text {Lip}}<c\). Hence, the inverse of \(\Phi _\nu \) is a well-defined Lipschitz continuous mapping, by the contraction mapping principle.

Next, we show causality of the solution operator. For this it suffices to observe that \(\Psi \) is causal. But, by Theorem 2.8, \(\Psi \) is a composition of causal mappings, yielding the causality for \(\Psi \) and, hence, the same for the solution mapping of the equation under consideration in the present corollary. \(\square \)
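To make the fixed-point mechanism of this proof concrete, the following Python sketch iterates \(u\mapsto S(f-F(u))\) on a discrete time grid. It is a toy model under our own assumptions, not the construction of Theorem 2.8: the hypothetical `solve_linear` is a backward-Euler solver for \(u'+cu=f\) (causal, with sup-norm bound \(1/c\)), the hypothetical `perturbation` is the causal map \(F(u)_k=L\sin (u_{k-1})\), and we measure distances in the sup-norm instead of the weighted \(H_{\nu ,0}\)-norm. With \(L<c\), the iteration contracts with ratio at most \(L/c\).

```python
import math


def solve_linear(f, c, h):
    """Backward-Euler solver for u' + c*u = f with u = 0 before the first step.

    The map f -> u is linear, causal and satisfies sup|u| <= sup|f| / c,
    a discrete stand-in for the bound on the solution operator S_nu.
    """
    u, prev = [], 0.0
    for fk in f:
        prev = (prev + h * fk) / (1.0 + c * h)
        u.append(prev)
    return u


def perturbation(u, L):
    """Causal Lipschitz map F(u)_k = L*sin(u_{k-1}), with u_{-1} = 0."""
    return [0.0] + [L * math.sin(x) for x in u[:-1]]


def picard(f, c, L, h, tol=1e-12, max_iter=1000):
    """Iterate u <- S(f - F(u)); for L < c this contracts with ratio <= L/c."""
    u = [0.0] * len(f)
    for _ in range(max_iter):
        rhs = [fk - Fk for fk, Fk in zip(f, perturbation(u, L))]
        new = solve_linear(rhs, c, h)
        step = max(abs(a - b) for a, b in zip(new, u))
        u = new
        if step < tol:
            break
    return u


h, c, L = 0.01, 2.0, 1.5
f = [math.cos(k * h) for k in range(200)]
u = picard(f, c, L, h)
```

Changing f only after some time index leaves the computed u unchanged before that index, which is the discrete counterpart of the causality of \(\Phi _\nu ^{-1}\).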

As already mentioned, we use the above perturbation result to conclude well-posedness of stochastically perturbed partial differential equations. In the application we have in mind, the invariance of \(\mathrm{dom}(F_\nu )\) under \(S_\nu \) is a consequence of causality. In fact, \(\mathrm{dom}(F_\nu )\) will be the subspace of predictable processes in \(H_{\nu ,0}\). A remark on the dependence of \(\Phi ^{-1}_\nu \) on \(\nu \) is in order.

Remark 2.16

In order to show independence of \(\nu \), that is, \(\Phi _\nu ^{-1}f=\Phi _\mu ^{-1}f\) for \(f\in \mathrm{dom}(F_\nu )\cap \mathrm{dom}(F_\mu )\) for \(\nu ,\mu \) chosen large enough, we need to assume condition (2.12) in addition. Indeed, take \(f\in \mathrm{dom}(F_\nu )\cap \mathrm{dom}(F_\mu )\) for \(\nu ,\mu \) sufficiently large as in Corollary 2.15. Then, with \(\Psi _\nu := \overline{(\partial _{0,\nu }M(\partial _{0,\nu }^{-1})+A)}^{-1}\left( f-F_\nu (\cdot )\right) \) (and similarly for \(\Psi _\mu \)), the proof of Corollary 2.15 shows that \(\Phi _\nu ^{-1}(f)=\lim _{n\rightarrow \infty } \Psi _\nu ^n(f)\). But, as \(f\in \mathrm{dom}(F_\nu )\cap \mathrm{dom}(F_\mu )\), we get

by Remark 2.11(a) and Lemma 2.13. In particular, \(\Psi _\nu (f)\in \mathrm{dom}(F_\nu )\cap \mathrm{dom}(F_\mu )\). In the same way, one infers that \(\Psi _\nu ^n(f)=\Psi _\mu ^n(f)\) for all \(n\in \mathbb {N}\). Consequently, \(\Phi _\nu ^{-1}(f)\) and \(\Phi _\mu ^{-1}(f)\) are limits of the same sequence in \(\mathrm{dom}(F_\nu )\) and \(\mathrm{dom}(F_\mu )\), respectively. Thus, as both the latter spaces are continuously embedded into \(L^2_{\text {loc}}(\lambda _\mathbb {R};H)\) these limits coincide.

Due to Remark 2.16, in what follows we will not keep track of the value of \(\nu >0\) in the notation of the operators involved, as it will be clear from the context in which Hilbert space the operators are considered.

3 Application to SPDEs

In this section we show how to apply the solution theory from Sect. 2.2 to an SPDE of the form (1.1). The basic idea is to replace the Hilbert space H in Sect. 2.2 by \(L^2(\mathbb {P})\otimes H(\cong L^2(\mathbb {P};H))\), where \(L^2(\mathbb {P}) = L^2(\Omega ,\mathscr {A},\mathbb {P})\) is the \(L^2\)-space of a probability space \((\Omega ,\mathscr {A},\mathbb {P})\) and H is the Hilbert space where the (unbounded) operator A is thought of as being initially defined. A typical choice would be \(H=L^2(\lambda _D )^{d+1}\), for some open \(D\subseteq {\mathbb {R}^d}\), and A being some differential operator, but also more general operator equations are possible.

As already mentioned in the introduction, we consider the stochastic integral on the right-hand side as a perturbation of the deterministic partial differential equation. Therefore we need to make sense of the term

In Sect. 3.1 we establish that this term is well-defined, and after that, in Sect. 3.2, we use this to treat SPDEs with multiplicative noise by means of the fixed-point argument carried out in Corollary 2.15. In principle, this idea also covers SPDEs with additive noise; however, a slightly different analysis in Sect. 3.3 yields a more general result for SPDEs with additive noise.

3.1 Treatment of the stochastic integral

The concept of stochastic integration we use in the following is the same as in [8], and we repeat the most important points here.

Definition 3.1

(Wiener process) Let G be a separable Hilbert space, \((e_k)_{k\in \mathbb {N}}\) an orthonormal basis of G, \((\lambda _k)_{k\in \mathbb {N}}\in \ell _1(\mathbb {N})\) with \(\lambda _k\geqslant 0\) for all \(k\in \mathbb {N}\), and let \((W_k)_{k\in \mathbb {N}}\) be a sequence of independent real-valued Brownian motions. For \(t\in [0,\infty )\) we define the G-valued Wiener process by

and we set \(W(t)=0\) for all negative times \(t\in (-\infty ,0)\).
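The following Python sketch simulates such a process. It assumes the standard expansion \(W(t)=\sum _k\sqrt{\lambda _k}W_k(t)e_k\) of [8], truncated to finitely many modes, and works with the coordinates \(\langle W(t),e_k\rangle \); the function names are hypothetical. Since \(\mathbb {E}\Vert W(t)\Vert ^2=t\sum _k\lambda _k\), the summability \((\lambda _k)_k\in \ell _1(\mathbb {N})\) is what makes W(t) square integrable.

```python
import math
import random


def q_wiener_path(lambdas, T, n_steps, rng):
    """Simulate coordinates of a truncated G-valued Q-Wiener process on [0, T].

    Assumes W(t) = sum_k sqrt(lambda_k) * W_k(t) * e_k with independent real
    Brownian motions W_k; only the first len(lambdas) modes are kept.
    Returns the grid times and, per time, the list of coordinates <W(t), e_k>.
    """
    dt = T / n_steps
    coords = [0.0] * len(lambdas)
    times, path = [0.0], [coords[:]]
    for i in range(1, n_steps + 1):
        # the increment of sqrt(lambda_k) * W_k over dt has variance lambda_k * dt
        coords = [x + math.sqrt(lam * dt) * rng.gauss(0.0, 1.0)
                  for x, lam in zip(coords, lambdas)]
        times.append(i * dt)
        path.append(coords[:])
    return times, path


def evaluate(times, path, t):
    """W(t) at the nearest grid point, extended by zero to negative times."""
    if t < 0:
        return [0.0] * len(path[0])
    dt = times[1] - times[0]
    i = min(int(round(t / dt)), len(times) - 1)
    return path[i]
```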

In order to make sense of stochastic integration we reinterpret the notion of a filtration in an operator-theoretic way. We will use the notation \(A\leqslant B\) for two bounded linear operators on a Hilbert space H if \(\langle Ax,x\rangle \leqslant \langle Bx,x\rangle \) for all \(x\in H\).

Definition 3.2

(Filtration and predictable processes) (a) Let H be a Hilbert space. A family \(P=(P_t)_{t\geqslant 0}\) is called a filtration on H if, for all \(t\in \mathbb {R}_+\), the operator \(P_t\) is an orthogonal projection and \(P_s\leqslant P_t\leqslant 1_H\) for all \(s\leqslant t\).

(b) Let \(\nu >0\), \(Z:\mathbb {R}\rightarrow H\). We call Z predictable (with respect to P), if

where

and the closure is taken in \(H_{\nu ,0}(\mathbb {R};H)\).

(c) Let G be a Hilbert space. We say that \(Z:\mathbb {R}\rightarrow H\otimes G\) is predictable (with respect to P) with values in G, if Z is predictable with respect to \(P\otimes 1_G:= (P_t\otimes 1_G)_{t\in \mathbb {R}}\).

Remark 3.3

In applications, \(H=L^2(\mathbb {P})\) for some probability space \((\Omega ,\mathscr {A},\mathbb {P})\) and \((P_t)_{t\in \mathbb {R}}\) is given by a family of nested \(\sigma \)-algebras \((\mathcal {F}_t)_{t\in \mathbb {R}}\). More precisely,

In particular, if we are given a G-valued Wiener process W with underlying probability space \((\Omega ,\mathscr {A},\mathbb {P})\) as in Definition 3.1, the natural filtration is given by \(\mathcal {F}_t := \sigma (W_k(s);k\in \mathbb {N},-\infty < s\leqslant t)\), \(t\in \mathbb {R}\). The corresponding family of projections \(P_W=(P_t)_t\) is then given as in (3.2). Hence, \(S_{P_W}\) (see also (3.1)) reads

The elements of \(S_{P_W}\) are also called simple predictable processes. In this case, one could also take \(\mathscr {A}= \mathcal {F}_\infty := \sigma \left( \bigcup _{t\geqslant 0} \mathcal {F}_t\right) \).
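On a finite probability space, the operator-theoretic view of Remark 3.3 can be checked by hand. The sketch below is our own illustration: the sample space of three fair coin flips and the helper names are assumptions. It realizes \(P_t=\mathbb {E}(\cdot |\mathcal {F}_t)\), with \(\mathcal {F}_t\) generated by the first t flips, as averaging over atoms, and verifies that each \(P_t\) is an orthogonal projection on \(L^2(\mathbb {P})\) with \(P_s\leqslant P_t\) for \(s\leqslant t\), as required in Definition 3.2(a).

```python
import itertools
import random

OMEGA = list(itertools.product([0, 1], repeat=3))  # three fair coin flips
PROB = 1.0 / len(OMEGA)                            # uniform probability


def cond_exp(f, t):
    """E(f | F_t) where F_t is generated by the first t flips.

    With the uniform measure, conditioning means averaging f over the atom
    {v : v[:t] == w[:t]} containing w.
    """
    out = {}
    for w in OMEGA:
        atom = [v for v in OMEGA if v[:t] == w[:t]]
        out[w] = sum(f[v] for v in atom) / len(atom)
    return out


def inner(f, g):
    """Inner product of real random variables in L^2(P)."""
    return sum(f[w] * g[w] for w in OMEGA) * PROB
```

Idempotence and symmetry of `cond_exp` make each \(P_t\) an orthogonal projection, and \(\langle P_sf,f\rangle =\Vert P_sf\Vert ^2\leqslant \Vert P_tf\Vert ^2=\langle P_tf,f\rangle \) follows from \(P_s=P_sP_t\).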

For later use, we also have to show that the solution map as defined in Sect. 2.2 does not destroy predictability. This is a direct consequence of the causality of the solution map stated in Theorem 2.8. For ease of presentation, we will freely identify \(H\otimes H_1\) with \(H_1\otimes H\) and \(H_{\nu ,0}(\mathbb {R};H)\) with \(H_{\nu ,0}(\mathbb {R})\otimes H\). This leads to the following loose notation: a continuous linear operator M on H is extended to a continuous linear operator on \(H_1\otimes H\) by \(1_{H_1}\otimes M\) (equivalently, by \(M\otimes 1_{H_1}\) on \(H\otimes H_1\)); we write the tensor product with the identity only if we want to stress that M has been extended, and simply write M otherwise.

Theorem 3.4

Let H, G be Hilbert spaces, P a filtration on H. Let \(M:H_{\nu ,0}(\mathbb {R};G)\rightarrow H_{\nu ,0}(\mathbb {R};G)\) be a causal, continuous linear operator. Then the canonical extension of M to \(H_{\nu ,0}(\mathbb {R};G)\otimes H\) leaves the space of predictable processes invariant, that is,

Proof

By continuity of M, it suffices to prove \((M\otimes 1_H)[S_{P\otimes 1_{G}}]\subseteq H_{\nu ,0}(\mathbb {R};P\otimes 1_G)\). Let \(f\in S_{P\otimes 1_{G}}\). By linearity of \(M\otimes 1_H\), we may assume without loss of generality that \(f=\chi _{(s,t]}\eta \) for some \(\eta =(P_s\otimes 1_G)(\eta )\in H\otimes G\) and \(s,t\in {\mathbb {R}}\). Next, by the density of the algebraic tensor product of H and G, we find sequences \((\phi _n)_n\) in H and \((\psi _n)_n\) in G with the property

But,

Thus, without restriction, we may assume that \(\phi _n=P_s\phi _n\) for all \(n\in {\mathbb {N}}\). Since, by definition, the predictable processes form a closed subspace of \(H_{\nu ,0}(\mathbb {R};H\otimes G)\) and \(M\otimes 1_H\) is continuous, it suffices to prove that for all \(N\in {\mathbb {N}}\),

is predictable. By linearity of \(M\otimes 1_H\), we are left with showing that \((M\otimes 1_H) \left( \chi _{(s,t]}\phi \otimes \psi \right) \) is predictable for all \(\phi \in \mathrm{ran}(P_s)\) and \(\psi \in G\). Note that

Next, causality of M implies that \({{\mathrm{spt}}}M(\chi _{(s,t]}\psi ) \subseteq [s,\infty )\), where \({{\mathrm{spt}}}\) denotes the support of a function. Hence, \(M(\chi _{(s,t]}\psi )\) can be approximated in \(H_{\nu ,0}(\mathbb {R};G)\) by simple functions of the form \(\sum _j\chi _{(s_j,t_j]}g_j\) with \(s_j\geqslant s\) and \(g_j\in G\). Since \(\phi \in \mathrm{ran}(P_s)\subseteq \mathrm{ran}(P_{s_j})\) for \(s_j\geqslant s\), each term \(\chi _{(s_j,t_j]}g_j\otimes \phi \) belongs to \(S_{P\otimes 1_G}\). Thus, \((M(\chi _{(s,t]}\psi ))\otimes \phi \) is predictable. \(\square \)

Next, we come to the discussion of the stochastic integral involved:

Definition 3.5

(Stochastic integral) Let H, G be separable Hilbert spaces

a G-valued Wiener process. Let Z be a predictable stochastic process with respect to the natural filtration induced by W as in Remark 3.3 with values in \(L_2(G,H)\), the space of Hilbert–Schmidt operators from G to H. Then we define the stochastic integral of Z with respect to W for all \(t\in [0,\infty )\) as follows

We put \(\int _0^t Z(s) dW(s):= 0\) for all \(t<0\).

Remark 3.6

(Itô isometry) In the situation of Definition 3.5, the following Itô isometry holds

Moreover, the stochastic integral seen as a process in \(t\in \mathbb {R}\) is continuous and predictable with values in H, see [8, Chap. 4] for details.
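As a sanity check of the Itô isometry in the simplest case, \(G=H=\mathbb {R}\) with a single mode \(\lambda _1=1\), the following Monte Carlo sketch estimates \(\mathbb {E}|\int _0^T W\,dW|^2\) with left-endpoint sums and compares it with the grid analogue \(\sum _i t_i\,\Delta t\) of \(\int _0^T\mathbb {E}|W(s)|^2ds\). The scalar setting, the grid and the function names are assumptions made for illustration; the sketch does not reproduce the Hilbert space statement of [8].

```python
import math
import random


def ito_sum(n_steps, T, rng):
    """Left-endpoint (Ito) sum approximating int_0^T W dW along one path.

    Freezing the integrand at the left endpoint keeps it adapted; this is
    what makes the cross terms vanish in expectation.
    """
    dt = T / n_steps
    w, total = 0.0, 0.0
    for _ in range(n_steps):
        dw = rng.gauss(0.0, math.sqrt(dt))
        total += w * dw      # integrand Z(s) = W(s) taken at the left endpoint
        w += dw
    return total


def isometry_check(n_paths=20000, n_steps=20, T=1.0, seed=0):
    """Return (Monte Carlo estimate of E|I|^2, exact grid value of E sum |Z|^2 dt)."""
    rng = random.Random(seed)
    lhs = sum(ito_sum(n_steps, T, rng) ** 2 for _ in range(n_paths)) / n_paths
    dt = T / n_steps
    rhs = sum((i * dt) * dt for i in range(n_steps))  # E W(t_i)^2 = t_i
    return lhs, rhs
```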

Next, we verify the assumptions of Corollary 2.15 for \(F:u\mapsto \int _0^{(\cdot )}\sigma (u)dW\) with a suitable Lipschitz continuous \(\sigma \). For this, we need the following key observation; recall also Remark 3.3. We also denote the Hilbert–Schmidt norm by \(\Vert \cdot \Vert _{L_2}\).

Theorem 3.7

Let G be a separable Hilbert space, let W be a G-valued Wiener process with underlying probability space \((\Omega ,\mathscr {A},\mathbb {P})\), let \((\mathcal {F}_t)_t\) be the natural filtration induced by W, and let \(P_W=(\mathbb {E}(\cdot |\mathcal {F}_t))_t\) be the corresponding filtration on \(L^2(\mathbb {P})\). Then the mapping

where F is given by

is evolutionary at \(\nu \) for all \(\nu >0\) with eventual Lipschitz constant 0.

Proof

Since F is linear and maps simple predictable processes to predictable processes, it suffices to prove boundedness of F. To this end, let \(Z\in S_{P_W\otimes 1_{L_2(G,H)}}\). Then, using Fubini’s theorem and the Itô isometry, we get

From this we see that F is Lipschitz continuous, and that its Lipschitz constant goes to zero as \(\nu \rightarrow \infty \). \(\square \)
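The decay of the Lipschitz constant rests on the weighted Fubini identity \(\int _0^\infty \big (\int _0^t g(s)\,ds\big )e^{-2\nu t}dt=\frac{1}{2\nu }\int _0^\infty g(s)e^{-2\nu s}ds\) for non-negative g; applied (up to the constant in the Itô isometry) to \(g(s)=\mathbb {E}\Vert Z(s)\Vert ^2\), it gives a Lipschitz bound of order \(\nu ^{-1/2}\). The Python sketch below checks this identity numerically for the sample choice \(g(s)=1+\sin ^2 s\), for which both sides equal \(\frac{3}{2a^2}-\frac{1}{2(a^2+4)}\) with \(a=2\nu \). The helper names and the quadrature rule are our own choices.

```python
import math


def simpson(fun, a, b, n=20000):
    """Composite Simpson rule on [a, b] with an even number n of panels."""
    h = (b - a) / n
    total = fun(a) + fun(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * fun(a + i * h)
    return total * h / 3


def fubini_sides(nu):
    """Both sides of the weighted Fubini identity for g(s) = 1 + sin(s)**2."""
    def g(s):
        return 1.0 + math.sin(s) ** 2

    def G(t):
        return 1.5 * t - 0.25 * math.sin(2 * t)   # G(t) = int_0^t g(s) ds

    T = 30.0 / nu                                 # the e^{-60} tail is negligible
    lhs = simpson(lambda t: G(t) * math.exp(-2 * nu * t), 0.0, T)
    rhs = simpson(lambda s: g(s) * math.exp(-2 * nu * s), 0.0, T) / (2 * nu)
    return lhs, rhs
```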

Remark 3.8

(On space–time white noise) Note that in the proof of the previous theorem, the crucial ingredients are Fubini’s Theorem and the Itô isometry. The Itô isometry is true also for the stochastic integral with the Wiener process attaining values in a possibly larger Hilbert space \(G'\supseteq G\). Hence, the latter theorem remains true, if we consider space–time white noise instead of the white noise discussed in this exposition, see (in particular) [12, formula (3.16)].

3.2 SPDEs with multiplicative noise

In this section we apply the solution theory presented in Sect. 2.2 to equations with a stochastic integral. As already mentioned in the introduction, we consider equations of the form (1.1) with a stochastic integral instead of the more common random noise term \(\sigma (u(t))\dot{W}(t)\). This formulation is however in line with the usual way of formulating an SPDE, since in some sense we consider “a once integrated SPDE” and we interpret the noise term \(\partial _0^{-1}(\sigma (u(t))\dot{W}(t))\) as the stochastic integral in Hilbert spaces with respect to a cylindrical Wiener process denoted by

As a matter of convenience, we treat the case of zero initial conditions first. In Remark 3.10(b) we shall comment on how non-vanishing initial data can be incorporated into our formulation.

Theorem 3.9

(Solution theory for (abstract) stochastic differential equations) Let H, G be separable Hilbert spaces, and let W be a G-valued Wiener process with underlying probability space \((\Omega ,\mathscr {A},\mathbb {P})\). Assume that the filtration \(P_W=(P_t)_{t}\) on \(L^2(\mathbb {P})\) is generated by W (see Remark 3.3). Let \(r>0\), and assume that \(M:B(r,r)\rightarrow L(H)\) is an analytic and bounded function, satisfying

for all \(z\in B(r,r)\), \(\phi \in H\) and some \(c>0\). Let \(A:\mathrm{dom}(A)\subseteq H\rightarrow H\) be skew-self-adjoint, and \(\sigma :H\rightarrow L_2(G,H)\) with

for some \(L\geqslant 0\).

Then there exists \(\nu _1\geqslant 0\) such that for all \(\nu >\nu _1\) and \(f\in H_{\nu ,0}(\mathbb {R};P_W\otimes 1_H)\) the equation

admits a unique solution \(u\in H_{\nu ,0}(\mathbb {R};P_W\otimes 1_H)\). The solution does not depend on \(\nu \) in the sense of Remark 2.11.

Proof

We apply Corollary 2.15 to \(F:Z\mapsto \int _0^{\cdot }\sigma (Z)dW(s)\). By Theorem 3.7 and the Lipschitz continuity of \(\sigma \), we infer that F is invariant evolutionary with eventual Lipschitz constant 0. Indeed, since \(W(t)=0\) for \(t<0\), we may write

But, for all \(\nu >0\), \(s\mapsto \chi _{[0,\infty )}(s)\sigma (0) \in H_{\nu ,0}(\mathbb {R};P_W\otimes 1_{L_2(G;H)})\) and, therefore, we get for \(u\in H_{\nu ,0}(\mathbb {R};P_W\otimes 1_{H})\)

Moreover, it is equally easy to see that

is Lipschitz continuous with Lipschitz constant bounded by L. Hence, by Theorem 3.7, we obtain that

is Lipschitz continuous with eventual Lipschitz constant 0. By Theorem 3.4, we obtain that

with \(S_\nu \) from Theorem 2.8. Hence, the assertion follows from Corollary 2.15. (The independence of the solution from the parameter \(\nu \) follows from Remark 2.16, which relies on Lemma 2.13, because multiplication by a cut-off function leaves the space of predictable processes invariant.) \(\square \)
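The following Python sketch illustrates the fixed-point argument of this proof in a deliberately simple, discretized scalar setting chosen for illustration only: \(H=G=\mathbb {R}\), \(M=1\), \(A=0\), so that the integrated equation formally reads \(u(t)=\int _0^tf(s)\,ds+\int _0^t\sigma (u(s))\,dW(s)\). The stochastic integral is replaced by left-endpoint sums along one simulated path, and all names are hypothetical. Because the discrete map is strictly causal, the Picard iterates coincide with the Euler–Maruyama recursion after as many iterations as there are time steps (a discrete echo of the eventual Lipschitz constant 0 in Theorem 3.7), and \(u_k\) depends only on the increments before step k, mirroring predictability.

```python
import math
import random


def picard_sde(f, sigma, dW, h, n_iter):
    """Picard iteration for u_k = sum_{j<k} (f_j*h + sigma(u_j)*dW_j), u_0 = 0.

    Scalar, discrete analogue of u = int_0^t f ds + int_0^t sigma(u) dW with
    left-endpoint (Ito) sums; u_k only uses increments dW_0, ..., dW_{k-1}.
    """
    n = len(dW)
    u = [0.0] * (n + 1)
    for _ in range(n_iter):
        new = [0.0]
        for k in range(n):
            new.append(new[-1] + f[k] * h + sigma(u[k]) * dW[k])
        u = new
    return u


def euler_maruyama(f, sigma, dW, h):
    """Explicit recursion whose values are the fixed point of picard_sde."""
    u = [0.0]
    for k in range(len(dW)):
        u.append(u[-1] + f[k] * h + sigma(u[-1]) * dW[k])
    return u
```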

Remark 3.10

(a) The above result is, of course, stable under Lipschitz continuous perturbations of the right-hand side. Indeed, if B is a causal, invariant evolutionary mapping leaving the space of predictable processes invariant such that the eventual Lipschitz constant of \(u\mapsto B(u)+\int _0^{(\cdot )}\sigma (u)dW(s)\) is strictly less than \(c>0\), then the assertion of Theorem 3.9 remains valid if one considers the equation

instead of (3.5).

(b) (Initial value problems) Similarly to the deterministic case treated in Lemma 2.10, we can also formulate initial value problems for the special case \(M(\partial _{0,\nu }^{-1})=M_0 +\partial _{0,\nu }^{-1}M_1\). Indeed the following initial value problem

with a given adapted H-valued process f vanishing on \((-\infty ,0]\) can be rephrased by regarding \(M_0u_0\) as an element of \(H\otimes L^2(\mathbb {P})\). With this notation, the initial value problem above can be reformulated as

as our appropriate realization of the initial value problem. Note that the map

is still invariant evolutionary. Thus, solving for \(v\in H_{\nu ,0}(\mathbb {R};H\otimes L^2(\mathbb {P}))\) and following the lines of Lemma 2.10, we obtain \(M_0v(0-)=0=M_0v(0+)\in H_{-1}(A+1)\otimes L^2(\mathbb {P})\), which eventually leads to the attainment of the initial value \(M_0 u (0+)=M_0u_0\) in \(H_{-1}(A+1)\otimes L^2(\mathbb {P})\).

Example 3.11

As a particular example for Remark 3.10(a), any deterministic Lipschitz continuous mapping from H to H is an eligible right-hand side in (3.6). Such mappings have been used in [8, Chap. 7].

3.3 SPDEs with additive noise

In this section we investigate the solution theory of equations with additive noise, that is, the stochastic integral on the right-hand side of (1.1) is replaced by a stochastic process X. Throughout this section, let X be an H-valued stochastic process such that the map \((t,\omega )\mapsto X(t,\omega )\) belongs to \(H_{\nu ,0}(\mathbb {R};H\otimes L^2(\mathbb {P}))\). This includes, in particular, stochastic processes in Hilbert spaces with continuous or càdlàg paths, such as Lévy processes and fractional Brownian motions, provided, for instance, that they vanish for negative times and that \(\mathbb {E}\Vert X(t)\Vert ^2\) grows at most like \(e^{2\omega t}\) for some \(\omega <\nu \). Hence, the equation to be solved is given by

Then we can apply Theorem 2.8 to these equations and obtain a unique solution for any stochastic process X whose paths are in \(H_{\nu ,0}(\mathbb {R};H\otimes L^2(\mathbb {P}))\); this is only a condition on the integrability of its paths.

Now we are going to show a more general result. With the notation as in Theorem 2.8, we consider the equation

where the right-hand side is an element of \(H_{\nu ,-k}(\mathbb {R};H\otimes L^2(\mathbb {P}))\) for some fixed \(k\in \mathbb {N}_0\). Here, the noise term is interpreted as the k-th distributional time derivative of the paths of the stochastic process X. The space \(H_{\nu ,-k}(\mathbb {R};H\otimes L^2(\mathbb {P}))\) is the distribution space belonging to \(\partial _{0,\nu }\) realized as an operator in \(H_{\nu ,0}(\mathbb {R};H\otimes L^2(\mathbb {P}))\). The solution theory for this class of equations is then a corollary to the general solution theory in Theorem 2.8.

Theorem 3.12

Assume that M and A satisfy the conditions in Theorem 2.8. Suppose that X is an H-valued stochastic process whose paths belong to \(H_{\nu ,0}(\mathbb {R};H\otimes L^2(\mathbb {P}))\). Then there exists a unique solution u to (3.7) in \(H_{\nu ,-k}(\mathbb {R};H\otimes L^2(\mathbb {P}))\).

Proof

The assertion follows once we observe that \((\partial _0M(\partial _0^{-1})+A)^{-1}\) can be realized as a continuous linear operator in \(H_{\nu ,-k}(\mathbb {R};H\otimes L^2(\mathbb {P}))\) with operator norm bounded by \(1/c\) (see also Remark 2.9). \(\square \)
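A heuristic for the bound used in this proof: under the Fourier–Laplace transform, \(\partial _{0,\nu }\) becomes multiplication by \(\mathrm {i}t+\nu \), so in a scalar toy case the solution operator is multiplication by the reciprocal of a symbol, and this multiplier commutes with \(\partial _{0,\nu }^{-k}\). The sketch assumes \(M(z)=m_0+zm_1\) with \(m_0\geqslant 0\) and \(A=\mathrm {i}a\) with real a (a skew-self-adjoint operator on \(\mathbb {C}\)); the standard positivity condition \(\mathrm {Re}\,z^{-1}M(z)\geqslant c\) on \(B(r,r)\) (assumed here to be the condition meant) then holds with \(c=m_0/(2r)+m_1\) whenever this is positive. The code checks that the reciprocal symbol is bounded by \(1/c\) for \(\nu >1/(2r)\) on a sample grid; all names and parameter values are illustrative.

```python
def symbol(t, nu, m0, m1, a):
    """Symbol of d0*M(d0^{-1}) + A for M(z) = m0 + z*m1 and A = i*a.

    Under the Fourier-Laplace transform, d_{0,nu} acts as multiplication by
    s = i*t + nu, so d0*M(d0^{-1}) becomes s*m0 + m1.
    """
    s = 1j * t + nu
    return s * m0 + m1 + 1j * a


def sup_inverse(nu, m0, m1, t_values, a_values):
    """Largest sampled |symbol|^{-1}, i.e. the norm of the solution multiplier."""
    return max(1.0 / abs(symbol(t, nu, m0, m1, a))
               for t in t_values for a in a_values)


r, m0, m1 = 1.0, 2.0, 0.5
c = m0 / (2 * r) + m1                       # positivity constant on B(r, r)
t_grid = [0.1 * k for k in range(-200, 201)]
a_grid = [float(a) for a in range(-5, 6)]
bounds = {nu: sup_inverse(nu, m0, m1, t_grid, a_grid) for nu in (0.6, 1.0, 3.0)}
```

Since \(|\text {symbol}|\geqslant \mathrm {Re}(\text {symbol})=\nu m_0+m_1\geqslant c\), the bound is uniform in t and a, which is why it transfers unchanged to every \(H_{\nu ,-k}\).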

We emphasize the main point of this section: the right-hand side only has to be in \(H_{\nu ,-k}(\mathbb {R};H\otimes L^2(\mathbb {P}))\). Note that no stochastic integrals are involved, and we did not make any assumption on the regularity of the noise term \(\partial _{0,\nu }^k X\) other than that it is the k-th time derivative of a stochastic process X. Therefore, we have found a way to make sense of stochastic differential equations in Hilbert spaces where the random noise can be a very irregular object, given by the distributional derivative of any stochastic process (Lévy, Markov, etc.) with only the assumption of integrability of its paths. The solution to these equations is an element of the space of stochastic distributions (in the time argument).

4 Examples

In this section, we give some examples for the solution theory presented above. We emphasize that, at least in principle, the only thing to be taken care of is the formulation of the respective problem as an operator equation in appropriate Hilbert spaces. We start with the equation given formally as a stochastic differential equation and, after some algebraic manipulations, give the appropriate replacement to be solved with the solution theory based on Theorem 3.9 or Theorem 3.12. Throughout this section, we let W be a G-valued Wiener process for some separable Hilbert space G, and we assume that \(\sigma :H_0 \rightarrow L_2(G,H_0)\) is Lipschitz continuous, where \(H_0\) will be clear from the context. For simplicity of the exposition, we assume that the only term on the right-hand side is the stochastic term containing \(\sigma \), and that the initial conditions vanish. How to incorporate a path-wise perturbation and/or non-zero initial conditions was shown in Remark 3.10.

For the stochastic heat equation as well as for the stochastic wave equation, we justify our findings and put them into the perspective of more classical solution concepts. For this, we note a general observation: although the solutions constructed in this exposition live on the whole real time line, they are supported on the non-negative real axis provided the right-hand side is. Indeed, this is a consequence of the causality of the respective solution operators.

4.1 Stochastic heat equation

We consider the following SPDE in an open set \(D\subseteq {\mathbb {R}^d}\)

where \(\Delta \) is the Laplace operator acting on the deterministic spatial variables \(x\in D\) only. This equation has been studied in [39]; see also [8, Example 7.6] for a treatment in Hilbert spaces. We impose the boundary condition by requiring \(u\in H_0^1(D)\), the Sobolev space of once weakly differentiable functions that can be approximated in the \(H^1(D)\)-norm by smooth functions with compact support contained in D. Before we formulate the heat equation in our operator-theoretic setting, we need to introduce some differential operators.

Definition 4.1

We define

and let \(\mathrm {div}:= -{{\mathrm{grad}}}_c^*\),  as well as  .

Throughout this section, we will use these operators to reformulate the SPDEs in an adequate way. The operators with the superscript “\(\circ \)” carry the homogeneous boundary conditions on \(\partial D\): \(\mathring{\mathrm{div}}\) carries zero Neumann boundary conditions and \(\mathring{\mathrm{grad}}\) carries zero Dirichlet boundary conditions. With these operators, we can rewrite the Laplacian with homogeneous Dirichlet boundary conditions as \(\Delta =\mathrm {div}\,\mathring{\mathrm{grad}}\).

We may now come back to the stochastic heat equation. We perform an algebraic manipulation to reformulate it as a system of first order SPDEs. First, we apply the operator \(\partial _0^{-1}\) to Eq. (4.1), see also Remark 2.9(a), and we arrive at

We interpret the right-hand side as the following stochastic integral

Observe that \(\partial _0^{-1}\) and any spatial (partial differential) operator commute (see also Lemma 4.6 below for a more precise statement). Therefore, formally, we can rewrite the second term in (4.2) as

Then, setting  , we arrive at the following first-order system


which we think of being an appropriate replacement for (4.1).

Assuming that \(\sigma :L^2(\lambda _D )\rightarrow L_2(G,L^2(\lambda _D ))\) is Lipschitz continuous, we can use Theorem 3.9 to show the existence and uniqueness of solutions to this system. It only remains to check whether

is skew-self-adjoint and whether

satisfies condition (3.4) for some \(r>0\). The former is easy to check using the definition of \(\mathrm {div}\) and \(\mathring{\mathrm{grad}}\) as skew-adjoints of one another in Definition 4.1 and relying on Remark 2.7. In order to prove the validity of condition (3.4), we let \((\phi ,\psi )\in L^2(\lambda _D )\oplus L^2(\lambda _D )^d\) and compute, writing \(z^{-1}=\mathrm {i}t+\mu \) with \(t\in \mathbb {R}\) and some \(\mu >\frac{1}{2r}\) for \(z\in B(r,r)\),

which yields (3.4). Using Theorem 3.9, we have thus proven the following.

Corollary 4.2

With the notations from the beginning of this section, assume that \(\sigma :L^2(\lambda _D )\rightarrow L_2(G,L^2(\lambda _D ))\) satisfies

for all \(u,v\in H\) and some \(L\geqslant 0\).

Then there exists \(\nu _1\geqslant 0\) such that for all \(\nu >\nu _1\), the equation

has a unique solution \((u,q)\in H_{\nu ,0}(\mathbb {R};P_W\otimes 1_{L^2(\lambda _D )^{d+1}})\), which is independent of \(\nu \) (For a definition of \(P_W\) one might recall Remark 3.3).
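The displayed computation verifying (3.4) is not reproduced above. Assuming the block form \(M(z)=\begin{pmatrix}1&0\\0&0\end{pmatrix}+z\begin{pmatrix}0&0\\0&1\end{pmatrix}\) that the first-order system suggests, one has \(z^{-1}M(z)=\mathrm {diag}(z^{-1},1)\) and hence \(\mathrm {Re}\langle z^{-1}M(z)(\phi ,\psi ),(\phi ,\psi )\rangle =\mathrm {Re}(z^{-1})\Vert \phi \Vert ^2+\Vert \psi \Vert ^2\geqslant \min \{\frac{1}{2r},1\}(\Vert \phi \Vert ^2+\Vert \psi \Vert ^2)\), using that \(z\in B(r,r)\) if and only if \(\mathrm {Re}\,z^{-1}>\frac{1}{2r}\). The following sketch checks both facts pointwise for random samples; complex scalars stand in for function values, d = 3, and the names are hypothetical.

```python
import cmath
import random


def heat_form(z, phi, psi):
    """Re <z^{-1} M(z)(phi, psi), (phi, psi)> for M(z) = diag(1, 0) + z*diag(0, 1).

    phi is a complex scalar (u-component), psi a list of complex numbers
    (q-component); z^{-1} M(z) = diag(z^{-1}, 1).
    """
    return ((1 / z) * phi * phi.conjugate()).real + sum(abs(p) ** 2 for p in psi)


def random_point_in_ball(r, rng):
    """Sample a point of the open disc B(r, r) (centre r, radius r)."""
    rho = r * rng.random() ** 0.5 * 0.999      # stay strictly inside the disc
    return r + rho * cmath.exp(1j * rng.uniform(0, 2 * cmath.pi))
```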

The solution theory is not limited to the case of partial differential operators with constant coefficients. The following remark shows how to invoke partial differential operators with variable coefficients.

Remark 4.3

Starting out with a deterministic bounded measurable matrix-valued coefficient function \(a:D\rightarrow {\mathbb {C}}^{d\times d}\) being pointwise self-adjoint and uniformly strictly positive, that is, \(\langle a(x)\xi ,\xi \rangle \geqslant c\langle \xi ,\xi \rangle \) for all \(x\in D\), \(\xi \in {\mathbb {R}^d}\) and some \(c>0\), we consider the stochastic heat equation

with the same vanishing boundary and initial data as above. Substituting  , we arrive at the system


with the same A as in the constant coefficient case and

Under the conditions on a, we can show the existence and uniqueness of solutions using Theorem 3.9 (Note that \(a(x)=a(x)^*\geqslant c>0\) implies \(a(x)^{-1}\geqslant c/\Vert a(x)\Vert ^2\) in the sense of positive definiteness).
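The parenthetical inequality can be checked numerically. The sketch below is our own illustration restricted to real symmetric \(2\times 2\) matrices (an assumption; the remark allows complex \(d\times d\) coefficients). It verifies \(\langle a^{-1}\xi ,\xi \rangle \geqslant \frac{1}{\Vert a\Vert }|\xi |^2\geqslant \frac{c}{\Vert a\Vert ^2}|\xi |^2\) for random positive definite a with smallest eigenvalue c; the first inequality is slightly sharper and implies the stated one, since \(c\leqslant \Vert a\Vert \).

```python
import math
import random


def eig_sym2(p, q, s):
    """Eigenvalues (min, max) of the real symmetric matrix [[p, q], [q, s]]."""
    mean, rad = (p + s) / 2, math.hypot((p - s) / 2, q)
    return mean - rad, mean + rad


def inverse_form(p, q, s, x, y):
    """<a^{-1} xi, xi> for a = [[p, q], [q, s]] and xi = (x, y)."""
    det = p * s - q * q
    # a^{-1} = [[s, -q], [-q, p]] / det
    return (s * x * x - 2 * q * x * y + p * y * y) / det
```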

4.1.1 Connection to variational solutions

We will compare our solution to the one defined in [36, Definition 2.1] (with \(g=0\) and \(\phi =0\)), see also [32, 34]. We understand the following notion as a variational/weak solution to the heat equation (4.1):

Definition 4.4

A predictable stochastic process u supported on \([0,\infty )\) with values in \(H^1_0(D)\) is called a variational solution to the stochastic heat equation if

for all \(0\leqslant T<\infty \) almost surely, has at most exponential growth in T almost surely (with some exponential growth bound \(\nu >0\)), and for all \(\eta \in H_0^1(D)\) the following equation holds almost surely for all \(t\geqslant 0\)

where \((e_k)_{k\in \mathbb {N}}\) is an orthonormal basis of \(L^2(\lambda _D )\), \((\lambda _k)_{k\in \mathbb {N}}\in \ell _1(\mathbb {N})\) is the sequence of eigenvalues of the covariance operator of W, and \((W_k)_{k\in \mathbb {N}}\) is a sequence of independent one-dimensional Brownian motions.

In the next few lines, we will show that any variational solution in the sense of Definition 4.4 is a solution as constructed in Corollary 4.2. In order to avoid cluttered notation, we shall occasionally omit the real line from the notation of the vector-valued spaces studied in the following. For instance, we write \(H_{\nu ,0}(H_0^1(D))\) instead of \(H_{\nu ,0}({\mathbb {R}};H_0^1(D))\).

Proposition 4.5

Let \(\nu >0\), \(u\in H_{\nu ,0}({\mathbb {R}};H_0^1(D)\otimes L^2(\mathbb {P}))\) a variational solution to the stochastic heat equation. Then,  solves the equation for (u, q) given in Corollary 4.2.


Proof

Since u is supported on \([0,\infty )\) only, we get, using Remark 2.2,

Next, by Definition 3.5, we obtain

Hence, for all \(v\in H_{\nu ,0}({\mathbb {R}}), \eta \in H_0^1(D)\), we obtain from (4.5)

In consequence, by linearity and continuity, we obtain for all \(\phi \in H_{\nu ,0}({\mathbb {R}};H_0^1(D))\)

Substituting  , we obtain  . Hence, (u, q) solves the equation in Corollary 4.2 (even without the closure bar). \(\square \)

For the reverse direction, we need an additional regularity assumption. Before commenting on this, we derive an equality that nearly coincides with (4.5). For this, we need the following abstract prerequisite.

Lemma 4.6

Let \(H_0\), \(H_1\) be Hilbert spaces, let \(\nu >0\), and let \(C:\mathrm{dom}(C)\subseteq H_0 \rightarrow H_1\) be densely defined and closed. Then \(\overline{\partial _0^{-1}C} = C\partial _0^{-1}\).

Proof

The operator \(\partial _0^{-1}\) is continuous from \(H_{\nu ,0}(H_0)\) into itself and, since C is closed, it commutes with C in the sense that \(\partial _0^{-1}C\subseteq C\partial _0^{-1}\); in addition, \(C\partial _0^{-1}\) is closed as the composition of a closed operator with a bounded one. Hence, \(\overline{\partial _0^{-1}C}\subseteq C\partial _0^{-1}\). On the other hand, note that \((1+\varepsilon C^*C)^{-1}\rightarrow 1\) and \((1+\varepsilon CC^*)^{-1}\rightarrow 1\) in the strong operator topology as \(\varepsilon \rightarrow 0\). Thus, for \(u\in \mathrm{dom}(C\partial _0^{-1})\) we let \(u_\varepsilon := (1+\varepsilon C^*C)^{-1}u\) and get \(u_\varepsilon \in \mathrm{dom}(C)=\mathrm{dom}(\partial _0^{-1} C)\). Moreover, \(C (1+\varepsilon C^*C)^{-1}\) is a continuous operator and \((1+\varepsilon CC^*)^{-1} C\subseteq C (1+\varepsilon C^*C)^{-1}\). Hence, for \(\varepsilon >0\)

Letting \(\varepsilon \rightarrow 0\) in the latter equality, we obtain \(u\in \mathrm{dom}(\overline{\partial _0^{-1}C})\) and \(\overline{\partial _0^{-1}C} u =C\partial _0^{-1} u\), which yields the assertion. \(\square \)
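Two facts drive this proof: \((1+\varepsilon C^*C)^{-1}\rightarrow 1\) strongly, and \(C(1+\varepsilon C^*C)^{-1}\) is bounded for each \(\varepsilon >0\), with norm at most \(\sup _{\lambda \geqslant 0}\sqrt{\lambda }/(1+\varepsilon \lambda )=1/(2\sqrt{\varepsilon })\). A toy sketch of both, assuming a hypothetical diagonal operator \(Ce_k=ke_k\) on a truncated sequence space (an illustration of our own, not taken from the text); for such a diagonal C the intertwining relation \((1+\varepsilon CC^*)^{-1}C\subseteq C(1+\varepsilon C^*C)^{-1}\) is trivial:

```python
import math

N = 10_000                      # truncation of the hypothetical model C e_k = k e_k
ks = range(1, N + 1)

def l2_norm(vec):
    return math.sqrt(sum(x * x for x in vec))

u = [1.0 / k for k in ks]       # Cu = (1, 1, 1, ...) is not square-summable as N grows
errors, op_norms, bounds = [], [], []
for eps in (1e-1, 1e-2, 1e-3, 1e-4):
    # (1 + eps C*C)^{-1} divides the k-th coordinate by 1 + eps k^2
    u_eps = [uk / (1.0 + eps * k * k) for k, uk in zip(ks, u)]
    errors.append(l2_norm([a - b for a, b in zip(u_eps, u)]))
    # C (1 + eps C*C)^{-1} multiplies the k-th coordinate by k / (1 + eps k^2)
    op_norms.append(max(k / (1.0 + eps * k * k) for k in ks))
    bounds.append(1.0 / (2.0 * math.sqrt(eps)))
print(errors)
print(list(zip(op_norms, bounds)))
```

The errors decrease with \(\varepsilon \), while the operator norms of \(C(1+\varepsilon C^*C)^{-1}\) blow up like \(\varepsilon ^{-1/2}\); the convergence is strong, not in operator norm.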

Theorem 4.7

Let \((u,q)\in H_{\nu ,0}({\mathbb {R}};(L^2(\lambda _D )\times L^2(\lambda _D )^d)\otimes L^2(\mathbb {P}))\) be a predictable process solving the equation in Corollary 4.2. Then \(q\in \mathrm{dom}(\mathrm {div})\) and  ,  and

almost surely.

Proof

By causality and since \(W=0\) for negative times, we infer that (u, q) is supported on \([0,\infty )\). Moreover, by Remark 2.9 (a) and (b), we obtain that

Furthermore, we have

Hence, the first line of the latter equality yields

Thus, using \((1+\varepsilon \partial _0)^{-1}\rightarrow 1\) as \(\varepsilon \rightarrow 0\) in the strong operator topology, we obtain by the closedness of \(\mathrm {div}\) that

Next, the second line of (4.7) reads

Thus, by Lemma 4.6, we obtain as \(\varepsilon \rightarrow 0\),

Therefore, from (4.8) we read off

Thus, testing the latter equality with  , and using that


we infer the asserted equality. \(\square \)
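The proof above regularizes with \((1+\varepsilon \partial _0)^{-1}\), which on \(H_{\nu ,0}\) acts as causal exponential smoothing, \(((1+\varepsilon \partial _0)^{-1}f)(t)=\int _{-\infty }^t\varepsilon ^{-1}e^{-(t-s)/\varepsilon }f(s)\,\mathrm ds\), that is, the causal solution of \(\varepsilon v'+v=f\). A rough numerical sketch of its strong convergence to the identity as \(\varepsilon \rightarrow 0\), assuming a hypothetical scalar \(f(t)=te^{-t}\) on \([0,\infty )\), \(\nu =1\), and an implicit Euler discretization of our choosing:

```python
import math

def resolvent_smoothing(values, dt, eps):
    """Implicit Euler approximation of v = (1 + eps partial_0)^{-1} f, i.e. the
    causal solution of eps v' + v = f with v = 0 before time 0."""
    v, out = 0.0, []
    for fk in values:
        v = (v + (dt / eps) * fk) / (1.0 + dt / eps)
        out.append(v)
    return out

def weighted_norm(values, dt, nu):
    """Riemann-sum approximation of the H_{nu,0} norm on the grid t_k = k * dt."""
    return math.sqrt(dt * sum(v * v * math.exp(-2.0 * nu * k * dt)
                              for k, v in enumerate(values)))

dt, T, nu = 1e-3, 15.0, 1.0
n_steps = int(round(T / dt))
f = [k * dt * math.exp(-k * dt) for k in range(n_steps + 1)]  # hypothetical f(t) = t e^{-t}
errs = []
for eps in (0.5, 0.1, 0.02):
    v = resolvent_smoothing(f, dt, eps)
    errs.append(weighted_norm([a - b for a, b in zip(v, f)], dt, nu))
print(errs)
```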

Corollary 4.8

In the situation of Theorem 4.7, we additionally assume that \(u\in H_{\nu ,0}(H_0^1(D)\otimes L^2(\mathbb {P}))\). Then u is a solution in the sense of Definition 4.4.

4.2 Stochastic wave equation

Similarly to the treatment of the stochastic heat equation in the previous section, we now show how to reformulate the stochastic wave equation as a first-order system and then prove the existence and uniqueness of solutions. This equation has been treated in [7, 39] with a random-field approach and, for instance, in [8, Example 5.8, Sect. 13.21] with a semi-group approach. Consider the following equation

As in the previous section, we first apply the operator \(\partial _0^{-1}\) to (4.9), write  , and finally define  . With these manipulations, we arrive at the following first-order system

which we regard as the appropriate formulation of the stochastic wave equation. For Lipschitz continuous \(\sigma :L^2(\lambda _D )\rightarrow L_2(G,L^2(\lambda _D ))\), the existence and uniqueness of a solution to (4.10) follows from Theorem 3.9. It only remains to verify that

satisfies condition (3.4) for all \(z\in B(r,r)\), for some \(r>0\). This is straightforward (see also the computation in (4.4)). We thus obtain the following result:

Corollary 4.9

There is \(\nu _0>0\) such that for all \(\nu \geqslant \nu _0\), there is a unique \((u,v)\in H_{\nu ,0}({\mathbb {R}};P_W\otimes 1_{L^2(\lambda _D )^{d+1}})\) such that

The solution is independent of \(\nu \).

We emphasize that the way of writing the stochastic wave equation into a first-order-in-time system is not unique. Indeed, a more familiar way is to set \(w:= -\Delta \partial _0^{-1}u\). With this we arrive at the following system

The latter system is essentially the same as the system in (4.10); see [27, pp. 16-17] for a mathematically rigorous statement. However, the spatial Hilbert spaces differ: in (4.10) the spatial Hilbert space is \(H=L^2(\lambda _D )\oplus L^2(\lambda _D )^d\), whereas in (4.11) it is \(H=H_0^1(D)\oplus L^2(\lambda _D )\). The domains of the two spatial partial differential operators

are  and \(\mathrm{dom}(\Delta )\oplus H_0^1(D)\), respectively, where  . Nevertheless, the solvability of one system implies the solvability of the other. In any case, for bounded D, endowing \(H_0^1(D)\) with the scalar product \((u,v)\mapsto \langle {{\mathrm{grad}}}u,{{\mathrm{grad}}}v\rangle \), one can show that

is skew-self-adjoint. For the latter assertion, it suffices to note the following lemma:

Lemma 4.10

Assume that \(D\subseteq {\mathbb {R}}^d\) is bounded. Let  with \(Cu=\Delta u\). Then, for \(C^*:\mathrm{dom}(C^*)\subseteq L^2(\lambda _D )\rightarrow H_0^1(D)\) we have


where \(H_0^1(D)\) is endowed with the scalar product \((u,v)\mapsto \langle {{\mathrm{grad}}}u,{{\mathrm{grad}}}v\rangle \).

Proof

Let \(v\in L^2(\lambda _D )\) and \(f\in H_0^1(D)\). Then we compute

But  is continuously invertible. In particular,  is onto. Hence,

The assertion follows. \(\square \)
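Computations of the type used in this proof rest on integration by parts: for f and v vanishing on the boundary of D, \(\langle \Delta f,v\rangle _{L^2}=-\langle {{\mathrm{grad}}}f,{{\mathrm{grad}}}v\rangle \). A one-dimensional finite-difference sketch, assuming \(D=(0,1)\) and a uniform grid (our own discretization, not part of the text), in which this identity holds exactly as summation by parts:

```python
import math
import random

def dirichlet_laplacian(u, h):
    """Second difference (u[i+1] - 2u[i] + u[i-1]) / h^2 at the interior nodes;
    u holds interior values only, boundary values are zero."""
    padded = [0.0] + list(u) + [0.0]
    return [(padded[i + 1] - 2.0 * padded[i] + padded[i - 1]) / h**2
            for i in range(1, len(padded) - 1)]

def forward_difference(u, h):
    """(u[i+1] - u[i]) / h on all n + 1 cells, again with zero boundary values."""
    padded = [0.0] + list(u) + [0.0]
    return [(padded[i + 1] - padded[i]) / h for i in range(len(padded) - 1)]

n = 50
h = 1.0 / (n + 1)
rng = random.Random(1)
f = [rng.uniform(-1.0, 1.0) for _ in range(n)]
v = [rng.uniform(-1.0, 1.0) for _ in range(n)]

# Discrete <Delta f, v>_{L^2} and -<grad f, grad v>_{L^2}
lhs = h * sum(a * b for a, b in zip(dirichlet_laplacian(f, h), v))
rhs = -h * sum(a * b for a, b in zip(forward_difference(f, h), forward_difference(v, h)))
print(lhs, rhs)
```

The two numbers agree up to rounding for any grid functions with zero boundary values, mirroring the continuous identity on \(H_0^1(D)\).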

Hence, in view of Lemma 4.10, our solution theory, Theorem 3.9, applies to either formulation, (4.10) or (4.11). We also note that the functional analytic framework allows us to treat deterministic variable coefficients \(a:D\rightarrow {\mathbb {C}}^{d\times d}\) satisfying the same assumptions as in Remark 4.3, and hence the corresponding wave equation

or, rather,

4.2.1 Connection to mild solutions

Next, we relate the solution obtained for (4.11) to the more classical solution derived by means of \(C_0\)-semi-groups. On a bounded open set \(D\subseteq {\mathbb {R}}^d\), consider the classical reformulation of the stochastic wave equation as a first-order system

with zero initial conditions and homogeneous Dirichlet boundary conditions; see [8, Example 5.8] for this reformulation. So,  with a suitable domain. Using the semi-group approach in [8], the solution to (4.12) is given by

where \(\mathbf {S}(t)\) is the semi-group defined by

However, in (4.11), we have arrived at a different reformulation as a first-order system, given by

Our aim in this section will be to establish the following result.

Theorem 4.11

Let (u, v) satisfy (4.13). Then (u, w) solves (4.14) with

For this, we need some elementary prerequisites:

Lemma 4.12

Let \(r,t\in {\mathbb {R}}\). Then the following statements hold.

(a) For any \(\zeta \in {\mathbb {R}}_{>0}\), we have

and

(b) For all  we have


and

Proof

The identities in (a) follow by direct computation. To settle (b), we use the spectral theorem for the (strictly positive definite) Dirichlet–Laplace operator \(-\Delta \) on the underlying bounded open set D; with this, (b) follows from (a) by Fubini’s theorem. \(\square \)
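The proof of (b) reduces operator identities to scalar ones on each eigenvalue \(\zeta >0\) of \(-\Delta \). As an illustration of this mode-by-mode reasoning only (the specific identities in (a) are not reproduced here), the following sketch assumes the usual wave group for \(u'=v\), \(v'=\Delta u\), restricted to one eigenmode, and checks numerically its semigroup property and its generator:

```python
import math

def wave_mode(t, zeta):
    """Solution operator of u' = v, v' = -zeta * u on one eigenmode of the
    Dirichlet Laplacian (-Delta e = zeta e, zeta > 0)."""
    w = math.sqrt(zeta)
    c, s = math.cos(w * t), math.sin(w * t)
    return [[c, s / w], [-w * s, c]]

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def max_abs_diff(a, b):
    return max(abs(a[i][j] - b[i][j]) for i in range(2) for j in range(2))

zeta, t, s = 9.0, 0.3, 0.45
# Semigroup property: S(t + s) = S(t) S(s)
semigroup_err = max_abs_diff(wave_mode(t + s, zeta),
                             matmul(wave_mode(t, zeta), wave_mode(s, zeta)))
# Generator: (S(h) - I) / h should approach [[0, 1], [-zeta, 0]] as h -> 0
h = 1e-6
S_h = wave_mode(h, zeta)
generator = [[(S_h[0][0] - 1.0) / h, S_h[0][1] / h],
             [S_h[1][0] / h, (S_h[1][1] - 1.0) / h]]
generator_err = max_abs_diff(generator, [[0.0, 1.0], [-zeta, 0.0]])
print(semigroup_err, generator_err)
```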

We now turn to the proof of the main result of this section.

Proof of Theorem 4.11

Using (4.15) together with the second line of (4.13), we obtain for \(\phi \in \mathrm{dom}(\Delta )\)

With Lemma 4.12, we further obtain

where in the last equality we used the first line of (4.13). We read off \(\partial _0^{-1}u \in \mathrm{dom}(\Delta )\) and

Moreover, we compute with (4.13) and Lemma 4.12,

Therefore, together with (4.15), we get

As before, multiplying both of these equations by \((1+\varepsilon \partial _0)^{-1}\) and setting \(u_\varepsilon := (1+\varepsilon \partial _0)^{-1}u\) as well as \(w_\varepsilon := (1+\varepsilon \partial _0)^{-1}w\), we obtain

Hence, by letting \(\varepsilon \rightarrow 0\), we obtain the assertion. \(\square \)

4.3 Stochastic Schrödinger equation with additive noise

In this section we treat the stochastic Schrödinger equation on an open set \(D\subseteq {\mathbb {R}^d}\), see for instance [3, Chap. 2]. It can be formulated as

with appropriate boundary conditions such that \(\Delta \) becomes a self-adjoint operator (recall that then \(\mathrm {i}\Delta \) is skew-self-adjoint) and

being Lipschitz continuous with Lipschitz constant less than \(\nu \). We assume that \(\partial _0 X\) is the derivative of a stochastic process as discussed in Sect. 3.3. Then the stochastic Schrödinger equation is well-posed according to Theorem 3.12.

4.4 Stochastic Maxwell equations

Before discussing the stochastic Maxwell equations, we need to introduce some vector-analytic operators. In the whole section let \(D\subseteq \mathbb {R}^3\) be open.

Definition 4.13

We define

where \(\partial _1,\partial _2,\partial _3\) are the partial derivatives with respect to the first, second and third spatial variable, respectively. Let \({{\mathrm{curl}}}:= {{\mathrm{curl}}}_c^*\) and  .


We introduce the linear operators \(\epsilon ,\mu ,\zeta \in L(L^2(\lambda _D )^3)\), modeling the material coefficients dielectric permittivity, magnetic permeability and electric conductivity, respectively, with the following additional properties:

- \(\epsilon \) is self-adjoint and non-negative, \(\epsilon ^*=\epsilon \geqslant 0\),
- \(\mu \) is self-adjoint, \(\mu ^*=\mu \),
- both the operators \(\mu \) and \(\nu \epsilon +\mathfrak {R}\zeta \) are strictly positive definite if \(\nu >0\) is chosen large enough.
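The third condition can be made concrete in a hypothetical special case of our own choosing: if \(\epsilon \) and \(\mathfrak {R}\zeta \) act as multiplication by piecewise constant scalars \(\epsilon _i\geqslant 0\) and \(\rho _i\) on finitely many subregions, then \(\nu \epsilon +\mathfrak {R}\zeta \geqslant c>0\) holds precisely when \(\nu \epsilon _i+\rho _i\geqslant c\) for every i. In particular, no choice of \(\nu \) helps on pieces where \(\epsilon _i=0\) and \(\rho _i<c\). A small sketch computing the smallest admissible \(\nu \geqslant 0\) for a prescribed c:

```python
def minimal_nu(eps, re_zeta, c):
    """Smallest nu >= 0 with nu * eps_i + re_zeta_i >= c on every piece i,
    or None if no nu works. eps_i >= 0 are hypothetical piecewise constant
    permittivities, re_zeta_i the real parts of the conductivities."""
    nu = 0.0
    for e, z in zip(eps, re_zeta):
        if e < 0:
            raise ValueError("the permittivity must be non-negative")
        if e == 0:
            if z < c:
                return None  # increasing nu cannot help where eps vanishes
        else:
            nu = max(nu, (c - z) / e)
    return nu

# Hypothetical three-piece and two-piece configurations
print(minimal_nu([1.0, 0.0, 2.0], [-3.0, 0.5, 0.0], c=0.5))  # 3.5
print(minimal_nu([1.0, 0.0], [0.0, 0.1], c=0.5))             # None
```

Since \(\epsilon \geqslant 0\), every \(\nu \) at least as large as the returned value also works, consistent with "\(\nu >0\) chosen large enough".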

Then Maxwell’s equations can be written in the form

This first-order system is well-posed: for every given right-hand side \(J\in H_{\nu ,0}(\mathbb {R};L^2(\lambda _D )^3)\), the external currents, there is a unique solution \((E,H)\in H_{\nu ,0}(\mathbb {R};L^2(\lambda _D )^6)\), which depends continuously on J. Indeed, this follows from our deterministic solution theory in Theorem 2.8; see [26, Sect. 3.1.12.4] for a detailed treatment. Incorporating stochastic integrals into the Maxwell equations thus leads to

which in turn is well-posed by Theorem 3.9.

Remark 4.14

To the best of our knowledge, Maxwell’s equations with multiplicative noise have not yet been discussed in the literature. The above formulation is one possible way to make sense of ‘stochastic Maxwell equations with multiplicative noise’. Stochastic Maxwell equations with additive noise, however, have been studied, see for instance [6, 14, 15]; a solution theory for this class of problems can again be found in Sect. 3.3.

4.5 SPDEs with fractional time derivatives

Owing to the freedom in choosing the operator coefficient \(M(\partial _0^{-1})\) in our ansatz, we may also treat SPDEs with fractional time derivatives. As an instance, consider the following super-diffusion equation for \(\alpha \in (0,1)\):

subject to zero initial conditions and, for instance, homogeneous Neumann boundary conditions on an open set \(D\subseteq {\mathbb {R}^d}\). Note that these homogeneous boundary conditions can be incorporated into the abstract setting (regardless of the smoothness of the boundary of D) by writing  , where  carries the homogeneous Neumann boundary conditions. As in the previous sections, we define an auxiliary unknown \(v:= -{{\mathrm{grad}}}\partial _0^{-1}u\) and obtain the following system

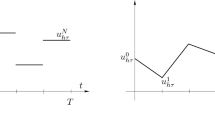

as the appropriate formulation for the stochastic super-diffusion equation discussed above. Recall that the part with the time derivative is given by \(\partial _0M(\partial _0^{-1})\), where here M is given by

One can show that this M satisfies the strict positive definiteness condition (3.4) for all \(z\in B(r,r)\) and all \(r>0\); see [30, Lemma 2.1 or Theorem 3.5]. Hence, Theorem 3.9 is applicable and well-posedness is established.
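The precise statement from [30] is not reproduced here. As a hedged illustration of the kind of scalar estimate behind such positivity checks: for \(z\in B(r,r)\) one has \(\mathfrak {R}z^{-1}>1/(2r)\), and hence, for \(\beta \in (0,1)\) and the principal branch, \(\mathfrak {R}(z^{-1})^{\beta }\geqslant \cos (\beta \pi /2)(2r)^{-\beta }>0\), uniformly in \(z\in B(r,r)\). How exactly such a power enters the M above is an assumption on our part; the sketch merely samples the disc and checks this inequality numerically:

```python
import cmath
import math

def min_margin(r, beta, n_rho=100, n_theta=200):
    """Sample z in the open disc B(r, r) and return the smallest value of
    Re((1/z)**beta) - cos(beta*pi/2) * (2r)**(-beta), principal branch."""
    bound = math.cos(beta * math.pi / 2) * (2 * r) ** (-beta)
    worst = math.inf
    for i in range(n_rho):
        rho = (i + 0.5) / n_rho                 # radial fraction in (0, 1)
        for m in range(n_theta):
            z = r + r * rho * cmath.exp(1j * 2 * math.pi * m / n_theta)
            worst = min(worst, ((1 / z) ** beta).real - bound)
    return worst

margins = {(r, beta): min_margin(r, beta)
           for r in (0.5, 1.0, 10.0) for beta in (0.25, 0.5, 0.9)}
print(min(margins.values()))
```

Python's complex power uses the principal branch, matching the convention assumed in the inequality.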

Remark 4.15