Abstract

Some accounts of evidence regard it as an objective relationship holding between data and hypotheses, perhaps mediated by a testing procedure. Mayo’s error-statistical theory of evidence is an example of such an approach. Such a view leaves open the question of when an epistemic agent is justified in drawing an inference from such data to a hypothesis. Using Mayo’s account as an illustration, I propose a framework for addressing the justification question via a relativized notion, which I designate security, meant to conceptualize practices aimed at the justification of inferences from evidence. I then illustrate the use of this notion by showing how two quite different theoretical approaches to model criticism in statistics can each be viewed as a strategy for securing claims about statistical evidence.

Notes

The precise sense in which objectivity can be predicated of the ES account, however, is a subtle and disputed issue. Suffice it here to note two points. First, the error probabilities that figure into the application of criteria SR1 and SR2 are, as will be discussed, predicated on a statistical model on which the statistical inference is premised. Much of the present paper is concerned with statistical procedures for coping with the possible inadequacy of these premises for the kind of inference that the investigator seeks to draw. Second, although the very fact that such premises are susceptible to the kind of criticism discussed here would seem to indicate that some notion of objectivity is applicable, the exact sense in which such a notion applies is not a simple matter of “correspondence to the facts.” As will be seen, for example, the procedure discussed here for testing statistical models does not test the truth of their defining assumptions but their statistical adequacy (see Section 5). I simply note these issues here, as the main concern of this paper is not objectivity per se (granting that the notion does play a role in the discussion), but rather the justification of statistical inferences.

In epistemology, warrant is sometimes used to denote that which, in addition to truth, qualifies a belief as knowledge. For our purposes, it will suffice to regard the use of the term here as synonymous with justification.

Mayo has argued that Neyman and Pearson themselves should not be understood as having consistently advocated the orthodox N-P approach. Pearson distanced himself from the behavioristic interpretation typically associated with orthodox N-P (Mayo 1992, 1996; Pearson 1962), and Neyman advocated post-data power analyses similar to those employed in ES (see, e.g., Mayo and Spanos 2006; Neyman 1955).

Although degrees of severity as here deployed look mathematically like ordinary “p-values” as used in Fisherian significance testing, their methodological use in a post-data meta-statistical scrutiny distinguishes them from p-values.
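The mathematical resemblance can be made concrete in a minimal sketch, assuming the simplest textbook case rather than any example from this paper: n independent Normal observations with known σ, and a one-sided test of H0: μ ≤ μ0 against H1: μ > μ0 based on the sample mean. Following the post-data assessment described by Mayo and Spanos (2006), the severity with which the claim μ > μ1 passes, given an observed mean x̄, is the probability of a sample mean no larger than x̄ were μ equal to μ1. At μ1 = μ0 this is simply one minus the p-value; the difference lies in use, since severity is evaluated across a range of discrepancies μ1 after the data are in. The function names and numbers are hypothetical.

```python
from math import erf, sqrt

def normal_cdf(z):
    # Standard Normal CDF expressed through the error function.
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))

def p_value(xbar, mu0, sigma, n):
    # Fisherian p-value for H0: mu <= mu0 vs H1: mu > mu0, i.e. P(Xbar >= xbar; mu = mu0).
    return 1.0 - normal_cdf((xbar - mu0) / (sigma / sqrt(n)))

def severity_greater(xbar, mu1, sigma, n):
    # Post-data severity for the claim mu > mu1, i.e. P(Xbar <= xbar; mu = mu1).
    return normal_cdf((xbar - mu1) / (sigma / sqrt(n)))

# Hypothetical numbers: n = 100, sigma = 2, mu0 = 0, observed mean 0.4.
n, sigma, mu0, xbar = 100, 2.0, 0.0, 0.4
print(p_value(xbar, mu0, sigma, n))
for mu1 in (0.0, 0.2, 0.4, 0.6):
    print(mu1, severity_greater(xbar, mu1, sigma, n))
```

With these numbers the p-value is about 0.023, while the severity for μ > μ1 falls from about 0.98 at μ1 = 0 to exactly 0.5 at μ1 = 0.4, the observed mean: the same arithmetic, but read as a profile of which discrepancies are and are not well warranted.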

Just how to formulate the semantics of such statements is, however, contested (see, e.g., Kratzer 1977; DeRose 1991; Chalmers 2011). To note one difficulty for Hintikka’s original account (Hintikka 1962), consider the status of mathematical theorems. Arguably, if I know the axioms of number theory and Goldbach’s conjecture is true, then it does follow from what I know (though I do not realize this). Yet it also seems correct to say that it is possible, for all I know, that Goldbach’s conjecture is false, even if I do know the axioms of number theory. More recently, contextualist and relativist approaches have been formulated (DeRose 1991; MacFarlane 2011). One reason for relativizing security to epistemic situations as characterized above, rather than to, e.g., sets of beliefs, is that such approaches to modal semantics make factors beyond an agent’s beliefs or knowledge relevant to the status of an epistemic modal proposition. Relativizing security to epistemic situations makes the account flexible enough to be compatible with such varied approaches to modal semantics.

As suggested by an anonymous referee, one might wish to formulate the idea of security in terms of ‘possible worlds’ (as used by Lewis and many others) or ‘state descriptions’ (as used by Carnap). No difficulty seems to arise on either approach, provided one keeps firmly in view that the modality at issue is neither subjunctive nor logical, but epistemic in nature.

In his history of robust estimation, Stigler (1973) traces the first mathematical contributions to the subject back to Laplace, but focuses on the work of Simon Newcomb and of P. J. Daniell as clear and rigorous early exemplars.

Henceforth, in making general points about robustness theory, I shall refer only to estimators. It must be borne in mind that robustness theory has been developed for testing as well as estimation and all the same general points obtain in that context, but with attention shifted from the properties of estimators to those of test statistics.

Jan Magnus and his collaborators have pursued a distinct but related approach to this problem that is noteworthy, though it is not explored in the present paper. Magnus advocates a model-perturbation approach to sensitivity analysis that studies “the effect of small changes in model assumptions on an estimator of a parameter of interest” (see, e.g., Magnus and Vasnev 2007; Magnus 2007).

Here I discuss these developments in the context of frequentist statistics in the Neyman–Pearson tradition. However, robustness theory is also applicable in Bayesian settings and likelihood-based approaches (Hampel et al. 1986, 52–56). That this is so provides additional support for the argument above regarding the relevance of security for statistical approaches other than ES.

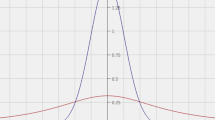

As Huber notes, this class turns out to include as special cases the sample mean (ρ(t) = t²), the sample median (ρ(t) = |t|), and all maximum likelihood estimators (ρ(t) = −log f(t), where f is the assumed density of the distribution).
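These special cases can be checked numerically. The sketch below assumes the standard form of Huber's location M-estimator, in which the estimate T minimizes Σρ(xᵢ − T) over the sample; the ternary search it uses is valid only for convex ρ. It recovers the sample mean and median from the two choices of ρ above and adds Huber's compromise ρ, quadratic near zero and linear in the tails, for comparison. The tuning constant k = 1.345 and the data are illustrative assumptions. For a Normal density with unit scale, −log f(t) equals t²/2 up to an additive constant, so that maximum likelihood case coincides with the mean.

```python
from statistics import mean, median

def m_estimate(xs, rho, tol=1e-10):
    """Location M-estimate: the T minimizing sum(rho(x - T)).

    Uses ternary search on [min(xs), max(xs)], which is valid
    when rho is convex (so the objective is convex in T).
    """
    def objective(t):
        return sum(rho(x - t) for x in xs)

    lo, hi = min(xs), max(xs)
    while hi - lo > tol:
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if objective(m1) <= objective(m2):
            hi = m2  # some minimizer lies in [lo, m2]
        else:
            lo = m1  # some minimizer lies in [m1, hi]
    return (lo + hi) / 2

def squared(t):
    return t * t

def absolute(t):
    return abs(t)

def huber(t, k=1.345):
    # Quadratic for |t| <= k, linear beyond: bounds the pull of outliers.
    return t * t / 2 if abs(t) <= k else k * abs(t) - k * k / 2

xs = [1.0, 2.0, 3.0, 4.5, 100.0]  # one gross outlier
print(m_estimate(xs, squared), mean(xs))     # both about 22.1
print(m_estimate(xs, absolute), median(xs))  # both about 3.0
print(m_estimate(xs, huber))                 # about 3.17, near the median
```

The single wild value drags the squared-error estimate far from the bulk of the data, whereas the bounded-influence choices of ρ barely move.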

The following discussion owes much to Huber (1981). Many technical details are omitted, as the aim is to convey an intuitive notion that only approximates the more rigorous mathematical approach taken by Hampel.

Just what makes a function d “suitable” to be a distance function in this context, beyond some obvious but underdetermining constraints, is not perfectly clear. See Huber (1981, 25–34) for some functions that have received the attention of theorists.
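For concreteness, one candidate treated in that literature is the Kolmogorov distance, d(F, G) = sup over x of |F(x) − G(x)|. The minimal sketch below, assuming we compare two empirical distribution functions, computes it directly. It also illustrates that replacing a fraction ε of a sample with arbitrary values, a gross-error contamination of the kind studied by Huber (1964), moves the empirical distribution by at most ε in this distance. The sample values are made up.

```python
from bisect import bisect_right

def ecdf(sample):
    # Empirical distribution function F_n(x) = #{x_i <= x} / n.
    xs = sorted(sample)
    n = len(xs)
    return lambda x: bisect_right(xs, x) / n

def kolmogorov_distance(a, b):
    # sup_x |F_a(x) - F_b(x)|; for right-continuous step functions the
    # supremum is attained at one of the pooled sample points.
    fa, fb = ecdf(a), ecdf(b)
    return max(abs(fa(x) - fb(x)) for x in set(a) | set(b))

clean = [0.1, -0.4, 1.2, 0.7, -1.1, 0.3, -0.2, 0.9, -0.6, 0.5]
# Replace one of ten observations (epsilon = 0.1) with a wild value.
contaminated = clean[:-1] + [50.0]
print(kolmogorov_distance(clean, contaminated))  # 0.1 up to rounding
```

However extreme the replacement value, the distance stays at 0.1, which is why neighborhoods defined by such distances are natural for expressing small departures from an assumed distribution.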

Note that this notion only serves to characterize robustness with respect to assumptions about the distribution, not about dependence or heterogeneity, since the definition assumes the data are distributed independently and identically.

Fisher’s approach contrasts with the N-P approach by eschewing the specification of alternative hypotheses, emphasizing instead the effort to test the null hypothesis itself. Thus, when the null is rejected, no alternative is accepted; rather, the statistical significance with which the null is rejected is reported. Fisherian testing also differs from a behavioristic construal of N-P insofar as its aim is not to control the cost of erroneous decisions, but to determine how well the observations agree with a particular hypothesis (see, e.g., Fisher 1949).
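Fisher’s own illustration in The Design of Experiments, the lady tasting tea, shows the pattern: eight cups, four prepared each way, and a subject who must pick out the four of one kind. Under the null hypothesis that she cannot discriminate, every choice of four cups is equally likely, so the number of correct picks follows a hypergeometric distribution, and the significance attaching to an observed result is the probability of doing at least as well by chance. No alternative hypothesis enters the calculation. The following is a minimal sketch; the function name is illustrative.

```python
from fractions import Fraction
from math import comb

def tea_tasting_p_value(correct, cups_each=4):
    """P(at least `correct` right picks) under the null of pure guessing.

    The subject selects `cups_each` cups out of 2 * cups_each; under the
    null every selection is equally likely (hypergeometric distribution).
    """
    total = comb(2 * cups_each, cups_each)
    ways = sum(comb(cups_each, k) * comb(cups_each, cups_each - k)
               for k in range(correct, cups_each + 1))
    return Fraction(ways, total)

print(tea_tasting_p_value(4))  # 1/70, about 0.014
print(tea_tasting_p_value(3))  # 17/70, about 0.243
```

A perfect score is thus reported as significant at roughly the 1.4% level, while three correct out of four would occur by chance about a quarter of the time.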

The model in question is the Normal autoregressive model, and the optimal test is a t-test; see Spanos (1999, 757–760) for details.
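As a rough illustration only, assume the simplest first-order case rather than the exact specification in Spanos (1999): in a Normal AR(1) model xₜ = α₀ + α₁xₜ₋₁ + uₜ, a hypothesis about the autoregressive coefficient such as α₁ = 0 is assessed with the usual least-squares t-ratio for α₁. The sketch below computes that ratio; under the null its distribution is only approximately Student’s t with n − 3 degrees of freedom for a series of length n, and the two series are made up.

```python
from math import sqrt

def ar1_t_statistic(series):
    """OLS t-ratio for alpha1 in x_t = alpha0 + alpha1 * x_{t-1} + u_t.

    Under alpha1 = 0 and Normal errors the ratio is approximately
    Student's t with len(series) - 3 degrees of freedom.
    """
    y = series[1:]    # x_t
    x = series[:-1]   # x_{t-1}
    m = len(y)
    mx, my = sum(x) / m, sum(y) / m
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    a1 = sxy / sxx
    a0 = my - a1 * mx
    ssr = sum((yi - a0 - a1 * xi) ** 2 for xi, yi in zip(x, y))
    s2 = ssr / (m - 2)          # residual variance estimate
    return a1 / sqrt(s2 / sxx)  # coefficient divided by its standard error

drifting = [0.0, 1.0, 0.5, 1.5, 1.0, 2.0, 1.5, 2.5, 2.0, 3.0]
alternating = [1.0, -1.0, 1.1, -0.9, 1.0, -1.2, 0.9, -1.0, 1.0, -1.0]
print(ar1_t_statistic(drifting))     # positive t-ratio
print(ar1_t_statistic(alternating))  # negative t-ratio
```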

Another statistical methodology that can be put to use in securing inferences, though not examined here, is the use of nonparametric techniques, recently discussed by Jan Sprenger (2009).
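For readers unfamiliar with the technique Sprenger examines, a minimal sketch of the nonparametric percentile bootstrap follows: the sampling distribution of a statistic (here the median) is approximated by recomputing it on resamples drawn with replacement from the observed data, with no parametric model assumed. The data, number of resamples, and seed are arbitrary assumptions, and this generic recipe is not offered as Sprenger’s own procedure.

```python
import random
from statistics import median

def percentile_bootstrap_ci(data, stat=median, b=2000, level=0.95, seed=1):
    """Percentile bootstrap interval for stat(data).

    Resamples the observed data with replacement b times, so no
    parametric family for the underlying distribution is assumed.
    """
    rng = random.Random(seed)
    n = len(data)
    reps = sorted(stat(rng.choices(data, k=n)) for _ in range(b))
    alpha = (1 - level) / 2
    lo = reps[round(alpha * b)]
    hi = reps[round((1 - alpha) * b) - 1]
    return lo, hi

data = [2.1, 3.4, 1.9, 5.6, 2.8, 3.3, 4.1, 2.2, 30.0, 3.0, 2.7]
print(median(data), percentile_bootstrap_ci(data))
```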

References

Achinstein, P. (2001). The book of evidence. New York: Oxford University Press.

Box, G. E. P., & Tiao, G. C. (1973). Bayesian inference in statistical analysis. Reading, Mass.: Addison-Wesley.

Chalmers, D. (2011). The nature of epistemic space. In A. Egan, & B. Weatherson (Eds.), Epistemic modality. Oxford: Oxford University Press.

Cox, D. R. (2006). Principles of statistical inference. New York: Cambridge University Press.

DeRose, K. (1991). Epistemic possibilities. The Philosophical Review, 100, 581–605.

Fisher, R. A. (1949). The design of experiments (5th edn). New York: Hafner Publishing Co.

Hampel, F. (1968). Contributions to the theory of robust estimation. PhD thesis, University of California, Berkeley.

Hampel, F. (1971). A general qualitative definition of robustness. The Annals of Mathematical Statistics, 42, 1887–1896.

Hampel, F. (1974). The influence curve and its role in robust estimation. Journal of the American Statistical Association, 69, 383–393.

Hampel, F. R., Ronchetti, E. M., Rousseeuw, P. J., & Stahel, W. A. (1986). Robust statistics: The approach based on influence functions. New York: John Wiley and Sons.

Hintikka, J. (1962). Knowledge and belief: An introduction to the logic of the two notions. Ithaca: Cornell University Press.

Huber, P. (1964). Robust estimation of a location parameter. The Annals of Mathematical Statistics, 35, 73–101.

Huber, P. (1981). Robust statistics. New York: John Wiley and Sons.

Kratzer, A. (1977). What ‘must’ and ‘can’ must and can mean. Linguistics and Philosophy, 1, 337–355.

MacFarlane, J. (2011). Epistemic modals are assessment-sensitive. In A. Egan, & B. Weatherson (Eds.), Epistemic modality. Oxford: Oxford University Press.

Magnus, J. R. (2007). Local sensitivity in econometrics. In M. Boumans (Ed.), Measurement in economics: A handbook (pp. 295–319). Oxford: Academic.

Magnus, J. R., & Vasnev, A. L. (2007). Local sensitivity and diagnostic tests. Econometrics Journal, 10, 166–192.

Mayo, D. G. (1992). Did Pearson reject the Neyman–Pearson philosophy of statistics? Synthese, 90, 233–262.

Mayo, D. G. (1996). Error and the growth of experimental knowledge. Chicago: University of Chicago Press.

Mayo, D. G., & Spanos, A. (2004). Methodology in practice: Statistical misspecification testing. Philosophy of Science, 71, 1007–1025.

Mayo, D. G., & Spanos, A. (2006). Severe testing as a basic concept in a Neyman–Pearson philosophy of induction. The British Journal for the Philosophy of Science, 57(2), 323–357.

Neyman, J. (1950). First course in probability and statistics. New York: Henry Holt.

Neyman, J. (1955). The problem of inductive inference. Communications on Pure and Applied Mathematics, VIII, 13–46.

Pearson, E. S. (1962). Some thoughts on statistical inference. The Annals of Mathematical Statistics, 33, 394–403.

Spanos, A. (1999). Probability theory and statistical inference. Cambridge: Cambridge University Press.

Sprenger, J. (2009). Science without (parametric) models: The case of bootstrap resampling. Synthese, published online. doi:10.1007/s11229-009-9567-z.

Staley, K. (2008). Error-statistical elimination of alternative hypotheses. Synthese, 163, 397–408.

Staley, K., & Cobb, A. (2010). Internalist and externalist aspects of justification in scientific inquiry. Synthese, published online. doi:10.1007/s11229-010-9754-y.

Stigler, S. (1973). Simon Newcomb, Percy Daniell, and the history of robust estimation 1885–1920. Journal of the American Statistical Association, 68, 872–879.

Tukey, J. (1960). A survey of sampling from contaminated distributions. In I. Olkin (Ed.), Contributions to probability and statistics: Essays in honor of Harold Hotelling (pp. 448–485). Stanford: Stanford University Press.

Acknowledgements

I am grateful to Deborah Mayo, Aris Spanos, Jan Sprenger, and two anonymous referees for their helpful suggestions for this paper, and also for stimulating comments from Teddy Seidenfeld, Michael Strevens, Paul Weirich, and audiences at the Missouri Philosophy of Science Workshop, the Second Valencia Meeting on Philosophy, Probability, and Methodology, and the 2009 EPSA meeting. Thanks also to Aaron Cobb for many fruitful conversations about security and evidence.

Cite this article

Staley, K.W. Strategies for securing evidence through model criticism. Euro Jnl Phil Sci 2, 21–43 (2012). https://doi.org/10.1007/s13194-011-0022-x

DOI: https://doi.org/10.1007/s13194-011-0022-x