Abstract

A number of perceptual (exteroceptive and proprioceptive) illusions present problems for predictive processing accounts. In this chapter we’ll review explanations of the Müller-Lyer Illusion (MLI), the Rubber Hand Illusion (RHI) and the Alien Hand Illusion (AHI) based on the idea of Prediction Error Minimization (PEM), and show why they fail. In spite of the relatively open communicative processes which, on many accounts, are posited between hierarchical levels of the cognitive system in order to facilitate the minimization of prediction errors, perceptual illusions seemingly allow prediction errors to rule. Even if, at the top, we have reliable and secure knowledge that the lines in the MLI are equal, or that the rubber hand in the RHI is not our hand, the system seems unable to correct for sensory errors that form the illusion. We argue that the standard PEM explanation based on a short-circuiting principle doesn’t work. This is the idea that where there are general statistical regularities in the environment there is a kind of short circuiting such that relevant priors are relegated to lower-level processing so that information from higher levels is not exchanged (Ogilvie and Carruthers, Review of Philosophy and Psychology 7:721–742, 2016), or is not as precise as it should be (Hohwy, The Predictive Mind, Oxford University Press, Oxford, 2013). Such solutions (without convincing explanation) violate the idea of open communication and/or they over-discount the reliable and secure knowledge that is in the system. We propose an alternative, 4E (embodied, embedded, extended, enactive) solution. We argue that PEM fails to take into account the ‘structural resistance’ introduced by material and cultural factors in the broader cognitive system.


1 Introduction

Some accounts of cognition based on predictive processing and prediction error minimization (PEM) are framed in narrow, internalist terms where all of the important action is to be found in brain processes tightly wrapped inside a Markov blanket (a formalism that defines what counts as part of the system; see Friston 2013). Jakob Hohwy, for example, takes the concept of the Markov blanket to constitute a strict partition between brain and world to the extent that bodily, ecological or environmental factors seem irrelevant to explaining cognition.

PEM should make us resist conceptions of [a mind-world] relation on which the mind is in some fundamental way open or porous to the world, or on which it is in some strong sense embodied, extended or enactive. Instead, PEM reveals the mind to be inferentially secluded from the world, it seems to be more neurocentrically skull-bound than embodied or extended, and action itself is more an inferential process on sensory input than an enactive coupling with the environment. (Hohwy 2016, p. 259).

We note that Hohwy does not express certainty here; it only “seems to be more neurocentrically skull-bound.” The idea of the brain’s inferential seclusion, however, appears to be central, since it explains why perception is necessarily inferential. Even Andy Clark, who is not an internalist, and who takes predictive processing to be more aligned with embodied and extended cognition, endorses the same idea: “[The brain] must discover information about the likely causes of impinging signals without any form of direct access to their source… [A]ll that it ‘knows’, in any direct sense, are the ways its own states (e.g., spike trains) flow and alter. In that (restricted) sense, all the system has direct access to is its own states. The world itself is thus off-limits…” (Clark 2013a, 183).

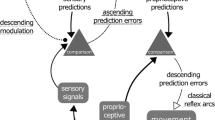

On the internalist view, the body, at best, plays the role of a sensory information source in a process where descending predictions in the brain are compared with ascending prediction errors from the periphery.Footnote 1 Descending and ascending information streams, communicating across a set of hierarchical levels, are an important part of the PEM explanation of cognition generally, including both perception and action. Such explanations also apply to perceptual illusions, which, we argue, present a difficult problem for PEM accounts. In this chapter we’ll review PEM explanations of several illusions, including the Müller-Lyer Illusion (MLI), the Rubber Hand Illusion (RHI) and the Alien Hand Illusion (AHI), to show how these illusions challenge the idea of open communication amongst putative hierarchical levels of the cognitive system. We’ll also suggest that, in contrast to the general claims made by Hohwy, to resolve these problems predictive processing needs to appeal to bodily and environmental factors.

2 Open Communication

In predictive processing models of cognition a principle of open communication is frequently postulated. The idea is that top-down and bottom-up exchanges of information across a hierarchical structure help the system to efficiently minimize prediction errors. According to the PEM explanation, on the basis of prior knowledge or beliefs (‘priors’), a generative model supplies predictive hypotheses about the hidden worldly causes of sensory signals. Sensory input either confirms the model or generates prediction errors. The system then adjusts to the demands arising from lower layers to minimize any error signals. Accordingly, there is an exchange of information, a communication, amongst the different levels of the system. Wiese and Metzinger (2017, 1) call it an “interplay between top-down and bottom-up processing” (also Gładziejewski 2017); Hohwy refers to it as a type of “message passing.”

Predictions are messages that descend in the internal structure of the system, to be tested against the incoming, ascending sensory signal. Any discrepancy between prediction and sensory signal gives rise to prediction error messages that then ascend from the sensors and upward in the system…. This basic notion of predictive coding provides an efficient message passing scheme because prediction errors carry information about the quality of the prediction and are used to update the model, leading to new predictions…. (Hohwy 2020a, 210).Footnote 2

Or as Wilkinson et al. (2019) explain: “whenever information from the world impacts on your sensory surfaces, it is already, even at the earliest stages, greeted by a downward-flowing prediction on the part of the nervous system” (p. 102). Going in the opposite direction, sensory information may contradict the predictions, leading to the updating of priors and to adjustments in the predictive model that minimize prediction error. Perceptual processing is inferential and hierarchical: “hypotheses are tested by passing messages (predictions and prediction errors) up and down in the hierarchy” (Kiefer and Hohwy 2018, 2405; see Hohwy 2020b). Information flows in both directions, and in a free enough exchange to make the system relatively efficient and, ideally, Bayes-optimal. Here’s how Clark puts it:

the flow of representational information (the predictions), at least in the purest versions, is all downwards (and sideways). Nevertheless, the upward flow of prediction error is itself a sensitive instrument, bearing fine-grained information about very specific failures of match. That is why it is capable of inducing, in higher areas, complex hypotheses (consistent sets of representations) that can be tested against the lower level states. (Clark 2016, 46–47).

This communication is not all up and down; it can take different lateral routes and it can be complex: a “rich, integrated (generative) model takes a highly distributed form, spread across multiple neural areas that may communicate in complex context-varying manners” (Clark 2018, 522). According to PEM, each processing layer trades on representational contents or neural codes (see Orlandi 2018; Orlandi and Lee 2019, 215) with the layer below, in order to strike a complex balance. The “message-passing schema” can also go “sideways (within a level) … so that each level is attempting to use what it knows to predict the evolving pattern of activity at the level below” (Clark 2018, 523). The process is said to be iterative: inputs at each stage change as informational states at adjacent stages change, “and these changes will mandate a new update” (Orlandi and Lee 2019, 208).Footnote 3

The system can make its adjustments either by revising its model using perceptual inference, or by a process of active inference (moving around and making the world look more like the model). Active inference can still be conceived of as PEM, as Jakob Hohwy explains: “predictions are held stable rather than revised in the light of immediate prediction error, and action moves the organism around to change the input to the senses until the predictions come true…. Active inference increases the accuracy of internal models and perceptual inference optimizes these models” (2017, 2). Bottom-up sensory input that the system has to deal with (including reafference in the case of active inference) is still conceived in terms of prediction error, even as active inference increases the system’s confidence in the hypotheses to the extent that sensory input can be modified to match predictions. It is also the case that the system gains efficiency in attending only to discrepancies between top-down prediction and bottom-up error signals rather than to what remains the same—the broader or unchanged structures that continue to correlate well with the predictive model.

Computationally, perception can then be described as empirical Bayesian inference, where priors [that specify the generative model] are shaped through experience, development and evolution, and harnessed in the parameters of hierarchical statistical models of the causes of the sensory input. The best models are those with the best predictions passed down to lower levels, they have the highest posterior probability and thus come to dominate perceptual inference. (Hohwy 2016, 4).

Priors are complex. They may operate as beliefs or hypotheses formed by prior experience; they may be more structural factors deriving from evolution or development. Generally, they operate as a parameter that plays a role in forming a relevant generative model. They also play a role in precision. Precision-weighted prediction errors, that is, prediction errors weighted according to their predicted precision, play a central role in shaping the open communication of PEM. Precision estimates have a functional role in balancing priors and current sensory input in a statistically optimal way (Wiese and Metzinger 2017). As Clark (2013b) puts it, “precision-weighting amounts to altering the gain on select populations of prediction error units. This enables the flexible balancing of top-down and bottom-up influence.” Precision estimates are estimates of how reliable the error signal may be. If the estimated precision is high, a prediction error will be treated as important and will entail a greater post-synaptic gain. A lower estimate, indicating lower precision, means the error signal will be discounted. Although some of the precision weighting involved in the system correlates with signal-to-noise ratios in the sensory information, prior expected precision also has a role to play. The more frequently the model has matched sensory input in the past, the more precision is granted to the current prediction. PEM can thus be understood as an ongoing process of learning. Encountering prediction error, the model learns and accommodates (or assimilates in active inference) so as to reduce error the next time and improve the mind-to-world fit (or world-to-mind via active inference), improving its precision estimates as it goes.
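The balancing role of precision can be illustrated with the simplest case usually used to introduce it: a single Gaussian belief revised by a single Gaussian sensory sample. The sketch below is a minimal illustration under that assumption, not the hierarchical scheme of Hohwy, Clark, or Wiese and Metzinger; the function name and the numbers are hypothetical. The prediction error is scaled by a gain equal to the sensory signal's share of the total precision, and the posterior precision grows with each update.

```python
def precision_weighted_update(prior_mean, prior_precision, observation, sensory_precision):
    """Update a scalar Gaussian belief with one Gaussian observation (illustrative only).

    The prediction error (observation minus prediction) is scaled by the
    relative precision of the sensory signal before it revises the belief.
    """
    prediction_error = observation - prior_mean
    # Gain: fraction of the prediction error allowed to revise the belief.
    gain = sensory_precision / (sensory_precision + prior_precision)
    posterior_mean = prior_mean + gain * prediction_error
    # Precisions add, so each update leaves the belief more precise than before.
    posterior_precision = prior_precision + sensory_precision
    return posterior_mean, posterior_precision, gain


if __name__ == "__main__":
    # Hypothetical numbers: the same error of 10 units under reliable vs noisy input.
    print(precision_weighted_update(0.0, 1.0, 10.0, sensory_precision=9.0))
    print(precision_weighted_update(0.0, 1.0, 10.0, sensory_precision=1 / 9))
```

With the same prediction error of 10 units, a reliable signal (gain 0.9) moves the belief most of the way, while a noisy one (gain of about 0.1) barely moves it; this is one simple way to picture how precision sets how much bottom-up error is allowed to revise top-down predictions.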

Different sensory modalities, providing multiple, and purportedly insulated, streams of information (sources of evidence for hypothesis confirmation), allow for a gain in precision. The evidence from the separate streams is tested and integrated at a higher level. According to Hohwy, “within the brain the evidence delivered by different senses is integrated in an optimal Bayesian manner which depends on precision optimization…. [T]he integrated estimate is more reliable than either individual estimate. That is, under a model where the sensory estimates arise from one common cause in the world, it is best to rely on multiple sources of reasonably reliable sensory evidence from within the brain” (Hohwy 2013, 251; see 152ff). Multimodal consistency (more than one sense modality sending the same message) means your senses are ‘confirming’ the veracity or consistency of the communication.
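The claim that an integrated estimate is more reliable than either individual estimate follows from precision-weighted (inverse-variance) cue combination, provided the cues are conditionally independent and share one common cause. A minimal sketch, assuming Gaussian cues; the function name, units, and numbers are hypothetical and serve only to illustrate the arithmetic:

```python
def combine_cues(estimates, variances):
    """Fuse independent Gaussian cues about one common cause by inverse-variance weighting."""
    precisions = [1.0 / v for v in variances]
    total_precision = sum(precisions)
    # Each cue contributes in proportion to its share of the total precision.
    fused_mean = sum(p * e for p, e in zip(precisions, estimates)) / total_precision
    # Precisions add, so the fused variance is never larger than the smallest
    # input variance, and is strictly smaller when two or more cues are fused.
    fused_variance = 1.0 / total_precision
    return fused_mean, fused_variance


if __name__ == "__main__":
    # Hypothetical hand-position cues (cm): vision at 10 (variance 1),
    # proprioception at 0 (variance 4).
    print(combine_cues([10.0, 0.0], [1.0, 4.0]))
```

With these hypothetical values, vision and proprioception fuse to a position of 8.0 with variance 0.8, lower than either input's variance. The fused estimate also sits closer to the more precise cue, which is the kind of weighting Hohwy appeals to when explaining the RHI.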

3 The Problem of Perceptual Illusions

A number of perceptual (visual and proprioceptive) illusions seemingly present problems for PEM accounts of perception. For example, in the MLI two lines are perceived as having different lengths despite the fact that they are equal (Fig. 1).

That illusory experience persists even when we know that the two lines are of equal length. In spite of the open communication principle, where top–down and bottom–up exchanges are said to minimize prediction errors, perceptual illusions seemingly allow prediction errors to rule. Even if our priors include reliable and secure knowledge that the lines in the MLI are equal, the system seems unable to correct the sensory errors that form the illusion.

One classic way to explain perceptual illusions is to appeal to cognitive impenetrability. Traditional modularist accounts, for example, hold that sensory processes are modular and informationally encapsulated such that they provide input to the system, but do not receive input from central cognitive processes (Fodor 1983). This is a simple denial of open communication. Sensory modules involve a closed system of information processing; they block or filter out any information coming top–down from processes based on wider belief or knowledge. I may know that the lines in the MLI are indeed equal, but this knowledge does not penetrate the modular structure of the early visual processing areas, and I continue to see the lines as unequal.

In contrast, for predictive processing, open communication should mean that the knowledge gained from our prior experience with the illusion, or from being informed about the true length of the lines, will correct our perception, and we should be able to see that the lines are equal. Instead they continue to appear as unequal. We can measure the lines and adjust our hypothesis, which should then correct any prediction errors found in our sensory experience; but this attempted minimization of prediction errors fails. Likewise, with the RHI,Footnote 4 my model of the world, and thereby my prediction, is that this rubber hand is not part of my body, but we seem unable to eliminate the prediction errors coming from the combined tactile-visual stimulus (Fig. 2).

The Rubber Hand Illusion (from Metzinger 2009, with permission)

In the RHI the visual input dominates the proprioceptive sense of where my hand is located. Similarly, in the AHI,Footnote 5 the visual image of what is supposedly my hand in the act of misdrawing a straight line from A to B dominates the kinaesthetic sense of what my hand is actually doing (Fig. 3). I can know all the details of the experiment, and that I am actually seeing the hand of the experimenter in a mirror, and so know full well that the hand that I see is not my hand, and that the movement is not my movement; but the effect (a weird feeling that my hand is doing something other than I want it to) persists (Gallagher and Sørensen 2006).

The Alien Hand Illusion (from Gallagher and Sørensen 2006, with permission)

Even in the context of discussing such illusions, the open communication in the cognitive system is often portrayed in a straightforward manner. A recent example can be found in Matamala-Gomez et al. (2020). Here is how it works, according to them.

Conceptually, the [PEM process] assesses the improbability (surprise) of the sensory information under a hierarchical generative model…. In the case of body ownership illusions [for example, the RHI], altered body perception results from a self-representation that is updated dynamically by the brain to minimize sensory conflicts (i.e., the differences between the predictions about sensory data and the real sensory data at any level of the hierarchical model). Then, [with respect to] body ownership illusions our brain tries to minimize the “surprise” through the predictive coding scheme, when encountering a signal that was not predicted, it will generate prediction errors and will update the model in order to minimize the differences between the predictions about sensory data and the real sensory data at any level of the hierarchical model (e.g., synchronous visuo-tactile feedback in the RHI study…). Therefore, the subjects will update their internal body representation. (Matamala-Gomez et al. 2020, 4).

In other words, through this process of open communication, the system adjusts its hypothesis to accommodate the sensory experience: the rubber hand is part of my body.

Like any principle, however, open communication must allow for exceptions, or more precisely, according to predictive processing, there is a flexibility built into the communication process. Different situations call for just this flexibility. There are circumstances in which it may be beneficial to allow priors to dominate and ignore prediction errors. Clark (2016) provides a good example. When driving on a foggy but familiar road, one should rely on prior knowledge about how such conditions can affect our perception. By contrast, when driving on an unfamiliar mountain road with sharp curves, it would be wise to trust what our attuned senses are telling us even if they don’t match our expectations. “[V]ision needs to be flexible in the way that it deals with variations in context” (Ogilvie and Carruthers 2016, 725). This is a matter of adjusting the precision weights of specific hypotheses and prediction errors, since “the precise mix of top–down and bottom–up influence is not static or fixed. Instead, the weight given to sensory prediction error is varied according to how reliable (how noisy, certain, or uncertain) the signal is taken to be” (Clark 2016, 57).Footnote 6 Flexible precision-weighting allows for an “astonishingly fluid and context-responsive” system (Clark 2018, 523).

Precision-weighting requires the flexibility that Ogilvie and Carruthers mention and that Clark describes. This kind of flexibility, however, if it modulates the exchange of information based on reliability parameters, does not block communication entirely, and so cannot explain the persistence of illusions such as the MLI. It would be difficult to call the system flexible and fluid if the flow of communication were stopped; it would instead become a rigid system without the possibility of learning. If we accept the very basic idea that Bayesian inference operates with a variable learning rate, modulated by beliefs about precisions, then the learning rate cannot fall to zero, and some degree of communication must remain.
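The point about the learning rate can be made concrete in the simplest case, a scalar Gaussian belief revised by a Gaussian sensory sample. This is an illustrative assumption, and the symbols are ours rather than those of the authors cited: $\mu_t$ is the current prediction, $s_t$ the sensory sample, $\pi_{s,t}$ the expected sensory precision, $\pi_{\mu,t}$ the precision of the prior, and the learning rate is the gain $k_t$ applied to the prediction error $\varepsilon_t$.

$$
\varepsilon_t = s_t - \mu_t, \qquad
k_t = \frac{\pi_{s,t}}{\pi_{s,t} + \pi_{\mu,t}}, \qquad
\mu_{t+1} = \mu_t + k_t\,\varepsilon_t
$$

For any finite, positive precisions, $0 < k_t < 1$. Precision-weighting can make the gain very small by assigning low precision to sensory error, but the gain reaches zero only in the limit $\pi_{s,t} \to 0$, which amounts to treating the senses as carrying no information at all.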

In the case of the RHI, to the extent that there continues to be some form of communication, even if constrained in terms of precision weights, one would think that reliability parameters would favor maintaining the original hypothesis based on one’s long-term body image, i.e., that the rubber hand is not my hand. Indeed, the subject, entering into the experiment, typically starts with that hypothesis, and at any point can access that hypothesis experientially quite simply and directly by closing her eyes. If perception is epistemically flexible, why doesn’t the adjustment, at least in the long term, go in that direction, i.e., in the direction of what I reliably know about my body, or have learned about rubber hands? Why don’t perception and cognition engage in effective exchange in order to minimize error, rather than preserving the sensory error and allowing it to rule? In light of the open communication principle, as Ogilvie and Carruthers (2016) put it, “it might seem mysterious why one’s belief should fail to modify the erroneous perceptual representation” (p. 726).

The standard explanation relies on the idea of short-circuiting: relevant priors operating at lower levels block prediction errors from reaching higher levels of the system (Ogilvie and Carruthers 2016). The lack of ascending prediction errors can still count as information for higher levels; namely, the absence of an error signal in the communication process signifies that the stimulus is as predicted (although it isn’t). In effect, there is no contradictory signal despite there being a contradiction in the system.

This resolution of the mystery, however, is not convincing, not only because the contradiction remains (that is, there is an assured belief in the system—“I know that this rubber hand is not my hand,” or in the case of the MLI, “I know that the lines are equal”—that contradicts the sensory signals), but also because it relies on the idea that the perceptual illusion is not ambiguous, i.e., not characterized by uncertainty.

We suggest that something like this [short-circuiting] occurs when the visual system is processing depth and size information while one looks at a Müller-Lyer figure. As far as the early levels of processing are concerned, relative depth and size have been accurately calculated from unambiguous cues. Hence systems monitoring noise and error levels are being told that everything is in order: there is no need for further processing (Ogilvie and Carruthers 2016, 727).

One should think, however, that if perceptual illusions are unambiguous, then most other instances of perception should be unambiguous, and accordingly, there would never be call for higher-level PEM, since, as Ogilvie and Carruthers propose, when sensory input is sufficiently unambiguous the high-level priors need not come into play. In the case of perceptual illusions, at least, the high-level priors seemingly go silent. To borrow the message-passing language, they fail to send messages, or at least convincing messages. Or alternatively, the system gets the message but ignores the contradiction.

It is not at all clear, however, that in the case of perceptual illusions, our perceptions remain unambiguous, for two reasons. First, given what we know, that the lines are the same length in the MLI, or that the hand I see is not my hand in the RHI, there is no reason to think that this knowledge shouldn’t come into conflict with the sensory cues. Second, and this may be clearer in the RHI and the AHI, the perceptual ambiguity can be measured in terms of how surprising or unexpected the experience is. In the AHI, especially, the experience is totally unexpected and odd and remains so even when we know what the trick is.Footnote 7 The PEM short-circuit story denies the ambiguity, explains it away, when in fact PEM’s overarching explanation, where prior knowledge and sensory cues talk to one another (communicate, pass messages, trade in information), should predict the ambiguity that we do experience in these illusions.

Hohwy (2013) recognizes the challenge as he considers the MLI.

Even if we have a strong prior belief that they are of equal lengths, we still perceive one to be longer. So, the conscious experience here seems impenetrable: the higher-level belief fails to modulate perception. What can prediction error minimization say about this? (2013, 124-125).

At first it seems that Hohwy’s answer is different from that of Ogilvie and Carruthers, since Hohwy affirms that the illusion provides viewers with an “ambiguous input” (p. 125). However, this ambiguity gets immediately resolved at the perceptual level because “the context provided by the wings [on the Müller-Lyer lines] trigger fairly low-level priors … [leading] to the inference that they are of different lengths rather than to the competing inference that they are of the same length” (2013, 125–126). In other words, the uncertainty or ambiguity is eliminated (short-circuited) early in visual processing in a low-level PEM process, “where the relevant priors occupy levels of the hierarchy within the early visual system itself” (125). Still, one wonders “why the higher-level prior belief in equal lengths cannot penetrate and create veridical experience of the lengths,” or why the higher-level prior belief does not correct the lower-order prior, or vice versa. The answer is that the “consequence of the early resolution to the ambiguity is that very little residual prediction error is shunted upwards in the system, and that therefore there is little work for any higher-level prior beliefs to do, including the true belief that the lines are of equal lengths” (126). If there is ambiguity in the initial sensory input, the system short-circuits it so that it never triggers higher-order priors. The question is never raised through the hierarchy to the level of the more general predictive model. Still, it is not clear why the question is not raised in a more circuitous way, since we learn or are informed that the lines are in fact equal. It is not clear why this particular situation is not one in which the system realizes that “you cannot trust the signal from the world, but must arrive at a conclusion, so you rely on prior knowledge” (Hohwy 2013, 123).
Indeed, the system seems to be in contradiction, and it remains a puzzle why a system that is set up to decrease uncertainty by the open communication of information does not do so when it has prior knowledge about the way the world is.

Even if there is early resolution of the ambiguity in the case of the MLI, in the RHI and the AHI ambiguity arguably persists. Hohwy (2015) discusses the RHI in terms of perceptual predictions. In the ambiguous case of the RHI, he suggests, the brain needs to determine which of two hypotheses is more probable: that what is seen on the rubber hand is independent of the tactile stimulation of the real hand, or that the visual and the tactile are bound together, so that you experience the touch where you see it synchronously administered. Hohwy contends that the synchronicity is more expected on the binding hypothesis than on the independence hypothesis, and this leads to the illusion. In this case, the winning hypothesis is apparently on a higher level than the immediate sensory processes, but not high enough to encounter a more certain hypothesis which, according to predictive processing, must also be represented in the brain, namely, that the rubber hand is not really part of my body, or that “the experimenter is the hidden cause of both the seen touch and the unseen touch on the real hand” (Gadsby and Hohwy 2019, 4).
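Hohwy's comparison between the binding and independence hypotheses can be sketched as a two-hypothesis Bayesian update. The probabilities below are hypothetical placeholders chosen only to show the shape of the inference, the function name is ours, and treating successive strokes as conditionally independent evidence is a simplifying assumption. Even a low prior on binding is overturned when synchrony is far more expected under binding than under independence.

```python
def posterior_binding(prior_binding, p_sync_given_binding, p_sync_given_independent):
    """Posterior probability of the binding hypothesis after one synchronous stroke.

    Two exhaustive hypotheses are assumed: binding (one common cause of the seen
    and felt touch) and independence (two unrelated causes).
    """
    prior_independent = 1.0 - prior_binding
    # Marginal probability of observing synchrony, summed over both hypotheses.
    evidence = (p_sync_given_binding * prior_binding
                + p_sync_given_independent * prior_independent)
    # Bayes' rule: P(binding | sync) = P(sync | binding) * P(binding) / P(sync).
    return p_sync_given_binding * prior_binding / evidence


if __name__ == "__main__":
    # Hypothetical values: binding starts unlikely, but synchrony is far likelier under it.
    belief = 0.1
    for stroke in range(1, 4):
        # Each stroke is treated as conditionally independent evidence (an assumption).
        belief = posterior_binding(belief, 0.9, 0.01)
        print(f"after stroke {stroke}: P(binding) = {belief:.4f}")
```

The sketch compares only the two hypotheses Hohwy names. A third hypothesis, such as the experimenter being the hidden common cause of both the seen and the felt touch, plays no role unless it is explicitly added to the hypothesis space.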

One could also think of this in terms of intersensory precision and what happens proprioceptively.

The answer lies in the relative precision afforded to proprioceptive signals about the position of my arm and exteroceptive (visual and tactile) information—suggesting a synchronous common cause of my sensations. By experimental design, I am unable to elicit precise information about the position of my arm because I cannot move it and test hypotheses about where it is and how it is positioned. Conversely, by experimental design, the visual and tactile information is highly precise …. In other words, the precision of my arm position signals is much less than the precision of synchronous exteroceptive signals…. (Hohwy 2013, 107)

Vision, apparently, is the most precise, because it wins out over, and one might say hijacks, both proprioception and touch. It’s clear that the proprioceptive signal is weak, since one is unable to move one’s tactilely stimulated hand during the experiment. Since proprioception is weak, the predicted location of the touch takes its orders to march in synchrony with what vision says, and we experience the tactile stimulation in the rubber hand. In this case, the experimental setup, especially the synchrony of tactile and visual stimulation, introduces a bias into intersensory precision.

In the RHI Hohwy suggests that rather than complying with prior conceptual beliefs, there is a “suppression” of prediction errors—the system doesn’t eliminate them, it ignores them: “It is as if the perceptual system would rather explain away precise sensory input with a conceptually implausible model [this rubber hand is part of my body] than leave some precise sensory evidence (e.g., the synchrony) unexplained” (2013, 107). This still does not explain why our more plausible knowledge, that the rubber hand is not part of my body, fails to correct my experience. Importantly, perception involves a balancing act between prior expectations and sensory processes that are taken to have more or less precision. (1) If the system has high expectations for the precision of the sensory input, then that drives revision of hypotheses higher up the hierarchy. (2) If the expectation is that sensory input will be imprecise, it tends to be ignored, but then higher-order predictions will determine the perceptual inference (Hohwy 2013, 145). In either case the most plausible model should have a role to play. In the circumstance of the RHI, the latter (2) is more likely, since I know my real hand is under the blind; I know that the rubber hand is not my hand; and if I know how the experiment works, I should, at some level, expect the sensory input to be imprecise. Still the illusion works. One might also think that the experimentally imposed inability to move, or “cue ambiguity” when congruent visuo-tactile signals conflict with proprioception (Gadsby and Hohwy 2019), even if the proprioceptive signal is diminished, might signal low sensory precision and motivate a search for a higher-order resolution.Footnote 8 Indeed, this is what intersensory processes are designed to do, according to Hohwy. 
Granted, the conditional independence of the sensory systems, meant to increase overall precision (see Hohwy 2013, 143, 152ff, 251–252), is seemingly compromised if vision hijacks proprioception and touch. This means, at that level, there is a decline in precision that the system does not register. If this helps to explain the short circuit, there is still some further explanation required. We’ll come back to this issue.

In the case of the AHI, it is more difficult to suppress or explain away the sensory input. In this case, intersensory processes and the precision that they bring should be in good order, since my hand is moving and generating kinaesthetic sensation. The kinaesthetic signal is strong, unlike the situation in the RHI, and there is a consistent sense of tactile pressure from holding the pencil and tracing the line; despite that, vision still hijacks the experience, but it leaves behind a strong ambiguity. The experience is not only surprising, but feels strange. The intersensory contradictions produce ambiguity rather than precision, and the ambiguity is never eliminated; if anything, there is an intensification of prediction errors that are hard to ignore. Yet my prior knowledge, that what I am seeing is not my hand, fails to correct or eliminate the illusion.

4 A Broader Enactive Architecture

The open communication principle is an ‘in principle’ principle: it is a piece of ideal theory. In reality, constraints are seemingly imposed at every level; policies are set up to discourage lateral exchange. Everyone can agree that there are complex negotiations concerning the flow of information and precision ongoing in the system. The question is why, or how best to explain this. Hohwy clearly suggests that it is down to brain architecture.

Bayes optimal integration [can be] compromised. Conditional independence [of the sensory streams] within the brain is made possible by the brain’s organic structure preventing information flow across processing streams in lower parts of the cortical hierarchy—in neuroscience, this is known as functional segregation. (Hohwy 2013, 252)

At issue is not just the hierarchical structure but also differences in lateral insulation [a “kind of horizontal evidential insulation” (2013, 153)] operating at different levels, which are ideally meant to provide a gain in precision at the higher level, derived from the mix of “multiple sources of reasonably reliable sensory evidence from within the brain” (Hohwy 2013, 251). The result, according to Hohwy, is a complex combination of cognitive penetrability and impenetrability.

Cognitive impenetrability is related to evidential insulation imposed both by the nature of message-passing up and down in the perceptual hierarchy and by the need for independent witnesses in the shape of different senses and possibly individual intrasensory streams. This evidential architecture imposes limits on top-down and lateral modulation—it is not a free-for-all situation. However, what is predicted and how well it is predicted determines what signal is passed up through the hierarchy and thereby what perceptual inference is drawn (2013, 155).
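The idea that conditionally independent sensory “witnesses” yield a gain in precision can be made concrete with the standard result for combining independent Gaussian estimates. The following is a minimal sketch under that idealization (Gaussian noise, independent across cues), offered only as an illustration and not as Hohwy’s own model; the `Cue` type, the `fuse` function and all the numbers are hypothetical. The fused precision is the sum of the individual precisions, and the fused estimate is the precision-weighted mean of the individual estimates.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Cue:
    """Hypothetical Gaussian estimate of one quantity: mean and precision (1 / variance)."""
    mean: float
    precision: float


def fuse(cues: Iterable[Cue]) -> Cue:
    """Precision-weighted fusion of conditionally independent Gaussian cues.

    Assumes each cue's noise is independent given the true value. Under that
    assumption the fused precision is the sum of the cue precisions and the
    fused mean is the precision-weighted average of the cue means.
    """
    cues = list(cues)
    total_precision = sum(c.precision for c in cues)
    if total_precision <= 0:
        raise ValueError("at least one cue must have positive precision")
    mean = sum(c.precision * c.mean for c in cues) / total_precision
    return Cue(mean, total_precision)


# Illustrative numbers only: felt (proprioceptive) vs. seen (visual) hand position, in cm.
proprioception = Cue(mean=0.0, precision=0.25)  # variance 4 cm^2
vision = Cue(mean=10.0, precision=1.0)          # variance 1 cm^2
hand = fuse([proprioception, vision])
print(hand)  # Cue(mean=8.0, precision=1.25)

# Low-precision sensory input leaves the prior with more relative weight.
prior = Cue(mean=10.0, precision=1.0)
weak_input = Cue(mean=20.0, precision=0.25)
print(fuse([prior, weak_input]).mean)  # 12.0
```

On this idealization, a more precise visual cue pulls the fused estimate of hand position toward the seen hand, and the same arithmetic expresses the point that priors gain relative weight when sensory evidence has low precision. If the cues are not in fact independent, simply summing their precisions overstates the precision of the result.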

Hierarchy and modular structure, however, if these are aspects of the “organic structure” of the brain, cannot explain the exceptional circumstances of perceptual illusion in contrast with our non-illusional perception, since these structures are supposedly relatively stable and remain the same for both exceptional and non-exceptional perceptual experience. Furthermore, in most predictive processing accounts the work is assumed to be done by inferential processes, representational models, prediction error, and the semantic content of the messages that are passed up and down. These are the things that change or adjust, more so than organic structures. Organic structures stay relatively the same (even allowing for plasticity), and constrain processes in both non-illusory and illusory perception.

Hohwy sometimes obliquely points to other factors, although these do not become part of his systematic explanation of perception, and in fact would undermine his internalistic account.Footnote 9 For example, with regard to the RHI, Hohwy reports that it is

striking that people are rather poor at using their prior belief to destroy the illusion. What we do get a lot in our lab is people who have an overwhelming urge to move their hands, remove the goggles, or otherwise intervene on the process. For example, one person we tested physically had to hold her hand fixed with her other hand to prevent it from moving (2013, 126).

Likewise, with regard to the MLI, he notes that what prevents us from using the veridical prior belief about the length of the two lines is the constrained nature of the situation. We might try engaging the veridical prior belief that the two lines are of equal length, but we fail because the experimental situation is constrained: “none of [our] predictions can get traction on the decontextualized line drawings in a proper, controlled experimental set-up” (127).Footnote 10 Indeed, he consistently notes the non-ecological aspects of the experimental environment in the context of perceptual illusions.

What we can see in both of these illusions [MLI and RHI] is that even if the prior, true belief about the world is there, circumstances might prevent it from being confirmed by active inference. The true belief is therefore never tested and is denied access to the sensory prediction errors that would have otherwise confirmed it…. This idea generalizes. (127; emphasis added)

There is a kind of structural resistance introduced by experimental factors, or more generally, ecological factors. In that case, however, it’s not brain architecture, or a conditional independence of different sensory systems, or a conceptually implausible model, or the ignoring of prediction error that is doing the work; it’s the environment [which includes the “precision regularities in the world” (2013, 146)], and the non-ecological circumstances of the experimental situation that place constraints on the system and prevent the agent from taking action. In contrast, when subjects are allowed to move and act (e.g., by grasping), in some cases they can adapt to visual illusions, sometimes relatively rapidly, and such adaptations depend on both bodily movement and the size and location of the relevant objects, i.e., environmental circumstances (see Cesanek and Domini 2017).Footnote 11

These other factors, then, would involve a broader architecture that at the same time would not deny the important considerations about the organic structure of the brain. It would acknowledge that brain and body evolve and develop together, and that the brain has the material structure that it has, and works the way it does, because it has evolved and developed with the body (Gallagher et al. 2013); and that the brain-body operates within specific environments, and generally attunes to specific action-related affordances, as well as to material differences related to task requirements (Säfström and Edin 2004; also see Heyser and Chemero 2012). In other words, if we pursue this option, we are led to the idea that cognition is in some strong sense embodied, extended and enactive, rather than “inferentially secluded from the world, [or] neurocentrically skull-bound” (Hohwy 2016, 259), and, moreover, that perception involves enactive coupling with the environment rather than an inferential process on sensory input (for further discussion of this point, see de Bruin and Michael 2017; Gallagher and Allen 2018; Hipólito and Hutto forthcoming).

This view is not too far removed from Peter Godfrey-Smith’s notion of environmental complexity, where, as he indicates, complexity is understood as heterogeneity, and applies to organisms as well (2002, 10). With respect to perceptual illusions, one way to put it is that the effect is in the brain-body-environment coupling rather than exclusively in brain circuitry. Something as simple (and as complex) as arm position, for example, can have an effect on the RHI (the effect weakens and disappears the further the rubber hand is from a canonical position). As Michael Turvey (2018, 357) puts it, in reference to the MLI, “perception of a thing, x, (e.g., –) in one context (e.g., < >) may differ, on principle, from its perception in another context (e.g., > <). The principled origin of the difference lies in the impredicative nature of thing-in-context.” Just as the RHI changes when the angular position of the rubber hand is moved away from the canonical position, so the MLI changes depending on the proportion of space taken up by the line between the wings (the MLI disappears when the line covers around 70% of the space, and reverses when the line covers around 50%). If, as Turvey suggests (and consistent with the idea of heterogeneity in the environment), encountering the reverse MLI is just as probable as encountering the MLI in natural settings, it is questionable whether we should expect a low-level prediction bias that would short-circuit perceptual processes (see 2018, 357–358).

This is not to deny that prior experience, and perhaps features of the sensory-motor system derived from developmental and evolutionary processes, can lead to mistaken predictions. But ongoing experience, which is supposed to shape or reshape priors so that the system can learn its way past the illusion, fails to get traction because of the material constraints imposed by both bodies and environments. This is often reflected in correlated brain plasticity, that is, neural changes that can follow trauma or learning, or that reflect social and cultural experience. Indeed, despite his theoretical claims to the contrary, Hohwy seemingly shows, with work in his own lab using VR goggles, that it is wrong to downplay the role of embodiment in the RHI.

[I]f there is no distance between [the real and the rubber hand], for example if a virtual reality goggle is used to create spatial overlap between the real and the rubber hand, then the illusion is strengthened (Hohwy 2013, 106).

This spatial overlap is precisely an embodied phenomenon, the result of a specific material arrangement of goggles, body and environment. Such extra-neural material arrangements can be exploited to increase or decrease the illusion.Footnote 12 To this we need to add even broader cultural features. We’ve known for a long time that there are cultural differences that change the experience of the illusions, in some cases eliminating the illusion altogether (see de Fockert et al. 2007; McCauley and Henrich 2006; Segall et al. 1963). Hohwy explains this as a difference in priors (2013, 121), but whether it involves different priors or different sensory processes (due, e.g., to plastic changes in early visual processing), these differences are due to either different environments or different cultural practices. Whatever the brain is doing, it is working in a wider embodied ecology that is subject to cultural permeation, and not simply to narrow cognitive penetrability or impenetrability (Hutto et al. 2020). In contrast to top–down models of cognitive penetration, where culturally acquired concepts or beliefs are said to influence early perceptual processing by means of a quick computational communication that binds sensory and conceptual contents when confronted with a particular, triggering stimulus, or the predictive processing view that cultural priors enter the system via top–down hypothesis formation, cultural permeation is the idea that cultural practices and experiences materially shape perception by directly affecting sensory-motor processes (including changes in dynamical connections and plastic changes in early sensory areas). In this respect, bodily processes are not segregated from cultural factors. 
As Soliman and Glenberg (2014) show in a set of experiments that involve cross-cultural studies of intersubjective joint action, perceptual and motoric processes are not simply modulated top-down by cultural practices, but rather, social and cultural factors are incorporated into bodily engagements, affecting, for example, motor control and spatial perception.

[C]ulture enters the scene not as a self-contained layer on top of behavior, but as the sum of sensorimotor knowledge brought about by a bodily agent interacting in a social and physical context. As such, culture diffuses the web of sensorimotor knowledge, and can only be arbitrarily circumscribed from other knowledge. (2014, 209)

Rather than trying to fit these cultural practices and embodied sensory-motor processes into a hierarchical arrangement in the brain, we can think of them as more fundamentally integrated with brain-body-environment to begin with. We can shift to a framework where these processes are not modular or distinct, but instead influence and permeate each other following principles of metaplasticity, where changes in any part of the system (brain, body, environment, social or cultural practices, normative institutions) can affect the system as a whole (Malafouris 2013).

The 4-or-more-E (embodied, embedded, extended, enactive, + ecological, + emotional) accounts would generalize these ideas to include all of the various factors that play into perception, action and cognition—material and structural factors that internalist PEM accounts have not taken into consideration. The material system of brain-body-environments (including social and cultural environments) is attuned by its history, by plastic (and metaplastic) material changes—in neurons, in motor processes and habits, in affective factors, in social and cultural practices, in normative structures, and so on. In this respect, in explanations of predictive processing, it is the narrow internalist features of some accounts that block or short-circuit the full account.

Notes

Hohwy (2013, 50) describes this comparison process: “the brain does have access to the predictions of sensory input generated by its own hypotheses, and the brain does have access to the sensory data that impinges on it. All it needs to do is compare the two, note the size of the difference, and revise the hypotheses (or change the input through action) in whatever way will minimize the difference. Given that the two things that are compared are accessible from within the skull, the difference between them is accessible from within too” (see also pp. 59, 75, 104, 170; also 2016, pp. 263, 276).

Or again: “Predictions are sent down where they attenuate as well as possible the input. Parts of the input it cannot attenuate are allowed to progress upwards, as prediction error” (Hohwy 2015, 7). Hipólito and Hutto (forthcoming) refer to this as the ‘communication assumption’, signaled by the “widespread reliance on the language of ‘message passing’ which is used to describe the exchanges between higher and lower levels of cognitive processing.”

In the RHI, repetitive tactile stimulation is synchronously applied to the subject’s hidden hand and to a rubber hand that is positioned in view on a table directly in front of the subject. This typically induces an illusory sensation such that the tactile stimulation is felt on the rubber hand, producing a sense of ownership for the rubber hand (Botvinick and Cohen 1998).

The AHI (which might also be called the 'anarchic hand illusion') is based on an experiment by Nielsen (1963). It involves a mirror illusion in which subjects believe they see their own gloved hand, when in fact they are looking at the experimenter’s gloved hand (the “alien” hand) in a mirror (M2 in Fig. 3). Subjects are asked to draw a straight line from point A to point B. What they see is the experimenter’s hand drawing off course. The illusory experience is the very odd feeling that their hand is acting on its own, doing something that they did not intend (see Gallagher and Sørensen 2006 for more details).

In this paper we focus on what we are calling the short-circuiting solution. Clark (2016) offers an alternative and somewhat pragmatic explanation of illusions. We discuss Clark’s solution in a different paper (see Hipólito and Hutto forthcoming).

Another quite different example involves moving walkways (travelators) in, for example, airports. People who are used to these walkways can typically step onto them without interrupting their stride. If the walkway is not operating, and you can see that it is not moving, your stride is disrupted when you step onto it, even though this should be no different from continuing to walk on the unmoving floor. It is as if seeing the precise pattern of the walkway triggers your motor system to adjust for a moving walkway even when you know it is not moving and you can predict that you don’t have to change your stride.

“When prediction error has low precision, priors hold more (relative) weight and are thus given greater influence in sculpting the relevant perceptual representation or shape of policies selected” (Gadsby and Hohwy 2019, 9).

There are two important issues here that we cannot fully address in this paper. The first issue is whether hands, bodily movement, goggles (or artifacts and tools more generally), various ecological factors, material affordances, agentive history, extra-neural factors, etc., can simply be reduced to stored representations. If they can, of course, they would not undermine Hohwy’s internalism. We don’t think they can. The larger argument, however, has been made by many people in many books and publications, and we don’t think we can provide all of the evidence or arguments, or try to settle the representation wars, in a couple of paragraphs. See, for example, Di Paolo et al. (2017); Gallagher (2005; 2017); Malafouris (2013); Merleau-Ponty (2012); Thompson (2008); Varela et al. (2016). The second issue involves an ongoing debate about whether predictive processing accounts can be made consistent with enactive accounts. Again, this is a debate that we set aside here. In this context the question would be whether Hohwy, by acknowledging bodily and environmental constraints, could easily take the extra step into 4E cognition territory, instead of retreating to brain processes and architecture, a step, of course, that he resists. Concerning this issue, see Bruineberg et al. (2018); Constant et al. (2021); Fabry (2018); Gallagher and Allen (2018); Kirchhoff and Kiverstein (2020); Ramstead et al. (2019); Kirchhoff (2018), and especially Di Paolo et al. (in press). Our thanks to two reviewers who flagged these two issues, respectively.

Of course, most of us experience the MLI outside of experimental contexts. But the ecological point still holds in the sense that the lines are typically presented as abstract drawings (as above in this paper) outside of any pragmatic situations that would involve such lines.

If we consider this a case of active inference, as predictive models suggest, one might expect that it would correct the system and reduce prediction error. However, this is not always the case. In many cases the visual illusion continues even if the motor system does not succumb to the illusion. And in some cases, despite taking action, the motor system is also fooled. Either way, in such cases, active inference partially or fully fails to minimize prediction error. For relevant studies involving the RHI, see Kammers et al. (2009, 2010), and Hohwy (2013, 235–236) for a brief discussion.

The practical importance of understanding the effects of such arrangements can be seen in the fact that they can be exploited in therapeutic settings using VR technology for purposes of rehabilitation from, for example, phantom limb pain, or sensory and motor impairments in neuropsychological and neurological diseases such as stroke (see e.g., Bolognini et al. 2015; Fotopoulou et al. 2011; Matamala-Gomez et al. 2020; Tosi et al. 2018).

References

Bolognini, N., C. Russo, and G. Vallar. 2015. Crossmodal illusions in neurorehabilitation. Frontiers in Behavioral Neuroscience 9: 212. https://doi.org/10.3389/fnbeh.2015.00212.

Botvinick, M., and J. Cohen. 1998. Rubber hands “feel” touch that eyes see. Nature 391: 756. https://doi.org/10.1038/35784.

Bruineberg, J., J. Kiverstein, and E. Rietveld. 2018. The anticipating brain is not a scientist: The free-energy principle from an ecological-enactive perspective. Synthese 195 (6): 2417–2444.

Cesanek, E., and F. Domini. 2017. Error correction and spatial generalization in human grasp control. Neuropsychologia 106: 112–122.

Clark, A. 2013a. Whatever next? Predictive brains, situated agents, and the future of cognitive science. Behavioral and Brain Sciences 36 (3): 181–204. https://doi.org/10.1017/S0140525X12000477.

Clark, A. 2013b. The many faces of precision: Replies to commentaries. Frontiers in Psychology 4: 270. https://doi.org/10.3389/fpsyg.2013.00270.

Clark, A. 2016. Surfing Uncertainty. Oxford: Oxford University Press.

Clark, A. 2018. A nice surprise? Predictive processing and the active pursuit of novelty. Phenomenology and the Cognitive Sciences 17 (3): 521–534.

Constant, A., A. Clark, M.D. Kirchhoff, and K. Friston. 2021. Extended active inference: Constructing predictive cognition beyond skulls. Mind & Language. https://doi.org/10.1111/mila.12330.

de Bruin, L., and J. Michael. 2017. Prediction error minimization: Implications for embodied cognition and the extended mind hypothesis. Brain and Cognition 112: 58–63.

de Fockert, J., J. Davidoff, J. Fagot, C. Parron, and J. Goldstein. 2007. More accurate size contrast judgments in the Ebbinghaus Illusion by a remote culture. Journal of Experimental Psychology: Human Perception and Performance 33 (3): 738.

Di Paolo, E., T. Buhrmann, and X. Barandiaran. 2017. Sensorimotor Life: An Enactive Proposal. Oxford: Oxford University Press.

Fabry, R. 2018. Betwixt and between: The enculturated predictive processing approach to cognition. Synthese 195 (6): 2483–2518.

Fodor, J.A. 1983. The Modularity of Mind. Cambridge: MIT Press.

Fotopoulou, A., P.M. Jenkinson, M. Tsakiris, P. Haggard, A. Rudd, and M.D. Kopelman. 2011. Mirror-view reverses somatoparaphrenia: Dissociation between first-and third-person perspectives on body ownership. Neuropsychologia 49: 3946–3955. https://doi.org/10.1016/j.neuropsychologia.2011.10.011.

Friston, K.J. 2013. Life as we know it. Journal of the Royal Society Interface. https://doi.org/10.1098/rsif.2013.0475.

Gadsby, S., and J. Hohwy. 2019. Why use predictive processing to explain psychopathology? The case of anorexia nervosa. In The Philosophy and Science of Predictive Processing, ed. S. Gouveia, R. Mendonça, and M. Curado. London: Bloomsbury. Preprint at https://psyarxiv.com/y46z5/download?format=pdf, 1–16. Accessed 25 Sept 2021.

Gallagher, S. 2005. How the Body Shapes the Mind. Oxford: Oxford University Press.

Gallagher, S. 2017. Enactivist Interventions: Rethinking the Mind. Oxford: Oxford University Press.

Gallagher, S., and M. Allen. 2018. Active inference, enactivism and the hermeneutics of social cognition. Synthese 195 (6): 2627–2648. https://doi.org/10.1007/s11229-016-1269-8.

Gallagher, S., and J.B. Sørensen. 2006. Experimenting with phenomenology. Consciousness and Cognition 15 (1): 119–134.

Gallagher, S., D. Hutto, J. Slaby, and J. Cole. 2013. The brain as part of an enactive system. Behavioral and Brain Sciences 36 (4): 421–422.

Gładziejewski, P. 2017. The evidence of the senses—A predictive processing-based take on the Sellarsian dilemma. In Philosophy and predictive processing: 15, ed. T. Metzinger and W. Wiese. Frankfurt am Main: MIND Group.

Godfrey-Smith, P. 2002. Environmental complexity and the evolution of cognition. In The evolution of intelligence, ed. R. Sternberg and J. Kaufman, 223–249. Mahwah: Lawrence Erlbaum Associates.

Heyser, C.J., and A. Chemero. 2012. Novel object exploration in mice: Not all objects are created equal. Behavioural Processes 89 (3): 232–238.

Hipólito, I., and D. Hutto. Forthcoming. Accommodating visual illusions: An interactionist account of perception.

Hohwy, J. 2013. The Predictive Mind. Oxford: Oxford University Press.

Hohwy, J. 2015. The neural organ explains the mind. In Open MIND, ed. T. Metzinger and J.M. Windt. Frankfurt am Main: MIND Group.

Hohwy, J. 2016. The self-evidencing brain. Nous 50 (2): 259–285.

Hohwy, J. 2017. How to entrain your evil demon. In Philosophy and predictive processing, ed. T. Metzinger and W. Wiese. Frankfurt am Main: MIND Group.

Hohwy, J. 2020a. New directions in predictive processing. Mind & Language 35: 209–223.

Hohwy, J. 2020b. Self-supervision, normativity and the free energy principle. Synthese. https://doi.org/10.1007/s11229-020-02622-2.

Hutto, D., S. Gallagher, J. Ilundáin-Agurruza, and I. Hipólito. 2020. Culture in mind—An enactivist account: Not cognitive penetration but cultural permeation. In Culture, mind, and brain: Emerging concepts, models, applications, ed. L.J. Kirmayer, S. Kitayama, C.M. Worthman, R. Lemelson, and C.A. Cummings, 163–187. New York: Cambridge University Press.

Kammers, M.P., F. de Vignemont, L. Verhagen, and H.C. Dijkerman. 2009. The rubber hand illusion in action. Neuropsychologia 47 (1): 204–211.

Kammers, M.P., J.A. Kootker, H. Hogendoorn, and H.C. Dijkerman. 2010. How many motoric body representations can we grasp? Experimental Brain Research 202 (1): 203–212.

Keller, G.B., and T.D. Mrsic-Flogel. 2018. Predictive processing: A canonical cortical computation. Neuron 100 (2): 424–435.

Kiefer, A., and J. Hohwy. 2018. Content and misrepresentation in hierarchical generative models. Synthese 195 (6): 2387–2415.

Kirchhoff, M.D. 2018. The body in action: Predictive processing and the embodiment thesis. In The Oxford Handbook of 4E Cognition, ed. A. Newen, L. De Bruin, and S. Gallagher. Oxford: Oxford University Press.

Kirchhoff, M.D., and J. Kiverstein. 2020. Attuning to the world: The diachronic constitution of the extended conscious mind. Frontiers in Psychology 11: 1966.

Malafouris, L. 2013. How Things Shape the Mind. Cambridge: MIT Press.

Matamala-Gomez, M., C. Malighetti, P. Cipresso, E. Pedroli, O. Realdon, F. Mantovani, and G. Riva. 2020. Changing body representation through full body ownership illusions might foster motor rehabilitation outcome in patients with stroke. Frontiers in Psychology 11: 1962. https://doi.org/10.3389/fpsyg.2020.01962.

McCauley, R.N., and J. Henrich. 2006. Susceptibility to the Müller-Lyer illusion, theory-neutral observation, and the diachronic penetrability of the visual input system. Philosophical Psychology 19 (1): 79–101.

Merleau-Ponty, M. 2012. Phenomenology of Perception. London: Routledge.

Metzinger, T. 2009. The Ego Tunnel: The Science of the Mind and the Myth of the Self. New York: Basic Books.

Nielsen, T.I. 1963. Volition: A new experimental approach. Scandinavian Journal of Psychology 4: 225–230.

Ogilvie, R., and P. Carruthers. 2016. Opening up vision: The case against encapsulation. Review of Philosophy and Psychology 7 (4): 721–742.

Orlandi, N. 2018. Predictive perceptual systems. Synthese 195 (6): 2367–2386.

Orlandi, N., and G. Lee. 2019. How radical is predictive processing? In Andy Clark and His Critics, ed. M. Colombo, E. Irvine, and M. Stapleton. New York: Oxford University Press.

Di Paolo, E., E. Thompson, and R. Beer. In press. Laying down a forking path: Incompatibilities between enaction and the free energy principle.

Ramstead, M.J., M.D. Kirchhoff, and K.J. Friston. 2019. A tale of two densities: Active inference is enactive inference. Adaptive Behavior 28 (4): 225–239.

Säfström, D., and B.B. Edin. 2004. Task requirements influence sensory integration during grasping in humans. Learning & Memory 11 (3): 356–363.

Segall, M.H., D.T. Campbell, and M.J. Herskovits. 1963. Cultural differences in the perception of geometric illusions. Science 139 (3556): 769–771.

Soliman, T., and A.M. Glenberg. 2014. The embodiment of culture. In The Routledge Handbook of Embodied Cognition, ed. L. Shapiro, 207–219. London: Routledge.

Thompson, E. 2008. Mind in Life. Cambridge: Harvard University Press.

Tosi, G., D. Romano, and A. Maravita. 2018. Mirror box training in hemiplegic stroke patients affects body representation. Frontiers in Human Neuroscience 11: 617. https://doi.org/10.3389/fnhum.2017.00617.

Turvey, M.T. 2018. Lectures on Perception: An Ecological Perspective. London: Routledge.

Varela, F.J., E. Thompson, and E. Rosch. 2016. The Embodied Mind: Cognitive Science and Human Experience. Cambridge: MIT Press.

Walsh, K.S., D.P. McGovern, A. Clark, and R.G. O’Connell. 2020. Evaluating the neurophysiological evidence for predictive processing as a model of perception. Annals of the New York Academy of Sciences 1464 (1): 242.

Wiese, W., and T. Metzinger. 2017. Vanilla PP for philosophers: A primer on predictive processing. In Philosophy and Predictive Processing: 1, ed. T. Metzinger and W. Wiese. Frankfurt am Main: MIND Group.

Wilkinson, S., G. Deane, K. Nave, and A. Clark. 2019. Getting warmer: Predictive processing and the nature of emotion. In The Value of Emotions for Knowledge, ed. L. Candiotto, 101–119. Cham: Springer/Palgrave Macmillan.

Acknowledgements

SG and DH are supported by the research program Minds in Skilled Performance. Australian Research Council (ARC). Australia. Grant No. DP170102987.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gallagher, S., Hutto, D. & Hipólito, I. Predictive Processing and Some Disillusions about Illusions. Rev.Phil.Psych. 13, 999–1017 (2022). https://doi.org/10.1007/s13164-021-00588-9

DOI: https://doi.org/10.1007/s13164-021-00588-9