Abstract

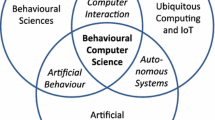

Modelling, analysis and synthesis of behaviour are the subject of major efforts in computing science, especially when it comes to technologies that make sense of human–human and human–machine interactions. This article outlines some of the most important issues that still need to be addressed to ensure substantial progress in the field, namely (1) development and adoption of virtuous data collection and sharing practices, (2) a shift in the focus of interest from individuals to dyads and groups, (3) endowment of artificial agents with internal representations of users and context, (4) modelling of the cognitive and semantic processes underlying social behaviour and (5) identification of application domains and strategies for moving from the laboratory to real-world products.

Notes

See http://www.cogitocorp.com for a company working on the analysis of call centre conversations.

See https://www.cereproc.com for a company active in the field.

Acknowledgments

This paper is the result of the discussions held at the “International Workshop on Roadmapping the Future of Multimodal Interaction Research including Business Opportunities and Challenges” (sspnet.eu/2014/06/rfmir/), held in conjunction with the ACM International Conference on Multimodal Interaction (2014). Alexandros Potamianos was partially supported by the BabyAffect and CogniMuse projects under funds from the General Secretariat for Research and Technology (GSRT) of Greece, and by the EU-IST FP7 SpeDial project. Dirk Heylen was supported by the Dutch national program COMMIT. Giuseppe Riccardi was partially funded by the EU-IST FP7 SENSEI project. Mohamed Chetouani was partially supported by the Labex SMART (ANR-11-LABX-65) under French state funds managed by the ANR within the Investissements d’Avenir programme under reference ANR-11-IDEX-0004-02. Jeffrey Cohn and Zakia Hammal were supported in part by grant GM105004 from the US National Institutes of Health. Albert Ali Salah was partially funded by the Scientific and Technological Research Council of Turkey (TUBITAK) under Grant No. 114E481.

Cite this article

Vinciarelli, A., Esposito, A., André, E. et al. Open Challenges in Modelling, Analysis and Synthesis of Human Behaviour in Human–Human and Human–Machine Interactions. Cogn Comput 7, 397–413 (2015). https://doi.org/10.1007/s12559-015-9326-z

DOI: https://doi.org/10.1007/s12559-015-9326-z