Abstract

With the growth of web 2.0 technology, crowdsourcing contests now offer task-seekers an opportunity to access expertise and resources at a lower cost. Many studies have investigated the factors that influence solvers’ participation behaviors. However, there has been scant research on the effect of competition intensity and on the relationship between competition and reward. We collect data from the Taskcn website in China to build a two-equation model based on expectancy-value theory and explore the effects of task reward and competition intensity on solvers’ registration and submission behaviors. The results show that task reward is positively associated with the number of registrations and submissions, and that competition intensity is negatively associated with solvers’ submissions. In addition, the empirical results show that competition intensity moderates the relationship between task reward and submissions. These findings contribute to the literature on crowdsourcing contests.


Introduction

With the development of web 2.0 technology, the crowdsourcing contest has become an important and popular option for task-seekers, including organizations and individuals, to solve their problems with solutions sourced from solvers in the crowd (Boudreau and Lakhani 2013; Brabham 2008a, b; Poetz and Schreier 2012). The online crowdsourcing community launched by a third-party provider is a typical and important form of crowdsourcing contest. In this approach, seekers post tasks on the platform, and solvers register for tasks and submit their solutions in order to win the contest reward. Many crowdsourcing communities of this type have emerged, such as Taskcn in China and InnoCentive and TopCoder in the United States. These online communities give task-seekers opportunities to obtain novel solutions to their problems in the form of online contests.

How to improve the performance of crowdsourcing contests is an important research question. Extant studies have shown that more participants bring more submissions and better results (Boudreau et al. 2011). To some extent, attracting as many submissions as possible gives seekers sufficient choices from which to select their preferred option (Terwiesch and Xu 2008). Thus, a basic challenge for crowdsourcing contests is to attract more solvers.

Previous studies point out that task reward and competition play important roles in attracting solvers’ participation and submissions in crowdsourcing contests (Liu et al. 2014; Yang et al. 2009). Reward is an incentive that lets solvers obtain economic value and compensates their cost and effort. Many scholars argue that task reward can improve the performance of crowdsourcing contests and increase the number of solvers. In contrast, some empirical evidence suggests that task reward does not effectively attract solvers to submit solutions (Yang et al. 2008a). Competition, moreover, is a deterrent for solvers: in a crowdsourcing contest, competition among solvers affects an individual’s expectancy of winning the task and their willingness to expend effort, and thus affects whether they complete the work. Competition intensity is therefore an especially important factor for solvers’ submissions. Prior studies have investigated the role of competition in crowdsourcing contests, mainly operationalizing competition intensity as the number of solvers (Yang et al. 2008a, 2009), but they ignore the role of solvers’ experience and capability. Competition in a crowdsourcing contest should reflect how likely and how difficult it is for a solver to win a task. The number of solvers directly affects the number of submissions, but it may not affect the probability of winning. For example, a solver may perceive a task as more competitive if a single highly experienced and capable solver has registered for it than if ten inexperienced, less capable solvers have registered. Therefore, the number of solvers alone is not an adequate proxy for competition intensity in crowdsourcing contests.
The experience and capability of task solvers signal expertise or the probability of winning, and so can reflect the competition intensity of crowdsourcing contests (Liu et al. 2014). In addition, reward is an incentive for solvers to register for tasks and submit solutions, whereas competition is a deterrent that may lead solvers not to submit their results (Yang et al. 2009). Therefore, the actual relationship between task reward and competition intensity becomes an important research question.

For a typical crowdsourcing website like Taskcn.com in China, solvers’ participation in a task normally involves two critical stages: registration and submission (Yang et al. 2009). In the registration stage, solvers review the homepage of a task to learn about its reward, requirements, duration, and so on, and based on this information decide whether to register. Task reward therefore positively affects task-solvers’ behavior in this stage (Liu et al. 2014). In the submission stage, solvers who have registered for the task complete the work and submit it to the task-seeker. However, because the process is competitive, the capability and experience of other solvers affect an individual’s expectancy of winning the task and thus whether they complete the work. Even after solvers have registered for a task, they might not submit any results (Liu et al. 2014). In this stage, competition intensity among solvers is an especially important factor. Hence, the roles of task reward and competition intensity differ between the registration and submission stages.

Although crowdsourcing contests have been widely researched, the effect of competition intensity on task solvers has not been sufficiently investigated, and there is scant empirical literature on the relationship between reward and competition. More research is needed to understand the roles of task reward and competition intensity in crowdsourcing contests. The objective of our research is to investigate the effects of task reward and competition intensity on task solvers’ behaviors on crowdsourcing websites, ultimately helping task-seekers increase the number of submissions they receive. Our research addresses the following two research questions:

1. What are the respective roles of task reward and competition intensity in the registration and submission stages?

2. What is the relationship between task reward and competition intensity?

We apply expectancy-value theory to address our research questions. Expectancy-value theory is a dominant theory that explains the relationship between motivation and human behavior in terms of value and expectancy (Murray 1938; Nagengast et al. 2011). Task reward is a kind of incentive value that encourages solvers’ behaviors, and competition intensity relates to solvers’ expectancy of task success. Thus, expectancy-value theory can explain the roles of task reward and competition intensity for solvers in crowdsourcing contests, and it also offers a way to understand the relationship between task reward and competition intensity.

This paper constructs a two-equation model to investigate the roles of task reward and competition intensity in crowdsourcing contests from a process view. We divide the participation process into two stages, registration and submission, and investigate the effects of task reward and competition intensity on solvers’ behaviors in each stage, as well as the moderating effect of competition intensity in the submission stage. To test our hypotheses, we use data on 624 tasks collected from a well-known crowdsourcing website in China (Taskcn.com). The empirical results reveal that task reward is positively associated with both registration level and submission level. We use three constructs to represent competition intensity in a task: solvers’ participation times, solvers’ winning times, and solvers’ credit values. However, the empirical results support only the proposition that solvers’ winning times are significantly associated with the number of submissions. In addition, the results suggest that competition intensity negatively moderates the relationship between task reward and the number of submissions.

This paper contributes to the theoretical understanding of participation in crowdsourcing contests in several ways. First, we apply expectancy-value theory to crowdsourcing contests, which helps us better investigate the effects of task reward and competition intensity on solvers’ participation behaviors. Second, by considering solvers’ experience and capability, we contribute to the theoretical understanding of competition intensity among solvers in crowdsourcing contests. Third, we find a moderating effect of competition intensity on the relationship between reward and task solvers’ submissions. These findings deepen our understanding of how reward and competition influence task registration and submission in crowdsourcing contests and provide insight into how the number of submissions can be increased.

The rest of this paper is structured as follows. The second section reviews the current literature, and the third section describes the theory and research hypotheses. The fourth section presents our research model and empirical results. The fifth section discusses the theoretical and practical implications of our work, addresses its limitations, and suggests possible avenues for future research. The conclusion is presented in the sixth section.

Literature review

Crowdsourcing contests

Crowdsourcing contest platforms have changed our traditional modes of working. Crowdsourcing typically refers to openly collecting task solutions from a community and it has become an increasingly popular choice for sourcing open solutions (Liu et al. 2014). Scholars have paid considerable attention to how to facilitate more and better contributions in the crowdsourcing context (Brabham 2008b; Kaufmann et al. 2011; Leimeister et al. 2009; Zhao and Zhu 2014; Zheng et al. 2011).

Unlike participation in traditional online communities, an online task in a crowdsourcing contest is a transaction, for which solvers can obtain a real economic reward from task-seekers to compensate their efforts (Sun et al. 2012). Therefore, task reward is one of the most important factors in the design of crowdsourcing communities, and it has received considerable research attention. Liu et al. (2014) conducted a field experiment on Taskcn.com and found that task reward positively affects the quantity and quality of submissions. DiPalantino and Vojnovic (2009), also using empirical data from Taskcn.com, found that the number of submissions increases with task reward at a logarithmically diminishing rate. Horton and Chilton (2010) built a labor economics model to explore the effects of reward level on labor supply in crowdsourcing projects. Brabham (2010) investigated the factors that motivate task-solvers to solve problems, based on 17 interviews, and found that one of the most important factors is the opportunity to make money, that is, task reward. Chen et al. (2010) conducted a field experiment on the influence of reward range on task solvers’ effort and task quality; the results show that a higher reward increases solvers’ effort but does not improve task quality. Archak and Sundararajan (2009) built a game-theoretic model of a crowdsourcing contest and found that when task-solvers are risk-neutral, the task-seeker should offer a single top prize to one solver, whereas when task-solvers are risk-averse, task-seekers should offer more prizes than the desired number of submissions. However, Yang et al. (2008a), who also analyzed Taskcn.com data, found that task reward does not effectively attract participants, as there is no statistically significant link between task reward and the number of submissions.

Competition among solvers is also an important factor affecting the performance of crowdsourcing. Yang et al. (2009) built a structural model to investigate the number of solvers and the participation process in crowdsourcing contests. The results reveal that crowdsourcing is a process, and a solver’s behavior can be influenced by the number of other task solvers. Yang et al. (2008b) investigated users’ strategic behavior on Taskcn.com and suggested that the number of solvers affects the probability of winning, and that users tend to participate in tasks with higher perceived odds of winning. In those studies, competition intensity in crowdsourcing contests is generally measured by the number of solvers. However, Liu et al. (2014) point out that solvers’ experience in previous tasks can also affect other solvers’ perceived probability of winning, thereby affecting their submission behaviors. Hence, competition intensity should be measured not only by the number of solvers but also by the experience that solvers bring to the competition.

Although previous studies have examined the effects of task reward and competition intensity in crowdsourcing contests, some gaps remain. First, the role of competition intensity has not been sufficiently investigated in the literature on crowdsourcing contests. Previous studies on the effects of competition mainly focus on the number of task solvers; because solvers’ experience and capability can affect other solvers’ perception of competition (Liu et al. 2014), the number of solvers does not effectively reflect competition intensity. Second, there is scant research on the relationship between task reward and competition intensity in crowdsourcing contests. Task reward is an incentive that attracts solvers’ participation and submissions, whereas competition intensity is a negative factor that hinders solvers’ behaviors (Yang et al. 2009). Therefore, investigating the relationship between reward and competition can help explain solvers’ participation and submission.

Expectancy-value theory

Expectancy-value theory is the dominant theory of behavioral motivation (Nagengast et al. 2011). It is used to explain the relationship between individuals’ behaviors and the factors that affect them (Murray 1938). Expectancy-value theory posits that an individual’s behaviors and actions depend on expectancy of success and on incentive value. Expectancy is a kind of expected probability: an individual’s evaluation or expectation of successfully performing a particular task and attaining its outcome (Atkinson 1957). For example, individuals may strongly expect that they could perform well in certain work or win a task (Wigfield et al. 2009); such an expectation is a primary requirement for successful performance. Incentive value refers to the relative attractiveness of performing successfully on a given task (Atkinson 1957). For instance, an individual may perceive the reward for work or a task as attractive (Wigfield et al. 2009). Therefore, expectancy and incentive value could significantly affect individuals’ behaviors and their contributions to outcomes (Vroom 1964).

Expectancy-value theory also posits that the influence of incentive value on individuals’ behavior depends on expectancy (Shah and Higgins 1997); that is, expectancy can moderate the relationship between incentive value and behavior (Nagengast et al. 2011). As expectancy increases, the effect of incentive value on a person’s behavior increases significantly. Conversely, as expectancy decreases, the influence of incentive value on behavior declines significantly.
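A common way to formalize this moderation claim is a linear model with an expectancy-by-value interaction. The block below is an illustrative sketch, not a formula taken from the cited works: B denotes behavior, V incentive value, E expectancy, and the β coefficients are hypothetical.

```latex
B = \beta_0 + \beta_1 V + \beta_2 E + \beta_3 (V \times E) + \varepsilon,
\qquad
\frac{\partial B}{\partial V} = \beta_1 + \beta_3 E
```

With β3 > 0, the marginal effect of incentive value on behavior rises as expectancy rises and falls as expectancy falls, which is the pattern the theory describes.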

This theory has been applied in many research areas to explain individuals’ behaviors and motivation, such as software developers’ performance (Wu et al. 2007), participation in virtual communities (Sun et al. 2012), Web-based learning (Chiu and Wang 2008), and achievement motivation in educational psychology (Wigfield and Eccles 2000). In crowdsourcing contests, task reward acts as an incentive value, and competition intensity is associated with solvers’ expectancy of winning a task. Thus, expectancy-value theory is suitable for answering the question of how to increase submissions to crowdsourcing contests. However, little research has applied expectancy-value theory (EVT) to explain solvers’ behaviors in crowdsourcing contests. This paper fills this gap by building a research model based on EVT to investigate the effects of task reward and competition intensity in crowdsourcing contests.

Theory and research hypotheses

Registration and submission stage

To better understand the effects of task reward and competition intensity on task-solvers, we divide the crowdsourcing contest process into two stages and build a two-equation model to investigate the roles of reward and competition intensity. Yang et al. (2009) point out that the process of a crowdsourcing contest can be divided into launch, registration, submission, and evaluation. Because this paper studies only the participation process, we simplify the process to focus on the registration and submission stages. Figure 1 shows the process of crowdsourcing contests in our research model. In the registration stage, solvers in online crowdsourcing communities search for task information. If solvers are interested in a task, they review information about it on the task homepage and, after considering the task description, reward, and duration, decide whether to register. In this stage, there is no significant competition among task-solvers because no task execution takes place, and task reward is one of the most important incentives for task-solvers. In the submission stage, the goal of task-solvers is to perform the task, complete it, and submit solutions. Here, solvers can view information on other solvers’ experience and compete with them to be the winner. If competition intensity is high, solvers may give up on the task. Therefore, in this stage, both task reward and competition intensity could affect task solvers’ behaviors.

Research model and hypotheses

In this paper, we use expectancy-value theory to explain why solvers participate in a task in crowdsourcing communities. People undertake certain behaviors because they expect to obtain an appropriate value that meets their own needs (Deci and Ryan 2000). Incentive value is the main concept in expectancy-value theory (Atkinson 1957); thus, increasing incentive value is an effective way to stimulate individuals’ behavior. In an online community, monetary rewards can compensate participants for their time and effort (Chiu et al. 2006). In crowdsourcing communities, task rewards are the most important kind of tangible compensation for the time and effort solvers devote to a task. Therefore, monetary rewards are positively associated with participants’ behaviors and actions in online tasks.

In crowdsourcing communities, task-seekers provide monetary rewards to encourage solvers to register for a task and submit solutions. For task-solvers, these rewards can be considered a proxy of incentive value, that is, the relative attractiveness of successfully performing certain work or tasks. If a solver wins the task, he or she obtains the monetary reward, which compensates for his or her cost and effort. Monetary reward relates to the expected value of task achievement: if the task reward is high, task-solvers can expect to obtain more value from their achievement, whereas when the reward is low, the effect of incentive value on solvers is small. Thus, task reward is one of the most important factors attracting solvers, and it is an incentive value associated with solvers’ intentions and behaviors.

Generally, there are two stages in the crowdsourcing contest participation process—registration and submission—and both represent participation behaviors. Registration is the antecedent of task submission in a crowdsourcing contest. In both the registration and submission stages, task reward plays an important role in attracting solvers to participate. Hence, we hypothesize that:

H1: In the registration stage, task reward is positively associated with the number of registrations.

H2: In the submission stage, task reward is positively associated with the number of submissions.

Expectancy-value theory also posits that expectancy can significantly affect the participation behaviors of task solvers (Murray 1938). Expectancy is the expected probability of attaining the outcome of a task (Atkinson 1957). When individuals think it highly likely that they will achieve an outcome, they are more motivated to undertake certain behaviors; conversely, when individuals have low expectations of success, they have little motivation to perform. Therefore, individuals’ expectancy of attaining the outcome will be associated with their submission behaviors.

In crowdsourcing communities, competition intensity is one of the most important factors associated with solvers’ expectancy of task success. Task competition occurs among solvers who have registered for the task (Boudreau et al. 2011). The capability and experience of the other solvers who decide to participate significantly affect competition intensity and individuals’ perceived probability of success. As the capability and experience of other solvers grow, competition intensity increases and the probability of winning the task declines. Under intense competition, solvers expect not to obtain value from the task that would satisfy their own needs. Therefore, their submission behaviors will be weakened by competition intensity. Hence, we hypothesize that:

H3: Competition intensity is negatively associated with the number of submissions.

Expectancy-value theory also suggests that there is a significant interaction effect between individuals’ expectancy and incentive value (Ajzen 1987; Cronbach and Snow 1977; Nagengast et al. 2011): incentive value affects solvers’ behaviors more strongly when they think the probability of winning the task is high (Sun et al. 2012). When the probability of winning is low, the influence of monetary reward on solvers’ behavior declines, and even if rewards are high, solvers may not complete the task. Conversely, when the probability of winning is high, the influence of monetary reward on solvers’ behavior increases. Hence, solvers’ perceived probability of winning moderates the relationship between monetary reward and task completion. This explains why some solvers initially register for a task but do not submit a solution: if they believe that the probability of winning is low, they will probably give up the task in the submission stage. Because competition intensity is negatively associated with the probability of winning, increasing competition weakens the relationship between reward and submission. Hence, we hypothesize that:

H4: Competition intensity negatively moderates the relationship between task reward and the number of submissions.

Figure 2 presents the research model for this paper.

Data and empirical methodology

Research setting

The data used in this paper were collected from Taskcn.com, one of the biggest crowdsourcing communities providing online innovation services in China. Taskcn is a crowdsourcing service platform that eliminates regional differences in labor services for most task-seekers. It is committed to helping employers and small- and medium-sized enterprises find suitable workers to complete business projects, and to creating job opportunities for idle labor. By 2014, Taskcn had more than 3 million active solvers, and more than a hundred thousand tasks had been completed through the platform. The website’s function, nature, required skills, time involvement, and openness are similar to those of other Chinese and foreign crowdsourcing communities, such as Zhubajie, Epweiki, 680, Humangrid, Wilogo, and Freelancer. Therefore, Taskcn is a good research setting in which to investigate crowdsourcing communities.

On Taskcn, each task-seeker must first create a post for solvers, which includes the task description, requirements, duration, and reward. Potential solvers view and evaluate posts to find a task that matches their expectations, deciding whether to register by considering the task’s requirements and reward. After registration, solvers work on the task and then submit their ideas and solutions. In this stage, solvers who have registered for the task can view the experience values of other solvers, such as participation times, winning times, and credit values. These values reflect the competition intensity of a task and affect solvers’ perceived likelihood of winning. Even after solvers have registered for a task, they might not submit their results (Liu et al. 2014). Therefore, competition intensity among solvers plays an important role in this stage. As discussed, solvers go through two stages to fulfill a task, registration and submission, and their behaviors and goals differ in each stage.

Data and variables

We wrote a Java program to automatically collect webpages containing information on tasks and results. The program collected a range of useful information, including task name, number of views, task duration, amount of reward, number of registrations and submissions, task category, and other solvers’ information (i.e., participation times, winning times, and credit values). We entered the acquired information into a database and then developed another program to calculate and transform the data in the database for the task categories we had chosen in advance.

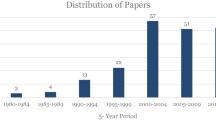

To ensure the validity of our data, we selected two task categories that are particularly popular on Taskcn: website design and logo design. We deleted tasks with incomplete information and tasks with zero registrations. Our final sample comprised 624 tasks.
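The selection and cleaning rules above can be sketched as a simple filter. This is a minimal Python illustration, not the authors' Java program: the record layout, field names, and category strings are hypothetical stand-ins for whatever the database actually stored.

```python
# Hypothetical field names and category labels; the paper does not describe its schema.
REQUIRED_FIELDS = ("reward", "views", "duration", "registrations", "submissions")
SELECTED_CATEGORIES = {"website design", "logo design"}


def is_valid_task(task: dict) -> bool:
    """Keep a task only if it belongs to a selected category, has every
    required field filled in, and received at least one registration."""
    if task.get("category") not in SELECTED_CATEGORIES:
        return False
    if any(task.get(field) is None for field in REQUIRED_FIELDS):
        return False
    return task["registrations"] > 0


def filter_tasks(tasks: list[dict]) -> list[dict]:
    """Return only the tasks that pass the validity rules."""
    return [t for t in tasks if is_valid_task(t)]


if __name__ == "__main__":
    sample = [
        {"category": "logo design", "reward": 500, "views": 1200, "duration": 10,
         "registrations": 35, "submissions": 20},
        {"category": "logo design", "reward": 300, "views": 400, "duration": 7,
         "registrations": 0, "submissions": 0},       # dropped: zero registrations
        {"category": "website design", "reward": None, "views": 800, "duration": 15,
         "registrations": 12, "submissions": 5},       # dropped: incomplete record
        {"category": "copywriting", "reward": 200, "views": 300, "duration": 5,
         "registrations": 9, "submissions": 6},        # dropped: category not selected
    ]
    print(len(filter_tasks(sample)))  # 1
```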

This paper uses different dependent variables for the registration and submission equations. In the registration equation, the dependent variable is the number of solvers who registered for the task. In the submission equation, the dependent variable is the number of solutions submitted by the solvers who registered for the task.

The independent variables are task reward and competition intensity. In this paper, task reward refers to the monetary reward offered by task-seekers. In a crowdsourcing contest, tasks launched by seekers are a kind of transaction for solvers, from which they can obtain a monetary reward that compensates their efforts (Sun et al. 2012). Therefore, monetary reward is the most important factor affecting solvers’ behaviors. On the Taskcn website, task-seekers pay a monetary reward to the participants who win the task to encourage and compensate their efforts. When the task reward paid by the seeker is high, solvers are more likely to register for the task and submit solutions.

The other independent variable in the research model is competition intensity. Rather than measuring the competition intensity of a task by the number of registrations, this paper uses solvers’ experience, for two reasons. First, the finding that the number of registrations affects the number of submissions is common sense and adds no major insight; we therefore use the number of registrations as a control variable in our research model. Second, as previously discussed, solvers differ in capability and experience, and the competition among them could be associated with the task result and the number of submissions. Thus, the capability and experience of task solvers reflect competition intensity, which can influence others’ expectancy of winning a task.

In our research context, solvers who have registered for a task but not yet submitted a solution can view other solvers’ experience and capability information to gauge competition intensity. In our research model, we use three constructs to represent competition intensity in a task: participation times, winning times, and credit values. On Taskcn, participation times refer to the number of previous tasks a solver has participated in, winning times to the number of previous tasks a solver has won, and credit values to the amount a solver has won in previous tasks, in hundreds of CNY. Figure 3 shows an example of solvers’ information. Because this paper investigates the competition intensity of crowdsourcing contests at the task level, we calculate the average value of each of the three constructs for each task. Although the website does not display average values of all participants’ experience, solvers can view other participants’ information to evaluate and perceive the competition intensity of a task. Hence, average values of all solvers’ experience and capability information can effectively reflect competition intensity at the task level.
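The task-level averaging can be sketched as follows. In this Python illustration the record layout (one tuple of participation times, winning times, and credit value per registered solver) and all numbers are hypothetical. The usage lines echo the introduction's contrast: a task with one experienced solver scores higher on average winning times than a task with ten newcomers, even though it has fewer registrants.

```python
from statistics import mean

# Hypothetical record per registered solver:
# (participation_times, winning_times, credit_value_in_hundreds_of_CNY)
SolverRecord = tuple[int, int, float]


def task_competition_intensity(solvers: list[SolverRecord]) -> dict[str, float]:
    """Average the three experience measures over all solvers registered for one task."""
    if not solvers:
        raise ValueError("task has no registered solvers")
    participation, winning, credit = zip(*solvers)
    return {
        "participation": mean(participation),
        "winning": mean(winning),
        "credit": mean(credit),
    }


strong_single = [(120, 30, 45.0)]   # one experienced, frequently winning solver
weak_ten = [(3, 0, 0.5)] * 10       # ten newcomers with no previous wins
print(len(strong_single), task_competition_intensity(strong_single)["winning"])  # 1 30
print(len(weak_ten), task_competition_intensity(weak_ten)["winning"])            # 10 0
```

Counting registrants would rank the second task as more competitive; averaging experience ranks the first one higher, which is the distinction the measurement choice is meant to capture.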

In the registration equation, the control variables are the number of views and task duration. The number of views is how many solvers have read a task homepage, and task duration is the time limit set for a task; both can affect the number of registrations. In the submission equation, the control variable is the number of registrations, because it could affect the number of submissions. Table 1 describes the variables, and Table 2 presents their correlations.

Model estimation

In this paper, we establish two equations to test our hypotheses: one for the number of registrations for a task and one for the number of submissions. Because the dependent variables have large variance and are not normally distributed, we specify log-linear regression models.

The registration equation is as follows:

The submission equation is as follows:

Let i = 1…N index the tasks. In the registration equation, a0 to a3 are the parameters to be estimated; in the submission equation, b0 to b8 are the parameters to be estimated. The interaction terms Log(Reward_i) × Log(Participation_i), Log(Reward_i) × Log(Winning_i), and Log(Reward_i) × Log(Credit_i) test the moderating effects of competition intensity.
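The two equations do not appear in this version of the text. For readers who want a concrete form, the block below gives one specification consistent with the variables and parameter counts stated above (a0 to a3; b0 to b8). It is a reconstruction, not the authors' published equations: the ordering of the b coefficients, the log transformation of the dependent variables and of the registration control, and the error terms ε_i and μ_i are assumptions.

```latex
\begin{aligned}
\log(\mathit{Registration}_i) &= a_0 + a_1\log(\mathit{Reward}_i) + a_2\log(\mathit{Views}_i) + a_3\log(\mathit{Duration}_i) + \varepsilon_i \\
\log(\mathit{Submission}_i) &= b_0 + b_1\log(\mathit{Reward}_i) + b_2\log(\mathit{Participation}_i) + b_3\log(\mathit{Winning}_i) + b_4\log(\mathit{Credit}_i) \\
&\quad + b_5\log(\mathit{Reward}_i)\log(\mathit{Participation}_i) + b_6\log(\mathit{Reward}_i)\log(\mathit{Winning}_i) \\
&\quad + b_7\log(\mathit{Reward}_i)\log(\mathit{Credit}_i) + b_8\log(\mathit{Registration}_i) + \mu_i
\end{aligned}
```

Under this reading, the first column of estimates omits the three product terms (b5 to b7), and the second column adds them.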

Results

We used STATA to estimate the model. Table 3 presents the ordinary least squares (OLS) estimates. The adjusted R-squared of the model is reasonable, and the F-statistic is statistically significant. The variance inflation factors of the variables are all below 2.0, indicating no serious multicollinearity among the independent variables. We present the estimates hierarchically: column 1 includes the independent variables, and column 2 adds the interaction terms.
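The estimation and multicollinearity checks can be sketched in Python with NumPy instead of STATA. This is a minimal illustration on synthetic data: the sample size matches the paper, but every value and "true" coefficient is invented, and only winning times (not participation times or credit values) are included to keep it short. `ols` fits a log-linear model with a reward × winning interaction; `vif` computes variance inflation factors as 1 / (1 − R²) from auxiliary regressions.

```python
import numpy as np


def ols(y: np.ndarray, X: np.ndarray) -> np.ndarray:
    """Ordinary least squares coefficients; X must already contain an intercept column."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


def vif(X: np.ndarray) -> np.ndarray:
    """Variance inflation factor of each column of X (X has no intercept column).

    VIF_j = 1 / (1 - R_j^2), where R_j^2 comes from regressing column j on the
    remaining columns plus an intercept.
    """
    n, k = X.shape
    factors = np.empty(k)
    for j in range(k):
        target = X[:, j]
        others = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        fitted = others @ ols(target, others)
        r2 = 1.0 - np.sum((target - fitted) ** 2) / np.sum((target - target.mean()) ** 2)
        factors[j] = 1.0 / (1.0 - r2)
    return factors


# Synthetic data only: sizes and coefficients are illustrative, not the paper's.
rng = np.random.default_rng(0)
n = 624
log_reward = rng.normal(6.0, 1.0, n)
log_winning = rng.normal(1.0, 0.5, n)
log_registration = rng.normal(3.0, 0.7, n)
log_submission = (1.0 + 0.5 * log_reward - 0.3 * log_winning
                  - 0.2 * log_reward * log_winning + 0.8 * log_registration
                  + rng.normal(0.0, 0.01, n))

# Design in the style of column 2: main effects plus the reward x winning product term.
X = np.column_stack([np.ones(n), log_reward, log_winning,
                     log_reward * log_winning, log_registration])
print(np.round(ols(log_submission, X), 2))  # close to [1.0, 0.5, -0.3, -0.2, 0.8]
print(np.round(vif(np.column_stack([log_reward, log_winning, log_registration])), 2))
```

VIFs are computed here on the main effects only; uncentered product terms are mechanically correlated with their components, so including them would inflate the factors without signaling a substantive problem.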

Hypothesis 1 predicted that task reward is positively associated with the number of registrations. The results in column 1 of stage 1 in Table 3 support this hypothesis: the coefficient of task reward (B = 0.240, T = 9.928, P < 0.01) is positive and statistically significant at the 0.01 level. Hypothesis 2 predicted that task reward is positively associated with the number of submissions. The results in column 1 of stage 2 in Table 3 support this hypothesis, because the coefficient (B = 8.873, T = 26.675, P < 0.01) is positive and statistically significant. Task reward relates to solvers’ expected value of task achievement: a high task reward implies a high expected value for solvers, and a low task reward implies a low one. Hence, task reward is positively associated with both the number of registrations and the number of submissions.
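If both the number of registrations and task reward enter the registration equation in logarithms, which the log-linear specification suggests but which is an assumption here because the equations are not reproduced, the coefficient 0.240 is an elasticity. The Python sketch below converts such a coefficient into the implied proportional change for a given reward multiple. It describes an association, not a causal effect.

```python
def implied_change(coefficient: float, reward_ratio: float) -> float:
    """Proportional change in the outcome implied by a log-log coefficient
    when reward is multiplied by `reward_ratio` (assumes both sides are logged)."""
    return reward_ratio ** coefficient - 1.0


print(round(implied_change(0.240, 2.0), 3))   # 0.181: doubling reward, about 18% more registrations
print(round(implied_change(0.240, 1.10), 4))  # 0.0231: a 10% higher reward, about 2.3% more
```

Because the logarithm base cancels in a log-log model, the result does not depend on whether natural or base-10 logarithms were used.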

Hypothesis 3 posited that competition intensity is negatively associated with the number of submissions. The results in column 1 of stage 2 in Table 3 support this hypothesis, because the coefficient of winning times is negative and statistically significant at the 0.01 level (B = −3.054, T = −4.252, P < 0.01). However, we did not find evidence for the effects of participation times and credit values on the number of submissions: neither coefficient is statistically significant (participation times: B = 1.175, T = 1.486, P > 0.1; credit values: B = −0.105, T = −0.236, P > 0.1). A plausible explanation is that high competition intensity lowers solvers' perceived probability of success, which in turn is associated with fewer submissions.
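The reported P-value thresholds can be checked directly from the T-values. The sketch below assumes a large sample, so that the t distribution is close to a standard normal and the two-sided p-value is erfc(|t| / √2); the exact degrees of freedom are not used, so the numbers are approximations rather than the values Stata would print.

```python
import math


def two_sided_p(t):
    """Two-sided p-value for a t statistic under a standard normal approximation.

    Assumes many residual degrees of freedom, where t is close to N(0, 1).
    """
    return math.erfc(abs(t) / math.sqrt(2))


# T-values reported for Hypotheses 1-3.
reported = {
    "reward -> registrations": 9.928,
    "reward -> submissions": 26.675,
    "winning times": -4.252,
    "participation times": 1.486,
    "credit values": -0.236,
}
for name, t in reported.items():
    p = two_sided_p(t)
    verdict = "significant at 0.01" if p < 0.01 else "not significant at 0.1" if p > 0.1 else "between 0.01 and 0.1"
    print(f"{name:25s} t = {t:+7.3f}  p = {p:.3g}  ({verdict})")
```

Under this approximation the three significant coefficients fall far below 0.01, while participation times (p of roughly 0.14) and credit values (p of roughly 0.8) stay above 0.1, matching the text.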

Hypothesis 4 posited that competition intensity moderates the relationship between task reward and the number of submissions. We used three constructs that reflect solvers' capability and experience to represent competition intensity. The results in column 2 of stage 2 in Table 3 support this hypothesis, because the coefficient of the interaction term between winning times and reward is negative and statistically significant (B = −6.459, T = −9.600, P < 0.01). However, we did not find evidence for the moderating effects of participation times and credit values; the coefficients of the corresponding interaction terms are not statistically significant (Log(Reward) × Log(Participation): B = 0.357, T = 0.583, P > 0.1; Log(Reward) × Log(Credit): B = 4.042, T = 0.341, P > 0.1). When competition intensity is high, the influence of rewards on solvers' behavior declines: even if rewards are high, solvers may not complete the task. Figure 4 shows the results of our research model.
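With interaction terms in the model, the effect of Log(Reward) is no longer a single number: it equals the main-effect coefficient plus each interaction coefficient times the level of its moderator. The sketch below computes that conditional slope using the three interaction coefficients reported above. The main effect of Log(Reward) in column 2 is not reported in this section, so `B_REWARD = 10.0` is a hypothetical placeholder, and the other two moderators are held at zero purely for illustration.

```python
def reward_slope(b_reward, b_interactions, log_moderators):
    """Marginal effect of Log(Reward) in a model with Log(Reward) x moderator terms.

    For y = ... + b_reward*LR + sum_k b_k*LR*M_k + ..., the derivative with
    respect to LR is b_reward + sum_k b_k*M_k.
    """
    return b_reward + sum(b * log_moderators[name] for name, b in b_interactions.items())


# Interaction coefficients as reported for column 2, stage 2 of Table 3.
interactions = {"participation": 0.357, "winning": -6.459, "credit": 4.042}

# Hypothetical placeholder: the column-2 main effect of Log(Reward) is not given here.
B_REWARD = 10.0

for log_winning in (0.0, 0.5, 1.0, 1.5):
    # Other moderators held at zero for illustration only.
    moderators = {"participation": 0.0, "winning": log_winning, "credit": 0.0}
    slope = reward_slope(B_REWARD, interactions, moderators)
    print(f"Log(Winning) = {log_winning:.1f}: slope of Log(Reward) = {slope:.3f}")
```

Because the winning-times interaction is negative, the reward slope falls as Log(Winning) rises, which is the weakening of the reward effect that Hypothesis 4 describes.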

Robustness check

To check the robustness of the research model, we divided the data into two subsamples by task content, website design (group 1) and logo design (group 2), and estimated the model separately for each. The results, presented in Table 4, are consistent with those of the main model.
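The subsample check amounts to grouping tasks by category and re-estimating within each group, then comparing signs. For brevity the sketch below fits a single regressor, log(reward), against log(registrations), assuming both sides are logged as in the log-linear specification; the paper re-estimates the full model. The task records are made up for illustration.

```python
import math
from collections import defaultdict


def slope(xs, ys):
    """Least-squares slope of ys on xs (one regressor plus an intercept)."""
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx


# Hypothetical task records: (category, reward, registrations).
tasks = [
    ("website", 300, 40), ("website", 800, 75), ("website", 1500, 110),
    ("website", 500, 52), ("logo", 200, 35), ("logo", 600, 70),
    ("logo", 1000, 95), ("logo", 400, 48),
]

# Split the sample by task content and log-transform both variables.
groups = defaultdict(lambda: ([], []))
for category, reward, registrations in tasks:
    xs, ys = groups[category]
    xs.append(math.log(reward))
    ys.append(math.log(registrations))

estimates = {cat: slope(xs, ys) for cat, (xs, ys) in groups.items()}
for cat, b in estimates.items():
    print(f"{cat}: slope of log(registrations) on log(reward) = {b:.3f}")
```

A robustness check of this kind passes when the coefficients keep the same sign (and broadly similar size and significance) across subsamples.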

Discussion and implications

Discussion

It is widely believed that an increase in the number of solvers leads to more submissions and better results, so how to increase the number of solvers in crowdsourcing contests is an important research question. This paper builds a two-equation model to investigate the effects of task reward and competition intensity on participation in crowdsourcing contests. We hypothesized that, in crowdsourcing contests, task reward affects solvers' task registration and submission. Hypotheses H1 and H2 are supported by the results, meaning that task reward is positively associated with solvers' behaviors in both the registration and submission stages. Monetary reward reflects the incentive value of a task in a crowdsourcing contest and is the most important tangible compensation for the time and effort solvers devote to a task. Hence, monetary reward is positively associated with participants' behaviors in crowdsourcing contests.

We also investigated how competition intensity is associated with participation in crowdsourcing contests, using three solver characteristics to represent competition intensity: participation times, winning times and credit values. We hypothesized that solvers' participation times, winning times and credit values are negatively associated with solvers' behaviors. The empirical results support the effect of winning times: the number of winning times is significantly and negatively associated with the number of submissions. However, we did not find evidence to support the effects of participation times and credit values. One possible reason is that a solver's winning history is a stronger signal of capability and experience than participation history or credit values. The number of winning times directly records how many times a solver has won previous tasks, whereas the number of participation times reflects only solvers' enthusiasm, not their ability. Credit value, in turn, is a relatively abstract concept for other solvers and not a direct expression of experience and capability. This may explain why those two variables are not statistically significant in our model. Researchers who want to investigate the role of competition should therefore consider operationalizing competition intensity with the number of winning times, because this construct appears to best reflect solvers' experience.

Hypothesis 4 predicted that competition intensity moderates the relationship between task reward and the number of submissions. Our empirical results supported the moderating effect of winning times: the effect of task reward on the number of submissions weakens as competition intensity increases. Figure 5 shows the suggested interaction between competition intensity, task reward and the number of submissions. Expectancy theory posits that competition relates to solvers' perceived likelihood of success. When the probability of winning a task is low, the influence of task reward on solvers' behavior declines; even if task reward is high, solvers may not complete the task. Conversely, when the probability of winning is high, the influence of task reward on solvers' behavior increases. Hence, competition intensity moderates the relationship between task reward and the number of submissions.
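The moderation argument can be made concrete with the multiplicative form of expectancy-value theory (Vroom 1964; see also Nagengast et al. 2011 on the role of the × term). The following is a stylized illustration under stated assumptions, not the paper's estimating equation: let V denote the value of the task reward, C the competition intensity, and E(C) the solver's perceived probability of winning, assumed to decrease as C rises. The tendency to submit, M, and its derivatives are then

```latex
M = E(C)\,V, \qquad E'(C) < 0,
\qquad
\frac{\partial M}{\partial V} = E(C),
\qquad
\frac{\partial^{2} M}{\partial V\,\partial C} = E'(C) < 0 .
```

The marginal effect of reward equals the perceived probability of winning, so it shrinks as competition intensifies; this negative cross-derivative is the sign pattern with which the negative Log(Reward) × Log(Winning) coefficient is consistent.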

Theoretical implications

This paper advances theoretical development in the literature on crowdsourcing contests in several ways. First, we extend the application of expectancy-value theory to the crowdsourcing contest literature. Expectancy-value theory has been applied in areas such as software developers' performance, participation in virtual communities, Web-based learning and educational psychology. However, the extant literature has not offered a suitable theory to explain the effects of task reward and competition intensity on solvers' behaviors in crowdsourcing contests. We address this gap by using expectancy-value theory to investigate why and how task reward and competition intensity affect task-solvers' behaviors.

Second, this study contributes to the theoretical understanding of competition in crowdsourcing contests. Based on expectancy-value theory, we find that competition is an important factor that could affect solvers' expectancy of the probability of task achievement. Previous studies have investigated the role of competition but have tended to measure it by the number of participants, which does not effectively reflect competition intensity in tasks. To better understand the role of competition, we measure competition intensity by the experience of all task-solvers, namely participation times, winning times and credit values. The empirical results show that the number of winning times is negatively associated with the number of submissions. Hence, we suggest that researchers who seek to investigate the role of competition operationalize competition intensity with the number of past wins. These findings enrich our understanding of competition among task-solvers and offer more insight into the effect of competition in crowdsourcing contests.

Third, we find a moderating effect of competition intensity on the relationship between reward and task-solvers' behaviors, which extends our understanding of the role of task value and expectancy in crowdsourcing contests. Applying expectancy-value theory, we show that the effectiveness of task reward can decline as task competition intensity increases. In the submission stage, solvers weigh task value against competition intensity to estimate whether they can win, and this estimate shapes their submission behavior. When the probability of winning is low, the influence of reward on solvers' submissions decreases. Therefore, the effect of monetary reward on task submission is weakened by increasing competition.

Implications for practice

Two major practical implications for task-seekers and platform designers follow from our research. First, our results show that task competition intensity is negatively associated with solvers' submission behavior. Although the presence of highly experienced solvers is negatively associated with the number of submissions, those experienced and capable solvers may contribute higher-quality submissions to a task. Therefore, platform designers might consider limiting solvers' perception of competition intensity while finding ways to retain highly experienced solvers. One possible way is to conceal solvers' experience information, which may reduce perceived competition intensity and enhance solvers' expectation of success. When solvers have no information about others' experience and capability, their expectancy of success could increase, which may increase the number of submissions. Hence, platform designers should consider new mechanisms that hide solvers' experience information from other solvers.

Second, competition intensity moderates the relationship between task reward and the number of submissions. Although task reward is positively associated with solvers' behavior in the registration stage, its effect on submission behavior weakens as competition becomes more intense. Hence, in the submission stage, task-seekers should try to strengthen the influence of rewards. One option is to offer an additional reward to solvers who have already registered for a task, in order to increase the effectiveness of the reward and mitigate the negative effects of competition intensity. To keep the total cost constant, task-seekers could split the expected cost into two rewards: the task reward and the additional reward. For example, a task-seeker whose expected cost is 500 could offer a task reward of 400 in the registration stage and an additional reward of 100 in the submission stage, with the additional reward visible only to solvers who have registered for the task.

Limitations and future research

This paper is not without limitations. First, we measure competition intensity as an average across the solvers of a task and use this average as the independent variable in our research model. However, this measure cannot capture the effect of competition intensity on each individual solver. Because solvers differ in experience and capability, the probability of winning they perceive and the influence of competition intensity on each of them can differ considerably. For example, a solver with high experience may perceive low competition intensity in a task, whereas another solver with low experience and capability may perceive high competition intensity. In the future, we will collect individual-level data to study the effect of competition intensity on each solver, for example by using a questionnaire to survey solvers' experience and their perception of competition intensity in a crowdsourcing contest. Although our method is limited in this respect, average data can still reflect the competition intensity of a task.
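The limitation of a task-level average can be illustrated with two hypothetical tasks whose solvers have the same mean number of past wins but very different distributions. The per-solver measure `rivals_mean` (the average past wins of everyone except the focal solver) is our own illustrative construct, not one used in the paper.

```python
from statistics import mean, pstdev

# Hypothetical past-win counts of the solvers registered in two tasks.
task_a = [3, 3, 3, 3]   # four solvers with similar track records
task_b = [0, 0, 0, 12]  # three newcomers and one highly experienced solver


def rivals_mean(wins, i):
    """Average past wins of every solver except solver i (hypothetical measure)."""
    return mean(wins[:i] + wins[i + 1:])


for name, wins in (("task A", task_a), ("task B", task_b)):
    print(f"{name}: task-level mean = {mean(wins)}, spread = {pstdev(wins):.2f}")

# Within task B, a newcomer and the experienced solver face very different rivals.
print("newcomer's rivals:", rivals_mean(task_b, 0))
print("experienced solver's rivals:", rivals_mean(task_b, 3))
```

Both tasks have a mean of 3 wins, yet in task B a newcomer faces rivals averaging 4 wins while the experienced solver faces rivals with none, which is the kind of individual-level difference the average cannot capture.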

Second, although competition intensity in crowdsourcing contests could reduce the number of submissions and moderate the role of task reward, solvers with high experience and capability can provide high-quality submissions. Therefore, task-seekers want to retain such solvers. A higher reward is a good way to attract more experienced and capable solvers; however, a high task reward could also raise competition intensity. In the future, we will investigate how to balance task reward, competition intensity and the number of submissions.

Third, our research uses only cross-sectional data for the regression analysis, which limits our ability to investigate dynamic changes in competition intensity among solvers. For example, solvers who enter early may deter later solvers from entering the contest, because early entrants may make later ones feel less likely to win the task. Therefore, our future research will use an empirical model based on panel or time-series data to examine dynamic changes in competition intensity.

Fourth, the research was conducted on a single crowdsourcing website. Each crowdsourcing community has its own reward and competition mechanisms, which may limit the generalizability of our findings to other websites. In the future, we will collect data from several websites to test the effects of reward and competition on crowdsourcing participation.

Conclusion

Although previous studies have investigated the role of competition, they have tended to measure it by the number of participants, which does not effectively reflect competition intensity in tasks. In addition, previous investigations of the factors affecting solvers' behaviors may not sufficiently explain the relationship between reward and competition in crowdsourcing contests. To address these research gaps, we developed a two-stage research model based on expectancy-value theory to explain how task reward and competition intensity affect solvers' registration and submission. To better understand the role of competition, we use task-solvers' experience and capability to measure the competition intensity of a task, and we propose that competition intensity moderates the relationship between task reward and the number of submissions. The confirmed interaction effect indicates that the effect of task reward on the number of submissions is contingent on the competition intensity in the task. This paper thus contributes to the theoretical understanding of competition in crowdsourcing contests based on solvers' experience and capability rather than the number of task-solvers. The findings help us understand the effects of task reward and competition intensity on solvers' behaviors and contribute to research on crowdsourcing contests.

References

Ajzen, I. (1987). Attitudes, traits, and actions: Dispositional prediction of behavior in personality and social psychology. Advances in Experimental Social Psychology, 20(1), 1–63.

Archak, N., & Sundararajan, A. (2009). Optimal design of crowdsourcing contests. ICIS 2009 Proceedings.

Atkinson, J. W. (1957). Motivational determinants of risk-taking behavior. Psychological Review, 64(6), 359–372.

Boudreau, K. J., & Lakhani, K. R. (2013). Using the crowd as an innovation partner. Harvard Business Review, 91(4), 60–69.

Boudreau, K. J., Lacetera, N., & Lakhani, K. R. (2011). Incentives and problem uncertainty in innovation contests: An empirical analysis. Management Science, 57(5), 843–863.

Brabham, D. C. (2008a). Crowdsourcing as a model for problem solving: An introduction and cases. Convergence: The International Journal of Research into New Media Technologies, 14(1), 75–90.

Brabham, D. C. (2008b). Moving the crowd at iStockphoto: The composition of the crowd and motivations for participation in a crowdsourcing application. First Monday, 13(6), 1–22.

Brabham, D. C. (2010). Moving the crowd at Threadless: Motivations for participation in a crowdsourcing application. Information, Communication & Society, 13(8), 1122–1145.

Chen, Y., Ho, T.-H., & Kim, Y.-M. (2010). Knowledge market design: A field experiment at Google answers. Journal of Public Economic Theory, 12(4), 641–664.

Chiu, C.-M., & Wang, E. (2008). Understanding web-based learning continuance intention: The role of subjective task value. Information & Management, 45, 194–201.

Chiu, C.-M., Hsu, M.-H., & Wang, E. T. (2006). Understanding knowledge sharing in virtual communities: An integration of social capital and social cognitive theories. Decision Support Systems, 42(3), 1872–1888.

Cronbach, L. J., & Snow, R. E. (1977). Aptitudes and instructional methods: A handbook for research on interactions. New York: Irvington.

Deci, E. L., & Ryan, R. M. (2000). The “what” and “why” of goal pursuits: Human needs and the self-determination of behavior. Psychological Inquiry, 11(4), 227–268.

DiPalantino, D., & Vojnovic, M. (2009). Crowdsourcing and all-pay auctions. Paper presented at the Proceedings of the 10th ACM conference on Electronic commerce, 119–128.

Horton, J. J., & Chilton, L. B. (2010). The labor economics of paid crowdsourcing. Paper presented at the Proceedings of the 11th ACM conference on Electronic commerce, 209–218.

Kaufmann, N., Schulze, T., & Veit, D. (2011). More than fun and money. Worker motivation in crowdsourcing: A study on Mechanical Turk. Paper presented at the AMCIS.

Leimeister, J. M., Huber, M., Bretschneider, U., & Krcmar, H. (2009). Leveraging crowdsourcing: Activation-supporting components for IT-based ideas competition. Journal of Management Information Systems, 26(1), 197–224.

Liu, T. X., Yang, J., Adamic, L. A., & Chen, Y. (2014). Crowdsourcing with all-pay auctions: A field experiment on Taskcn. Management Science, 60(8), 2020–2037.

Murray, H. A. (1938). Explorations in personality: A clinical and experimental study of fifty men of college age. New York: Oxford University Press.

Nagengast, B., Marsh, H. W., Scalas, L. F., Xu, M. K., Hau, K.-T., & Trautwein, U. (2011). Who took the “×” out of expectancy-value theory? A psychological mystery, a substantive-methodological synergy, and a cross-national generalization. Psychological Science, 22(8), 1058–1066.

Poetz, M. K., & Schreier, M. (2012). The value of crowdsourcing: Can users really compete with professionals in generating new product ideas? Journal of Product Innovation Management, 29(2), 245–256.

Shah, J., & Higgins, E. T. (1997). Expectancy × value effects: Regulatory focus as determinant of magnitude and direction. Journal of Personality and Social Psychology, 73(3), 447–458.

Sun, Y., Fang, Y., & Lim, K. H. (2012). Understanding sustained participation in transactional virtual communities. Decision Support Systems, 53(1), 12–22.

Terwiesch, C., & Xu, Y. (2008). Innovation contests, open innovation, and multiagent problem solving. Management Science, 54(9), 1529–1543.

Vroom, V. H. (1964). Work and motivation. New York: John Wiley & Sons, 47–51.

Wigfield, A., & Eccles, J. (2000). Expectancy-value theory of achievement motivation. Contemporary Educational Psychology, 25, 68–81.

Wigfield, A., Tonks, S., & Klauda, S. L. (2009). Expectancy-value theory. Handbook of Motivation at School, 55–75.

Wu, C.-G., Gerlach, J., & Young, C. (2007). An empirical analysis of open source software developers' motivations and continuance intentions. Information & Management, 44(3), 253–262.

Yang, J., Adamic, L. A., & Ackerman, M. S. (2008a). Competing to share expertise: The Taskcn knowledge sharing community. Paper presented at the ICWSM, 161–169.

Yang, J., Adamic, L. A., & Ackerman, M. S. (2008b). Crowdsourcing and knowledge sharing: Strategic user behavior on Taskcn. Paper presented at the Proceedings of the 9th ACM conference on Electronic commerce, 246–255.

Yang, Y., Chen, P.-Y., & Pavlou, P. (2009). Open innovation: An empirical study of online contests. Paper presented at ICIS, 1–16.

Zhao, Y., & Zhu, Q. (2014). Evaluation on crowdsourcing research: Current status and future direction. Information Systems Frontiers, 16(3), 417–434.

Zheng, H., Li, D., & Hou, W. (2011). Task design, motivation, and participation in crowdsourcing contests. International Journal of Electronic Commerce, 15(4), 57–88.

Acknowledgements

The authors sincerely thank the Senior Editor Doug Vogel, Judith Gebauer and the anonymous reviewers for their insightful comments and suggestions. All errors remain ours.

Additional information

Responsible Editors: Doug Vogel and Judith Gebauer

Cite this article

Li, D., Hu, L. Exploring the effects of reward and competition intensity on participation in crowdsourcing contests. Electron Markets 27, 199–210 (2017). https://doi.org/10.1007/s12525-017-0252-7
