Abstract

Robots have recently been used as valuable therapeutic devices in numerous studies (especially with children with developmental needs), but their role as more general support for therapists is less well studied. However, as robots become better integrated in therapeutic environments, they will also influence therapists; and if robots are designed correctly, they could positively influence therapists’ well-being. Understanding how robots could be used in such a way is especially important as therapists of autistic children (and therapists of mentally disabled people in general) have one of the highest risks of workplace burnout. This paper describes a series of studies conducted to understand therapists’ attitudes towards robotic support and to discover what is most needed in such devices; it also describes an experimental study of the feasibility of robots playing one of those roles. Through observational studies and a series of ten meetings with a group of seven autism therapists, a list of possible roles was created. In a larger questionnaire-based study, therapists ranked various robot roles and functions (a child’s behaviour analyser and support in critical/dangerous situations were given the highest priority). Therapists also stated that they expect robots to help them in the workplace, help prepare documentation and make their work more systematic. In a Wizard-of-Oz type experimental study, a robot was used to play the role of an “emotional mirror” with seven therapist-child pairs. Study participants stated that the robot was acceptable and not disturbing, although most did not find it particularly useful. Our conclusions indicate that therapists want robots to play a larger role than just that of a therapeutic device, and that such roles can be added to robots without disturbing sessions with clients.


1 Introduction

The use of robots in therapy, particularly with children with autism, has been shown to be feasible since projects such as Aurora [5]. With newer research focusing on clinical trials [6], the time when robots will be used in actual therapy (as opposed to an experimental setting) is drawing near. With the prospect of widespread use comes the question of how such robots could be used in a real therapy environment so as to positively influence not only a disabled child but also therapists.

The question of using robots in larger therapy environments must take into account matters such as everyday routines, common obstacles or stressful situations in therapists’ work. It is therefore crucial to gain deeper insight into the therapists’ work context and the dynamics of social action. That is why we decided to conduct part of the research via a participatory research strategy, in which a group of 7 therapists educated us about the details of their work environment, helped create a list of possible roles for robots and ways that robots could help them, and helped create a possible scenario for a robotic assistant, which we then tested through experiments. The same group of 7 therapists also participated in designing 3 robots to be used for sensory therapy, as explained in [19, 20]. In the course of designing those robots, the issue of a wider spectrum of robotic uses became apparent, as therapists wanted a robot that would support them in more ways than just being a therapeutic device.

When a robot is used in therapy, there can be a number of possible interactions between the actors, with a triad of interactions between a robot, child and therapist being the most notable (see Fig. 1). Some of these interactions can directly benefit the therapist. Our research aim was to study a scenario where robots could be used to support a therapist in a therapeutic environment. Our main questions are therefore: what are the therapists’ needs when it comes to robot support? What functions should robots have in order to be useful?

Answers to such questions could guide us and other robot design groups in creating robots that are not only devices for therapy, but a coherent and useful part of a larger therapy environment. Moreover, therapists working with mentally disabled people (especially with autism) have the largest burnout rate of all social workers [8]. This is connected to the fact that patients have a poor ability to communicate and make social bonds, and a limited set of possible activities, but also to factors related to how employers are organized and to the work setting [12]. Social features available in therapeutic social robots, such as action and emotion recognition as well as the ability to use natural language, could directly benefit therapy providers. This could be understood as robots being used on the margins of the therapy environment, to help therapists with matters not directly connected to a child’s therapeutic or educational needs (see Fig. 1).

Our research was done in three parts:

First, a participatory study was done in a set of ten meetings with a group of 7 therapists of autism in a centre for autism therapy. Its aim was to provide directions for robot design as well as create a list of roles and robot functions that would be beneficial to therapists.

A second study, based on two questionnaires, was conducted to evaluate the list of possible robot roles and functions according to the opinion of a larger group of therapists.

Finally, an experimental study was conducted in which a robot had a supportive role for a therapist: that of an emotional mirror, giving the therapist an insight into his or her own emotions. Details of such a role were prepared with our focus group of therapists. The aim of this study was to check the feasibility of a robot being supportive to therapists without being a part of any therapy.

2 Related Work

Several authors from the robotics community who have focused on developing robots for therapeutic use have noted that the practical use of robots in therapy is not only dependent on the benefits to patients, but also on their adaptation to the whole therapeutic environment. Researchers such as Michael Goodrich noted that the use of robots will take the form of a therapeutic triad of interactions, in which there are interactions not only between a child and the robot and between a child and therapists, but also between therapists and the robot [7] (see Fig. 1). Mark Colton suggests introducing the therapist as an active participant in what a robot does, so that, based on a child’s behaviour, the therapist can modify the robot as part of a therapist-in-the-loop setting [4].

Researchers have proposed that therapists could not only control robots directly, but could also modify the robot’s program if the programming environment is designed to take into account therapists’ abilities to program. Emilia Barakova’s Wiki Therapist project acknowledges the role of the therapist and seeks to improve the ability of therapists to program the robots through an easy-to-use graphical programming environment (TiViPe); this explores the role of therapists as creators of roboticized tools and promotes social aspects of programming based on the web community of therapists and robot practitioners [3]. Wnuk proposed a programming language for therapists using natural language through the use of fuzzy logic [2].

The attitudes and concerns of service workers from the Saskatchewan disability service organisation regarding the use of social robots were surveyed in [18]. The authors surveyed the attitudes of therapists towards applications of service robots and asked questions regarding the functions of such robots or their qualities (such as mimicking human interaction and touch). In contrast to our studies, the questions focused on robotic roles in relation to people with disabilities and not on supporting roles for therapists.

3 Study to Create a List of Robot Roles and Functions in the Therapy Environment

Our focus in the first study described here was on understanding the therapeutic environment and the limitations of current tools and approaches, and on finding possible ways in which robots could be used. This took the form of ten meetings over a period of a year, in which a group of therapists explained their work and their obstacles, prototyped robots with a group of students of art and robotics, and participated in brainstorming and more formalised ideation sessions.

3.1 Participants

The study was conducted at the Navicula Centre for Autism Therapy. Therapy is realised through individual therapeutic-educational programmes, based on PEP-R or TTAP test results. The main method of working with children is Applied Behavioural Analysis, individualised for the needs of particular children. Therapists work mainly through isolated educational sessions or through incidental learning arranged around natural situations. Such sessions are usually individual or dyadic. The specific approach for a particular child is set by therapists in agreement with the supervisor and with full agreement from the child’s parents. As the children may have low communication abilities or low levels of verbal development, alternative communication methods such as PECS (Picture Exchange Communication System) are used in everyday work.

The main work system in Navicula is similar to the TEACCH model (Treatment and Education of Autistic and Related Communication Handicapped Children), where different work methods are combined with the behavioural method. Therapists also cooperate with parents to engage them in a child’s therapeutic process. A workday is organised in such a way as to help the child function better. Activities are highly structured. In educational spaces, picture day plans and activity plans are used, as well as visual descriptions of the space. Therapists working in the Navicula centre have the status of special needs teachers and have to act in accordance with the Polish special educational system. This requires providing reports about a child’s behaviour and progress.

3.2 Methodology

3.2.1 Therapist Observations

Our process of acquiring understanding started with therapist observations: we observed pairs of different therapists and children (around 30 min with each therapist-child pair) during their everyday activities. In total, 12 pairs were observed over a period of two weeks.

As each of the therapists in our sessions worked with a different child, and usually did different tasks, we were rapidly introduced to the range of tasks that therapists perform. Observations were focused on therapeutic aids (i.e., technology used, computers and software), communication between therapists and obstructions in everyday work. Notes from observations formed a basis for interviews with therapists in the needfinding sessions.

3.2.2 Needfinding Sessions

We met ten times with the focus group of seven therapists from the Navicula Centre for Autism Therapy. Four of these meetings were directly related to the role of robots as supporters for therapists. The other meetings were devoted to planning the concept of and designing new therapeutic aids (used for sensory therapy); our focus group cooperated with designers and roboticists to produce novel therapeutic equipment, as we described in [19]. The first session lasted about three hours. It was attended by therapists and two researchers. The aim was to explain the possibilities and limitations of modern technology. Therapists became acquainted with the functions and the modes of action of various robots used in therapy, and with several artificial intelligence tools (e.g., Google Now, Siri, Wolfram Alpha) that are already available. The second and third sessions were devoted to the identification of therapists’ needs associated with their workplace. A workplace psychologist was invited to support our conversations and interviews. At the second session, we discussed the most troublesome issues connected with their job responsibilities, work environment and organization. At the third session, we asked therapists to explain more formally, both in written form and in discussion, the possible roles of robots in their workplace. The discussion was based on open questions such as “In what part of your work could a robot support you?” or “What are the features of robots that could help in your work?” The aim was to identify the specific roles for robots above and beyond the therapy. The session ended with drafting, together with the therapists, a questionnaire that a larger group of therapists could use to rank such roles in order of priority.

During the fourth session, therapists took part in prototyping the robot’s behaviour in one of the roles (emotional mirror). The therapists therefore had to face the problem of designing a robot to assist themselves. This formed a basis for the experimental study explained in Sect. 5.

3.3 Results of Needfinding Study

The biggest obstacles in their work that the therapists’ focus group reported were: poor relations with supervisors and parents, lack of visible results (that could be shown to parents and supervisors), loneliness (there are frequent periods of time when therapists work alone with their clients) and bureaucracy.

Therapists were stressed about parents’ disbelief at the amount of work put in by the therapists (as compared to results, which could depend on a child’s mental abilities) or bureaucratic requirements of their work, with a need for “constant reporting”.

That suggested the use of robots in a supportive role, something in which therapists showed great interest. After we explained the current limitations of state-of-the-art technologies of speech, emotion and activity recognition, the therapists showed interest in some particular robotic roles.

Therapists understood robots as always being focused and objective. Most of the focus group participants were interested in robots being able to recognise, analyse and record a child’s actions, mainly to ease reporting and improve therapy feedback. They were concerned with the ability to modify the thresholds of such action recognition, that is, changing the “definition of success” in order to facilitate the child’s improvement. Robots could have a database of each child’s profile, and could brief a therapist on a child’s particular needs or dislikes (e.g., when one therapist substituted for another).

Therapists were also concerned with the ability to modify the robot’s behaviours and functions. The robot’s functions (both towards patient and therapist) should be easily controllable and changeable. They were interested in a common database of such functions from which the therapist could choose appropriate ones, as this could save them time while still leaving them the ability to create new therapeutic scenarios.

Therapists would also like a robot that could reduce their workload and mental load in general. Such a robot could remind them of scheduled tasks, entertain a child during a break in activities and provide help with writing reports of a child’s daily behaviour.

Therapists also found interesting the idea of a robot being a third person in a room. Such a robot could echo the therapist’s commands and/or compliment a child on his or her actions. The robot would also be welcome to provide mental support through light-hearted comments or be a communication device to other therapists. Moreover, therapists saw great potential in having a robot present in a critical situation. The ability to use natural language to ask for help was seen as very important. The capacity for recognizing emotions would also be useful: a robot could warn a therapist when he/she becomes nervous and starts negatively influencing the child. Also, some of the therapists could see “soft robots” as a way to constrain children and small mobile robots as a way to distract them.

Therapists from the focus group suggested robot roles as described below:

- a helper in critical/dangerous situations. As therapists frequently work alone with their patients, they can have a difficult time when the patient behaves in a way that requires help (being aggressive towards himself/herself or other people). A robot could be used to distract, call for help or be operated remotely by another person in order to soothe the client.

- a record keeper and reporting device. Therapists work as part of a bigger institution and are frequently required to report particular patient’s behaviours and therapy progress. Also, patient’s parents can doubt that there is any progress or that some actions are being carried out. Robots can record parts of therapy and make reports, both for administrative purposes and for communication with parents.

- an “emotional mirror” for both the patient and therapist. Therapy is a dynamic situation where it can be hard for a therapist to always understand the client’s emotions (as well as their own emotions), such as anger and frustration, which can negatively influence therapy. By informing about emotions as they occur, robots could give the therapist a chance to change the situation before it negatively influences the therapy.

- a “team player”. A robot can influence therapy dynamics by stating that it does not like some behaviour (thereby moderating conflict [9]), proposing or finishing some activities (managing pace).

4 Questionnaire Studies of Attitudes Towards Supporting Robots and Robot Roles in the Therapeutic Environment

4.1 Methodology

In order to confirm the results of the needfinding sessions with the focus group, we conducted a study on a larger group of therapists who were not involved in the process of designing a robotic assistant (see Sect. 3). The group of 21 people was recruited from among the practitioners of the Navicula Centre in Lodz. It consisted of 20 persons aged 25–40 and one person aged 40–50. There were 16 women and 5 men in the group.

Therapists were asked to fill in two questionnaires, used to assess their attitudes towards robots performing support functions for therapists and to rank these functions. Participation in the study and responding to questions were voluntary; therapists could also answer partially. Questionnaires were distributed on paper and submitted anonymously.

The attitude towards robots was evaluated by having therapists pick a level of agreement, on a Likert scale, with statements such as “a robot could give me feedback” or “a robot could improve value of my work”. The 31 sentences (listed in Table 1) were based on a list of workplace hazards contributing to workplace burnout (such as lack of variety, self-growth or support from supervisors, perceived low value of work, overtime and high workload), which was developed on the basis of a World Health Organisation review of this subject [16]. We wanted to confirm whether a larger group of therapists would also like to see an intelligent robot supporting their own work. Each survey started with a description: “Answer by agreeing or disagreeing to the following sentences to a question in which scenarios do you perceive an intelligent robot as useful in your work? Where would such a robot not be helpful?”

Likert-scale answers were analysed by measuring the frequency of each answer and the median value.
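As an illustration of this kind of analysis, the following minimal Python sketch computes answer frequencies and the median for a single sentence. The numeric coding (1 to 5), the function name `summarise_item` and the example responses are assumptions made for illustration only; they are not the study's data or the authors' analysis code. Unanswered items are represented as `None`, since therapists could answer partially.

```python
from collections import Counter
from statistics import median

# Assumed coding of the five-point Likert scale (not specified in the paper)
LABELS = {
    1: "strongly disagree",
    2: "disagree",
    3: "neutral",
    4: "agree",
    5: "strongly agree",
}


def summarise_item(responses):
    """Return (frequency per answer label, median) for one questionnaire sentence.

    Unanswered items (None) are skipped.
    """
    answered = [r for r in responses if r is not None]
    counts = Counter(answered)
    freq = {LABELS[code]: counts.get(code, 0) for code in LABELS}
    return freq, median(answered)


# Hypothetical responses of 21 therapists to one sentence; two left it blank
example = [4, 5, 4, 3, 4, 2, 4, 5, 4, 4, 3, None, 4, 5, 4, 1, 4, 4, None, 3, 4]
freq, med = summarise_item(example)
print(freq)
print("median:", med)
```

The median is used here rather than the mean because Likert answers are ordinal.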

In the second questionnaire, therapists were asked to arrange the possible roles of the robotic assistant from the most needed to the least needed. The possible functions of such robots also had to be arranged from the most desirable to the least desirable. The roles and functions used in the questionnaire were taken from the needfinding sessions with the focus group. Therapists were asked to rank robot roles in order of their preferences and to rank the functions in each role (with 1 being the highest rank). Next, the mean rank of robotic roles and functions was calculated using the pmr package of the R language, described in [1].
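The mean rank itself is a simple descriptive statistic. The sketch below recomputes it in plain Python; it does not use or reproduce the pmr package, and the function name `mean_ranks`, the shortened role names and the three example rankings are hypothetical.

```python
def mean_ranks(rankings):
    """Compute the mean rank of each item over all respondents.

    rankings: list of dicts mapping item -> rank (1 = most needed).
    Returns a dict item -> mean rank, ordered from most to least preferred.
    """
    totals, counts = {}, {}
    for ranking in rankings:
        for item, rank in ranking.items():
            totals[item] = totals.get(item, 0) + rank
            counts[item] = counts.get(item, 0) + 1
    means = {item: totals[item] / counts[item] for item in totals}
    return dict(sorted(means.items(), key=lambda kv: kv[1]))


# Hypothetical rankings from three respondents (illustrative only)
example_rankings = [
    {"helper in critical situations": 1, "record keeper": 2,
     "emotional mirror": 3, "team player": 4},
    {"helper in critical situations": 2, "record keeper": 1,
     "emotional mirror": 4, "team player": 3},
    {"helper in critical situations": 1, "record keeper": 2,
     "emotional mirror": 4, "team player": 3},
]

ranks = mean_ranks(example_rankings)
for role, value in ranks.items():
    print(f"{role}: {value:.2f}")
```

A lower mean rank indicates a more preferred role, which matches the ranking convention of the questionnaire.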

4.2 Results of Questionnaire Studies

4.2.1 Attitudes Towards Robots in Workplace Study

Table 1 presents the 31 sentences that were presented to therapists, histograms of their responses, median values and response frequencies. While exact distributions can be seen in Table 1, when describing results we combine the answers “agree” and “strongly agree” (therapists agree), and similarly “disagree” and “strongly disagree” (therapists disagree), when reporting frequencies.
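The grouping of answers used when reporting frequencies can be sketched as follows. This is a minimal Python illustration; the mapping name `COLLAPSE`, the function `collapse` and the example responses are assumptions, not the study's data.

```python
from collections import Counter

# Five answer categories folded into the three groups used when reporting
COLLAPSE = {
    "strongly disagree": "disagree",
    "disagree": "disagree",
    "neutral": "neutral",
    "agree": "agree",
    "strongly agree": "agree",
}


def collapse(responses):
    """Return (counts per collapsed category, number of respondents who answered)."""
    counts = Counter(COLLAPSE[r] for r in responses)
    summary = {key: counts.get(key, 0) for key in ("agree", "neutral", "disagree")}
    return summary, len(responses)


# Hypothetical answers from 19 respondents to one sentence
example = (
    ["agree"] * 10
    + ["strongly agree"] * 4
    + ["neutral"] * 3
    + ["disagree"]
    + ["strongly disagree"]
)
summary, n = collapse(example)
print(f"agree: {summary['agree']}/{n}, disagree: {summary['disagree']}/{n}")
```

Reporting the count together with the number of respondents keeps the figures comparable for sentences that not all therapists answered.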

Most therapists (18/21) responded positively to “a robot could accompany me in my workplace” (sent. 23).

Robot as a tool for feedback. Both the focus group and the surveyed group see strong viability in receiving feedback from such devices. Most therapists (17/19) agreed that a robot could give them feedback (sent. 8) and give them objective reports about their work (sent. 11/19).

Support in the workflow. Despite the focus group’s agreement on a strong conflict between administrative and therapy responsibilities (that is, heavy bureaucracy), most therapists (10) were neutral as to whether a robot could help them reconcile conflicting tasks (sent. 7), although the answers might differ if the question were formulated differently. Therapists agreed that robots could help them with repeatable tasks (sent. 3, 18/19). 11/19 therapists agreed that robots could give them more control over their work (sent. 15) and more control over how the work responsibilities are performed (sent. 16).

Robot as an organisation tool. Therapists agreed that the use of robots could make their work more systematic (sent. 4, 14/19). Answers as to whether the use of robots would clarify their work goals are disparate (7 agree, 8 disagree out of 19).

Robot and perceived work value. A robot is seen as something that could be an important resource at work by 8 therapists, but most are neutral (10) (sent. 11). The opinions as to whether a robot could support the creation of new therapeutic solutions are also disparate. 18 out of 19 therapists agreed with the sentence “robot could help me with repeatable tasks” (sent. 3). Seven practitioners agreed that the robot could help them to focus on important parts of their work, 4 disagreed, and the rest (8) had no opinion on the subject.

Physical support. Therapists were neutral as to whether robots could improve their workplace ergonomics (sent. 21) or lessen physical workload (sent. 20). When asked if a robot could help with aggressive behaviour of patients (sent. 26), 3 were definitely against it and 15 were for (with 3 being definitely for). When questioned what such help could look like, therapists imagined a device to gently hug the patient.

Value of work. Most therapists agreed that robots could improve the value of their work (sent. 1, 9/19) but were generally neutral (8/19), with 3 persons strongly disagreeing, as to whether a robot would improve the therapist’s value in the workplace (sent. 12). There was large variance in the level of agreement as to whether robots could bring more creative solutions (sent. 28), with 5 persons strongly disagreeing and 11 persons agreeing out of 21.

Emotional support. Therapists are divided as to the value of a robot in managing their emotions: 10 disagree and 7 agree with the sentence “robot could help me control my emotions”. Therapists are neutral as to whether “robot could motivate me”.

Self-improvement with a robot. Therapists were neutral when it comes to the possibility of self-development through the use of a robot (sent. 30), with 3 people strongly disagreeing. Also, therapists generally do not agree that the use of robots could lead to their abilities being used more (sent. 27) or to their professional growth (sent. 5).

Work overloading. Therapists agreed that the use of robots could lessen their workload (sent. 13, 13/19) but disagreed that robots could lessen their overtime (sent. 14, 12/19). Most therapists were neutral as to whether robots could help them achieve a better work-life balance (sent. 30, 11/21). Therapists were neutral as to whether robots could give them more time for breaks (sent. 31).

Interactions with supervisors. None of the therapists agreed that the use of robots could give them more support from their supervisors (sent. 24, disagree 13/21). Therapists also disagreed that robots could help their work be evaluated more objectively (sent. 25, 10 disagreed with 4 strongly disagreeing and 2 agreeing). They also disagreed that robots could help set their range of responsibilities (sent. 10, 11/19).

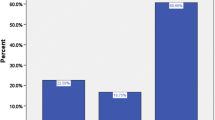

4.2.2 Robot Roles in a Workplace

The mean rank of robot roles is presented in Table 2, while the mean rank of preferred functions of robots is presented in Table 3. A lower score means a higher position in the ranking (e.g. 1 is the most desirable role, 5 the least desirable role).

Both groups, the focus group and the larger group, agreed that it would be good to have a robot that could record and analyse therapy progress. However, the larger group preferred robot actions focused on children’s behaviour, such as creating a child’s profile, counting a child’s actions and providing statistics, rather than more general roles such as giving therapists feedback on therapy progress or voice dictation.

Therapists from the larger group valued robot roles to support themselves (such as an emotional mirror or record keeping) less than the focus group (which valued both types of roles similarly).

Both groups highly valued the ability to use the robot as a communication device in critical/dangerous situations, in order to call their colleagues for help.

5 A Qualitative Experimental Study of Emotional Support Robot

To test the potential of using robots as a tool to improve the well-being of therapists, we co-designed with a focus group of therapists an experiment in which a robot acted as emotional support or an emotional mirror. The robot would recognise therapists’ emotions and react to them: in the first phase by stating the recognised emotion, and in the second phase by reacting to recognised emotions through more social phrases.

The aim of this study was to check whether using a robot not as a therapeutic device, but as an observer of therapy that interacts with a therapist, would be possible, and whether such a function could positively influence a therapist. We also wanted to check the perception and reactions of therapists to a social robotic partner.

Specific research questions related to this study were formulated as:

Would a therapist interact with a robot in any way other than passively listening to it, and would therapists consider such an interaction as something positive?

Would the therapists prefer a formal or an informal form of interaction?

Was the session influenced by the robot and/or was therapy stopped (paused)?

As a test robot, we used the Ono robot, which is designed as a social robot for children (see Fig. 2). It has a face capable of displaying a large range of emotions as well as the ability to use different parts of the robot as touch sensors. The Ono robot is an open hardware platform based on a Raspberry Pi [17], which allowed us to connect it to the Robot Operating System (ROS) and use the Ivona commercial-grade text-to-speech technology [10]. For the experiment the robot was also equipped with a Bluetooth module paired with a Bluetooth headset.

We used a Wizard-of-Oz scheme: the robot was controlled by two people, one technician and one experienced therapist who recognised emotions through video and audio transmitted from the test area. Because we wanted to check reactions to robot actions that would be feasible with the current state of technology, the operators (Wizards) were instructed to react only to recognised emotions (and not, for example, to social cues) and to act using only phrases from a limited list of expressions, as modern robots are not yet capable of free-form socially-aware dialog.

5.1 Participants

We recruited 7 therapists and received informed consent from them and from the parents of their patients. Both the parents of the children and the therapists were informed about the details of the experiment and its risks, and provided their written consent for participation in the experiment, data recording and analysis.

Participating therapists had between 3 and 8 years of experience, six of them were female, one male. The children had a medium to high degree of autism, with some children having mental retardation and other disabilities.

5.2 Study Setup

The study took place in rooms where an educational session with a particular child would normally take place. Therapists were asked not to change their normal procedures for that particular day.

Special care was taken to minimise influences on the child. The robot was placed in the room in a way that it was not directly in front of the child, but that the therapist could see it (see Fig. 3). To further reduce any possible disturbance to the therapy, the robot’s voice was heard only by the therapist through a Bluetooth headset.

Two people controlled the robot, one being a qualified (and experienced) autism therapist, the other being a person responsible for technical matters. A third person (the experimenter) was responsible for taking notes, explaining the procedure to the participant and interviewing him or her.

The robot’s expressions were synchronised with its voice. To be able to recognise emotions, the group controlling the robot could see the room through two cameras and hear voices through the Bluetooth headset. If there was written consent from the child’s parents and the therapist, video and audio were also recorded for further study.

5.3 Procedure

Each therapist was introduced to the setup by the experimenter, who explained the two phases of the experiment (called formal and informal) and gave the therapist a list of six possible commands for the robot (such as repeat or stop). The experimenter then conducted a short interview, asking about the therapist’s attitudes towards robots and his or her previous experiences. The therapist was also informed about the ways to stop or pause the experiment in case of any emergencies or problems. The experimenter also answered any questions.

The experiment consisted of three parts, all attended by both the child and the therapist, and lasted 35 min in total. In the first 5 min, the robot introduced itself to the therapist through the Bluetooth headset and showed its different facial expressions. In the second part (the “formal” phase), the robot stated the emotional status of the observed therapist using a phrase of the form “I see that you are [a bit | very] ... [nervous | sad | excited etc.]”. In the third part (the “informal” phase), the robot used more social, positive phrases, which were said when an operator recognised emotions. The phrases that we used had been discussed earlier with the focus group of specialists.

After 35 min, the robot stated that the experiment was finished, and the experimenter entered the room and conducted a semi-structured interview with the therapist about his/her experience. Questions covered whether and in what way the robot’s behaviour influenced the therapist and the patient, whether the therapist preferred the formal or informal phrases, and particular aspects of the robot: its appearance, its voice, whether its behaviour was appropriate and whether its comments were useful.

The experiment concluded with informing the therapist about the fact that the robot had been operated remotely and inviting the therapist and the child to see how the robot was controlled.

5.4 Ethics

The study was approved by the director of the Navicula Centre for Autism Therapy. The robot was used in an educational setting and could not do any harm to the children. The question of whether the robot’s behaviour could be in any way harmful to a child was discussed with outside experts in autism therapy as well as with our focus group, and both groups agreed that the setup and procedure described above could not be in any way considered harmful to an autistic child.

The level of possible disturbance could be compared to normal everyday situations in an autism centre. Also, an experienced therapist was always present in the control room and could interrupt the experiment.

Sentences used by the robot were also discussed beforehand with an occupational psychologist to minimise any risk of negative influence on therapists.

Parents of the children and the therapists involved in the experiment were informed about the details and risks of the experiment, and provided written consent for participation in the experiment, data recording and analysis.

5.5 Experiment Results

Video recordings of participants’ interactions with the robot and observer’s notes were reviewed, and responses were grouped into the following three categories:

5.5.1 Acceptability of the Robot and Its Influence on Therapists

None of the therapists participating in this experiment had previous experience with robots of any kind. Before the experiment, 3 out of 7 were sceptical about the practical use of robots as their personal support. After the experiment, 6 participants said that robots could have a positive influence on their work, although different participants understood the idea of help in different ways. All of the therapists said that the voice of the Ono robot, heard through the Bluetooth headset, influenced them the most, while the robot’s facial expressions were seen as less understandable.

The robot’s embodiment was important, and most therapists looked at the robot after hearing something from it. Two therapists said that they enjoyed the robot “just being there”. The shape and colour of the robot were “acceptable”; some therapists stated that they could not imagine a different one. Its electronic voice was also not a problem. Two therapists considered its facial expressions too exaggerated and unreadable.

Therapists also differed in their preferred mode of operation of the robot. Two preferred the formal way of stating recognised emotions, arguing that such a function could improve their understanding of the situation and their control over their own state. Four others preferred the robot’s informal comments. In both modes therapists disliked inaccurate or inadequate commands. Two therapists stated that the informal comments were distracting. Because the robot repeated the same phrases when reacting to the same emotions, some participants saw it as monotonous.

In the informal mode, most therapists (6 out of 7) reacted with laughter or a smile after listening to some of the robot’s comments.

5.5.2 Influence on Children and on Therapy

While special care was taken to minimise the influence of the robot on the child’s behaviour, there was still a possibility of the child being interested in the robot and therefore no longer focusing on the educational task or of the robot distracting the therapist when, for example, explaining something to a child.

There was no interaction between the child and the robot in 4 out of 7 cases. Two children were asked by their therapists to pat the robot (to which the robot reacted by changing its facial expression and responding with “it tickles”, which was heard by the therapist). In one case, a child became interested in the robot but only to the degree of looking at the robot from time to time.

When asked about how the robot might influence therapy, three therapists stated some concern about the robot’s voice distracting them when communicating with a child (in some situations, the robot’s comments were made when the therapist was talking to a child).

In two cases, after an informal comment by the robot (which said “it is calm today” as a response to recognising a degree of boredom) the therapists changed their actions. When asked about their behaviour they did not note the influence of the robot.

5.5.3 Interaction with the Robot

In all recorded sessions, therapists visibly reacted to the robot’s behaviour. Mostly, there was some laughter or a smile or, in the case of therapists who perceived the emotions stated by the robot as mis-recognised, a degree of surprise or some level of irritation. Three of the seven therapists used the commands provided to communicate with the robot, and three also commented aloud on the robot’s behaviour or thanked it for nice compliments.

Three therapists patted the robot or touched it. Two therapists also encouraged a child to pat the robot.

6 Discussion

Current social robotics projects increasingly place robots as a form of enhancement of care already in place, rather than a replacement of the human caregiver [13]. When working with children with autism, robots can be used to invoke interest and engagement [14] or as a diagnostic tool [15]. Generally in autism therapy, it is necessary for the therapist to be involved in the interaction between the child and the robot, which makes this a triadic form of interaction [4].

Is there a role for robots in a therapeutic environment other than being a therapeutic tool?

Therapists from our focus group and questionnaire studies generally answered yes. Therapists, both from the focus group and from the larger group, expressed an interest in robots directly helping with their own needs. In our study, 18 out of 21 therapists agreed on having a robotic helper, in contrast with the mostly sceptical answers of social workers who were asked about robots replacing them [18].

Moreover, the first-ranked role is that of a child’s behaviour analyser, i.e. an observer rather than an active participant in therapy (creating the child’s profile, collecting statistics on behaviour and providing feedback). It is easy to understand that therapists, faced with a highly sensitive job, want to focus on the therapy personally while delegating bureaucracy to another intelligent actor. Therapists also believe that the use of robots will decrease their workload, but still want the robots to be under their control. This points to the need for a robot that is controlled by the therapists yet easy and approachable to use (i.e. not bringing additional mental strain). The same need has been stated in other studies: paper [3] suggests using a user-friendly programming environment for creating robot behaviours, so that therapists can control the robots themselves.

Therapists generally believe that an intelligent robot could improve the value of their work, provide feedback, give more control and reduce work overload. These are important factors contributing to burnout [16], and therefore robots’ functions and well-designed interfaces could reduce the risk of burnout.

Therapists also see robots as devices that could be used in particularly stressful situations, mainly as a way of easily asking for help from their peers (through voice orders to the robot) and of helping to physically restrain an aggressive child or redirect the child’s attention (see Tables 2 and 3 in Sect. 4.2.2).

Therapists, however, do not believe that robots would influence the social aspects of the burnout phenomenon: having adequate motivation and support from supervisors, being objectively evaluated, and having clear goals and responsibilities. This agrees with study [18], where therapists saw robots not as human-like machines but more as instruments.

In our experiment, therapists interacted with a robot which had only a minor influence on the therapy itself. That is positive, as it means that additional roles (such as providing statistics or emotional feedback) could be introduced without distracting a child or therapists. Even a robot that is not fully social could be useful to therapists as an assistant, as long as basic rules of conversation are followed (i.e., not interrupting when the therapist is speaking, not repeating the same phrase multiple times).
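The two conversational rules named above can be sketched as a simple gate that decides whether a comment may be spoken. This is only an illustration under stated assumptions: the class name, the `therapist_speaking` flag (which a real system would have to obtain from some form of voice-activity detection) and the limit on consecutive repeats are hypothetical and were not part of the Wizard-of-Oz setup, where a human operator made these decisions.

```python
from typing import Optional


class CommentGate:
    """Hypothetical filter applying two basic rules of conversation:
    do not interrupt the therapist, and do not repeat the same phrase
    too many times in a row."""

    def __init__(self, max_consecutive: int = 1) -> None:
        # How many times the same phrase may be spoken back to back.
        self.max_consecutive = max_consecutive
        self._last_phrase: Optional[str] = None
        self._consecutive = 0

    def allow(self, phrase: str, therapist_speaking: bool) -> bool:
        """Return True if the phrase may be spoken now."""
        # Rule 1: never talk over the therapist.
        if therapist_speaking:
            return False
        # Rule 2: limit back-to-back repetitions of the same phrase.
        if phrase == self._last_phrase:
            if self._consecutive >= self.max_consecutive:
                return False
            self._consecutive += 1
        else:
            self._last_phrase = phrase
            self._consecutive = 1
        return True


if __name__ == "__main__":
    gate = CommentGate()
    print(gate.allow("it is calm today", therapist_speaking=True))
    print(gate.allow("it is calm today", therapist_speaking=False))
    print(gate.allow("it is calm today", therapist_speaking=False))
```

A blocked comment does not update the gate's state, so a phrase suppressed while the therapist is talking can still be spoken once the therapist has finished.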

Although this may be specific to the high level of autism in the children participating in the experiment, it is worth noting that, while therapists appreciated the robot “just being there” and focused on the robot’s face when it was talking through the Bluetooth headset, the children ignored the robot. Such a reaction of autistic children towards robots is common, with children preferring simple appearance and behaviour over more human-looking robots [11]. The robot could therefore use social cues directed towards therapists with minimal influence on the therapy, as children ignored the robot unless asked by the therapists to look at or interact with it.

7 Conclusions and Further Work

In this paper we have presented some results of the Roboterapia project, in which we studied the needs of autism therapists. We used several methods (observations, focus group studies, questionnaires and experiments) to see what kinds of interaction are perceived as feasible and useful by therapists of autism. We conclude that a robot’s role in therapy does not need to be constrained to being a tool for a child’s therapy and that therapists expect robots to help them in a more comprehensive manner.

The insight into therapists’ expectations about robots and their usage, provided by the questionnaire and the focus group studies presented in this paper, was limited by their localised nature; that is, all therapists were from the same city and institution. As perceptions of and attitudes towards robots depend on culture and locality, a broader survey would be needed to support more general conclusions.

The main limitation of the experimental study was that it was of the Wizard-of-Oz kind. Such studies capture only some aspects of interacting with a real robot; moreover, real robots tend to have technological problems and are limited in their range of reactions. While we did provide facilitators with a list of possible commands and guidelines as to when and how to react, there is always some level of spontaneity and social nature in human control that could influence the results. The limited amount of time spent with the robot by each therapist also limits our inferences about the long-term response to such a social robot. Also, only one role was studied, with the design and experimental evaluation of other roles left as future work.

We are planning to set up robots with functionalities that could improve the well-being of therapists, that is, robots which provide adequate feedback and can describe the level of progress. We are also working on an interface for robot control and programming that therapists could use to easily program robots to perform repeatable tasks. In our longitudinal studies we will analyse therapists’ burnout as a function of roboticized environment usage.

Our biggest hope is that this paper will provide insight into the needs of therapists and will lead to more attractive robot designs that also take into account the well-being of therapists.

References

Alvo M, Philip L (2014) Exploratory analysis of ranking data. In: Statistical methods for ranking data. Springer, pp 7–21

Arent K, Kabala M, Wnuk M (2005) Programowanie i konstrukcja kulistego robota spolecznego wspomagajacego terapie dzieci autystycznych. Ph.D. thesis, Politechnika Wroclawska

Barakova EI, Gillesen J, Huskens B, Lourens T (2013) End-user programming architecture facilitates the uptake of robots in social therapies. Robot Auton Syst 61(7):704–713

Colton MB, Ricks DJ, Goodrich MA, Dariush B, Fujimura K, Fujiki M (2009) Toward therapist-in-the-loop assistive robotics for children with autism and specific language impairment. In: AISB new frontiers in human-robot interaction symposium, vol 24. Citeseer, p 25

Dautenhahn K (1999) Robots as social actors: aurora and the case of autism. In: Proceedings of CT99, the third international cognitive technology conference, August, San Francisco, vol 359, p 374

Diehl JJ, Schmitt LM, Villano M, Crowell CR (2012) The clinical use of robots for individuals with autism spectrum disorders: a critical review. Res Autism Spectr Disord 6(1):249–262

Goodrich MA, Colton M, Brinton B, Fujiki M, Atherton JA, Robinson L, Ricks D, Maxfield MH, Acerson A (2012) A robot into an autism therapy team

Jennett HK, Harris SL, Mesibov GB (2003) Commitment to philosophy, teacher efficacy, and burnout among teachers of children with autism. J Autism Dev Disord 33(6):583–593

Jung MF, Martelaro N, Hinds PJ (2015) Using robots to moderate team conflict: the case of repairing violations. In: Proceedings of the tenth annual ACM/IEEE international conference on human-robot interaction, HRI ’15. ACM, New York, NY, USA, pp 229–236. doi:10.1145/2696454.2696460

Kaszczuk M, Osowski L (2009) The ivo software blizzard challenge 2009 entry: improving ivona text-to-speech. In: Blizzard challenge workshop, Edinburgh, Scotland. Citeseer

Lee J, Takehashi H, Nagai C, Obinata G, Stefanov D (2012) Which robot features can stimulate better responses from children with autism in robot-assisted therapy? Int J Adv Robot Syst 9

Leka S, Jain A et al (2010) Health impact of psychosocial hazards at work: an overview, chap. 4.1.1 Burnout. World Health Organization

Feil-Seifer D, Matarić MJ (2010) Dry your eyes: examining the roles of robots for childcare applications. Interact Stud 11(2):208–213

Robins B, Dickerson P, Stribling P, Dautenhahn K (2004) Robot-mediated joint attention in children with autism: a case study in robot-human interaction. Interact Stud 5(2):161–198

Scassellati B (2007) How social robots will help us to diagnose, treat, and understand autism. In: Robotics research. Springer, pp 552–563

Leka S, Jain A (2010) Health impact of the psychosocial hazards of work: an overview. World Health Organization

Vandevelde C, Saldien J, Ciocci C, Vanderborght B (2014) Ono, a diy open source platform for social robotics. In: International conference on tangible, embedded and embodied interaction

Wolbring G, Yumakulov S (2014) Social robots: views of staff of a disability service organization. Int J Soc Robot 6(3):457–468

Zubrycki I, Granosik G (2015) Technology and art—solving interdisciplinary problems. In: 6th international conference on robotics in education, RiE 2015, HESSO. HEIG-VD, Yverdon-les-Bains, Switzerland

Zubrycki I, Granosik G (2016) Designing an interactive device for sensory therapy. In: Proceedings of the eleventh annual ACM/IEEE international conference on human-robot interaction extended abstracts. ACM

Acknowledgments

The authors would like to thank Anna Gawryszewska, Aneta Lenarczyk, Izabela Perenc, Karolina Przybyszewska, Marcin Kolesinski and Agnieszka Madej, who greatly helped with the preparation of this paper. We also appreciate the very good cooperation with the therapists and directors of Navicula Centre. We would also like to thank Jaroslaw Turajczyk for discussions which influenced the ideas described in this paper. This research was partially supported by the Foundation for Polish Science under Grant No. 132/UD/SKILLS/2015.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Zubrycki, I., Granosik, G. Understanding Therapists’ Needs and Attitudes Towards Robotic Support. The Roboterapia Project. Int J of Soc Robotics 8, 553–563 (2016). https://doi.org/10.1007/s12369-016-0372-9

DOI: https://doi.org/10.1007/s12369-016-0372-9