Abstract

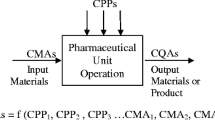

Simulation-based optimization is a research area that is currently attracting a lot of attention in many industrial applications, where expensive simulators are used to approximate, design, and optimize real systems. Pharmaceuticals are typical examples of high-cost products which involve expensive processes and raw materials while at the same time must satisfy strict quality regulatory specifications, leading to the formulation of challenging and expensive optimization problems. The main purpose of this work was to develop an efficient strategy for simulation-based design and optimization using surrogates for a pharmaceutical tablet manufacturing process. The proposed approach features surrogate-based optimization using kriging response surface modeling combined with black-box feasibility analysis in order to solve constrained and noisy optimization problems in less computational time. The proposed methodology is used to optimize a direct compaction tablet manufacturing process, where the objective is the minimization of the variability of the final product properties while the constraints ensure that process operation and product quality are within the predefined ranges set by the Food and Drug Administration.

Introduction

Downstream pharmaceutical manufacturing involves a sequence of processing steps necessary to transform a mixture of powders into a final product such as tablet or capsule. Specifically, based on the properties of the main ingredient, the active pharmaceutical ingredient (API), as well as the process constraints and objectives, critical decision-making steps include the choice of the necessary additives (excipients) and the appropriate processing route, ranging from direct compaction to dry and wet granulation, in order to produce pharmaceuticals within specifications [11, 25, 26, 40]. The application of optimization to downstream pharmaceutical manufacturing engineering problems involves many challenges, such as the need to rely on expensive simulation-based process models, the uncertainty and variability introduced by the powder material properties, and, in certain cases, the lack of process understanding which leads to dependency on noisy experimental data-based correlations. In addition, optimizing the production of pharmaceuticals requires the overcoming of another challenge, namely, the identification of clear economical objectives, which can be attributed to the hitherto atypical manufacturing practices employed in the industry compared to other industrial processes such as petrochemicals, specialty chemicals, and foods. In fact, engineers in other industries started relying on computational tools for designing their processes since the early 1900s, minimizing the need to physically experiment or rely on heuristics and empirical knowledge [55]. Specifically, recent advances in the optimization literature are not only limited to profit-based optimization but also incorporate supplementary aspects to integrated process design, such as systems to improve environmental impact, simultaneous process and product design, and process intensification combining multiple operations into fewer components and multi-plant integration. 
In contrast, even though pharmaceutical tablets are highly regulated in terms of their physical properties, consistency, appearance, and taste, their manufacturing procedure has been designed predominantly empirically because of the lack of process knowledge.

The pharmaceutical industry relies predominantly on batch processes, which may not be operated at optimal operating conditions. Their economic inefficiency increases further due to the need for off-line destructive and time-consuming quality assessment procedures based on which an entire batch is either accepted or discarded as waste. The reason for the aforementioned problems can be attributed to the fact that a large fraction of the industry did not invest toward efficient manufacturing methods since the potential economic profit seemed negligible compared to the enormous profits coming from patented products. Recently, however, the industry is facing difficulties due to patent expirations and the lack of new potential product pipelines, which is driving the new trends including quality by design initiatives, process analytical technology tools, and integrated and controlled continuous manufacturing [25, 38, 39, 41, 44, 63]. Furthermore, the development of a wide range of process models for specific unit operations, including powder mixing, roller compaction, wet granulation, and tablet compaction, has enabled the design of integrated manufacturing systems through dynamic flowsheet simulators [11, 27, 60, 61]. Consequently, this work is an attempt to employ simulation-based optimization techniques for the optimization of a solid-based flowsheet model for the production of pharmaceutical tablets. The impact of optimized and well-designed pharmaceutical processes is projected to be very significant since it will not only improve the quality and consistency of pharmaceutical products but also reduce the total waste, which is a high component of the unnecessary costs of the industry (20–40 %) [41].

The simulation-based optimization literature handles problems where analytic derivatives of the objective function are unavailable and the results are obtained from complex simulations. Examples of expensive simulators used to approximate, design, and optimize real systems come from many different engineering fields [13, 23, 30, 33, 59, 64] and can range from complex computational fluid dynamic models to large-scale integrated flowsheet models. However, standard optimization techniques cannot be applied due to the lack of analytic derivatives, possible discontinuities caused by if–then operations, the high computational cost which prohibits a large number of function evaluations, and the presence of various types of disturbances [5]. Often, due to the computational burden of expensive simulations, simulation-based optimization is associated with the use of a surrogate approximation of the expensive model, which is then used for optimization.

Simulation-based optimization using surrogates is an emerging research area in many industrial applications, in which an expensive simulator of a product or process must be optimized [7, 13, 23, 24, 31, 34, 35, 37, 45, 46, 59]. Even though a variety of different methodologies have been developed for various applications, the basic steps for performing surrogate-based optimization are common. The first step involves the design of a computer experiment in order to collect a set of sample points and finally produce a surrogate model to describe the process. Computationally expensive computer codes cannot be used for optimization; thus, the main purpose of a computer experiment is to acquire the necessary data in order to fit a cheaper predictor to the obtained data. Subsequently, optimization is performed based on the surrogate approximation model, while additional sampling locations are identified in areas which have promising objective function values or are highly uncertain based on a specific figure of merit. Finally, the expensive computer model is simulated for these conditions; then, the surrogate model is updated and a new optimum can be identified. This procedure is performed iteratively until the surrogate model does not change significantly and, consequently, the optimum is acquired with sufficient accuracy.

Surrogate-based optimization strategies are expected to be highly beneficial for the optimization of complex solid-based flowsheet models, which are computationally expensive and contain a large amount of noise due to the high uncertainty introduced by the powder raw materials. In this work, a methodology is proposed which combines commonly used tools from the surrogate-based optimization literature with a black-box feasibility analysis for the optimization of expensive noisy flowsheet models with complicating process constraints (Eq. 1). The goal of the approach is to minimize computational cost while simultaneously reducing the effect of noise, which may lead to erroneous optimal locations. The novelty of this work lies mainly in the integration of different applied aspects, such as surrogate-based optimization, black-box feasibility, and noise handling, in order to solve a complex pharmaceutical simulation model.

The remainder of the paper is organized as follows. “Surrogate Simulation-Based Optimization: Steps and Identified Challenges” describes recent advances in simulation-based optimization using surrogates and the identified challenges. “Proposed Methodology” provides a detailed outline of the proposed steps for the optimization of noisy, constrained, and expensive problems, which is demonstrated through a low-dimensional global optimization benchmark problem. The case study which is used to test the proposed approach is described in “Case Study: Continuous Tablet Manufacturing,” while the results are presented and analyzed in “Results.” The last section of the paper presents some conclusions and future work.

Surrogate Simulation-Based Optimization: Steps and Identified Challenges

There are many innate challenges involved in most of the steps of surrogate-based optimization, such as (a) the design of an appropriate computer experiment, (b) the identification of an efficient surrogate approximation method which can handle uncertainty caused by various sources of noise, and (c) the determination of the suitable optimization technique which can successfully identify a local optimum with a limited number of function evaluations in the presence of noise and possible feasibility constraints.

Surrogate Model Building with Noise

A variety of data-based response surface methodologies exist in the literature which can approximate any type of underlying function by optimizing the model parameters based on the available data. For example, response surface methodology [12] requires the definition of the form of the basis function (e.g., linear, quadratic, cubic), based on which the parameter estimation is performed through least-squares error minimization. Other examples commonly used in the literature are neural networks, radial basis functions, high-dimensional model representations, and kriging. One of the most popular approaches, which is employed in the current work, is the kriging algorithm, which has interpolating characteristics and has been shown to require fewer fitted parameters than other techniques, whose larger number of parameters can lead to overfitting problems. Despite the vast literature on surrogate-based modeling and optimization, there is no clear answer as to which method is most suitable in most applications. This decision should be made based on knowledge of the underlying simulation model and on the presence of noise, which dictates whether the basis function should be interpolating or not.

The kriging algorithm has been widely used for approximating expensive or black-box models [1, 16–18, 31, 32, 35–37, 42, 43, 47, 57, 58] due to its ability to provide predictions together with their uncertainty intervals, using fewer function evaluations than other methodologies. Specifically, each kriging prediction at an unknown sampling location is expressed as a realization of a stochastic process [15], which is described by both a mean and a variance that depend on a weighted sum of the observed function values and the location of those values with respect to the new point. The foundation of kriging is depicted in Eq. 2, where it is assumed that any two points are correlated with each other according to their spatial distance, \( d_{i,j} \).

where n is the number of input variables and \( \theta_k \) and \( p_k \) are the tuning parameters of the kriging model controlling the effect of distance and the smoothness of the surrogate model, respectively. The effect of these two parameters on the profile of the correlation function can be found in [22, 36]. A general kriging response at an unsampled location is described by Eq. 3.

where \( \mu(x_j) \) is the predicted average response at location \( x_j \) and \( \varepsilon(x_j) \) are normally distributed error terms which are correlated through a model which aims to capture the effect of decreasing correlation as the distance between two points increases (Eq. 4).
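As a minimal sketch of the correlation structure described above, the snippet below assumes that Eqs. 2 and 4 take the standard form used by Jones et al. [36], that is, a weighted distance \( d_{i,j} = \sum_{k=1}^{n} \theta_k |x_{i,k} - x_{j,k}|^{p_k} \) and a correlation \( \exp(-d_{i,j}) \). The function names and the parameter values are illustrative assumptions, not taken from this work.

```python
import math

def weighted_distance(xi, xj, theta, p):
    """Assumed Eq. 2: d_ij = sum_k theta_k * |x_ik - x_jk| ** p_k."""
    return sum(t * abs(a - b) ** pk for a, b, t, pk in zip(xi, xj, theta, p))

def correlation(xi, xj, theta, p):
    """Assumed Eq. 4: exp(-d_ij), equal to 1 for coincident points."""
    return math.exp(-weighted_distance(xi, xj, theta, p))

# Hypothetical tuning parameters for a two-input problem.
theta = [1.0, 0.5]   # larger theta_k: correlation decays faster along input k
p = [2.0, 2.0]       # p_k = 2 gives a smooth (Gaussian-type) correlation

near = correlation([0.0, 0.0], [0.1, 0.1], theta, p)
far = correlation([0.0, 0.0], [2.0, 2.0], theta, p)
print(near, far)     # the nearby pair is far more strongly correlated
```

The correlation depends only on the weighted distance between the two points, so it can be evaluated for any pair of locations without running the simulation.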

In most applications, modeling the correlation of errors between observations has been shown to be so influential that a constant mean response \( \mu \) is sufficient to describe the process output; however, the user has the flexibility to use a more complex basis function, such as a linear or polynomial one, according to the knowledge which is available on the true underlying model. More details about the derivation of the kriging mean response and variance can be found in the Appendix.

Even though the interpolating ability of kriging is the reason why it has attracted so much attention as a surrogate model, this property is not desirable in cases where the process data are noisy and/or stochastic, where the surrogate must be non-interpolating in order to smooth out any noise effects from the computational data. In this work, the methodology should be less sensitive to noise and should allow for replication of samples in high-uncertainty regions, since various sources of uncertainty are incorporated within the parameters and process conditions of the pharmaceutical flowsheet. Details about the stochastic nature of the flowsheet are described in the next section. The transformation of the interpolating kriging method to a stochastic one can be achieved through the incorporation of an additional parameter in the covariance function, which allows the response surface not to interpolate the data. This parameter has been defined as the “nugget effect” in the geostatistical literature [15], and it modifies the basic assumption of kriging that two points will have an identical value as their spatial distance approaches zero. The nugget parameter can be tuned to accommodate the desired effect of non-interpolation, and its absolute value can be based on the level of noise present in the data. In fact, the nugget effect parameter need not even be constant throughout the investigated space if the amount of noise in the response is different at different locations of x. Recent work employing a modified nugget effect kriging model has shown that incorporating a variable nugget parameter models heteroscedastic variance more accurately and with fewer required samples [62]. The effect of the nugget parameter on the kriging model equations and the kriging predictions is described in part B of the Appendix.
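One common way to introduce a nugget, shown here only as an illustrative sketch, is to add it to the diagonal of the correlation matrix so that the fitted surface is no longer forced through every observation. The exact formulation used in part B of the Appendix may differ. The function name, the exponential correlation form, and the per-point nugget values are assumptions made for this example.

```python
import math

def correlation_matrix(points, theta, p, nugget):
    """Build R with R_ij = exp(-d_ij) and a location-dependent nugget on the diagonal.

    nugget holds one non-negative value per sample point, so noisier
    regions can be given a larger value (heteroscedastic case).
    """
    n = len(points)
    R = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            d = sum(t * abs(a - b) ** pk
                    for a, b, t, pk in zip(points[i], points[j], theta, p))
            R[i][j] = math.exp(-d)
        R[i][i] += nugget[i]   # nugget of 0 recovers the interpolating model
    return R

# Two hypothetical one-dimensional samples; the first lies in a noisier region.
R = correlation_matrix([[0.0], [1.0]], theta=[1.0], p=[2.0], nugget=[0.1, 0.0])
print(R)
```

With all nugget values set to zero, the matrix reduces to the usual interpolating kriging correlation matrix.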

Design of Computer Experiments with Noise

The performance of a number of computer runs at a set of input configurations is what is formally defined as a computer experiment [47]. Computationally expensive computer codes cannot be used for optimization; thus, often, the purpose of a computer experiment is to acquire the necessary data in order to fit a cheaper predictor to the data. In fact, one of the most critical aspects in optimization through surrogate models is the minimization of the necessary sampling in order to obtain the global optimum of the real underlying function; the available literature on this topic is significant [14, 18, 19, 34–36, 42, 43, 47, 50]. Design and analysis of computer experiments is a challenging and critical step since it is very important to efficiently select the design points in order to study how a set of input variables affects an observed response. There are two different types of sampling strategies: (a) local search methods which always focus on a small subregion of the space within which samples are collected and metamodels are built and (b) global search techniques which initially require the sampling of the entire region based on which a surrogate model is built, which is then refined by sampling only in promising locations. In this work, a global search technique is employed since this approach allows an initial estimation of the overall feasible region of the investigated space.

After the initial global sampling is performed and the first surrogate model is built, identifying new sampling locations requires the algorithm to take into account both the mean predicted output at unsampled locations as well as the model uncertainty [16–18, 34]. Specifically in deterministic surrogate-driven optimization, the major source of error is the lack of fit between the predicted response and the numerical simulations of the computer model; however, this error can also be affected by other sources of uncertainty in stochastic cases. Once a surrogate model is built based on a number of function evaluations, any global minimizer of this model is an approximation of the real global minimum; however, it would be misleading to trust this prediction without taking into account the uncertainty of the model. For this purpose, most algorithms employ a figure of merit which balances any further search between subregions which have a minimum mean function value (in minimization problems) as well as regions which have large uncertainty. One of the most influential developments in the kriging optimization literature is the publication of Jones et al. [36] where the expected improvement (EI) criterion is used to guide the optimization search, which aims to balance between local and global search (Eq. 5).

where y is the calculated surrogate model approximation based on kriging and \( y_{\min} \) is the current iteration optimum. In the analytical solution of the EI function, Φ and φ stand for the cumulative distribution and probability density function of a normal distribution, respectively, and s is the calculated kriging variance. The first term of the EI criterion accounts for the probability of the predicted output being less than the current optimum, while the second term accounts for the uncertainty of the predicted output based on the kriging variance s. By maximizing the EI function, the point with the largest expected improvement over the current optimum is found and a simulation is run at this point, which updates the surrogate model. This procedure is the efficient global optimization (EGO) algorithm of Jones et al. [36]. The extension of EGO employed in this work to handle stochastic simulations is that of Huang et al. [32], who introduce the sequential kriging optimization (SKO) method, in which the process is allowed to have a noise term which follows a normal distribution with zero mean and standard deviation \( \sigma_\varepsilon \) (\( \varepsilon \sim N(0, \sigma_\varepsilon) \)). This noise term affects the EI function and transforms it to a stochastic expected improvement (SEI) criterion (Eq. 6). The additional term which multiplies the original EI function depends on the standard deviation of the random error and is equal to 1 if no random error is present. In Eq. 6, the term \( y_{\min}^s \) corresponds to the minimum of a set of identical samples which have the same set of input variables, but perhaps a slightly different output value due to the stochastic nature of the process. The SEI criterion allows the figure of merit to choose points very close to each other if the level of noise is high, since, as the kriging error \( s(x) \) decreases within a region of the investigated space which has been sufficiently sampled, the effect of \( \sigma_\varepsilon \) becomes significant. Thus, performing replicate runs in the same location increases the certainty of the final obtained optimal point.
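The two criteria can be sketched as follows, assuming the closed-form EI of Jones et al. [36] and the multiplicative noise factor \( 1 - \sigma_\varepsilon / \sqrt{s^2 + \sigma_\varepsilon^2} \) of Huang et al. [32], which equals 1 when no noise is present, as stated above. In this sketch `s` is taken to be the kriging standard error (the square root of the kriging variance), which is an assumption about how s enters Eqs. 5 and 6. Function names are illustrative.

```python
import math

def normal_pdf(z):
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def normal_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def expected_improvement(y_hat, s, y_min):
    """Assumed Eq. 5: (y_min - y_hat) * Phi(z) + s * phi(z), z = (y_min - y_hat) / s."""
    if s <= 0.0:
        # No model uncertainty: improvement is deterministic.
        return max(y_min - y_hat, 0.0)
    z = (y_min - y_hat) / s
    return (y_min - y_hat) * normal_cdf(z) + s * normal_pdf(z)

def stochastic_expected_improvement(y_hat, s, y_min, sigma_eps):
    """Assumed Eq. 6: EI multiplied by 1 - sigma_eps / sqrt(s^2 + sigma_eps^2).

    y_min should be the stochastic reference value (y_min^s in the text);
    sigma_eps may be a constant or the value of sigma_eps(x) at this point.
    """
    denom = math.sqrt(s * s + sigma_eps * sigma_eps)
    factor = 1.0 if denom == 0.0 else 1.0 - sigma_eps / denom
    return expected_improvement(y_hat, s, y_min) * factor

ei = expected_improvement(y_hat=0.0, s=1.0, y_min=0.0)
sei = stochastic_expected_improvement(y_hat=0.0, s=1.0, y_min=0.0, sigma_eps=1.0)
print(ei, sei)   # the noise factor shrinks the criterion when sigma_eps > 0
```

Because the factor tends to zero as s becomes small relative to \( \sigma_\varepsilon \), well-sampled noisy regions are penalized less abruptly than under plain EI, which is what permits replicate samples.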

The main contribution of this work is the modification of Eq. 6, where the noise term \( \sigma_\varepsilon \) is itself a function of x, \( \sigma_\varepsilon(x) \), since the data obtained from the sensitivity analysis describe how the propagated noise varies with the input variables.

Sampling is the main source of computational expense in the types of models that are considered in this work, and it is well known that as the dimensionality of the problem increases, sampling increases exponentially. Consequently, it is advised that the initial selection of the important variables, which form the design space of the underlying system and should be included as inputs to the surrogate model, is performed carefully. Screening techniques [50] or sensitivity analysis (SA) methods [45] are suitable for the identification of the critical inputs and can reduce the dimensionality of the problem by eliminating insignificant variables that do not contribute considerably to the overall variance of the outputs which have an effect on the optimization objective. This initial step is of great significance since it can lead to a substantial decrease in the computational complexity of the next steps of the proposed approach, which include the design of the computer experiment and the actual optimization problem. In addition, if certain known correlations of the expensive simulation are simple and do not add to the computational cost, then these should be used in the optimization formulation in their original form, further reducing the dimensionality of the surrogate response surface model.

Black-Box Feasibility Analysis

One of the well-known problems in optimization methodologies is the handling of constraints, or feasibility, since it is a challenge to find optima that are both global and feasible. Especially when the expensive surrogate-based optimization problem is not a box-constrained problem, the presence of complicating constraints cannot be handled by some of the global optimization solvers. In the literature, the majority of the developed methods deal with unconstrained optimization problems [5] or with box-constrained regions defined by upper and lower bounds of the input variables [32, 34–36]. However, in real systems, it is very common that the feasible region is defined by a set of linear or even nonlinear constraints which may or may not be known in advance. In [48], the authors consider black-box constraints; however, they treat each of the constraints as an additional response surface for which a surrogate model is built based on the obtained samples of the true function.

In this work, the feasibility of a point is quantified by one single value, which is called the feasibility function u (Problem 7) [28]. Previous work has dealt with black-box feasibility techniques [2–4, 9] which are used to approximate the feasible region, irrespective of shape, through the fitting of a single response surface to the value of the feasibility function. Specifically, the formulation of the feasibility analysis problem is given in Problem 7, where the scalar parameter u is defined as the feasibility function.

In Problem 7, the set of inequalities is a subset of the set of inequalities in Problem 1, for which the closed form is not known (black-box inequalities). A feasible operation can be attained at a specific location x of the investigated region when ψ(x) ≤ 0. There is a great advantage in employing black-box feasibility analysis as part of the surrogate-based optimization framework, namely, the fact that the number of initial simulations which are performed to form the surrogate response surface can be used to approximate the feasible region. Subsequently, not only the search for the next iterate sampling location is limited within the identified region, but also as the sampling set is iteratively expanded, the approximation of the feasible region is also updated.
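As an illustration of how a single value can summarize feasibility, the sketch below assumes the usual form from flexibility analysis, in which the feasibility function at a fixed x is the smallest u satisfying \( g_j(x) \le u \) for every inequality, that is, the largest constraint value. The two constraints are hypothetical and are written in the form \( g_j(x) \le 0 \).

```python
def feasibility_function(x, constraints):
    """Return psi(x) = max_j g_j(x); x is feasible when psi(x) <= 0."""
    return max(g(x) for g in constraints)

# Hypothetical inequality constraints, each feasible when g(x) <= 0.
constraints = [
    lambda x: x[0] + x[1] - 2.0,   # x1 + x2 <= 2
    lambda x: -x[0],               # x1 >= 0
]

inside = feasibility_function([0.5, 0.5], constraints)
outside = feasibility_function([2.0, 1.0], constraints)
print(inside, outside)   # negative inside the feasible region, positive outside
```

In the black-box setting the individual constraints are not available in closed form, so the value returned here would come from the simulation and a single surrogate would be fitted to it.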

Proposed Methodology

Methodology Steps

The outline of the steps of the method to solve the optimization problem described in Problem 1 is shown in Fig. 1; the details are explained below.

Step 1: Assuming that the chosen input variables are independent, an initial set of sample locations is designed based on a computer design of experiments and the necessary function evaluations are performed. The designs can be based on grid designs or on Latin hypercube sampling, which has satisfactory space-filling properties in high-dimensional spaces.

Step 2: If complicated black-box constraints are present in the problem, then black-box feasibility analysis is performed based on the collected samples and an approximation of the closed-form solutions of the unknown constraints is developed. Through black-box feasibility analysis, based on the initial sampling of the expensive simulation, a surrogate model is built to approximate the value of u within the investigated region, which allows the approximation of the feasible region and the formation of a closed-form equation for each of the inequalities.

Step 3: Parameter estimation of the surrogate model parameters is performed based on the collected data. Throughout this work, the modified or non-interpolating kriging methodology is used to develop an approximate metamodel for the objective as a function of the decision variables.

Step 4: The current iteration optimum (\( y_{\min} \)) is found using a rigorous optimization solver which can handle constraints by solving Problem 1, where f(x) and a subset of the constraints are approximated by the two kriging models built in steps 2 and 3.

Step 5: In this step, the SEI criterion is maximized based on the SKO figure of merit (Eq. 6), under the same constraints which are considered in step 4, using a constrained optimization technique since every function is now known in closed form. The constraint associated with the black-box feasibility is augmented by its corresponding kriging prediction error in order to ensure that the uncertainty of the predicted feasibility function is considered.

Step 6: If the value of the SEI criterion is less than a prespecified tolerance, the current location is used as the optimum. If it is larger than the tolerance, an additional simulation is run at the location which maximizes the SEI criterion and the procedure returns to step 2.
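The control flow of steps 1 to 6 can be sketched as a loop in which every numerical component is passed in as a callable. This is only a skeleton under stated assumptions: `simulate`, `fit_models`, `find_optimum`, and `maximize_sei` are hypothetical placeholders for the expensive simulation, the two kriging fits, the constrained solver of step 4, and the SEI maximization of step 5, none of which are implemented here.

```python
def surrogate_optimization(simulate, initial_design, fit_models,
                           find_optimum, maximize_sei, tol, max_iter=50):
    """Skeleton of steps 1-6; returns the last optimum and all samples."""
    # Step 1: run the expensive simulation at the initial design points.
    samples = [(x, simulate(x)) for x in initial_design]
    x_best, y_min = None, None
    for _ in range(max_iter):
        # Steps 2-3: (re)fit the feasibility and objective surrogates.
        models = fit_models(samples)
        # Step 4: current optimum of the surrogate problem.
        x_best, y_min = find_optimum(models)
        # Step 5: next candidate and its SEI value.
        x_new, sei = maximize_sei(models, y_min)
        # Step 6: stop if SEI is below the tolerance, otherwise sample again.
        if sei < tol:
            break
        samples.append((x_new, simulate(x_new)))
    return x_best, y_min, samples
```

The `max_iter` cap is an addition made for safety in this sketch; the procedure described above terminates on the SEI tolerance alone.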

Test Example

In order to illustrate the basic steps of the approach, a benchmark problem, namely, the two-dimensional Rosenbrock function, is treated as the unknown expensive response surface and is optimized. This problem is a common benchmark because its nonlinear form makes the global optimum difficult to locate for several optimization solvers. In order to simulate a heteroscedastic variance case, artificial random noise is added to the function based on a uniform distribution of range (−0.01, 0.01) for the region where \( x_2 \ge 1.5 \) and of range (−0.05, 0.05) in the remaining region (Fig. 2). A higher amount of noise has been added to the region where the optimum lies ([1,1]) in order to make this test problem more challenging. In this example, both \( \sigma_\varepsilon \) in Eq. 6 and the nugget parameter of the kriging correlation function are functions of the location x. Even though the effect of noise is not very noticeable in Fig. 2, mainly due to the range of the response surface values, this amount of noise has a significant effect on the identification of the true optimum, since many gradient-based optimization solvers fail to converge for this problem. Finally, a constraint is added to the problem, which limits the feasible region to the space defined by \( (x_1 - 1)^2 + (x_2 - 1)^2 \le 1 \) (Fig. 2). However, the form of this constraint is assumed to be unknown and will be approximated through black-box feasibility.
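The test problem described above can be written down as a short sketch. It assumes the standard Rosenbrock coefficients (1 and 100), which are consistent with the optimum at [1,1] but are not stated explicitly in the text. The function names are illustrative.

```python
import random

def rosenbrock(x1, x2):
    """Standard two-dimensional Rosenbrock function, minimum 0 at (1, 1)."""
    return (1.0 - x1) ** 2 + 100.0 * (x2 - x1 ** 2) ** 2

def noise_half_range(x2):
    """Half-width of the uniform noise: 0.01 where x2 >= 1.5, else 0.05."""
    return 0.01 if x2 >= 1.5 else 0.05

def noisy_rosenbrock(x1, x2, rng):
    """One stochastic evaluation with heteroscedastic uniform noise."""
    a = noise_half_range(x2)
    return rosenbrock(x1, x2) + rng.uniform(-a, a)

def constraint(x1, x2):
    """Circle constraint written as g(x) <= 0; treated as unknown by the method."""
    return (x1 - 1.0) ** 2 + (x2 - 1.0) ** 2 - 1.0

rng = random.Random(0)   # fixed seed so that repeated runs are reproducible
replicates = [noisy_rosenbrock(1.0, 1.0, rng) for _ in range(5)]
print(replicates)        # five different outputs for the same input
```

Repeated calls at the same location return different values, which is the behavior that the replicate sampling permitted by the SEI criterion is meant to address.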

The initial sampling was performed based on a 25-point Latin hypercube design. The algorithm converges after a total of 51 runs, resulting in a total of 176 necessary samples for the identification of the final obtained optimum of [0.98,0.95]. The deviation from the true optimum, given that the measurements are noisy, is considered acceptable. In Fig. 3, the expected improvement function is shown for the first three iterations, where it can be verified that one single optimum point is identified each time within the feasible region. In order to incorporate the effect of the uncertainty in the predicted feasible region, the kriging variance for the feasibility function is added to u, such that the feasible region is initially underestimated; this estimate gradually improves as more samples are collected and the kriging model for the feasibility function is improved in regions of interest. In this way, the chance of remaining within the feasible region at all times is increased. Finally, in Fig. 2b, the final predicted response surface along with the total number of samples is shown, where it can be observed that the predicted surface is not accurate within the entire investigated region. This result is expected since the sampling has been restricted to a smaller region of the space which has a high potential to be optimal. The goal of this work was not to reproduce an accurate surface of the true function but to locate the global optimum with a minimum number of calls to the expensive simulator.
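A Latin hypercube design such as the 25-point design mentioned above can be generated with a few lines of code. The sketch below is a basic, unoptimized version; the variable bounds are hypothetical, since the sampled range is not stated in the text, and the actual design used in this work may have been generated differently.

```python
import random

def latin_hypercube(n_points, bounds, rng):
    """Basic Latin hypercube: each input range is cut into n_points equal
    strata and every stratum contains exactly one sample.

    bounds is a list of (low, high) pairs, one per input variable.
    """
    columns = []
    for low, high in bounds:
        width = (high - low) / n_points
        # One random location inside each stratum of this input.
        column = [low + (k + rng.random()) * width for k in range(n_points)]
        rng.shuffle(column)   # pair strata randomly across inputs
        columns.append(column)
    return [list(point) for point in zip(*columns)]

# Hypothetical bounds for the two inputs of the test problem.
design = latin_hypercube(25, [(-2.0, 2.0), (-2.0, 2.0)], random.Random(0))
print(len(design), design[0])
```

Unlike a 5 × 5 grid with the same number of points, this design places 25 distinct levels along each input.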

Case Study: Continuous Tablet Manufacturing

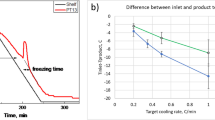

The expensive computer simulation in this application is a flowsheet simulation of a continuous tablet manufacturing process including powder feeders, mixers, hoppers, and a tablet press (Fig. 4). Specifically, the feeding system is modeled as a delay differential equation based on dynamic experiments performed to characterize the dynamics of the process under different operating conditions. Powder blending is described by a two-dimensional population balance model, which is the main cause of the high computational burden of the process simulation [8, 52, 53]. The model captures the effect of the mixer rotation speed on the mean residence time of the material as well as on the relative standard deviation (RSD) of the API concentration in the blender. Powder hoppers are modeled as buffer tanks, within which a mass flow regime and no further mixing are assumed. Finally, the tablet press is described by a mass balance between the powder entering the feed frame and the tablet production rate, assuming there is no loss of powder throughout the process. In addition, a widely accepted empirical correlation, the Heckel equation, is used to correlate the compaction pressure of the tablet press to the porosity of the produced tablets [29].
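For reference, the Heckel equation in its usual form relates the relative density D of the compact to the applied pressure P through \( \ln(1/(1 - D)) = kP + A \); since porosity equals \( 1 - D \), this gives porosity \( = \exp(-(kP + A)) \). The sketch below evaluates this relation. The parameter values are hypothetical, and the flowsheet model may use a variant of the equation.

```python
import math

def heckel_porosity(pressure, k, a):
    """Porosity from the Heckel relation ln(1 / porosity) = k * pressure + a."""
    return math.exp(-(k * pressure + a))

# Hypothetical Heckel parameters (k in 1/MPa, a dimensionless).
k, a = 0.01, 0.5
for pressure in (50.0, 100.0, 200.0):   # compaction pressure in MPa
    print(pressure, heckel_porosity(pressure, k, a))
```

Porosity decreases monotonically with compaction pressure under this relation, which is why the compaction pressure acts as the main handle on tablet porosity.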

In this case study, three main ingredients (API, excipient, and lubricant) are fed through individual powder feeders to the mixer within which they should be well blended in order to produce tablets of consistent API concentration, weight, porosity, and hardness. Specifically, the RSD of the API concentration calculated across the different locations of the mixing model is a critical parameter which quantifies mixing uniformity [56]. Process operating conditions, such as the mixing rotation rate as well as the amount of additives, such as the lubricant, affect the RSD as well as tablet properties, such as tablet hardness [20, 54]. Specifically, experimental studies have shown that there is a strong correlation between the RSD and the amount of lubricant concentration in the mixture, and this is incorporated in the flowsheet simulation as an additional empirical correlation. Another critical product quality is tablet porosity, which is a function of operating conditions within the tablet press, such as the compaction pressure. More details about the model can be found in Boukouvala et al. [10].
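The RSD used above as a measure of mixing uniformity is the standard deviation of the API concentration divided by its mean. A minimal sketch follows; the use of the sample standard deviation (n − 1 denominator) and the concentration values are assumptions made for illustration.

```python
import statistics

def relative_standard_deviation(concentrations):
    """RSD = sample standard deviation / mean of the API concentrations."""
    mean = statistics.mean(concentrations)
    return statistics.stdev(concentrations) / mean

# Hypothetical API mass fractions at different locations in the mixer.
well_mixed = [0.100, 0.101, 0.099, 0.100]
poorly_mixed = [0.080, 0.120, 0.095, 0.105]
print(relative_standard_deviation(well_mixed))
print(relative_standard_deviation(poorly_mixed))
```

A lower RSD indicates a more uniform blend, so the poorly mixed example returns the larger value.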

In order to operate this current integrated process continuously over a long period of time, the powder feeders must be refilled with material according to the formulation of the mixture and the total throughput of the process. This procedure is performed manually and has been shown to introduce a perturbation in the system, which is unavoidable given the current process specifications. In fact, at the time point in which one feeder is refilled, the output feed rate of this process instantaneously increases (pulse), introducing a large amount of material in the mixer which subsequently creates variability in the desired formulation. Subsequently, since the process is implemented in open loop, these perturbations are shown to propagate and upset the quality of a fraction of the production for a certain amount of time. The results obtained from this integrated simulation verify experimental observations regarding the effect of possible feeder refilling perturbations to the performance of downstream processes. However, it is also observed that refilling more often, before the amount of the material in the feeder has almost emptied, causes a less substantial perturbation [21]. In other words, the refilling strategy should be optimized in order to identify the refilling frequency, which leads to optimal performance of the open-loop tablet manufacturing line. It should be mentioned at this point that, as the research on process knowledge and monitoring for continuous powder-based pharmaceutical manufacturing evolves, ideally more advanced systems will be the subject of optimization, in which advanced control schemes will be incorporated. However, the goal of this work was to prove that pharmaceutical tablets may be produced continuously instead of in batch mode, faster and more efficiently, with the current process equipment available.

In order to make the flowsheet simulation more realistic, additional significant sources of variability can be incorporated, such as model parameter uncertainty and powder material property variability. This implies that, for each call to the flowsheet simulation, the values of the chosen uncertain parameters are randomly drawn from a known distribution, which leads to a slightly different output for the same set of decision variable values. The significant uncertain parameters are selected based on a prior extensive sensitivity analysis (SA) study [10] of the flowsheet, and they include material properties such as particle size distribution and bulk density of the incoming raw powder material as well as various model parameters. That SA study simulated 2,048 scenarios of the simulation model designed using Latin hypercube sampling, for each of which the uncertain input parameters were drawn from their corresponding distributions. The collected database aids in assessing how input uncertainty propagates to the output values that are critical to the objective function. The results from this SA study are not required by the optimization algorithm, but they are used to obtain more realistic estimates of the actual variability contained in the response, which is obtained from the simulation as a function of the different dimensions. This information is important to the modifications made to the surrogate response surface building step and the infill sampling criterion step.

For the implementation of the proposed methodology described in “Proposed Methodology,” MATLAB is interfaced with gPROMS through the go:Matlab interface in order to enable the automatic simulation of the flowsheet model at different design points.

Definition of the Optimization Objective

The current integrated platform can be used to produce tablets continuously achieving higher production rates and faster time to market. However, the produced tablets must satisfy strict quality specifications, which implies that the optimization problem will include product design inequality constraints. In order to assess the applicability and potential economic benefits of producing pharmaceutical tablets in continuous mode using the current integrated platform, an optimization problem which includes the cost of operation of a 24-h continuous operation based on the current flowsheet is developed. A more comprehensive comparison of economic efficiency of continuous and batch pharmaceutical manufacturing is presented in [49], where the benefits of continuous manufacturing are clearly established for a different flowsheet configuration.

The objective function is given as the sum of operating cost, equipment cost, and cost of waste caused by the discarded off-spec tablets (Eq. 8).

Equipment cost is approximated through power-law empirical correlations [51] which connect process flow streams to the required process sizes (Table 1).
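The cost structure described above can be sketched in a few lines. This is only an illustration under stated assumptions: the function names, the unit names, and every coefficient below are hypothetical placeholders, not the values of Table 1 or Eq. 8, and the operating cost is passed in as a precomputed number.

```python
def equipment_cost(flow_rate, coeff, exponent):
    """Power-law sizing correlation: cost = coeff * flow_rate ** exponent."""
    return coeff * flow_rate ** exponent


def total_cost(operating_cost, unit_flows, unit_params, n_offspec, cost_per_tablet):
    """Sum of operating cost, equipment cost, and cost of discarded tablets."""
    equipment = sum(
        equipment_cost(flow, *unit_params[name]) for name, flow in unit_flows.items()
    )
    waste = n_offspec * cost_per_tablet
    return operating_cost + equipment + waste


# Hypothetical numbers, chosen only to exercise the functions.
params = {"feeder": (1000.0, 0.6), "mixer": (5000.0, 0.5)}  # (coeff, exponent)
flows = {"feeder": 50.0, "mixer": 50.0}                     # stream flow per unit
cost = total_cost(2.0e5, flows, params, n_offspec=10_000, cost_per_tablet=0.05)
```

Keeping the three terms separate makes it easy to see which of them a given decision variable acts on: throughput enters through the equipment term, while the refilling strategy acts mainly through the waste term.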

Operating cost is the sum of the consumed raw material cost, calculated from the market cost of the individual materials used, and the utility cost (i.e., electricity). The cost of labor is not included in this study; however, compared to batch, a continuous automated operation will in general require less human intervention. The decision variables are shown in Table 2 and include critical process operating conditions, refilling frequency, lubricant concentration, and the total material throughput. All of the decision variables have lower and upper bounds which are set based on the currently available process specifications. It should be noted that, initially, a thorough analysis of all the possible inputs to the objective function from all the individual unit operations was performed in order to identify the significant decision variables which critically affect the value of the objective function. Specifically, a large sensitivity analysis study on the flowsheet model, which included uncertain inputs from various units of the model and their effect on critical outputs of the process flowsheet, yielded the chosen decision variables for this optimization problem [10]. In addition, certain input variables which do affect the objective or constraints of the problem, but are solely involved in simple algebraic equations which do not significantly increase the computational cost of the simulation, are not included as inputs to the surrogate response surface. Including them would increase the dimensionality of the kriging model and therefore the number of samples needed to reach the same model accuracy.

In addition, a set of complicated process constraints which involve final product quality specifications for the tablet concentration, hardness, porosity, and dissolution time must be satisfied at steady state (Eq. 10).

During a possible feeder refill, a perturbation is introduced into the system and the mixture composition is directly affected. Through the tracking of the powder and its mean residence time within each process, this perturbation is transported downstream, causing the product quality constraints to be violated for a specific time interval. This implies that the tablets produced during this interval will be discarded and, thus, the cost of waste is no longer equal to zero until steady state is reached again. Finally, during a time period of 24 h of operation, a minimum required production must be achieved (\(10^{6}\) tablets), which is assumed to be the minimum number of tablets necessary to satisfy product demand.
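The bookkeeping implied by this paragraph can be illustrated with a short sketch. It assumes the simulation returns the tablet API fraction on a fixed time grid; the function name, the specification limits, the tablet counts, and the demand threshold are all hypothetical and serve only to show how a refill pulse turns into discarded product.

```python
def count_tablets(concentration, lower, upper, tablets_per_step):
    """Split production into in-spec and off-spec (discarded) tablets.

    concentration: API mass fraction at each time step of the simulated run.
    """
    good = sum(tablets_per_step for c in concentration if lower <= c <= upper)
    total = tablets_per_step * len(concentration)
    return good, total - good


# Hypothetical trace: steady 3 % API with a refill pulse over three time steps.
trace = [0.030] * 5 + [0.036, 0.034, 0.032] + [0.030] * 4
good, discarded = count_tablets(trace, lower=0.0285, upper=0.0315, tablets_per_step=100)
meets_demand = good >= 800  # hypothetical minimum production requirement
```

In this toy trace the three perturbed steps are discarded, and the remaining production is then checked against the demand constraint.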

In order to incorporate the effects of uncertainty caused by material property variations and model parameter uncertainty, the mean particle size of the three materials is drawn from their actual particle size distribution data; the input bulk density of the materials is also drawn from uniform distributions with upper and lower bounds defined by manufacturer specifications. Based on the results of prior sensitivity analysis, these two parameters have an effect on two of the decision variables of the simulated system: the total flow rate and MgSt concentration. The remaining decision variables are controllable by the user, thus do not contribute toward the final variability of the objective function. The significant model parameters which also contribute toward the final output variability are the Heckel model parameters as well as the tuned dynamic response parameters of the feeder models.
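A minimal sketch of this sampling step is given below. It assumes that drawing from the measured particle size data means resampling the recorded values and that the bulk density is uniform between the manufacturer limits; `noisy_simulation` is a hypothetical stand-in for one call to the flowsheet, and all numbers are illustrative.

```python
import random


def draw_uncertain_inputs(rng, particle_sizes, density_bounds):
    """One realization of the uncertain material properties.

    particle_sizes: measured sizes for one material (empirical distribution).
    density_bounds: (lower, upper) manufacturer limits for bulk density.
    """
    mean_size = rng.choice(particle_sizes)       # resample the measured data
    bulk_density = rng.uniform(*density_bounds)  # uniform between the limits
    return mean_size, bulk_density


def noisy_simulation(x, rng):
    """Hypothetical stand-in for one call to the expensive flowsheet."""
    size, density = draw_uncertain_inputs(rng, [90.0, 100.0, 110.0], (0.30, 0.40))
    return sum(v * v for v in x) + 0.01 * size * density


rng = random.Random(0)
x = [1.0, 2.0]  # the same decision variables for every call
outputs = [noisy_simulation(x, rng) for _ in range(20)]
mean = sum(outputs) / len(outputs)
variance = sum((y - mean) ** 2 for y in outputs) / (len(outputs) - 1)
```

Repeating the call at a fixed `x` gives a sample variance of the response, which is the kind of information a noise-aware surrogate needs at each design point.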

Results

Since the number of decision variables is N = 7, a total of 77 design points is chosen initially, and a Latin hypercube algorithm is used to identify the sampling locations based on a max–min distance criterion. This number follows the empirical rule of thumb of approximately 10N samples for an N-dimensional space. Based on these samples, the kriging model is built correlating the set of seven inputs to the value of the calculated objective function (total cost of a 24-h operation). In addition, three of the black-box inequalities of Problem 9 are used to form the feasibility problem; the exception is tablet porosity, which is solely affected by tablet compaction pressure through an exponential algebraic equation, so this equation is used as is in the optimization problem. This direct correlation is confirmed by the SA work, which has verified that the only factor which affects porosity is compaction pressure.
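The initial design can be sketched as follows. This is one simple way to realize a max–min Latin hypercube (generate several random Latin hypercubes and keep the one whose closest pair of points is farthest apart); the paper does not state which algorithm was used, so the candidate-search strategy, function names, and `n_candidates` value are assumptions.

```python
import random


def latin_hypercube(n_points, n_dims, rng):
    """Random Latin hypercube in [0, 1]^n_dims: one point per stratum per axis."""
    columns = []
    for _ in range(n_dims):
        strata = list(range(n_points))
        rng.shuffle(strata)
        columns.append([(s + rng.random()) / n_points for s in strata])
    return [tuple(col[i] for col in columns) for i in range(n_points)]


def min_distance(points):
    """Smallest Euclidean distance between any two points of the design."""
    return min(
        sum((a - b) ** 2 for a, b in zip(p, q)) ** 0.5
        for i, p in enumerate(points)
        for q in points[i + 1:]
    )


def maximin_lhs(n_points, n_dims, n_candidates=50, seed=0):
    """Keep the candidate design whose closest pair of points is farthest apart."""
    rng = random.Random(seed)
    candidates = [latin_hypercube(n_points, n_dims, rng) for _ in range(n_candidates)]
    return max(candidates, key=min_distance)


design = maximin_lhs(n_points=77, n_dims=7)  # unit cube; rescale to variable bounds
```

Each column of `design` would then be scaled linearly to the lower and upper bounds of the corresponding decision variable before the simulations are run.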

The desired formulation is set to 3 % of API (acetaminophen), 96 % of excipient (Avicel), and 1 % of lubricant (magnesium stearate). The algorithm requires a total of 200 iterations to converge to the optimum, where the value of the objective function is $865,131. The total time required for the entire procedure, including the initial 77 simulations for the first surrogate mapping and the iterative optimization of the kriging models and of the stochastic EI criterion until convergence, is on the order of 24 h.
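For readers unfamiliar with the EI criterion mentioned here, the sketch below gives the standard deterministic expected improvement for a minimization problem, in the form popularized by Jones et al. It is not the stochastic variant used in this work, whose modifications are described in the methodology section; the function and argument names are illustrative.

```python
import math


def expected_improvement(y_pred, s_pred, y_best):
    """Standard expected improvement for minimization.

    y_pred: surrogate prediction at a candidate point.
    s_pred: prediction standard error at that point.
    y_best: best (lowest) objective value sampled so far.
    """
    if s_pred <= 0.0:
        return 0.0  # no predictive uncertainty, so no expected gain
    z = (y_best - y_pred) / s_pred
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))          # standard normal CDF
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)   # standard normal PDF
    return (y_best - y_pred) * cdf + s_pred * pdf
```

At each iteration the next simulation would be placed where this quantity is largest, which balances a low predicted cost against a high prediction uncertainty.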

At the identified optimum location, the values of the decision variables are given in Table 3. The most important result of this simulation-based optimization approach is the identification of a refilling strategy: if the feeders are refilled when the amount of material present in each feeder is 54 % of the total capacity and the line is operated at a total throughput of 54 kg/h of powder, the minimum production requirement of tablets is reached and the total cost of production is minimized. The problem of feeder refilling is a critical issue in the industry since it has been found to cause unavoidable perturbations in the system, which can be filtered out only to a certain extent by downstream processes. Thus, identifying an optimal refilling strategy for a specific production throughput and product in order to minimize the off-spec product is an important result. In addition, as expected based on experimental and mechanistic knowledge of the mixing performance, the optimal operating value of the mixing rotation rate is found to be approximately 100 rpm. This is in agreement with experimental evidence suggesting that at mid-range rotation rates, the mixing performance is optimal due to the balancing of the opposing effects of radial dispersion and axial movement of the particles [56]. The mixing rotation rate is a critical variable in the process of direct compaction, where there is no further particle modification process downstream until tabletting, since it controls the mixture’s variability, which is directly related to the variability of the composition of the final tablets. Finally, based on the identified total throughput, the processes can be sized based on the correlations of Table 1. In the chemical industry, the preliminary process design includes the sizing of the equipment which will be used in the manufacturing process [6]. However, in the pharmaceutical industry, there is currently no flexibility in the availability of different-sized equipment. Despite this fact, the purpose of this work was to show that a large enough production can be achieved through continuous integration of small-scale equipment. In fact, this result is very encouraging since the currently existing large-scale batch processes are often inefficient due to the large within-batch variability, which hinders the implementation of effective control. Moreover, an equivalent production of tablets through batch processing requires much more time since each processing step is performed sequentially, after which the powder is stored and perhaps transferred to the next process. This procedure of sequential and large-scale batch production not only increases time to market but also risks contamination and quality deterioration [44]. Finally, even though only a 24-h operation is simulated in this case study, it should be mentioned that the current continuous platform can be operated for a much longer time period, where the advantages of this mode of operation compared to batch processing will become even more apparent.

Moreover, because this work incorporates black-box feasibility analysis, a single surrogate model for the feasibility of the integrated process becomes available. Consequently, one of the advantages of this approach is the fact that a closed-form expression for the design space of this multidimensional process is available and can be used to predict whether any combination of process conditions is expected to be within specifications. However, it should also be realized that the accuracy of the feasibility prediction is dependent on the density of sampling across the investigated space. Thus, if the samples collected during the optimization iterations are clustered within specific regions of the input space, the prediction of feasibility is expected to be more accurate locally. Due to the initial global sampling stage of the method, however, it can be ensured that a representative sample has been drawn from the entire input space. A set of three-dimensional plots of the design space predicted by the kriging response surface is shown in Fig. 5.
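The idea of a single feasibility measure can be sketched as the largest constraint violation over all quality specifications, so that a non-positive value means the operating point is predicted to be within specifications. The scaling by the specification range, the attribute names, and the limits below are assumptions made for illustration; in the paper the responses would come from the surrogate rather than being typed in.

```python
def feasibility(responses, bounds):
    """Largest scaled constraint violation; a value <= 0 means within specs.

    responses: predicted quality attributes, e.g. from surrogate models.
    bounds: matching (lower, upper) specification limits.
    """
    violations = []
    for name, value in responses.items():
        lower, upper = bounds[name]
        span = upper - lower
        violations.append(max(lower - value, value - upper) / span)
    return max(violations)


# Hypothetical specification limits and predictions, for illustration only.
bounds = {"api_fraction": (0.0285, 0.0315), "hardness": (5.0, 9.0)}
inside = feasibility({"api_fraction": 0.030, "hardness": 7.0}, bounds)
outside = feasibility({"api_fraction": 0.033, "hardness": 7.0}, bounds)
```

Collapsing several inequalities into one scalar is what allows a single response surface to describe the boundary of the design space.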

Conclusions and Perspectives

The present work aims at the solution of a very complex problem, namely, the simultaneous process and product optimization of continuous pharmaceutical tablet manufacturing, but also intends to introduce concepts of the simulation-based optimization literature to the pharmaceutical manufacturing audience. The process optimization techniques described in this work are, to this point, less popular in the pharmaceutical industry sector; however, this study aims to show that simulation-based optimization techniques can have significant applications toward designing feasible processes which will produce consistent pharmaceutical products. The methodology used in this work integrates well-known concepts of the simulation-based optimization literature with concepts of black-box feasibility and heteroscedastic data regression in order to optimize a stochastic-constrained process which is computationally expensive. The presence of stochastic effects coupled with complex complicating constraints is the major challenge of the developed pharmaceutical process flowsheet, which current optimization methods cannot handle efficiently.

It is recognized that pharmaceutical process flowsheeting is still at a preliminary stage, and there exist far more correlations and effects which are not yet captured by the simulation model. Consequently, these effects cannot influence the final optimization objective. For example, the current process flowsheet does not capture all the probable sources of variability of the process which will affect RSD during the dynamic operation of the continuous platform, such as batch-to-batch variability or multiple material property effects related to flowability and cohesiveness. Nevertheless, this work aims to propose the platform which will be used in the future for the optimization of such models as they are refined to include more sources of variability. In addition, future work will focus on the refinement of the optimization objectives, which will be facilitated by ongoing work toward the implementation of continuous pharmaceutical manufacturing processes. As the complexity of the underlying flowsheet increases, the justification for using a surrogate simulation-based optimization approach becomes stronger; however, there are still many challenges for the establishment of a robust methodology which can handle black-box constraints and noise for the optimization of higher dimensional problems.

References

Baldi Antognini A, Zagoraiou M. Exact optimal designs for computer experiments via kriging metamodelling. J Stat Plan Infer. 2010;140(9):2607–17.

Banerjee I, Ierapetritou MG. Design optimization under parameter uncertainty for general black-box models. Ind Eng Chem Res. 2002;41(26):6687–97.

Banerjee I, Ierapetritou MG. Feasibility evaluation of nonconvex systems using shape reconstruction techniques. Ind Eng Chem Res. 2005;44(10):3638–47.

Banerjee I, Pal S, et al. Computationally efficient black-box modeling for feasibility analysis. Comput Chem Eng. 2010;34(9):1515–21.

Bertsimas D, Nohadani O, et al. Robust optimization for unconstrained simulation-based problems. Oper Res. 2010;58(1):161–78.

Biegler LT, Grossmann IE, et al. Systematic methods of chemical process design. Upper Saddle River: Prentice Hall; 1997.

Booker AJ, Dennis JE, et al. A rigorous framework for optimization of expensive functions by surrogates. Struct Multidiscip Optim. 1999;17(1):1–13.

Boukouvala F, Dubey A, et al. Computational approaches for studying the granular dynamics of continuous blending processes, 2-population balance and data-based methods. Macromol Mater Eng. 2012;297(1):9–19.

Boukouvala F, Ierapetritou MG. Feasibility analysis of black-box processes using an adaptive sampling kriging-based method. Comput Chem Eng. 2012;36:358–68.

Boukouvala F, Niotis V, et al. An integrated approach for dynamic flowsheet modeling and sensitivity analysis of a continuous tablet manufacturing process. Comput Chem Eng. 2012;42:30–47.

Boukouvala F, et al. Computer aided design and analysis of continuous pharmaceutical manufacturing processes. In: Computer aided chemical engineering. Elsevier; 2011;29:216–20.

Box GEP, Wilson KB. On the experimental attainment of optimum conditions. J R Stat Soc B (Methodological). 1951;13(1):1–45.

Caballero JA, Grossmann IE. An algorithm for the use of surrogate models in modular flowsheet optimization. AICHE J. 2008;54(10):2633–50.

Crary SB. Design of computer experiments for metamodel generation. Analog Integr Circ Sig Process. 2002;32(1):7–16.

Cressie N. Statistics for spatial data (Wiley series in probability and statistics). Wiley-Interscience; 1993.

Davis E, Ierapetritou M. A kriging method for the solution of nonlinear programs with black-box functions. AICHE J. 2007;53(8):2001–12.

Davis E, Ierapetritou M. A kriging-based approach to MINLP containing black-box models and noise. Ind Eng Chem Res. 2008;47(16):6101–25.

Davis E, Ierapetritou M. A kriging based method for the solution of mixed-integer nonlinear programs containing black-box functions. J Glob Optim. 2009;43(2–3):191–205.

Davis E, Ierapetritou M. A centroid-based sampling strategy for kriging global modeling and optimization. AICHE J. 2010;56(1):220–40.

Dec RT, Zavaliangos A, et al. Comparison of various modeling methods for analysis of powder compaction in roller press. Powder Technol. 2003;130(1–3):265–71.

Engisch W, Muzzio FJ. Hopper refill of loss-in-weight feeding equipment. AIChE Annual Conference, Salt Lake City; 2010.

Forrester AIJ, Sóbester A, et al. Engineering design via surrogate modeling: a practical guide. New York: Wiley; 2008.

Fowler K, Jenkins E, et al. Understanding the effects of polymer extrusion filter layering configurations using simulation-based optimization. Optim Eng. 2010;11(2):339–54.

Fu MC. Feature article: optimization for simulation: theory vs. practice. INFORMS J Comput. 2002;14(3):192–215.

Gernaey KV, Cervera-Padrell AE, et al. A perspective on PSE in pharmaceutical process development and innovation. Comput Chem Eng. 2012;42:15–29.

Gernaey KV, Gani R. A model-based systems approach to pharmaceutical product-process design and analysis. Chem Eng Sci. 2010;65(21):5757–69.

Gruhn G, Werther J, et al. Flowsheeting of solids processes for energy saving and pollution reduction. J Clean Prod. 2004;12(2):147–51.

Halemane KP, Grossmann IE. Optimal process design under uncertainty. AICHE J. 1983;29(3):425–33.

Heckel RW. Density–pressure relationships in powder compaction. Trans Metall Soc AIME. 1961;221(4):671–5.

Horowitz B, Guimarães LJDN, et al. A concurrent efficient global optimization algorithm applied to polymer injection strategies. J Pet Sci Eng. 2010;71(3–4):195–204.

Huang D. Experimental planning and sequential kriging optimization using variable fidelity data. Industrial and Systems Engineering, Ohio State University, Ohio; 2005.

Huang D, Allen T, et al. Global optimization of stochastic black-box systems via sequential kriging meta-models. J Glob Optim. 2006;34(3):441–66.

Husain A, Kim K-Y. Enhanced multi-objective optimization of a microchannel heat sink through evolutionary algorithm coupled with multiple surrogate models. Appl Therm Eng. 2010;30(13):1683–91.

Jakobsson S, Patriksson M, et al. A method for simulation based optimization using radial basis functions. Optim Eng. 2010;11(4):501–32.

Jones DR. A taxonomy of global optimization methods based on response surfaces. J Glob Optim. 2001;21(4):345–83.

Jones DR, Schonlau M, et al. Efficient global optimization of expensive black-box functions. J Glob Optim. 1998;13(4):455–92.

Kleijnen JPC. Kriging metamodeling in simulation: a review. Eur J Oper Res. 2009;192(3):707–16.

Leuenberger H. New trends in the production of pharmaceutical granules: batch versus continuous processing. Eur J Pharm Biopharm. 2001;52(3):289–96.

McKenzie P, Kiang S, et al. Can pharmaceutical process development become high tech? AICHE J. 2006;52(12):3990–4.

Ng KM. Design and development of solids processes—a process systems engineering perspective. Powder Technol. 2002;126(3):205–10.

Nunnally BK, McConnell JS. Six sigma in the pharmaceutical industry: understanding, reducing, and controlling variation in pharmaceuticals and biologics. Boca Raton: CRC; 2007.

Pedone P, Romano D, Vicario G. New Sampling Procedures in coordinate metrology based on kriging-based adaptive designs. Statistics for Innovation. 2009;103–21.

Pistone G, Vicario G. Design for computer experiments: comparing and generating designs in kriging models. Statistics for Innovation. 2009;91–102.

Plumb K. Continuous processing in the pharmaceutical industry: changing the mind set. Chem Eng Res Des. 2005;83(6):730–8.

Queipo NV, Haftka RT, et al. Surrogate-based analysis and optimization. Prog Aerosp Sci. 2005;41(1):1–28.

Regis RG. Stochastic radial basis function algorithms for large-scale optimization involving expensive black-box objective and constraint functions. Comput Oper Res. 2011;38(5):837–53.

Sacks J, Welch WJ, et al. Design and analysis of computer experiments. Stat Sci. 1989;4(4):409–23.

Sankaran S, Audet C, et al. A method for stochastic constrained optimization using derivative-free surrogate pattern search and collocation. J Comput Phys. 2010;229(12):4664–82.

Schaber SD, Gerogiorgis DI, et al. Economic analysis of integrated continuous and batch pharmaceutical manufacturing: a case study. Ind Eng Chem Res. 2011;50(17):10083–92.

Schonlau M, Welch W. Screening the input variables to a computer model via analysis of variance and visualization. Screening. 2006;308–27.

Seider WD. Product and process design principles: synthesis, analysis, and evaluation. Hoboken: Wiley; 2009.

Sen M, Ramachandran R. A multi-dimensional population balance model approach to continuous powder mixing processes. Adv Powder Technol. 2013;24(1):51–9.

Sen M, Singh R, et al. Multi-dimensional population balance modeling and experimental validation of continuous powder mixing processes. Chem Eng Sci. 2012;80:349–60.

Soh JLP, Wang F, et al. Utility of multivariate analysis in modeling the effects of raw material properties and operating parameters on granule and ribbon properties prepared in roller compaction. Drug Dev Ind Pharm. 2008;34(10):1022–35.

Stephanopoulos G, Reklaitis GV. Process systems engineering: from Solvay to modern bio- and nanotechnology. A history of development, successes and prospects for the future. Chem Eng Sci. 2011;66(19):4272–306.

Vanarase AU, Alcala M, et al. Real-time monitoring of drug concentration in a continuous powder mixing process using NIR spectroscopy. Chem Eng Sci. 2010;65(21):5728–33.

Villemonteix J, Vazquez E, et al. Global optimization of expensive-to-evaluate functions: an empirical comparison of two sampling criteria. J Glob Optim. 2009;43(2):373–89.

Villemonteix J, Vazquez E, et al. An informational approach to the global optimization of expensive-to-evaluate functions. J Glob Optim. 2009;44(4):509–34.

Wan X, Pekny JF, et al. Simulation-based optimization with surrogate models—application to supply chain management. Comput Chem Eng. 2005;29(6):1317–28.

Werther J, Reimers C, et al. Flowsheet simulation of solids processes—data reconciliation and adjustment of model parameters. Chem Eng Process. 2008;47(1):138–58.

Werther J, Reimers C, et al. Design specifications in the flowsheet simulation of complex solids processes. Powder Technol. 2009;191(3):260–71.

Yin J, Ng SH, et al. Kriging metamodel with modified nugget-effect: the heteroscedastic variance case. Comput Ind Eng. 2011;61(3):760–77.

Yu L. Pharmaceutical quality by design: product and process development, understanding, and control. Pharm Res. 2008;25(4):781–91.

Yuan J, Wang K, et al. Reliable multi-objective optimization of high-speed WEDM process based on Gaussian process regression. Int J Mach Tools Manuf. 2008;48(1):47–60.

Acknowledgments

The authors would like to acknowledge the funding provided by the ERC (NSF-0504497, NSF-ECC 0540855).

Appendix

A. Deterministic Kriging Modeling

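For a set of m observations in an n-dimensional space, the collected samples are \( \mathbf{X}={{\left\{ {{\mathbf{x}}^{(1)}},\ldots ,{{\mathbf{x}}^{(m)}} \right\}}^T} \) and the response measured at these points is \( \mathbf{Y}={{\left\{ Y\left( {{\mathbf{x}}^{(1)}} \right),\ldots ,Y\left( {{\mathbf{x}}^{(m)}} \right) \right\}}^T} \).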

Consider the correlation matrix between all observed data points (m).

$$ \mathbf{R}=\begin{bmatrix} \operatorname{cor}\left[ Y\left( \mathbf{x}^{(1)} \right),Y\left( \mathbf{x}^{(1)} \right) \right] & \ldots & \operatorname{cor}\left[ Y\left( \mathbf{x}^{(1)} \right),Y\left( \mathbf{x}^{(m)} \right) \right] \\ \vdots & \ddots & \vdots \\ \operatorname{cor}\left[ Y\left( \mathbf{x}^{(m)} \right),Y\left( \mathbf{x}^{(1)} \right) \right] & \ldots & \operatorname{cor}\left[ Y\left( \mathbf{x}^{(m)} \right),Y\left( \mathbf{x}^{(m)} \right) \right] \end{bmatrix}=\begin{bmatrix} 1 & \ldots & \operatorname{cor}\left[ Y\left( \mathbf{x}^{(1)} \right),Y\left( \mathbf{x}^{(m)} \right) \right] \\ \vdots & \ddots & \vdots \\ \operatorname{cor}\left[ Y\left( \mathbf{x}^{(m)} \right),Y\left( \mathbf{x}^{(1)} \right) \right] & \ldots & 1 \end{bmatrix} $$

By definition, the covariance matrix will be equal to

$$ \operatorname{Cov}\left( \mathbf{Y},\mathbf{Y} \right)={\sigma^2}\mathbf{R} \tag{11} $$

where \( {\sigma^2} \) is the variance of the data. Any two points are correlated based on the chosen basis function (i.e., Eq. 12).

$$ \operatorname{cor}\left[ Y\left( \mathbf{x}^{(i)} \right),Y\left( \mathbf{x}^{(j)} \right) \right]=\exp \left( -\sum\limits_{k=1}^n {\theta_k}{\left| x_k^{(i)}-x_k^{(j)} \right|}^{p_k} \right) \tag{12} $$

Based on a set of observed data, in order to build a kriging model, it is required to minimize the error between the observed response Y and the predicted kriging response. This can be expressed as maximizing the likelihood of Y, which is given in Eq. 13.

$$ L=\frac{1}{{\left( 2\pi {\sigma^2} \right)}^{m/2}\sqrt{\left| \mathbf{R} \right|}}\exp \left[ -\frac{{\left( \mathbf{y}-\mathbf{1}\mu \right)}^T{\mathbf{R}}^{-1}\left( \mathbf{y}-\mathbf{1}\mu \right)}{2{\sigma^2}} \right] \tag{13} $$

which gives the maximum-likelihood estimators of the mean (\( \widehat{\mu} \)) and variance (\( {{\widehat{\sigma}}^2} \)) of the observed data for the optimum parameters θ and p.

$$ \begin{aligned} \widehat{\mu} &= \frac{{\mathbf{1}}^T{\mathbf{R}}^{-1}\mathbf{y}}{{\mathbf{1}}^T{\mathbf{R}}^{-1}\mathbf{1}} \\ {\widehat{\sigma}}^2 &= \frac{{\left( \mathbf{y}-\mathbf{1}\widehat{\mu} \right)}^T{\mathbf{R}}^{-1}\left( \mathbf{y}-\mathbf{1}\widehat{\mu} \right)}{m} \end{aligned} \tag{14} $$

For any new point, the objective is to maximize the likelihood of the sampled data and the prediction, given the parameters obtained by the model construction step. For this purpose, the correlation matrix is augmented by the correlation between the sampled points and the new point (r), which has unknown Y.

$$ {\mathbf{R}}^{(\mathrm{aug})}=\begin{bmatrix} \mathbf{R} & \mathbf{r} \\ {\mathbf{r}}^T & 1 \end{bmatrix} \tag{15} $$

where

$$ \mathbf{r}=\begin{bmatrix} \operatorname{cor}\left( Y\left( \mathbf{x}^{(1)} \right),Y\left( \mathbf{x}^{(\mathrm{new})} \right) \right) \\ \vdots \\ \operatorname{cor}\left( Y\left( \mathbf{x}^{(m)} \right),Y\left( \mathbf{x}^{(\mathrm{new})} \right) \right) \end{bmatrix} $$

Maximizing the likelihood of the augmented data leads to the solution of Eq. 1.

$$ \widehat{y}\left( \mathbf{x}^{(\mathrm{new})} \right)=\widehat{\mu}+{\mathbf{r}}^T{\mathbf{R}}^{-1}\left( \mathbf{y}-\mathbf{1}\widehat{\mu} \right) \tag{16} $$
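The predictor can be sketched numerically with the standard library alone. This is a minimal illustration, not the implementation used in this work: θ is fixed by hand and p is set to 2, whereas the procedure above obtains both by maximizing the likelihood, and the small linear solver and the sample data are assumptions made to keep the example self-contained.

```python
import math


def corr(a, b, theta, p=2.0):
    """Eq. 12: exp(-sum_k theta_k * |a_k - b_k| ** p)."""
    return math.exp(-sum(t * abs(u - v) ** p for t, u, v in zip(theta, a, b)))


def solve(A, b):
    """Solve A z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[piv] = M[piv], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    z = [0.0] * n
    for r in reversed(range(n)):
        z[r] = (M[r][n] - sum(M[r][k] * z[k] for k in range(r + 1, n))) / M[r][r]
    return z


def kriging_predict(X, y, x_new, theta):
    """Mean estimate of Eq. 14 followed by the predictor of Eq. 16."""
    R = [[corr(a, b, theta) for b in X] for a in X]
    ones = [1.0] * len(X)
    mu = sum(solve(R, y)) / sum(solve(R, ones))   # 1^T R^-1 y / 1^T R^-1 1
    weights = solve(R, [yi - mu for yi in y])     # R^-1 (y - 1 mu)
    r = [corr(a, x_new, theta) for a in X]        # correlations with the new point
    return mu + sum(ri * wi for ri, wi in zip(r, weights))


X = [(0.0,), (0.5,), (1.0,)]   # hypothetical sample locations
y = [1.0, 3.0, 2.0]            # hypothetical responses
theta = [5.0]                  # fixed by hand for this illustration
```

Evaluating `kriging_predict(X, y, X[i], theta)` at any sampled location returns the corresponding `y[i]`, which is the interpolating behavior that the noisy-data modification in the next part of the appendix deliberately relaxes.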

B. Modified Kriging for Noisy Data

In the case where it is not desired to purely interpolate the experimental data, a nugget effect parameter (w) is added to the diagonal of R, such that in the case where the distance between two points approaches zero, the correlation is no longer equal to 1 (Eq. 17).

$$ \left| \mathbf{x}^{(i)}-\mathbf{x}^{(\mathrm{new})} \right|\to 0\Rightarrow \operatorname{cor}\left( \mathbf{x}^{(i)},\mathbf{x}^{(\mathrm{new})} \right)\to 1+w \tag{17} $$

$$ {\mathbf{R}}^{(\mathrm{mod})}=\mathbf{R}+w\mathbf{I}=\begin{bmatrix} 1+w & \ldots & \operatorname{cor}\left[ Y\left( \mathbf{x}^{(1)} \right),Y\left( \mathbf{x}^{(m)} \right) \right] \\ \vdots & \ddots & \vdots \\ \operatorname{cor}\left[ Y\left( \mathbf{x}^{(m)} \right),Y\left( \mathbf{x}^{(1)} \right) \right] & \ldots & 1+w \end{bmatrix} \tag{18} $$

Employing exactly the same procedure to obtain the kriging prediction leads to \( {\widehat{y}}^{(\mathrm{mod})} \).

$$ {\widehat{y}}^{(\mathrm{mod})}={\widehat{\mu}}^{(\mathrm{mod})}+{\mathbf{r}}^T{\left( \mathbf{R}+w\mathbf{I} \right)}^{-1}\left( \mathbf{y}-\mathbf{1}{\widehat{\mu}}^{(\mathrm{mod})} \right),\quad \text{where}\quad {\widehat{\mu}}^{(\mathrm{mod})}=\frac{{\mathbf{1}}^T{\left( \mathbf{R}+w\mathbf{I} \right)}^{-1}\mathbf{y}}{{\mathbf{1}}^T{\left( \mathbf{R}+w\mathbf{I} \right)}^{-1}\mathbf{1}} \tag{19} $$
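A two-point worked example may help show why the nugget stops the model from interpolating. It assumes, as is common in regression-type kriging, that r is evaluated from Eq. 12 without the nugget; the symbols ρ (the correlation between the two samples) and d are introduced only for this illustration.

$$ \begin{aligned} &m=2,\qquad \rho =\operatorname{cor}\left[ Y\left( \mathbf{x}^{(1)} \right),Y\left( \mathbf{x}^{(2)} \right) \right],\qquad d=\frac{y_1-y_2}{2} \\ &\mathbf{R}+w\mathbf{I}=\begin{bmatrix} 1+w & \rho \\ \rho & 1+w \end{bmatrix},\qquad {\widehat{\mu}}^{(\mathrm{mod})}=\frac{y_1+y_2}{2} \\ &\mathbf{y}-\mathbf{1}{\widehat{\mu}}^{(\mathrm{mod})}=d\begin{bmatrix} 1 \\ -1 \end{bmatrix},\qquad {\left( \mathbf{R}+w\mathbf{I} \right)}^{-1}\begin{bmatrix} 1 \\ -1 \end{bmatrix}=\frac{1}{1+w-\rho }\begin{bmatrix} 1 \\ -1 \end{bmatrix} \\ &{\widehat{y}}^{(\mathrm{mod})}\left( \mathbf{x}^{(1)} \right)={\widehat{\mu}}^{(\mathrm{mod})}+\begin{bmatrix} 1 & \rho \end{bmatrix}\frac{d}{1+w-\rho }\begin{bmatrix} 1 \\ -1 \end{bmatrix}={\widehat{\mu}}^{(\mathrm{mod})}+d\,\frac{1-\rho }{1+w-\rho } \end{aligned} $$

For w = 0 the last expression reduces to \( {\widehat{\mu}}^{(\mathrm{mod})}+d=y_1 \), so the sample is reproduced exactly; for w > 0 the factor \( (1-\rho )/(1+w-\rho ) \) is smaller than 1 and the prediction is pulled toward the mean, which is the smoothing effect wanted for noisy data.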

Cite this article

Boukouvala, F., Ierapetritou, M.G. Surrogate-Based Optimization of Expensive Flowsheet Modeling for Continuous Pharmaceutical Manufacturing. J Pharm Innov 8, 131–145 (2013). https://doi.org/10.1007/s12247-013-9154-1

DOI: https://doi.org/10.1007/s12247-013-9154-1