Abstract

The main aim of this paper is to study the effect of environmental noise on the asymptotic properties of a stochastic version of the classical SIRS epidemic model. The model studied here includes white noise and telegraph noise modeled by Markovian switching. We obtain conditions for extinction both with probability one and in pth moment. We also establish the persistence of the disease under different conditions on the noise intensities, the parameters of the model and the stationary distribution of the Markov chain. The main feature of our work is that our conditions are sufficient and almost necessary for extinction and persistence of the epidemic. The results are illustrated by numerical simulations.


1 Introduction

In recent years, mathematical models have been used increasingly to support public health policy making in the field of infectious disease control. The roots of mathematical modeling date back to the eighteenth century, when Bernoulli [1] used mathematical methods to estimate the impact of smallpox vaccination on life expectancy. However, a rigorous mathematical framework was first worked out by Kermack and McKendrick [2]. Their model, nowadays best known as the SIR model, has been the basis of all further modeling [3–6]. The SIR epidemic model classifies individuals as susceptible, infectious or removed with permanently acquired immunity. In fact, some removed individuals lose immunity and return to the susceptible compartment. This case can be modeled by the SIRS epidemic model, studied by many scholars (see, e.g., [3, 7] and the references cited therein). For a fixed population size, let S(t) be the frequency of susceptible individuals, I(t) be the frequency of infective individuals and R(t) be the frequency of removed individuals at time t. A simple SIRS epidemic model is described by the following differential system:
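A form of system (1) that is consistent with the parameter meanings and with the equilibria \(E_0\) and \(E_*\) stated next can be written as follows. It is given here as a reconstruction under the assumption of the standard SIRS structure with equal birth and death rates:

```latex
\begin{aligned}
\frac{dS}{dt} &= \mu - \mu S - \beta S I + \gamma R,\\
\frac{dI}{dt} &= -(\mu+\lambda)\, I + \beta S I,\\
\frac{dR}{dt} &= -(\mu+\gamma)\, R + \lambda I,
\end{aligned}
\qquad S+I+R=1. \tag{1}
```

Setting the second right-hand side to zero with \(I>0\) gives \(S=(\mu+\lambda)/\beta=1/\mathcal{R}_0\), and the third gives \(R=\lambda I/(\mu+\gamma)\), which agrees with the ratio of the last two coordinates of \(E_*\).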

The parameters have the following meaning: \(\mu \) represents the birth and death rates, \(\beta \) is the infection coefficient, \(\lambda \) is the recovery rate of the infective individuals, and \(\gamma \) is the rate at which recovered individuals lose immunity and return to the susceptible class. The dynamics of system (1) have been discussed in [3] in terms of the basic reproduction number \(\mathcal {R}_0=\frac{\beta }{\mu +\lambda }\). It is shown that if \(\mathcal {R}_0 \le 1\), the disease-free equilibrium state \(E_0\left( 1,0,0\right) \) is globally asymptotically stable, while if \(\mathcal {R}_0>1\), \(E_0\) becomes unstable and there exists an endemic equilibrium state \(E_*\left( \frac{1}{\mathcal {R}_0},\frac{(\mu +\lambda )(\mu +\gamma )(\mathcal {R}_0-1)}{\beta (\mu +\lambda +\gamma )},\frac{\lambda (\mu +\lambda )(\mathcal {R}_0-1)}{\beta (\mu +\lambda +\gamma )}\right) \) which is globally asymptotically stable.
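The threshold and the two equilibria above are easy to evaluate numerically. The following minimal sketch computes \(\mathcal{R}_0\), \(E_0\) and, when \(\mathcal{R}_0>1\), \(E_*\) from the formulas in the text. The function name and the parameter values are illustrative assumptions, not taken from the paper.

```python
def sirs_equilibria(mu, beta, lam, gamma):
    """Return (R0, E0, E_star) for the deterministic SIRS model.

    E_star is None when R0 <= 1, since only the disease-free
    equilibrium E0 = (1, 0, 0) is relevant in that case.
    """
    r0 = beta / (mu + lam)
    disease_free = (1.0, 0.0, 0.0)
    if r0 <= 1.0:
        return r0, disease_free, None
    denom = beta * (mu + lam + gamma)
    s_star = 1.0 / r0
    i_star = (mu + lam) * (mu + gamma) * (r0 - 1.0) / denom
    r_star = lam * (mu + lam) * (r0 - 1.0) / denom
    return r0, disease_free, (s_star, i_star, r_star)


# Illustrative (hypothetical) parameter values.
r0, e0, e_star = sirs_equilibria(mu=0.2, beta=0.8, lam=0.2, gamma=0.1)
print("R0 =", r0)
print("E* =", e_star)
```

Because S, I and R are frequencies, the three coordinates of \(E_*\) should sum to one, which gives a quick sanity check on the formulas.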

In real situations, the parameters of the model are not absolute constants; they fluctuate randomly around some average value owing to continuous fluctuation in the environment. Hence the equilibria obtained from the deterministic analysis are not realistic; rather, the population fractions fluctuate randomly around some average value. Lu [8] introduced stochasticity into the SIRS model (1) via the technique of parameter perturbation. He replaced the infection coefficient \(\beta \) by \(\beta +\sigma \frac{dB}{dt}\), where B is a Brownian motion defined on the complete probability space \((\Omega ,\mathcal {F}, \{\mathcal {F}_{t}\}_{t\ge 0},\mathbb {P})\) with a filtration \( \{\mathcal {F}_{t}\}_{t\ge 0}\) satisfying the usual conditions and \(\sigma \) is the intensity of the noise. So, the stochastic version of the deterministic system (1) is
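Carrying out the substitution \(\beta \mapsto \beta +\sigma \frac{dB}{dt}\) in the infection terms \(\mp \beta SI\) gives a system of the following shape. This is a sketch of the resulting equations, assuming the standard deterministic SIRS drift for (1):

```latex
\begin{aligned}
dS &= \bigl(\mu - \mu S - \beta S I + \gamma R\bigr)\,dt - \sigma S I\, dB,\\
dI &= \bigl(-(\mu+\lambda)\, I + \beta S I\bigr)\,dt + \sigma S I\, dB,\\
dR &= \bigl(-(\mu+\gamma)\, R + \lambda I\bigr)\,dt.
\end{aligned} \tag{2}
```

The noise enters S and I with opposite signs and does not enter R, so \(d(S+I+R)=\mu \bigl(1-(S+I+R)\bigr)dt\) and the constraint \(S+I+R=1\) is preserved.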

Lu [8] showed the local stability in probability of the disease-free equilibrium \(E_0\) under the condition

Lahrouz et al. [9] improved the model (2) by supposing the saturated incidence rate \(\frac{\beta SI}{1+a I}\) and disease-inflicted mortality. They proved the uniqueness and positivity of the solution and showed that condition (3) is sufficient for the global stability in probability of \(E_0\). They also established a condition for the global stability in pth moment of \(E_0\). Recently, in [10], Lahrouz and Settati found the threshold between persistence and extinction of the disease. The technique of parameter perturbation has been used by many scholars (see, e.g., [11–13] and the references cited therein). However, this is not the only way to introduce stochasticity into the deterministic model (1).

There is another type of environmental noise, namely colored noise, such as telegraph noise [14–18]. Telegraph noise can be illustrated as a switching between two or more regimes of environment, which differ by factors such as nutrition, climatic characteristics or socio-cultural factors. The latter may cause the disease to spread faster or slower. Frequently, the switching among different environments is memoryless and the waiting time for the next switch is exponentially distributed. The regime switching can hence be modeled by a finite-state Markov chain. Let r(t) be a Markov chain defined on a finite state space \(\mathbb {S}=\{1,2,\ldots ,m\}\) with the generator \(\Theta =(\theta _{uv})_{1\le u,v\le m}\) given, for \(\delta >0\), by

Here, \(\theta _{uv}\) is the transition rate from u to v while
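The memoryless switching described above can be sketched directly: the chain stays in state u for an exponentially distributed time with rate \(-\theta_{uu}\), then jumps to \(v\ne u\) with probability \(\theta_{uv}/(-\theta_{uu})\). The sketch below assumes the usual convention \(\theta_{uu}=-\sum_{v\ne u}\theta_{uv}\); the generator values and function names are hypothetical, and states are indexed from 0 rather than 1.

```python
import random


def simulate_chain(theta, start, horizon, rng):
    """Simulate a continuous-time Markov chain on [0, horizon].

    Returns the jump times (starting with 0.0) and the visited states.
    Assumes each diagonal entry equals minus the sum of the
    off-diagonal entries in its row.
    """
    times, states = [0.0], [start]
    t, u = 0.0, start
    while True:
        rate_out = -theta[u][u]
        if rate_out <= 0.0:          # absorbing state: no further jumps
            break
        t += rng.expovariate(rate_out)   # exponential holding time
        if t >= horizon:
            break
        # Pick the next state v != u with probability theta[u][v] / rate_out.
        x = rng.random() * rate_out
        acc, nxt = 0.0, u
        for v, rate in enumerate(theta[u]):
            if v == u:
                continue
            acc += rate
            nxt = v
            if x < acc:
                break
        u = nxt
        times.append(t)
        states.append(u)
    return times, states


def occupation_fractions(times, states, horizon, m):
    """Fraction of [0, horizon] spent in each of the m states."""
    spent = [0.0] * m
    for k, u in enumerate(states):
        end = times[k + 1] if k + 1 < len(times) else horizon
        spent[u] += end - times[k]
    return [x / horizon for x in spent]


# Hypothetical two-state generator (0-based states).
theta = [[-1.0, 1.0], [2.0, -2.0]]
rng = random.Random(1)
times, states = simulate_chain(theta, start=0, horizon=3000.0, rng=rng)
fractions = occupation_fractions(times, states, 3000.0, 2)
print(fractions)
```

For an irreducible chain the occupation fractions approach the stationary distribution as the horizon grows; for this hypothetical generator that distribution is (2/3, 1/3).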

In this paper, we set up a stochastic SIRS model in random environments using the following stochastic differential equation under regime switching:
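With regime-dependent parameters \(\mu_j, \beta_j, \lambda_j, \gamma_j, \sigma_j\), \(j\in \mathbb{S}\), as used in the rest of the paper, a system of this type can be written as follows. This is a reconstruction, assuming that each regime has the same structure as the white-noise SIRS model of Lu [8]:

```latex
\begin{aligned}
dS &= \bigl(\mu_{r(t)} - \mu_{r(t)} S - \beta_{r(t)} S I + \gamma_{r(t)} R\bigr)\,dt - \sigma_{r(t)} S I\, dB,\\
dI &= \bigl(-(\mu_{r(t)}+\lambda_{r(t)})\, I + \beta_{r(t)} S I\bigr)\,dt + \sigma_{r(t)} S I\, dB,\\
dR &= \bigl(-(\mu_{r(t)}+\gamma_{r(t)})\, R + \lambda_{r(t)} I\bigr)\,dt.
\end{aligned} \tag{6}
```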

The mechanism of the SIRS epidemic model described by system (6) can be explained as follows: Assume that initially, the Markov chain \(r(0)=i\in \mathbb {S}\), then the model (6) satisfies

until r(t) jumps to another state, say, \(j\in \mathbb {S}\). Then the model obeys

for a random amount of time until r(t) jumps to a new state again.
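The switching mechanism just described can be sketched with an Euler–Maruyama loop: within each step the system is advanced using the parameters of the current regime, and the regime is then allowed to jump. The sketch assumes that each regime has the white-noise SIRS form with parameters \((\mu_j,\beta_j,\lambda_j,\gamma_j,\sigma_j)\), and it uses the first-order switching probability \(\theta_{uv}\,dt\) for simplicity. All numerical values and names are hypothetical.

```python
import math
import random


def simulate_switching_sirs(params, theta, x0, state0, dt, n_steps, seed=0):
    """Euler-Maruyama path of an assumed regime-switching SIRS system.

    params[j] = (mu, beta, lam, gamma, sigma) for regime j (0-based).
    theta is the generator of r(t); off-diagonal entries are jump rates.
    Returns a list of tuples (t, S, I, R, regime).
    """
    rng = random.Random(seed)
    s, i, r = x0
    state = state0
    path = [(0.0, s, i, r, state)]
    for k in range(1, n_steps + 1):
        mu, beta, lam, gamma, sigma = params[state]
        db = rng.gauss(0.0, math.sqrt(dt))      # Brownian increment ~ N(0, dt)
        noise = sigma * s * i * db
        ds = (mu - mu * s - beta * s * i + gamma * r) * dt - noise
        di = (-(mu + lam) * i + beta * s * i) * dt + noise
        dr = (-(mu + gamma) * r + lam * i) * dt
        s, i, r = s + ds, i + di, r + dr
        # Regime jump over one step, with first-order probability rate * dt.
        u = rng.random()
        acc = 0.0
        for v, rate in enumerate(theta[state]):
            if v == state:
                continue
            acc += rate * dt
            if u < acc:
                state = v
                break
        path.append((k * dt, s, i, r, state))
    return path


# Hypothetical two-regime parameters (not the paper's examples).
params = [(0.2, 0.3, 0.2, 0.1, 0.1), (0.2, 0.9, 0.2, 0.1, 0.2)]
theta = [[-1.0, 1.0], [2.0, -2.0]]
path = simulate_switching_sirs(params, theta, (0.9, 0.08, 0.02), 0, 1e-3, 20000)
t_end, s_end, i_end, r_end, regime_end = path[-1]
print("S + I + R at final time:", s_end + i_end + r_end)
```

Since the noise terms cancel between the S and I equations and the drifts sum to \(\mu_j(1-(S+I+R))\), the discrete scheme keeps \(S+I+R=1\) up to rounding error. Euler–Maruyama does not by itself guarantee positivity of the components, so a small step size is advisable.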

Since system (6) describes an epidemic model, it is critical to find out when the epidemic dies out from the population and when it does not. As far as we know, there are no persistence and extinction results for system (6). The aim of this work is to investigate this problem. The remainder of the paper is organized as follows: In Sects. 2, 3 and 4, we study the extinction case of the SDE model (6) under different types of convergence. In Sects. 5 and 6, we prove that the non-linear SDE (6) is persistent under certain parametric conditions. In Sect. 7, we introduce numerical simulations to illustrate the main results. Finally, we close the paper with conclusions and future directions.

2 The global stability of the disease-free equilibrium state

In this section, we will discuss the extinction of SDE system (6) in order to provide the threshold condition for disease control or eradication. We introduce the notation

To begin the analysis of the model, define the subset

System (6) can be written as the following form:

where \(X(t)=\left( S(t),I(t),R(t)\right) \), B is a Brownian motion defined on the complete probability space \((\Omega ,\mathcal {F}, \{\mathcal {F}_{t}\}_{t\ge 0},\mathbb {P})\) and \((r(t))_{t\ge 0}\) is a right-continuous Markov chain defined on the same probability space, taking values in the finite state space \(\mathbb {S}=\{1,2,\ldots ,m\}\) and having the generator \(\Theta =(\theta _{uv})_{1\le u,v\le m}\) defined as in (4) and (5). With the reference to Zhu and Yin [19], the diffusion matrix is defined, for each \(j\in \mathbb {S}\) by

For use in the rest of this paper, we introduce the generator \(\mathcal {L}\) associated with (7) as follows. For each \(j\in \mathbb {S}\), and for any twice continuously differentiable V(y, j),

where

To ensure that the model is well posed and thus biologically meaningful, we need to prove that the solution remains in \(\Delta \). By a proof similar to that of Theorem 2.1 in [20] or Theorem 2 in [9], we have the following theorem:

Theorem 2.1

The set \(\Delta \) is almost surely positively invariant by the system (6), that is, if \(\left( S(0),I(0),R(0)\right) \in \Delta ,\) then \(\mathbb {P}\left( \left( S(t),I(t),R(t)\right) \in \Delta \right) =1\) for all \(t\ge 0.\)

With reference to Khasminskii et al. [21] and Yuan and Mao [22], we have the following lemma giving a sufficient condition for asymptotic stability in probability in terms of Lyapunov functions. We refer to Khasminskii et al. [21] for the precise meaning of asymptotic stability in probability.

Lemma 2.1

Assume that there are functions \(V\in \mathcal {C}^2\left( \mathbb {R}^3\times \mathbb {S};\mathbb {R}^+\right) \) and \(w\in \mathcal {C}\left( \mathbb {R}^3;\mathbb {R}^+\right) \), with w vanishing only at \(E_0\), such that

and

Then the equilibrium \(E_0\) of the system (6) is globally asymptotically stable in probability.

In what follows, we shall give a condition for extinction of disease expressed in terms of the parameters of the model. To begin with, let us define the following quantities. For all \(x\in \mathbb {R}\) and \(j\in \mathbb {S}\),

Theorem 2.2

For any initial values \((S_{0},I_{0}, R_{0})\in \Delta \), if \(\beta _{j}\ge \sigma _{j}^{2}\) for all \(j\in \mathbb {S}\), and

then the disease-free equilibrium \(E_{0}\) of system (6) is globally asymptotically stable in probability.

Proof

Let \(\left( S(0),I(0),R(0)\right) \in \Delta \). Let us define the Lyapunov functions

where \(\omega _{1}\), \(\kappa \), \(\omega _{2}\) and \(a_{j}\) are real positive constants to be chosen in the following. We have

Since \(S,I\in (0,1)\) and \(I\le 1-S\), we have, for all \(\kappa \ge 1\),

Hence, by choosing \(\omega _{2}<\min _{j\in \mathbb {S}}\left\{ \frac{\omega _{1}\gamma _{j}}{\lambda _{j}}\right\} ,\) we get, for \(\kappa \ge 1\),

where the functions \(A_{j}\) are defined in (11). One can easily show that if \(\beta _{j}\ge \sigma _{j}^{2}\), then the functions \(A_{j}\) are all increasing on (0,1), which means \(A_{j}(S)\le A_{j}(1)\) and then

where \(C_{j}=A_{j}(1)\) is defined in (11). Since the generator matrix \(\Theta \) is irreducible, for \(C=(C_1,\ldots ,C_m)^T\) there exists a solution \(\Lambda =(a_1,\ldots ,a_m)^T\) of the Poisson system [21]

where \(\mathbf {e}\) denotes the column vector with all its entries equal to 1. Substituting (15) into (14), we obtain

By (12), we can choose a sufficiently large \(\kappa _{0}\) such that

Let us then choose \(\kappa \) and \(\omega _{1}\) such that \(\kappa >\max \{-\min _{j\in \mathbb {S}}\{ a_{j}\},\kappa _{0},1\},\) and

which means that the coefficients of \((1-S)^{2}, I^{2}\) and \(R^{2}\) in (16) are all negative. By Lemma 2.1, the proof is complete. \(\square \)

3 Almost sure exponential stability

Theorem 3.1

For any initial values \((S_{0},I_{0}, R_{0})\in \Delta \), the solution of the stochastic differential equation (6) obeys

Moreover, if

then the disease-free equilibrium \(E_{0}\) is almost surely exponentially stable in \(\Delta \). In other words, the disease dies out with probability one.

Proof

Let us define the function \(V_{2}(S,I,R,j)=\log \left( 1-S+I+R\right) .\) By Itô’s formula, we have

Since

we can easily obtain

By integration, we get

where

We can easily show that the quadratic variation of \(M_{t}\) satisfies

Thus, by the strong law of large numbers for martingales (see Mao [23]), we have

and by the ergodic theory of the Markov chain

From (18), (19) and (20) we obtain the desired assertion. \(\square \)

4 Moment exponential stability

Now, we present the following theorem, which gives conditions for the moment exponential stability of the disease-free equilibrium state of the stochastic model (6).

Theorem 4.1

For any initial values \((S_{0},I_{0}, R_{0})\in \Delta \) and \(p>0\), the solution of the stochastic differential equation (6) obeys

Moreover, if

then the disease-free equilibrium state \(E_{0}\) is pth moment exponentially stable in \(\Delta \).

Proof

Let \(p>0\). From (18), we have

By the ergodic theory of Markov chains, we have, for all \(\varepsilon >0\) and for all t sufficiently large,

Combining (22) and (23) and taking expectation yields

where \(pM_t\) is a real-valued continuous martingale, with \(pM_0=0\), having the quadratic variation

which implies, by using the ergodic property of r(t),

Hence, the associated exponential of \(pM_t\), that is \(e^{pM_t-\frac{1}{2}[pM_t]}\), is a martingale. So, for t sufficiently large, we have

Combining (24) and (25) yields

Then

By letting \(\varepsilon \rightarrow 0\), we obtain the desired results. \(\square \)

5 Persistence of the disease

Firstly, let us begin with the following proposition which will be useful in our study of the persistence of SDE model (6).

Proposition 5.1

Let X and Y be two positive processes with initial values \(X_{0}\) and \(Y_{0}\), satisfying the differential equation

where \(a_{j}>0\) and \(b_{j}>0\) are constants for all \(j\in \mathbb {S}.\) Then, we have

Proof

Let \(\varepsilon >0\) be sufficiently small. Then there exists \(t_{0}\) such that for all \(t\ge t_{0}\),

From (26), we have

By integration, we get

By letting \(t\rightarrow \infty \) and \(\varepsilon \rightarrow 0\), we get the required assertion (27).

Similarly, from (26) we have for all sufficiently small \(\varepsilon >0\), there exists \(t_{0}'\) such that for all \(t\ge t_{0}'\),

and by letting \(t\rightarrow \infty \) and \(\varepsilon \rightarrow 0\), we obtain the desired assertion (28). \(\square \)

Thereafter, we shall establish the persistence of disease under different conditions on the intensities of noises and the parameters of the model. To begin with, let us define the two sets \(J_{\ge }=\{j\in \mathbb {S}, \beta _{j}\ge \sigma ^{2}_{j}\}\), \( J_{<}=\{j\in \mathbb {S}, \beta _{j}<\sigma ^{2}_{j}\},\) and recall the following functions which will be used extensively in what follows,

Theorem 5.1

For any initial values \((S_{0},I_{0}, R_{0})\in \Delta \), if for any \(j\in J_{<}\)

where the quantities \(C_{j}\) are defined in (11), then the solution of SDE (6) obeys

(a) \(\limsup _{t\rightarrow \infty }S(t)\ge \nu _{1}\), a.s.,

(b) \(\liminf _{t\rightarrow \infty }S(t)\le \nu _{2}\), a.s.,

(c) \(\limsup _{t\rightarrow \infty }I(t)\ge \frac{\min _{j\in \mathbb {S}}(\mu _{j}+\gamma _{j})}{\min _{j\in \mathbb {S}}(\mu _{j}+\gamma _{j})+\max _{j\in \mathbb {S}}\lambda _{j}} (1-\nu _{2})\), a.s.,

(d) \(\liminf _{t\rightarrow \infty }I(t)\le \frac{\max _{j\in \mathbb {S}}(\mu _{j}+\gamma _{j})}{\max _{j\in \mathbb {S}}(\mu _{j}+\gamma _{j})+\min _{j\in \mathbb {S}}\lambda _{j}} (1-\nu _{1})\), a.s.,

(e) \(\limsup _{t\rightarrow \infty }R(t)\ge \frac{\min _{j\in \mathbb {S}}\lambda _{j}}{\max _{j\in \mathbb {S}}(\mu _{j}+\gamma _{j})+\min _{j\in \mathbb {S}}\lambda _{j}} (1-\nu _{2})\), a.s.,

(f) \(\liminf _{t\rightarrow \infty }R(t)\le \frac{\max _{j\in \mathbb {S}}\lambda _{j}}{\min _{j\in \mathbb {S}}(\mu _{j}+\gamma _{j})+\max _{j\in \mathbb {S}}\lambda _{j}} (1-\nu _{1})\), a.s.,

where, for \(j\in J_{<}\), \(\xi _{j}\) and \(\xi \) are, respectively, the unique positive roots, on (0, 1), of

and \(\nu _{1}=\min \left\{ \xi ,\min _{j\in J_{<}}\{\xi _{j}\}\right\} \) and \(\nu _{2}=\max \left\{ \xi ,\max _{j\in J_{<}}\{\xi _{j}\}\right\} \).

Proof

(a) By Itô’s formula, we get from (6)

From (34), we have for any \(j\in J_{<}\),

Then, for any \(j\in J_{<}\), the equation \(A_j(x)=0\) admits a unique root \(\xi _j\in (0,1)\). Moreover \(A_j(x)\) is increasing on \((0,\xi _j)\) and for all sufficiently small \(\varepsilon >0\), for any \(j\in J_{<}\) and all x such that \(0<x\le \xi _{j}-\varepsilon \), we have

Similarly, by (34), the equation \(\sum _{j\in J_{\ge }}\pi _j A_j(x)=0\) admits a unique root \(\xi \in (0,1)\). So, one can easily show that, for all sufficiently small \(\varepsilon >0\), and x such that \(0<x\le \xi -\varepsilon \), we have

We now begin to prove assertion (a). If it is not true, then there is a sufficiently small \(\varepsilon >0\) such that

Let us put

Hence, for every \(\omega \in \Omega _{1}\), there is a \(T(\omega )>0\) such that

which means, for any \(s\ge T(\omega )\) such that \(r(s)\in J_{<}\), we have

From (37) and (41) we have, for any \(s\ge T(\omega )\) such that \(r(s)\in J_{<}\)

On the other hand, for any \(j\in J_{\ge }\), the function \(A_j\) is increasing on (0, 1). This implies, by (40), that

Moreover, by the strong law of large numbers for martingales, there is an \(\Omega _{2}\subset \Omega \) with \(\mathbb {P}(\Omega _{2})=1\) such that for every \(\omega \in \Omega _{2}\),

Now, fix any \(\omega \in \Omega _{1}\cap \Omega _{2}\). It then follows from (35), (42) and (43) that, for \(t\ge T(\omega )\)

By the ergodic theory of the Markov chain, we have,

and

From (37), (38), (44), (45), (46) and (47), we get

Whence \(\lim _{t\rightarrow \infty } I(t)=0\). On the other hand, from the third equation of (6) and (27), we have

Hence, \(\lim _{t\rightarrow \infty } R(t)=0\) and then \(\lim _{t\rightarrow \infty }S(t)=1\). But this contradicts (40). The required assertion (a) must therefore hold.

(b) Similarly, if it were not true, we can then find an \(\varepsilon '>0\) sufficiently small such that \(\mathbb {P}\left( \Omega _{3}\right) >0,\) where

Hence, for every \(\omega \in \Omega _{3}\), there is a \(T'(\omega )>0\) such that

As in (42) and (43), we can easily check, by choosing \(\varepsilon '>0\) sufficiently small that

By (51), (52) and similarly to (48), we obtain

Whence \(\lim _{t\rightarrow \infty } I(t)=\infty .\) This contradicts \(I(t)<1\). The proof of (b) is completed.

(d) By (a) and the fact that \(I+R=1-S\), we have

By the third equation of (6) and (28), we have

Combining (53) and (54) we get the required assertion (d).

(c) Similarly to (d), it follows easily from (27), (b) and \(I+R=1-S\).

(e)–(f) These follow immediately from \(R=1-S-I\), (a), (b), (c) and (d). \(\square \)

Now, we make Theorem 5.1 more explicit in the following two special cases.

Corollary 5.1

If for any \(j\in \mathbb {S}\),

then the estimates (a)–(f) of Theorem 5.1 hold with \(\nu _{1}=\nu _{2}=\xi \). Else if for any \(j\in \mathbb {S}\),

then (a)–(f) hold with \(\nu _{1}=\min _{j\in \mathbb {S}}\{\xi _{j}\}\) and \(\nu _{2}=\max _{j\in \mathbb {S}}\{\xi _{j}\}\).

6 Positive recurrence

In this section, we show the persistence of the disease in the population, but from another point of view. Precisely, we find a domain \(\mathcal {D }\subset \mathbb {R}^2_+\) which is positive recurrent for the process (S(t), I(t)). Generally, the process \(X^x_t\) where \(X_0=x\) is recurrent with respect to \(\mathcal {D}\) if, for any \(x\notin \mathcal {D}\), \(\mathbb {P}\left( \tau _{D}<\infty \right) =1\), where \(\tau _{D}\) is the hitting time of \(\mathcal {D}\) for the process \(X^x_t\), that is

The process \(X^x_t\) is said to be positive recurrent with respect to \(\mathcal {D}\) if \(\mathbb {E}(\tau _{D})<\infty \), for any \(x\notin \mathcal {D}\).

Theorem 6.1

Consider the stochastic system (6) with initial condition in \(\Delta \). Assume that

then there exists \(\alpha >0\) such that (S(t), I(t)) is positive recurrent with respect to the domain

Proof

Consider the positive functions defined on \((0,1)^{2}\times \mathbb {S}\) by

Here, \(\eta \) is a sufficiently small positive number satisfying \(\frac{1}{\eta }>-\underset{j\in \mathbb {S}}{\min }\varpi _j\), where \(\varpi =(\varpi _1,\ldots ,\varpi _m)^T\) will be determined in the rest of the proof. The differential operator \(\mathcal {L}\) acting on the Lyapunov function \(\psi \) gives

Using \(S=1-I-R\), we get, from (56),

Let \((S,I)\in \mathcal {D}^c\), which implies, by \(S+I<1\), that either \(S<\frac{1}{\alpha }\) or \(I<\frac{1}{\alpha }\). Firstly, if \(S<\frac{1}{\alpha }\) then from \(I,\,R,\, I+R\in (0,1)\) and (57), we have

therefore, for all \(\alpha > \max _{j\in \mathbb { S}}\left\{ \frac{\mu _j+\beta _j+\sigma _{j}^{2}}{\mu _j}\right\} \) and \(\eta \) sufficiently small, we have

Secondly, if \(I<\frac{1}{\alpha }\), then, from \(-\mu _j+\mu _jS<0\) and (57), we have

For \(\eta \) sufficiently small such that \(\frac{1}{\eta }>\max _{j\in \mathbb {S}}\frac{\beta _j}{\gamma _j }\), we have

then

On the other hand, since the generator matrix \(\Theta \) is irreducible, for \(C=(C_1,\ldots ,C_m)^T\) there exists a solution \(\varpi =(\varpi _1,\ldots ,\varpi _m)^T\) of the Poisson system [21]

where \(\mathbf {e}\) denotes the column vector with all its entries equal to 1. Substituting (60) in (59) yields

One can easily verify that for \(\eta \) sufficiently small there exists \(\alpha _{0}=\alpha _{0}(\eta ,\varpi _1,\ldots ,\varpi _m)\) such that for all \(\alpha >\alpha _{0}\) we have

which is allowed by condition (55). Combining (61) and (62), we obtain, in the case \(I<\frac{1}{\alpha }\) with \(\eta \) sufficiently small and \(\alpha >\alpha _{0}\),

From (58) and (63), we have for \(\eta \) sufficiently small and \(\alpha >\max \left( \alpha _{0}, \max _{j\in \mathbb { S}} \left\{ \frac{\mu _j+\beta _j+\sigma _{j}^{2}}{\mu _j}\right\} \right) \),

Now, let \((S(0),I(0))\in \mathcal {D}^c\). Thanks to the generalized Itô formula established by Skorokhod [24] (Lemma 3, p. 104) and using (64), we obtain

Thus, by the positivity of \(\psi \), one can deduce that

The proof is complete. \(\square \)

7 Examples and computer simulations

Let \((r(t))_{t\ge 0}\) be a right-continuous Markov chain taking values in \(\mathbb {S} = \{1, 2\}\) with the generator

Given a step size \(\Delta >0\), the Markov chain can be simulated by computing the one-step transition probability matrix \(P=e^{\Delta \Theta }\). We refer the reader to Anderson [25] for more details. Hence for \(\Delta =0.0001\), the transition probability matrix and the stationary distribution are given, respectively, by
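The computation of \(P=e^{\Delta \Theta }\) and of the stationary distribution can be sketched in a few lines. The generator used below is a hypothetical two-state example, chosen only because its stationary distribution is (2/3, 1/3). The matrix exponential is approximated by a truncated Taylor series, and the stationary distribution is obtained by power iteration on \(e^{\Theta }\), which has the same stationary distribution as \(e^{\Delta \Theta }\).

```python
def mat_mul(a, b):
    """Product of two square matrices given as lists of rows."""
    m = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(m)) for j in range(m)]
            for i in range(m)]


def expm_taylor(theta, dt, terms=40):
    """Approximate exp(dt * theta) by a truncated Taylor series."""
    m = len(theta)
    a = [[theta[i][j] * dt for j in range(m)] for i in range(m)]
    result = [[1.0 if i == j else 0.0 for j in range(m)] for i in range(m)]
    term = [row[:] for row in result]
    for k in range(1, terms + 1):
        term = mat_mul(term, a)
        term = [[x / k for x in row] for row in term]   # now (dt*theta)^k / k!
        result = [[result[i][j] + term[i][j] for j in range(m)]
                  for i in range(m)]
    return result


def stationary_distribution(p, iterations=200):
    """Power iteration pi <- pi P, starting from the uniform distribution."""
    m = len(p)
    pi = [1.0 / m] * m
    for _ in range(iterations):
        pi = [sum(pi[i] * p[i][j] for i in range(m)) for j in range(m)]
    return pi


# Hypothetical generator; the paper's matrix is not reproduced here.
theta = [[-1.0, 1.0], [2.0, -2.0]]
p_step = expm_taylor(theta, 0.0001)          # one-step transition matrix
pi = stationary_distribution(expm_taylor(theta, 1.0))
print(p_step)
print(pi)
```

For small \(\Delta \) the matrix \(P\) is close to \(I+\Delta \Theta \), so each row has a diagonal entry near one and off-diagonal entries near \(\theta _{uv}\Delta \).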

7.1 Extinction

Example 7.1

(\(C_{1}<0\) and \(C_{2}<0\)). To illustrate the extinction case of Theorem 2.2, firstly we set

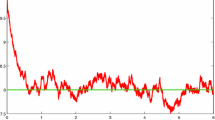

This implies that \(\pi _{1}{C_{1}}+\pi _{2}C_{2}=0.6667\times (-0.164) + 0.3333\times (-0.075)=-0.1343<0.\) Hence, the extinction condition of Theorem 2.2 is satisfied. The computer simulations in Fig. 1, using the Euler–Maruyama method (see, e.g., [26]), clearly support these results.

Example 7.2

(\(C_{1}<0\) and \(C_{2}>0\).) Let us choose

This gives \(\pi _{1}{C_{1}}+\pi _{2}C_{2}= 0.6667\times (-0.1640) + 0.3333\times (0.0960)= -0.0773<0.\) Then, the extinction condition of Theorem 2.2 is satisfied. The computer simulations in Fig. 2 illustrate these results.
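The weighted sums in Examples 7.1 and 7.2 can be checked with a short script. It uses only the values of \(\pi _j\) and \(C_j\) quoted in the text; the function name is an illustrative choice.

```python
def switching_threshold(pi, c):
    """Stationary-distribution-weighted average sum_j pi_j * C_j."""
    return sum(p * cj for p, cj in zip(pi, c))


pi = (0.6667, 0.3333)
example_71 = switching_threshold(pi, (-0.164, -0.075))
example_72 = switching_threshold(pi, (-0.1640, 0.0960))
print(round(example_71, 4), round(example_72, 4))  # -0.1343 -0.0773
```

Example 7.2 shows that extinction can hold even when one regime has \(C_2>0\), provided the chain spends enough time in the regime with \(C_1<0\) for the weighted average to remain negative.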

7.2 Persistence

Example 7.3

(\(C_{1}<0\) and \(C_{2}>0\)). Let us choose

We compute \(\pi _{1}{C_{1}}+\pi _{2}C_{2}=0.286>0\) and \(\beta _{1}>\sigma _{1}^{2}\), \(\beta _{2}>\sigma _{2}^{2}\). Hence, on one hand, the persistence condition of Corollary 5.1 is satisfied and the corresponding estimates hold with \(\xi =0.7168\), that is, S(t) rises to or above the level \(\xi _s=\xi =0.7168\),

infinitely often with probability one, which is clearly illustrated by Fig. 3.

Fig. 3 Results of one simulation run of SDE (6) with initial condition (0.975, 0.03, 0.02) and its corresponding Markov chain r(t) using the parameter values of Example 7.3. Here, respectively, S(t), I(t) and R(t) rise to or above 0.7168, \([ 0.2326,\, 0.0584 ]\) and \([0.2248, \,0.0506]\) infinitely often with probability one

8 Conclusion

In this paper, we extended the classical SIRS epidemic model from a deterministic framework to a stochastic one by incorporating both white and colored environmental noise. We examined the long-term behavior of our stochastic SIRS epidemic model. We established conditions for extinction and persistence of the disease which are close to necessary. We also proved that the SIRS model (6) has a unique stationary distribution and the ergodic property.

The results show that the stationary distribution \((\pi _1,\ldots , \pi _m)\) of the Markov chain r(t) plays a very important role in determining extinction or persistence of the epidemic in the population. That is, if r(t) spends enough time in the states where \(C_j\) is negative, then the epidemic dies out quickly from the population. If r(t) spends much time in the states where \(C_j\) is positive, then the epidemic will persist in the population if it is initially present.

We have not been able to determine the nature of the epidemic model for the case when \(\sum _{j=1}^m\pi _jC_j=0\), but computer simulation suggests that the disease would die out after a long period of time, as we suspect. We have illustrated our theoretical results with computer simulations. Finally, this paper is only a first step in introducing regime switching into an epidemic model. In future investigations we plan to introduce white and colored noise into more realistic epidemic models such as the SEIRS models.

References

1. Bernoulli, D.: Essai d’une nouvelle analyse de la mortalité causée par la petite verole. Mémoires de Mathematiques et de Physique. Acad. R. Sci. Paris 1, 1–45 (1760)

2. Kermack, W.O., McKendrick, A.G.: Contribution to mathematical theory of epidemics. Proc. R. Soc. Lond. A Mat. 115, 700–721 (1927)

3. Hethcote, H.W.: Qualitative analyses of communicable disease models. Math. Biosci. 28, 335–356 (1976)

4. Beretta, E., Takeuchi, Y.: Global stability of a SIR epidemic model with time delay. J. Math. Biol. 33, 250–260 (1995)

5. Connell McCluskey, C., van den Driessche, P.: Global analysis of two tuberculosis models. J. Dyn. Differ. Equ. 16, 139–166 (2004)

6. Korobeinikov, A.: Lyapunov functions and global stability for SIR and SIRS epidemiological models with non-linear transmission. Bull. Math. Biol. 68, 615–626 (2006)

7. Lahrouz, A., Omari, L., Kiouach, D., Belmaati, A.: Complete global stability for an SIRS epidemic model with generalized non-linear incidence and vaccination. Appl. Math. Comput. 218, 6519–6525 (2012)

8. Lu, Q.: Stability of SIRS system with random perturbations. Phys. A 388, 3677–3686 (2009)

9. Lahrouz, A., Omari, L., Kiouach, D.: Global analysis of a deterministic and stochastic nonlinear SIRS epidemic model. Nonlinear Anal. Model. Control. 16, 59–76 (2011)

10. Lahrouz, A., Settati, A.: Necessary and sufficient condition for extinction and persistence of SIRS system with random perturbation. Appl. Math. Comput. 233, 10–19 (2014)

11. Dalal, N., Greenhalgh, D., Mao, X.: A stochastic model of AIDS and condom use. J. Math. Anal. Appl. 325, 36–53 (2007)

12. Gray, A., Greenhalgh, D., Hu, L., Mao, X., Pan, J.: A stochastic differential equation SIS epidemic model. SIAM J. Appl. Math. 71, 876–902 (2011)

13. Liu, M., Wang, K.: Stationary distribution, ergodicity and extinction of a stochastic generalized logistic system. Appl. Math. Lett. 25, 1980–1985 (2012)

14. Slatkin, M.: The dynamics of a population in a Markovian environment. Ecology 59, 249–256 (1978)

15. Takeuchi, Y., Du, N.H., Hieu, N.T., Sato, K.: Evolution of predator-prey systems described by a Lotka–Volterra equation under random environment. J. Math. Anal. Appl. 323, 938–957 (2006)

16. Du, N.H., Kon, R., Sato, K., Takeuchi, Y.: Dynamical behaviour of Lotka–Volterra competition systems: non autonomous bistable case and the effect of telegraph noise. J. Comput. Appl. Math. 170, 399–422 (2004)

17. Gray, A., Greenhalgh, D., Mao, X., Pan, J.: The SIS epidemic model with Markovian switching. J. Math. Anal. Appl. 394, 496–516 (2012)

18. Lahrouz, A., Settati, A.: Asymptotic properties of switching diffusion epidemic model with varying population size. Appl. Math. Comput. 219, 11134–11148 (2013)

19. Zhu, C., Yin, G.: Asymptotic properties of hybrid diffusion systems. SIAM J. Control Optim. 46, 1155–1179 (2007)

20. Han, Z., Zhao, J.: Stochastic SIRS model under regime switching. Nonlinear Anal. Real World Appl. 14, 352–364 (2013)

21. Khasminskii, R.Z., Zhu, C., Yin, G.: Stability of regime-switching diffusions. Stoch. Process. Appl. 117, 1037–1051 (2007)

22. Yuan, C., Mao, X.: Robust stability and controllability of stochastic differential delay equations with Markovian switching. Automatica 40, 343–354 (2004)

23. Mao, X.: Stochastic Differential Equations and Applications. Horwood Publishing Limited, Chichester (1997)

24. Skorohod, A.V.: Asymptotic Methods in the Theory of Stochastic Differential Equations. American Mathematical Society, Providence (1989)

25. Anderson, W.J.: Continuous-Time Markov Chains. Springer, Berlin (1991)

26. Kloeden, P.E., Platen, E.: Numerical Solution of Stochastic Differential Equations. Springer, Berlin (1992)


Cite this article

Settati, A., Lahrouz, A., El Jarroudi, M. et al. Dynamics of hybrid switching diffusions SIRS model. J. Appl. Math. Comput. 52, 101–123 (2016). https://doi.org/10.1007/s12190-015-0932-4


DOI: https://doi.org/10.1007/s12190-015-0932-4