Abstract

Random Boolean networks (RBNs) are models of genetic regulatory networks. It is useful to describe RBNs as self-organizing systems to study how changes in the nodes and connections affect the global network dynamics. This article reviews eight different methods for guiding the self-organization of RBNs. In particular, the article is focused on guiding RBNs toward the critical dynamical regime, which is near the phase transition between the ordered and chaotic phases. The properties and advantages of the critical regime for life, computation, adaptability, evolvability, and robustness are reviewed. The guidance methods of RBNs can be used for engineering systems with the features of the critical regime, as well as for studying how natural selection evolved living systems, which are also critical.

Self-organization and how to guide it

The concept of self-organization originated within cybernetics (Ashby 1947, 1962; von Foerster 1960) and has propagated into almost all scientific disciplines (Nicolis and Prigogine 1977; Luhmann 1995; Turcotte and Rundle 2002; Camazine et al. 2003; Skår and Coveney 2003). Given the broad domains where self-organization can be described, its formal definition is problematic (Gershenson and Heylighen 2003; Heylighen 2003; Gershenson 2007; Prokopenko et al. 2009). Nevertheless, we can use the concept to study a wide variety of phenomena.

To better understand self-organization, the following notion can be used: A system described as self-organizing is one in which elements interact, achieving dynamically a global function or behavior (Gershenson 2007, p. 32). In other words, a global pattern is produced from local interactions.

Examples of self-organizing systems include a cell (molecules interact to produce life), a brain (neurons interact to produce cognition), an insect colony (insects interact to perform collective tasks), flocks, schools, and herds (animals interact to coordinate collective behavior), a market (agents interact to define prices), traffic (vehicles interact to determine flow patterns), an ecosystem (species interact to achieve ecological homeostasis), and a society (members interact to define social properties such as language, culture, fashion, esthetics, ethics, and politics). In principle, almost any system can be described as self-organizing (Ashby 1962; Gershenson and Heylighen 2003). If a system has a set of “preferred” states, i.e., attractors, and we call those states organized, the system will self-organize toward them. It is useless to enter an ontological discussion on self-organization. Rather, the question is: when is it useful to describe a system as self-organizing? For example, a cell can be described as self-organizing, but also as a Boolean variable (0 = dead, 1 = alive). Which description is more accurate? It depends on the aim of the description (model). A model cannot be judged independently of the context where it is used.

Self-organization is a useful description when at least two levels of description are present (e.g., molecules and cells, or insects and colony) and we are interested in studying the relationship between the descriptions at these two levels (scales). In this way, one can describe how the interactions at the lower level affect the properties at the higher level. When only the interactions at the lower level are defined, the system can adapt to novel situations and be robust to perturbations, since the precise global behavior is not predefined. Because of this, the properties of self-organizing systems can be exploited in design and engineering (Gershenson 2007; Watson et al. 2010).

The balance between self-organization and design is precisely the aim of guided self-organization (GSO) (Prokopenko 2009). Although it is difficult to define, GSO can be described as the steering of the self-organizing dynamics of a system toward a desired configuration. Cybernetics (Wiener 1948; Ashby 1956) already had a similar aim, although there was a stronger focus on control and communication, as opposed to self-organization and information.

The dynamics of self-organizing systems lead them to an “organized” state or configuration. However, there can be several potential configurations available. The emerging study of GSO explores the constraints and conditions where self-organizing dynamics can be led to a particular configuration. Similar to several “synthetic” approaches (Steels 1993), GSO can be useful on the one hand for understanding how natural systems self-organize and on the other hand for building artificial systems capable of self-organization. This article focuses on both aspects, exploiting the generality of random Boolean networks (RBNs): First, how can evolution guide the self-organization of genetic regulatory networks? Second, how can we manipulate RBNs to guide their self-organization toward a desired regime?

This article is structured in the following sections: “Random Boolean networks”, “Self-organization in random Boolean networks”, “Guiding the self-organization of random Boolean networks”, “Discussion”, and “Conclusions”.

Random Boolean networks

RBNs were originally proposed as models of genetic regulatory networks (Kauffman 1969, 1993). However, their generality has triggered an interest in them beyond their original purpose (Aldana-González et al. 2003; Gershenson 2004a).

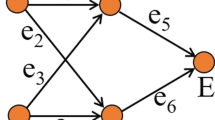

A RBN consists of N nodes linked by K connections each. Nodes are Boolean, i.e., their state is either “on” (1) or “off” (0). The state of a node at time t + 1 depends on the states of its K inputs at time t by means of a Boolean function. Connections and functions are chosen randomly when the RBN is generated and remain fixed during its temporal evolution. The randomly generated Boolean functions can be represented as lookup tables that list all \(2^{K}\) possible combinations of input states. Figure 1 shows an example of a part of a RBN, where every node has exactly two inputs, i.e., K = 2. Table 1 shows an arbitrary lookup table to update the state of one of the nodes. The dynamics of a RBN with N = 40, K = 2 can be seen in Fig. 2.
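The construction and synchronous update rule described above can be sketched in a few lines of Python. This is a minimal illustration and not the implementation used by RBNLab. The names `make_rbn` and `step` are hypothetical, inputs are assumed to be drawn uniformly with replacement (so self-connections and repeated inputs may occur), and the first listed input is assumed to be the most significant bit of the lookup-table row index.

```python
import random

def make_rbn(n, k, p=0.5, seed=None):
    """Return (inputs, tables) for a random Boolean network.

    inputs[i] lists the K nodes feeding node i; tables[i] is the lookup
    table of node i, holding one output bit per input combination.
    p is the probability that a lookup-table entry is 1.
    """
    rng = random.Random(seed)
    inputs = [[rng.randrange(n) for _ in range(k)] for _ in range(n)]
    tables = [[1 if rng.random() < p else 0 for _ in range(2 ** k)]
              for _ in range(n)]
    return inputs, tables

def step(state, inputs, tables):
    """Synchronously update every node from the current states of its inputs."""
    new_state = []
    for node_inputs, table in zip(inputs, tables):
        index = 0
        for j in node_inputs:          # read the K input bits as a binary number
            index = (index << 1) | state[j]
        new_state.append(table[index])
    return tuple(new_state)

# Usage: a network with N = 40, K = 2 run for a few time steps.
inputs, tables = make_rbn(n=40, k=2, seed=1)
rng = random.Random(2)
state = tuple(rng.randrange(2) for _ in range(40))
for _ in range(5):
    state = step(state, inputs, tables)
```

Because the wiring and the tables are generated once and then only read, the network stays fixed during its temporal evolution, as the text requires.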

Temporal evolution of a RBN with N = 40, K = 2 for a random initial state. Dark squares represent 0 and light squares represent 1. Time flows to the right, i.e., columns represent states of the network at a particular time, while a row represents the temporal evolution of the state of a node. Taken from RBNLab (Gershenson 2005)

Since RBNs are finite (they have \(2^{N}\) possible states) and deterministic, eventually a state will be revisited. Then, the network will have reached an attractor. The number of states in an attractor determines the period of the attractor. Point attractors have period one (a single state), while cyclic attractors have periods greater than one (multiple states, e.g., four in Fig. 2). A RBN can have one or more attractors. The set of states visited until an attractor is reached is called a transient. The set of states leading to an attractor form its basin. The basins of different attractors divide the state space. RBNs are dissipative, i.e., many states can flow into a single state (one state can have several predecessors), but from one state the transition is deterministic toward a single state (one state can have only one successor). The number of predecessors is also called in-degree. States without a predecessor are called “Garden of Eden” (GoE) states (in-degree = 0), since they can only be reached from an initial condition. Figure 3 illustrates the concepts presented above.
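Since every state has exactly one successor, an attractor can be found by following successors until a state repeats. The sketch below assumes a hypothetical helper `find_attractor` that accepts any successor function. The small transition map is illustrative only: it mirrors the period-four cycle used as an example in this section, and the edge from G to B is an assumption.

```python
def find_attractor(state, successor):
    """Follow `successor` from `state` until some state is revisited.

    Returns (transient_length, period): the number of steps taken before
    entering the attractor and the number of states in the attractor.
    """
    first_seen = {}
    t = 0
    while state not in first_seen:
        first_seen[state] = t
        state = successor(state)
        t += 1
    transient_length = first_seen[state]
    period = t - first_seen[state]
    return transient_length, period

# Illustrative state network: A -> B -> C -> D -> E -> F -> C, and G -> B.
transitions = {"A": "B", "G": "B", "B": "C",
               "C": "D", "D": "E", "E": "F", "F": "C"}

transient_length, period = find_attractor("A", transitions.get)
# Starting from A, two steps are needed to enter the cycle of period four.
```

The dictionary of first visit times costs memory proportional to the trajectory length, which is acceptable for small networks but not for long chaotic transients.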

Example of state transitions. B is a successor state of A and a predecessor of C. States can have many predecessors (e.g., B), but only one successor. G is a Garden of Eden state since it has no predecessors. The attractor \(C\rightarrow D\rightarrow E \rightarrow F \rightarrow C\) has a period four

Note the difference between the topological network of a RBN (e.g., Fig. 1), which represents how the states of nodes affect each other, and its state network (e.g., Fig. 3), which represents the transitions of the whole state space. In the state network, each node represents a state of the network, i.e., there are \(2^{N}\) nodes in the state network, while RBN nodes are represented in the topological network, i.e., there are N nodes in the topological network. One of the main topics of RBN research is to understand how changes in the topological network (lower scale) affect the state network (dynamics of higher scale), which is far from obvious.
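For small N, the state network can be built exhaustively from the topological network, which makes in-degrees and Garden of Eden states directly countable. The following sketch assumes the network is given as hypothetical `inputs` and `tables` lists (one list of input indices and one lookup table per node). It enumerates all states, so it is only practical for small N.

```python
from collections import Counter
from itertools import product

def step(state, inputs, tables):
    """Synchronous update; the first listed input is the most significant bit."""
    new_state = []
    for node_inputs, table in zip(inputs, tables):
        index = 0
        for j in node_inputs:
            index = (index << 1) | state[j]
        new_state.append(table[index])
    return tuple(new_state)

def in_degrees(inputs, tables):
    """Map every state of the network to its number of predecessors."""
    n = len(inputs)
    all_states = list(product((0, 1), repeat=n))
    counts = Counter(step(s, inputs, tables) for s in all_states)
    return {s: counts[s] for s in all_states}

def garden_of_eden_density(inputs, tables):
    """Fraction of states that have no predecessor (in-degree = 0)."""
    degrees = in_degrees(inputs, tables)
    return sum(d == 0 for d in degrees.values()) / len(degrees)

# Three nodes whose functions always output 1: every state leads to (1, 1, 1),
# so the other seven states are Garden of Eden states.
constant_inputs = [[0], [1], [2]]
constant_tables = [[1, 1], [1, 1], [1, 1]]
density = garden_of_eden_density(constant_inputs, constant_tables)  # 7/8
```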

RBNs are a type of discrete dynamical network (Wuensche 1998), i.e., space, time, and states are discrete. RBNs are generalizations of Boolean cellular automata (von Neumann 1966; Wolfram 1986, 2002), where the states of cells are determined by K neighbors, i.e., not chosen randomly, and all cells are updated using the same Boolean function (Gershenson 2002).

Self-organization in random Boolean networks

RBNs can be described as self-organizing systems simply because they have attractors. If we describe the attractors as “organized,” then the dynamics self-organize toward them (Ashby 1962). Still, a better argument in favor of this description is that we are interested in understanding how the interactions between nodes (lower scale) affect the network dynamics and properties (higher scale). The concept of self-organization allows us to describe and relate both scales and their interactions under the same framework.

The self-organization of RBNs can also be interpreted in terms of complexity reduction. For example, the human genome has approximately 25,000 genes. Thus, in principle, each cell could be in one of the \(2^{25,000}\) possible states of that network. This is much more than the estimated number of elementary particles arising from the Big Bang. However, there are only about 300 cell types, which correspond to attractors (Kauffman 1993; Huang and Ingber 2000), i.e., cells self-organize toward a very limited fraction of all possible states. The main question addressed by this review paper is: in which ways can the self-organization of random Boolean networks be guided?
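The scale of this reduction can be made concrete with a short calculation. The figure of \(10^{80}\) particles used for comparison is a commonly quoted order-of-magnitude estimate and is an assumption, not a value taken from this article:

\[
\log_{10} 2^{25{,}000} = 25{,}000 \,\log_{10} 2 \approx 25{,}000 \times 0.30103 \approx 7526,
\qquad \text{so} \qquad 2^{25{,}000} \approx 10^{7526} \gg 10^{80}.
\]

By contrast, about 300 attractors could be distinguished with only \(\lceil \log_{2} 300 \rceil = 9\) bits.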

Before presenting multiple answers to that question, it is convenient to understand the different dynamical behaviors that RBNs can have (Wuensche 1998; Gershenson 2004a). There are two dynamical phases: ordered and chaotic. The phase transition is characterized by its criticality and is also known as the “edge of chaos” (Kauffman 1993).

In the ordered phase, most nodes do not change their state, i.e., they are static. RBNs are robust in this phase, i.e., damage does not spread through the network, since most nodes do not change. Also, similar states tend to converge to the same attractor. On average, states have many predecessors, which leads to a high convergence (many states go to few states), short transient times, and a high density of Garden of Eden states, i.e., the percentage of all states without a predecessor is high.

In the chaotic phase, most nodes are changing their state. Thus, damage spreads through chaotic networks. Therefore, RBNs are fragile in this phase. Similar states tend to diverge toward different attractors. On average, states have few predecessors, which leads to a low convergence, very long average transient times, and a relatively lower density of Garden of Eden states.

In the critical regime, i.e., close to the transition between the ordered and chaotic phases, the extremes of both phases are balanced: some nodes change and some are static. Therefore, damage or changes can spread, but not necessarily through all of the network. Similar states tend to lie in trajectories that neither converge nor diverge in state space (Kauffman 2000, p. 171). Few states have many predecessors, while many states have few predecessors. Actually, the in-degree distribution approximates a power law (Wuensche 1998). There is medium convergence. It has recently been found that RBNs near the critical regime maximize information storage and coherent information transfer (Lizier et al. 2008), as well as maximize Fisher information (Wang et al. 2010).
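The three regimes can be probed numerically by measuring how far a single flipped node spreads. The sketch below is one simple way to do this and is not a method prescribed by the cited works: it runs an original and a damaged copy of the same network and reports their normalized Hamming distance. The helper names, network sizes, and seeds are all illustrative assumptions.

```python
import random

def make_rbn(n, k, p=0.5, seed=None):
    """Random wiring and lookup tables (inputs drawn with replacement)."""
    rng = random.Random(seed)
    inputs = [[rng.randrange(n) for _ in range(k)] for _ in range(n)]
    tables = [[1 if rng.random() < p else 0 for _ in range(2 ** k)]
              for _ in range(n)]
    return inputs, tables

def step(state, inputs, tables):
    """Synchronous update of all nodes."""
    new_state = []
    for node_inputs, table in zip(inputs, tables):
        index = 0
        for j in node_inputs:
            index = (index << 1) | state[j]
        new_state.append(table[index])
    return tuple(new_state)

def damage_after(inputs, tables, state, node, steps):
    """Flip `node`, run both copies, return the fraction of differing nodes."""
    damaged = list(state)
    damaged[node] ^= 1
    damaged = tuple(damaged)
    state = tuple(state)
    for _ in range(steps):
        state = step(state, inputs, tables)
        damaged = step(damaged, inputs, tables)
    return sum(a != b for a, b in zip(state, damaged)) / len(state)

def mean_damage(n, k, trials=20, steps=50):
    """Average damage over several random networks and initial states."""
    total = 0.0
    for trial in range(trials):
        inputs, tables = make_rbn(n, k, seed=trial)
        rng = random.Random(1000 + trial)
        state = tuple(rng.randrange(2) for _ in range(n))
        total += damage_after(inputs, tables, state, rng.randrange(n), steps)
    return total / trials

for k in (1, 2, 4):
    print(k, mean_damage(n=100, k=k))
```

Under the description above, one would expect the average damage to stay small for low K and to grow for high K, but the exact values depend on the sizes and seeds chosen here.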

It has been argued that computation and life should occur at the edge of chaos (Langton 1990; Kauffman 1993, 2000; Crutchfield 1994). Even when criticality seems not to be a necessary condition for complexity (Mitchell et al. 1993), there is experimental evidence that the genetic networks of organisms from at least four kingdoms are near or within the critical regime (Balleza et al. 2008). The tendency toward criticality can be explained because of the following: On the one hand, ordered dynamics produce stability (robustness) which is desirable for preserving information (memory). However, a static system is not able to compute or adapt. On the other hand, chaotic dynamics give variability (exploration), which is necessary for computation and adaptation. Still, there is too much change within the chaotic phase to preserve information. A balance is reached in the critical regime, where the advantages of both phases can coexist: there can be enough stability and robustness to preserve information and enough variability to compute and explore. For this reason, it becomes a relevant question to ask how can we guide the self-organization of RBNs toward the critical regime. Being general models, the answers will give us information on how to achieve the same guidance within particular systems.

Guiding the self-organization of random Boolean networks

The criticality of RBNs can depend on many different factors. These factors can be exploited—by engineers or by natural selection—to guide the self-organization of RBNs and similar systems toward the critical regime.

Probability p

One of the most obvious factors affecting the dynamics of a RBN is the probability p of having ones on the last column of lookup tables (Derrida and Pomeau 1986). If p = 1, then all values in lookup tables will be one, so actually there will be no dynamics: all nodes will have a state of one after one iteration, independently of the initial state. The same case but with zero occurs for p = 0. When p = 0.5, there is the maximum variability possible in the lookup tables, i.e., no bias. As p approaches 0 or 1, RBNs tend to be more static, i.e., in the ordered regime.

Connectivity K

One of the most important factors determining the dynamical phase of RBNs is the connectivity K (Derrida and Pomeau 1986; Luque and Solé 2000). For p = 0.5, the ordered phase is found when K < 2, the chaotic phase occurs for K > 2, while the critical regime lies at the phase transition, i.e., K = 2. As p tends toward one or zero, the phase transition moves toward greater values of K.

If we focus on a single node i and calculate the probability that damaging its state (be it 0 or 1) will percolate changes through the network, then it is clear that the probability will increase with the connectivity K. We can choose a node j from one of the nodes that i can affect. There is a probability p that j will be 1, and thus damage to i will modify j with a probability 1 − p. The complementary case is the same. Now, for K nodes, we can expect that at least one change will occur if \(\langle K \rangle 2p(1-p)\geq 1\) (Luque and Solé 1997b), i.e., chaos. Generalizing, the critical connectivity becomes (Derrida and Pomeau 1986):

\[\langle K_{c} \rangle = \frac{1}{2p(1-p)}\]
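The condition \(\langle K \rangle 2p(1-p)\geq 1\) can be evaluated directly. At equality it gives a critical connectivity of \(1/(2p(1-p))\), which equals 2 for p = 0.5, in agreement with the phase boundaries stated above. The function names below are hypothetical, and the numerical tolerance used to label a network "critical" is an assumption of this sketch.

```python
import math

def critical_connectivity(p):
    """Average connectivity at the phase transition for a given bias p."""
    if p in (0.0, 1.0):
        return math.inf      # constant functions: no finite K reaches the transition
    return 1.0 / (2.0 * p * (1.0 - p))

def phase(k_mean, p):
    """Classify a (k_mean, p) pair using the condition k_mean * 2p(1 - p) vs. 1."""
    sensitivity = k_mean * 2.0 * p * (1.0 - p)
    if math.isclose(sensitivity, 1.0):
        return "critical"
    return "ordered" if sensitivity < 1.0 else "chaotic"

# Moving p away from 0.5 shifts the transition toward greater K.
for p in (0.5, 0.7, 0.9):
    print(p, critical_connectivity(p))
```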

Canalizing functions

A canalizing function (Kauffman 1969; Stauffer 1987; Szejka and Drossel 2007) is one in which at least one of the inputs has one value that is able to determine the value of the output of the function, regardless of the other inputs (Shmulevich and Kauffman 2004). In other words, the non-canalizing inputs of canalizing functions are not relevant. A different type of canalization is considered when a particular value of an input determines the output of a function, while for other(s) values of that input, the output of the function is determined by other inputs.
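Whether a lookup table is canalizing in the first sense above can be checked mechanically: for each input and each of its two values, test whether the output is the same in every row where the input takes that value. The function name `canalizing_inputs` is hypothetical, and the sketch assumes that the first listed input is the most significant bit of the row index.

```python
def canalizing_inputs(table, k):
    """List (input_position, input_value, forced_output) triples.

    A triple (pos, v, out) means that whenever input `pos` has value `v`,
    the function outputs `out` regardless of the other inputs.
    """
    found = []
    for pos in range(k):
        shift = k - 1 - pos            # position 0 is the most significant bit
        for value in (0, 1):
            outputs = {table[row] for row in range(2 ** k)
                       if (row >> shift) & 1 == value}
            if len(outputs) == 1:
                found.append((pos, value, outputs.pop()))
    return found

AND = [0, 0, 0, 1]   # rows ordered 00, 01, 10, 11
XOR = [0, 1, 1, 0]

and_result = canalizing_inputs(AND, 2)   # a 0 on either input forces output 0
xor_result = canalizing_inputs(XOR, 2)   # no single input value decides XOR
```

Note that constant functions pass this test trivially for every input and value, so a stricter analysis may want to treat them separately.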

Independently of the particular type of canalization, if there is a bias favoring canalizing functions, the phase transition will move toward greater values of K. This is possible because in practice non-canalizing inputs are “fictitious,” i.e., removing them does not affect the state space nor the dynamics of a RBN.

For more than 150 transcriptional systems, it has been found that there is a strong canalizing bias (Harris et al. 2002). Most systems have \(\langle K \rangle\) = 3, 4, or 5, and a few have even \(\langle K \rangle\) = 7, 8, or 9, yet they still fall within the ordered phase.

It has been shown that RBNs with nested canalizing functions have stable (ordered) dynamics, approaching criticality for low values of K (Kauffman et al. 2003, 2004).

Schemata can be used to describe canalization in RBNs in a concise way (Marques-Pita et al. 2008).

Silencing

During normal cell life, genes can be “silenced”, i.e., switched off by different mechanisms. Silencing is also a method used for perturbing genetic regulatory networks. The RBN equivalent of silencing would be to fix the value of a subset of nodes, independently of the state of their inputs.

It has been shown that even chaotic networks can be forced into regular behavior when a subset of nodes is not responsive to the internal network dynamics (Luque and Solé 1997a). It is straightforward to assume that if a higher percentage of nodes remains fixed, the dynamics will be more stable.
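Silencing in the sense described above amounts to clamping some nodes during the update. The sketch below is a minimal illustration with a hypothetical function `step_silenced`; it is not the procedure of the cited studies. The two-node example, in which each node negates the other, is chosen only because its behavior is easy to follow by hand.

```python
def step_silenced(state, inputs, tables, silenced):
    """One synchronous update in which silenced nodes ignore their inputs.

    `silenced` maps a node index to the fixed value (0 or 1) it is held at.
    """
    new_state = []
    for node, (node_inputs, table) in enumerate(zip(inputs, tables)):
        if node in silenced:
            new_state.append(silenced[node])   # clamp: inputs are ignored
            continue
        index = 0
        for j in node_inputs:
            index = (index << 1) | state[j]
        new_state.append(table[index])
    return tuple(new_state)

# Two nodes, each the negation of the other.
inputs = [[1], [0]]
tables = [[1, 0], [1, 0]]

# Without silencing, (0, 0) and (1, 1) alternate (a cycle of period two).
free = step_silenced((0, 0), inputs, tables, {})

# With node 0 silenced at 0, the network settles on the fixed point (0, 1).
clamped = step_silenced((0, 0), inputs, tables, {0: 0})
```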

Some studies of silencing on RBNs have been made, e.g., Serra et al. (2004), although the precise relationship between silencing and criticality still remains to be studied.

Topology

Until recently, RBN studies used either a homogeneous topology (K is the same for all nodes) or a normal topology (there is an average \(\langle K \rangle\) inputs per node). This implies a uniform input distribution and no regularity in the wiring of nodes. However, the particular topology can have drastic effects on the properties of RBNs.

Link distribution

On the one hand, topologies with more uniform rank distributions, as those used commonly, exhibit more and longer attractors, but with less correlation in their expression patterns. On the other hand, skewed topologies exhibit fewer and shorter attractors, but with more correlations (entropy and mutual information) (Oosawa and Savageau 2002). A balance between these two extremes is achieved with scale-free topologies, i.e., few nodes have many inputs, while many nodes have few inputs. This advantageous balance can be used to explain why most natural networks have a scale-free topology (Aldana and Cluzel 2003; Oikonomou and Cluzel 2006).

RBNs with a scale-free topology (Aldana 2003) have been found to expand the advantages of the critical regime into the ordered phase, since well-connected elements can lead to the propagation of changes, i.e., adaptability even when the average connectivity would imply a static regime. It can be said that a scale-free topology expands the range of the critical regime. It has also been found that for RBNs with a scale-free distribution of outputs the average number of attractors is independent of network size for more than three orders of magnitude (Serra et al. 2003).
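A wiring in which few nodes have many inputs and many nodes have few inputs can be sketched by sampling each node's number of inputs from a truncated power law. This is an illustrative construction and not necessarily the one used in the cited studies. The function name, the exponent `gamma`, and the cutoff `k_max` are assumptions; the cutoff also keeps lookup tables, which have \(2^{K}\) rows, at a manageable size.

```python
import random

def scale_free_inputs(n, gamma, k_max, seed=None):
    """Wire n nodes with in-degrees drawn from P(k) proportional to k**(-gamma).

    In-degrees range from 1 to k_max; the sources of the links are chosen
    uniformly at random (with replacement).
    """
    rng = random.Random(seed)
    degrees = list(range(1, k_max + 1))
    weights = [k ** -gamma for k in degrees]
    in_degrees = rng.choices(degrees, weights=weights, k=n)
    return [[rng.randrange(n) for _ in range(k)] for k in in_degrees]

wiring = scale_free_inputs(n=200, gamma=2.5, k_max=10, seed=3)
mean_k = sum(len(node_inputs) for node_inputs in wiring) / len(wiring)
```

Each node would then need its own lookup table with as many rows as its number of inputs requires, since K is no longer the same for all nodes.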

Link regularity

Classical RBNs have the same probability of linking any node to any other node, i.e., they are random networks. For the same input distributions, the opposite extreme is regular networks, i.e., where nodes are connected to their neighbors, in a cellular automata fashion. The balance between those two extremes is achieved with small-world networks (Watts and Strogatz 1998; Bullock et al. 2010): many connections to neighbors and a few long-range connections lead to reduced average path lengths (typical of random networks), while maintaining a high clustering coefficient (typical of regular networks) (Watts and Strogatz 1998).
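The interpolation between regular and random wiring can be sketched in the spirit of the Watts and Strogatz rewiring procedure, adapted here to the input lists of a RBN. The function name and the rewiring probability `beta` are assumptions of this sketch: with `beta = 0` each node reads its nearest neighbors on a ring, and with `beta = 1` every input is chosen at random.

```python
import random

def small_world_inputs(n, k, beta, seed=None):
    """Ring-lattice inputs where each link is rewired with probability beta."""
    if k % 2 or k >= n:
        raise ValueError("k must be even and smaller than n")
    rng = random.Random(seed)
    # Offsets -1, +1, -2, +2, ... select the k nearest neighbors on the ring.
    offsets = [d for r in range(1, k // 2 + 1) for d in (-r, r)]
    inputs = []
    for i in range(n):
        node_inputs = []
        for d in offsets:
            if rng.random() < beta:
                node_inputs.append(rng.randrange(n))   # long-range shortcut
            else:
                node_inputs.append((i + d) % n)        # regular neighbor
        inputs.append(node_inputs)
    return inputs

regular = small_world_inputs(n=5, k=2, beta=0.0)
mixed = small_world_inputs(n=100, k=4, beta=0.1, seed=1)
```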

In RBNs, a small-world topology maximizes information transfer (Lizier et al. 2011), which is an indicator of critical dynamics.

Modularity

It is well known that modularity is a prevalent property of natural systems (Callebaut and Rasskin-Gutman 2005) and a desired feature of artificial systems (Simon 1996). Modularity is a property difficult to define precisely, but we can agree that a system is modular if it is composed of modules, i.e., the interactions within modules are more relevant than those between modules. Modules offer a level of organization that promotes at the same time robustness and evolvability (Wagner 2005b). On the one hand, damage within one module usually does not propagate through the whole system (robustness). On the other hand, useful changes can be exploited to find new configurations without affecting the functionality of other modules.

In the context of RBNs, initial explorations suggest that topological modules broaden the range of the critical regime toward higher connectivities (Poblanno-Balp and Gershenson 2010), i.e., a modular structure promotes critical dynamics within the theoretical chaotic phase. This is because even with high average connectivities, changes within one node have a low probability of propagating to other modules if there are few intermodular connections, in comparison with a non-modular network. These studies are related to topological modularity. One could argue that functional modularity is related to topological modularity (Calabretta et al. 1998), although this precise relationship remains to be studied.
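A topologically modular wiring with few intermodular connections can be sketched as follows. This is a hypothetical construction for illustration, not necessarily the one used by Poblanno-Balp and Gershenson (2010): nodes are split into equally sized modules, and each input comes from another module with a small probability `q` (an assumed parameter) and from the node's own module otherwise.

```python
import random

def modular_inputs(n_modules, module_size, k, q, seed=None):
    """Wire a modular network; q is the probability of an intermodular input."""
    rng = random.Random(seed)
    n = n_modules * module_size
    inputs = []
    for i in range(n):
        own = i // module_size
        node_inputs = []
        for _ in range(k):
            if n_modules > 1 and rng.random() < q:
                # Pick the source from a different module.
                module = rng.choice([m for m in range(n_modules) if m != own])
            else:
                module = own
            node_inputs.append(module * module_size + rng.randrange(module_size))
        inputs.append(node_inputs)
    return inputs

isolated = modular_inputs(n_modules=4, module_size=10, k=3, q=0.0, seed=1)
coupled = modular_inputs(n_modules=4, module_size=10, k=3, q=0.05, seed=1)
```

With `q = 0` the modules are fully decoupled, so damage in one module cannot reach the others; small positive values of `q` allow limited propagation between modules.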

Redundancy

Redundancy consists of having more than one copy of an element type. Duplication combined with mutation is a usual mechanism for the creation of genes in eukaryotes (Fernández and Solé 2004). When there are several copies of an element type, changes or damage can occur to one element, while others continue to function.

For RBNs (Gershenson et al. 2006), redundancy of links is not useful, since redundant links are fictitious inputs, i.e., they do not affect the state space. However, a redundancy of nodes prevents mutations from propagating through the network. Thus, redundant nodes increase neutrality (Kimura 1983; van Nimwegen et al. 1999; Munteanu and Solé 2008), i.e., can “smoothen” rough landscapes. This is an advantage for robustness and for evolvability, for the same reasons as the ones discussed concerning modularity, even when redundancy is a different mechanism from modularity, although both can potentially be combined.

Degeneracy

Degeneracy, also known as distributed robustness, is defined as the ability of elements that are structurally different to perform the same function or yield the same output (Edelman and Gally 2001; Fernández and Solé 2004). Like modularity, it is also widespread in biological systems and a promoter of robustness and evolvability. There is evidence that in genetic networks distributed robustness is equally or more important for mutational robustness (Wagner 2005a, b) and for evolvability (Whitacre and Bender 2010) than gene redundancy.

To date, particular studies of degeneracy on RBNs are lacking. Nevertheless, it could be speculated that degeneracy should promote critical dynamics, even when this still remains to be explored.

Discussion

In the previous section, a non-exhaustive list of factors that can be used to guide the self-organization of RBNs toward the critical regime was presented. Two categories of methods can be identified for guiding the self-organization toward criticality: moving the phase transition (with p, K, canalizing functions, or silencing) or broadening the critical regime (with balanced topologies, modularity, redundancy, or degeneracy). The first category seems to be directed more at the node functionality and lookup tables, while the second category seems to be directed more at the connectivity between nodes.

It can be speculated that natural selection can exploit these and probably other methods to guide the self-organization of genetic regulatory networks toward the critical regime. There is evidence that some of these methods are exploited by natural selection, but further studies are required to understand better the mechanisms, their constraints, how they are related, and which ones have been actually employed and to what degree by natural selection. In a similar way, engineers can guide the self-organization of RBNs and related systems using these and other methods. The purpose of designing artificial systems with criticality is to provide them with the advantages of this dynamical regime, which living systems have used to survive in an unpredictable environment.

Concerning the methods that move the phase transition, if a RBN is in the ordered phase, one or several of the following can be done:

- Adjust p toward a value of 0.5. This will increase variety in lookup tables, and thus increase the number of nodes that change. In other words, dynamics will be promoted.

- Increase the connectivity K. More connections also promote richer dynamics.

- Decrease the number of canalizing functions (if any). Canalizing functions imply that some connections play no role on the dynamics. If these connections are changed, i.e., they become functional, dynamics will be richer.

If the RBN is in the chaotic phase, complementary measures can be taken:

- Adjust p farther from a value of 0.5. This will increase homogeneity in lookup tables, and fewer nodes will be changing. This will decrease the damage sensitivity of the network, i.e., the dynamics will be less chaotic and more stable.

- Decrease the connectivity K. Fewer connections promote stability.

- Increase the number of canalizing functions. Canalizing functions reduce the effect of having several inputs, i.e., a high connectivity K, so changes cannot propagate as easily as with no canalizing functions.

- Silence some nodes by fixing their state independently of their inputs.

Concerning the methods that broaden the critical regime, even when they are different, all of them can be exploited to guide the self-organization of RBNs toward the critical regime:

- Promote a scale-free topology. When few nodes have many connections and most nodes have few connections, the desirable properties of the critical regime extend beyond the extremely narrow space of all possible networks that lies precisely on the phase transition (e.g., K = 2, p = 0.5). This “criticality enhancement” is possible because most nodes are stable, but the nodes with several connections (hubs) can trigger rich dynamics. In this way, changes can propagate through the RBN in a constrained fashion.

- Promote a small-world topology. Having a high clustering coefficient and a low average path length balances several advantages that lead to critical dynamics (Lizier et al. 2011).

- Promote modularity. Modules make it difficult for damage to spread through all of the network, even if the local connectivity (within a module) is high. In this way, chaotic dynamics can be constrained within modules. This prevents avalanches, where change to one node might cause drastic changes in a large part of the network. Still, modularity allows for information flow between modules.

- Promote redundancy. Having more than one copy of a node (or module) implies that a change on that node (or module) will not propagate through the network, since the redundant element(s) can perform the same function. Apart from smoothening rough fitness landscapes (Gershenson et al. 2006), it has been noted that redundancy is a useful feature in evolvable hardware (Thompson 1998).

- Promote degeneracy. The effect of degeneracy is similar to that of redundancy, but acting at a functional level. Different components of a system perform the same function. In certain conditions, degeneracy might be advantageous over redundancy, e.g., when a change affects all copies of the same node (or module). Nevertheless, redundancy seems to be useful for exploration via duplication and mutation.

Yet another way of guiding the self-organization of RBNs toward the critical regime would be to promote certain properties as a part of the fitness function of an evolutionary algorithm. For example, one could evolve critical RBNs trying to maximize input entropy variance (Wuensche 1999), information storage, information transfer (Lizier et al. 2008), and/or Fisher information (Wang et al. 2010). Another criterion could be to try to bring Lyapunov exponents close to zero (Luque and Solé 2000). All of these properties characterize the critical regime. Thus, they can be used to guide the evolutionary search. Nevertheless, it should be noted that guiding the self-organization of RBNs with a fitness function that promotes criticality is not useful to explain how natural systems evolved their criticality. Still, such fitness functions are a valid approach for engineering critical systems.

It has been noted that the updating scheme can affect the behavior of RBNs (Harvey and Bossomaier 1997; Gershenson 2002). However, it does not affect the transition between the ordered and chaotic phases (Gershenson 2004b). Still, within the chaotic phase, random mutations have less effect when the updating is non-deterministic. However, this is because basins of attraction merge with a less-deterministic updating scheme (Gershenson et al. 2003), i.e., there are fewer attractors. On the one hand, non-determinism reduces sensitivity to random mutations. On the other hand, non-determinism reduces the functionality of RBNs. Having this tradeoff, and many different possible updating schemes (Giacobini et al. 2006; Darabos et al. 2009), it is difficult to argue in favor of any updating scheme in the context of this article.

Why criticality?

Some of the advantages of the critical regime were already presented, namely the balance between stability and variance that are requirements for life (Kauffman 1993, 2000) and computation (Langton 1990; Crutchfield 1994). In addition, the critical regime is also advantageous for adaptability, evolvability, and robustness.

Adaptability can be understood as the ability of a system to produce advantageous changes in response to an environmental or internal state that will help the system to fulfill its goals (Gershenson 2007). Suppose that a system that is modeled by a RBN (such as a genetic regulatory network) is situated in an unpredictable environment. It is desirable that the system be able to adapt to changes in the environment. Does criticality increase adaptability? Not by itself, but it is useful. An ordered RBN will not be able to adapt so easily, because most changes will have no effect on the dynamics, so there will be no response to the environmental change. A chaotic RBN will pose the opposite difficulty: most changes will have a strong effect on the dynamics. Thus, it is highly probable that some of the functionality of the system will be lost. A balance is achieved by a critical RBN: changes can have an effect on the dynamics, but their propagation can be constrained, preserving most of the functionality of the system.

Evolvability is the ability of random variations to sometimes produce improvement (Wagner and Altenberg 1996). It can be seen as a particular type of adaptability, where changes occur from generation to generation. RBN evolution has already been explored (Stern 1999; Lemke et al. 2001). For the same reasons as those given for adaptability, evolvability will be higher for critical RBNs. Within an evolutionary context, however, it is also important to mention the advantages of criticality to scalability (Simon 1996), i.e., the ability to acquire novel functionalities. Since ordered RBNs have restricted dynamics and interactions, they cannot integrate novel functions too easily. Chaotic RBNs are also problematic, since novel functions most probably change the existing functionality. Critical RBNs are scalable, since novel functions can be integrated without altering existing functionalities. Intuitively, modularity promotes scalability in the most straightforward way, although other methods (i.e., critical RBNs without modularity) also allow for scalability.

A system is robust if it continues to function in the face of perturbations (Wagner 2005; Jen 2005). Robustness is a desirable property to complement adaptability and evolvability, since changes in the environment (perturbations) can damage or destroy a system before it can adapt or evolve. It is clear that evolution is not possible without robustness. Chaotic RBNs are not robust, since small perturbations produce drastic changes. Ordered RBNs are very robust, since they can resist most perturbations without producing changes, and when changes are produced, they do not propagate. However, ordered RBNs do not offer rich dynamics. Critical RBNs offer both advantages: rich dynamics and robustness, although their robustness is not as high as that of ordered RBNs. Note that the most robust RBNs are those without dynamics, e.g., with p = 1.
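The remark about p = 1 can be made concrete with the annealed approximation (Derrida and Pomeau 1986; Luque and Solé 1997b). There, the expected number of nodes affected by flipping a single node is 2p(1 − p)K, and the phase transition lies where this quantity equals 1. The Python sketch below assumes that p denotes the probability that a lookup-table entry is 1 and that K is the number of inputs per node. The function names are hypothetical, and the formula is a mean-field estimate, not an exact result for finite networks.

```python
def sensitivity(k, p=0.5):
    """Annealed-approximation estimate of how many nodes change, on
    average, one step after a single node is flipped: 2 p (1 - p) K."""
    return 2.0 * p * (1.0 - p) * k


def regime(k, p=0.5, tol=1e-9):
    """Classify a (K, p) pair by comparing its sensitivity with 1."""
    s = sensitivity(k, p)
    if abs(s - 1.0) <= tol:
        return "critical"
    return "ordered" if s < 1.0 else "chaotic"


if __name__ == "__main__":
    for k, p in [(1, 0.5), (2, 0.5), (5, 0.5), (5, 1.0)]:
        print(k, p, sensitivity(k, p), regime(k, p))
```

With p = 1, every lookup table is constant, so the estimated sensitivity is zero for any K: perturbations cannot propagate, but the network also has no dynamics to speak of.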

Topology and modularity seem to be more relevant for adaptability and evolvability, while redundancy and degeneracy seem to be more relevant for robustness. However, evolvability and robustness are interrelated properties (Yu and Miller 2001; Ebner et al. 2002; Wagner 2005b), both of them desirable in natural and artificial systems.

It has been argued by Riegler (2008) that canalization is indispensable for the evolvability of complexity.

During the development of organisms, it seems that the “perfect” balance between adaptability and robustness changes with age, i.e., embryos are more plastic than adults (Neuman 2008, Chap. 13). Silencing seems to be a method used by organisms to tune this tradeoff, although other methods might be involved in this process as well.

Conclusions

This article described RBNs as self-organizing systems. Given the advantages of the critical regime of RBNs, different methods to guide the self-organization of RBNs toward criticality were reviewed.

One can ask: which comes first, criticality or some of the methods that promote it? Do they always come hand in hand? It seems not, since criticality can be present without canalizing functions, modularity, redundancy, degeneracy, or scale-free topologies. However, these properties facilitate (guide) criticality. Therefore, there is a selective pressure in favor of these properties. Which pressures have actually guided genetic regulatory networks toward criticality (Balleza et al. 2008) is an open question. Which of the methods presented have actually been exploited by natural selection is another open question, although there is evidence of several of them at play. Yet another open question is how the different methods are related. This question actually comprises a whole set of questions, relevant for networks in general, e.g., how are scale-free, small-world, and modular topologies related? Is there an advantage in having combinations of them, e.g., a scale-free modular topology, over only one of them? Do Apollonian networks (Andrade et al. 2005) offer a “maximal” criticality? What would be theoretically the maximal criticality possible for a given family of RBNs? When is redundancy or degeneracy preferable? What are the differences and advantages of critical RBNs produced with one or several of the presented methods? How are different methods related to adaptability, evolvability, and robustness? What is the proper balance between evolvability and robustness?

This long list of relevant questions, which could easily continue growing, should motivate researchers to continue exploring RBNs, their self-organization, and methods for guiding it. The answers should be relevant for the scientific study of networks, artificial life, and engineering.

References

Aldana M (2003) Boolean dynamics of networks with scale-free topology. Physica D 185(1):45–66. doi:10.1016/S0167-2789(03)00174-X

Aldana M, Cluzel P (2003) A natural class of robust networks. Proc Natl Acad Sci USA 100(15):8710–8714. doi:10.1073/pnas.1536783100. http://www.pnas.org/content/100/15/8710.abstract, http://www.pnas.org/content/100/15/8710.full.pdf+html

Aldana-González M, Coppersmith S, Kadanoff LP (2003) Boolean dynamics with random couplings. In: Kaplan E, Marsden JE, Sreenivasan KR (eds) Perspectives and problems in nonlinear science. A celebratory volume in honor of Lawrence Sirovich. Springer Applied Mathematical Sciences Series. http://www.fis.unam.mx/%7Emax/PAPERS/nkreview.pdf

Andrade JS, Herrmann HJ, Andrade RFS, da Silva LR (2005) Apollonian networks: simultaneously scale-free, small world, Euclidean, space filling, and with matching graphs. Phys Rev Lett 94(1):018702. doi:10.1103/PhysRevLett.94.018702

Ashby WR (1947) Principles of the self-organizing dynamic system. J Gen Psychol 37:125–128

Ashby WR (1956) An introduction to cybernetics. Chapman & Hall, London. http://pcp.vub.ac.be/ASHBBOOK.html

Ashby WR (1962) Principles of the self-organizing system. In: Foerster HV, Zopf GW Jr (eds) Principles of self-organization. Pergamon, Oxford, pp 255–278

Balleza E, Alvarez-Buylla ER, Chaos A, Kauffman S, Shmulevich I, Aldana M (2008) Critical dynamics in genetic regulatory networks: examples from four kingdoms. PLoS ONE 3(6):e2456. doi:10.1371/journal.pone.0002456

Bullock S, Barnett L, Di Paolo E (2010) Spatial embedding and the structure of complex networks. Complexity, Early Access. doi:10.1002/cplx.20338

Calabretta R, Nolfi S, Parisi D, Wagner GP (1998) Emergence of functional modularity in robots. In: Pfeifer R, Blumberg B, Meyer JA, Wilson S (eds) From animals to animats 5, MIT Press, pp 497–504. http://tinyurl.com/2fubay9

Callebaut W, Rasskin-Gutman D (2005) Modularity: understanding the development and evolution of natural complex systems. MIT Press

Camazine S, Deneubourg JL, Franks NR, Sneyd J, Theraulaz G, Bonabeau E (2003) Self-organization in biological systems. Princeton University Press. http://www.pupress.princeton.edu/titles/7104.html

Crutchfield J (1994) Critical computation, phase transitions, and hierarchical learning. In: Yamaguti M (ed) Towards the harnessing of chaos. Elsevier, Amsterdam, pp 93–10

Darabos C, Giacobini M, Tomassini M (2009) Transient perturbations on scale-free Boolean networks with topology driven dynamics. In: Artificial life: tenth European conference on artificial life, ECAL2009. Lecture Notes in Artificial Intelligence. Springer, Budapest

Derrida B, Pomeau Y (1986) Random networks of automata: a simple annealed approximation. Europhys Lett 1(2):45–49

Ebner M, Shackleton M, Shipman R (2002) How neutral networks influence evolvability. Complexity 7(2):19–33. doi:10.1002/cplx.10021

Edelman GM, Gally JA (2001) Degeneracy and complexity in biological systems. Proc Natl Acad Sci USA 98(24):13763–13768. doi:10.1073/pnas.231499798. http://www.pnas.org/content/98/24/13763.abstract. http://www.pnas.org/content/98/24/13763.full.pdf+html

Fernández P, Solé R (2004) The role of computation in complex regulatory networks. In: Koonin EV, Wolf YI, Karev GP (eds) Power laws, scale-free networks and genome biology. Landes Bioscience. http://arxiv.org/abs/q-bio.MN/0311012

Gershenson C (2002) Classification of random Boolean networks. In: Standish RK, Bedau MA, Abbass HA (eds) Artificial life VIII: proceedings of the eighth international conference on artificial life. MIT Press, pp 1–8. http://alife8.alife.org/proceedings/sub67.pdf

Gershenson C (2004a) Introduction to random Boolean networks. In: Bedau M, Husbands P, Hutton T, Kumar S, Suzuki H (eds) Workshop and tutorial proceedings, ninth international conference on the simulation and synthesis of living systems (ALife IX), Boston, MA, pp 160–173. http://uk.arxiv.org/abs/nlin.AO/0408006

Gershenson C (2004b) Updating schemes in random Boolean networks: Do they really matter? In: Pollack J, Bedau M, Husbands P, Ikegami T, Watson RA (eds) Artificial life IX proceedings of the ninth international conference on the simulation and synthesis of living systems. MIT Press, pp 238–243. http://uk.arxiv.org/abs/nlin.AO/0402006

Gershenson C (2005) RBNLab. http://rbn.sourceforge.net. Accessed 25 Nov 2011

Gershenson C (2007) Design and control of self-organizing systems. CopIt Arxives, Mexico. http://tinyurl.com/DCSOS2007

Gershenson C, Heylighen F (2003) When can we call a system self-organizing? In: Banzhaf W, Christaller T, Dittrich P, Kim JT, Ziegler J (eds) Advances in artificial life, 7th European conference, ECAL 2003 LNAI 2801. Springer, Berlin, pp 606–614. http://uk.arxiv.org/abs/nlin.AO/0303020

Gershenson C, Broekaert J, Aerts D (2003) Contextual random Boolean networks. In: Banzhaf W, Christaller T, Dittrich P, Kim JT, Ziegler J (eds) Advances in artificial life, 7th European conference, ECAL 2003 LNAI 2801. Springer-Verlag, pp 615–624. http://uk.arxiv.org/abs/nlin.AO/0303021

Gershenson C, Kauffman SA, Shmulevich I (2006) The role of redundancy in the robustness of random Boolean networks. In: Rocha LM, Yaeger LS, Bedau MA, Floreano D, Goldstone RL, Vespignani A (eds) Artificial life X, proceedings of the tenth international conference on the simulation and synthesis of living systems. MIT Press, pp 35–42. http://uk.arxiv.org/abs/nlin.AO/0511018

Giacobini M, Tomassini M, De Los Rios P, Pestelacci E (2006) Dynamics of scale-free semi-synchronous Boolean networks. In: Rocha LM, Yaeger LS, Bedau MA, Floreano D, Goldstone RL, Vespignani A (eds) Artificial life X, proceedings of the tenth international conference on the simulation and synthesis of living systems. MIT Press, pp 1–7

Harris SE, Sawhill BK, Wuensche A, Kauffman S (2002) A model of transcriptional regulatory networks based on biases in the observed regulation rules. Complexity 7(4):23–40. doi:10.1002/cplx.10022

Harvey I, Bossomaier T (1997) Time out of joint: Attractors in asynchronous random Boolean networks. In: Husbands P, Harvey I (eds) Proceedings of the fourth European conference on artificial life (ECAL97). MIT Press, pp 67–75. http://tinyurl.com/yxrxbp

Heylighen F (2003) The science of self-organization and adaptivity. In: Kiel LD (ed) The encyclopedia of life support systems. EOLSS Publishers, Oxford. http://pcp.vub.ac.be/Papers/EOLSS-Self-Organiz.pdf

Huang S, Ingber DE (2000) Shape-dependent control of cell growth, differentiation, and apoptosis: switching between attractors in cell regulatory networks. Exp Cell Res 261:91–103

Jen E (ed) (2005) Robust design: a repertoire of biological, ecological, and engineering case studies. Santa Fe Institute Studies on the Sciences of Complexity, Oxford University Press. http://tinyurl.com/swtlz

Kauffman SA (1969) Metabolic stability and epigenesis in randomly constructed genetic nets. J Theor Biol 22:437–467

Kauffman SA (1993) The origins of order. Oxford University Press

Kauffman SA (2000) Investigations. Oxford University Press

Kauffman S, Peterson C, Samuelsson B, Troein C (2003) Random Boolean network models and the yeast transcriptional network. Proc Natl Acad Sci USA 100(25):14796–14799. doi:10.1073/pnas.2036429100. http://www.pnas.org/content/100/25/14796.abstract, http://www.pnas.org/content/100/25/14796.full.pdf+html

Kauffman S, Peterson C, Samuelsson B, Troein C (2004) Genetic networks with canalyzing Boolean rules are always stable. Proc Natl Acad Sci USA 101(49):17102–17107. doi:10.1073/pnas.0407783101. http://www.pnas.org/content/101/49/17102.abstract, http://www.pnas.org/content/101/49/17102.full.pdf+html

Kimura M (1983) The neutral theory of molecular evolution. Cambridge University Press, Cambridge

Langton C (1990) Computation at the edge of chaos: phase transitions and emergent computation. Physica D 42:12–37

Lemke N, Mombach JCM, Bodmann BEJ (2001) A numerical investigation of adaptation in populations of random Boolean networks. Physica A 301(1–4):589–600. doi:10.1016/S0378-4371(01)00372-7

Lizier J, Prokopenko M, Zomaya A (2008) The information dynamics of phase transitions in random Boolean networks. In: Bullock S, Noble J, Watson R, Bedau MA (eds) Artificial life XI—proceedings of the eleventh international conference on the simulation and synthesis of living systems. MIT Press, Cambridge, pp 374–381. http://tinyurl.com/3xzx9fr

Lizier J, Pritam S, Prokopenko M (2011) Information dynamics in small-world Boolean networks. Artif Life 17(4):293–314. doi:10.1162/artl_a_00040

Luhmann N (1995) Social systems. Stanford University Press. http://tinyurl.com/28exa5f

Luque B, Solé RV (1997a) Controlling chaos in Kauffman networks. Europhys Lett 37(9):597–602

Luque B, Solé RV (1997b) Phase transitions in random networks: simple analytic determination of critical points. Phys Rev E 55(1):257–260. http://tinyurl.com/y8pk9y

Luque B, Solé RV (2000) Lyapunov exponents in random Boolean networks. Physica A 284:33–45. http://tinyurl.com/trnd4

Marques-Pita M, Mitchell M, Rocha LM (2008) The role of conceptual structure in designing cellular automata to perform collective computation. In: Calude CS, Costa JF, Freund R, Oswald M, Rozenberg G (eds) UC, Lecture notes in computer science, vol 5204. Springer, pp 146–163

Mitchell M, Hraber PT, Crutchfield JP (1993) Revisiting the edge of chaos: evolving cellular automata to perform computations. Complex Syst 7:89–130. http://www.cs.pdx.edu/mm/rev-edge.pdf

Munteanu A, Solé RV (2008) Neutrality and robustness in evo-devo: emergence of lateral inhibition. PLoS Comput Biol 4(11):e1000226. doi:10.1371/journal.pcbi.1000226

Neuman Y (2008) Reviving the living: meaning making in living systems, studies in multidisciplinarity, vol 6. Elsevier, Amsterdam

Nicolis G, Prigogine I (1977) Self-organization in non-equilibrium systems: from dissipative structures to order through fluctuations. Wiley

Oikonomou P, Cluzel P (2006) Effects of topology on network evolution. Nat Phys 2:532–536. doi:10.1038/nphys359

Oosawa C, Savageau MA (2002) Effects of alternative connectivity on behavior of randomly constructed Boolean networks. Physica D 170:143–161

Poblanno-Balp R, Gershenson C (2010) Modular random Boolean networks. In: Fellermann H, Dörr M, Hanczyc MM, Laursen LL, Maurer S, Merkle D, Monnard PA, Støy K, Rasmussen S (eds) Artificial life XII proceedings of the twelfth international conference on the synthesis and simulation of living systems. MIT Press, Odense, pp 303–304. http://mitpress.mit.edu/books/chapters/0262290758chap56.pdf

Prokopenko M (2009) Guided self-organization. HFSP J 3(5):287–289. doi:10.2976/1.3233933

Prokopenko M, Boschetti F, Ryan A (2009) An information-theoretic primer on complexity, self-organisation and emergence. Complexity 15(1):11–28. doi:10.1002/cplx.20249

Riegler A (2008) Natural or internal selection? The case of canalization in complex evolutionary systems. Artif Life 14(3):345–362. doi:10.1162/artl.2008.14.3.14308

Serra R, Villani M, Agostini L (2003) On the dynamics of scale-free Boolean networks. In: Neural nets. Lecture notes in computer science, vol 2859. Springer, Berlin, pp 43–49. doi:10.1007/978-3-540-45216-4_4

Serra R, Villani M, Semeria A (2004) Genetic network models and statistical properties of gene expression data in knock-out experiments. J Theor Biol 227(1):149–157. doi:10.1016/j.jtbi.2003.10.018

Shmulevich I, Kauffman SA (2004) Activities and sensitivities in Boolean network models. Phys Rev Lett 93(4):048701. doi:10.1103/PhysRevLett.93.048701

Simon HA (1996) The sciences of the artificial, 3rd edn. MIT Press

Skår J, Coveney PV (eds) (2003) Self-organization: the quest for the origin and evolution of structure. Philos Trans R Soc Lond A 361(1807), proceedings of the 2002 Nobel symposium on self-organization

Stauffer D (1987) On forcing functions in Kauffman’s random Boolean networks. J Stat Phys 46(3):789–794. doi:10.1007/BF01013386

Steels L (1993) Building agents out of autonomous behavior systems. In: Steels L, Brooks RA (eds) The artificial life route to artificial intelligence: building embodied situated agents. Lawrence Erlbaum

Stern MD (1999) Emergence of homeostasis and noise imprinting in an evolution model. Proc Natl Acad Sci USA 96:10746–10751

Szejka A, Drossel B (2007) Evolution of canalizing Boolean networks. Eur Phys J B 56(4):373–380. doi:10.1140/epjb/e2007-00135-2

Thompson A (1998) Hardware evolution: automatic design of electronic circuits in reconfigurable hardware by artificial evolution. Distinguished dissertation series, Springer-Verlag

Turcotte DL, Rundle JB (2002) Self-organized complexity in the physical, biological, and social sciences. Proc Natl Acad Sci USA 99(Suppl 1):2463–2465. doi:10.1073/pnas.012579399

van Nimwegen E, Crutchfield JP, Huynen M (1999) Neutral evolution of mutational robustness. Proc Natl Acad Sci USA 96(17):9716–9720. http://www.pnas.org/content/96/17/9716.abstract, http://www.pnas.org/content/96/17/9716.full.pdf+html

von Foerster H (1960) On self-organizing systems and their environments. In: Yovitts MC, Cameron S (eds) Self-organizing systems. Pergamon, New York, pp 31–50

von Neumann J (1966) The theory of self-reproducing automata. Burks AW (ed). University of Illinois Press

Wagner A (2005a) Distributed robustness versus redundancy as causes of mutational robustness. BioEssays 27(2):176–188. doi:10.1002/bies.20170

Wagner A (2005b) Robustness and evolvability in living systems. Princeton University Press, Princeton, NJ. http://www.pupress.princeton.edu/titles/8002.html

Wagner G, Altenberg L (1996) Complex adaptations and the evolution of evolvability. Evolution 50(3):967–976

Wang XR, Lizier J, Prokopenko M (2010) A Fisher information study of phase transitions in random Boolean networks. In: Fellermann H, Dörr M, Hanczyc MM, Laursen LL, Maurer S, Merkle D, Monnard PA, Støy K, Rasmussen S (eds) Artificial life XII proceedings of the twelfth international conference on the synthesis and simulation of living systems. MIT Press, Odense, pp 305–312. http://tinyurl.com/37qxgtn

Watson RA, Buckley CL, Mills R (2010) Optimisation in “self-modelling” complex adaptive systems. Complexity. http://eprints.ecs.soton.ac.uk/21051/

Watts D, Strogatz S (1998) Collective dynamics of ‘small-world’ networks. Nature 393(6684):440–442

Whitacre JM, Bender A (2010) Degeneracy: a design principle for robustness and evolvability. J Theor Biol 263(1):143–153. doi:10.1016/j.jtbi.2009.11.008

Wiener N (1948) Cybernetics; or control and communication in the animal and the machine. Wiley, New York

Wolfram S (1986) Theory and application of cellular automata. World Scientific

Wolfram S (2002) A new kind of science. Wolfram Media. http://www.wolframscience.com/thebook.html

Wuensche A (1998) Discrete dynamical networks and their attractor basins. In: Standish R, Henry B, Watt S, Marks R, Stocker R, Green D, Keen S, Bossomaier T (eds) Complex systems ’98. University of New South Wales, Sydney, pp 3–21. http://tinyurl.com/y6xh35

Wuensche A (1999) Classifying cellular automata automatically: finding gliders, filtering, and relating space-time patterns, attractor basins, and the Z parameter. Complexity 4(3):47–66. http://tinyurl.com/y7pss7

Yu T, Miller J (2001) Neutrality and the evolvability of Boolean function landscape. In: Genetic programming, vol 2038. LNCS, Springer, pp 204–217. doi:10.1007/3-540-45355-5_16

Acknowledgments

This study was partially supported by SNI membership 47907 of CONACyT, México. The author would like to thank the guest editors of this special issue and anonymous referees for useful comments.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Gershenson, C. Guiding the self-organization of random Boolean networks. Theory Biosci. 131, 181–191 (2012). https://doi.org/10.1007/s12064-011-0144-x

DOI: https://doi.org/10.1007/s12064-011-0144-x