Abstract

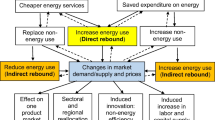

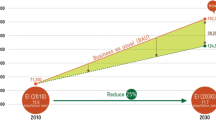

Today, many countries promote energy efficiency (EE) measures as part of their energy strategy. The goals of these actions include decoupling economic growth from energy consumption, reducing dependence on fossil fuels as a primary energy source, and reducing greenhouse gas emissions. Measuring the direct, indirect, and co-benefit effects of EE programs is crucial. However, in the current literature and practice, assessments of EE programs have focused on direct impacts (i.e., impacts whose energy savings can be directly and instantaneously quantified) because they are objective and easy to place in a cost-effectiveness framework. Moreover, several assessment methodologies that study the indirect effects of EE programs only identify the effects and quantify a proxy for them, such as the number of activities developed or the number of people attending EE training or dissemination events. A few existing methodologies correctly assess the indirect effects of EE measures, but they often require a significant budget. We propose a new methodology to assess the impacts of EE programs, focusing especially on indirect effects (i.e., long-term effects on energy use), that is suitable for low-budget programs. We focus on those indirect effects capable of mobilizing long-term energy savings through transformations in energy markets; that is, we attempt to measure the potential future energy savings that are sustainable in the long term due to a behavioral transformation of energy markets. To measure these indirect effects, we use three axes: presence, valuation, and mobilizing capacity. This methodology was applied to 12 EE programs (implemented during 2011 and 2012 in Chile) to obtain their indirect impact assessment.

Notes

The cost-effectiveness assessment method used by the NEEA also proposes an assessment method for the indirect effects of EE programs. For indirect impacts of EE initiatives, such as education/training skills or knowledge transfer, this methodology assesses and reports not only the number of people trained but also comparative indicators between participants and nonparticipants of programs (NEEA 2015). These comparative indicators incorporate, for instance, EE actions reported by trained people, allowing a forecast of the market progress evaluation. However, most of the time, NEEA’s methodology measures the indirect effects of EE initiatives by just counting the number of participants in these initiatives, although a more comprehensive assessment methodology is available (NEEA 2014). This is particularly true of small EE programs, where establishing comparative indicators between participants and nonparticipants (such as identifying and classifying EE actions reported by trained people) is too costly relative to the program's size (NEEA 2015).

The IG may be formed by participants or beneficiaries of programs, representatives of institutions or the government, etc., depending on the objective of the evaluation plan and the use of the information obtained therein.

A common problem for evaluators is that measurements vary within each assessment, making it difficult to establish long-term trends. Accordingly, it may be useful to report common metrics across evaluations of different programs, so that program managers and evaluators can easily compare the performance of programs involving similar activities.

References

Acaravci, A., & Ozturk, I. (2010). On the relationship between energy consumption, CO2 emissions and economic growth in Europe. Energy, 35, 5412–5420.

Allcott, H., & Greenstone, M. (2012). Is there an energy efficiency gap? The Journal of Economic Perspectives, 26, 3–28.

Alvial, C., Garrido, N., Jiménez, G., Reyes, L., & Palma, R. (2011). A methodology for community engagement in the introduction of renewable based smart microgrid. Energy for Sustainable Development, 15, 314–323.

Baillargeon, P., Schmitt, B., Michaud, N., & Megdal, L. (2012). Evaluating the market transformation impacts of a DSM program in the province of Quebec. Energy Efficiency, 5, 97–107.

Blumstein, C., Goldstone, S., & Lutzenhiser, L. (2000). A theory-based approach to market transformation. Energy Policy, 28(2), 137–144.

Blumstein, C. (2010). Program evaluation and incentives for administrators of energy-efficiency programs: can evaluation solve the principal/agent problem? Center for the Study of Energy Markets. Berkeley: University of California.

Boonekamp, P. G. M. (2006). Evaluation of methods used to determine realized energy savings. Energy Policy, 34, 3977–3992.

Carpio, C., & Coviello, M. F. (2013). Eficiencia Energética en América Latina y el Caribe: Avances y Desafíos del Último Quinquenio. http://www.cepal.org/publicaciones/xml/8/51608/Eficienciaenergetica.pdf. Accessed 30 March 2015.

Chilean Energy Efficiency Agency, AChEE. (2013). Development and implementation of a methodology for the measurement and evaluation of the impact of the programs of the AChEE in industry, mining, transportation and commerce sectors. Report for the AChEE. Available upon request to the AChEE.

Chilean Budget Direction, DIPRES. (2009). Annex methodology for impact evaluation. http://www.dipres.gob.cl/572/articles-37416_doc_pdf.pdf. Accessed 15 March 2013.

Chilean Ministry of Energy. (2013). Informe final programa agencia chilena de eficiencia energética. http://www.dipres.gob.cl/595/articles-109115_doc_pdf.pdf. Accessed 28 March 2015.

Cui, Q., Kuang, H., Wu, C., & Li, Y. (2014). The changing trend and influencing factors of energy efficiency: the case of nine countries. Energy, 64, 1026–1034.

Delina, L. (2012). Coherence in energy efficiency governance. Energy for Sustainable Development, 16, 493–499.

Efficiency Valuation Organization, EVO. (2012). International Performance Measurement and Verification Protocol. IPMVP Volume I. http://www.evoworld.org/index.php?option=com_docman&task=doc_details&gid=1378&Itemid=79&lang=es. Accessed 15 March 2013.

Harmelink, M., Nilsson, L., & Harmsen, R. (2008). Theory-based policy evaluation of 20 energy efficiency instruments. Energy Efficiency, 1, 131–148.

Henriksson, E., & Söderholm, P. (2009). The cost-effectiveness of voluntary energy efficiency programs. Energy for Sustainable Development, 13, 235–243.

Gabardino, S., & Holland, J. (2009). Quantitative and qualitative methods in impact evaluation and measuring results. Governance and Social Development Resource Centre. http://www.gsdrc.org/docs/open/eirs4.pdf. Accessed 10 March 2013.

Latin American and Caribbean Institute for Economic and Social Planning, ILPES. (2005). Methodology of the logical framework for planning, monitoring and evaluation of projects and programs. Manual No. 42. http://www.eclac.org/publicaciones/xml/9/22239/manual42.pdf. Accessed 10 March 2013.

Palmer, K., Grausz, S., Beasley, B., & Brennan, T. (2013). Putting a floor on energy savings: comparing state energy efficiency resource. Utilities Policy, 25, 43–57.

Pardo, C. (2009). Energy efficiency developments in the manufacturing industries of Germany and Colombia, 1998–2005. Energy for Sustainable Development, 13, 189–201.

Pelenur, M., & Heather, J. (2012). Closing the energy efficiency gap: a study linking demographics with barriers to adopting energy efficiency measures in the home. Energy, 47, 348–357.

Poveda, M. (2007). Eficiencia Energética: Recurso no aprovechado. Organización Latinoamericana de Energía (OLADE). http://www10.iadb.org/intal/intalcdi/PE/2009/02998.pdf. Accessed 20 March 2015.

Programa de Estudios e Investigaciones en Energía, PRIEN. (2003). Estudio de las relaciones entre la eficiencia energética y el desarrollo económico. http://finanzascarbono.org/comunidad/mod/file/download.php?file_guid=43345. Accessed 20 March 2015.

The California Public Utilities Commission, CPUC. (2004). The California evaluation framework. http://calmac.org/publications/california_evaluation_framework_june_2004.pdf. Accessed 10 March 2013.

The California Public Utilities Commission, CPUC. (2006). California energy efficiency evaluation protocols: technical, methodological, and reporting requirements for evaluation professionals. http://www.calmac.org/events/EvaluatorsProtocols_Final_AdoptedviaRuling_06-19-2006.pdf. Accessed 10 March 2013.

Togeby, M., Dyhr-Mikkelsen, K., Larsen, A., & Bach, P. (2012). A Danish case: portfolio evaluation and its impact on energy efficiency policy. Energy Efficiency, 5, 37–49.

U.S. Department of Energy, DOE. (2007a). Model energy efficiency program impact evaluation guide. http://www.epa.gov/cleanenergy/documents/suca/evaluation_guide.pdf. Accessed 10 March 2013.

U.S. Department of Energy, DOE. (2007b). Impact evaluation framework for technology deployment programs. http://www.cee1.org/eval/impact_framework_tech_deploy_2007_main.pdf. Accessed 10 March 2013.

US Northwest Energy Efficiency Alliance, NEEA. (2015). Q4 2014 Quarterly performance report. http://neea.org/docs/default-source/quarterly-reports/neea-q4-2014-quarterly-report_final.pdf?sfvrsn=4. Accessed 16 March 2015.

US Northwest Energy Efficiency Alliance, NEEA. (2014). Strategic plan 2015–2019. http://neea.org/docs/default-source/default-document-library/neea-2015-2019-strategic-plan-board-approved.pdf?sfvrsn=2. Accessed 16 March 2015.

Van Den Wymelenberg, K., Brown, G. Z., Burpee, H., Djunaedy, E., et al. (2013). Evaluating direct energy savings and market transformation effects: a decade of technical design assistance in the northwestern USA. Energy Policy, 52, 342–353.

Vera, S., Bernal, F., & Sauma, E. (2013). Do distribution companies lose money with an electricity flexible tariff?: A review of the Chilean case. Energy, 55, 295–303.

Vine, E. (2008). Strategies and policies for improving energy efficiency programs: closing the loop between evaluation and implementation. Energy Policy, 36, 3872–3881.

Vine, E., Prahl, R., Meyers, S., & Turiel, I. (2010). An approach for evaluating the market effects of energy. Energy Efficiency, 3, 257–266.

Vine, E., Hall, N., Keating, K., Kushler, M., & Prahl, R. (2012). Emerging issues in the evaluation of energy-efficiency programs: the US experience. Energy Efficiency, 5, 5–17.

Vine, E., Saxonis, W., Peters, J., Tannenbaum, B., & Wirtshafter, B. (2013a). Training the next generation of energy efficiency evaluators. Energy Efficiency, 6, 293–303.

Vine, E., Hall, N., Keating, K., Kushler, M., & Prahl, R. (2013b). Emerging evaluation issues: persistence, behavior, rebound, and policy. Energy Efficiency, 6, 329–339.

Vine, E., Sullivan, M., Lutzenhiser, L., Blumstein, C., & Miller, B. (2014). Experimentation and the evaluation of energy efficiency programs. Energy Efficiency, 7(4), 627–640. doi:10.1007/s12053-013-9244-4.

Warr, B. S., & Ayres, R. U. (2010). Evidence of causality between the quantity and quality of energy consumption and economic growth. Energy, 35, 1688–1693.

World Energy Council, WEC. (2010). Energy efficiency: a recipe for success. http://www.worldenergy.org/publications/2010/energy-efficiency-a-recipe-for-success. Accessed 20 March 2015.

Worrell, E., Laitner, J., Ruth, M., & Finman, H. (2003). Productivity benefits of industrial energy efficiency measures. Energy, 28, 1081–1098.

Zhou, P., & Ang, B. W. (2008). Linear programming models for measuring economy-wide energy efficiency performance. Energy Policy, 36, 2911–2916.

Acknowledgments

The work reported in this paper was partially supported by the AChEE under a grant associated with the project reported in Chilean Energy Efficiency Agency (2013). Sonia Vera has been partially supported by a doctoral scholarship from the National Committee of Scientific and Technological Research (CONICYT, for its acronym in Spanish), CONICYT-PCHA/Doctorado Nacional/2013. The work reported in this paper is based on the project reported in Chilean Energy Efficiency Agency (2013), which was required by the AChEE authorities. The ideas presented in this paper are the sole responsibility of the authors and do not represent the position or thoughts of the AChEE.

About this article

Cite this article

Sauma, E., Vera, S., Osorio, K. et al. Design of a methodology for impact assessment of energy efficiency programs: measuring indirect effects in the Chilean case. Energy Efficiency 9, 699–721 (2016). https://doi.org/10.1007/s12053-015-9380-0

DOI: https://doi.org/10.1007/s12053-015-9380-0