Abstract

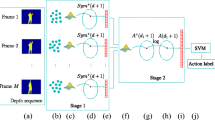

Recent technological advances have produced powerful devices with high processing and storage capabilities. Video cameras are now used for a variety of tasks in many settings, such as banks, schools, stores, public streets and industry. Camera technology has steadily improved, achieving higher resolutions and acquisition frame rates. Nevertheless, most video analysis tasks are still performed by human operators, whose performance may be affected by fatigue and stress. To address this problem, this work proposes and evaluates a method for action identification in videos through a new descriptor composed of autonomous fragments applied to a multilevel prediction scheme. The method is fast and achieves over 90% accuracy on well-known public data sets. The developed system enables real-time action analysis with current video cameras, making it a useful and powerful tool for surveillance purposes.

Acknowledgments

The authors are thankful to FAPESP (Grants #2011/22749-8 and #2012/20738-1) and CNPq (Grant #307113/2012-4) for their financial support.

About this article

Cite this article

de Alcantara, M.F., Moreira, T.P., Pedrini, H. et al. Action identification using a descriptor with autonomous fragments in a multilevel prediction scheme. SIViP 11, 325–332 (2017). https://doi.org/10.1007/s11760-016-0940-3

DOI: https://doi.org/10.1007/s11760-016-0940-3