Abstract

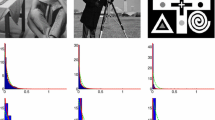

We propose a proximal approach to deal with a class of convex variational problems involving nonlinear constraints. A large family of constraints, proven to be effective in the solution of inverse problems, can be expressed as the lower-level set of a sum of convex functions evaluated over different blocks of the linearly transformed signal. For such constraints, the associated projection operator generally does not have a simple form. We circumvent this difficulty by splitting the lower-level set into as many epigraphs as functions involved in the sum. In particular, we focus on constraints involving \(\varvec{\ell }_q\)-norms with \(q\ge 1\), distance functions to a convex set, and \(\varvec{\ell }_{1,p}\)-norms with \(p\in \{2,{+\infty }\}\). The proposed approach is validated in the context of image restoration by making use of constraints based on Non-Local Total Variation. Experiments show that our method leads to significant improvements in term of convergence speed over existing algorithms for solving similar constrained problems.

Similar content being viewed by others

Notes

Note that the linear inequality over the auxiliary vector \(\zeta \) can be also replaced by an equality, even though it makes little difference in our approach.

\(D\) thus corresponds to \(K \le N\) lines of the identity \(N\times N\) matrix.

Methods for building \(\mathcal {N}_\ell \) and setting the associated weights are described in [59–61]. In our experiments, we use the \(\varvec{\ell }_{q,p}\)-TV regularization to obtain an estimate of \(x\), which we subsequently use to compute the weights through the self-similarity measure proposed in [61].

Note that the \(\varvec{\ell }_{1,2}\)-norm performs better on grayscale images.

By considering \(u_0\in \hbox {dom}\varphi \) and \(\xi _0 >\varphi (u_0)\), the required qualification condition is obviously satisfied.

References

Combettes, P.L., Pesquet, J.-C.: Proximal splitting methods in signal processing. In: Bauschke, H.H., Burachik, R.S., Combettes, P.L., Elser, V., Luke, D.R., Wolkowicz, H. (eds.) Fixed-Point Algorithms for Inverse Problems in Science and Engineering, pp. 185–212. Springer, New York (2011)

Parikh, N., Boyd, S.: Proximal algorithms. Found. Trends Optim. 1(3), 123–231 (2013)

Dupé, F.-X., Fadili, M.J., Starck, J.-L.: A proximal iteration for deconvolving Poisson noisy images using sparse representations. IEEE Trans. Image Process. 18(2), 310–321 (2009)

Aujol, J.-F., Gilboa, G., Chan, T., Osher, S.: Structure-texture image decomposition—modeling, algorithms, and parameter selection. Int. J. Comput. Vis. 67(1), 111–136 (2006)

Brice no-Arias, L.M., Combettes, P.L., Pesquet, J.-C., Pustelnik, N.: Proximal algorithms for multicomponent image recovery problems. J. Math. Imaging Vis. 41(1), 3–22 (Sep.2011)

Theodoridis, S., Slavakis, K., Yamada, I.: Adaptive learning in a world of projections. IEEE Signal Process. Mag. 28(1), 97–123 (2011)

Chaux, C., El Gheche, M., Farah, J., Pesquet, J.-C., Pesquet-Popescu, B.: A parallel proximal splitting method for disparity estimation from multicomponent images under illumination variation. J. Math. Imaging Vis. 47(3), 167–178 (2013)

Mallat, S.: A Wavelet Tour of Signal Processing. Academic Press, San Diego (1997)

Jacques, L., Duval, L., Chaux, C., Peyré, G.: A panorama on multiscale geometric representations, intertwining spatial, directional and frequency selectivity. Signal Process. 91(12), 2699–2730 (2011)

Van Den Berg, E., Friedlander, M.P.: Probing the Pareto frontier for basis pursuit solutions. SIAM J. Sci. Comput. 31(2), 890–912 (2008)

Ciak, R., Shafei, B., Steidl, G.: Homogeneous penalizers and constraints in convex image restoration. J. Math. Imaging Vis. 47, 210–230 (2013)

Youla, D.C., Webb, H.: Image restoration by the method of convex projections. Part I—theory. IEEE Trans. Med. Imaging 1(2), 81–94 (1982)

Trussell, H.J., Civanlar, M.R.: The feasible solution in signal restoration. IEEE Trans. Acoust. Speech Signal Process. 32(2), 201–212 (1984)

Combettes, P.L.: Inconsistent signal feasibility problems: least-squares solutions in a product space. IEEE Trans. Signal Process. 42(11), 2955–2966 (1994)

Teuber, T., Steidl, G., Chan, R.H.: Minimization and parameter estimation for seminorm regularization models with I-divergence constraints. Inverse Probl. 29(3), 035007 (2013)

Stück, R., Burger, M., Hohage, T.: The iteratively regularized Gauss-Newton method with convex constraints and applications in 4Pi microscopy. Inverse Probl. 28(1), 015012 (2012)

Ono, S., Yamada I.: Poisson image restoration with likelihood constraint via hybrid steepest descent method. In: Proceedings of the International Conference on Acoustics, Speech and Signal Processing, pp. 5929–5933. Vancouver, Canada, May 2013

Harizanov, S., Pesquet, J.-C., Steidl, G.: Epigraphical projection for solving least squares anscombe transformed constrained optimization problems. Scale Space Var. Methods Comput. Vis. 7893, 125–136 (2013)

Ono, S., Yamada I.: Second-order total generalized variation constraint. In: Proceedings of the International Conference on Acoustics, Speech and Signal Processing, Florence, Italy, May 4–9 2014

Tofighi, M., Kose, K., Cetin, A. E.: Signal reconstruction framework based on Projections onto Epigraph Set of a Convex cost function (PESC) (2014) (preprint)

Daubechies, I., Defrise, M., De Mol, C.: An iterative thresholding algorithm for linear inverse problems with a sparsity constraint. Commun. Pure Appl. Math. 57(11), 1413–1457 (2004). http://arxiv.org/abs/1402.2088

Chaux, C., Combettes, P.L., Pesquet, J.-C., Wajs, V.R.: A variational formulation for frame-based inverse problems. Inverse Probl. 23(4), 1495–1518 (2007)

Combettes, P.L., Pesquet, J.-C.: A Douglas–Rachford splitting approach to nonsmooth convex variational signal recovery. IEEE J. Sel. Top. Signal Process. 1(4), 564–574 (2007)

Figueiredo, M., Nowak, R., Wright S.: Gradient projection for sparse reconstruction: application to compressed sensing and other inverse problems. IEEE J. Sel. Top. Signal Process. 5981(4), 586–598 (2007)

Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2(1), 183–202 (2009)

Fornasier, M., Schönlieb, C.-B.: Subspace correction methods for total variation and \(\ell _1\)-minimization. IMA J. Numer. Anal. 47(8), 3397–3428 (2009)

Steidl, G., Teuber, T.: Removing multiplicative noise by Douglas–Rachford splitting methods. J. Math. Imaging Vis. 36(3), 168–184 (2010)

Pesquet, J.-C., Pustelnik, N.: A parallel inertial proximal optimization method. Pac. J. Optim. 8(2), 273–305 (2012)

Esser, E., Zhang, X., Chan, T.: A general framework for a class of first order primal–dual algorithms for convex optimization in imaging science. SIAM J. Imaging Sci. 3(4), 1015–1046 (2010)

Chambolle, A., Pock, T.: A first-order primal–dual algorithm for convex problems with applications to imaging. J. Math. Imaging Vis. 40(1), 120–145 (2011)

Brice no-Arias, L.M., Combettes, P.L.: A monotone+ skew splitting model for composite monotone inclusions in duality. SIAM J. Optim. 21(4), 1230–1250 (2011)

Combettes, P.L., Pesquet, J.-C.: Primal–dual splitting algorithm for solving inclusions with mixtures of composite, Lipschitzian, and parallel-sum type monotone operators. Set-valued Var. Anal. 20(2), 307–330 (2012)

Vũ, B.C.: A splitting algorithm for dual monotone inclusions involving cocoercive operators. Adv. Comput. Math. 38(3), 667–681 (2013)

Condat, L.: A primal–dual splitting method for convex optimization involving Lipschitzian, proximable and linear composite terms. J. Optim. Theory Appl. 158(2), 460–479 (2012)

Chen, P., Huang, J., Zhang, X.: A primal–dual fixed point algorithm for convex separable minimization with applications to image restoration. Inverse Probl. 29(2), 025011 (2013)

Alghamdi, M.A., Alotaibi, A., Combettes, P.L., Shahzad, N.: A primal-dual method of partial inverses for composite inclusions. Optim. Lett. (2014). doi:10.1007/s11590-014-0734-x

Moreau, J.J.: Proximité et dualité dans un espace hilbertien. Bull. Soc. Math. Fr. 93, 273–299 (1965)

Bauschke, H. H., Combettes, P. L.: Convex Analysis and Monotone Operator Theory in Hilbert Spaces. Springer (2011)

Combettes, P.L.: A block-iterative surrogate constraint splitting method for quadratic signal recovery. IEEE Trans. Signal Process. 51(7), 1771–1782 (2003)

Kopsinis, Y., Slavakis, K., Theodoridis, S.: Online sparse system identification and signal reconstruction using projections onto weighted \(\ell _{1}\) balls. IEEE Trans. Signal Process. 59(3), 936–952 (2011)

Quattoni, A., Carreras, X., Collins, M., Darrell, T.: An efficient projection for \(\ell _{1,\infty }\) regularization. In: International Conference on Machine Learning, Montreal, Quebec, June 14–18 2009

Rudin, L., Osher, S., Fatemi, E.: Nonlinear total variation based noise removal algorithms. Physica D 60(1–4), 259–268 (1992)

Gilboa, G., Osher, S.: Nonlocal operators with applications to image processing. Multiscale Model. Simul. 7(3), 1005 (2009)

Gaetano, R., Chierchia, G., Pesquet-Popescu, B.: Parallel implementations of a disparity estimation algorithm based on a proximal splitting method. In Visual Communication and Image Processing, San Diego, USA (2012)

Candès, E.J., Wakin, M.B., Boyd, S.: Enhancing sparsity by reweighted \(\ell _1\) minimization. J. Fourier Anal. Appl. 14(5), 877–905 (2008)

Tikhonov, A.: Regularization of incorrectly posed problems. Sov. Math. Dokl. 4, 1624–1627 (1963)

Combettes, P.L., Pesquet, J.-C.: A proximal decomposition method for solving convex variational inverse problems. Inverse Probl. 24(6), 065014 (2008)

Wu, J., Liu, F., Jiao, L.C., Wang, X., Hou, B.: Multivariate compressive sensing for image reconstruction in the wavelet domain. IEEE Trans. Image Process. 20(12), 3483–3494 (2011)

Turlach, B.A., Venables, W.N., Wright, S.J.: Simultaneous variable selection. Technometrics 47(3), 349–363 (2005)

Chen, Y., Hero, A.O.: Recursive \(\ell _{1,\infty }\) lasso. IEEE Trans. Signal Process. 60(8), 3978–3987 (2012)

Studer, C., Goldstein, T., Yin, W., Baraniuk,, R. G.: Democratic representations. (Jan. 2014). http://arxiv.org/abs/1401.3420

Afonso, M.V., Bioucas-Dias, J.M., Figueiredo, M.A.T.: An augmented Lagrangian approach to the constrained optimization formulation of imaging inverse problems. IEEE Trans. Image Process. 20(3), 681–695 (2011)

Boyd, S., Parikh, N., Chu, E., Peleato, B., Eckstein, J.: Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends Mach. Learn. 8(1), 1–122 (2011)

Ding, C., Sun, D. F., Toh, K.-C.: An introduction to a class of matrix cone programming. Math. Program. 144(1–2), 141–179 (2014)

Donoho, D.L.: De-noising by soft-thresholding. IEEE Trans. Inf. Theory 41(3), 613–627 (1995)

Bach, F., Jenatton, R., Mairal, J., Obozinski, G.: Optimization with sparsity-inducing penalties. Found. Trends Mach. Learn. 4(1), 1–106 (2012)

Cetin, A.E., Tofighi, M.: Denosing using wavelets and projections onto the \(\ell _{1}\)-ball. Jun. (2014). http://arxiv.org/abs/1406.2528

Bredies, K., Kunisch, K., Pock, T.: Total generalized variation. SIAM J. Imaging Sci. 3(3), 492–526 (2010)

Buades, A., Coll, B., Morel, J.: A review of image denoising algorithms, with a new one. Multiscale Model. Simul. 4(2), 490–530 (2005)

Gilboa, G., Osher, S.: Nonlocal linear image regularization and supervised segmentation. Multiscale Model. Simul. 6(2), 595–630 (2007)

Foi, A., Boracchi, G.: Foveated self-similarity in nonlocal image filtering. In: Proceedings of SPIE Electronic Imaging, vol. 8291. Burlingame, USA, January 2012

Li, Y., Osher, S.: A new median formula with applications to PDE based denoising. Commun. Math. Sci. 7(3), 741–753 (2009)

Bresson, X., Chan, T.F.: Fast dual minimization of the vectorial total variation norm and applications to color image processing. Inverse Probl. Imaging 2(4), 455–484 (2008)

Miyata, T., Sakai, Y.: Vectorized total variation defined by weighted L-infinity norm for utilizing inter channel dependency. In: Proceedings of the Intenational Conference on Image Processing, pp. 3057–3060. September 2012

Wang, Z., Bovik, A.C.: Mean squared error: love it or leave it? IEEE Signal Process. Mag. 26(1), 98–117 (2009)

Yuan, M., Lin, Y.: Model selection and estimation in regression with grouped variables. J. R. Stat. Soc. B 68, 49–67 (2006)

Ekeland, I., Témam, R.: Convex Analysis and Variational Problems. SIAM, Philadelphia (1999)

Combettes, P.L., Pesquet, J.-C.: Proximal thresholding algorithm for minimization over orthonormal bases. SIAM J. Optim. 18(4), 1351–1376 (2007)

Author information

Authors and Affiliations

Corresponding author

Appendix

Appendix

1.1 Proof of Proposition 1

For every \((y,\zeta )\in {{\mathcal {H}}}\times {\mathbb {R}}\), let \((p,\theta )=P_{\hbox {epi}\varphi }(y,\zeta )\). If \(\varphi (y) \le \zeta \), then \(p = y\) and \(\theta = \zeta = \max \{\varphi (p),\zeta \}\). In addition,

which shows that (18) holds. Let us now consider the case when \(\varphi (y) > \zeta \). From the definition of the projection, we get

From the Karush–Kuhn–Tucker theorem [67, Theorem 5.2],Footnote 6 there exists \(\alpha \in [0,+\infty [\) such that

where the Lagrange multiplier \(\alpha \) is such that \(\alpha (\varphi (p)-\theta )\!=\!0\). Since the value \(\alpha = 0\) is not allowable (otherwise it would lead to \(p=y\) and \(\theta = \zeta \)), it can be deduced from the above equality that \(\varphi (p) = \theta \). In addition, differentiating the Lagrange functional in (40) w.r.t. \(\xi \) yields \(\varphi (p)\! =\! \theta \!=\! \zeta + \alpha \ge \zeta \). Hence, \((p,\theta )\) in (39) is such that \(\theta \!=\! \varphi (p) \!=\! \max \{\varphi (p),\zeta \}\) and

Furthermore, as \(\varphi (y) > \zeta \), we obtain

where we have used the fact that \(P_{{{{{\hbox {lev}}}_{\le \zeta }}\,}\varphi }(y)\) belongs to the boundary of \({{{{\hbox {lev}}}_{\le \zeta }}\,}\varphi \) which is equal to \(\big \{{u \in {{\mathcal {H}}}}~\big |~{\varphi (u)= \zeta }\big \}\) since \(\varphi \) is lower-semicontinuous and \(\hbox {dom}\varphi \) is open [38, Corollary 8.38]. We have then

Altogether, (41) and (43) lead to

which is equivalent to (18) since \(\frac{1}{2}(\max \{\varphi -\zeta ,0\})^2 \in \Gamma _0({{\mathcal {H}}})\).

1.2 Proof of Proposition 3

Since \((\max \{\varphi -\zeta ,0\})^2\) is an even function, \(\hbox {prox}_{\frac{1}{2} (\max \{\varphi -\zeta ,0\})^2}\) is an odd function [22, Remark 4.1(ii)]. In the following, we thus focus on the case when \(y \in ]0,+\infty [\). If \(\zeta \in ]-\infty ,0]\), then \((\max \{\varphi -\zeta ,0\})^2= (\varphi -\zeta )^2\) is differentiable and, according to the fact that \(p = \hbox {prox}_f(y)\) is uniquely defined as \(y-p \in \partial f(p)\), we deduce that \(p = \hbox {prox}_{\frac{1}{2} (\varphi -\zeta )^2}(y)\) is such that

where \(p \ge 0\) by virtue of [68, Corollary 2.5]. This allows us to deduce that \(p = \chi _0\).

Let us now focus on the case when \(\zeta \in ]0,{+\infty }[\). If \(y\in ]0,(\zeta /\tau )^{1/q}[\), it can be deduced from [68, Corollary 2.5], that \(p = \hbox {prox}_{\frac{1}{2} (\max \{\varphi -\zeta ,0\})^2}(y) \in [0,(\zeta /\tau )^{1/q}[\). Since \((\forall v \in [0,(\zeta /\tau )^{1/q}[)\) \(\max \{\varphi (v)-\zeta ,0\}=0\), we have \(p=y\). On the other hand if \(y > (\zeta /\tau )^{1/q}\), as the proximity operator of a function from \({\mathbb {R}}\) to \({\mathbb {R}}\) is continuous and increasing [68, Proposition 2.4], we obtain

Since \((\max \{\varphi -\zeta ,0\})^2\) is differentiable and, for each \(v \ge (\zeta /\tau )^{1/q}\), \((\max \{\varphi (v)-\zeta ,0\})^2=(\tau v^q-\zeta )^2\), we deduce that \(p\) is the unique value in \([(\zeta /\tau )^{1/q},{+\infty }[\) satisfying (45).

1.3 Proof of Proposition 4

Let us notice that \(\frac{1}{2}(\max \{\tau d_{C}^{q}-\zeta ,0\})^2 = \psi \circ d_{C}\) where \(\psi = \frac{1}{2} (\max \{\tau |\cdot |^{q}-\zeta ,0\})^2\). According to [47, Proposition 2.7], for every \({ y}\in {{\mathcal {H}}}\),

where \(\alpha = \frac{\hbox {prox}_{\psi }\big (d_{C}({ y})\big )}{d_{C}({ y})}\). In addition, we have

and, according to Proposition 2, when \(\zeta \!\! <\!\! 0\) and \(d_{C}({ y}) \!\le \! -\tau \zeta \), \(\hbox {prox}_{\psi }\big (d_{C}({ y})\big ) = 0\). This shows that (47) reduces to (25).

1.4 Proof of Proposition 5

For every \(({ y},\zeta ) \in {\mathbb {R}}^{M}\times {\mathbb {R}}\), in order to determine \(P_{\hbox {epi}\varphi }(y,\zeta )\), we have to find

For all \(\theta \in [0,+\infty [\), the inner minimization is achieved when, \(\forall m\in \{1,\ldots ,M\}, { p}^{(m)}\) is the projection of \({ y}^{(m)}\) onto the range \([-\theta /\tau ^{(m)},\theta /\tau ^{(m)}]\), as given by (31). Then, (49) reduces to

which is equivalent to calculate \(\theta =\hbox {prox}_{\phi +\iota _{[0,+\infty [}}(\zeta )\) where

The function \(\phi \) belongs to \(\Gamma _0({\mathbb {R}}\!)\) since, for each \(m\!\in \! \{1,\ldots ,M\}\), \(\max \{(\tau ^{(m)})^{-1}(\nu ^{(m)} - \cdot ),0\}\) is finite convex and \((\cdot )^2\) is finite convex and increasing on \([0,+\infty [\). In addition, \(\phi \) is differentiable and such that, for every \(v\in {\mathbb {R}}\) and \(k \in \{1,\ldots ,M+1\}\),

By using [23, Prop. 12], \(\theta = P_{[0,+\infty [}(\chi )\) with \(\chi =\hbox {prox}_{\phi }(\zeta )\). Therefore, there exists an \(\overline{m} \in \{1,\ldots ,M+1\}\) such that\(\nu ^{(\overline{m}-1)} < \chi \le \nu ^{(\overline{m})}\) and \(\zeta -\chi = \phi '(\chi )\), so leading to

and \(\nu ^{(\overline{m}-1)} < \chi \le \nu ^{(\overline{m})} \,\Leftrightarrow \,\) (33). The uniqueness of \(\overline{m} \in \{1,\ldots ,M+1\}\) follows from that of \(\hbox {prox}_{\phi }(\zeta )\).

Rights and permissions

About this article

Cite this article

Chierchia, G., Pustelnik, N., Pesquet, JC. et al. Epigraphical projection and proximal tools for solving constrained convex optimization problems. SIViP 9, 1737–1749 (2015). https://doi.org/10.1007/s11760-014-0664-1

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11760-014-0664-1