Abstract

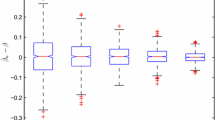

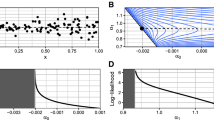

A smooth simultaneous confidence band (SCB) is obtained for the heteroscedastic variance function in nonparametric regression by applying spline regression to the conditional mean function, followed by Nadaraya–Watson estimation using the squared residuals. The variance estimator is uniformly oracally efficient, that is, it is as efficient as, up to order less than \(n^{-1/2}\), the infeasible kernel estimator when the conditional mean function is known, uniformly over the data range. Simulation experiments provide strong evidence that confirms the asymptotic theory, and the computation is extremely fast. The proposed SCB has been applied to test for heteroscedasticity in the well-known motorcycle data and Old Faithful geyser data with different conclusions.
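The two-step construction described above (a spline fit of the conditional mean, then kernel smoothing of the squared residuals) can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the linear truncated-power basis, the quartic kernel, the number of knots, the bandwidth `h`, and all function names are hypothetical choices made here, and the confidence band itself is not constructed.

```python
import numpy as np

def linear_spline_basis(x, n_interior):
    """Truncated-power basis for linear splines on [0, 1], equally spaced interior knots."""
    knots = np.linspace(0.0, 1.0, n_interior + 2)[1:-1]
    cols = [np.ones_like(x), x] + [np.clip(x - k, 0.0, None) for k in knots]
    return np.column_stack(cols)

def spline_mean_fit(x, y, n_interior=10):
    """Step 1: least-squares spline estimate of the conditional mean at the sample points."""
    basis = linear_spline_basis(x, n_interior)
    coef, *_ = np.linalg.lstsq(basis, y, rcond=None)
    return basis @ coef

def nadaraya_watson(x_eval, x, z, h):
    """Step 2: Nadaraya-Watson smoother of z on x (quartic kernel, assumed here)."""
    u = (x_eval[:, None] - x[None, :]) / h
    w = np.where(np.abs(u) <= 1.0, (15.0 / 16.0) * (1.0 - u**2) ** 2, 0.0)
    return (w @ z) / w.sum(axis=1)

def variance_function_estimate(x, y, x_eval, n_interior=10, h=0.1):
    """Smooth the squared spline residuals to estimate the variance function."""
    resid_sq = (y - spline_mean_fit(x, y, n_interior)) ** 2
    return nadaraya_watson(x_eval, x, resid_sq, h)

# Simulated heteroscedastic data (illustrative model, not from the paper).
rng = np.random.default_rng(0)
n = 2000
x = rng.uniform(0.0, 1.0, n)
sigma = 0.5 + x  # assumed true standard deviation function
y = np.sin(2 * np.pi * x) + sigma * rng.standard_normal(n)

grid = np.linspace(0.1, 0.9, 5)
est = variance_function_estimate(x, y, grid)
```

In this simulated example the estimate `est` can be compared with the assumed truth `(0.5 + grid) ** 2`; the knot number and bandwidth would need data-driven selection in practice.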

References

Akritas MG, Van Keilegom I (2001) ANCOVA methods for heteroscedastic nonparametric regression models. J Am Stat Assoc 96:220–232

Bickel PJ, Rosenblatt M (1973) On some global measures of the deviations of density function estimates. Ann Stat 1:1071–1095

Brown LD, Levine M (2007) Variance estimation in nonparametric regression via the difference sequence method. Ann Stat 35:2219–2232

Cai T, Wang L (2008) Adaptive variance function estimation in heteroscedastic nonparametric regression. Ann Stat 36:2025–2054

Carroll RJ, Wang Y (2008) Nonparametric variance estimation in the analysis of microarray data: a measurement error approach. Biometrika 95:437–449

Carroll RJ, Ruppert D (1988) Transformations and weighting in regression. Chapman and Hall, London

Claeskens G, Van Keilegom I (2003) Bootstrap confidence bands for regression curves and their derivatives. Ann Stat 31:1852–1884

Davidian M, Carroll RJ, Smith W (1988) Variance functions and the minimum detectable concentration in assays. Biometrika 75:549–556

De Boor C (2001) A practical guide to splines. Springer, New York

Dette H, Munk A (1998) Testing heteroscedasticity in nonparametric regression. J R Stat Soc Ser B 60:693–708

Fan J, Gijbels I (1996) Local polynomial modelling and its applications. Chapman and Hall, London

Fan J, Yao Q (1998) Efficient estimation of conditional variance functions in stochastic regression. Biometrika 85:645–660

Hall P, Titterington DM (1988) On confidence bands in nonparametric density estimation and regression. J Multivar Anal 27:228–254

Hall P, Carroll RJ (1989) Variance function estimation in regression: the effect of estimating the mean. J R Stat Soc Ser B 51:3–14

Hall P, Marron JS (1990) On variance estimation in nonparametric regression. Biometrika 77:415–419

Härdle W (1989) Asymptotic maximal deviation of M-smoothers. J Multivar Anal 29:163–179

Härdle W (1992) Applied nonparametric regression. Cambridge University Press, Cambridge

Levine M (2006) Bandwidth selection for a class of difference-based variance estimators in the nonparametric regression: a possible approach. Comput Stat Data Anal 50:3405–3431

Liu R, Yang L, Härdle W (2013) Oracally efficient two-step estimation of generalized additive model. J Am Stat Assoc 108:619–631

Ma S, Yang L, Carroll RJ (2012) A simultaneous confidence band for sparse longitudinal regression. Stat Sin 22:95–122

Müller HG, Stadtmüller U (1987) Estimation of heteroscedasticity in regression analysis. Ann Stat 15:610–625

Silverman BW (1986) Density estimation for statistics and data analysis. Chapman and Hall, London

Song Q, Yang L (2009) Spline confidence bands for variance functions. J Nonparametr Stat 21:589–609

Tusnády G (1977) A remark on the approximation of the sample df in the multidimensional case. Periodica Mathematica Hungarica 8:53–55

Wang L, Brown LD, Cai T, Levine M (2008) Effect of mean on variance function estimation in nonparametric regression. Ann Stat 36:646–664

Wang J, Liu R, Cheng F, Yang L (2014) Oracally efficient estimation of autoregressive error distribution with simultaneous confidence band. Ann Stat 42:654–668

Wang L, Yang L (2007) Spline-backfitted kernel smoothing of nonlinear additive autoregression model. Ann Stat 35:2474–2503

Wang J, Yang L (2009a) Polynomial spline confidence bands for regression curves. Stat Sin 19:325–342

Wang L, Yang L (2009b) Spline estimation of single-index models. Stat Sin 19:765–783

Wang J, Yang L (2009c) Efficient and fast spline-backfitted kernel smoothing of additive models. Ann Inst Stat Math 61:663–690

Xia Y (1998) Bias-corrected confidence bands in nonparametric regression. J R Stat Soc Ser B 60:797–811

Xue L, Yang L (2006) Additive coefficient modeling via polynomial spline. Stat Sin 16:1423–1446

Zheng S, Yang L, Härdle W (2014) A smooth simultaneous confidence corridor for the mean of sparse functional data. J Am Stat Assoc 109:661–673

Acknowledgments

This work has been supported by NSF award DMS 1007594, Jiangsu Specially-Appointed Professor Program SR10700111, Jiangsu Key-Discipline Program ZY107992, National Natural Science Foundation of China award 11371272, and Research Fund for the Doctoral Program of Higher Education of China award 20133201110002. The authors thank the Editor and two Reviewers for helpful comments.

Appendix A

Throughout this Appendix, we denote by \(\left\| \xi \right\| \) the Euclidean norm and by \(\left| \xi \right| \) the largest absolute value of the elements of any vector \(\xi \). We use \(c\), \(C\) to denote generic positive constants. For any given constant \(C>0\), we denote a class of Lipschitz continuous functions by \(\text {Lip}\left( \left[ 0,1\right] ,C\right) =\left\{ \varphi : \left| \varphi \left( x\right) -\varphi \left( x^{\prime }\right) \right| \le C\left| x-x^{\prime }\right| ,\ \forall x,x^{\prime }\in \left[ 0,1\right] \right\} \).

A.1 Preliminaries

The Lemmas of this Subsection are needed for the proof of Propositions 1, 2 and 3. These Propositions clearly establish Theorems 1 and 2.

Lemma 1

Under Assumptions (A1)–(A5), there exists a constant \(C_{p}>0\), \(p>1\), such that for any \(m\in C^{p}\left[ 0,1\right] \) there is a spline function \(g_{p}\in G_{N}^{\left( p-2\right) }\) satisfying \(\left\| m-g_{p}\right\| _{\infty }\le CH^{p}\) and \(m-g_{p}\in \text {Lip}\left( \left[ 0,1\right] ,CH^{p-1}\right) \). The function \(\tilde{m}_{p}\left( x\right) \) given in Equation (12)

Moreover, for the function \(\tilde{\varepsilon }_{p}\left( x\right) \) given in Equation (12)

See Lemma A.1 of Song and Yang (2009), and also Wang and Yang (2009), for a detailed proof.

Lemma 2

Under Assumption (A6), as \(n\rightarrow \infty \),

See Lemma A.4 of Song and Yang (2009) for a detailed proof.

The strong approximation result of Tusnády (1977) is also needed.

Lemma 3

Let \(U_{1},\ldots ,U_{n}\) be i.i.d. random vectors on the \(2\)-dimensional unit square with \(P\left( U_{i}<\mathbf {t}\right) =\lambda \left( \mathbf {t}\right) \), \(\mathbf {0}\le \mathbf {t}\le \mathbf {1}\), where \(\mathbf {t}=\left( t_{1},t_{2}\right) \) and \(\mathbf {1}=\left( 1,1\right) \) are \(2\)-dimensional vectors and \(\lambda \left( \mathbf {t}\right) =t_{1}t_{2}\). The empirical distribution function \(F_{n}^{u}\left( \mathbf {t}\right) \) based on the sample \(\left( U_{1},\ldots ,U_{n}\right) \) is defined as \(F_{n}^{u}\left( \mathbf {t}\right) =n^{-1}\sum _{i=1}^{n}\mathrm{I}_{\left\{ U_{i}<\mathbf {t}\right\} }\) for \(\mathbf {0}\le \mathbf {t}\le \mathbf {1}\). The \(2\)-dimensional Brownian bridge \(B\left( \mathbf {t}\right) \) is defined by \(B\left( \mathbf {t}\right) =W\left( \mathbf {t}\right) -\lambda \left( \mathbf {t}\right) W\left( \mathbf {1}\right) \) for \(\mathbf {0}\le \mathbf {t}\le \mathbf {1}\), where \(W\left( \mathbf {t}\right) \) is a \(2\)-dimensional Wiener process. Then there is a version \(B_{n}\left( \mathbf {t}\right) \) of \(B\left( \mathbf {t}\right) \) such that

holds for all \(x\), where \(C,K,\) \(\lambda \) are positive constants.
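The objects defined in Lemma 3 can be made concrete with a small simulation. The sketch below only evaluates the empirical distribution function \(F_{n}^{u}\) and the uniform distribution function \(\lambda \) on the unit square, and reports the scaled deviation \(n^{1/2}\left| F_{n}^{u}-\lambda \right| \) maximized over a finite grid; it does not construct the Brownian bridge or verify the probability bound. The function names, grid size, and sample size are assumptions made for illustration.

```python
import random

def empirical_cdf_2d(sample, t):
    """F_n^u(t): fraction of points U_i with both coordinates below t."""
    t1, t2 = t
    hits = sum(1 for (u1, u2) in sample if u1 < t1 and u2 < t2)
    return hits / len(sample)

def uniform_cdf_2d(t):
    """lambda(t) = t1 * t2, the uniform distribution function on the unit square."""
    return t[0] * t[1]

def max_scaled_deviation(sample, grid_size=20):
    """Maximum over a grid of n^{1/2} * |F_n^u(t) - lambda(t)|.

    The grid maximum is only a lower bound for the supremum over the square.
    """
    n = len(sample)
    pts = [k / grid_size for k in range(grid_size + 1)]
    return max(
        n ** 0.5 * abs(empirical_cdf_2d(sample, (a, b)) - uniform_cdf_2d((a, b)))
        for a in pts
        for b in pts
    )

random.seed(1)
sample = [(random.random(), random.random()) for _ in range(2000)]
dev = max_scaled_deviation(sample)
```

The value `dev` stays of order one as the sample size grows, which is the scale at which the strong approximation couples the empirical process with a Brownian bridge.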

Denote the well-known Rosenblatt transformation for bivariate continuous \( \left( X,\varepsilon \right) \) as

so that \(\left( X^{\prime },\varepsilon ^{\prime }\right) \) has uniform distribution on \(\left[ 0,1\right] ^{2}\), therefore

with \(F_{n}\left( x,\varepsilon \right) \) denoting the empirical distribution of \(\left( X,\varepsilon \right) \). Lemma 3 implies that there exists a version \(B_{n}\) of \(2\)-dimensional Brownian bridge such that

Lemma 4

Under Assumptions (A2)–(A5), as \(n\rightarrow \infty \), for any sequence of functions \(r_{n}\in \text {Lip}\left( \left[ 0,1\right] ,l_{n}\right) \), \(l_{n}>0\), with \(\left\| r_{n}\right\| _{\infty }=\rho _{n}\ge 0\)

Proof

Step 1. We first discretize the problem by letting \(0=x_{0}<x_{1}<\cdots <x_{M_{n}}=1\), \(M_{n}=n^{4}\), be equally spaced points. The smoothness of the kernel \(K\) in Assumption (A4) implies that

and the moment conditions on the error \(\varepsilon \) in Assumption (A2), together with the rate of \(h\) in Assumption (A5), next imply that

Step 2. To truncate the error, we denote \(D_{n}=n^{\alpha }\) with \(\alpha \) as in Assumption (A5). Assumption (A5) implies that \(D_{n}n^{-1/2}h^{-1/2} \log ^{2}n\rightarrow 0,\) \(n^{1/2}h^{1/2}D_{n}^{-\left( 1+\eta \right) }\rightarrow 0\), \(\sum _{n=1}^{\infty }D_{n}^{-\left( 2+\eta \right) }<\infty \). Write \(\varepsilon _{i}=\varepsilon _{i,1}^{D_{n}}+\varepsilon _{i,2}^{D_{n}}\), where \(\varepsilon _{i,1}^{D_{n}}=\varepsilon _{i}\mathrm{I}\left\{ \left| \varepsilon _{i}\right| >D_{n}\right\} ,\varepsilon _{i,2}^{D_{n}}=\varepsilon _{i}\mathrm{I}\left\{ \left| \varepsilon _{i}\right| \le D_{n}\right\} \), and denote \(\mu ^{D_{n}}\left( x\right) =\mathsf {E}\left\{ \varepsilon _{i}\mathrm{I}\left\{ \left| \varepsilon _{i}\right| \le D_{n}\right\} \mid X_{i}=x\right\} \). One immediately obtains that

Next, since \(P\left( \left| \varepsilon _{i}\right| >D_{n}\right) \le \mathsf {E}\left| \varepsilon \right| ^{2+\eta }D_{n}^{-(2+\eta )}\), one has \(\sum \nolimits _{n=1}^{\infty }P\left( \left| \varepsilon _{n}\right| >D_{n}\right) \le \mathsf {E}\left| \varepsilon \right| ^{2+\eta }\sum \nolimits _{n=1}^{\infty }D_{n}^{-\left( 2+\eta \right) }<+\infty \), and the Borel–Cantelli Lemma then implies that

So one has for any \(k>0\)

Step 3. The truncated sum \(n^{-1}\sum _{i=1}^{n}K_{h}\left( X_{i}\!-\!x\right) r_{n}\left( X_{i}\right) \varepsilon _{i,2}^{D_{n}}\) equals \(\int _{\left| \varepsilon \right| \le D_{n}}K_{h}\left( u\!-\!x\right) r_{n}\left( u\right) \varepsilon dF_{n}\left( u,\varepsilon \right) \), while

according to (27). The above two Equations imply that

Step 4. The term \(n^{-1/2}\int _{\left| \varepsilon \right| \le D_{n}}K_{h}\left( u-x\right) r_{n}\left( u\right) \varepsilon dZ_{n}\left( u,\varepsilon \right) \) equals

Note that

by the growth constraint on \(D_{n}=n^{\alpha }\). Note also that

in which

Meanwhile

so the \(M_{n}\) Gaussian variables \(n^{-1/2}\int _{\left| \varepsilon \right| \le D_{n}}K_{h}\left( u-x_{j}\right) r_{n}\left( u\right) \varepsilon dW_{n}\left\{ M\left( u,\varepsilon \right) \right\} \), \(0\le j<M_{n}\), each have variance less than \(n^{-1}h^{-1}\rho _{n}^{2}C_{\sigma }^{2}C_{f}\), hence

Finally, putting together Eqs. (26), (28), (29), (30), (31) and (32) proves the Lemma.
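The truncation used in Step 2 of the proof can be illustrated numerically. The sketch below splits simulated errors into the tail part \(\varepsilon _{i,1}^{D_{n}}\) and the truncated part \(\varepsilon _{i,2}^{D_{n}}\) at level \(D_{n}=n^{\alpha }\), and compares the observed tail frequency with the sample analogue of the Markov bound \(\mathsf {E}\left| \varepsilon \right| ^{2+\eta }D_{n}^{-(2+\eta )}\). The values of `alpha` and `eta`, the Gaussian error distribution, and the function names are hypothetical; the actual constraints on \(\alpha \) and \(\eta \) come from Assumptions (A2) and (A5), which are not reproduced here.

```python
import random

def truncate_split(eps, d_n):
    """Split each error into its tail part (|e| > D_n) and truncated part (|e| <= D_n)."""
    tail = [e if abs(e) > d_n else 0.0 for e in eps]
    body = [e if abs(e) <= d_n else 0.0 for e in eps]
    return tail, body

def markov_tail_bound(eps, d_n, eta):
    """Sample analogue of E|eps|^(2+eta) * D_n^(-(2+eta))."""
    moment = sum(abs(e) ** (2 + eta) for e in eps) / len(eps)
    return moment * d_n ** (-(2 + eta))

random.seed(0)
n = 5000
alpha, eta = 0.1, 1.0  # hypothetical values, chosen only for illustration
d_n = n ** alpha       # truncation level D_n = n^alpha

eps = [random.gauss(0.0, 1.0) for _ in range(n)]  # assumed error distribution
tail, body = truncate_split(eps, d_n)

tail_freq = sum(1 for e in eps if abs(e) > d_n) / n
bound = markov_tail_bound(eps, d_n, eta)
```

Each error is recovered exactly as `tail[i] + body[i]`, and `tail_freq` never exceeds `bound`, since Markov's inequality also holds for the empirical distribution of the sample.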

A.2 Proof of Propositions

Proof of Proposition 1

It is obvious that \(\left| \mathrm{I}_{i,p}\right| \le 2\left\{ \tilde{m}_{p}\left( X_{i}\right) -m\left( X_{i}\right) \right\} ^{2}+2\tilde{\varepsilon }_{p}^{2}\left( X_{i}\right) \). Meanwhile, by Lemma 1, \(\left\| m-\tilde{m}_{p}\right\| _{2,n}^{2}\le \left\| m-\tilde{m}_{p}\right\| _{\infty }^{2}=\mathcal {O}_{p}\left( H^{2p}\right) \), so \(\left| \mathrm{I}\right| \) is bounded by

Proof of Proposition 2

By (12), \(\tilde{\varepsilon }_{p}( X_{i}) =\sum _{J=1-p}^{N}\tilde{a}_{J,p}B_{J,p}( X_{i}) \). Lemma 1 and Wang and Yang (2009) entail that \((\sum _{J=1-p}^{N}\tilde{a}_{J,p}^{2}) ^{1/2}=\mathcal {O}_{p}(\Vert \tilde{\varepsilon }_{p}(x) \Vert _{2,n}) =\mathcal {O}_{p}(n^{-1/2}N^{1/2}) \). Set \(r_{n}\left( x\right) =B_{J,p}\left( x\right) \); then Lemma 2 entails that \(\rho _{n}=\mathcal {O}\left( H^{-1/2}\right) \), and it is easy to verify that \(l_{n}=\mathcal {O}\left( H^{-3/2}\right) \). Applying Lemma 4, one obtains that

and hence

the proposition is proved.

Proof of Proposition 3

in which the spline function \(g_{p}\in G_{N}^{\left( p-2\right) }\) satisfies \(\left\| m-g_{p}\right\| _{\infty }\le CH^{p}\) and \(m-g_{p}\in \text {Lip}\left( \left[ 0,1\right] ,CH^{p-1}\right) \) as in Lemma 1. Set \(r_{n}\left( x\right) =m\left( x\right) -g_{p}\left( x\right) \); then \(\rho _{n}=\mathcal {O}\left( H^{p}\right) \) and \(l_{n}=\mathcal {O}\left( H^{p-1}\right) \), so applying Lemma 4 yields

Denoting \(g_{p}\left( x\right) -\tilde{m}_{p}\left( x\right) =\sum _{J=1-p}^{N}\gamma _{J,p}B_{J,p}\left( x\right) \) and applying Lemma 1, one has

which, together with (33), implies that

which, together with (34), proves the proposition.

Cite this article

Cai, L., Yang, L. A smooth simultaneous confidence band for conditional variance function. TEST 24, 632–655 (2015). https://doi.org/10.1007/s11749-015-0427-5

DOI: https://doi.org/10.1007/s11749-015-0427-5

Keywords

- B spline

- Confidence band

- Heteroscedasticity

- Infeasible estimator

- Knots

- Nadaraya–Watson estimator

- Variance function