Abstract

This paper deals with the max–min and min–max regret versions of the maximum weighted independent set problem on interval graphs with uncertain vertex weights. Both problems have been recently investigated by Talla Nobibon and Leus (Optim Lett 8:227–235, 2014), who showed that they are NP-hard for two scenarios and strongly NP-hard if the number of scenarios is a part of the input. In this paper, new complexity and approximation results for the problems under consideration are provided, which extend the ones previously obtained. Namely, for the discrete scenario uncertainty representation it is proven that if the number of scenarios \(K\) is a part of the input, then the max–min version of the problem is not at all approximable. On the other hand, its min–max regret version is approximable within \(K\) and not approximable within \(O(\log ^{1-\epsilon }K)\) for any \(\epsilon >0\) unless the problems in NP have quasi polynomial algorithms. Furthermore, for the interval uncertainty representation it is shown that the min–max regret version is NP-hard and approximable within 2.


1 Introduction

We are given a family \(\mathcal {I}=\{I_1,I_2,\dots ,I_n\}\) of closed intervals of the real line, where \(I_i=[a_i, b_i]\), \(i\in [n]\) (we use \([n]\) to denote the set \(\{1,\ldots ,n\}\)). The intervals in \(\mathcal {I}\) are not necessarily distinct. An undirected graph \(G=(V,E)\) with \(|V|=n\) vertices and \(|E|=m\) edges is called an interval graph for \(\mathcal {I}\) if \(v_i\in V\) corresponds to \(I_i\) and there is an edge \((v_i, v_j)\in E\) if and only if the intervals \(I_i\) and \(I_j\) have nonempty intersection. An independent set \(X\) in \(G\) is a subset of the vertices of \(G\) such that for any \(v_i,v_j\in X\) it holds that \((v_i,v_j)\notin E\). We will use \(\Phi \) to denote the set of all independent sets in \(G\). For each vertex \(v_i\in V\) a nonnegative weight \(w_i\) is specified. In the maximum weighted independent set problem (\(\textsc {IS}\) for short), we seek an independent set \(X\) in \(G\) of the maximum total weight \(F(X)=\sum _{v_i \in X} w_i\). Contrary to the problem in general graphs, IS for interval graphs is polynomially solvable [8]. It has some important practical applications and we refer the reader to [9, 10] for a description of them.
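As an illustration of why the deterministic IS problem on interval graphs is easy, the following minimal sketch solves it with the textbook dynamic program over intervals sorted by right endpoint. It is not the algorithm of [8]; the function name `max_weight_independent_set` and the input layout (a list of `(a, b)` pairs plus a parallel list of weights) are assumptions made for this example.

```python
from bisect import bisect_left


def max_weight_independent_set(intervals, weights):
    """Return (best_weight, chosen_indices) for closed intervals [a, b].

    Two closed intervals are adjacent when they share at least one point,
    so interval j can follow interval i only if b_i < a_j (strictly).
    """
    order = sorted(range(len(intervals)), key=lambda i: intervals[i][1])
    ends = [intervals[i][1] for i in order]
    best = [0] * (len(order) + 1)   # best[k]: optimum over the first k sorted intervals
    take = [False] * len(order)     # take[k]: is sorted interval k used in best[k + 1]?
    pred = [0] * len(order)         # pred[k]: number of sorted intervals ending before a_k
    for k, i in enumerate(order):
        pred[k] = bisect_left(ends, intervals[i][0], 0, k)
        with_k = best[pred[k]] + weights[i]
        if with_k > best[k]:
            best[k + 1], take[k] = with_k, True
        else:
            best[k + 1] = best[k]
    # Walk back through the table to recover one optimal set.
    chosen, k = [], len(order)
    while k > 0:
        if take[k - 1]:
            chosen.append(order[k - 1])
            k = pred[k - 1]
        else:
            k -= 1
    return best[-1], sorted(chosen)


value, chosen = max_weight_independent_set(
    [(1, 3), (2, 5), (4, 6), (6, 8)], [2, 4, 3, 2]
)
print(value, chosen)
```

Sorting dominates the running time, so the sketch runs in \(O(n\log n)\) time. Note that the intervals \([4,6]\) and \([6,8]\) share the point 6 and are therefore adjacent.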

In [10] the following robust versions of the \(\textsc {IS}\) problem have been recently investigated. Suppose that the vertex weights are uncertain and they are specified as a scenario set \(\Gamma \). Namely, each scenario \(S\in \Gamma \) is a vector \((w_1^S,\dots ,w_n^S)\) of nonnegative integral vertex weights which may occur. Now the weight of a solution \(X\) depends on a scenario and we will denote it by \(F(X,S)=\sum _{v_i\in X} w_i^S\). Let \(F^*(S)=\max _{X\in \Phi } F(X,S)\) be the weight of a maximum weighted independent set in \(G\) under scenario \(S\). In this paper, we wish to investigate the following two robust problems:

\[\textsc {Max--Min IS}: \quad opt_1=\max _{X\in \Phi }\min _{S\in \Gamma } F(X,S),\]

\[\textsc {Min--Max Regret IS}: \quad opt_2=\min _{X\in \Phi } Z(X)=\min _{X\in \Phi }\max _{S\in \Gamma } (F^*(S)-F(X,S)).\]

The quantity \(Z(X)\) is called the maximum regret of solution \(X\). There are two popular methods of defining scenario set \(\Gamma \) (see, e.g., [4, 6]). For the discrete uncertainty representation set \(\Gamma =\{S_1,\dots ,S_K\}\) contains \(K\) explicitly given scenarios. For the interval uncertainty representation, for each vertex \(v_i\) an interval \([\underline{w}_i, \overline{w}_i]\) of its possible weights is specified and \(\Gamma \) is the Cartesian product of all these intervals.
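To make the two robust criteria concrete for the discrete representation, the sketch below evaluates the max–min value \(\max _{X\in \Phi }\min _{S\in \Gamma }F(X,S)\) and the minimum of the maximum regret \(Z(X)=\max _{S\in \Gamma }(F^*(S)-F(X,S))\) by plain enumeration of all independent sets. It is exponential and meant only for tiny instances; the helper names `independent_sets` and `robust_values` are hypothetical.

```python
from itertools import combinations


def independent_sets(intervals):
    """Yield every independent set (as a tuple of indices) of the interval graph."""
    def disjoint(i, j):
        (a1, b1), (a2, b2) = intervals[i], intervals[j]
        return b1 < a2 or b2 < a1   # closed intervals with no common point

    n = len(intervals)
    for r in range(n + 1):
        for X in combinations(range(n), r):
            if all(disjoint(i, j) for i, j in combinations(X, 2)):
                yield X


def robust_values(intervals, scenarios):
    """Return (max-min value, min-max regret value) by exhaustive search."""
    sets = list(independent_sets(intervals))

    def F(X, S):
        return sum(S[i] for i in X)

    f_star = [max(F(X, S) for X in sets) for S in scenarios]
    max_min = max(min(F(X, S) for S in scenarios) for X in sets)
    min_max_regret = min(
        max(fs - F(X, S) for fs, S in zip(f_star, scenarios)) for X in sets
    )
    return max_min, min_max_regret


# Two identical intervals (a clique of size 2) plus one separate interval.
intervals = [(0, 1), (0, 1), (2, 3)]
scenarios = [(3, 1, 1), (1, 3, 2)]
print(robust_values(intervals, scenarios))
```

In this small instance the set \(\{v_1,v_3\}\) attains the max–min value 3, while both maximal independent sets have maximum regret 2.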

Both uncertainty representations have been studied in [10], where it has been shown that for the discrete uncertainty representation the max–min and min–max regret versions of \(\textsc {IS}\) are NP-hard when \(K=2\) and strongly NP-hard when the number of scenarios \(K\) is a part of the input. Furthermore, some pseudopolynomial algorithms for both problems, when \(K\) is constant, have been provided. For the interval uncertainty representation, the Max–Min IS problem can be trivially reduced to a deterministic polynomially solvable counterpart, but the complexity of Min–Max Regret IS remained open.

Our results. We extend the complexity results obtained in [10] and provide new approximation ones for both discrete and interval uncertainty representations. Namely, for the discrete scenario uncertainty representation, we establish that when the number of scenarios \(K\) is a part of the input, the Max–Min IS problem is not at all approximable, while the Min–Max Regret IS problem is approximable within \(K\) and not approximable within \(O(\log ^{1-\epsilon }K)\) for any \(\epsilon >0\) unless NP\(\subseteq \)DTIME\((n^{\mathrm { polylog}\, n})\). We also show that both Max–Min IS and Min–Max Regret IS have fully polynomial-time approximation schemes (FPTAS’s), when \(K\) is constant. Furthermore, for the interval uncertainty representation, we prove that Min–Max Regret IS is NP-hard and approximable within 2.

2 Complexity and approximation results

In this section, we extend the complexity results for the Max–Min IS and Min–Max Regret IS problems provided in the recent paper [10] and give new approximation ones for both discrete and interval uncertainty representations. We start by considering the discrete scenario uncertainty representation.

Theorem 1

If \(K\) is a part of the input, then Max–Min IS is strongly NP-hard and not at all approximable unless \(P=NP\).

Proof

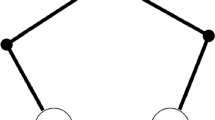

We provide a polynomial time reduction from the following Vertex Cover problem, which is known to be strongly NP-complete [3]. We are given an undirected graph \(G=(V,E)\), \(|V|=n\), and an integer \(L\). A subset of the vertices \(W\subseteq V\) is a vertex cover of \(G\) if for each \((v,w)\in E\) either \(v\in W\) or \(w\in W\) (or both). We ask if there is a vertex cover \(W\) of \(G\) such that \(|W|\le L\). We now construct an instance of Max–Min IS as follows. We first create a family of intervals \(\mathcal {I}=\{I_{ij}: i\in [n], j\in [L]\}\), where \(I_{ij}=[2j,2j+1]\) for each \(i\in [n]\) and \(j\in [L]\). It is easy to check that the resulting interval graph \(G'\) corresponding to \(\mathcal {I}\) is composed of \(L\) separate cliques of size \(n\) and each maximal independent set in \(G'\) contains exactly \(L\) vertices, one from every clique (see Fig. 1); note that the intervals from \(\mathcal {I}\) refer to the vertices in \(G'\). We now form scenario set \(\Gamma \) as follows. For each edge \((v_k, v_l) \in E\), we create a scenario under which the weights of intervals (resp. vertices) \(I_{kj}\) and \(I_{lj}\) are equal to 1 for each \(j\in [L]\) and the weights of the remaining intervals (resp. vertices) are equal to 0 (see Table 1).

We now show that there is a vertex cover of size at most \(L\) if and only if \(opt_1\ge 1\) in the constructed instance of Max–Min IS. Suppose that there is a vertex cover \(W\) of \(G\) such that \(|W|\le L\). We lose nothing by assuming that \(|W|=L\). One can easily meet this assumption by adding arbitrary additional vertices to \(W\), if necessary. Hence \(W=\{v_{i_1},\dots ,v_{i_L}\}\). Let us choose an independent set \(X\) consisting of the vertices in \(G'\) that correspond to the intervals \(I_{i_1,1},\dots ,I_{i_L,L}\). From the construction of the scenario set, it follows that \(F(X,S)\ge 1\) for all \(S\in \Gamma \) and, consequently, \(opt_1\ge 1\). Assume now that \(opt_1\ge 1\). So, there is an independent set \(X\) in \(G'\) such that \(F(X,S)\ge 1\) under each scenario \(S\in \Gamma \). The independent set \(X\) consists of the vertices corresponding to the intervals \(I_{i_1,1},\dots ,I_{i_L,L}\). Consider the set of vertices \(W=\{v_{i_1},\dots ,v_{i_L}\}\), \(|W|\le L\). From the construction of \(\Gamma \) it follows that each edge of \(G\) is covered by \(W\). Therefore, \(W\) is a vertex cover of size at most \(L\).

We are now ready to establish the inapproximability result. If the answer to Vertex Cover is ‘yes’, then \(opt_1\ge 1\), and if the answer is ‘no’, then \(opt_1=0\) (since all the vertex weights are nonnegative integers). Hence, any \(\rho (n)\)-approximation algorithm for Max–Min IS, i.e. the algorithm which outputs a solution \(\hat{X}\) for Max–Min IS such that \(opt_1\le \rho (n)\min _{S\in \Gamma }F(\hat{X},S)\), where \( \rho (n)>1\), would solve the NP-complete Vertex Cover problem in polynomial time. This negative result is still true when all the vertex weights are positive, and thus \(F(X,S)>0\) for each \(S\in \Gamma \). To see this it is enough to replace each 0 with 1 and each 1 with \((L+1) \rho (n)\) in the construction of scenario set \(\Gamma \). Now, if the answer to Vertex Cover is ‘yes’ then \(opt_1\ge (L+1) \rho (n)\) and if the answer is ‘no’, then \(opt_1\le L\). Applying the \(\rho (n)\)-approximation algorithm to the constructed instance of Max–Min IS we could solve the NP-complete Vertex Cover problem in polynomial time. \(\square \)
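The reduction in the proof of Theorem 1 can be checked on small graphs with the following sketch. It builds the intervals \(I_{ij}=[2j,2j+1]\) and one 0/1 scenario per edge, computes \(opt_1\) by enumerating the maximal independent sets (one vertex per clique, which suffices because all weights are nonnegative), and compares the outcome with a brute-force Vertex Cover test. The function names, the 0-based vertex numbering and the assumption that the edge list is nonempty are choices made for this example only.

```python
from itertools import combinations, product


def build_max_min_instance(n, edges, L):
    """Intervals I_{ij} = [2j, 2j+1] and one scenario (a weight dict) per edge."""
    intervals = {(i, j): (2 * j, 2 * j + 1)
                 for i in range(n) for j in range(1, L + 1)}
    scenarios = [
        {key: 1 if key[0] in (k, l) else 0 for key in intervals}
        for (k, l) in edges
    ]
    return intervals, scenarios


def opt1(n, L, scenarios):
    """Max-min value; each maximal independent set picks one vertex per clique j."""
    best = 0
    for choice in product(range(n), repeat=L):
        X = [(i, j + 1) for j, i in enumerate(choice)]
        best = max(best, min(sum(S[x] for x in X) for S in scenarios))
    return best


def has_vertex_cover(n, edges, L):
    """Brute-force test for a vertex cover with at most L vertices."""
    return any(
        all(u in W or v in W for u, v in edges)
        for r in range(L + 1)
        for W in map(set, combinations(range(n), r))
    )


triangle = [(0, 1), (1, 2), (0, 2)]
for L in (1, 2):
    _, scenarios = build_max_min_instance(3, triangle, L)
    print(L, opt1(3, L, scenarios), has_vertex_cover(3, triangle, L))
```

For the triangle, no single vertex covers all edges, so \(opt_1=0\) for \(L=1\), whereas for \(L=2\) a cover exists and \(opt_1=1\).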

It has been shown in [10] that Min–Max Regret IS is strongly NP-hard when the number of scenarios is a part of the input. In order to establish the inapproximability result, we will use the following variant of the Label Cover problem (see e.g., [2, 7]):

Label Cover: We are given a regular bipartite graph \(G=(V\cup W,E)\), \(E\subseteq V\times W\), a set of labels \([N]\), and for each edge \((v,w)\in E\) a partial map \(\sigma _{v,w}:[N]\rightarrow [N]\). A labeling of \(G\) is an assignment of a subset of labels to each of the vertices of \(G\), i.e. a function \(l: V\cup W \rightarrow 2^{[N]}\). We say that a labeling satisfies an edge \((v,w)\in E\) if there exist \(a\in l(v)\) and \(b\in l(w)\) such that \(\sigma _{v,w}(a)=b.\) A total labeling is a labeling that satisfies all edges. We seek a total labeling whose value defined by \(\max _{x\in V\cup W}|l(x)|\) is minimal. This minimal value is denoted by \(val(\mathcal {L})\), where \(\mathcal {L}\) is the input instance.

Theorem 2

([7]) There exists a constant \(\gamma >0\) such that for any language \(L\in NP\), any input \(\mathbf {w}\) and any \(N>0\), one can construct a Label Cover instance \(\mathcal {L}\) with the following properties in time polynomial in the instance’s size:

- The number of vertices in \(\mathcal {L}\) is \(|\mathbf {w}|^{O(\log N)}\),

- If \(\mathbf {w}\in L\), then \(val(\mathcal {L})=1\),

- If \(\mathbf {w}\not \in L\), then \(val(\mathcal {L})> N^{\gamma }\).

The following theorem will be needed to prove a lower bound on the approximation of Min–Max Regret IS.

Theorem 3

There exists a constant \(\gamma >0\) such that for any language \(L\in NP\), any input \(\mathbf {w}\), and any \(N>0\), one can construct an instance of Min–Max Regret IS with the following properties:

- If \(\mathbf {w}\in L\), then \(opt_2\le 1\),

- If \(\mathbf {w}\not \in L\), then \(opt_2\ge \lfloor N^\gamma \rfloor :=g\),

- The number of intervals is at most \(|\mathbf {w}|^{O(\log N)}N\) and the number of scenarios is at most \(|\mathbf {w}|^{O(g\log N)}N^{g}\).

Proof

Let \(L\in NP\) and \(\mathcal {L}=(G=(V\cup W,E), N,\sigma )\) be the instance of Label Cover constructed for \(L\) (see Theorem 2). We now build the corresponding instance of Min–Max Regret IS in the following way. We first number the edges of \(G\) from 1 to \(|E|\) in an arbitrary way. Then, for each edge \((v,w)\in E\), we create a family of at most \(N\) intervals \(\mathcal {I}_{v,w}=\{I_{v,w}^{i,j}\,:\,\sigma _{v,w}(i)=j, i\in [N]\}\). If \((v,w)\) has a number \(r\in \{1,\ldots , |E|\}\), then all the intervals in \(\mathcal {I}_{v,w}\) are equal to \([2r, 2r+1]\). We set \(\mathcal {I}=\cup _{(v,w)\in E} \mathcal {I}_{v,w}\). It is easily seen that the corresponding interval graph \(G'\) for \(\mathcal {I}\) is composed of exactly \(|E|\) separate cliques and each maximal independent set in this graph contains exactly \(|E|\) intervals, one from each clique. Note that the intervals from \(\mathcal {I}\) refer to the vertices in \(G'\).

Fix vertex \(v\in V\). For each \(g\)-tuple of pairwise distinct edges \((v,w_1),\dots ,(v,w_g)\) incident to \(v\) and for each \(g\)-tuple of intervals \((I_{v,w_1}^{i_1,j_1},\dots ,I_{v,w_g}^{i_g,j_g})\in \mathcal {I}_{v,w_1}\times \dots \times \mathcal {I}_{v,w_g}\), where the labels \(i_1,\dots ,i_g\) are pairwise distinct, we form a scenario under which all these intervals (resp. the vertices in \(G'\)) have the weight equal to 0 and all the remaining intervals (resp. the vertices in \(G'\)) have the weight equal to 1. We proceed in this way for each vertex \(v\in V\). Choose vertex \(w\in W\). For each \(g\)-tuple of pairwise distinct edges \((v_1,w),\dots ,(v_g,w)\) incident to \(w\) and for each \(g\)-tuple of intervals \((I_{v_1,w}^{i_1,j_1},\dots ,I_{v_g,w}^{i_g,j_g})\in \mathcal {I}_{v_1,w}\times \dots \times \mathcal {I}_{v_g,w}\), where the labels \(j_1,\dots ,j_g\) are pairwise distinct, we form a scenario under which all these intervals (resp. the vertices) have the weight equal to 0 and all the remaining intervals (resp. the vertices) have the weight equal to 1. We repeat this construction for each vertex \(w\in W\). Finally, we add one scenario under which each vertex in \(G'\) has the weight equal to 1. We ensure in this way that the scenario set formed is not empty.

An easy computation shows that in the above instance of Min–Max Regret IS the cardinality of set \(\mathcal {I}\) is at most \(|E| N\) and the cardinality of the scenario set \(\Gamma \) is at most \(|V||W|^gN^g+|W||V|^gN^g+1\). Hence and from the fact that the number of vertices (and also edges) in \(G\) is \(|\mathbf {w}|^{O(\log N)}\) (see Theorem 2), we have that \(|\mathcal {I}|\) is at most \(|\mathbf {w}|^{O(\log N)}N\) and \(|\Gamma |\) is at most \(|\mathbf {w}|^{O(g\log N)}N^{g}\).

Assume now that \(\mathbf {w}\in L\). Hence, there exists a total labeling \(l\), which assigns exactly one label \(l(v)\) to each \(v\in V\) and exactly one label \(l(w)\) to each \(w\in W\). Let us choose the interval \(I_{v,w}^{l(v),l(w)}\in \mathcal {I}_{v,w}\) for each \((v,w)\in E\). The vertices that refer to these intervals form an independent set \(X\) in \(G'\). There is at most one interval (vertex) with 0 weight under each scenario, and so \(F(X,S)\ge |E|-1\) under each \(S \in \Gamma \). Since \(F^*(S)=|E|\) for each \(S\in \Gamma \), \(opt_2\le 1\). Suppose that \(\mathbf {w}\notin L\), which gives \(val(\mathcal {L})>N^{\gamma }\) and, in consequence, \(val(\mathcal {L})> \lfloor N^{\gamma }\rfloor =g\). Assume, on the contrary, that \(opt_2<g\). Thus, there is an independent set \(X\) in \(G'\) such that \(F(X,S)>|E|-g\) under each scenario \(S\in \Gamma \). Note that \(X\) corresponds to a total labeling \(l\) which assigns labels \(i\) to \(v\) and \(j\) to \(w\) when the interval \(I_{v,w}^{i,j}\) is selected from \(\mathcal {I}_{v,w}\). From the construction of \(\Gamma \), we conclude that \(l\) assigns less than \(g\) distinct labels to each vertex \(x\in V\cup W\), since otherwise \(F(X,S)=|E|-g\) for some scenario \(S\in \Gamma \). Hence, we get \(val(\mathcal {L})< g\), a contradiction. \(\square \)

Theorem 4

If \(K\) is a part of the input, then Min–Max Regret IS is not approximable within \(O(\log ^{1-\epsilon } K)\), for any \(\epsilon >0\), unless NP\(\subseteq \)DTIME\((n^{\mathrm{polylog}\, n})\).

Proof

Let \(\gamma \) be a constant from Theorem 3. Consider a language \(L\in \) NP and an input \(\mathbf {w}\). Fix any constant \(\beta >0\) and set \(N=\lceil \log ^{\beta /\gamma } |\mathbf {w}|\rceil \). Theorem 3 allows us to construct an instance of Min–Max Regret IS with the number of scenarios \(K\) asymptotically bounded by \(|\mathbf {w}|^{\alpha N^\gamma \log N}N^{N^\gamma }\) for some constant \(\alpha >0\), \(opt_2\le 1\) if \(\mathbf {w}\in L\) and \(opt_2\ge \lfloor \log ^\beta |\mathbf {w}| \rfloor \) if \(\mathbf {w}\notin L\). We get \(\log K \le \alpha N^\gamma \log N \log |\mathbf {w}|+N^\gamma \log N\le \alpha ' \log ^{\beta +2}|\mathbf {w}|\) for some constant \(\alpha '>0\) and sufficiently large \(|\mathbf {w}|\). Therefore, \(\log |\mathbf {w}|\ge (\log K/\alpha ')^{1/(\beta +2)}\) and the gap is at least \(\lfloor \log ^\beta |\mathbf {w}| \rfloor \ge \lfloor (\log K/\alpha ')^{\beta /(\beta +2)} \rfloor \). The constant \(\beta >0\) can be arbitrarily large, and so the gap is at least of order \(\log ^{1-\epsilon } K\), where \(\epsilon =2/(\beta +2)>0\) can be made arbitrarily small. Note that the instance of Min–Max Regret IS can be built in \(O(|\mathbf {w}|^{\mathrm{polylog}\, |\mathbf {w}|})\) time, which completes the proof. \(\square \)

We now show that Min–Max Regret IS admits an approximation algorithm with some guaranteed worst case ratio, contrary to Max–Min IS, which is not at all approximable, when \(K\) is a part of the input (see Theorem 1). Namely, there exists a simple \(K\)-approximation algorithm, which outputs an optimal solution to the deterministic IS problem with the vertex weights computed as follows: \(\hat{w}_i:=\frac{1}{K}\sum _{k\in [K]} w_i^{S_k}\), \(i\in [n]\). This can be done in \(O(Kn+T(n))\) time, where \(T(n)\) is the time for solving the deterministic IS problem (e.g., \(T(n)=O(n\log n)\), see [9]).

Theorem 5

Min–Max Regret IS is approximable within \(K\).

Proof

The proof is adapted from [1, the proof of Proposition 4] to Min–Max Regret IS. Let \(\hat{w}_i=\frac{1}{K}\sum _{k\in [K]} w_i^{S_k}\) be the average weight of vertex \(v_i\in V\) over all scenarios. Let \(X^*\) be an optimal solution to Min–Max Regret IS and let \(\hat{X}\) be an optimal solution for the deterministic weights \(\hat{w}_i\), \(i\in [n]\). Clearly, \(\hat{X}\) can be computed in polynomial time. The following inequalities hold: \(opt_2=\max _{k\in [K]} (F^*(S_k)-F(X^*,S_k))\ge \frac{1}{K}\sum _{k\in [K]} (F^*(S_k)-F(X^*,S_k)) \ge \frac{1}{K}\sum _{k\in [K]} (F^*(S_k)-F(\hat{X},S_k)) \ge \frac{1}{K}\max _{k\in [K]} (F^*(S_k)-F(\hat{X},S_k)).\) The second inequality follows from the fact that \(\hat{X}\) maximizes \(\sum _{k\in [K]}F(X,S_k)\) over \(X\in \Phi \), and the third one holds because every regret term \(F^*(S_k)-F(\hat{X},S_k)\) is nonnegative. Hence the maximum regret of \(\hat{X}\) is at most \(K\cdot opt_2\).

To see that the bound is tight consider a sample problem shown in Fig. 2, where an interval graph composed of \(2K\) vertices and the corresponding scenario set with \(K\) scenarios are shown. The average weight of each vertex equals \(1/K\). Hence the algorithm may return the independent set \(\hat{X}=\{v_{12},v_{22},v_{32},\dots ,v_{K2}\}\) whose maximal regret is equal to \(K\). But the maximal regret of the independent set \(X^*=\{v_{11},v_{21},v_{31},\dots ,v_{K-1,1},v_{K2}\}\) is equal to \(1\). \(\square \)
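The \(K\)-approximation algorithm described above can be sketched as follows. The deterministic solver `solve_is` is a compact version of the standard dynamic program for interval graphs (it carries the chosen set along as a tuple, which costs \(O(n^2)\) rather than \(O(n\log n)\) but keeps the example short); both function names and the sample instance are assumptions, and the instance is not the one shown in Fig. 2.

```python
from bisect import bisect_left
from fractions import Fraction


def solve_is(intervals, weights):
    """Deterministic IS on an interval graph: return (value, tuple_of_indices)."""
    order = sorted(range(len(intervals)), key=lambda i: intervals[i][1])
    ends = [intervals[i][1] for i in order]
    best = [(0, ())]                # best[k]: optimum over the first k sorted intervals
    for k, i in enumerate(order):
        # p = number of earlier intervals that end strictly before interval i starts
        p = bisect_left(ends, intervals[i][0], 0, k)
        cand = (best[p][0] + weights[i], best[p][1] + (i,))
        best.append(cand if cand[0] > best[k][0] else best[k])
    return best[-1]


def average_weight_heuristic(intervals, scenarios):
    """Solve IS for the scenario-averaged weights and report the maximum regret."""
    K = len(scenarios)
    avg = [Fraction(sum(S[i] for S in scenarios), K) for i in range(len(intervals))]
    _, x_hat = solve_is(intervals, avg)
    regret = max(
        solve_is(intervals, S)[0] - sum(S[i] for i in x_hat) for S in scenarios
    )
    return x_hat, regret


intervals = [(0, 1), (0, 1), (2, 3)]
scenarios = [(3, 1, 1), (1, 3, 2)]
print(average_weight_heuristic(intervals, scenarios))
```

Exact rational arithmetic is used for the averages so that ties are not affected by rounding; since dividing by \(K\) does not change the maximizer, the plain sums could be used instead.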

It turns out that Max–Min IS and Min–Max Regret IS have FPTAS’s, when \(K\) is constant.

Theorem 6

If \(K\) is constant, then both Max–Min IS and Min–Max Regret IS admit FPTAS’s.

Proof

The fact that Max–Min IS admits an FPTAS follows from [1, Theorem 1] and the existence of the pseudopolynomial algorithm for this problem, provided in [10], whose running time can be expressed by a polynomial in \(w_{\max }\) and \(n\), where \(w_{\max }=\max _{i\in [n], k\in [K]}w^{S_k}_i\). An FPTAS for Min–Max Regret IS is a consequence of [1, Theorem 2] and the existence of the pseudopolynomial algorithm for Min–Max Regret IS, built in [10], whose running time can be expressed by a polynomial in \(U\) and \(n\), where \(U\) is an upper bound on \( opt_2\) such that \(U\le K\cdot L\) and \(L\) is a lower bound on \( opt_2\). Of course, such lower and upper bounds can be provided by executing the \(K\)-approximation algorithm (see Theorem 5). \(\square \)

We now turn to the interval uncertainty representation. For a given solution \(X\in \Phi \), let \(S_X\) be the scenario under which the weights of \(v_i\in X\) are \(\underline{w}_i\) and the weights of \(v_i \notin X\) are \(\overline{w}_i\) for \(i\in [n]\). It has been shown in [10] that the maximal regret of \(X\) is \(Z(X)=F^*(S_X)-F(X,S_X)\). This property will be useful in proving the next two results. The first theorem gives an answer to a question about the complexity of Min–Max Regret IS under the interval uncertainty representation (only Max–Min IS has been known to be polynomially solvable [10], so far).
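The property \(Z(X)=F^*(S_X)-F(X,S_X)\) makes the maximum regret of a given solution computable with a single call to a deterministic solver. The sketch below illustrates this; `solve_is` is a compact dynamic program for interval graphs and `max_regret` is a hypothetical helper name, neither taken from [10].

```python
from bisect import bisect_left


def solve_is(intervals, weights):
    """Deterministic IS on an interval graph: return (value, tuple_of_indices)."""
    order = sorted(range(len(intervals)), key=lambda i: intervals[i][1])
    ends = [intervals[i][1] for i in order]
    best = [(0, ())]
    for k, i in enumerate(order):
        p = bisect_left(ends, intervals[i][0], 0, k)
        cand = (best[p][0] + weights[i], best[p][1] + (i,))
        best.append(cand if cand[0] > best[k][0] else best[k])
    return best[-1]


def max_regret(intervals, lower, upper, X):
    """Z(X) under interval uncertainty, evaluated in the scenario S_X."""
    chosen = set(X)
    # S_X: lower bounds on the vertices of X, upper bounds everywhere else.
    s_x = [lower[i] if i in chosen else upper[i] for i in range(len(intervals))]
    return solve_is(intervals, s_x)[0] - sum(s_x[i] for i in chosen)


intervals = [(0, 2), (1, 3), (4, 5)]
lower = [1, 2, 1]
upper = [4, 2, 3]
print(max_regret(intervals, lower, upper, (0, 2)))
print(max_regret(intervals, lower, upper, (1, 2)))
```

In this example the first solution has maximum regret 1 and the second has maximum regret 2, so evaluating a solution is easy; the difficulty of Min–Max Regret IS lies in searching over \(\Phi \).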

Theorem 7

Min–Max Regret IS under interval uncertainty representation is NP-hard.

Proof

We show a polynomial time reduction from the following Partition problem which is known to be NP-complete [3]. We are given a collection \(\mathcal {C}=(a_1,\dots ,a_n)\) of positive integers. We ask if there is a subset \(I\subseteq [n]\) such that \(\sum _{i\in I} a_i=\sum _{i\in [n]\setminus I} a_i\). Let us define \(b=\frac{1}{2}\sum _{i\in [n]} a_i\). We now build the corresponding instance of Min–Max Regret IS as follows. The family of intervals \(\mathcal {I}\) contains two intervals \(I_{i1}=I_{i2}=[2i,2i+1]\) for each \(i\in [n]\) and one interval \(J=[1,2n+1]\). The corresponding interval graph for \(\mathcal {I}\) is shown in Fig. 3. The intervals from \(\mathcal {I}\) and the interval \(J\) refer to the vertices in \(G\).

Observe that each maximal independent set in \(G\) contains either one vertex \(J\) or exactly \(n\) vertices, one from each pair \(I_{i1}, I_{i2}\), \(i\in [n]\). The interval weight of \(I_{i1}\) is equal to \([3b-\frac{3}{2}a_i,3b]\), the interval weight of \(I_{i2}\) is equal to \([3b-a_i,3b-a_i]\), and the interval weight of vertex \(J\) is \([0,3nb-b]\). We now show that the answer to Partition is ‘yes’ if and only if there is an independent set \(X\) in \(G\) such that \(Z(X)\le \frac{3}{2}b\), i.e. if and only if \(opt_2\le \frac{3}{2}b\).

Suppose that the answer to Partition is ‘yes’. Let \(I\subseteq [n]\) be such that \(\sum _{i\in I} a_i=\sum _{i\notin I} a_i=b\). Let us form an independent set \(X\) in \(G\) by choosing the vertices \(I_{i1}\) for \(i\in I\) and \(I_{i2}\) for \(i\notin I\). It holds \(F(X,S_X)=\sum _{i\in I} (3b-\frac{3}{2}a_i)+\sum _{i\notin I} (3b-a_i)=3nb-\frac{5}{2}b\) and \(F^*(S_X)=\max \{3nb-b, \sum _{i\notin I} 3b+\sum _{i\in I} (3b-a_i)\}=3nb-b\). Hence \(Z(X)= 3nb-b-3nb+\frac{5}{2}b=\frac{3}{2}b\).

Assume now that \(opt_2\le \frac{3}{2}b\), so there is an independent set \(X\) in \(G\) such that \(Z(X)\le \frac{3}{2}b\). It must be \(X\ne \{J\}\) since \(Z(\{J\})\ge 3nb\). Hence \(X\) is formed by the vertices \(I_{i1}\), \(I_{i2}\) for \(i \in [n]\). From the construction of graph \(G\) it follows that \(X\) contains either \(I_{i1}\) or \(I_{i2}\) for each \(i\in [n]\) (but not both). Let \(I\) be the subset of \([n]\) such that \(I_{i1}\in X\) for each \(i\in I\). It holds \(F(X,S_X)=\sum _{i\in I} (3b-\frac{3}{2}a_i)+\sum _{i\notin I} (3b-a_i)=3nb-2b-\frac{1}{2}\sum _{i\in I} a_i\) and \(F^*(S_X)=\max \{3nb-b,\sum _{i\notin I} 3b+\sum _{i\in I} (3b-a_i)\}=\max \{3nb-b,3nb-\sum _{i\in I} a_i\}\). In consequence

\[Z(X)=F^*(S_X)-F(X,S_X)=\max \Big \{b+\frac{1}{2}\sum _{i\in I} a_i,\; 2b-\frac{1}{2}\sum _{i\in I} a_i\Big \}\]

and \(Z(X) \le \frac{3}{2}b\) implies that \(\sum _{i\in I} a_i=b\) and, consequently, \(I\) forms a partition of \(\mathcal {C}\). \(\square \)
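The reduction from Partition can be verified numerically on small collections. The sketch below builds the interval weights from the proof and computes \(opt_2\) by enumerating \(\{J\}\) and all \(2^n\) maximal sets that pick one vertex from each pair \(I_{i1}, I_{i2}\); restricting attention to maximal sets is safe here because, with nonnegative weights, adding a vertex to an independent set never increases its regret under any scenario. The function name is hypothetical and the two sample collections are chosen for illustration.

```python
from fractions import Fraction
from itertools import product


def min_max_regret_of_partition_instance(a):
    """Return opt_2 of the Min-Max Regret IS instance built from collection a."""
    n, b = len(a), Fraction(sum(a), 2)
    lo1 = [3 * b - Fraction(3, 2) * ai for ai in a]   # lower weight of I_{i1}
    hi1 = [3 * b] * n                                 # upper weight of I_{i1}
    w2 = [3 * b - ai for ai in a]                     # fixed weight of I_{i2}
    hi_j = 3 * n * b - b                              # upper weight of J
    # Z({J}): J takes its lower weight 0 and every I_{i1} its upper weight 3b.
    best = 3 * n * b
    for pick in product((1, 2), repeat=n):
        # Weight of X in the scenario S_X (lower bounds on X).
        f_x = sum(lo1[i] if pick[i] == 1 else w2[i] for i in range(n))
        # Best alternative made of pair vertices in S_X: I_{i1} is at its lower
        # bound when picked and at its upper bound otherwise.
        alt = sum(
            max(lo1[i] if pick[i] == 1 else hi1[i], w2[i]) for i in range(n)
        )
        best = min(best, max(hi_j, alt) - f_x)
    return best


for collection in [(1, 2, 3), (1, 1, 4)]:
    b = Fraction(sum(collection), 2)
    print(collection, min_max_regret_of_partition_instance(collection), Fraction(3, 2) * b)
```

For \((1,2,3)\), which can be split into \(\{3\}\) and \(\{1,2\}\), the optimum equals \(\frac{3}{2}b=\frac{9}{2}\); for \((1,1,4)\), which admits no partition, the optimum is 5 and exceeds \(\frac{3}{2}b\).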

We now provide a simple approximation algorithm with a performance ratio of 2. It outputs an optimal solution to the IS problem with the deterministic vertex weights being the midpoints of the corresponding weight intervals, i.e. \(\hat{w}_i:=\frac{1}{2}(\underline{w}_i+\overline{w}_i)\) for all \(i\in [n]\). Obviously, its running time is \(O(T(n))\), where \(T(n)\) is time for solving the IS problem.

Theorem 8

Min–Max Regret IS under interval uncertainty representation is approximable within 2.

Proof

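The analysis will be similar to that in [5]. The difference is that the underlying deterministic problems discussed in [5] are minimization ones, whereas the deterministic IS is a maximization problem. So, the result obtained in [5] cannot be directly applied to Min–Max Regret IS. Let \(\hat{w}_i=\frac{1}{2}(\underline{w}_i+\overline{w}_i)\) for all \(i\in [n]\) and let \(\hat{X}\) be an optimal solution for the deterministic weights \(\hat{w}_i\), \(i\in [n]\). Let us choose any \(X\in \Phi \). It holds \(\sum _{v_i\in \hat{X}}(\underline{w}_i+\overline{w}_i)\ge \sum _{v_i\in X}(\underline{w}_i+\overline{w}_i)\), which implies:

\[\sum _{v_i\in \hat{X}\setminus X}\overline{w}_i-\sum _{v_i\in X\setminus \hat{X}}\underline{w}_i\ge \sum _{v_i\in X\setminus \hat{X}}\overline{w}_i-\sum _{v_i\in \hat{X}\setminus X}\underline{w}_i.\]

Therefore, \(Z(X)\) fulfills the following inequality:

\[Z(X)\ge F(\hat{X},S_X)-F(X,S_X)=\sum _{v_i\in \hat{X}\setminus X}\overline{w}_i-\sum _{v_i\in X\setminus \hat{X}}\underline{w}_i\ge \sum _{v_i\in X\setminus \hat{X}}\overline{w}_i-\sum _{v_i\in \hat{X}\setminus X}\underline{w}_i. \qquad (1)\]

Clearly, \(F(\hat{X},S_{\hat{X}})=F(X,S_{\hat{X}})+\sum _{v_i\in \hat{X}\setminus X} \underline{w}_i-\sum _{v_i\in X\setminus \hat{X}} \overline{w}_i\). Hence \(Z(\hat{X})=F^*(S_{\hat{X}})-F(\hat{X},S_{\hat{X}})=F^*(S_{\hat{X}})-F(X,S_{\hat{X}})+\sum _{v_i\in X\setminus \hat{X}} \overline{w}_i-\sum _{v_i\in \hat{X}\setminus X} \underline{w}_i\). Since \(Z(X)\ge F^*(S_{\hat{X}})-F(X,S_{\hat{X}})\), the maximal regret of \(\hat{X}\) can be bounded as follows:

\[Z(\hat{X})\le Z(X)+\sum _{v_i\in X\setminus \hat{X}}\overline{w}_i-\sum _{v_i\in \hat{X}\setminus X}\underline{w}_i. \qquad (2)\]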

Inequalities (1) and (2) imply \(Z(\hat{X})\le 2Z(X)\) for any \(X\in \Phi \), and \(Z(\hat{X})\le 2\cdot opt_2\).

The bound of 2 is tight, as the example in Fig. 4 shows. The interval graph is a clique composed of 3 vertices with the interval weights given in the figure. The algorithm may return solution \(X=\{v_3\}\). But \(Z(X)=2\) while a trivial verification shows that \(opt_2=1\). \(\square \)
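The midpoint algorithm can be sketched in a few lines. The example instance below is a 3-vertex clique with hypothetical interval weights \([0,2]\), \([0,2]\), \([1,1]\); these are chosen for illustration and are not claimed to be the weights of Fig. 4. All midpoints equal 1, so the solver may return the first vertex, whose maximum regret is twice the optimum. The helper names are assumptions, and the optimum is found by brute force.

```python
from bisect import bisect_left
from itertools import combinations


def solve_is(intervals, weights):
    """Deterministic IS on an interval graph: return (value, tuple_of_indices)."""
    order = sorted(range(len(intervals)), key=lambda i: intervals[i][1])
    ends = [intervals[i][1] for i in order]
    best = [(0, ())]
    for k, i in enumerate(order):
        p = bisect_left(ends, intervals[i][0], 0, k)
        cand = (best[p][0] + weights[i], best[p][1] + (i,))
        best.append(cand if cand[0] > best[k][0] else best[k])
    return best[-1]


def max_regret(intervals, lower, upper, X):
    """Z(X) = F*(S_X) - F(X, S_X) under interval uncertainty."""
    s_x = [lower[i] if i in X else upper[i] for i in range(len(intervals))]
    return solve_is(intervals, s_x)[0] - sum(s_x[i] for i in X)


def midpoint_heuristic(intervals, lower, upper):
    """Solve IS for lower + upper, i.e. twice the midpoints (same maximizer)."""
    doubled = [lo + up for lo, up in zip(lower, upper)]
    x_hat = solve_is(intervals, doubled)[1]
    return x_hat, max_regret(intervals, lower, upper, x_hat)


def brute_force_opt(intervals, lower, upper):
    """Minimum of Z(X) over all independent sets (exponential, tiny inputs only)."""
    n = len(intervals)
    def disjoint(i, j):
        return intervals[i][1] < intervals[j][0] or intervals[j][1] < intervals[i][0]
    return min(
        max_regret(intervals, lower, upper, X)
        for r in range(n + 1)
        for X in combinations(range(n), r)
        if all(disjoint(i, j) for i, j in combinations(X, 2))
    )


clique = [(0, 1), (0, 1), (0, 1)]
lower, upper = [0, 0, 1], [2, 2, 1]
print(midpoint_heuristic(clique, lower, upper), brute_force_opt(clique, lower, upper))
```

With the tie-breaking of this particular solver the heuristic returns the first vertex with maximum regret 2, while the vertex with the degenerate weight \([1,1]\) achieves regret 1.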

3 Conclusions

In this paper, we have studied the max–min and min–max regret versions of the maximum weighted independent set problem on interval graphs with uncertain vertex weights modeled by scenarios. We have provided new complexity and approximation results on the problems that complete the ones previously obtained in the literature. For the discrete scenario uncertainty representation, we have shown that if the number of scenarios \(K\) is a part of the input, then the max–min version of the problem is not at all approximable, while the min–max regret version is approximable within \(K\) and not approximable within \(O(\log ^{1-\epsilon }K)\) for any \(\epsilon >0\) unless the problems in NP have quasi-polynomial algorithms. Furthermore, both problems admit FPTAS’s, when \(K\) is constant. For the interval uncertainty representation, we have proved that the min–max regret version is NP-hard, providing in this way an answer to a question about the complexity of the problem. We have also shown that it is approximable within 2. There are still some open questions regarding the min–max regret version of the problem. It would be interesting to provide an approximation algorithm with a better than \(K\) approximation ratio for the discrete uncertainty representation (when \(K\) is a part of the input) and a better than 2 approximation ratio for the interval uncertainty representation. We also do not know whether the latter problem is strongly NP-hard, so it may still be solvable in pseudopolynomial time and may even admit an FPTAS.

References

1. Aissi, H., Bazgan, C., Vanderpooten, D.: General approximation schemes for minmax (regret) versions of some (pseudo-)polynomial problems. Discrete Optim. 7, 136–148 (2010)

2. Arora, S., Lund, C.: Hardness of approximations. In: Hochbaum, D. (ed) Approximation Algorithms for NP-Hard Problems, chapter 10, pp. 1–54. PWS (1995)

3. Garey, M.R., Johnson, D.S.: Computers and Intractability. A Guide to the Theory of NP-Completeness. W. H. Freeman and Company, New York (1979)

4. Kasperski, A.: Discrete Optimization with Interval Data: Minmax Regret and Fuzzy Approach, volume 228 of Studies in Fuzziness and Soft Computing. Springer, Berlin, Heidelberg (2008)

5. Kasperski, A., Zieliński, P.: An approximation algorithm for interval data minmax regret combinatorial optimization problems. Inf. Process. Lett. 97, 177–180 (2006)

6. Kouvelis, P., Yu, G.: Robust Discrete Optimization and its Applications. Kluwer Academic Publishers, Dordrecht (1997)

7. Mastrolilli, M., Mutsanas, N., Svensson, O.: Single machine scheduling with scenarios. Theor. Computer Sci. 477, 57–66 (2013)

8. Pal, M., Bhattacharjee, G.: A sequential algorithm for finding a maximum weight k-independent set on interval graphs. Int. J. Computer Math. 60, 205–214 (1996)

9. Saha, A., Pal, M., Pal, T.K.: Selection of programme slots of television channels for giving advertisement: A graph theoretic approach. Inf. Sci. 177, 2480–2492 (2007)

10. Talla Nobibon, F., Leus, R.: Robust maximum weighted independent-set problems on interval graphs. Optim. Lett. 8, 227–235 (2014)

Acknowledgments

This work was partially supported by the National Center for Science (Narodowe Centrum Nauki), Grant 2013/09/B/ST6/01525.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

About this article

Cite this article

Kasperski, A., Zieliński, P. Complexity of the robust weighted independent set problems on interval graphs. Optim Lett 9, 427–436 (2015). https://doi.org/10.1007/s11590-014-0773-3
