Abstract

A problem of optimally purchasing electricity at a real-valued spot price (that is, allowing negative prices) has been recently addressed in De Angelis et al. (SIAM J Control Optim 53(3), 1199–1223, 2015). The problem can be considered one of irreversible investment with a cost function which is non convex with respect to the control variable. In this paper we study optimal entry into the investment plan. The optimal entry policy can have an irregular boundary, with a kinked shape.

1 Introduction

In this paper we consider the question of optimal entry into a plan of irreversible investment with a cost function which is non convex with respect to the control variable. The irreversible investment problem is that of [7], in which the investor commits to delivering a unit of electricity to a consumer at a future random time \(\Theta \) and may purchase and store electricity in real time at the stochastic (and potentially negative) spot price \((X_t)_{t \ge 0}\). In the optimal entry problem considered here, the consumer is willing to offer a single fixed initial payment \(P_0\) in return for this commitment and the investor must choose a stopping time \(\tau \) at which to accept the initial premium and enter the contract. If \(\Theta \le \tau \) then the investor’s opportunity is lost and in this case no cashflows occur. If \(\tau <\Theta \) then the inventory must be full at the time \(\Theta \) of demand, any deficit being met by a less efficient charging method whose additional cost is represented by a convex factor \(\Phi \) of the undersupply. The investor seeks to minimise the total expected costs, net of the initial premium \(P_0\), by choosing \(\tau \) optimally and by optimally filling the inventory from time \(\tau \) onwards.

Economic problems of optimal entry and exit under uncertain market prices have attracted significant interest. In the simplest formulation the timing of entry and/or exit is the only decision to be made and the planning horizon is infinite: see for example [8, 19], in which the market price is a geometric Brownian motion (GBM), and related models in [9, 22]. An extension of this problem to multiple types of economic activity is considered in [4] and solved using stochastic calculus. In addition to the choice of entry / exit time, the decision problem may also depend on another control variable representing for instance investment or production capacity. For example in [10] the rate of production is modelled as a progressively measurable process whereas in [13] the production capacity is a process of bounded variation. In this case the problem is usually solved by applying the dynamic programming principle to obtain an associated Hamilton–Jacobi–Bellman (HJB) equation. If the planning horizon is finite then the optimal stopping and control strategies are time-dependent and given by suitable curves, see for example [6].

Typically, although not universally, the costs in the aforementioned problems are assumed to be convex with respect to the control variable. In addition to being reasonable in a wide range of problems, this assumption usually simplifies the mathematical analysis. In the present problem the underlying commodity is electricity, for which negative prices have been observed in several markets (see, e.g., [12, 18]). The spot price is modelled by an Ornstein–Uhlenbeck process which is mean reverting and may take negative values and, as shown in [7], this makes our control problem neither convex nor concave: to date such problems have received relatively little attention in the literature. In our setting the control variable represents the cumulative amount of electricity purchased by the investor in the spot market for storage. This control is assumed to be monotone, so that the sale of electricity back to the market is not possible, and also bounded to reflect the fact that the inventory used for storage has finite capacity. The investment problem falls into the class of singular stochastic control (SSC) problems (see [1, 15, 16], among others).

Borrowing ideas from [13], we begin by decoupling the control (investment) problem from the stopping (entry) problem. The value function of this mixed stopping-then-control problem is shown to coincide with that of an appropriate optimal stopping problem over an infinite time-horizon whose gain function is the value function of the optimal investment problem with fixed entry time equal to zero. Unlike the situation in [13], however, the gain function in the present paper is a function of two variables without an explicit representation. Indeed [7] identifies three regimes for the gain function, depending on the problem parameters, only two of which are solved rigorously: a reflecting regime, in which the control may be singularly continuous, and a repelling regime, in which the control is purely discontinuous. We therefore only address these two cases in this paper and leave the remaining open case for future work.

The optimal entry policies obtained below depend on the spot price and the inventory level and are described by suitable curves. On the one hand, for the reflecting case we prove that the optimal entry time is of a single threshold type as in [10, 13]. On the other hand, the repelling case is interesting since it gives either a single threshold strategy or, alternatively, a complex optimal entry policy such that for any fixed value of the inventory level, the continuation region may be disconnected.

The paper is organised as follows. In Sect. 2 we set up the mixed irreversible investment-optimal entry problem, whose two-step formulation is then obtained in Sect. 3. Section 4 is devoted to the analysis of the optimal entry decision problem, with the repelling case studied separately in Sect. 5. In Sect. 5.2 we provide a discussion of the complex optimal entry policy in this case, giving a possible economic interpretation.

2 Problem formulation

We begin by recalling the optimal investment problem introduced in [7]. Let \((\Omega ,\mathcal {A},\mathsf P)\) be a complete probability space, on which is defined a one-dimensional standard Brownian motion \((B_t)_{t\ge 0}\). We denote by \(\mathbb {F}:=(\mathcal{F}_t)_{t\ge 0}\) the filtration generated by \((B_t)_{t\ge 0}\) and augmented by \(\mathsf P\)-null sets. As in [7], the spot price of electricity X follows a standard time-homogeneous Ornstein–Uhlenbeck process with positive volatility \(\sigma \), positive adjustment rate \(\theta \) and positive asymptotic (or equilibrium) value \(\mu \); i.e., \(X^x\) is the unique strong solution of

$$\begin{aligned} dX^x_t=\theta (\mu -X^x_t)\,dt+\sigma \,dB_t, \quad t>0, \qquad X^x_0=x\in \mathbb {R}. \end{aligned}$$

Note that this model allows negative prices, which is consistent with the requirement to balance supply and demand in real time in electrical power systems and also consistent with the observed prices in several electricity spot markets (see, e.g., [12, 18]).
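As a hedged illustration of this point, the following sketch samples an Ornstein–Uhlenbeck price on a time grid, using the Gaussian transition law of the process, and reports the share of sampled times at which the price is negative. The function name `simulate_ou`, the grid, the seed and the parameter values (borrowed from the caption of Fig. 1) are assumptions made for illustration and are not taken from [7].

```python
import math
import random

def simulate_ou(x0, mu, theta, sigma, dt, n_steps, rng):
    """Sample X at times 0, dt, ..., n_steps*dt using the exact Gaussian transition."""
    decay = math.exp(-theta * dt)
    # Conditional standard deviation of X_{t+dt} given X_t.
    step_sd = sigma * math.sqrt((1.0 - decay * decay) / (2.0 * theta))
    path = [x0]
    for _ in range(n_steps):
        mean = mu + (path[-1] - mu) * decay   # mean reversion towards mu
        path.append(mean + step_sd * rng.gauss(0.0, 1.0))
    return path

rng = random.Random(0)  # fixed seed, chosen arbitrarily for reproducibility
path = simulate_ou(x0=1.0, mu=1.0, theta=1.0, sigma=3.0, dt=0.01, n_steps=20000, rng=rng)
negative_share = sum(1 for x in path if x < 0.0) / len(path)
print(f"share of sampled times with a negative price: {negative_share:.3f}")
```

Because the transition law is sampled exactly, the time step only controls the resolution of the grid and introduces no discretisation bias at the grid points.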

We denote by \(\Theta \) the random time of a consumer’s demand for electricity. This is modelled as an \(\mathcal {A}\)-measurable positive random variable independent of \(\mathbb {F}\) and distributed according to an exponential law with parameter \(\lambda >0\), so that effectively the time of demand is completely unpredictable. Note also that since \(\Theta \) is independent of \(\mathbb {F}\), the Brownian motion \((B_t)_{t\ge 0}\) remains a Brownian motion in the enlarged filtration \(\mathbb {G}:=(\mathcal {G}_t)_{t\ge 0}\), with \(\mathcal {G}_t:=\mathcal {F}_t \vee \sigma (\{\Theta \le s\}:\, s \le t)\), under which \(\Theta \) becomes a stopping time (see, e.g., Chapter 5, Section 6 of [14]).

We will denote by \(\tau \) any element of \(\mathcal {T}\), the set of all \((\mathcal {F}_t)\)-stopping times. At any \(\tau <\Theta \) the investor may enter the contract by accepting the initial premium \(P_0\) and committing to deliver a unit of electricity at the time \(\Theta \). At any time during \([\tau , \Theta )\) electricity may be purchased in the spot market and stored, thus increasing the total inventory \(C^{c,\nu } = (C^{c,\nu }_t)_{t \ge 0}\), which is defined as

$$\begin{aligned} C^{c,\nu }_t:=c+\nu _t, \quad t\ge 0. \end{aligned}$$

Here \(c\in [0,1]\) denotes the inventory at time zero and \(\nu _t\) is the cumulative amount of electricity purchased up to time t. We specify the (convex) set of admissible investment strategies by requiring that \(\nu \in \mathcal {S}^c_{\tau }\), where

The amount of energy in the inventory is bounded above by 1 to reflect the investor’s limited ability to store. The left continuity of \(\nu \) ensures that any electricity purchased at time \(\Theta \) is irrelevant for the optimisation. The requirement that \(\nu \) be \((\mathcal {F}_t)\)-adapted guarantees that all investment decisions are taken only on the basis of the price information available up to time t. The optimisation problem is given by

Here the first term represents expenditure in the spot market and the second is a penalty function: if the inventory is not full at time \(\Theta \) then it is filled by a less efficient method, so that the terminal spot price is weighted by a strictly convex function \(\Phi \). We make the following standing assumption:

Assumption 2.1

\(\Phi : \mathbb {R} \rightarrow \mathbb {R}_+\) lies in \(C^2(\mathbb {R})\) and is decreasing and strictly convex on \([0,1]\) with \(\Phi (1)=0\).

For simplicity we assume that costs are discounted at the rate \(r=0\). This involves no loss of generality since the independent random time of demand performs an effective discounting, as follows. Recalling that \(\Theta \) is independent of \(\mathbb {F}\) and distributed according to an exponential law with parameter \(\lambda >0\), Fubini’s theorem gives that (2.3) may be rewritten as

with

setting this expectation equal to 0 on the set \(\{\tau = +\infty \}\). The discounting of costs may therefore be accomplished by appropriately increasing the exponential parameter \(\lambda \).
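The effective discounting can be made explicit with a short computation. The following is a sketch for a generic bounded \(\mathbb {F}\)-adapted process Y (a placeholder introduced here, not the exact functional of (2.4)); it uses only the independence of \(\Theta \) from \(\mathbb {F}\), the tail probability \(\mathsf P(\Theta >t)=e^{-\lambda t}\) and Fubini's theorem:

$$\begin{aligned} \mathsf E\Big [Y_\Theta \mathbb {1}_{\{\Theta >\tau \}}\Big ]&=\mathsf E\bigg [\int _\tau ^\infty \lambda e^{-\lambda t}\,Y_t\,dt\bigg ],\\ \mathsf E\bigg [\int _{[\tau ,\Theta )}Y_t\,d\nu _t\bigg ]&=\mathsf E\bigg [\int _{[\tau ,\infty )}\mathbb {1}_{\{t<\Theta \}}\,Y_t\,d\nu _t\bigg ]=\mathsf E\bigg [\int _{[\tau ,\infty )}e^{-\lambda t}\,Y_t\,d\nu _t\bigg ]. \end{aligned}$$

A discount rate \(r>0\) would multiply both integrands by \(e^{-rt}\), so that the exponent becomes \(-(\lambda +r)t\).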

3 Decoupling the problem and background material

To deal with (2.4) we borrow arguments from [13] to show that the stopping (entry) problem can be split from the control (investment) problem, leading to a two-step formulation. We first briefly recall some results from [7], where the control problem has the value function

with

As was shown in [7, Sec. 2], the function

appears in an optimal stopping functional which may be associated with U. For convenience we let \(\hat{c}\in \mathbb {R}\) denote the unique solution of \(k(c)=0\) if it exists and write

We formally introduce the variational problem associated with U:

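where \(\mathbb {L}_X\) is the second order differential operator associated to the infinitesimal generator of X:

$$\begin{aligned} \mathbb {L}_Xf(x):=\tfrac{1}{2}\sigma ^2f''(x)+\theta (\mu -x)f'(x), \quad f\in C^2(\mathbb {R}),\ x\in \mathbb {R}. \end{aligned}\tag{3.6}$$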

As is standard in such control problems we define the inaction set for problem (3.1) by

The non convexity of the expectation (3.2) with respect to the control variable \(\nu _t\), which arises due to the real-valued factor \(X^x_t\), places it outside the standard existing literature on SSC problems. We therefore collect here the results proved in Sections 2 and 3 of [7].

Proposition 3.1

We have \(|U(x,c)|\le C(1+|x|)\) for \((x,c)\in \mathbb {R}\times [0,1]\) and a suitable constant \(C>0\). Moreover the following holds

(i) If \(\hat{c}<0\) (i.e. \(k(\,\cdot \,)>0\) in [0, 1]), then \(U\in C^{2,1}(\mathbb {R}\times [0,1])\) and it is a classical solution of (3.5). The inaction set (3.7) is given by

$$\begin{aligned} \mathcal C=\{(x,c)\in \mathbb {R}\times [0,1]\,:\,x>\beta _*(c) \} \end{aligned}\tag{3.8}$$

for some function \(\beta _*\in C^1([0,1])\) which is decreasing and dominated from above by \(x_0(c)\wedge \hat{x}_0(c)\), \(c\in [0,1]\), with

$$\begin{aligned} x_0(c):=-\theta \mu \Phi '(c)/k(c)\quad \text {and}\quad \hat{x}_0(c):=\theta \mu /k(c) \end{aligned}\tag{3.9}$$

(cf. [7, Prop. 2.5 and Thm. 2.8]). For \(c\in [0,1]\) the optimal control is given by

$$\begin{aligned} \nu _t^*=\left[ g_*\left( \inf _{0 \le s \le t} X^x_s\right) -c\right] ^+, \quad t>0, \quad \nu _0^*= 0, \end{aligned}\tag{3.10}$$

with \(g_*(x):=\beta _*^{-1}(x)\), \(x\in (\beta _*(1),\beta _*(0))\), and \(g_* \equiv 0\) on \([\beta _*(0),\infty )\), \(g_* \equiv 1\) on \((-\infty ,\beta _*(1)]\).

(ii) If \(\hat{c}>1\) (i.e. \(k(\,\cdot \,)<0\) in [0, 1]), then \(U\in W^{2,1,\infty }_{loc}(\mathbb {R}\times [0,1])\) and it solves (3.5) in the a.e. sense. The inaction set (3.7) is given by

$$\begin{aligned} \mathcal C=\{(x,c)\in \mathbb {R}\times [0,1]\,:\,x<\gamma _*(c) \} \end{aligned}\tag{3.11}$$

with suitable \(\gamma _*\in C^1([0,1])\), decreasing and bounded from below by \(\tilde{x}(c)\vee \overline{x}_0(c)\), \(c\in [0,1]\), with

$$\begin{aligned} \overline{x}_0(c):= \theta \mu \Phi (c)/\zeta (c)\quad \text {and}\quad \tilde{x}(c):=\theta \mu (1-c)/\zeta (c) \end{aligned}\tag{3.12}$$

(cf. [7, Thm. 3.1 and Prop. 3.4]). Moreover \(U(x,c)=x(1-c)\) for \(x\ge \gamma _*(c)\), \(c\in [0,1]\), and for any \(c\in [0,1]\) the optimal control is given by (cf. [7, Thm. 3.5])

$$\begin{aligned} \nu ^*_t:=\left\{ \begin{array}{ll} 0, &{}\quad t\le \tau _*,\\ (1-c), &{}\quad t>\tau _* \end{array} \right. \end{aligned}\tag{3.13}$$

with \(\tau _*:=\inf \big \{t\ge 0\,:\,X^x_t\ge \gamma _*(c)\big \}\).
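The two optimal controls of Proposition 3.1 can be illustrated on a discretely sampled price path. The sketch below is only indicative: the boundaries \(\beta _*\) and \(\gamma _*\) have no explicit expression, so a linear decreasing boundary with hypothetical endpoints `beta0` and `beta1`, and a hypothetical constant `gamma` standing in for \(\gamma _*(c)\), are assumed. The function names are also introduced here for illustration.

```python
def reflecting_control(path, c, beta0, beta1):
    """Discrete-time version of (3.10) for a hypothetical linear boundary.

    The boundary beta(c) = beta0 + (beta1 - beta0) * c with beta1 < beta0 is an
    assumption made for illustration; the true boundary beta_* is not explicit.
    """
    def g(x):
        # Inverse of the linear boundary, extended by 0 above beta0 and by 1 below beta1.
        return min(1.0, max(0.0, (beta0 - x) / (beta0 - beta1)))

    nu = [0.0]                      # nu_0 = 0 as in (3.10)
    running_min = path[0]
    for x in path[1:]:
        running_min = min(running_min, x)
        nu.append(max(g(running_min) - c, 0.0))
    return nu

def bang_bang_control(path, c, gamma):
    """Discrete-time version of (3.13): fill the inventory right after the price first reaches gamma."""
    nu, hit = [], False
    for x in path:
        nu.append(1.0 - c if hit else 0.0)  # nu stays 0 up to and including the hitting time
        hit = hit or x >= gamma
    return nu

path = [1.0, 0.5, -0.2, 0.3, -1.0, 2.0]
print(reflecting_control(path, c=0.2, beta0=0.0, beta1=-2.0))
print(bang_bang_control(path, c=0.2, gamma=1.5))
```

In the first case the inventory is raised gradually, and only when the running minimum of the price reaches a new low below the boundary; in the second case the whole remaining capacity \(1-c\) is bought in a single jump.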

We now perform the decoupling into two sub-problems, one of control and one of stopping.

Proposition 3.2

If \(\hat{c}<0\) or \(\hat{c}>1\) then the value function V of (2.4) can be equivalently rewritten as

with the convention \(e^{-\lambda \tau }(U(X^x_\tau ,c)-P_0):=\liminf _{t \uparrow \infty } e^{-\lambda t}(U(X^x_t,c)-P_0) =0\) on \(\{\tau =\infty \}\).

Proof

Let us set

Thanks to the results of Proposition 3.1 we can apply Itô’s formula to U, in the classical sense in case (i) and in its generalised version (cf. [11, Ch. 8, Sec. VIII.4, Thm. 4.1]) in case (ii). In particular for an arbitrary stopping time \(\tau \), an arbitrary admissible control \(\nu \in \mathcal {S}^c_\tau \) and with \(\tau _n:=\tau \wedge n\), \(n\in \mathbb {N}\) we get

where we have used standard localisation techniques to remove the martingale term, and decomposed the control into its continuous and jump parts, i.e. \(d\nu _t=d\nu _t^{cont}+\Delta \nu _t\), with \(\Delta \nu _t:=\nu _{t+}-\nu _t\). Since U solves the HJB equation (3.5) it is now easy to prove (cf. for instance [7, Thm. 2.8]) that, in the limit as \(n\rightarrow \infty \), one has

and therefore

for an arbitrary stopping time \(\tau \) and an arbitrary control \(\nu \in \mathcal {S}^c_\tau \). Hence by taking the infimum over all possible stopping times and over all \(\nu \in \mathcal {S}^c_\tau \), (2.4), (3.15) and (3.18) give \(w(x,c)\le V(x,c)\).

To prove that equality holds, let us fix an arbitrary stopping time \(\tau \). In case i) of Proposition 3.1, one can pick a control \(\nu ^\tau \in \mathcal {S}^c_\tau \) of the form

with \(\nu ^*\) as in (3.10), to obtain equality in (3.17) and hence in (3.18). In case ii) instead we define \(\sigma ^*_\tau :=\inf \{t\ge \tau \,:\,X^x_t\ge \gamma _*(c)\}\) and pick \(\nu ^\tau \in \mathcal {S}^c_\tau \) of the form

to have again equality in (3.17) and hence in (3.18). Now taking the infimum over all \(\tau \) we find \(w(x,c)\ge V(x,c)\).

To complete the proof we need to prove the last claim; that is, \(\liminf _{t \uparrow \infty } e^{-\lambda t}(U(X^x_t,c)-P_0) =0\) a.s. It suffices to show that \(\liminf _{t \uparrow \infty } e^{-\lambda t}|U(X^x_t,c)-P_0| =0\) a.s. To this end recall that \(|U(x,c)|\le C(1+|x|)\), for \((x,c)\in \mathbb {R}\times [0,1]\) and a suitable constant \(C>0\) (cf. Proposition 3.1), and then apply Lemma 7.1 in “Appendix 1”. \(\square \)

Remark 3.3

The optimal stopping problems (3.14) depend only parametrically on the inventory level c (the case \(c=1\) is trivial as \(U(\,\cdot \,,1)=0\) on \(\mathbb {R}\) and the optimal strategy is to stop at once for all initial points \(x\in \mathbb {R}\)).

It is worth noting that we were able to give a very simple proof of the decoupling knowing the structure of the optimal control for problem (3.1). In wider generality one could obtain a proof based on an application of the dynamic programming principle although in that case it is well known that some delicate measurability issues should be addressed as well (see [13], “Appendix 1”). Although each of the optimal stopping problems (3.14) is for a one-dimensional diffusion over an infinite time horizon, standard methods find only limited application since no explicit expression is available for their gain function \(U(x,c)-P_0\).

In the next section we show that the cases \(\hat{c}<0\) and \(\hat{c}>1\), which are the regimes solved rigorously in [7], have substantially different optimal entry policies. To conclude with the background we prove a useful concavity result.

Lemma 3.4

The maps \(x\mapsto U(x,c)\) and \(x\mapsto V(x,c)\) are concave for fixed \(c\in [0,1]\).

Proof

We begin by observing that \(X^{px+(1-p)y}_t=pX^x_t+(1-p)X^y_t\) for all \(t\ge 0\) and any \(p\in (0,1)\). Hence (3.2) gives

and therefore taking the infimum over all admissible \(\nu \) we easily find \(U(px+(1-p)y,c)\ge p U(x,c)+(1-p)U(y,c)\) as claimed.

For V we argue in a similar way and use concavity of \(U(\,\cdot \,,c)\) as follows: let \(\tau \ge 0\) be an arbitrary stopping time, then

We conclude the proof by taking the infimum over all stopping times \(\tau \ge 0\). \(\square \)
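The identity used at the start of the proof of Lemma 3.4 follows from the explicit solution of the Ornstein–Uhlenbeck equation, recalled here as a standard fact rather than as a formula quoted from [7]:

$$\begin{aligned} X^x_t=x\,e^{-\theta t}+\mu \big (1-e^{-\theta t}\big )+\sigma \int _0^te^{-\theta (t-s)}\,dB_s, \quad t\ge 0. \end{aligned}$$

The initial value enters only through the term \(x\,e^{-\theta t}\), which is linear in x, while the remaining terms do not depend on x. Hence \(X^{px+(1-p)y}_t=pX^x_t+(1-p)X^y_t\) pathwise. Since, as described in Sect. 2, the price enters the cost linearly, the expected cost of any fixed control is affine in x, and an infimum of affine functions is concave.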

4 Timing the entry decision

We first examine the optimal entry policy via a standard argument based on exit times from small intervals of \(\mathbb {R}\). An application of Dynkin’s formula gives that the instantaneous ‘cost of continuation’ in our optimal entry problem is given by the function

In the case \(\hat{c} < 0\), which is covered in Sect. 4.1, the function (4.1) is monotone decreasing in x (see the proof of Proposition 4.2 in “Appendix 2”). Since problem (2.3) is one of minimisation, it is never optimal to stop at points \((x,c)\in \mathbb {R}\times [0,1]\) such that \(\mathcal {L}(x,c)+\lambda P_0<0\); an easy comparison argument then shows there is a unique lower threshold that determines the optimal stopping rule in this case.
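The role of the sign of this function can be read off from Dynkin's formula. As a sketch, assume that \(U(\,\cdot \,,c)\) is regular enough for the formula to apply, that \(\tau \) is a bounded stopping time, and write \(\mathcal {L}:=(\mathbb {L}_X-\lambda )U\); this last identification is an assumption made here for illustration, consistent with the discussion above. Then

$$\begin{aligned} \mathsf E\Big [e^{-\lambda \tau }\big (U(X^x_\tau ,c)-P_0\big )\Big ]=U(x,c)-P_0+\mathsf E\bigg [\int _0^\tau e^{-\lambda t}\big (\mathcal {L}(X^x_t,c)+\lambda P_0\big )\,dt\bigg ], \end{aligned}$$

so that continuing while the integrand is negative lowers the expected cost relative to stopping immediately.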

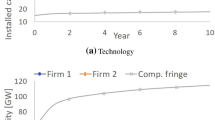

When \(\hat{c}>1\) the picture is more complex. The function (4.1) is decreasing and continuous everywhere except at a single point where it has a positive jump (cf. Proposition 5.1 below) and so can change sign twice. The comparison argument now becomes more subtle: continuation should not be optimal when the function (4.1) is positive in a ‘large neighbourhood containing the initial value x’. Indeed it will turn out in Sect. 5 that there are multiple possible optimal stopping regimes depending on parameter values. In particular the continuation region of the optimal stopping problem may be disconnected, which is unusual in the literature on optimal entry problems. The resulting optimal entry region can have a kinked shape, as illustrated in Fig. 1. The jump in the function (4.1) arises from the ‘bang-bang’ nature of the optimal investment plan when \(\hat{c} > 1\), and so this may be understood as causing this unusual shape for the optimal entry boundary.

An indicative example of an optimal entry region (shaded) when \(\hat{c} > 1\), together with the functions \(\gamma _*\) and \(x^0_1\), \(x^0_2\) (introduced in Proposition 5.1 below). The functions \(m_{1}\) and \(m_{2}\) (not drawn to scale) are important determinants for the presence of the kinked shape (see Remark 5.4 below). This plot was generated using \(\mu = 1\), \(\theta = 1\), \(\sigma = 3\), \(\lambda = 1\), \(P_{0} = 4\) and \(\Phi (c) = 2.2(1 - c) + 8(1 - c)^{2}\)

Before proceeding, we introduce two functions \(\phi _{\lambda }\) and \(\psi _\lambda \) that feature frequently below.

Definition 4.1

Let \(\phi _{\lambda } : \mathbb {R}\rightarrow \mathbb {R}^+\) and \(\psi _\lambda :\mathbb {R}\rightarrow \mathbb {R}^+\) denote respectively the decreasing and increasing fundamental solutions of the differential equation \(\mathbb {L}_Xf=\lambda f\) on \(\mathbb {R}\) (see “Appendix 1” for details).
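For numerical work the two fundamental solutions can be evaluated, up to positive multiplicative constants, from a standard integral representation: with \(z=(x-\mu )\sqrt{2\theta }/\sigma \), the function \(x\mapsto \int _0^\infty t^{\lambda /\theta -1}e^{-t^2/2-zt}\,dt\) is positive, decreasing and solves \(\mathbb {L}_Xf=\lambda f\), as can be checked by differentiating under the integral and integrating by parts. The sketch below is a hedged illustration: the function names, the midpoint quadrature and its truncation at `t_max` are assumptions made here, and the normalisation may differ from the one used in “Appendix 1”.

```python
import math

def _moment(z, nu, k, t_max=40.0, n=20000):
    """Midpoint rule for the integral of t**(nu - 1 + k) * exp(-t*t/2 - z*t) over (0, t_max)."""
    h = t_max / n
    total = 0.0
    for i in range(n):
        t = (i + 0.5) * h
        total += t ** (nu - 1.0 + k) * math.exp(-0.5 * t * t - z * t)
    return total * h

def phi(x, mu, theta, sigma, lam, k=0):
    """k-th x-derivative (k = 0, 1, 2) of the decreasing solution, up to a positive constant."""
    a = math.sqrt(2.0 * theta) / sigma
    return (-a) ** k * _moment(a * (x - mu), lam / theta, k)

def psi(x, mu, theta, sigma, lam, k=0):
    """k-th x-derivative of the increasing solution, obtained by reflecting phi about mu."""
    return (-1.0) ** k * phi(2.0 * mu - x, mu, theta, sigma, lam, k)

# Parameter values borrowed from the caption of Fig. 1.
mu, theta, sigma, lam = 1.0, 1.0, 3.0, 1.0
for x in (-3.0, 0.0, 2.5):
    residual = (0.5 * sigma ** 2 * phi(x, mu, theta, sigma, lam, 2)
                + theta * (mu - x) * phi(x, mu, theta, sigma, lam, 1)
                - lam * phi(x, mu, theta, sigma, lam))
    print(x, phi(x, mu, theta, sigma, lam), psi(x, mu, theta, sigma, lam), residual)
```

The printed residual of the differential equation should be close to zero. The quadrature is adequate for moderate values of \(|x-\mu |\) and for \(\lambda \ge \theta \); for \(\lambda <\theta \) the integrand is singular at the origin and a more careful rule would be needed.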

4.1 The case \(\hat{c}<0\)

Let us now assume that \(\hat{c}<0\), i.e. \(k(c)>0\) for all \(c\in [0,1]\) [cf. (3.3)]. We first recall from Section 2.2 of [7] that in this case

where u is the value function of an associated optimal stopping problem with (cf. Sections 2.1 and 2.2 of [7])

and with \(\beta _*\) given as in Proposition 3.1-i). Moreover, defining

and recalling \(\phi _{\lambda }\) from Definition 4.1, u is expressed analytically as

for \(c\in [0,1]\), and it solves the variational problem

By the regularity of u and dominated convergence we have

for \((x,c)\in \mathbb {R}\times [0,1]\).

As is usual, for each \(c\in [0,1]\) we define the continuation region \(\mathcal C^c_V\) and stopping region \(\mathcal D^c_V\) for the optimal stopping problem (3.14) as

With the aim of characterising the geometry of \(\mathcal C^c_V\) and \(\mathcal D^c_V\) we start by providing some preliminary results on \(U-P_0\) that will help to formulate an appropriate free-boundary problem for V.

Proposition 4.2

For any given \(c\in [0,1]\), there exists a unique \(x^0(c)\in \mathbb {R}\) such that

We refer to “Appendix 2” for the proof of the previous proposition.

As discussed at the beginning of Sect. 4, it is never optimal in problem (3.14) to stop in \((x^0(c),\infty )\), \(c\in [0,1]\), for \(x^0(c)\) as in Proposition 4.2, i.e.

and consequently

Hence we conjecture that the optimal stopping strategy should be of single threshold type. In what follows we aim at finding \(\ell _*(c)\), \(c\in [0,1]\), such that \(\mathcal D^c_V=(-\infty ,\ell _*(c)]\) and

is optimal for V(x, c) in (3.14) with \((x,c)\in \mathbb {R}\times [0,1]\). The methodology adopted in [7, Sec. 2.1] does not apply directly to this problem due to the semi-explicit expression of the gain function \(U-P_0\).

4.1.1 Formulation of auxiliary optimal stopping problems

To work out the optimal boundary \(\ell _*\) we will introduce auxiliary optimal stopping problems and employ a guess-and-verify approach in two frameworks with differing technical issues. We first observe that since U is a classical solution of (3.5), an application of Dynkin’s formula to (3.14) provides a lower bound for V, that is

with

On the other hand, for \((x,c)\in \mathbb {R}\times [0,1]\) fixed, set \(\sigma ^*_\beta :=\inf \{t\ge 0\,:\,X^x_t\le \beta _*(c)\}\) with \(\beta _*\) as in Proposition 3.1, then for an arbitrary stopping time \(\tau \) one also obtains

by using the fact that U solves (3.5) and Dynkin’s formula. We can now obtain an upper bound for V by setting

so that taking the infimum over all \(\tau \) in (4.18) one obtains

It turns out that (4.16) and (4.20) allow us to find a simple characterisation of the optimal boundary \(\ell _*\) and of the function V in some cases. Let us first observe that \(0 \ge \Gamma _\beta (x,c) \ge \Gamma (x,c)\) for all \((x,c) \in \mathbb {R} \times [0,1]\). Defining for each fixed \(c \in [0,1]\) the stopping regions

it is easy to see that \(\mathcal D^c_{\Gamma } \subset \mathcal D^c_{\Gamma _\beta }\). Moreover, by the monotonicity of \(x\mapsto X^x_\cdot \) it is not hard to verify that \(x \mapsto \Gamma (x,c)\) and \(x\mapsto \Gamma _\beta (x,c)\) are decreasing. Hence we again expect optimal stopping strategies of threshold type, i.e.

for \(c\in [0,1]\) and for suitable functions \(\alpha ^*_i(\,\cdot \,)\), \(i=1,2\) to be determined.

Assume for now that \(\alpha ^*_1\) and \(\alpha ^*_2\) are indeed optimal, then we must have

Indeed, for all \((x,c) \in \mathbb {R}\times [0,1]\) we have \(\mathcal D^c_{\Gamma } \subset \mathcal D^c_{V}\) since \(\Gamma (x,c) \le V(x,c)-U(x,c)+P_0 \le 0\), and \(\mathcal D^c_{V} \subset \mathcal D^c_{\Gamma _\beta }\) since \(V(x,c)-U(x,c)+P_0 \le \Gamma _\beta (x,c) \le 0\). Notice also that since the optimisation problem in (4.19) is the same as the one in (4.17) except that in the former the observation is stopped when X hits \(\beta _*\), we must have

Thus for each \(c\in [0,1]\) we can now consider two cases:

1. if \(\alpha _1^*(c) > \beta _*(c)\) we have \(\Gamma (x,c)= \Gamma _\beta (x,c)=\big (V-U+P_0\big )(x,c)\) for \(x\in \mathbb {R}\) and \(\ell _*(c) = \alpha _1^*(c)\),

2. if \(\alpha _1^*(c) \le \beta _*(c)\) we have \(\alpha ^*_2(c)=\beta _*(c)\), implying that \(\ell _*(c) \le \beta _*(c)\).

Both cases 1 and 2 above need to be studied in order to obtain a complete characterisation of \(\ell _*\). We note, however, that case 1 is particularly interesting as it identifies V and \(\ell _*\) with \(\Gamma +U-P_0\) and \(\alpha ^*_1\), respectively. As we will clarify in what follows, solving problem (4.17) turns out to be theoretically simpler and computationally less demanding than dealing directly with problem (3.14).

4.1.2 Solution of the auxiliary optimal stopping problems

To make our claims rigorous we start by analysing problem (4.17). This is accomplished by largely relying on arguments already employed in [7, Sec. 2.1] and therefore we omit proofs here whenever a precise reference can be provided. Moreover, the majority of the proofs of new results are provided in “Appendix 2” to simplify the exposition.

In problem (4.17) we conjecture an optimal stopping time of the form

for \((x,c)\in \mathbb {R}\times [0,1]\) and \(\alpha \) to be determined. Under this conjecture \(\Gamma \) should be found in the class of functions of the form

for each \(c\in [0,1]\). Now, repeating the arguments in the proof of [7, Thm. 2.1] we obtain

Lemma 4.3

One has

for each \(c\in [0,1]\), with

To single out the candidate optimal boundary we impose the so-called smooth fit condition, i.e. \(\tfrac{d}{dx}\Gamma ^\alpha (\alpha (c),c)=0\) for every \(c\in [0,1]\). This amounts to finding \(\alpha ^*\) such that

Proposition 4.4

For \(c\in [0,1]\) define

For each \(c\in [0,1]\) there exists a unique solution \(\alpha ^*(c)\in (-\infty ,x^\dagger _0(c))\) of (4.28). Moreover \(\alpha ^*\in C^1([0,1))\) and it is strictly increasing with \(\lim _{c\rightarrow 1}\alpha ^*(c)=+\infty \).

For the proof of Proposition 4.4 we refer to “Appendix 2”.

To complete the characterisation of \(\alpha ^*\) and \(\Gamma ^{\alpha ^*}\) we now find an alternative upper bound for \(\alpha ^*\) that will guarantee \(\big (\mathbb L_X \Gamma ^{\alpha ^*}-\lambda \Gamma ^{\alpha ^*}\big )(x,c)\ge -\lambda (P_0-x\Phi (c))\) for \((x,c)\in \mathbb {R}\times [0,1]\). Again, the proof of the following result may be found in “Appendix 2”.

Proposition 4.5

For all \(c\in [0,1]\) we have \(\alpha ^*(c)\le P_0/\Phi (c)\) with \(\alpha ^*\) as in Proposition 4.4.

With the aim of formulating a variational problem for \(\Gamma ^{\alpha ^*}\) we observe that \(\tfrac{d^2}{dx^2}\Gamma ^{\alpha ^*}(x,c)<0\) for \(x>\alpha ^*(c)\), \(c\in [0,1]\) by (4.26), convexity of \(\phi _\lambda \) and the fact that \(\hat{G}(\alpha ^*(c),c)-P_0<0\). Hence \(\Gamma ^{\alpha ^*}\le 0\) on \(\mathbb {R}\times [0,1]\). It is not hard to verify by direct calculation from (4.26) and the above results that for all \(c\in [0,1]\) the couple \(\big (\Gamma ^{\alpha ^*}(\,\cdot \,,c),\alpha ^*(c)\big )\) solves the free-boundary problem

and \(\Gamma ^{\alpha ^*}(\,\cdot \,,c)\in W^{2,\infty }_{loc}(\mathbb {R})\). Following now the same arguments as in the proof of [7, Thm. 2.1], which is based on an application of the Itô–Tanaka formula and (4.30)–(4.32), we can verify our guess and prove the following theorem (whose details are omitted).

Theorem 4.6

The boundary \(\alpha ^*\) of Proposition 4.4 is optimal for (4.17) in the sense that \(\alpha ^*=\alpha ^*_1\) with \(\alpha ^*_1\) as in (4.21),

is an optimal stopping time and \(\Gamma ^{\alpha ^*}\equiv \Gamma \) [cf. (4.17)].

4.1.3 Solution of the original optimal stopping problem (3.14)

In Theorem 4.6 we have fully characterised \(\alpha ^*_1\) and \(\Gamma \) thus also \(\alpha ^*_2\) and \(\Gamma _\beta \) (cf. (4.19), (4.21) and (4.23)). Moreover we have found that \(\alpha ^*_1(\,\cdot \,)\) is strictly increasing on [0, 1). On the other hand, \(\beta _*(\,\cdot \,)\) is a strictly decreasing function [cf. Proposition 3.1-i)], hence there exists at most one \(c_* \in (0,1)\) such that

As already mentioned, it may be possible to provide examples where such a value \(c_*\) does not exist in (0, 1) and \(\alpha ^*_1(c)>\beta _*(c)\) for all \(c\in [0,1]\). In those cases, as discussed in Sect. 4.1.1, one has \(\ell _*=\alpha ^*_1\) and \(V=U-P_0+\Gamma \) and problem (3.14) is fully solved. Therefore to provide a complete analysis of problem (3.14) we must consider the case when \(c_*\) exists in (0, 1). From now on we make the following assumption.

Assumption 4.7

There exists a value \(c_*\in (0,1)\) (which is therefore unique) such that (4.34) holds.

As a consequence of the analysis in Sect. 4.1.2 we have the next simple corollary.

Corollary 4.8

For all \(c \in [c_*,1)\) it holds \(V(x,c)=(\Gamma +U-P_0)(x,c)\), \(x\in \mathbb {R}\) and \(\ell _*(c)=\alpha ^*_1(c)\), with \(\Gamma \) and \(\alpha ^*_1\) as in Theorem 4.6.

It remains to characterise \(\ell _*\) in the interval \([0,c_*)\) in which we have \(\ell _*(c) \le \beta _*(c)\). This is done in Theorem 4.13, whose proof requires other technical results which are cited here and proved in the appendix. Fix \(c\in [0,c_*)\), let \(\ell (c)\in \mathbb {R}\) be a candidate boundary and define the stopping time \(\tau _\ell (x,c):=\inf \big \{t\ge 0\,:\,X^x_t\le \ell (c)\big \}\) for \(x\in \mathbb {R}\). Again to simplify notation we set \(\tau _\ell =\tau _\ell (x,c)\) when no confusion may arise. It is now natural to associate to \(\ell (c)\) a candidate value function

whose analytical expression is provided in the next lemma.

Lemma 4.9

For \(c\in [0,c_*)\) we have

The candidate boundary \(\ell _*\), whose optimality will be subsequently verified, is found by imposing the smooth fit condition, i.e.

Proposition 4.10

For any \(c\in [0,c_*)\) there exists at least one solution \(\ell _*(c)\in (-\infty , x^0(c))\) of (4.37) with \(x^0(c)\) as in Proposition 4.2.
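For orientation, the structure of the candidate value function and of the smooth fit condition can be sketched as follows. The sketch assumes that the candidate value is the expected discounted gain at \(\tau _\ell \), and rests on the standard identity \(\mathsf E\big [e^{-\lambda \tau _\ell }\big ]=\phi _\lambda (x)/\phi _\lambda (\ell (c))\) for \(x>\ell (c)\), with \(\phi _\lambda \) as in Definition 4.1; it is an illustration rather than a restatement of (4.36) and (4.37). Under these assumptions,

$$\begin{aligned} V^\ell (x,c)=\left\{ \begin{array}{ll} \big (U(\ell (c),c)-P_0\big )\,\dfrac{\phi _\lambda (x)}{\phi _\lambda (\ell (c))}, &{}\quad x>\ell (c),\\ U(x,c)-P_0, &{}\quad x\le \ell (c), \end{array} \right. \end{aligned}$$

and requiring the x-derivative of \(V^\ell (\,\cdot \,,c)\) to be continuous at \(\ell (c)\) gives

$$\begin{aligned} U_x(\ell (c),c)=\big (U(\ell (c),c)-P_0\big )\,\frac{\phi '_\lambda (\ell (c))}{\phi _\lambda (\ell (c))}. \end{aligned}$$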

Remark 4.11

A couple of remarks before we proceed.

(i) The analytical representation (4.36) in fact holds for all \(c\in [0,1]\) and it must coincide with (4.26) for \(c\in [c_*,1]\). Furthermore, the optimal boundary \(\alpha ^*_1\) found in Sect. 4.1.2 by solving (4.28) must also solve (4.37) for all \(c\in [c_*,1]\) since \(\alpha ^*_1=\ell _*\) on that set. This equivalence can be verified by comparing numerical solutions to (4.28) and (4.37). Finding a numerical solution to (4.37) for \(c\in [0,c_*)\) (if it exists) is computationally more demanding than solving (4.28), however, because of the absence of an explicit expression for the function U.

(ii) It is important to observe that the proof of Proposition 4.10 does not use that \(c\in [0,c_*)\) and in fact it holds for \(c\in [0,1]\). However, arguing as in Sect. 4.1.2 we managed to obtain further regularity properties of the optimal boundary in \([c_*,1]\) and its uniqueness. We shall see in what follows that uniqueness can be retrieved also for \(c\in [0,c_*)\) but it requires a deeper analysis.

Now that the existence of at least one candidate optimal boundary \(\ell _*\) has been established, for the purpose of performing a verification argument we would also like to establish that for arbitrary \(c\in [0,c_*)\) we have \(V^{\ell _*}(x,c)\le U(x,c)-P_0\), \(x\in \mathbb {R}\). This is verified in the following proposition (whose proof is collected in the appendix).

Proposition 4.12

For \(c\in [0,c_*)\) and for any \(\ell _*\) solving (4.37) it holds \(V^{\ell _*}(x,c)\le U(x,c)-P_0\), \(x\in \mathbb {R}\).

Finally we provide a verification theorem establishing the optimality of our candidate boundary \(\ell _*\) and, as a by-product, also implying uniqueness of the solution to (4.37).

Theorem 4.13

There exists a unique solution of (4.37) in \((-\infty ,x^0(c)]\). This solution is the optimal boundary of problem (3.14) in the sense that \(V^{\ell _*}=V\) on \(\mathbb {R}\times [0,1)\) [cf. (4.36)] and the stopping time

is optimal in (3.14) for all \((x,c)\in \mathbb {R}\times [0,1)\).

Proof

For \(c\in [c_*,1)\) the proof was provided in Sect. 4.1.2 recalling that \(\ell _*=\alpha ^*_1\) on \([c_*,1)\) and \(V=U-P_0+\Gamma \) on \(\mathbb {R}\times [c_*,1)\) [cf. (4.17), Remark 4.11]. For \(c\in [0,c_*)\) we split the proof into two parts.

1. Optimality Fix \(\bar{c}\in [0,c_*)\). Here we prove that if \(\ell _*(\bar{c})\) is any solution of (4.37) then \(V^{\ell _*}(\,\cdot \,,\bar{c})= V(\,\cdot \,,\bar{c})\) on \(\mathbb {R}\) [cf. (3.14) and (4.36)].

First we note that \(V^{\ell _*}(\,\cdot \,,\bar{c})\ge V(\,\cdot \,,\bar{c})\) on \(\mathbb {R}\) by (3.14) and (4.35). To obtain the reverse inequality we will rely on Itô–Tanaka’s formula. Observe that \(V^{\ell _*}(\,\cdot \,,\bar{c})\in C^1(\mathbb {R})\) by (4.36) and (4.37), and \(V^{\ell _*}_{xx}(\,\cdot \,,\bar{c})\) is continuous on \(\mathbb {R}\setminus \big \{\ell _*(\bar{c})\big \}\) and bounded at the boundary \(\ell _*(\bar{c})\). Moreover from (4.36) we get

where the inequality in (4.40) holds by (4.12) since \(\ell _*(\bar{c})\le x^0(\bar{c})\) [cf. Proposition 4.10]. An application of Itô–Tanaka’s formula (see [17], Chapter 3, Problem 6.24, p. 215), (4.39), (4.40) and Proposition 4.12 give

with \(\tau \) an arbitrary stopping time and \(\tau _R:=\inf \big \{t\ge 0\,:\,|X^x_t| \ge R\big \}\), \(R>0\). We now pass to the limit as \(R\rightarrow \infty \) and recall that \(|U(x,\bar{c})|\le C(1+|x|)\) [cf. Proposition 3.1] and that \(\big \{e^{-\lambda \tau _R}|X^x_{\tau _R}|\,,\,R>0\big \}\) is a uniformly integrable family (cf. Lemma 7.2 in “Appendix 1”). Then in the limit we use the dominated convergence theorem and the fact that

to obtain \(V^{\ell _*}(\,\cdot \,,\bar{c})\le V(\,\cdot \,,\bar{c})\) on \(\mathbb {R}\) by the arbitrariness of \(\tau \), hence \(V^{\ell _*}(\,\cdot \,,\bar{c})=V(\,\cdot \,,\bar{c})\) on \(\mathbb {R}\) and optimality of \(\ell _*(\bar{c})\) follows.

2. Uniqueness Here we prove the uniqueness of the solution of (4.37) via probabilistic arguments similar to those employed for the first time in [20]. Let \(\bar{c}\in [0,c_*)\) be fixed and, arguing by contradiction, let us assume that there exists another solution \(\ell '(\bar{c})\ne \ell _*(\bar{c})\) of (4.37) with \(\ell '(\bar{c})\le x^0(\bar{c})\). Then by (3.14) and (4.35) it follows that

\(V^{\ell '}(\,\cdot \,,\bar{c})\in C^1(\mathbb {R})\) and \(V^{\ell '}_{xx}(\,\cdot \,,\bar{c})\in L^\infty _{loc}(\mathbb {R})\) by the same arguments as in 1. above. By construction \(V^{\ell '}\) solves (4.39) and (4.40) with \(\ell _*\) replaced by \(\ell '\).

Assume for example that \(\ell '(\bar{c})< \ell _*(\bar{c})\), take \(x<\ell '(\bar{c})\) and set \(\sigma ^*_{\ell }:=\inf \big \{t\ge 0\,:\,X^x_t\ge \ell _*(\bar{c})\big \}\), then an application of Itô–Tanaka’s formula gives (up to a localisation argument as in 1. above)

and

Recall that \(V^{\ell '}(X^x_{\sigma ^*_{\ell }},\bar{c})\ge V(X^x_{\sigma ^*_{\ell }},\bar{c})\) by (4.42) and that for \(x<\ell '(\bar{c})\le \ell _*(\bar{c})\) one has \(V(x,\bar{c})=V^{\ell '}(x,\bar{c})=U(x,\bar{c})-P_0\), hence subtracting (4.44) from (4.43) we get

By the continuity of paths of \(X^x\) we must have \(\sigma ^*_\ell >0\), \(\mathsf P\)-a.s. and since the law of X is absolutely continuous with respect to the Lebesgue measure we also have \(\mathsf P\big (\{\ell '(\bar{c})<X^x_t<\ell _*(\bar{c})\}\big )>0\) for all \(t > 0\). Therefore (4.45) and (4.40) lead to a contradiction and we conclude that \(\ell '(\bar{c})\ge \ell _*(\bar{c})\).

Let us now assume that \(\ell '(\bar{c})> \ell _*(\bar{c})\) and take \(x\in \big (\ell _*(\bar{c}),\ell '(\bar{c})\big )\). We recall the stopping time \(\tau ^*\) of (4.38) and again we use Itô–Tanaka’s formula to obtain

and

Now, we have \(V(x,\bar{c})\le V^{\ell '}(x,\bar{c})\) by (4.42) and \(V^{\ell '} \big ( X^x_{\tau ^*}, \bar{c} \big )=V\big (X^x_{\tau ^*},\bar{c}\big )=U(\ell _*(\bar{c}),\bar{c})-P_0\), \(\mathsf P\)-a.s. by construction, since \(\ell '(\bar{c})>\ell _*(\bar{c})\) and X is positively recurrent (cf. “Appendix 1”). Therefore subtracting (4.46) from (4.47) gives

Arguments analogous to those following (4.45) can be applied to (4.48) to find a contradiction. Then we have \(\ell '(\bar{c})= \ell _*(\bar{c})\) and by the arbitrariness of \(\bar{c}\) the first claim of the theorem follows. \(\square \)

Remark 4.14

The arguments developed in this section hold for all \(c\in [0,1]\). The reduction of (3.14) to the auxiliary problem of Sect. 4.1.1 is not necessary to provide an algebraic equation for the optimal boundary. Nonetheless, it seems convenient to resort to the auxiliary problem whenever possible due to its analytical and computational tractability. In contrast to Sect. 4.1.2, here we cannot establish either the monotonicity or continuity of the optimal boundary \(\ell _*\).

5 The case \(\hat{c}>1\)

In what follows we assume that \(\hat{c}>1\), i.e. \(k(c)<0\) for all \(c\in [0,1]\). As pointed out in Proposition 3.1-(ii) the solution of the control problem in this setting substantially departs from the one obtained for \(\hat{c}<0\). Both the value function and the optimal control exhibit a structure that is fundamentally different, and we recall here some results from [7, Sec. 3].

The function U has the following analytical representation:

with \(\gamma _*\) as in Proposition 3.1-(ii). In this setting U is less regular than the one for the case of \(\hat{c}<0\), in fact here we only have \(U(\,\cdot \,,c)\in W^{2,\infty }_{loc}(\mathbb {R})\) for all \(c\in [0,1]\) [cf. Proposition 3.1-(ii)] and hence we expect \(x\mapsto \mathcal {L}(x,c)+\lambda P_0:=(\mathbb {L}_X-\lambda )(U-P_0)(x,c)\) to have a discontinuity at the optimal boundary \(\gamma _*(c)\). For \(c\in [0,1]\) we define

where \(\mathcal {L}(x+,c)\) denotes the right limit of \(\mathcal {L}(\,\cdot \,,c)\) at x and \(\mathcal {L}(x-,c)\) its left limit.

Proposition 5.1

For each \(c\in [0,1)\) the map \(x\mapsto \mathcal {L}(x,c)+\lambda P_0\) is \(C^\infty \) and strictly decreasing on \((-\infty ,\gamma _*(c))\) and on \((\gamma _*(c),+\infty )\) whereas

Moreover, define

then for each \(c\in [0,1)\) there are three possible settings, that is

1. \(\gamma _*(c) \le x^0_1(c)\) hence \(\mathcal {L}(x,c)+\lambda P_0> 0\) if and only if \(x< x^0_2(c)\);

2. \(\gamma _*(c) \ge x^0_2(c)\) hence \(\mathcal {L}(x,c)+\lambda P_0>0\) if and only if \(x< x^0_1(c)\);

3. \(x^0_1(c)< \gamma _*(c) < x^0_2(c)\) hence \(\mathcal {L}(x,c)+\lambda P_0>0\) if and only if \(x\in (-\infty ,x^0_1(c))\cup (\gamma _*(c),x^0_2(c))\).

Proof

The first claim follows by (5.1) and the sign of \(\Delta ^\mathcal {L}(\gamma _*(c),c)\) may be verified by recalling that \(\gamma _*(c)\ge \tilde{x}(c)\) [cf. Proposition 3.1-(ii)]. Checking 1, 2 and 3 is a matter of simple algebra. \(\square \)

We may use Proposition 5.1 to expand the discussion in Sect. 4. In particular, from the first and second parts we see that if either \(\gamma _*(c) \ge x^0_2(c)\) or \(\gamma _*(c) \le x^0_1(c)\) then the optimal stopping strategy must be of single threshold type. On the other hand, for \(x^0_1(c)< \gamma _*(c) < x^0_2(c)\), as discussed in Sect. 4, there are two possible shapes for the continuation set. This is the setting for the preliminary discussion which follows.

If the size of the interval \((\gamma _*(c),x^0_2(c))\) is “small” and/or the absolute value of \(\mathcal {L}(x,c)+\lambda P_0\) in \((\gamma _*(c),x^0_2(c))\) is “small” compared to its absolute value in \((x^0_1(c),\gamma _*(c))\cup (x^0_2(c),+\infty )\) then, although continuation incurs a positive cost when the process is in the interval \((\gamma _*(c),x^0_2(c))\), the expected reward from subsequently entering the neighbouring intervals (where \(\mathcal {L}(x,c)+\lambda P_0<0\)) is sufficiently large that continuation may nevertheless be optimal in \((\gamma _*(c),x^0_2(c))\) so that there is a single lower optimal stopping boundary, which lies below \(x^0_1(c)\) (see Figs. 1, 2a).

Fig. 2 The function \(x \mapsto \mathcal {L}(x,c)+\lambda P_0\) changes sign in both plots but, with the visual aid of Fig. 1, the stopping region is connected in (a) and disconnected in (b). (a) Illustration when \(c = 0\); (b) illustration when \(c = 0.25\).

If the size of \((\gamma _*(c),x^0_2(c))\) is “big” and/or the absolute value of \(\mathcal {L}(x,c)+\lambda P_0\) in \((\gamma _*(c),x^0_2(c))\) is “big” compared to its absolute value in \((x^0_1(c),\gamma _*(c))\cup (x^0_2(c),+\infty )\) then we may find a portion of the stopping set below \(x^0_1(c)\) and another portion inside the interval \((\gamma _*(c),x^0_2(c))\). In this case the loss incurred by continuation inside a certain subset of \((\gamma _*(c),x^0_2(c))\) may be too great to be mitigated by the expected benefit of subsequent entry into the profitable neighbouring intervals and it becomes optimal to stop at once. In the third case of Proposition 5.1, the continuation and stopping regions may therefore be disconnected sets (see Figs. 1, 2b).
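As a compact summary of the three settings in Proposition 5.1, the following minimal sketch returns the open intervals on which \(\mathcal {L}(x,c)+\lambda P_0>0\). The function name and arguments are hypothetical, and the sketch assumes that \(x^0_1(c)<x^0_2(c)\), which the case distinction suggests but which is not checked against the definitions here.

```python
import math

def positive_region(gamma, x1, x2):
    """Open intervals where L(x,c) + lambda*P0 > 0, following Proposition 5.1.

    gamma, x1, x2 stand for gamma_*(c), x^0_1(c), x^0_2(c) at a fixed c.
    Assumption: x1 < x2.
    """
    if not x1 < x2:
        raise ValueError("this sketch assumes x1 < x2")
    if gamma <= x1:          # setting 1
        return [(-math.inf, x2)]
    if gamma >= x2:          # setting 2
        return [(-math.inf, x1)]
    # setting 3: two disjoint positive intervals
    return [(-math.inf, x1), (gamma, x2)]
```

Only the third setting produces two disjoint intervals, which is what makes a disconnected stopping region possible.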

To make this discussion rigorous let us now recall \(\mathcal C_V^c\) and \(\mathcal D_V^c\) from (4.11). Note that for any fixed \(c\in [0,1)\) and arbitrary stopping time \(\tau \) the map \(x\mapsto \mathsf E[e^{-\lambda \tau }\big (U(X^x_\tau ,c)-P_0\big )]\) is continuous, hence \(x\mapsto V(x,c)\) is upper semicontinuous (being the infimum of continuous functions). Recall that X is positively recurrent and therefore it hits any point of \(\mathbb {R}\) in finite time with probability one (see “Appendix 1” for details). Hence according to standard optimal stopping theory, if \(\mathcal D_V^c\ne \emptyset \) the first entry time of X in \(\mathcal D_V^c\) is an optimal stopping time (cf. e.g. [21, Ch. 1, Sec. 2, Corollary 2.9]).

Proposition 5.2

Let \(c\in [0,1)\) be fixed. Then

(i) if \(\gamma _*(c) \ge x^0_2(c)\), there exists \(\ell _*(c)\in (-\infty ,x^0_1(c))\) such that \(\mathcal D_V^c=(-\infty ,\ell _*(c)]\) and \(\tau _*=\inf \{t\ge 0\,:\,X^x_t\le \ell _*(c)\}\) is optimal in (3.14);

(ii) if \(\gamma _*(c) \le x^0_1(c)\), there exists \(\ell _*(c)\in (-\infty ,x^0_2(c))\) such that \(\mathcal D_V^c=(-\infty ,\ell _*(c)]\) and \(\tau _*=\inf \{t\ge 0\,:\,X^x_t\le \ell _*(c)\}\) is optimal in (3.14);

(iii) if \(x^0_1(c)< \gamma _*(c) < x^0_2(c)\), there exists \(\ell ^{(1)}_*(c)\in (-\infty ,x^0_1(c))\) such that \(\mathcal D_V^c\cap (-\infty ,\gamma _*(c)]=(-\infty ,\ell ^{(1)}_*(c)]\). Moreover, either (a): \(\mathcal D_V^c\cap [\gamma _*(c),\infty )=\emptyset \) and \(\tau _*=\inf \{t\ge 0\,:\,X^x_t\le \ell ^{(1)}_*(c)\}\) is optimal in (3.14), or (b): there exist \(\ell _*^{(2)}(c)\le \ell _*^{(3)}(c)\le x^0_{2}(c)\) such that \(\mathcal D_V^c\cap [\gamma _*(c),\infty )=[\ell _*^{(2)}(c),\ell _*^{(3)}(c)]\) (with the convention that if \(\ell _*^{(2)}(c)=\ell _*^{(3)}(c)=:\ell _*(c)\) then \(\mathcal D_V^c\cap [\gamma _*(c),\infty )=\{\ell _*(c)\}\)) and the stopping time

$$\begin{aligned} \tau ^{(II)}_*:=\inf \{t\ge 0\,:\,X^x_t\le \ell ^{(1)}_*(c)\,\,\text {or}\,\,X^x_t\in [\ell _*^{(2)}(c),\ell _*^{(3)}(c)]\} \end{aligned}$$(5.5)

is optimal in (3.14).

Proof

We provide a detailed proof only for (iii) as the other claims follow by analogous arguments. Let us fix \(c\in [0,1)\) and assume \(x^0_1(c)< \gamma _*(c) < x^0_2(c)\).

Step 1 We start by proving that \(\mathcal D_V^c\ne \emptyset \). By localisation and an application of Itô’s formula in its generalised version (cf. [11, Ch. 8]) to (3.14) and recalling Proposition 5.1 we get

Arguing by contradiction we assume that \(\mathcal D_V^c=\emptyset \) and hence the optimum in (5.6) is obtained by formally setting \(\tau =+\infty \). Moreover by recalling that U solves (3.5) we observe that \(\mathcal {L}(X^x_t,c)\ge -X^x_t\Phi (c)\) \(\mathsf P\)-a.s. for all \(t\ge 0\) and (5.6) gives

where

It is not hard to see from (5.8) that for sufficiently negative values of x we have \(R(x,c)>0\) and (5.7) implies that \(\mathcal D_V^c\) cannot be empty.

Step 2 Here we prove that \(\mathcal D_V^c\cap (-\infty ,\gamma _*(c)]=(-\infty ,\ell ^{(1)}_*(c)]\) for suitable \(\ell ^{(1)}_*(c)\le x^0_1(c)\). The previous step has already shown that it is optimal to stop at once for sufficiently negative values of x. It now remains to prove that if \(x\in \mathcal D_V^c\cap (-\infty ,\gamma _*(c)]\) then \(x'\in \mathcal D_V^c\cap (-\infty ,\gamma _*(c)]\) for any \(x'<x\). For this, fix \(\bar{x}\in \mathcal D_V^c\cap (-\infty ,\gamma _*(c)]\) and let \(x'<\bar{x}\). Note that the process \(X^{x'}\) cannot reach a subset of \(\mathbb {R}\) where \(\lambda P_0+\mathcal {L}(\,\cdot \,,c)<0\) [cf. Proposition 5.1-(3)] without crossing \(\bar{x}\) and hence entering \(\mathcal D_V^c\). Therefore, if \(x'\in \mathcal C_V^c\) and \(\tau _*(x')\) is the associated optimal stopping time, i.e. \(\tau _*(x'):=\inf \{t\ge 0\,:\,X^{x'}_t\in \mathcal D^c_V\}\), we must have

giving a contradiction and implying that \(x'\in \mathcal D_V^c\).

Step 3 We now aim to prove that if \(\mathcal D_V^c\cap [\gamma _*(c),\infty )\ne \emptyset \) then \(\mathcal D_V^c\cap [\gamma _*(c),\infty )=[\ell _*^{(2)}(c),\ell _*^{(3)}(c)]\) for suitable \(\ell _*^{(2)}(c)\le \ell _*^{(3)}(c)\le x^0_{2}(c)\). The case of \(\mathcal D_V^c\cap [\gamma _*(c),\infty )\) containing a single point is self-explanatory. We then assume that there exist \(x<x'\) such that \(x,x'\in \mathcal D_V^c\cap [\gamma _*(c),\infty )\) and prove that also \([x,x']\subseteq \mathcal D_V^c\cap [\gamma _*(c),\infty )\).

Looking for a contradiction, let us assume that there exists \(y\in (x,x')\) such that \(y\in \mathcal C_V^c\). The process \(X^y\) cannot reach a subset of \(\mathbb {R}\) where \(\lambda P_0+\mathcal {L}(\,\cdot \,,c)<0\) without leaving the interval \((x,x')\) [cf. Proposition 5.1-(3)]. Then, by arguing as in (5.9), with the associated optimal stopping time \(\tau _*(y):=\inf \{t\ge 0\,:\,X^{y}_t\in \mathcal D^c_V\}\), we inevitably reach a contradiction. Hence the claim follows. \(\square \)
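The stopping rule (5.5) can be illustrated on a time grid. The sketch below assumes that X is an Ornstein–Uhlenbeck process with mean-reversion speed \(\theta \), long-run mean `mu` and volatility `sigma` (an assumption about the model specification, which is not restated in this section), and all function names and numerical values are hypothetical. On a grid the first entry time is only approximated, since crossings between grid points are missed.

```python
import math
import random

def simulate_ou(x0, theta, mu, sigma, dt, n_steps, rng):
    """Sample an OU path on a grid using its exact Gaussian transition law."""
    decay = math.exp(-theta * dt)
    # Standard deviation of X_{t+dt} given X_t for an OU process.
    sd = sigma * math.sqrt((1.0 - decay ** 2) / (2.0 * theta))
    path = [x0]
    for _ in range(n_steps):
        path.append(mu + (path[-1] - mu) * decay + sd * rng.gauss(0.0, 1.0))
    return path

def first_entry_index(path, l1, l2, l3):
    """First grid index k with path[k] <= l1 or l2 <= path[k] <= l3, else None."""
    for k, x in enumerate(path):
        if x <= l1 or l2 <= x <= l3:
            return k
    return None

# Hypothetical boundaries l1 < l2 <= l3 and model parameters.
dt = 0.01
path = simulate_ou(x0=0.0, theta=1.0, mu=0.0, sigma=0.5, dt=dt,
                   n_steps=100, rng=random.Random(0))
k = first_entry_index(path, l1=-1.0, l2=0.5, l3=0.8)
tau = None if k is None else k * dt  # grid approximation of the entry time
```

Setting `l2 > l3` empties the upper band, which recovers the single-threshold rule of case (iii)(a).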

Before proceeding further we clarify the dichotomy in part (iii) of Proposition 5.2. Lemma 5.3 below characterises the subcases (iii)(a) and (iii)(b) via condition (5.10). Remark 5.4 then shows that this condition amounts to comparing the minima of two convex functions.

Lemma 5.3

Fix \(c \in [0,1)\) and suppose that \(x^0_1(c)< \gamma _*(c) < x^0_2(c)\). Then \(\mathcal {D}_V^c \cap [\gamma _*(c),\infty )=\emptyset \) if and only if there exists \(\ell _*(c)\in (-\infty ,x^0_1(c))\) such that for every \(x \ge \gamma _*(c)\):

Proof

(i) Necessity If \(\mathcal {D}_V^c \cap [\gamma _*(c),\infty )=\emptyset \), then by Proposition 5.2-(iii) there exists a point \(\ell _*(c)\in (-\infty ,x^0_1(c))\) such that \(\mathcal {D}_V^c=(-\infty ,\ell _*(c)]\). Let \(x \ge \gamma _*(c)\) be arbitrary and notice that \(V(x,c) < U(x,c)-P_0\) since the current hypothesis implies \(x \in [\gamma _*(c),\infty ) \subset \mathcal {C}^c_V\). According to Proposition 5.2-(iii), the stopping time \(\tau _*\) defined by

$$\begin{aligned} \tau _*:=\inf \{t\ge 0\,:\,X^x_t\le \ell _*(c)\} \end{aligned}$$(5.11)

is optimal in (3.14). On the other hand, since X has continuous sample paths and \(\mathsf {P}_{x}(\{\tau _* < \infty \}) = 1\) by positive recurrence of X, we can also show that

where the last line follows from (6.5). Since \(x \ge \gamma _*(c)\) was arbitrary we have proved the necessity of the claim.

(ii) Sufficiency Suppose now that there exists a point \(\ell _*(c)\in (-\infty ,x^0_1(c))\) such that (5.10) holds for every \(x \ge \gamma _*(c)\). Using the same arguments establishing the right-hand side of (5.12), noting that \(\tau _*\) as defined in (5.11) is no longer necessarily optimal, for every \(x \ge \gamma _*(c)\) we have

which shows \(\mathcal {D}_V^c \cap [\gamma _*(c),\infty )=\emptyset \). \(\square \)

Remark 5.4

Let us fix \(c \in [0,1)\) such that \(x^0_1(c)< \gamma _*(c) < x^0_2(c)\), or equivalently part (iii) of Proposition 5.2 holds. Writing

we will appeal to the discussion given at the start of Section 6 of [5]. Since \(\mathcal {L}(x,c)+\lambda P_0>0\) if and only if \(x\in (-\infty ,x^0_1(c))\cup (\gamma _*(c),x^0_2(c))\) (from Proposition 5.1), it follows from equation (*) in Section 6 of [5] that the function \(y \mapsto H(y)\) is strictly convex on \((0,F(x^0_1(c)))\) and on \((F(\gamma _*(c)),F(x^0_2(c)))\) and concave everywhere else on its domain. Define \(y_m^1\) and \(y_m^2\) by

By Eq. (5.1) above, and the fact that F is monotone increasing, we have that \(\lim _{y \rightarrow +\infty }H(y) = \lim _{x\rightarrow +\infty }\mathcal F(x) = +\infty \) (recall that \(\phi _\lambda \) is positive and decreasing). Also

where the second equality follows from the definition of \(y_m^2\) and the aforementioned geometric properties of \(y \mapsto H(y)\). It is therefore clear from Lemma 5.3 that when \(m_1<m_2\) then part (iii)(a) of Proposition 5.2 holds, while when \(m_1\ge m_2\) part (iii)(b) of Proposition 5.2 holds.
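The case distinction of Proposition 5.2, together with the comparison of minima in Remark 5.4, can be summarised in a short sketch. The function name is hypothetical and the inputs `m1`, `m2` are assumed to have been computed elsewhere as the minima \(m_1\), \(m_2\) of the remark.

```python
def classify_case(gamma, x1, x2, m1=None, m2=None):
    """Return the applicable case of Proposition 5.2 as a label.

    gamma, x1, x2 stand for gamma_*(c), x^0_1(c), x^0_2(c); m1 and m2 are only
    needed when x1 < gamma < x2 (Remark 5.4).
    """
    if gamma >= x2:
        return "(i)"
    if gamma <= x1:
        return "(ii)"
    if m1 is None or m2 is None:
        raise ValueError("m1 and m2 are required when x1 < gamma < x2")
    # Remark 5.4: m1 < m2 gives a single threshold, otherwise the
    # stopping set also has a component above gamma.
    return "(iii)(a)" if m1 < m2 else "(iii)(b)"
```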

5.1 The optimal boundaries

We will characterise the four cases (i), (ii), (iii)(a), (iii)(b) of Proposition 5.2 through direct probabilistic analysis of the value function and subsequently derive equations for the optimal boundaries obtained in the previous section. We first address cases (i) and (ii) of Proposition 5.2.

Theorem 5.5

Let \(c\in [0,1)\) and \(\mathscr {B}\) be a subset of \(\mathbb {R}\). Consider the following problem: Find \(x \in \mathscr {B}\) such that

(i) If \(\gamma _*(c) \ge x^0_2(c)\), let \(\ell _*(c)\) be given as in Proposition 5.2-(i), then \(V(x,c)=V^{\ell _*}(x,c)\) (cf. (4.36)), \(x\in \mathbb {R}\) and \(\ell _*(c)\) is the unique solution to (5.18) in \(\mathscr {B} = (-\infty ,x^0_1(c))\).

(ii) If \(\gamma _*(c) \le x^0_1(c)\), let \(\ell _*(c)\) be given as in Proposition 5.2-(ii), then \(V(x,c)=V^{\ell _*}(x,c)\), \(x\in \mathbb {R}\) (cf. (4.36)) and \(\ell _*(c)\) is the unique solution to (5.18) in \(\mathscr {B} =(-\infty ,x^0_2(c))\).

Proof

We only provide details for the proof of (i) as the second part is completely analogous.

From Proposition 5.2-(i) we know that \(\ell _*(c)\in (-\infty ,x^0_1(c))\) and that taking \(\tau _*(x):=\inf \{t\ge 0\,:\,X^x_t\le \ell _*(c)\}\) is optimal for (3.14), hence the value function V is given by (4.36) with \(\ell =\ell _*\) (the proof is the same as that of Lemma 4.9). If we can prove that smooth fit holds then \(\ell _*\) must also be a solution to (5.18). To simplify notation set \(\ell _*=\ell _*(c)\) and notice that

On the other hand, consider \(\tau _\varepsilon :=\tau _*(\ell _*+\varepsilon )=\inf \{t\ge 0\,:\,X^{\ell _*+\varepsilon }_t\le \ell _*\}\) and note that \(\tau _\varepsilon \rightarrow 0\), \(\mathsf P\)-a.s. as \(\varepsilon \rightarrow 0\) (which can be proved by standard arguments based on the law of the iterated logarithm) and therefore \(X^{\ell _*+\varepsilon }_{\tau _\varepsilon }\rightarrow \ell _*\), \(\mathsf P\)-a.s. as \(\varepsilon \rightarrow 0\) by the continuity of \((t,x)\mapsto X^x_t(\omega )\) for \(\omega \in \Omega \). Since \(\tau _\varepsilon \) is optimal in Eq. (3.14) with \(x = \ell _*+\varepsilon \) we obtain

The mean value theorem, (6.1) in “Appendix 1” and (5.20) give

with \(\xi _\varepsilon \in [X^{\ell _*}_{\tau _\varepsilon }, X^{\ell _*+\varepsilon }_{\tau _\varepsilon }]\), \(\mathsf P\)-a.s. From (5.1) one has that \(U_x(\,\cdot \,,c)\) is bounded on \(\mathbb {R}\), hence taking limits as \(\varepsilon \rightarrow 0\) in (5.19) and (5.21) and using the dominated convergence theorem in the latter we get \(V_x(\ell _*,c)=U_x(\ell _*,c)\), and since \(V(\,\cdot \,,c)\) is concave (see Lemma 3.4) it must also be \(C^1\) across \(\ell _*\), i.e. smooth fit holds. In particular this means that differentiating (4.36) at \(\ell _*\) we observe that \(\ell _*\) solves (5.18). The uniqueness of this solution can be proved by the same arguments as those in part 2 of the proof of Theorem 4.13 and we omit them here for brevity. \(\square \)
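As a worked version of the last step, here is a sketch of how smooth fit turns into an algebraic equation for the boundary. It assumes that (4.36), which is not reproduced in this section, has the single-threshold form matching the first and last branches of (5.26); the display is an illustration under that assumption rather than a restatement of (5.18).

$$\begin{aligned} V^{\ell }(x,c)=\left\{ \begin{array}{ll} \big (U(\ell ,c)-P_0\big )\frac{\phi _\lambda (x)}{\phi _\lambda (\ell )} &\quad \text {for }\,x>\ell \\ U(x,c)-P_0 &\quad \text {for }\,x\le \ell \end{array}\right. \quad \Longrightarrow \quad V^{\ell }_x(\ell +,c)=\big (U(\ell ,c)-P_0\big )\frac{\phi '_\lambda (\ell )}{\phi _\lambda (\ell )},\qquad V^{\ell }_x(\ell -,c)=U_x(\ell ,c). \end{aligned}$$

Equating the two one-sided derivatives gives \(U_x(\ell ,c)\,\phi _\lambda (\ell )=\big (U(\ell ,c)-P_0\big )\phi '_\lambda (\ell )\), which is the kind of equation that the smooth fit argument above produces for \(\ell _*\).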

Next we address cases (iii)(a) and (iii)(b) of Proposition 5.2. Let us define

for \(\xi ,\zeta \in \mathbb {R}\).

Theorem 5.6

Let \(c\in [0,1)\) be such that \(x^0_1(c)<\gamma _*(c)<x^0_2(c)\) and consider the following problem: Find \(x<y<z\) in \(\mathbb {R}\) with \(x\in (-\infty ,x^0_1(c))\) and \(\gamma _*(c)<y<z<x^0_2(c)\) such that the triple (x, y, z) solves the system

(i) In case (iii)(b) of Proposition 5.2 the stopping set is of the form \(\mathcal D_V^c=(-\infty ,\ell _*^{(1)}(c)]\cup [\ell _*^{(2)}(c),\ell _*^{(3)}(c)]\), and then \(\{x,y,z\}=\{\ell _*^{(1)}(c),\ell _*^{(2)}(c),\ell _*^{(3)}(c)\}\) is the unique triple solving (5.23)–(5.25). The value function is given by

$$\begin{aligned} V(x,c)= \left\{ \begin{array}{ll} \big (U(\ell ^{(3)}_*,c)-P_0\big )\frac{\phi _\lambda (x)}{\phi _\lambda (\ell ^{(3)}_*)} &{} \quad \text {for }\,x> \ell ^{(3)}_*\\ U(x,c)-P_0 &{}\quad \text {for }\, \ell ^{(2)}_*\le x \le \ell ^{(3)}_*\\ (U(\ell ^{(1)}_*,c)-P_0)\frac{F_1(x,\ell ^{(2)}_*)}{F_1(\ell ^{(1)}_*,\ell ^{(2)}_*)}+(U(\ell ^{(2)}_*,c) -P_0)\frac{F_1(\ell ^{(1)}_*,x)}{F_1(\ell ^{(1)}_*,\ell ^{(2)}_*)} &{}\quad \text {for }\, \ell ^{(1)}_*< x < \ell ^{(2)}_*\\ U(x,c)-P_0 &{} \quad \text {for }\, x \le \ell ^{(1)}_* \end{array} \right. \end{aligned}$$(5.26)

where we have set \(\ell _*^{(k)}=\ell _*^{(k)}(c)\), \(k=1,2,3\) for simplicity.

(ii) In case (iii)(a) of Proposition 5.2 we have \(\mathcal D_V^c=(-\infty ,\ell _*^{(1)}(c)]\), moreover \(V(x,c)=V^{\ell _*^{(1)}}(x,c)\), \(x\in \mathbb {R}\) (cf. (4.36)) and \(\ell _*^{(1)}(c)\) is the unique solution to (5.18) with \(\mathscr {B}=(-\infty ,x^0_1(c))\).

Proof

Proof of (i). In the case of Proposition 5.2-(iii)(b), the stopping time \(\tau ^{(II)}_*\) defined in (5.5) is optimal for (3.14):

Equation (5.26) is therefore just the analytical representation for the value function in this case. The fact that \(\ell ^{(1)}_*\), \(\ell ^{(2)}_*\) and \(\ell ^{(3)}_*\) solve the system (5.23)–(5.25) follows from the smooth fit condition at each of the boundaries. A proof of the smooth fit condition can be carried out using probabilistic techniques as done previously for Theorem 5.5. We therefore omit its proof and only show uniqueness of the solution to (5.23)–(5.25).

Uniqueness will be addressed with techniques similar to those employed in Theorem 4.13, taking into account that the stopping region in the present setting is disconnected. We fix \(c\in [0,1)\), assume that there exists a triple \(\{\ell '_1,\ell '_2,\ell '_3\} \ne \{\ell ^{(1)}_*,\ell ^{(2)}_*,\ell ^{(3)}_*\}\) solving (5.23)–(5.25) and define a stopping time

We can associate to the triple a function

and note that \(V'(\,\cdot \,,c)\) has the same properties as the value function \(V(\,\cdot \,,c)\) provided that we replace \(\ell _*^{(k)}\) by \(\ell '_k\) everywhere for \(k=1,2,3\). Moreover, Eq. (3.14) implies

Step 1 First we show that \((\ell ^{(2)}_*,\ell ^{(3)}_*)\cap (\ell '_2,\ell '_3)\ne \emptyset \). Arguing by contradiction, we assume that \(\ell '_2\ge \ell ^{(3)}_*\); the same arguments apply if instead \(\ell ^{(2)}_*\ge \ell '_3\). Note that \(\ell '_1<\ell ^{(3)}_*\) since \(\ell '_1\in (-\infty ,x^0_1(c))\). Fix \(x\in (\ell '_2,\ell '_3)\) and define the stopping time \(\tau _3=\inf \{t\ge 0\,:\,X^x_t\le \ell _*^{(3)}\}\). We have \(V(x,c)<U(x,c)-P_0\), since \(x>\ell ^{(3)}_*\) lies in the continuation region, and by (5.28) it follows that \(V'(x,c)=U(x,c)-P_0\). Then an application of the Itô–Tanaka formula gives

where we have used Proposition 5.1-(3) in the first inequality on the right-hand side and the fact that \(V'(\ell _*^{(3)},c)\le U(\ell _*^{(3)},c)-P_0 \) in the second. We then reach a contradiction with (5.29) and \((\ell ^{(2)}_*,\ell ^{(3)}_*)\cap (\ell '_2,\ell '_3)\ne \emptyset \).

Step 2 Notice now that if we assume \(\ell '_3<\ell ^{(3)}_*\) we also reach a contradiction with (5.29) as for any \(x\in (\ell '_3,\ell ^{(3)}_*)\) we would have \(V'(x,c)<U(x,c)-P_0=V(x,c)\). Then we must have \(\ell '_3\ge \ell ^{(3)}_*\).

Assume now that \(\ell '_3>\ell _*^{(3)}\), take \(x\in (\ell _*^{(3)},\ell '_3)\) and \(\tau _3\) as in Step 1. above. Note that \(V'(x,c)=U(x,c)-P_0>V(x,c)\) whereas \(V(\ell _*^{(3)},c)=U(\ell _*^{(3)},c)-P_0=V'(\ell _*^{(3)},c)\) by Step 1. above and (5.28). Then using the Itô–Tanaka formula again we find

hence there is a contradiction with (5.29) and \(\ell _*^{(3)}=\ell '_3\).

Step 3 If we now assume that \(\ell _*^{(2)}<\ell '_2\) we find the same contradiction with (5.29) as in Step 2, since for any \(x\in (\ell ^{(2)}_*,\ell '_2)\) we would have \(V'(x,c)<U(x,c)-P_0=V(x,c)\). Similarly if we assume that \(\ell '_1<\ell _*^{(1)}\) then for any \(x\in (\ell '_1,\ell ^{(1)}_*)\) we would have \(V'(x,c)<U(x,c)-P_0=V(x,c)\). These contradictions imply that \(\ell '_2\le \ell ^{(2)}_*\) and \(\ell '_1\ge \ell ^{(1)}_*\).

Let us assume now that \(\ell '_2<\ell ^{(2)}_*\), then taking \(x\in (\ell '_2,\ell ^{(2)}_*)\), applying the Itô–Tanaka formula until the first exit time from the open set \((\ell ^{(1)}_*,\ell ^{(2)}_*)\) and using arguments similar to those in Steps 1. and 2. we end up with a contradiction. Hence \(\ell '_2=\ell ^{(2)}_*\); analogous arguments can be applied to establish that \(\ell '_1=\ell _*^{(1)}\).

Proof of (ii). To prove (ii) we simply argue as in Theorem 5.5, concluding that \(V(x,c)=V^{\ell _*^{(1)}}(x,c)\), \(x\in \mathbb {R}\) and \(\ell _*^{(1)}\) solves (5.18) with \(\mathscr {B}=(-\infty ,x^0_1(c))\). \(\square \)
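The four branches of (5.26) can be evaluated numerically once \(U\), \(\phi _\lambda \) and \(F_1\) are available. The sketch below is illustrative only: it assumes \(F_1(\xi ,\zeta )=\psi _\lambda (\xi )\phi _\lambda (\zeta )-\psi _\lambda (\zeta )\phi _\lambda (\xi )\), with \(\psi _\lambda \) the increasing fundamental solution (the definition of \(F_1\) is not reproduced in this section), and for the usage example it replaces the model's \(\phi _\lambda \), \(\psi _\lambda \) by those of a Brownian motion killed at rate \(\lambda \). The function `U`, the boundaries and `P0` are toy values, not solutions of (5.23)–(5.25).

```python
import math

def make_value_function(U, P0, phi, psi, l1, l2, l3):
    """Return x -> V(x) assembled from the four branches of (5.26)."""
    def F1(xi, zeta):
        # Assumed form of F_1; only ratios of F1 are used below.
        return psi(xi) * phi(zeta) - psi(zeta) * phi(xi)

    def g(x):
        return U(x) - P0

    def V(x):
        if x <= l1 or l2 <= x <= l3:      # stopping set
            return g(x)
        if x > l3:                        # unbounded continuation component
            return g(l3) * phi(x) / phi(l3)
        # bounded continuation component (l1, l2)
        return (g(l1) * F1(x, l2) + g(l2) * F1(l1, x)) / F1(l1, l2)
    return V

# Stand-in fundamental solutions (Brownian motion with killing rate lam).
lam = 0.5
r = math.sqrt(2.0 * lam)
phi = lambda x: math.exp(-r * x)   # decreasing solution
psi = lambda x: math.exp(r * x)    # increasing solution
V = make_value_function(U=lambda x: x, P0=1.0, phi=phi, psi=psi,
                        l1=-1.0, l2=0.5, l3=0.8)
```

By construction the function is continuous across each boundary; smooth fit holds in addition only when the boundaries solve the system (5.23)–(5.25).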

5.2 Discussion and economic considerations

As proved in Sect. 4.1 above, for cost functions \(\Phi \) with \(\hat{c}<0\) the control problem of purchasing in the spot market has a reflecting boundary and the optimal contract entry problem has a connected continuation region. These problem structures have been commonly observed in the literature on irreversible investment and optimal stopping respectively.

In contrast the results in the present Sect. 5 for \(\hat{c}>1\) include repelling control boundaries and disconnected optimal stopping regions, which to date have been observed less frequently in the literature. Here we provide further discussion on the results of this section, including simple examples and an economic interpretation of the optimal stopping rule.

If \(\hat{c}>1\) then k(c) is negative for all \(c \in (0,1]\) [see (3.3)]. It follows that the penalty function \(\Phi \) must satisfy

Since \(\theta /\lambda \) is positive, (5.32) establishes that the function \(c \mapsto \Phi (c)\) is bounded below by a positive, decreasing linear function for \(c \in (0,1)\). Given the role of \(\Phi \) as a penalisation function, such a lower bound is a natural property for \(\Phi \). Nonetheless we note that the steepness (the absolute value of the slope) of this linear lower bound increases as \(\lambda \downarrow 0\). Thus as the arrival rate of the demand decreases, the penalisation from \(\Phi \) must be increasingly strong in order to fall into the case \(\hat{c}>1\).

Although \(\lambda \) is the parameter in the exponential distribution of the arrival time of demand, we noted after (2.5) above that mathematically it is equivalent to a financial discount rate. Indeed this is analogous to the situation in the reduced-form methodology for credit risk modelling (see Chapter 7 of [14] for example), where a similar parameter \(\lambda \) can be interpreted as an adjustment to the discount rate due to the risk of default (in our case the ‘default’ event would correspond to the arrival of the demand, and hence the loss of the opportunity to enter the contract).
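The equivalence rests on the identity \(\mathsf P(\Theta >t)=e^{-\lambda t}\) for an exponential time \(\Theta \) with rate \(\lambda \): a cashflow that occurs at time t only if the demand has not yet arrived is weighted by the same factor as a cashflow discounted at rate \(\lambda \). The following minimal Monte Carlo sketch checks this identity numerically; the function name, sample size and parameter values are arbitrary choices for illustration.

```python
import math
import random

def survival_probability_mc(lam, t, n_samples, rng):
    """Monte Carlo estimate of P(Theta > t) for Theta ~ Exp(lam)."""
    survived = sum(1 for _ in range(n_samples) if rng.expovariate(lam) > t)
    return survived / n_samples

estimate = survival_probability_mc(lam=0.5, t=1.0, n_samples=200_000,
                                   rng=random.Random(1))
discount = math.exp(-0.5 * 1.0)  # discount factor at rate lam over [0, t]
```

With this sample size the estimate and the discount factor should agree to roughly two decimal places.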

Now we give three examples of functions \(\Phi \) with \(\hat{c}>1\), drawn from functional forms commonly found in the economic literature. From now on let us fix \(\theta \) and \(\lambda \) and introduce an additional parameter a, specifying that \(a>1+\theta /\lambda \). Our examples involve respectively polynomial costs

exponential costs

and logarithmic costs

(Formally, for the third example we note that the assumption \(\Phi \in C^2(\mathbb {R})\) made above may be relaxed to \(\Phi \in C^2((0,1])\) if we restrict our study of (2.4) to \(\mathbb {R}\times (0,1]\). Indeed, since the inventory can only be increased, the behaviour of \(\Phi \) for \(c\le 0\) (and \(c>1\)) would play no role in our analysis.)
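The role of the condition \(a>1+\theta /\lambda \) can be checked numerically. The sketch below rests on two assumptions that are not confirmed by this section: that (3.3) has the form \(k(c)=\lambda +\theta +\lambda \Phi '(c)\), and that a logarithmic cost can be taken as \(\Phi (c)=-a\ln c\) (a hypothetical stand-in, since the displayed example formulas are not reproduced here). Under these assumptions \(k(c)<0\) on (0, 1] exactly when \(a>1+\theta /\lambda \).

```python
def k(c, dPhi, lam, theta):
    # Assumed form of (3.3): k(c) = lam + theta + lam * Phi'(c).
    return lam + theta + lam * dPhi(c)

def k_is_negative_on_grid(dPhi, lam, theta, n_points=1000):
    """True if k(c) < 0 at every grid point c = i/n_points, i = 1..n_points."""
    return all(k(i / n_points, dPhi, lam, theta) < 0.0
               for i in range(1, n_points + 1))

def make_log_cost_derivative(a):
    # Hypothetical cost Phi(c) = -a*log(c), so Phi'(c) = -a/c on (0, 1].
    return lambda c: -a / c

# With lam = theta = 1 the threshold is 1 + theta/lam = 2.
above = k_is_negative_on_grid(make_log_cost_derivative(2.5), lam=1.0, theta=1.0)
below = k_is_negative_on_grid(make_log_cost_derivative(1.5), lam=1.0, theta=1.0)
```

Here `above` corresponds to a value of a beyond the threshold and `below` to one that violates it; a grid check of this kind is only a sanity test and not a proof.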

In order to explain the complex shape of the optimal entry boundary shown in Fig. 1 (where \(\hat{c}>1\)) we first identify two extremal regimes, referred to as cases 1 and 2 below. For each we provide the mathematical justification and an economic interpretation. Throughout the rest of this section it is important to recall from the problem setup in Sect. 2 that there are two costs to consider: the shortfall penalty and the expenditure in the spot market. Moreover, once the contract has been entered, the investor’s optimal purchasing strategy in the spot market is to instantaneously fill the inventory at some time. That is, for each value of c there is a unique critical price \(\gamma _*(c)\) above which it is optimal to instantaneously fill the inventory. This optimal policy explains the extremal cases 1 and 2 discussed below, and thus informs the intermediate case 3.

Case 1 To the left of Fig. 1, that is when c is small, it can be observed that \(x^0_1(c)< \gamma _*(c) < x^0_2(c)\) and \(m_2(c)>m_1(c)\). From Remark 5.4 this implies that part (iii)(a) of Proposition 5.2 holds. The optimal entry policy is then of threshold type, and the continuation region has the lower boundary \(\ell ^{(1)}_*(c)\). Further, once the contract is entered, the optimal purchasing policy is then to wait until the price X is above the level \(\gamma _*(c)\), at which time the inventory is instantaneously filled.

When the inventory level c is small let us first consider the shortfall penalty term \(X_{\Theta }\Phi (c)\) incurred by the arrival of the demand. Since \(\Phi \) is decreasing, \(\Phi (c)\) is relatively large for small c. If the value of the spot price X is high, the investor is then exposed to the risk of significant costs from the shortfall penalty and it is not attractive to enter the contract. Conversely for low values of X the penalty \(X_{\Theta }\Phi (c)\) is relatively low, making the contract more attractive to enter.

Next we consider the expenditure in the spot market. As recalled above this is equal to \((1-c)\gamma _*(c)\), since the inventory is instantaneously filled when the price rises to \(\gamma _*(c)\). If the contract is entered at a price \(X<\gamma _*(c)\) then this cost is not incurred immediately, but at the later time when the price rises to \(\gamma _*(c)\). In this case the investor therefore benefits from the discounting effect of \(\lambda \) described above: the lower the price at entry, the greater is the average benefit from discounting. However a balance must be struck, since at the random time \(\Theta \) the demand arrives and the opportunity to enter the contract is lost, so there is a disadvantage to waiting for a very low entry price. This balance implies the existence of a lower optimal threshold \(\ell ^{(1)}_*(c)<\gamma _*(c)\).

Case 2 To the right of the figure, when c is close to 1, we have \(\gamma _*(c) \le x^0_1(c)\) and so part (ii) of Proposition 5.2 holds and the optimal entry policy is again of threshold type. The continuation region has the lower boundary \(\ell _*(c)=\ell ^{(3)}_*(c)\) and once the contract is entered it is optimal to fill the inventory immediately.

Because of this immediate filling of the inventory there is no possibility of a shortfall penalty and we need only consider the expenditure in the spot market, which is equal to \(X(1-c)\). Lower spot prices are preferable but, since the opportunity to enter the contract is lost at the random time \(\Theta \), again there is a disadvantage to waiting for excessively low entry prices. However for values of c sufficiently close to 1 this expenditure becomes insignificant and it may be attractive to enter the contract even at relatively high spot prices X with \(X>\gamma _*(c)\) (even though filling is then immediate, so there is no benefit from discounting as in case 1).

Case 3 In the middle part of the figure we have \(x^0_1(c)< \gamma _*(c) < x^0_2(c)\) and \(m_2(c)<m_1(c)\) so that case iii)(b) of Proposition 5.2 applies. Then the continuation region is disconnected and is the union of a bounded interval \((\ell ^{(1)}_*(c), \ell ^{(2)}_*(c))\), whose endpoints satisfy the inequality \(\ell ^{(1)}_*(c)< \gamma _*(c) < \ell ^{(2)}_*(c)\), with the half-line \((\ell ^{(3)}_*(c),\infty )\). If the contract is entered when the spot price is \(\ell ^{(1)}_*(c)\) (or lower) then the optimal purchasing policy is as in case 1, otherwise the inventory is immediately filled upon entering the contract, as in case 2.

From the economic point of view this case is more difficult to interpret as it is a mixture of the previous two cases. However it may be noted that the bounded component \((\ell ^{(1)}_*(c), \ell ^{(2)}_*(c))\) of the continuation region contains the critical level \(\gamma _*(c)\) for the optimal purchasing policy. Thus if the problem begins when the spot price is at this critical level \(x=\gamma _*(c)\), the investor prefers to wait and learn more about the price movements. Recalling that the opportunity to enter the contract is lost at the random time \(\Theta \), if the spot price then falls sufficiently low the investor enters the contract and benefits both from lower risk from the undersupply penalty, and also from discounting, as described in case 1; alternatively if the spot price rises sufficiently far above \(\gamma _*(c)\) then the investor enters the contract and immediately fills the inventory, eliminating the potential undersupply penalty at the cost of a higher (and undiscounted) expenditure in the spot market.
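The entry rule described for Case 3 can be summarised in a short sketch. The numerical thresholds below are purely hypothetical placeholders for \(\ell ^{(1)}_*(c)\), \(\gamma _*(c)\), \(\ell ^{(2)}_*(c)\) and \(\ell ^{(3)}_*(c)\) at a fixed c, and the class and function names are illustrative only. Treating the boundary points as part of the stopping set, and requiring \(\ell ^{(2)}_*(c)<\ell ^{(3)}_*(c)\), are assumptions of the sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Case3Thresholds:
    """Hypothetical threshold values at a fixed inventory level c (Case 3)."""
    ell1: float   # stands in for ell^(1)_*(c)
    gamma: float  # stands in for gamma_*(c)
    ell2: float   # stands in for ell^(2)_*(c)
    ell3: float   # stands in for ell^(3)_*(c)

    def __post_init__(self) -> None:
        # Case 3 has ell1 < gamma < ell2; ell2 < ell3 is assumed here so that
        # the two components of the continuation region are disjoint.
        if not (self.ell1 < self.gamma < self.ell2 < self.ell3):
            raise ValueError("expected ell1 < gamma < ell2 < ell3")


def entry_decision(x: float, th: Case3Thresholds) -> str:
    """Classify the spot price x under the Case 3 entry rule."""
    if x <= th.ell1:
        # Purchasing policy as in Case 1: wait for the price to reach gamma.
        return "enter, then fill when the price rises to gamma"
    if th.ell2 <= x <= th.ell3:
        # Purchasing policy as in Case 2.
        return "enter and fill immediately"
    # Continuation region: (ell1, ell2) union (ell3, +infinity).
    return "wait"


# Illustrative numbers only; they are not computed from the model.
th = Case3Thresholds(ell1=-1.0, gamma=0.5, ell2=2.0, ell3=4.0)
decisions = {x: entry_decision(x, th) for x in (-2.0, 0.5, 3.0, 5.0)}
```

In this sketch a spot price equal to the critical level `gamma` always falls in the waiting region, which mirrors the observation that the bounded component of the continuation region contains \(\gamma _*(c)\).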

6 Conclusion

In this paper we have studied the problem of optimal entry into an irreversible investment plan with a cost function which is non convex with respect to the control variable. This non convexity is due to the real-valued nature of the spot price of electricity. We show that the problem can be decoupled and that the investment phase can be studied independently of the entry decision as an investment problem over an infinite time horizon. The optimal entry decision depends heavily on the properties of the optimal investment policy.

The complete value function can be rewritten as that of an optimal stopping problem where the cost of immediate stopping involves the value function of the infinite horizon investment problem. It has been shown in [7] that the latter problem presents a complex structure of the solution, in which the optimal investment rule can be either singularly continuous or purely discontinuous, depending on the problem parameters. This feature in turn implies a non standard optimal entry policy. Indeed, the optimal entry rule can be either the first hitting time of the spot price at a single threshold, or can be triggered by multiple boundaries splitting the state space into non connected stopping and continuation regions. A possible economic interpretation of this complex structure is provided.

References

Alvarez, L.H.R.: A class of solvable singular stochastic control problems. Stoch. Stoch. Rep. 67, 83–122 (1999)

Bateman, H.: Higher Transcendental Functions, vol. II. McGraw-Hill Book Company, New York (1981)

Borodin, A.N., Salminen, P.: Handbook of Brownian Motion-Facts and Formulae, 2nd edn. Birkhäuser, Basel (2002)

Brekke, K.A., Øksendal, B.: Optimal switching in an economic activity under uncertainty. SIAM J. Control Optim. 32(4), 1021–1036 (1994)

Dayanik, S., Karatzas, I.: On the optimal stopping problem for one-dimensional diffusions. Stoch. Process. Appl. 107, 173–212 (2003)

De Angelis, T., Ferrari, G.: Stochastic partially reversible investment problem on a finite time-horizon: free-boundary analysis. Stoch. Process. Appl. 124, 4080–4119 (2014)

De Angelis, T., Ferrari, G., Moriarty, J.: A nonconvex singular stochastic control problem and its related optimal stopping boundaries. SIAM J. Control Optim. 53(3), 1199–1223 (2015)

Dixit, A.K.: Entry and exit decisions under uncertainty. J. Polit. Econ. 97(3), 620–638 (1989)

Dixit, A.K., Pindyck, R.S.: Investment Under Uncertainty. Princeton University Press, Princeton (1994)

Duckworth, J.K., Zervos, M.: An investment model with entry and exit decision. J. Appl. Prob. 37, 547–559 (2000)

Fleming, W.H., Soner, H.M.: Controlled Markov Processes and Viscosity Solutions, 2nd edn. Springer, New York (2005)

Geman, H., Roncoroni, A.: Understanding the fine structure of electricity prices. J. Bus. 79(3), 1225–1261 (2006)

Guo, X., Pham, H.: Optimal partially reversible investment with entry decision and general production function. Stoch. Process. Appl. 115, 705–736 (2005)

Jeanblanc, M., Yor, M., Chesney, M.: Mathematical Methods for Financial Markets. Springer, New York (2009)

Karatzas, I., Shreve, S.E.: Connections between optimal stopping and singular stochastic control I. Monotone follower problems. SIAM J. Control Optim. 22, 856–877 (1984)

Karatzas, I., Shreve, S.E.: Equivalent models for finite-fuel stochastic control. Stochastics 18, 245–276 (1986)

Karatzas, I., Shreve, S.E.: Brownian Motion and Stochastic Calculus, 2nd edn. Springer, New York (1998)

Lucia, J., Schwartz, E.S.: Electricity prices and power derivatives: evidence from the Nordic power exchange. Rev. Deriv. Res. 5(1), 5–50 (2002)

McDonald, R.L., Siegel, D.R.: Investment and the valuation of firms when there is an option to shut down. Int. Econ. Rev. 26(2), 331–349 (1985)

Peskir, G.: On the American option problem. Math. Finance 15(1), 169–181 (2005)

Peskir, G., Shiryaev, A.: Optimal Stopping and Free-Boundary Problems. Lectures in Mathematics, ETH Zurich (2006)

Trigeorgis, L.: Real Options: Managerial Flexibility and Strategy in Resource Allocations. MIT Press, Cambridge (1996)

Acknowledgements

We wish to thank an anonymous referee for useful and pertinent comments. The first, third and fourth named authors express their gratitude to the UK Engineering and Physical Sciences Research Council (EPSRC) for its financial support via Grants EP/K00557X/1 and EP/K00557X/2. Financial support by the German Research Foundation (DFG) via Grant Ri–1128–4–2 is gratefully acknowledged by the second named author.

Appendices

Appendix 1: Some facts on the Ornstein–Uhlenbeck process

Recall the Ornstein–Uhlenbeck process X of (2.1). It is well known that X is a positively recurrent Gaussian process (cf., e.g., [3], Appendix 1, Section 24, pp. 136–137) with state space \(\mathbb {R}\) and that (2.1) admits the explicit solution
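For orientation, suppose that (2.1) has the standard mean-reverting form \(dX^x_t=\theta (\mu -X^x_t)\,dt+\sigma \,dB_t\), \(X^x_0=x\), with \(\theta ,\sigma >0\) and B a standard Brownian motion (the symbols \(\sigma \) and B are assumed notation here). Applying Itô's formula to \(e^{\theta t}X^x_t\) would then give

\[ X^x_t=\mu +(x-\mu )e^{-\theta t}+\sigma \int _0^t e^{\theta (s-t)}\,dB_s,\qquad t\ge 0, \]

so that, under this assumption, \(X^x_t\) is Gaussian with mean \(\mu +(x-\mu )e^{-\theta t}\) and variance \(\tfrac{\sigma ^2}{2\theta }\big (1-e^{-2\theta t}\big )\).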

We introduced its infinitesimal generator \(\mathbb {L}_{X}\) in (3.6); the characteristic equation \(\mathbb {L}_{X}u = \lambda u\), \(\lambda > 0\), admits the two linearly independent, positive solutions (cf. [14], p. 280)

and

which are strictly decreasing and strictly increasing, respectively. In both (6.2) and (6.3) \(D_{\alpha }\) is the cylinder function of order \(\alpha \) (see [2], Chapter VIII, among others) and it is also worth recalling that (see, e.g., [2], Chapter VIII, Section 8.3, eq. (3) at page 119)

where \(\Gamma (\cdot )\) is Euler’s Gamma function.

We denote by \(\mathsf P_x\) the probability measure on \((\Omega , \mathcal{F})\) induced by the process \((X^x_t)_{t\ge 0}\), i.e. such that \(\mathsf P_x(\,\cdot \,) = \mathsf P(\,\cdot \,| X(0)=x)\), \(x \in \mathbb {R}\), and by \(\mathsf E_x[\,\cdot \,]\) the expectation under this measure. Then, it is a well known result on one-dimensional regular diffusion processes (see, e.g., [3], Chapter I, Section 10) that

with \(\phi _{\lambda }\) and \(\psi _{\lambda }\) as in (6.2) and (6.3) and \(\tau _{y}:=\inf \{t \ge 0: X^x_t = y\}\) the hitting time of \(X^x\) at level \(y \in \mathbb {R}\). Due to the recurrence property of the Ornstein–Uhlenbeck process X one has \(\tau _{y} < \infty \) \(\mathsf P_x\)-a.s. for any \(x,y \in \mathbb {R}\).
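In terms of these functions, the usual statement of the Laplace transform of hitting times for a regular one-dimensional diffusion can be sketched as follows, with \(\phi _\lambda \) the decreasing and \(\psi _\lambda \) the increasing solution; it is given here as the standard form of the identity and should be read alongside (6.5):

\[ \mathsf E_x\big [e^{-\lambda \tau _y}\big ]= \begin{cases} \dfrac{\phi _\lambda (x)}{\phi _\lambda (y)}, & x\ge y,\\[2mm] \dfrac{\psi _\lambda (x)}{\psi _\lambda (y)}, & x\le y. \end{cases} \]

For instance, if \(x\ge y\) the process must move downwards to reach y, and since \(\phi _\lambda \) is decreasing the ratio \(\phi _\lambda (x)/\phi _\lambda (y)\) is at most one, as a discount factor must be.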

It is also useful to recall here some convergence and integrability properties of X.

Lemma 7.1

One has

Proof

Define \(\Xi :=\liminf _{t \uparrow \infty }e^{-\lambda t}|X^x_t|\) and notice that \(\Xi \ge 0\) a.s. We claim (and prove below) that \(\liminf _{t \uparrow \infty }e^{-\lambda t}\mathsf E\big [|X^x_t|\big ]=0\), so that Fatou's lemma gives

that is, \(\mathsf E\big [\Xi \big ]=0\) and hence \(\Xi =0\) a.s. by nonnegativity of \(\Xi \).

To complete the proof it thus remains only to show that \(\liminf _{t \uparrow \infty }e^{-\lambda t}\mathsf E\big [|X^x_t|\big ]=0\). By (6.1) and Hölder's inequality one has

where the Itô isometry has also been used. It is now easily checked that (6.7) implies the claim. \(\square \)
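The estimate behind the claim can also be checked numerically. The sketch below assumes the standard Ornstein–Uhlenbeck parametrisation \(dX_t=\theta (\mu -X_t)\,dt+\sigma \,dB_t\) (the symbol \(\sigma \) and all parameter values are illustrative assumptions). Under it, the triangle inequality, Hölder's inequality and the Itô isometry give \(\mathsf E|X^x_t|\le |\mu |+|x-\mu |e^{-\theta t}+\sigma \sqrt{(1-e^{-2\theta t})/(2\theta )}\), which is bounded in t, so that the discounted quantity tends to zero. The code samples \(X^x_t\) from its Gaussian law and compares a Monte Carlo estimate of \(\mathsf E|X^x_t|\) with this bound.

```python
import math
import random


def abs_moment_bound(t, x, theta, mu, sigma):
    """Upper bound for E|X_t|: triangle inequality, Hoelder and Ito isometry."""
    stoch_sd = sigma * math.sqrt((1.0 - math.exp(-2.0 * theta * t)) / (2.0 * theta))
    return abs(mu) + abs(x - mu) * math.exp(-theta * t) + stoch_sd


def mc_abs_moment(t, x, theta, mu, sigma, n_samples, rng):
    """Monte Carlo estimate of E|X_t| using the exact Gaussian law of X_t."""
    mean = mu + (x - mu) * math.exp(-theta * t)
    sd = sigma * math.sqrt((1.0 - math.exp(-2.0 * theta * t)) / (2.0 * theta))
    return sum(abs(rng.gauss(mean, sd)) for _ in range(n_samples)) / n_samples


# Illustrative parameter values only (not taken from the paper).
theta, mu, sigma, lam, x0 = 1.0, 0.5, 1.0, 0.3, -2.0
rng = random.Random(0)
times = [0.5, 1.0, 2.0, 5.0, 10.0, 20.0]

bounds = [abs_moment_bound(t, x0, theta, mu, sigma) for t in times]
estimates = [mc_abs_moment(t, x0, theta, mu, sigma, 20000, rng) for t in times]
# Discounted bound e^{-lambda t} * bound(t), which should decay to zero.
discounted = [math.exp(-lam * t) * b for t, b in zip(times, bounds)]
```

Because the bound stays below \(|\mu |+|x-\mu |+\sigma /\sqrt{2\theta }\) for all t, multiplying by \(e^{-\lambda t}\) forces the product to zero, which is the content of the claim.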

Lemma 7.2

Fix \(x\in \mathbb {R}\), and set \(\tau _R:=\inf \{t\ge 0\,:\,|X^x_t|\ge R\}\), \(R>0\), then the family \(\{e^{-\lambda \tau _R}|X^x_{\tau _R}|\,:\,R>0 \}\) is uniformly integrable.

Proof

It suffices to show that \(\{e^{-\lambda \tau _R}|X^x_{\tau _R}|\,:\,R>0 \}\) is uniformly bounded in \(L^2(\Omega ,\mathsf P)\). With no loss of generality we take \(x\in (-R,R)\) so that we can write \(\tau _R=\tau ^+_R\wedge \tau ^-_R\) \(\mathsf P\)-a.s. with \(\tau ^+_R:=\inf \{t\ge 0\,:\,X^x_t\ge R\}\) and \(\tau ^-_R:=\inf \{t\ge 0\,:\,X^x_t\le -R\}\). From recurrence of X we get

As \(R\rightarrow \infty \) the functions \(\phi _{2\lambda }(-R)\) and \(\psi _{2\lambda }(R)\) diverge to infinity at a superquadratic rate, hence there exists a constant \(C(x)>0\) depending only on \(x\in \mathbb {R}\) such that

\(\square \)
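The chain of estimates in this proof can be sketched as follows, assuming the hitting-time identity of (6.5) applied with discount rate \(2\lambda \). Since paths are continuous and \(x\in (-R,R)\), one has \(|X^x_{\tau _R}|=R\), and since \(e^{-2\lambda (a\wedge b)}\le e^{-2\lambda a}+e^{-2\lambda b}\),

\[ \mathsf E\Big [e^{-2\lambda \tau _R}|X^x_{\tau _R}|^2\Big ]=R^2\,\mathsf E\Big [e^{-2\lambda \tau _R}\Big ]\le R^2\Big (\mathsf E\Big [e^{-2\lambda \tau ^+_R}\Big ]+\mathsf E\Big [e^{-2\lambda \tau ^-_R}\Big ]\Big )=R^2\bigg (\frac{\psi _{2\lambda }(x)}{\psi _{2\lambda }(R)}+\frac{\phi _{2\lambda }(x)}{\phi _{2\lambda }(-R)}\bigg ). \]

The superquadratic growth of the two denominators then keeps the right-hand side bounded uniformly in R.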

Appendix 2: Some proofs from Sect. 4.1

Proof of Proposition 4.2

Fix \(\bar{c}\in [0,1]\) and set \(\mathcal {L}(x,\bar{c}):=\big (\mathbb {L}_X-\lambda \big )U(x,\bar{c})\) for simplicity. By (4.2), (4.10) and recalling that \(u(x;\bar{c})=0\) for all \(x\in \mathbb {R}\) such that \(\bar{c}\le g_*(x)\) (or equivalently \(x\le \beta _*(\bar{c})\)) we get

Since \(g_*\) is continuous with \(g_*(\beta _*(\bar{c}))=\bar{c}\) one can verify that \(x\mapsto \mathcal {L}(x,\bar{c})\) is continuous, \(\lim _{x\rightarrow +\infty }\mathcal {L}(x,\bar{c})=-\infty \) and, recalling also that \(g_*(x)=1\) for \(x\le \beta _*(1)\), \(\lim _{x\rightarrow -\infty }\mathcal {L}(x,\bar{c})=+\infty \). Since \(\beta _*\in C^1([0,1])\) is strictly monotone, \(g_*\) is differentiable for a.e. \(x\in \mathbb {R}\) with \(g'_*\le 0\). In particular \(\tfrac{d }{d\,x}\mathcal {L}(x,\bar{c})\) exists everywhere with the exception of the points \(x=\beta _*(\bar{c})\) and \(x=\beta _*(1)\). It follows that

where in the second expression we have used that \(\Phi (1)=0\) by Assumption 2.1. Now we recall that \(\beta _*(c)\le \hat{x}_0(c)\) for \(c\in [0,1]\) [cf. Proposition 3.1-i)] and \(\theta \mu -k(c)\,\beta _*(c)>0\) on [0, 1]. Hence in particular for \(c=g_*(x)\), \(x\in [\beta _*(1),\beta _*(0)]\), we get \(\theta \mu -k(g_*(x))\,x>0\) and

We then obtain that \(x\mapsto \mathcal {L}(x,\bar{c})\) is continuous, strictly decreasing [by (6.10), (6.11) and (6.12)] and it equals zero at a single point for any given \(\bar{c}\in [0,1]\). Obviously the result extends to \(\mathcal {L}(x,\bar{c})+\lambda P_0=\big (\mathbb {L}_X-\lambda \big )\big (U(x,\bar{c})-P_0)\) and (4.12) follows. \(\square \)

Proof of Proposition 4.4

Existence, uniqueness and smoothness of \(\alpha ^*\) follow from arguments analogous to those employed to prove [7, Thm. 2.1]. For the limiting behaviour of \(\alpha ^*(c)\) as \(c\rightarrow 1\) we observe that \(\Phi (c)\downarrow 0\) and \(x^\dagger _0(c)\uparrow \infty \) when \(c\uparrow 1\). Since \(\alpha ^*\) is strictly increasing it has a left limit at \(c=1\), so we argue by contradiction and assume that \(\alpha ^*(c)\rightarrow \alpha _0\) as \(c\rightarrow 1\) for some \(\alpha _0<+\infty \). Then in the limit as \(c\rightarrow 1\) (4.28) gives

and we reach a contradiction. \(\square \)

Proof of Proposition 4.5

Fix \(c\in [0,1]\). It is clear that \(\alpha ^*(c)\) solves (4.28) if and only if \(K(\alpha ^*(c),c)=0\) where

From direct computation it is not hard to verify that \(x\mapsto K(x,c)\) is strictly decreasing and convex on \((-\infty ,x^\dagger _0(c))\), so that it is sufficient to show that \(K(z_0(c),c)<0\) for \(z_0(c):=P_0/\Phi (c)\) to conclude the proof. In fact we shall only consider the case \(z_0(c)\in (-\infty ,x^\dagger _0(c))\) as otherwise the result is trivial.

Set for simplicity \(z_0=z_0(c)\), then from straightforward algebra we find

Now, since \(\phi _\lambda ''>0\) and \(\mathbb L_X\phi _\lambda =\lambda \phi _\lambda \) on \(\mathbb {R}\) one has \((\mu -z_0)\tfrac{\phi '_\lambda (z_0)}{\phi _\lambda (z_0)}<\tfrac{\lambda }{\theta }\) hence from (6.14) it follows \(K(z_0,c)<0\). \(\square \)
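The inequality used in the last step can be spelled out as follows, assuming the generator in (3.6) has the usual Ornstein–Uhlenbeck form \(\mathbb L_X=\tfrac{1}{2}\sigma ^2\tfrac{\partial ^2}{\partial x^2}+\theta (\mu -x)\tfrac{\partial }{\partial x}\) (the symbol \(\sigma \) being assumed notation). Evaluating \(\mathbb L_X\phi _\lambda =\lambda \phi _\lambda \) at \(z_0\) and using \(\phi ''_\lambda >0\) gives

\[ \theta (\mu -z_0)\,\phi '_\lambda (z_0)=\lambda \,\phi _\lambda (z_0)-\tfrac{1}{2}\sigma ^2\phi ''_\lambda (z_0)<\lambda \,\phi _\lambda (z_0), \]

and dividing by \(\theta \,\phi _\lambda (z_0)>0\) yields \((\mu -z_0)\tfrac{\phi '_\lambda (z_0)}{\phi _\lambda (z_0)}<\tfrac{\lambda }{\theta }\).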

Proof of Lemma 4.9

We recall that the Ornstein–Uhlenbeck process is positively recurrent (cf. “Appendix 1”), hence \(\tau _\ell (x,c)<+\infty \) \(\mathsf P\)-a.s. for any \(x\in \mathbb {R}\) and it follows that \(U(X^x_{\tau _\ell },c)=U(\ell (c),c)\) \(\mathsf P\)-a.s. The latter and (4.35) then imply

for \(x>\ell (c)\), where (6.5) has been used. \(\square \)

Proof of Proposition 4.10

Fix \(c\in [0,c_*)\). Since we are looking for a finite-valued boundary \(\ell _*\), solving (4.37) is equivalent to finding x such that \(\hat{H}(x,c)=0\) where

We recall (4.2), (4.3), (4.4) and that the function \(g_*\) is the inverse of \(\beta _*\) (cf. Proposition 3.1). As in (4.10) we can differentiate U with respect to x and take the derivative inside the integral so as to obtain

for all \(x\in \mathbb {R}\) and where we have used that u and \(u_x\) equal zero for \(x\in \mathbb {R}\) such that \(c\le g_*(x)\).