Abstract

In this article, we analyze the combined effects of LTP/LTD and synaptic scaling and study the creation of persistent activity from a periodic or chaotic baseline attractor. Using a mean field approximation, we detail the bifurcations that lead to the creation of new attractors. Attractors encoding persistent activity can notably appear via generalized period-doubling bifurcations, tangent bifurcations of the second iterates, or boundary crises, after which the basins of attraction become irregular. Synaptic scaling is shown to maintain the coexistence of a state of persistent activity and the baseline. Depending on the rate of change of the external inputs, different types of attractors can be formed: line attractors for rapidly changing external inputs and discrete attractors for constant external inputs.


Notes

Note that phenomena related to spike timing (such as the synchrony of spikes) are not taken into account here, although they may play an important role in cognition (the temporal-coding hypothesis). Synchronous firing may disrupt persistent activity (Compte 2006) because of the different time scales of the receptors (primarily AMPA and GABA\(_\mathrm{A}\)), and may create oscillations (Compte et al. 2003). For simplicity, all receptors are assumed to have identical time scales; thus, only asynchronous persistent activity is considered in the following.

Otherwise, only LTP/LTD is active; see Eq. (7).

An infinite set of maps, indexed by the step \(n\), is thus considered.

A bifurcation is soft if the new stable attractors appear in a small neighborhood of the old one, and hard otherwise. For example, tangent bifurcations far from the cusp and subcritical pitchfork bifurcations are hard, whereas supercritical pitchfork bifurcations are soft (Hoppensteadt and Izhikevich 1997).
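
The soft/hard distinction can be illustrated with the two textbook normal forms of the pitchfork bifurcation, iterated as forward-Euler maps so that they are themselves discrete-time systems. This is a minimal sketch, not the model of this article: the normal forms, the quintic term that bounds the subcritical case, and the parameters `h`, `x0` and `n_steps` are illustrative assumptions. Just past the bifurcation (small \(\mu > 0\)), the supercritical case settles near \(\sqrt{\mu}\), close to the old equilibrium 0, whereas the subcritical case jumps to a state near \(|x| \approx 1\).

```python
import math


def euler_map(rhs, mu, x0=0.01, h=0.05, n_steps=40_000):
    """Iterate x_{n+1} = x_n + h * rhs(x_n, mu) and return the final state."""
    x = x0
    for _ in range(n_steps):
        x = x + h * rhs(x, mu)
    return x


def supercritical(x, mu):
    # Supercritical pitchfork normal form: stable branches at x = +-sqrt(mu) for mu > 0
    return mu * x - x**3


def subcritical(x, mu):
    # Subcritical pitchfork; the -x**5 term (an assumption) bounds the trajectories,
    # giving stable branches at x**2 = (1 + sqrt(1 + 4 mu)) / 2 for mu > 0
    return mu * x + x**3 - x**5


for mu in (0.04, 0.01):
    soft = euler_map(supercritical, mu)
    hard = euler_map(subcritical, mu)
    print(f"mu={mu}: supercritical -> {soft:.4f} (sqrt(mu) = {math.sqrt(mu):.4f}), "
          f"subcritical -> {hard:.4f}")
```

Decreasing `mu` moves the supercritical attractor toward the old equilibrium (soft), while the subcritical attractor stays at a finite distance from it (hard).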

For a normally hyperbolic invariant manifold (NHIM), contraction in the directions normal to the manifold dominates the dynamics (contraction or expansion) along it (Hoppensteadt and Izhikevich 1997). An NHIM can be interpreted as the generalization of a hyperbolic fixed point to non-trivial attractors. Formal definitions can be found in Fenichel (1972), Hirsch et al. (1977), and Pesin (2004). A fundamental property of these manifolds is that they persist under perturbations, i.e., the perturbed invariant manifold is close to the original one, as are its normal and tangent subspaces. This structural stability ensures that an attractor of the perturbed system lies near an attractor of the unperturbed system.
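
A toy example may help make the definition concrete. The sketch below uses a hypothetical planar map (not taken from the article) whose unit circle is invariant when `delta = 0`: deviations normal to the circle are contracted by the factor `c` at each step, whereas motion along the circle is a rigid rotation, so normal contraction dominates. Adding a perturbation of size `delta` keeps the orbit within `delta / (1 - c)` of the original circle, which illustrates the persistence property.

```python
import math


def perturbed_circle_map(r, theta, c=0.5, omega=0.3, delta=0.0):
    """One step of a toy planar map written in polar coordinates (r, theta).

    For delta = 0 the unit circle r = 1 is invariant: the radial (normal)
    deviation r - 1 is multiplied by c < 1 at each step, while the angle is
    only rotated by omega (tangential rate 1), so normal contraction dominates.
    """
    r_next = 1.0 + c * (r - 1.0) + delta * math.sin(theta)
    theta_next = (theta + omega + delta * math.cos(theta)) % (2.0 * math.pi)
    return r_next, theta_next


def radial_spread(delta, c=0.5, n_transient=200, n_keep=2000):
    """Largest |r - 1| along the orbit after a transient has been discarded."""
    r, theta = 1.7, 0.0
    for _ in range(n_transient):
        r, theta = perturbed_circle_map(r, theta, c=c, delta=delta)
    spread = 0.0
    for _ in range(n_keep):
        r, theta = perturbed_circle_map(r, theta, c=c, delta=delta)
        spread = max(spread, abs(r - 1.0))
    return spread


for delta in (0.0, 0.01, 0.05):
    # The radial deviation obeys e' = c*e + delta*sin(theta); after the transient,
    # |e| <= delta / (1 - c)
    print(f"delta={delta}: max |r - 1| = {radial_spread(delta):.4f}, "
          f"bound = {delta / (1 - 0.5):.4f}")
```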

Note that the assumption in Eq. (19) does not hold for \(\Updelta^{T_s} X_i(n)\) with \(T_s \ll T_0\). Indeed, the terms \(X_i(n)\) and \(X_i(n-T_s)\) average almost identical trajectories; their difference therefore does not describe the attractors of two different dynamical systems (the perturbed \(\tilde{G}(n)\) and the unperturbed \(\tilde{G}(n-T_1)\)), but rather the difference between consecutive steps of the same attractor. In that case, consecutive steps can be separated by a distance as large as the size of the attractor.
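
The overlap argument can be checked numerically. This is a minimal sketch, assuming (as in Appendix 2) that \(X_i(n)\) is the average of \(x_i\) over the window \(\{n-T_0, \ldots, n-1\}\), and using a chaotic logistic-map orbit as a hypothetical stand-in for a neuron's activity. Two windows shifted by \(T_s\) share \(T_0 - T_s\) samples, so their averages differ by at most \((T_s/T_0)\) times the range of the trajectory, even though consecutive samples of the same chaotic orbit can be far apart.

```python
import numpy as np


def window_average(x, n, T0):
    """Mean of x over the window {n - T0, ..., n - 1} (assumed definition of X_i(n))."""
    return x[n - T0:n].mean()


# Hypothetical stand-in for one neuron's activity: a chaotic logistic-map orbit
N = 5000
x = np.empty(N)
x[0] = 0.3
for t in range(N - 1):
    x[t + 1] = 3.9 * x[t] * (1.0 - x[t])

T0, Ts, n = 500, 5, 4000
diff = abs(window_average(x, n, T0) - window_average(x, n - Ts, T0))
# The two windows share T0 - Ts samples, so |diff| <= (Ts / T0) * (max(x) - min(x))
bound = Ts / T0 * (x.max() - x.min())
# Consecutive samples of the same orbit can nevertheless be far apart
largest_step = np.abs(np.diff(x)).max()
print(f"window difference = {diff:.5f}, bound = {bound:.5f}, largest step = {largest_step:.3f}")
```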

These populations should not be confused with the excitatory and inhibitory populations that are common in neuroscience, for example in the Wilson-Cowan model (Wilson and Cowan 1972).

For simplicity, we assume γ+ = −γ− = γ > 0. Dynamics similar to those described in the following are also observed for other choices of γ−; they are not restricted to this set of parameters.

These two curves are the equivalent of nullclines for a system of differential equations.
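
For a two-dimensional map, a natural analogue of a nullcline is the set of points where one coordinate is left unchanged by one iteration; the fixed points of the map are the intersections of the two such curves. The sketch below assumes this definition and uses the Hénon map as an illustrative stand-in (it is not the model of this article): it intersects the two curves analytically and checks that the intersections are indeed fixed points.

```python
import math

A, B = 1.4, 0.3  # classical Henon parameters (illustrative stand-in only)


def henon(x, y):
    """One iteration of the Henon map (x, y) -> (1 - A x^2 + y, B x)."""
    return 1.0 - A * x * x + y, B * x


# "Nullcline" analogues for the map: curves on which one coordinate is unchanged.
#   x-curve: 1 - A x^2 + y = x   ->  y = x - 1 + A x^2
#   y-curve: B x = y
# Substituting the y-curve into the x-curve gives A x^2 + (1 - B) x - 1 = 0.
disc = (1.0 - B) ** 2 + 4.0 * A
fixed_points = []
for sign in (+1.0, -1.0):
    x_star = (-(1.0 - B) + sign * math.sqrt(disc)) / (2.0 * A)
    fixed_points.append((x_star, B * x_star))

for x_star, y_star in fixed_points:
    print(f"intersection ({x_star:.4f}, {y_star:.4f}) maps to", henon(x_star, y_star))
```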

Other distribution functions that induce multiple discrete attractors could be considered. This article focuses only on the uniform distribution, to show the creation of line attractors.

From the symmetry of the change in weights alone, all neurons are affected similarly by synaptic plasticity.

Here, “complex” is meant in the sense of the Kolmogorov-Sinai entropy.
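
For a one-dimensional map with an absolutely continuous invariant measure, Pesin's identity equates the Kolmogorov-Sinai entropy with the positive Lyapunov exponent, which can be estimated as the orbit average of \(\log|f'(x)|\). A minimal sketch on the logistic map at \(r = 4\) (an illustrative example, not this article's network), for which the exact value is \(\ln 2\); the starting point and iteration counts are arbitrary choices.

```python
import math


def logistic_lyapunov(r=4.0, x0=0.1234, n_transient=1_000, n_iter=200_000):
    """Estimate the Lyapunov exponent of f(x) = r x (1 - x) as the orbit average of log|f'(x)|."""
    x = x0
    total = 0.0
    for i in range(n_transient + n_iter):
        if i >= n_transient:
            # f'(x) = r (1 - 2x); the tiny floor only guards against log(0) at x = 0.5
            total += math.log(max(abs(r * (1.0 - 2.0 * x)), 1e-300))
        x = r * x * (1.0 - x)
        if x <= 0.0 or x >= 1.0:
            # Rounding can send the orbit onto the unstable fixed point 0; restart it
            x = x0
    return total / n_iter


estimate = logistic_lyapunov()
print(f"Lyapunov exponent ~ {estimate:.4f}, ln 2 = {math.log(2.0):.4f}")
```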

The creation of fractal basin boundaries is also observed in continuous-time systems (Ott 1993); it is therefore not an artifact of the discrete time used in this article.

References

Abbott LF, Nelson SB (2000) Synaptic plasticity: taming the beast. Nat Neurosci 3:1178–1183

Abbott LF, Regehr WG (2004) Synaptic computation. Nature 431:796–803

Aihara K, Takabe T, Toyoda M (1990) Chaotic neural networks. Phys Lett A 144(6–7):333–340

Amari S-I (1971) Characteristics of randomly connected threshold-element networks and network systems. Proc IEEE 59(1):35–47

Amari S-I (1972) Learning patterns and pattern sequences by self-organizing nets of threshold elements. IEEE Trans Comput C-21(11):1197–1206

Artola A, Singer W (1993) Long-term depression of excitatory synaptic transmission and its relationship to long-term potentiation. Trends Neurosci 16:480–487

Bartos M, Vida I, Jonas P (2007) Synaptic mechanisms of synchronized gamma oscillations in inhibitory interneuron networks. Nat Rev Neurosci 8(1):45–56

Bienenstock EL, Cooper LN, Munro PW (1982) Theory for the development of neuron selectivity: orientation specificity and binocular interaction in visual cortex. J Neurosci 2:32–48

Brody CD, Romo R, Kepecs A (2003) Basic mechanisms for graded persistent activity: discrete attractors, continuous attractors, and dynamic representations. Curr Opin Neurobiol 13(2):204–211

Brunel N (1996) Hebbian learning of context in recurrent neural networks. Neural Comput 8:1677–1710

Brunel N (2003) Dynamics and plasticity of stimulus-selective persistent activity in cortical network models. Cereb Cortex 13(11):1151–1161

Buonomano DV, Maass W (2009) State-dependent computations: spatiotemporal processing in cortical networks. Nat Rev Neurosci 10(2):113–125

Burkitt AN, Meffin H, Grayden DB (2004) Spike-timing-dependent plasticity: the relationship to rate-based learning for models with weight dynamics determined by a stable fixed point. Neural Comput 16(5):885–940

Buxhoeveden DP, Casanova MF (2002) The minicolumn hypothesis in neuroscience. Brain 125:935–951

Buzsaki G (2002) Theta oscillations in the hippocampus. Neuron 33:325–340

Buzsaki G (2010) Neural syntax: Cell assemblies, synapsembles, and readers. Neuron 68(3):362–385

Caianiello ER (1961) Outline of a theory of thought-processes and thinking machines. J Theor Biol 1:204–235

Cessac B, Doyon B, Quoy M, Samuelides M (1994) Mean-field equations, bifurcation map and route to chaos in discrete time neural networks. Physica D 74:24–44

Churchland M et al (2010) Stimulus onset quenches neural variability: a widespread cortical phenomenon. Nat Neurosci 13:369–378

Compte A (2006) Computational and in vitro studies of persistent activity: edging towards cellular and synaptic mechanisms of working memory. Neuroscience 139:135–151

Compte A, Brunel N, Goldman-Rakic PS, Wang X-J (2000) Synaptic mechanisms and network dynamics underlying spatial working memory in a cortical network model. Cereb Cortex 10(9):910–923

Compte A, Constantinidis C, Tegner J, Raghavachari S, Chafee MV, Goldman-Rakic PS, Wang X-J (2003) Temporally irregular mnemonic persistent activity in prefrontal neurons of monkeys during a delayed response task. J Neurophysiol 90:3441–3454

Dauce E, Quoy M, Cessac B, Doyon B, Samuelides M (1998) Self-organization and dynamics reduction in recurrent networks: stimulus presentation and learning. Neural Netw 11(3):521–533

Doyon B, Cessac B, Quoy M, Samuelides M (1994) On bifurcations and chaos in random neural networks. Acta Biotheor 42(2):215–225

Durstewitz D, Seamans JK, Sejnowski TJ (2000) Neurocomputational models of working memory. Nat Neurosci 3:1184–1191

Faisal AA, Selen LPJ, Wolpert DM (2008) Noise in the nervous system. Nat Rev Neurosci 9(4):292–303

Fenichel N (1972) Persistence and smoothness of invariant manifolds for flows. Indiana Univ Math J 21(3):193–226

Funahashi S, Bruce CJ, Goldman-Rakic PS (1989) Mnemonic coding of visual space in the monkey’s dorsolateral prefrontal cortex. J Neurophysiol 61:331–349

Grebogi C, Ott E, Yorke JA (1983) Crises, sudden changes in chaotic attractors, and transient chaos. Physica D 7(1–3):181–200

Grebogi C, Ott E, Yorke JA (1986) Metamorphoses of basin boundaries in nonlinear dynamical systems. Phys Rev Lett 56:1011–1014

Hansel D, Sompolinsky H (1998) Modeling feature selectivity in local cortical circuits. In: Methods in neuronal modeling: from synapse to networks. MIT Press, Cambridge

Hebb D (1949) The organization of behavior. Wiley, New York

Heemels W, Lehmann D, Lunze J, De Schutter B (2009) Introduction to hybrid systems. In: Lunze J, Lamnabhi-Lagarrigue F (eds) Handbook of hybrid systems control: theory, tools, applications. Cambridge University Press, Cambridge

Hertz J, Krogh A, Palmer RG (1991) Introduction to the theory of neural computation. Addison-Wesley Longman Publishing Co, Inc, Boston

Hirsch MW, Pugh CC, Shub M (1977) Invariant manifolds. Springer, New York

Hopfield JJ (1982) Neural networks and physical systems with emergent collective computational abilities. Proc Nat Acad Sci 79(8):2554–2558

Hoppensteadt FC, Izhikevich EM (1997) Weakly connected neural networks. Springer, New York

Ibarz B, Casado J, Sanjuan M (2011) Map-based models in neuronal dynamics. Phys Rep 501:1–74

Ikegaya Y (2004) Synfire chains and cortical songs: temporal modules of cortical activity. Science 304:559–564

Katori Y, Sakamoto K, Saito N, Tanji J, Mushiake H, Aihara K (2011) Representational switching by dynamical reorganization of attractor structure in a network model of the prefrontal cortex. PLoS Comput Biol 7(11):e1002266

Korn H (2003) Is there chaos in the brain? II. Experimental evidence and related models. C R Biol 326(9):787–840

Kuznetsov YA (1998) Elements of applied bifurcation theory. 2nd edn. Springer, New York

Leleu T, Aihara K (2011) Sequential memory retention by stabilization of cell assemblies. In: Post-conference proceedings of the 3rd international conference on cognitive neurodynamics, 9–13 June 2011 (in press)

Li Y, Nara S (2008) Novel tracking function of moving target using chaotic dynamics in a recurrent neural network model. Cogn Neurodyn 2(1):39–48

Major G, Tank D (2004) Persistent neural activity: prevalence and mechanisms. Curr Opin Neurobiol 14(6):675–684

Matsumoto G, Aihara K, Hanyu Y, Takahashi N, Yoshizawa S, Nagumo J-I (1987) Chaos and phase locking in normal squid axons. Phys Lett A 123(4):162–166

McCulloch W, Pitts W (1943) A logical calculus of the ideas immanent in nervous activity. Bull Math Biophys 7:115–133

Miyashita Y (1988) Neuronal correlate of visual associative long-term memory in the primate temporal cortex. Nature 335(6193):817–820

Mongillo G, Amit DJ, Brunel N (2003) Retrospective and prospective persistent activity induced by Hebbian learning in a recurrent cortical network. Eur J Neurosci 18(7):2011–2024

Moynot O, Samuelides M (2002) Large deviations and mean-field theory for asymmetric random recurrent neural networks. Probab Theory Relat Fields 123:41–75

Mushiake H, Saito N, Sakamoto K, Itoyama Y, Tanji J (2006) Activity in the lateral prefrontal cortex reflects multiple steps of future events in action plans. Neuron 50(4):631–641

Nagumo J, Sato S (1972) On a response characteristic of a mathematical neuron model. Biol Cybern 10:155–164

Naya Y, Sakai K, Miyashita Y (1996) Activity of primate inferotemporal neurons related to a sought target in pair-association task. Proc Nat Acad Sci 93:2664–2669

Ott E (1993) Chaos in dynamical systems. Cambridge University Press, New York

Ozaki TJ et al (2012) Traveling EEG slow oscillation along the dorsal attention network initiates spontaneous perceptual switching. Cogn Neurodyn 6(2):185–198

Pesin YB (2004) Lectures on partial hyperbolicity and stable ergodicity. Zurich Lect Adv Math

Pool RR, Mato G (2010) Hebbian plasticity and homeostasis in a model of hypercolumn of the visual cortex. Neural Comput 22:1837–1859

Pulvermüller F (1996) Hebb’s concept of cell assemblies and the psychophysiology of word processing. Psychophysiology 33(4):317–333

Rajan K, Abbott LF, Sompolinsky H (2010) Stimulus-dependent suppression of chaos in recurrent neural networks. Phys Rev E 82:011903

Ranck JB Jr (1985) Head direction cells in the deep cell layer of dorsal presubiculum in freely moving rats. In: Electrical activity of the archicortex. Publishing House of the Hungarian Academy of Sciences

Renart A, Moreno-Bote R, Wang X-J, Parga N (2007) Mean-driven and fluctuation-driven persistent activity in recurrent networks. Neural Comput 19:1–46

Renart A, Song P, Wang X-J (2003) Robust spatial working memory through homeostatic synaptic scaling in heterogeneous cortical networks. Neuron 38(3):473–485

Romo R, Brody CD, Hernandez A, Lemus L (1999) Neuronal correlates of parametric working memory in the prefrontal cortex. Nature 399:470–473

Sakai K, Miyashita Y (1991) Neural organization for the long-term memory of paired associates. Nature 354:152–155

Sejnowski T (1999) The book of Hebb. Neuron 24(4):773–776

Siri B, Berry H, Cessac B, Delord B, Quoy M (2008) A mathematical analysis of the effects of Hebbian learning rules on the dynamics and structure of discrete-time random recurrent neural networks. Neural Comput 20(12):2937–2966

Skarda C, Freeman W (1987) How brains make chaos in order to make sense of the world. Behav Brain Sci 10:161–195

Softky WR, Koch C (1992) Cortical cells should fire regularly, but do not. Neural Comput 4:643–646

Sompolinsky H, Crisanti A, Sommers HJ (1988) Chaos in random neural networks. Phys Rev Lett 61(3):259–262

Tsuda I (2001) Toward an interpretation of dynamic neural activity in terms of chaotic dynamical systems. Behav Brain Sci 24:793–810

Turrigiano GG (2008) The self-tuning neuron: synaptic scaling of excitatory synapses. Cell 135(3):422–435

Turrigiano GG, Leslie KR, Desai NS, Rutherford LC, Nelson SB (1998) Activity-dependent scaling of quantal amplitude in neocortical neurons. Nature 391(6670):892–896

Turrigiano GG, Nelson SB (2004) Homeostatic plasticity in the developing nervous system. Nat Rev Neurosci 5(2):97–107

Wang L (2007) Interactions between neural networks: a mechanism for tuning chaos and oscillations. Cogn Neurodyn 1(2):185–188

Wang X-J (2001) Synaptic reverberation underlying mnemonic persistent activity. Trends Neurosci 24(8):455–463

Wennekers T, Palm G (2009) Syntactic sequencing in Hebbian cell assemblies. Cogn Neurodyn 3(4):429–441

Wilson HR, Cowan JD (1972) Excitatory and inhibitory interactions in localized populations of model neurons. Biophys J 12(1):1–24

Yoshida H, Kurata S, Li Y, Nara S (2010) Chaotic neural network applied to two-dimensional motion control. Cogn Neurodyn 4(1):69–80

Zheng G, Tonnelier A (2008) Chaotic solutions in the quadratic integrate-and-fire neuron with adaptation. Cogn Neurodyn 3(3):197–204

Acknowledgments

This research is supported by the Global Center of Excellence (G-COE) “Secure-Life Electronics” sponsored by the Ministry of Education, Culture, Sports, Science and Technology (MEXT), and by the Aihara Project, the FIRST program from the Japan Society for the Promotion of Science (JSPS), Japan.


Appendices

Appendix 1: Parameters typically used for simulations

See Appendix Table 1

Appendix 2: Approximation of the effects of LTP/LTD alone

In the following, changes due to LTP/LTD are considered. From Eqs. (1)–(3), by taking the time average over \(\{ n-T_0, \ldots, n-1 \}\), we obtain the following expression:

where \(A_i = a_i f_i\) is the “perceived” external input (see section "External inputs"), i.e., the level of external input averaged over the window \(T_0\).

The changes in connectivity within the window \(T_0\) can be considered very small (quasi-constant). Note that for \(l < T_0\) and \(e = 1\) (see Eq. (7)),

By substituting Eq. (46) into Eq. (45) and noting that \(\frac{1}{T_0} \sum\nolimits_{i \neq j} \sum\nolimits_{l=1}^{T_0} \sum\nolimits_{t=1}^{l} \Uplambda_{ij} (n-t) = \mathcal{O}(T_0 \lambda M)\), we rewrite Eq. (45) as follows (\(T_1 \gg T_0\), thus \(\frac{1}{T_0} \gg T_0 \lambda M\)):
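
The order-of-magnitude estimate for the triple sum can be obtained from a simple bound. A sketch, assuming (for this sketch only) that the sum over \(i \neq j\) runs over the \(M-1\) presynaptic indices \(j\) of a fixed neuron \(i\), and that every single-step weight change satisfies \(|\Uplambda_{ij}(t)| \le \lambda\):

```latex
\left| \frac{1}{T_0} \sum_{j \neq i} \sum_{l=1}^{T_0} \sum_{t=1}^{l} \Uplambda_{ij}(n-t) \right|
  \le \frac{(M-1)\,\lambda}{T_0} \sum_{l=1}^{T_0} l
  = \frac{(M-1)\,\lambda}{T_0} \cdot \frac{T_0 (T_0+1)}{2}
  = \frac{(M-1)(T_0+1)}{2}\,\lambda
  = \mathcal{O}(T_0 \lambda M).
```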

By considering the changes in weights and activity over the window \(T_1\), we rewrite Eq. (47) as follows:

The above equation can also be written as follows:

where:

When the changes in weights are considered over a limited number of steps n such that \(n \lambda \ll \bar{\omega}\) and the assumption of Eq. (19) is verified, the following approximations hold, ∀ n:

Then the terms of Eqs. (50)–(53) are approximated as follows:

Finally, because \(\bar{\omega} p \ll 1\) and \(\bar{\omega} p \epsilon \gg \frac{1}{T_0}\), the approximation of Eq. (21) holds.

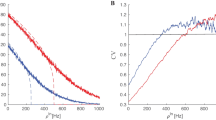

To verify the validity of the approximation, the terms \(t_1\), \(t_2\), \(t_3\), and \(t_4\) are compared for several steps \(m T_1, m = \{1, 2, \ldots\}\), as shown in Fig. 18. Simulations confirm that as long as the change in weights remains small, \(t_2\) is dominant. Note that because \(t_2 = \mathcal{O}(\epsilon)\), this approximation is consistent with the assumption of Eq. (20) as applied to the change in \(Y_i(n)\).

Fig. 18 Comparison of the terms \(t_1\), \(t_2\), \(t_3\), and \(t_4\) of Eqs. (50)–(53), shown respectively as solid, dashed, dotted, and dash-dot lines, as a function of the steps \(m T_1, m = \{1, 2, \ldots\}\). As long as the change in weights remains small (i.e., for a small number of time steps \(m\) in this figure), the term \(t_2\) is dominant.

Appendix 3: Approximation of the combined effects of LTP/LTD and synaptic scaling

When the weights are rescaled by synaptic scaling (see Eqs. (12) and (13)), the change in synaptic efficacy \(\Updelta^{T_1} \omega_{ij} (n) = \omega_{ij} (n) - \omega_{ij} (n-T_1)\) is given as follows:

The change in weights \(\Updelta^{T_1} \omega_{ij}\) when synaptic scaling is active is of the same magnitude as that when only LTP/LTD modify the weights (see Eq. (14)). Therefore, the approximation of Eq. (21) still holds in this case. Then, using Eqs. (21) and (61), the time-averaged internal state can be written as follows:

By using Eq. (47), we rewrite this equation as follows:

Finally, when \(X_j(n-T_1)\) remains small due to the scaling, \(\omega_{ij}(n_0) X_j(n-T_1) = \omega_{ij}(n_0) X_j(n_0) + \mathcal{O}(\eta)\) (see Eq. (57)). Using this approximation and Eq. (47) once more, we can rewrite Eq. (63) as follows:

By developing Eq. (64), we obtain Eq. (22).

Appendix 4: Macroscopic mean field approximation

A single population of neurons is considered in the following derivation; the generalization to multiple populations is immediate. For simplicity, the refractoriness is included in the distribution of weights (\(\alpha = \omega_{ii}\) and \(V_i(n) = \sum_j \omega_{ij} x_j(n)\)) and is thus assumed to be normally distributed. This approximation is valid as long as the refractoriness is of the same order of magnitude as \(\mu_\omega\). Because the weights are randomized at each time step \(n\), for a given \(m\), the local chaos hypothesis considered in this study is as follows:

1. \(x_j(n)\) and \(\omega_{ij}\) are independent \(\forall i, j\);
2. \(x_j(n)\) and \(x_i(n)\) are independent \(\forall i \neq j\);
3. \(\theta_i(n)\) and \(V_i(n)\) are independent \(\forall i\).

Internal states and activities are also random variables, denoted by \(y_i\) and \(x_i\), respectively, so that the system is a stochastic process. In the following, the mean of a random variable \(X\) with probability density function \(p_X\) is denoted by \(m(X)\), its variance by \(v(X)\), and the covariance of \(X\) and \(Y\) by \(cv(X, Y)\):
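
For reference, the usual definitions in this notation read as follows (standard textbook forms, given as a sketch rather than as the authors' exact display):

```latex
m(X) = \int x \, p_X(x) \, dx, \qquad
v(X) = m\big( (X - m(X))^2 \big), \qquad
cv(X, Y) = m\big( (X - m(X)) (Y - m(Y)) \big).
```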

The means of the internal states \(y_i\) and activities \(x_i\) are simply denoted by \(y\) and \(x\), respectively. By taking the mean over the neurons \(i\) in Eqs. (1)–(3), the mean internal state \(y\) is given as follows (using the independence hypotheses described above and the law of large numbers):

The mean activity is given as follows:

The variance of y i is given as follows (using the hypotheses of independence described above):

Note that, by induction, \(y_i(n+1)\) is also given as follows:

When the number of steps n is very large, this can be expressed as

where \(\tilde{x}_j(n) = \sum\nolimits_{p=0}^{n} x_j(n-p) k^p\). This term is also considered to be non-random as in Amari (1971). Therefore, Eq. (72) can be written as follows:
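
The weighted history \(\tilde{x}_j(n)\) need not be recomputed from scratch at each step: since \(\tilde{x}_j(n) = x_j(n) + k\,\tilde{x}_j(n-1)\), it can be accumulated recursively. A minimal sketch (the function name `leaky_sum` and the test inputs are hypothetical); for constant activity \(x\) and \(0 < k < 1\) the sum tends to \(x/(1-k)\), the same geometric factor \(\frac{1}{1-k}\) that appears as the slope quoted in the caption of Fig. 19.

```python
def leaky_sum(x, k):
    """Return [x~(0), ..., x~(n)] with x~(n) = sum_{p=0}^{n} x(n - p) k^p.

    Uses the equivalent recursion x~(n) = x(n) + k * x~(n - 1), with x~(-1) = 0.
    """
    out = []
    acc = 0.0
    for value in x:
        acc = value + k * acc
        out.append(acc)
    return out


k = 0.5
x = [0.4] * 60  # constant activity as a simple test input
# For constant input the leaky sum converges to x / (1 - k)
print(leaky_sum(x, k)[-1], 0.4 / (1 - k))
```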

Because the mean and standard deviation of the weights decrease proportionally to \(\frac{1}{M}\) when \(M \rightarrow \infty\), the variance is given as follows:
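
The vanishing contribution of the random weights can be seen directly: if the weights have mean \(\mu_w/M\) and standard deviation \(\sigma_w/M\), then, for fixed activities, \(v\big(\sum_j \omega_{ij} x_j\big) = (\sigma_w^2/M^2) \sum_j x_j^2 = \mathcal{O}(1/M)\). The sketch below checks this scaling numerically; the Gaussian weights, the uniform activities, and the values of `mu_w` and `sigma_w` are illustrative assumptions, not the parameters of this article.

```python
import numpy as np


def recurrent_input_variance(M, mu_w=1.0, sigma_w=1.0, seed=0):
    """Variance across neurons i of V_i = sum_j w_ij x_j.

    Weights are drawn i.i.d. with mean mu_w / M and standard deviation sigma_w / M
    (both scaling as 1/M); activities x_j are uniform in [0, 1] and shared by all
    neurons.
    """
    rng = np.random.default_rng(seed)
    x = rng.uniform(0.0, 1.0, size=M)
    w = rng.normal(mu_w / M, sigma_w / M, size=(M, M))
    v = w @ x
    return v.var()


for M in (100, 400, 1600):
    # Expected value is (sigma_w**2 / M**2) * sum(x_j**2), i.e. of order 1/M
    print(M, recurrent_input_variance(M))
```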

After a few time steps, the variance is constant, and is given as follows:

To validate the mean field approximation, we first verified the dependence of the variance of \(y(n)\) on \(\sigma_\theta\), as shown at the top of Fig. 19. Unlike in Amari (1971) and Cessac et al. (1994), the mean field can also exhibit periodic or chaotic dynamics. There are thus two types of variation: one due to the mean-field dynamics (“macroscopic”) and another due to the randomness in the connectivity and thresholds (“microscopic”). Both types of variation depend on \(\sigma_\theta\). To verify the validity of the mean field, we must therefore quantify the distance between the trajectory of the mean of the microscopic states and that of the macroscopic mean field approximation. The bottom of Fig. 19 shows that the accuracy of the mean field approximation increases with the number of neurons.

Fig. 19 Mean field approximation. a The standard deviation \(\sqrt{v}\) of the internal states \(y_i(n)\) is proportional to \(\sigma_\theta\); the coefficient of proportionality (given by the slope of the linear regression) is equal to \(\frac{1}{1-k}\), as described in Eq. (77). b Distance \(S\) between the mean of the microscopic internal states and the macroscopic mean field approximation for an increasing number of neurons \(M\). As \(M\) increases, the approximation becomes more accurate.

The mean field approximation is generalized to two populations of neurons having the same variances of weights and thresholds, but different mean weights due to LTP/LTD, which leads to Eq. (29).


About this article

Cite this article

Leleu, T., Aihara, K. Combined effects of LTP/LTD and synaptic scaling in formation of discrete and line attractors with persistent activity from non-trivial baseline. Cogn Neurodyn 6, 499–524 (2012). https://doi.org/10.1007/s11571-012-9211-3


DOI: https://doi.org/10.1007/s11571-012-9211-3