Abstract

Purpose

Easy acquisition of surgical data opens many opportunities to automate skill evaluation and teaching. Current technology to search tool motion data for surgical activity segments of interest is limited by the need for manual pre-processing, which can be prohibitive at scale. We developed a content-based information retrieval method, query-by-example (QBE), to automatically detect activity segments within surgical data recordings of long duration that match a query.

Methods

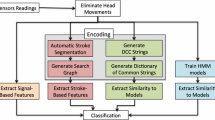

The example segment of interest (query) and the surgical data recording (target trial) are time series of kinematics. Our approach includes an unsupervised feature learning module using a stacked denoising autoencoder (SDAE), two scoring modules based on asymmetric subsequence dynamic time warping (AS-DTW) and template matching, respectively, and a detection module. A distance matrix of the query against the trial is computed using the SDAE features, followed by AS-DTW combined with template scoring, to generate a ranked list of candidate subsequences (substrings). To evaluate the quality of the ranked list against the ground truth, we apply thresholding on conventional DTW distances followed by bipartite matching. We computed the recall, precision, F1-score, and a Jaccard index-based score on three experimental setups. We evaluated our QBE method using a suture throw maneuver as the query, on two tool motion datasets (JIGSAWS and MISTIC-SL) captured in a training laboratory.

Results

On JIGSAWS, we observed a recall of 93, 90, and 87% and a precision of 93, 91, and 88% in the same surgeon same trial (SSST), same surgeon different trial (SSDT), and different surgeon (DS) setups, respectively. On MISTIC-SL, the recall was 87, 81, and 75% and the precision was 72, 61, and 53% in the SSST, SSDT, and DS setups, respectively.

Conclusion

We developed a novel, content-based information retrieval method to automatically detect multiple instances of an activity within long surgical recordings. Our method demonstrated adequate recall across datasets of differing complexity and across experimental conditions.

Notes

The variation of the standard dynamic time warping problem in which one seeks to align a sequence X with a contiguous subsequence \(Y_{a:b}\) of a long sequence Y has been called subsequence dynamic time warping, even though it is better described as substring dynamic time warping. We retain the former name for consistency, even if it is somewhat misleading.
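As a rough illustration of the subsequence (substring) alignment problem described in this note, the following Python sketch aligns a short query with the best-matching contiguous span of a longer sequence. It is a plain subsequence DTW with the usual three step directions, not the asymmetric AS-DTW variant used in the article. The scalar samples, the absolute-difference local distance, and the function name `subsequence_dtw` are assumptions made only for this example; the article computes distances on SDAE features.

```python
import math


def subsequence_dtw(query, target):
    """Align `query` with a contiguous span of `target`.

    Returns (cost, start, end), with `end` inclusive. The local distance
    is the absolute difference between scalar samples (an assumption
    made for this sketch).
    """
    n, m = len(query), len(target)
    # acc[i][j]: minimal cost of aligning query[0..i] with a span ending at target[j]
    acc = [[math.inf] * m for _ in range(n)]
    # start[i][j]: index in target where that minimal-cost span begins
    start = [[0] * m for _ in range(n)]

    # Free start: the first query sample may match any target position at no extra cost.
    for j in range(m):
        acc[0][j] = abs(query[0] - target[j])
        start[0][j] = j

    for i in range(1, n):
        # First column: the only predecessor is the cell directly above.
        acc[i][0] = acc[i - 1][0] + abs(query[i] - target[0])
        start[i][0] = start[i - 1][0]
        for j in range(1, m):
            # Pick the cheapest predecessor; ties go to the smaller start index.
            prev_cost, prev_start = min(
                (acc[i - 1][j - 1], start[i - 1][j - 1]),
                (acc[i - 1][j], start[i - 1][j]),
                (acc[i][j - 1], start[i][j - 1]),
            )
            acc[i][j] = prev_cost + abs(query[i] - target[j])
            start[i][j] = prev_start

    # Free end: the alignment may stop at any target position.
    end = min(range(m), key=lambda j: acc[n - 1][j])
    return acc[n - 1][end], start[n - 1][end], end


cost, a, b = subsequence_dtw([1, 2, 3], [0, 0, 1, 2, 3, 0, 0])
print(cost, a, b)
```

Here `a` and `b` play the role of the indices in \(Y_{a:b}\), using zero-based positions. Ranking several candidate spans, as the article does, would require keeping more than the single best end point.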

\(Recall = TP/(TP+FN)\), \(Precision = TP/(TP+FP)\), \(F1 = 2TP/(2TP+FN+FP)\), where \(TP = hit\), \(FN = |G| - hit\) and \(FP = |P| - hit\).
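The definitions in this note translate directly into a few lines of Python. The sketch below reads \(|G|\) as the number of ground-truth segments and \(|P|\) as the number of predicted segments, which is an interpretation rather than something the note states. The function name `retrieval_scores`, its parameter names, and the choice to return 0.0 when a denominator is zero are assumptions for illustration.

```python
def retrieval_scores(hit, num_ground_truth, num_predicted):
    """Compute (recall, precision, F1) from the hit count.

    hit              -- matched segments (TP)
    num_ground_truth -- |G|, assumed to be the number of ground-truth segments
    num_predicted    -- |P|, assumed to be the number of predicted segments
    """
    tp = hit
    fn = num_ground_truth - hit
    fp = num_predicted - hit

    # Return 0.0 for an empty denominator (a convention chosen for this sketch).
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    f1 = 2 * tp / (2 * tp + fn + fp) if (2 * tp + fn + fp) else 0.0
    return recall, precision, f1


# Hypothetical counts, not taken from the article's results.
recall, precision, f1 = retrieval_scores(hit=8, num_ground_truth=10, num_predicted=16)
print(recall, precision, f1)
```

With these made-up counts, 8 of 10 ground-truth segments are found and 8 of 16 predictions are correct.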

Acknowledgments

We acknowledge Intuitive Surgical Inc., Sunnyvale, CA for facilitating capture of data from the da Vinci Surgical Systems for the JIGSAWS and MISTIC-SL datasets. We would also like to thank Anand Malpani and Madeleine Waldram for the MISTIC-SL dataset collection and processing.

Ethics declarations

Conflicts of interest

The authors declare that they have no conflict of interest.

Ethical standard

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

Funding

The Johns Hopkins Science of Learning Institute provided a research grant to conduct the study that yielded the MISTIC-SL dataset. Y. Gao was supported by the Department of Computer Science, The Johns Hopkins University.

Informed consent

Informed consent was obtained from all individual participants included in the MISTIC-SL study. The JIGSAWS dataset is publicly accessible.

Cite this article

Gao, Y., Vedula, S.S., Lee, G.I. et al. Query-by-example surgical activity detection. Int J CARS 11, 987–996 (2016). https://doi.org/10.1007/s11548-016-1386-3

DOI: https://doi.org/10.1007/s11548-016-1386-3