Abstract

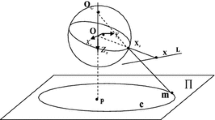

Revolution symmetry is a realistic assumption for modelling the majority of catadioptric and dioptric cameras. In central systems it can be described by a projection model based on radially symmetric distortion. In these systems straight lines are projected onto curves called line-images. These curves have, in general, more than two degrees of freedom, and their shape depends strongly on the particular camera configuration. Therefore, existing line-extraction methods for this kind of omnidirectional camera require the camera calibration, in contrast with the perspective case, where the calibration does not affect the shape of the projected line-image. However, this drawback can be turned into an advantage, because the shape of the line-images can be used for self-calibration. In this paper, we present a novel method to extract line-images in uncalibrated omnidirectional images, which is valid for radially symmetric central systems. In this method we propose using the plumb-line constraint to find closed-form solutions for different types of camera systems, dioptric or catadioptric. The inputs of the proposed method are points belonging to the line-images and their intensity gradient. The gradient information reduces the number of points needed in the minimal solution, improving the result and the robustness of the estimation. The scheme is used in a line-image extraction algorithm to obtain lines from uncalibrated omnidirectional images without any assumption about the scene. The algorithm is evaluated with synthetic and real images, showing good performance. The results of this work have been implemented in an open-source Matlab toolbox for evaluation and research purposes.

Notes

In the case of an orthogonal system the derivative of the function at \({\hat{r}} = {\hat{r}}_{vl}\) is \(\infty \) meaning that this is not the proper point for linearization.

Acknowledgments

This work was supported by the Spanish Project VINEA DPI2012-31781 and FEDER funds. The first author was supported by the FPU program AP2010-3849. Thanks to J. P. Barreto from ISR Coimbra for the set of high-resolution paracatadioptric images.

Additional information

Communicated by C. Schnörr.

Appendices

Appendix 1: Polynomials Describing Line-Images

Some of the line-images in central systems with revolution symmetry can be expressed as polynomials. In this Appendix we give the polynomial description of these line-images for catadioptric, stereographic-fisheye, orthogonal-fisheye and equisolid-fisheye systems.

Catadioptric and Stereographic-Fisheye

where

Orthogonal-Fisheye

where

Equisolid-Fisheye

where

Appendix 2: Computing the Focal Distance in Hypercatadioptric Systems

In this Appendix we describe a way of computing the focal length in hypercatadioptric systems. This allows us to compute both calibration parameters. Instead of using the plumb-line constraint, or a combination of the plumb-line and gradient constraints, the normal \({\mathbf {n}}\) can be computed from a pair of points using the gradient constraint (23).

In practice, the solution is noisy and offers no real advantage over the approach based on the location of two points (11).

The previous constraint (40), which uses two points and their gradients, is enough to define a line-image. Therefore, when a third point is added with (23) in (40), one of the equations can be expressed as a combination of the other two. In practice this means that,

where

This constraint is similar to (13) but using gradients (notice that location information is also used).

As noted before, gradient information is noisier than location information; therefore, there is no advantage in using this constraint instead of (13). However, there is a case in which this constraint is useful. The constraint (13) is solved for each system in Table 3. Most of the devices taken into account have a single calibration parameter defining the distortion. This is the case for the equiangular, stereographic, orthogonal and equisolid systems. The parabolic case is defined by two parameters \(f\) and \(p\), but a coupled parameter \(\hat{r}_{vl} = fp\) can be used instead. In these cases the constraint involving three points can be used to estimate the calibration of the system. However, in the hyperbolic case the two parameters \(\chi \) and \(f\) cannot be coupled, and only one of them can be estimated from this constraint.
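The single-parameter character of the four fisheye systems can be illustrated with a minimal sketch. It assumes the common textbook forms of the radially symmetric projection \(r = h(\phi ; f)\), where \(\phi \) is the angle between the incoming ray and the optical axis; the scale factors used here (for example \(2f\) in the stereographic and equisolid models) are an assumption and may differ from the convention used in the paper. The function names are hypothetical, and the vanishing-line radius is taken, also as an assumption, to be the image radius at \(\phi = \pi /2\).

```python
import math

# Common textbook forms of radially symmetric fisheye projections r = h(phi; f).
# These scale conventions are an assumption, not copied from the paper.
FISHEYE_MODELS = {
    "equiangular": lambda f, phi: f * phi,
    "stereographic": lambda f, phi: 2.0 * f * math.tan(phi / 2.0),
    "orthogonal": lambda f, phi: f * math.sin(phi),
    "equisolid": lambda f, phi: 2.0 * f * math.sin(phi / 2.0),
}


def image_radius(model: str, f: float, phi: float) -> float:
    """Image radius of a ray at angle phi (radians) from the optical axis."""
    return FISHEYE_MODELS[model](f, phi)


def vanishing_line_radius(model: str, f: float) -> float:
    """Image radius of rays orthogonal to the optical axis (phi = pi/2).

    Assumed here to correspond to the vanishing-line radius r_vl.
    """
    return image_radius(model, f, math.pi / 2.0)


if __name__ == "__main__":
    for name in FISHEYE_MODELS:
        print(name, vanishing_line_radius(name, f=1.0))
```

In each of these models, fixing the single value \(f\) fixes the whole radial mapping, which is why one scalar recovered from a three-point constraint is enough to calibrate them.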

By contrast, when simplifying (41) for hypercatadioptric systems we find that the mirror parameter \(\chi \) is not involved:

As a consequence, the focal distance \(f\) can be computed from the gradient orientation and the location of three points lying on a line-image.

Equation (45) can be expressed as a polynomial of degree 8 in \(f\) which is bi-quartic, that is, a quartic in \(f^2\),

where \(\beta _m = \beta _{m,123} + \beta _{m,213} + \beta _{m,312}\) and

This equation has four admissible solutions, because negative values of \(f\) have no physical meaning.
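A bi-quartic equation of this kind can be solved numerically through the substitution \(u = f^2\). The sketch below is illustrative only: the function name, the coefficient ordering (highest power of \(u\) first) and the tolerance are assumptions, and the coefficients themselves would have to be built from the \(\beta _m\) terms, which are not reproduced here. In addition to discarding negative \(f\), it also discards complex and non-positive roots in \(u\), so it may return fewer than four candidates.

```python
import numpy as np


def focal_candidates(coeffs_u, imag_tol=1e-9):
    """Positive real focal-length candidates of a bi-quartic in f.

    coeffs_u: five coefficients of the quartic in u = f**2, highest power
              first (hypothetical ordering, chosen to match numpy.roots).
    """
    roots_u = np.roots(np.asarray(coeffs_u, dtype=float))
    # Keep roots whose imaginary part is numerically zero.
    scale = np.maximum(1.0, np.abs(roots_u))
    is_real = np.abs(roots_u.imag) <= imag_tol * scale
    real_u = roots_u[is_real].real
    # f = sqrt(u) is real and positive only for u > 0.
    positive_u = real_u[real_u > 0.0]
    return np.sort(np.sqrt(positive_u))


if __name__ == "__main__":
    # (u - 4)(u - 9)(u + 1)(u + 2) = u^4 - 10u^3 - u^2 + 82u + 72
    print(focal_candidates([1.0, -10.0, -1.0, 82.0, 72.0]))
```

With noisy gradients several candidates may survive, so in practice an extra criterion, such as consistency across several point triplets, would be needed to select one value.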

Appendix 3: Coefficients for the Equisolid-Fisheye Plumb-Line Equation

In this Appendix we present the coefficients of the 16th-degree polynomial used to solve the equisolid plumb-line equation (19).

where \(\omega _m = \omega _{m,123} + \omega _{m,213} + \omega _{m,312}\) and

Cite this article

Bermudez-Cameo, J., Lopez-Nicolas, G. & Guerrero, J.J. Automatic Line Extraction in Uncalibrated Omnidirectional Cameras with Revolution Symmetry. Int J Comput Vis 114, 16–37 (2015). https://doi.org/10.1007/s11263-014-0792-7

DOI: https://doi.org/10.1007/s11263-014-0792-7