Abstract

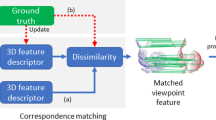

The observation likelihood approximation is a central problem in stochastic human pose tracking. In this article, we present a new approach to quantifying the correspondence between hypothetical and observed human poses in depth images. Our approach is based on segmented point clouds, enabling accurate approximations even under self-occlusion and in the absence of color or texture cues. The segmentation step extracts small regions of high saliency, such as hands or arms, and ensures that the information contained in these regions is not marginalized by larger, less salient regions such as the chest. To enable the rapid, parallel evaluation of many poses, we use a fast ellipsoid body model that handles occlusion and intersection detection in an integrated manner. The proposed approximation function is evaluated on both synthetic and real camera data. In addition, we compare our approximation function against the corresponding function used by a state-of-the-art pose tracker. The approach is suitable for parallelization on GPUs or multicore CPUs.
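The claim that segmentation keeps small, salient regions from being outweighed by large ones can be illustrated with a minimal sketch. It is not the authors' likelihood function: it assumes every observed and hypothesized point already carries a body-part label, scores each observed point with a Gaussian kernel on its nearest-neighbour distance (the kernel and its width `sigma` are assumptions), and weights all segments equally. The function names are hypothetical, and the brute-force nearest-neighbour search is used only for clarity.

```python
import math


def nearest_sq_dist(p, cloud):
    """Squared Euclidean distance from point p to its nearest neighbour in cloud (brute force)."""
    return min(sum((a - b) ** 2 for a, b in zip(p, q)) for q in cloud)


def point_score(p, cloud, sigma):
    """Assumed Gaussian kernel on the nearest-neighbour distance, in (0, 1]."""
    return math.exp(-nearest_sq_dist(p, cloud) / (2.0 * sigma ** 2))


def segmented_likelihood(observed, hypothesis, sigma=0.05):
    """Hypothetical per-segment score.

    observed, hypothesis: dicts mapping a segment label (e.g. "hand") to a list
    of (x, y, z) points. Observed points are matched only against hypothesis
    points of the same segment, each segment yields its mean point score, and
    the segment means are averaged with equal weight.
    """
    scores = []
    for label, obs_pts in observed.items():
        hyp_pts = hypothesis.get(label, [])
        if not obs_pts or not hyp_pts:
            scores.append(0.0)  # segment absent from one side: no support
            continue
        total = sum(point_score(p, hyp_pts, sigma) for p in obs_pts)
        scores.append(total / len(obs_pts))
    return sum(scores) / len(scores) if scores else 0.0


def pooled_likelihood(observed, hypothesis, sigma=0.05):
    """Same kernel without segmentation: all points are pooled, so large segments dominate."""
    obs_pts = [p for pts in observed.values() for p in pts]
    hyp_pts = [q for pts in hypothesis.values() for q in pts]
    if not obs_pts or not hyp_pts:
        return 0.0
    return sum(point_score(p, hyp_pts, sigma) for p in obs_pts) / len(obs_pts)


if __name__ == "__main__":
    # 100 chest points that match exactly, 5 hand points displaced by 0.2 m in z.
    chest = [(x * 0.01, 0.0, 0.0) for x in range(100)]
    hand_obs = [(0.5, 0.5 + i * 0.01, 0.0) for i in range(5)]
    hand_hyp = [(0.5, 0.5 + i * 0.01, 0.2) for i in range(5)]
    observed = {"chest": chest, "hand": hand_obs}
    hypothesis = {"chest": chest, "hand": hand_hyp}
    print("pooled:   ", pooled_likelihood(observed, hypothesis))
    print("segmented:", segmented_likelihood(observed, hypothesis))
```

In this toy case the pooled score stays at about 0.952 because the 100 chest points swamp the 5 hand points, whereas the segmented score falls to about 0.500, so a misplaced hand still lowers the score of the pose hypothesis substantially.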

Notes

The data sets used are available from the author upon request.

About this article

Cite this article

Lehment, N., Kaiser, M. & Rigoll, G. Using Segmented 3D Point Clouds for Accurate Likelihood Approximation in Human Pose Tracking. Int J Comput Vis 101, 482–497 (2013). https://doi.org/10.1007/s11263-012-0557-0

DOI: https://doi.org/10.1007/s11263-012-0557-0