Abstract

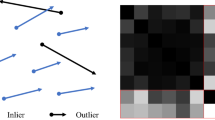

This paper studies the problem of matching two unsynchronized video sequences of the same dynamic scene, recorded by different stationary uncalibrated video cameras. The matching is done both in time and in space, where the spatial matching can be modeled by a homography (for 2D scenarios) or by a fundamental matrix (for 3D scenarios). Our approach is based on matching space-time trajectories of moving objects, in contrast to matching interest points (e.g., corners), as done in regular feature-based image-to-image matching techniques. The sequences are matched in space and time by enforcing consistent matching of all points along corresponding space-time trajectories.

By exploiting the dynamic properties of these space-time trajectories, we obtain sub-frame temporal correspondence (synchronization) between the two video sequences. Furthermore, using trajectories rather than feature points significantly reduces the combinatorial complexity of the spatial point-matching problem when the search space is large. This benefit allows for matching information across sensors in situations that are extremely difficult when only image-to-image matching is used, including: (a) matching under large scale (zoom) differences, (b) very wide baseline matching, and (c) matching across different sensing modalities (e.g., IR and visible-light cameras). We show examples of recovering homographies and fundamental matrices under such conditions.
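To make the idea of joint space-time matching concrete, the following is a minimal sketch rather than the method of the paper, whose details are not given in this abstract. It assumes a single pair of trajectories already known to correspond, a planar (homography) relation, and a brute-force search over candidate temporal offsets. The helper names (`fit_homography`, `sample_trajectory`, `align_trajectories`), the linear interpolation between frames, the plain unnormalized DLT fit, and the synthetic data are all illustrative assumptions. For each candidate offset, the second trajectory is resampled at fractional frame times, a homography is fitted to all point pairs along the trajectory, and the offset with the smallest residual is kept.

```python
import numpy as np

def fit_homography(src, dst):
    """Least-squares homography H with dst ~ H @ src (plain DLT, needs >= 4 points)."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    return vt[-1].reshape(3, 3)  # right singular vector of the smallest singular value

def apply_homography(H, pts):
    """Map N x 2 points through H and convert back from homogeneous coordinates."""
    homog = np.column_stack([pts, np.ones(len(pts))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]

def sample_trajectory(traj, times):
    """Linearly interpolate a per-frame trajectory (N x 2) at fractional frame times."""
    frames = np.arange(len(traj))
    return np.column_stack([np.interp(times, frames, traj[:, k]) for k in range(2)])

def align_trajectories(traj_a, traj_b, offsets):
    """Return (rms_error, offset, H) for the candidate offset with the lowest residual."""
    best = None
    frames_a = np.arange(len(traj_a))
    for dt in offsets:
        t_b = frames_a + dt  # frame i of sequence A is paired with time i + dt in B
        valid = (t_b >= 0) & (t_b <= len(traj_b) - 1)
        if valid.sum() < 4:
            continue
        pts_a = traj_a[valid]
        pts_b = sample_trajectory(traj_b, t_b[valid])
        H = fit_homography(pts_a, pts_b)
        diff = apply_homography(H, pts_a) - pts_b
        err = np.sqrt(np.mean(np.sum(diff ** 2, axis=1)))
        if best is None or err < best[0]:
            best = (err, dt, H)
    return best

# Synthetic example (assumed data): one object moving with non-uniform dynamics.
def world_path(t):
    t = np.asarray(t, dtype=float)
    return np.column_stack([100 + 40 * np.cos(0.05 * t), 80 + 30 * np.sin(0.08 * t)])

H_true = np.array([[1.1, 0.05, 10.0], [-0.03, 0.95, 5.0], [1e-4, 2e-4, 1.0]])
frames = np.arange(200)
traj_a = world_path(frames)                                   # camera A
traj_b = apply_homography(H_true, world_path(frames - 2.5))   # camera B, 2.5 frames late
err, dt_est, H_est = align_trajectories(traj_a, traj_b, np.arange(0.0, 5.25, 0.25))
print(dt_est, err)
```

The synthetic path is deliberately not a constant-speed line or a uniformly sampled parabola: for such trajectories a time shift can be absorbed by the spatial transformation, so the offset would be ambiguous. A practical version would also need robust estimation over many candidate trajectory pairs, which this sketch omits.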

Cite this article

Caspi, Y., Simakov, D. & Irani, M. Feature-Based Sequence-to-Sequence Matching. Int J Comput Vision 68, 53–64 (2006). https://doi.org/10.1007/s11263-005-4842-z

DOI: https://doi.org/10.1007/s11263-005-4842-z