Abstract

Markov chain Monte Carlo (MCMC) algorithms for Bayesian computation for Gaussian process-based models under default parameterisations are slow to converge due to the presence of spatial- and other-induced dependence structures. The main focus of this paper is to study the effect of the assumed spatial correlation structure on the convergence properties of the Gibbs sampler under the default non-centred parameterisation (NCP) and a rival centred parameterisation (CP), for the mean structure of a general multi-process Gaussian spatial model. Our investigation finds answers to many pertinent, but as yet unanswered, questions on the choice between the two. Assuming the covariance parameters to be known, we compare the exact rates of convergence of the two by varying the strength of the spatial correlation, the level of covariance tapering, the scale of the spatially varying covariates, the number of data points, the number and the structure of block updating of the spatial effects and the amount of smoothness assumed in a Matérn covariance function. We also study the effects of introducing differing levels of geometric anisotropy in the spatial model. The case of unknown variance parameters is investigated using well-known MCMC convergence diagnostics. A simulation study and a real-data example on modelling air pollution levels in London are used for illustrations. A generic pattern emerges that the CP is preferable in the presence of more spatial correlation or more information obtained through, for example, additional data points or by increased covariate variability.

1 Introduction

Spatially correlated data are prevalent in many of the physical, biological and environmental sciences. It is natural to model these processes in a Bayesian modelling framework, employing Markov chain Monte Carlo (MCMC) techniques for model fitting and prediction, in particular Gibbs sampling type algorithms (Gelfand and Smith 1990). There is a growing interest among researchers in regression models with spatially varying coefficients (Gelfand et al. 2003). Fitting these highly overparameterised and nonstationary models is challenging and computationally expensive. Latent processes correlated across space produce dense covariance matrices that require calculations of order \(O(n^3)\) to invert, for n spatial locations (Cressie and Johannesson 2008).

For normal linear hierarchical models with independent random effects, it is known that the ratio of the variance parameters determines the convergence rates of the Gibbs samplers (Papaspiliopoulos et al. 2003; Gelfand et al. 1995). When the data precision is relatively high, the centred parameterisation (CP) will yield an efficient Gibbs sampler, and when the data precision is relatively low, the non-centred parameterisation (NCP) is more efficient. Papaspiliopoulos et al. (2003) find that the NCP outperforms the CP for a Cauchy data model with Gaussian latent variables. Papaspiliopoulos and Roberts (2008) further investigate how the model parameterisation and the tail behaviour of the distributions of the data and the latent process all interact to determine the stability of the Gibbs sampler. They look at combinations of Cauchy, double exponential, Gaussian and exponential power distributions for the CP and the NCP. The heuristic remark that follows from this comparison is that the convergence of the CP is quicker when the data model has lighter tails than that of the latent variables, with the opposite scenario favouring the NCP.

There has been little investigation into the influence of correlation across the random effects on the rate of convergence of the Gibbs sampler. Simulation studies conducted by Papaspiliopoulos et al. (2003) on the spatial Poisson-log-Normal model suggest that stronger spatial correlation improves the sampling efficiency of the CP relative to that of the NCP. However, there are several unresolved questions regarding the choice of the CP versus NCP for the mean structure of a general multi-process Gaussian spatial model. Which of the two parameterisations will converge faster when spatial correlation is increased? What happens to the rates of convergence when tapering (Furrer et al. 2006; Kaufman et al. 2008) is introduced? How does the smoothness parameter in an assumed Matérn covariance function influence the rates? In addition, there are other unexplored issues regarding the choice and the number of blocks for the random effects, the influence of the scale of spatially varying covariates and the introduction of different levels of geometric anisotropy.

In this paper, we cast the general spatial model with multiple spatially varying covariates as a three stage normal linear hierarchical model. This model formulation allows us to compute the exact rates of convergence for both CP and NCP for known prior covariance matrices by following Roberts and Sahu (1997). These exact rates of convergence facilitate comparison between the two rival parameterisations, CP and NCP. For an exponential correlation function, convergence for the CP is hastened when spatial correlation is stronger, the opposite being true for the NCP. This is also demonstrated in the context of tapered covariance matrices, geometric anisotropic correlation functions and the regression process associated with a spatially varying covariate. The exponential correlation function is a member of the broader Matérn family (Matérn 1986). When we increase the smoothness parameter, the effect is to slow convergence for both the CP and NCP. In the limiting case of the Gaussian correlation, when the smoothness parameter tends to infinity, both CP and NCP may fail to converge when the sample size is large enough due to the associated singularity of the covariance matrices, and Sect. 3.3 investigates these issues.

When the prior covariance matrices are unknown, the exact convergence rate is intractable and so the CP and NCP are compared using statistics based on well-known convergence diagnostics involving the potential scale reduction factor; see, e.g., Gelman and Rubin (1992). We use a simulation and a real-data example to show that increasing the effective range for an exponential correlation function improves the sampling efficiency of the CP, whereas shortening the effective range helps the NCP.

The following remarks are in order. First, a related approach is to marginalise over the random effects, thus reducing the dimension of the posterior distribution. This approach can be employed when the error structures of the data and the random effects are both assumed to be Gaussian. Marginalised likelihoods are used by Gelfand et al. (2003) for fitting spatially varying coefficient models and by Banerjee et al. (2008) to implement Gaussian predictive process models. However, marginalisation results in a loss of conditional conjugacy of the variance parameters and means that they have to be updated by using Metropolis-type steps, which require difficult and time consuming tuning. On the other hand, the Gibbs sampler for the full model can potentially be completely automated and run without the need for any tuning.

Secondly, it is possible to generate intermediate partially centred parameterisations by considering CP and the NCP as extremes of a family of parameterisations. Indeed, this has been followed up by Bass and Sahu (2016) in a companion paper. Interweaving of the CP and NCP as proposed by Yu and Meng (2011) is particularly useful when the practitioner has little knowledge of the convergence properties of either parameterisation. These authors obtain an upper bound on the convergence rate of the interweaving algorithm based on an intractable maximal correlation between the latent variables under the two parameterisations and to our knowledge the exact convergence rate for the interweaving algorithm has not yet been computed. Lastly, both CP- and NCP-based computation methods are similar in spirit to various data augmentation (DA) schemes (Liu and Wu 1999; van Dyk and Meng 2001; Imai and van Dyk 2005; Filippone et al. 2013). A direct theoretical comparison between the exact convergence rates of the centring parameterisations for Gaussian process (GP)-based models and the DA algorithms is desirable, as has been done by Sahu and Roberts (1999) for the EM algorithm and the Gibbs sampler. However, this requires further methodological development of DA algorithms for the GP models and evaluation of their exact rates of convergence.

The rest of this paper is organized as follows. In Sect. 2, we give details of a general spatial model and obtain expressions for the rates of convergence. A simple example here illustrates the rates and brings out the rivalry between the two parameterisations. Section 3 is devoted to comparison of the rates of convergence under different settings of correlation structures and introduction of geometric anisotropy. It also studies the effect of tapering and the scale of the spatially varying covariates on the rates of convergence. In Sect. 4, we drop the assumption of known precision matrices and use two convergence diagnostics to judge the sampling efficiencies of the CP and NCP methods. Analyses are carried out on simulated data and PM10 concentration data taken from Greater London in 2011. Section 5 contains some concluding remarks. Appendices A and B, respectively, contain the technical details for calculating the rates of convergence and the full conditional distributions needed for Gibbs sampling.

2 General spatial model

2.1 Model specification

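For data observed at a set of locations \(\varvec{s}_{1},\ldots ,\varvec{s}_{n}\), we consider the following normal linear model with spatially varying regression coefficients (Gelfand et al. 2003):

\[ Y(\varvec{s}_{i})=\varvec{x}^{ \mathrm {\scriptscriptstyle T} }(\varvec{s}_{i})\{\varvec{\theta }+\varvec{\beta }(\varvec{s}_{i})\}+\epsilon (\varvec{s}_{i}),\quad i=1,\ldots ,n. \tag{1} \]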

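We model (measurement or micro-scale) errors \(\epsilon (\varvec{s}_{i})\) as independent and normally distributed with mean zero and variance \(\sigma ^2_{\epsilon }\). Spatially indexed observations \(\varvec{Y}=\{Y(\varvec{s}_{1}),\ldots ,Y(\varvec{s}_{n})\}^{ \mathrm {\scriptscriptstyle T} }\) are conditionally independent and normally distributed as

\[ Y(\varvec{s}_{i})\mid \varvec{\theta },\varvec{\beta }(\varvec{s}_{i}),\sigma ^2_{\epsilon }\sim N\left[ \varvec{x}^{ \mathrm {\scriptscriptstyle T} }(\varvec{s}_{i})\{\varvec{\theta }+\varvec{\beta }(\varvec{s}_{i})\},\sigma ^2_{\epsilon }\right] , \]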

where \(\varvec{x}(\varvec{s}_{i})=\{1,x_{1}(\varvec{s}_{i}),\ldots ,x_{p-1}(\varvec{s}_{i})\}^{ \mathrm {\scriptscriptstyle T} }\) is a vector containing covariate information for site \(\varvec{s}_{i}\) and \(\varvec{\theta }=(\theta _{0},\ldots ,\theta _{p-1})^{ \mathrm {\scriptscriptstyle T} }\) is a vector of global regression coefficients. The kth element of \(\varvec{\theta }\) is locally perturbed by a realisation of a zero mean independent Gaussian process, denoted \(\beta _{k}(\varvec{s}_{i})\), which are collected into a vector \(\varvec{\beta }(\varvec{s}_{i})=\{\beta _{0}(\varvec{s}_{i}),\ldots ,\beta _{p-1}(\varvec{s}_{i})\}^{ \mathrm {\scriptscriptstyle T} }\). The n realisations of the Gaussian process associated with the kth covariate are given by \( \varvec{\beta }_{k}=\{\beta _{k}(\varvec{s}_{1}),\ldots ,\beta _{k}(\varvec{s}_{n})\}^{ \mathrm {\scriptscriptstyle T} }\sim N(0,\varvec{\Sigma }_{k})\quad (k=0,\ldots ,p-1), \) where \( \varvec{\Sigma }_{k}=\sigma ^2_{k}\varvec{R}_{k},\text {and} (\varvec{R}_{k})_{ij}=corr\{\beta _{k}(\varvec{s}_{i}),\beta _{k}(\varvec{s}_{j})\}. \) The form of the model given in (1) is known as the NCP. The CP is found by introducing the variables \(\tilde{\beta }_{k}(\varvec{s}_{i})=\theta _{k}+\beta _{k}(\varvec{s}_{i})\). Therefore, \(\tilde{\varvec{\beta }}_{k}=\{\tilde{\beta }_{k}(\varvec{s}_{1}),\ldots ,\tilde{\beta }_{k}(\varvec{s}_{n})\}^{ \mathrm {\scriptscriptstyle T} }\sim N(\theta _{k}\varvec{1},\varvec{\Sigma }_{k})\).

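Global effects \(\varvec{\theta }\) are assumed to be multivariate normal a priori and so we write model (1) in its hierarchically centred form as

\[ \varvec{Y}\mid \tilde{\varvec{\beta }}\sim N(\varvec{X}_{1}\tilde{\varvec{\beta }},\varvec{C}_{1}),\quad \tilde{\varvec{\beta }}\mid \varvec{\theta }\sim N(\varvec{X}_{2}\varvec{\theta },\varvec{C}_{2}),\quad \varvec{\theta }\sim N(\varvec{m},\varvec{C}_{3}), \]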

where \(\varvec{C}_{1}=\sigma ^2_{\epsilon }\varvec{I}\) and \(\varvec{X}_{1}=(\varvec{I},\varvec{D}_{1},\ldots ,\varvec{D}_{p-1})\) is the \(n\times np\) design matrix for the first stage where \(\varvec{D}_{k}\) is a diagonal matrix with entries \(\varvec{x}_{k}=\{x_{k}(\varvec{s}_{1}),\ldots ,x_{k}(\varvec{s}_{n})\}^{ \mathrm {\scriptscriptstyle T} }\). We denote by \(\tilde{\varvec{\beta }}=(\tilde{\varvec{\beta }}^{ \mathrm {\scriptscriptstyle T} }_{0},\ldots ,\tilde{\varvec{\beta }}^{ \mathrm {\scriptscriptstyle T} }_{p-1})^{ \mathrm {\scriptscriptstyle T} }\) the \(np\times 1\) vector of centred, spatially correlated random effects.

The design matrix for the second stage, \(\varvec{X}_{2}\), is a \(np\times p\) block diagonal matrix, the blocks made of vectors of ones of length n. The p processes are assumed independent a priori and so \(\varvec{C}_{2}\) is block diagonal where the kth block is \(\varvec{\Sigma }_{k}\).

2.2 Prior distributions

The global effects \(\varvec{\theta }=(\theta _{0},\theta _{1},\ldots ,\theta _{p-1})^{ \mathrm {\scriptscriptstyle T} }\) are assumed to be independent a priori with the kth element assigned an independent Gaussian prior distribution with mean \(m_{k}\) and variance \(\sigma ^2_{k}v_{k}\), and hence, we write \(\theta _{k}\sim N(m_{k},\sigma ^2_{k}v_{k})\) for \(k=0, \ldots , p-1\). Therefore, \(\varvec{m}=(m_{0},\ldots ,m_{p-1})^{ \mathrm {\scriptscriptstyle T} }\) and \(\varvec{C}_{3}\) is a diagonal matrix with diagonal entries \(\sigma ^2_{k}v_{k}\).

The realisations of the kth non-centred Gaussian process, \(\varvec{\beta }_{k},\) have a prior covariance matrix \(\varvec{\Sigma }_{k}=\sigma ^2_{k}\varvec{R}_{k}\). This prior covariance matrix is shared by the kth centred Gaussian process, \(\tilde{\varvec{\beta }}_{k}.\) The prior distributions for the variance parameters are \( \sigma ^2_{k}\sim IG(a_{k},b_{k})\quad (k=0,\ldots ,p-1),\quad \sigma ^2_{\epsilon }\sim IG(a_{\epsilon },b_{\epsilon }), \) where we write \(X\sim IG(a,b)\) if X has a density proportional to \(x^{-(a+1)}e^{-b/x}\). The entries of the \(\varvec{R}_{k}\) are \( (\varvec{R}_{k})_{ij}=corr\{\beta _{k}(\varvec{s}_{i}),\beta _{k}(\varvec{s}_{j})\}=\rho _{k}(d_{ij};\phi _{k},\nu _{k}) \) where \(d_{ij}=\Vert \varvec{s}_{i}-\varvec{s}_{j}\Vert \) denotes the distance between \(\varvec{s}_{i}\) and \(\varvec{s}_{j}\) and \(\rho _{k}\) is a correlation function from the Matérn family (Handcock and Stein 1993; Matérn 1986).

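The Matérn correlation function for a pair of random variables at sites \(\varvec{s}_{i}\) and \(\varvec{s}_{j}\) is

\[ \rho (d_{ij};\phi ,\nu )=\frac{2^{1-\nu }}{\Gamma (\nu )}\left( \sqrt{2\nu }\,\phi d_{ij}\right) ^{\nu }K_{\nu }\left( \sqrt{2\nu }\,\phi d_{ij}\right) ,\quad \phi>0,\ \nu >0, \tag{2} \]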

where \(\Gamma (\cdot )\) is the gamma function and \(K_{\nu }(\cdot )\) is the modified Bessel function of the second kind of order \(\nu \) (Abramowitz and Stegun 1972, Sect. 9.6). The parameter \(\phi \) controls the rate of decay of correlation between two points as their separation increases. The smoothness of the realised random field is controlled by \(\nu \), as the process realisations are \(\lfloor \nu \rfloor \)-times mean-square differentiable. A number of parameterisations of the Matérn correlation function exist, for examples see Schabenberger and Gotway (2004, Sect. 4.7.2). The form given in (2) is taken from Rasmussen and Williams (2006, Sect. 4.2.1) and its special cases for different values of \(\nu \) are discussed in Sect. 3.2.

2.3 Exact rates of convergence

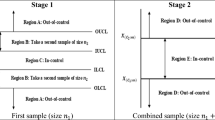

For a Gibbs sampler with Gaussian target distribution with a known precision matrix, we can compute the exact rate of convergence (Roberts and Sahu 1997). The convergence rate \(\lambda \) is bounded in the interval [0, 1], with \(\lambda =0\) indicating immediate convergence and \(\lambda =1\) indicating sub-geometric convergence (Meyn and Tweedie 1993).

Suppose we block update all random effects \(\varvec{\beta }\), or \(\tilde{\varvec{\beta }}\) in the case of the CP, and block update all global effects \(\varvec{\theta }\). Using the results given in Appendix A, we can show that the respective rates of convergence for the CP and the NCP of model (1) are given by the maximum modulus eigenvalue of

and the maximum modulus eigenvalue of

In Sect. 3, we use this result to investigate how the entries of \(\varvec{X}_{1}\), \(\varvec{C}_{1}\) and \(\varvec{C}_{2}\) determine the rate of convergence for the CP and the NCP.
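The following is a minimal numerical sketch of such rates, not a transcription of expressions (3) and (4). It assumes the standard result that a two-block Gibbs sampler on a Gaussian target with joint precision matrix \(\varvec{Q}\) converges at a rate equal to the spectral radius of \(\varvec{Q}_{\theta \theta }^{-1}\varvec{Q}_{\theta \beta }\varvec{Q}_{\beta \beta }^{-1}\varvec{Q}_{\beta \theta }\). The precision blocks are our own derivation from the three-stage hierarchy of Sect. 2.1, and the function names are hypothetical.

```python
import numpy as np


def design_matrices(X):
    """Build X1 = (I, D_1, ..., D_{p-1}) and X2 from an n x p covariate
    matrix X whose first column is a column of ones."""
    n, p = X.shape
    X1 = np.hstack([np.diag(X[:, k]) for k in range(p)])  # n x np
    X2 = np.kron(np.eye(p), np.ones((n, 1)))              # np x p, blocks of ones
    return X1, X2


def two_block_rate(Q_bb, Q_bt, Q_tt):
    """Spectral radius of Q_tt^{-1} Q_tb Q_bb^{-1} Q_bt (assumed rate formula)."""
    B = np.linalg.solve(Q_tt, Q_bt.T @ np.linalg.solve(Q_bb, Q_bt))
    return float(np.max(np.abs(np.linalg.eigvals(B))))


def cp_ncp_rates(X1, X2, C1, C2, C3_inv):
    """Rates for the CP and NCP with known C1, C2 and prior precision C3_inv.

    Passing C3_inv = 0 corresponds to an improper flat prior on theta.
    """
    C2_inv = np.linalg.inv(C2)
    A = X1.T @ np.linalg.solve(C1, X1)   # X1' C1^{-1} X1
    Q_bb = A + C2_inv                    # same block for both parameterisations
    # CP: blocks of the joint precision of (beta_tilde, theta)
    lam_c = two_block_rate(Q_bb, -C2_inv @ X2, X2.T @ C2_inv @ X2 + C3_inv)
    # NCP: blocks of the joint precision of (beta, theta)
    lam_nc = two_block_rate(Q_bb, A @ X2, X2.T @ A @ X2 + C3_inv)
    return lam_c, lam_nc
```

For example, with \(p=1\), independent random effects, \(\sigma ^2_{\epsilon }=1\), \(\sigma ^2_{0}=4\) and a flat prior, `cp_ncp_rates` returns rates close to 0.2 for the CP and 0.8 for the NCP.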

2.4 A simple example

To illustrate how parameterisation affects the posterior correlation of the model parameters and the rate of convergence of the Gibbs sampler, consider the following simple model, taken from Gelfand et al. (1996, Sect. 2). Let

\[ Y_{i}=\theta +\beta _{i}+\epsilon _{i}, \tag{5} \]

with \(\beta _i\sim N(0,\sigma ^2_{\beta })\) and \(\epsilon _{i}\sim N(0,\sigma ^2_{\epsilon })\) independently distributed for all \(i=1,\ldots ,n\). The form of the model given by (5) is the NCP. The CP is found by replacing \(\beta _i\) with \(\tilde{\beta }_i=\beta _i+\theta ,\) and so \( Y_{i}=\tilde{\beta }_i+\epsilon _{i}, \) and \(\tilde{\beta }_i\sim N(\theta ,\sigma ^2_{\beta })\).

Assuming a locally uniform prior distribution for \(\theta ,\) and that \(\sigma ^2_{\beta }\) and \(\sigma ^2_{\epsilon }\) are known, Papaspiliopoulos et al. (2003) show that the exact convergence rates of the CP and the NCP of model (5) are

\[ \lambda _{c}=\frac{\sigma ^2_{\epsilon }}{\sigma ^2_{\beta }+\sigma ^2_{\epsilon }} \]

and \(\lambda _{nc}=1-\lambda _{c}\).

The rates of convergence highlight two important features of the sampling efficiency of the CP and the NCP. Firstly, that the ratio of the variance parameters is an important quantity in determining which parameterisation should be employed for model fitting, and secondly, that a change in variance ratio has opposing effects on each of the parameterisations.
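As a small illustration of this trade-off, the sketch below evaluates the rates for the simple model using the closed form \(\lambda _{c}=\sigma ^2_{\epsilon }/(\sigma ^2_{\beta }+\sigma ^2_{\epsilon })\), and cross-checks it against a direct computation from the posterior precision blocks of \((\tilde{\varvec{\beta }},\theta )\). The precision blocks and function names are our own assumptions for illustration.

```python
import numpy as np


def simple_rates(sigma2_beta, sigma2_eps):
    """Closed-form CP and NCP rates for the simple model with a flat prior on theta."""
    lam_c = sigma2_eps / (sigma2_beta + sigma2_eps)
    return lam_c, 1.0 - lam_c


def numeric_cp_rate(n, sigma2_beta, sigma2_eps):
    """CP rate computed from the joint precision of (beta_tilde, theta)."""
    Q_bb = (1.0 / sigma2_eps + 1.0 / sigma2_beta) * np.eye(n)
    Q_bt = -np.ones((n, 1)) / sigma2_beta
    Q_tt = np.array([[n / sigma2_beta]])
    B = np.linalg.solve(Q_tt, Q_bt.T @ np.linalg.solve(Q_bb, Q_bt))
    return float(B[0, 0])


if __name__ == "__main__":
    # A larger variance ratio favours the CP; a smaller one favours the NCP.
    for ratio in (0.01, 0.1, 1.0, 10.0, 100.0):
        lam_c, lam_nc = simple_rates(ratio, 1.0)
        print(f"ratio={ratio:7.2f}  CP={lam_c:.4f}  NCP={lam_nc:.4f}")
```

Under these assumptions the closed form does not depend on \(n\), which the numerical check confirms for any number of observations.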

3 CP versus NCP

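In this section, we investigate how the rates of convergence are affected by the variance parameters and the correlation structure of the spatial processes. To focus on these relationships, we let \(p=1\) in model (1), giving us the following hierarchically centred model:

\[ \varvec{Y}\mid \tilde{\varvec{\beta }}_{0}\sim N(\tilde{\varvec{\beta }}_{0},\sigma ^2_{\epsilon }\varvec{I}),\quad \tilde{\varvec{\beta }}_{0}\mid \theta _{0}\sim N(\theta _{0}\varvec{1},\sigma ^2_{0}\varvec{R}_{0}),\quad \theta _{0}\sim N(m_{0},\sigma ^2_{0}v_{0}). \tag{6} \]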

It follows from Eqs. (3) and (4) that the respective rates of convergence for the CP and the NCP of model (6) are

and

For independent random effects, the ratio of variance parameters is important in determining the rates of convergence and so we introduce the quantity \(\delta _{0}=\sigma ^2_{0}/\sigma ^2_{\epsilon }\). In Sects. 3.1–3.5, we use expressions (7) and (8) to compare the convergence rates for the CP and the NCP for different values of \(\delta _{0}\) and forms of \(\varvec{R}_{0}\). In Sect. 3.6, we alter the model to include a covariate.

In Sects. 3.2–3.5, we confine ourselves to the case \(1/v_{0}=0\), such that we have an improper prior distribution for \(\theta _{0}\). This serves to clarify the effects of the other parameters on the convergence rates. To see the effect that the prior precision of \(\theta _{0}\) has on the convergence rate, consider two different values for \(v_{0}\), namely \(v_{0,1}\) and \(v_{0,2}\), with corresponding rates of convergence \(\lambda _{c,1}\), \(\lambda _{nc,1}\), \(\lambda _{c,2}\) and \(\lambda _{nc,2}\). Comparing the ratio of the convergence rates for the two different priors, we have

and clearly if \(v_{0,1}<v_{0,2}\) then \(\lambda _{c,1}<\lambda _{c,2}\). The same result can be seen for the NCP where

Therefore, a more precise prior distribution for \(\theta _{0}\) will hasten convergence for both the CP and the NCP.

3.1 Convergence rates for equi-correlated random effects

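To illustrate how changing the strength of correlation between the random effects influences the convergence rates of the different parameterisations, we begin by assuming an equi-correlation model. We suppose that

\[ (\varvec{R}_{0})_{ij}={\left\{ \begin{array}{ll} 1 &amp; \text {if } i=j,\\ \rho &amp; \text {if } i\ne j, \end{array}\right. } \tag{9} \]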

for \(0\le \rho <1\). We restrict \(\rho \) to take only non-negative values, as is usual in spatial data modelling. Roberts and Sahu (1997) consider a similar structure for the dispersion matrix of a Gaussian target distribution but do not include a global mean parameter as we do here.

To assist in the computation of convergence rates \(\lambda _{c}\) and \(\lambda _{nc}\) we make use of the following two matrix inversion identities. The first is the Sherman-Morrison-Woodbury formula, see for example Harville (1997, p. 423). Let \(\varvec{N}\) be an \(n\times n\) matrix, \(\varvec{U}\) be an \(n\times m\) matrix, \(\varvec{M}\) be an \(m\times m\) matrix and \(\varvec{V}\) be an \(m\times n\) matrix, then

\[ (\varvec{N}+\varvec{U}\varvec{M}\varvec{V})^{-1}=\varvec{N}^{-1}-\varvec{N}^{-1}\varvec{U}(\varvec{M}^{-1}+\varvec{V}\varvec{N}^{-1}\varvec{U})^{-1}\varvec{V}\varvec{N}^{-1}. \tag{10} \]

The second result takes \(\varvec{I}\) to be the \(n\times n\) identity matrix and \(\varvec{J}\) the \(n\times n\) matrix of ones, then

\[ (a\varvec{I}+b\varvec{J})^{-1}=\frac{1}{a}\left( \varvec{I}-\frac{b}{a+nb}\varvec{J}\right) \tag{11} \]

for constants \(a>0\), \(b\ne -(a/n).\) This can be easily checked by direct multiplication and noting that

\[ \varvec{J}^{2}=n\varvec{J}. \]

Note also that identity (11) follows from (10) if we set \(\varvec{N}=a\varvec{I}\), \(\varvec{U}=\varvec{1}\), \(\varvec{M}=b\varvec{I}\) and \(\varvec{V}=\varvec{1}^\prime \).
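Both identities are easy to check numerically. The sketch below assumes the usual statement of the Sherman-Morrison-Woodbury formula, \((\varvec{N}+\varvec{U}\varvec{M}\varvec{V})^{-1}=\varvec{N}^{-1}-\varvec{N}^{-1}\varvec{U}(\varvec{M}^{-1}+\varvec{V}\varvec{N}^{-1}\varvec{U})^{-1}\varvec{V}\varvec{N}^{-1}\), and the closed form \((a\varvec{I}+b\varvec{J})^{-1}=a^{-1}\{\varvec{I}-b(a+nb)^{-1}\varvec{J}\}\); the function names are illustrative only.

```python
import numpy as np


def woodbury_inverse(N, U, M, V):
    """Inverse of N + U M V via the Sherman-Morrison-Woodbury formula."""
    N_inv = np.linalg.inv(N)
    inner = np.linalg.inv(np.linalg.inv(M) + V @ N_inv @ U)
    return N_inv - N_inv @ U @ inner @ V @ N_inv


def inv_aI_plus_bJ(a, b, n):
    """Closed-form inverse of a*I + b*J, where J is the n x n matrix of ones."""
    return (np.eye(n) - (b / (a + n * b)) * np.ones((n, n))) / a


if __name__ == "__main__":
    n, a, b = 5, 2.0, 0.7
    one = np.ones((n, 1))
    # Setting N = a*I, U = 1, M = b and V = 1' reduces the first identity to the second.
    lhs = woodbury_inverse(a * np.eye(n), one, np.array([[b]]), one.T)
    print(np.allclose(lhs, inv_aI_plus_bJ(a, b, n)))
```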

To compute the convergence rates given in (7) and (8) we must invert matrices \(\sigma ^2_{0}\varvec{R}_{0}\) and \(\displaystyle (1/\sigma ^{2}_{\epsilon }\varvec{I}+1/\sigma ^{2}_{0}\varvec{R}_{0}^{-1})\). Using Eq. (10) we see that

For \(\varvec{R}_{0}\) defined by (9), we write

and

and we can invert the matrices given in (12) and (13) using Eq. (11).

After some cancellation we find the convergence rates for the CP and the NCP to be

and

If we assume an improper prior distribution, achieved by letting \(1/v_{0}=0\), then \(\lambda _{nc}=1-\lambda _{c}\), but otherwise equality does not hold. Note also that when \(\rho =0\), we recover the rates for the independent random effects model, see Sect. 2.4.

It is useful to re-write Eqs. (14) and (15) as

and

respectively. Consider first the case \(1/v_{0}=0\) and recall that a lower rate indicates faster convergence. For the CP, increasing any of \(\delta _{0}\), \(\rho \) or n speeds up convergence. Increasing any one of these quantities has the opposite effect on the NCP. When \(1/v_{0}\ne 0\), \(\lambda _{nc}\) behaves as in the improper case. The same is true of \(\lambda _{c}\) with respect to \(\delta _{0}\) and \(\rho \), but \(\lambda _{c}\) is no longer monotonic in n.
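These statements can be checked numerically. The sketch below computes the two rates for the \(p=1\) model directly from the posterior precision blocks (our own derivation, assuming the two-block Gaussian rate formula of Roberts and Sahu 1997), rather than from the closed-form expressions (14) and (15). Under \(1/v_{0}=0\), our simplification gives \(\lambda _{c}=1/\{1+\delta _{0}(1-\rho +n\rho )\}\), which is used here only as a cross-check. All function names are hypothetical.

```python
import numpy as np


def equicorr(n, rho):
    """Equi-correlation matrix: ones on the diagonal, rho elsewhere."""
    return (1.0 - rho) * np.eye(n) + rho * np.ones((n, n))


def rates_p1(R0, sigma2_0, sigma2_eps, inv_v0=0.0):
    """CP and NCP rates for the p = 1 model; inv_v0 = 1/v_0 (0 gives a flat prior)."""
    n = R0.shape[0]
    one = np.ones(n)
    S_inv = np.linalg.inv(sigma2_0 * R0)
    Q_bb = np.eye(n) / sigma2_eps + S_inv
    prior_prec = inv_v0 / sigma2_0                    # precision of N(m_0, sigma2_0 v_0)
    a = S_inv @ one                                   # CP cross-precision (up to sign)
    lam_c = a @ np.linalg.solve(Q_bb, a) / (one @ a + prior_prec)
    b = one / sigma2_eps                              # NCP cross-precision
    lam_nc = b @ np.linalg.solve(Q_bb, b) / (n / sigma2_eps + prior_prec)
    return float(lam_c), float(lam_nc)


if __name__ == "__main__":
    for rho in (0.0, 0.3, 0.6, 0.9):
        lam_c, lam_nc = rates_p1(equicorr(40, rho), 1.0, 1.0)
        print(f"rho={rho:.1f}  CP={lam_c:.4f}  NCP={lam_nc:.4f}")
```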

3.2 Effect of spatial correlation

In spatial modelling, the correlation between two realisations of a latent process is usually assumed to be a function of their separation. Here, we consider exponential correlation functions, which are used widely in applications (Sahu et al. 2010; Berrocal et al. 2010; Sahu et al. 2007; Huerta et al. 2004). We have that

\[ (\varvec{R}_{0})_{ij}=\exp (-\phi _{0}d_{ij}), \]

where \(\phi _{0}\) is the spatial decay parameter. The exponential correlation function belongs to the Matérn family and is found by letting \(\nu =0.5\) in Eq. (2). To see this we can use the following results taken from Schabenberger and Gotway (2004, Sect. 4.3.2)

We characterise the strength of correlation in terms of the effective range, which we define as the distance, \(d_{0}\), such that \(\displaystyle corr\{\beta _{0}(\varvec{s}_{i}),\beta _{0}(\varvec{s}_{j})\}=0.05\). For an exponential correlation function, we have that

\[ d_{0}=-\log (0.05)/\phi _{0}\approx 3/\phi _{0}. \]

We cannot compute explicit expressions for the entries of \(\varvec{R}_{0}^{-1}\), and hence, we cannot find expressions for the convergence rate in terms of \(\phi _{0}\). Therefore, we use a simulation approach. We take the unit square to be the spatial domain, randomly selecting \(n=40\) locations which will be used throughout the rest of this section.

We consider five values of the variance ratio \(\delta _{0}\) and vary the strength of spatial correlation by controlling the effective range \(d_{0}=-\log (0.05)/\phi _{0}\). For each value of \(\delta _{0}\), we compute the convergence rates given in Eqs. (7) and (8) for effective ranges between zero, implying no spatial correlation, and \(\surd {2}\), the maximum possible separation of two points in the domain.

Convergence rates are plotted against the effective range for the CP and the NCP in Fig. 1, where again a lower rate indicates faster convergence. For a fixed \(d_{0}\), we can see that increasing \(\delta _{0}\) decreases the convergence rate for the CP, but increases it for the NCP, as we might expect given the results for independent random effects. We also observe that for a fixed level of \(\delta _{0}\), increasing \(d_{0}\), and thus the strength of correlation between the random effects, decreases the convergence rate for the CP and increases it for the NCP. Hence, the complementary nature of the CP and NCP is maintained when we vary the strength of exponential correlation across the random effects. However, the two rates do not add to 1, unlike in the simpler case noted just after Eq. (15).

The convergence rates depend on the set of sampling locations. For a different set of locations, the numerical values of the rates change, but the overall picture does not: increasing \(\delta _{0}\) or \(d_{0}\) quickens convergence for the CP and slows convergence for the NCP.
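The simulation described in this section can be sketched as follows. The code draws 40 random locations in the unit square, builds an exponential correlation matrix for a given effective range, and computes the CP and NCP rates for \(p=1\) and \(1/v_{0}=0\) from the posterior precision blocks. The rate formula is our own derivation under the two-block Gaussian result, the seed and grid are arbitrary, and the five \(\delta _{0}\) values are those listed in Sect. 3.3; none of this reproduces the authors' exact locations or code.

```python
import numpy as np


def exp_corr(coords, d0):
    """Exponential correlation matrix with effective range d0 (correlation 0.05 at d0)."""
    phi0 = -np.log(0.05) / d0
    diff = coords[:, None, :] - coords[None, :, :]
    return np.exp(-phi0 * np.sqrt((diff ** 2).sum(axis=-1)))


def rates_p1(R0, delta0):
    """CP and NCP rates for p = 1 with a flat prior on theta_0.

    The rates depend on the variances only through delta0, so we set
    sigma2_eps = 1 and sigma2_0 = delta0.
    """
    n = R0.shape[0]
    one = np.ones(n)
    S_inv = np.linalg.inv(delta0 * R0)
    Q_bb = np.eye(n) + S_inv
    a = S_inv @ one
    lam_c = a @ np.linalg.solve(Q_bb, a) / (one @ a)
    lam_nc = one @ np.linalg.solve(Q_bb, one) / n
    return float(lam_c), float(lam_nc)


def rate_curves(coords, deltas, ranges):
    """Return {delta0: [(d0, lam_c, lam_nc), ...]} over a grid of effective ranges."""
    return {
        delta0: [(d0, *rates_p1(exp_corr(coords, d0), delta0)) for d0 in ranges]
        for delta0 in deltas
    }


if __name__ == "__main__":
    rng = np.random.default_rng(2024)          # arbitrary seed
    coords = rng.uniform(size=(40, 2))         # n = 40 sites in the unit square
    ranges = np.linspace(0.05, np.sqrt(2.0), 8)
    for delta0, rows in rate_curves(coords, (0.01, 0.1, 1, 10, 100), ranges).items():
        print(delta0, [(round(c, 3), round(nc, 3)) for _, c, nc in rows])
```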

3.3 Effect of the smoothness parameter in the Matérn correlation function

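In this section, we consider different correlation functions from the Matérn family, see Eq. (2) for the general form. When \(\nu \) is a half integer, such that \(\nu =b+0.5\) for \(b=0,1,2,\ldots ,\) the correlation function takes on a simpler form. Taken from Rasmussen and Williams (2006, Sect. 4.2), we have that

\[ \rho (d_{ij};\phi ,\nu =b+0.5)=\exp \left( -\sqrt{2\nu }\,\phi d_{ij}\right) \frac{\Gamma (b+1)}{\Gamma (2b+1)}\sum _{i=0}^{b}\frac{(b+i)!}{i!(b-i)!}\left( \sqrt{8\nu }\,\phi d_{ij}\right) ^{b-i}. \]

In particular, when \(\nu =1.5\), the correlation function is

\[ \rho (d_{ij};\phi )=\left( 1+\sqrt{3}\,\phi d_{ij}\right) \exp \left( -\sqrt{3}\,\phi d_{ij}\right) , \tag{18} \]

and when \(\nu =2.5\), it becomes

\[ \rho (d_{ij};\phi )=\left( 1+\sqrt{5}\,\phi d_{ij}+\frac{5}{3}\phi ^{2}d_{ij}^{2}\right) \exp \left( -\sqrt{5}\,\phi d_{ij}\right) . \tag{19} \]

As \(\nu \rightarrow \infty \), the correlation function goes to

\[ \rho (d_{ij};\phi )=\exp \left( -\phi ^{2}d_{ij}^{2}/2\right) , \]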

which is sometimes known as the squared exponential or Gaussian correlation function.
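The half-integer members can be coded without Bessel functions. The sketch below assumes the Rasmussen and Williams (2006, Sect. 4.2) form with \(\phi \) playing the role of an inverse length scale, which is consistent with the effective ranges \(-\log (0.05)/\phi _{0}\) and \(\sqrt{-2\log (0.05)}/\phi _{0}\) quoted in this paper for the exponential and Gaussian cases; the function names are illustrative.

```python
import numpy as np
from math import factorial, sqrt


def matern_half_integer(d, phi, b):
    """Matern correlation for nu = b + 0.5, with b = 0, 1, 2, ..."""
    nu = b + 0.5
    x = phi * np.asarray(d, dtype=float)
    # Polynomial part of the half-integer formula
    poly = sum(
        factorial(b + i) / (factorial(i) * factorial(b - i)) * (sqrt(8.0 * nu) * x) ** (b - i)
        for i in range(b + 1)
    )
    return np.exp(-sqrt(2.0 * nu) * x) * factorial(b) / factorial(2 * b) * poly


def gaussian_corr(d, phi):
    """Limiting (squared exponential) case as nu tends to infinity."""
    x = phi * np.asarray(d, dtype=float)
    return np.exp(-0.5 * x ** 2)


if __name__ == "__main__":
    d = np.linspace(0.0, 1.0, 5)
    for b in (0, 1, 2):
        print(f"nu={b + 0.5}:", np.round(matern_half_integer(d, 3.0, b), 4))
    print("Gaussian:", np.round(gaussian_corr(d, 3.0), 4))
```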

Fig. 2 Convergence rates for the CP of model (6) for different values of \(\nu \). a \(\delta _{0}=0.01\), b \(\delta _{0}=0.1\), c \(\delta _{0}=1\), d \(\delta _{0}=10\), e \(\delta _{0}=100\)

Fig. 3 Convergence rates for the NCP of model (6) for different values of \(\nu \). a \(\delta _{0}=0.01\), b \(\delta _{0}=0.1\), c \(\delta _{0}=1\), d \(\delta _{0}=10\), e \(\delta _{0}=100\)

We consider model (6) and compare the convergence rates for the CP and the NCP for the exponential, \(\nu =1.5\), \(\nu =2.5\) and Gaussian correlation functions. In Sect. 3.2, the strength of correlation is considered in terms of the effective range, which for the exponential correlation function is \(-\log (0.05)/\phi _{0}\). In terms of \(\phi _{0}\), the effective range for the Gaussian correlation function is given by \(\sqrt{-2\log (0.05)}/\phi _{0}\). For other members of the Matérn class, there is no closed form expression for the effective range. Therefore, for the cases where \(\nu \) is equal to 1.5 and 2.5, we take an effective range \(d_{0}\) and search for the value of \(\phi _{0}\) that solves

\[ \rho (d_{0},\phi _{0})=0.05, \tag{20} \]

where \(\rho (d_{0},\phi _{0})\) is given by functions (18) and (19) respectively.
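One simple way to carry out this search is bisection, since each correlation function is decreasing in \(\phi _{0}\) for a fixed distance. The sketch below is an assumption about how the search could be done, not the authors' procedure; it uses the \(\nu =1.5\) and \(\nu =2.5\) forms from Rasmussen and Williams (2006) and bisects on a logarithmic scale between illustrative bounds.

```python
import math


def matern15(d, phi):
    """Matern correlation with nu = 1.5."""
    x = math.sqrt(3.0) * phi * d
    return (1.0 + x) * math.exp(-x)


def matern25(d, phi):
    """Matern correlation with nu = 2.5."""
    x = math.sqrt(5.0) * phi * d
    return (1.0 + x + x * x / 3.0) * math.exp(-x)


def solve_phi(corr, d0, target=0.05, lo=1e-8, hi=1e8, iters=200):
    """Find phi such that corr(d0, phi) = target by geometric bisection.

    Assumes corr is decreasing in phi and that the root lies in (lo, hi).
    """
    for _ in range(iters):
        mid = math.sqrt(lo * hi)
        if corr(d0, mid) > target:
            lo = mid          # correlation still too high: decay must be faster
        else:
            hi = mid
    return math.sqrt(lo * hi)


if __name__ == "__main__":
    for d0 in (0.25, 0.5, 1.0):
        print(d0, solve_phi(matern15, d0), solve_phi(matern25, d0))
```

Applying `solve_phi` to the exponential correlation function recovers the closed form \(-\log (0.05)/d_{0}\), which gives a quick sanity check of the search.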

Convergence rates are computed for each parameterisation for effective ranges between 0 and \(\surd {2}\) and for five values of \(\delta _{0}=0.01,0.1,1,10,100\). Recall that, as in Sect. 3.2, we take the unit square to be the spatial domain and randomly select 40 locations. The results for the CP are given in Fig. 2. We see that for fixed \(\nu \) and \(\phi _{0}\), increasing \(\delta _{0}\) reduces the convergence rate. We also see that for fixed \(\phi _{0}\) and \(\delta _{0}\), the convergence rate increases with \(\nu \), except for the \(\delta _{0}=0.1\) case where the ordering only becomes apparent as the effective range is increased. Unlike in the case of \(\nu =0.5\), increasing the effective range does not reduce the convergence rate for other values of \(\nu \).

The equivalent plot for the NCP is given in Fig. 3. For fixed \(\nu \) and \(\phi _{0}\), increasing \(\delta _{0}\) increases the convergence rate. For fixed \(\phi _{0}\) and \(\delta _{0}\), increasing \(\nu \) slows convergence as it does for the CP. The convergence rate increases monotonically with the effective range for all four correlation functions. We also note that convergence rates for the NCP are not as sensitive to changes in \(\nu \) as they are for the CP.

Figures 2 and 3 show that increasing \(\nu \), the smoothness parameter, leads to slower convergence for both the CP and NCP, which contradicts the complementary behaviour of the rates of convergence seen in the previous two subsections. To understand this further, we proceed as follows. The rates of convergence, as obtained in (7) and (8), depend on \(\varvec{R}_{0}^{-1}\), which is guaranteed to be positive definite for any n when a member of the Matérn class of correlation functions is adopted. However, in practical numerical calculations with a large value of n (much greater than the 40 used in Figs. 2 and 3), \(\varvec{R}_{0}\) becomes increasingly close to singular in the presence of a high level of spatial correlation and smoothness. The minimum value of n for which this near singularity is observed depends on the minimum distance between the \(\left( {\begin{array}{c}n\\ 2\end{array}}\right) \) pairs of the n locations and the values of the parameters \(\phi \) and \(\nu \) in the Matérn correlation function. To investigate this minimum value of n, we randomly sample 500 points in the unit square where the minimum Euclidean distance between any two points is greater than a threshold value, which is taken to be 0.01. This ensures that the singularity is not caused by very closely located points in the sample.

Next we find the minimum value of n for which \(\varvec{R}_{0}\) is approximately singular, viz., the value of the determinant is less than \(10^{-7}\), for a given value of \(\nu \) and a given value of \(d_0\). The value of \(\phi _0\) is chosen by solving (20) when \(\nu =1.5\) and 2.5, by \(\phi _0 = -\log (0.05)/d_{0}\) when \(\nu =0.5\), i.e. the case of the exponential correlation function, and by \(\phi _0 = \sqrt{-2\log (0.05)}/d_{0}\) in the case of the Gaussian correlation function, when \(\nu \rightarrow \infty \). The minimum value is plotted in Fig. 4 against \(d_0\) for all four correlation functions considered here. As expected, for smaller values of \(d_0\), tending to the case of zero spatial correlation, the minimum value of n becomes very large. Increasing spatial correlation, as effected by increasing \(d_0\), leads to a smaller value of n at which near singularity of \(\varvec{R}_{0}\) is reached. Interestingly, increasing the smoothness through larger values of \(\nu \) hastens this, except in the case of the exponential correlation function. This is due to the nonlinear effect of \(\nu \) and \(\phi \) on the Matérn correlation function.
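The search for the minimum n can be sketched as below. The sampling scheme (simple rejection sampling), the use of the log-determinant in place of the raw determinant for numerical stability, and all function names are our own assumptions; only the 0.01 distance threshold and the \(10^{-7}\) determinant threshold come from the text.

```python
import numpy as np


def pairwise_dist(coords):
    """Euclidean distance matrix for an (n, 2) array of locations."""
    diff = coords[:, None, :] - coords[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))


def sample_min_dist(n, min_dist, rng, max_tries=100000):
    """Rejection-sample n points in the unit square, all pairs further apart than min_dist."""
    pts = []
    tries = 0
    while len(pts) < n and tries < max_tries:
        tries += 1
        cand = rng.uniform(size=2)
        if all(np.hypot(*(cand - p)) > min_dist for p in pts):
            pts.append(cand)
    return np.array(pts)


def min_n_singular(coords, corr, tol=1e-7):
    """Smallest n such that det(R_0) < tol for the first n locations, else None.

    corr maps a distance matrix to a correlation matrix.
    """
    R = corr(pairwise_dist(coords))
    for n in range(2, len(coords) + 1):
        sign, logdet = np.linalg.slogdet(R[:n, :n])
        if sign <= 0 or logdet < np.log(tol):
            return n
    return None


if __name__ == "__main__":
    rng = np.random.default_rng(0)                 # arbitrary seed
    pts = sample_min_dist(500, 0.01, rng)
    for d0 in (0.1, 0.5, 1.0):
        phi_e = -np.log(0.05) / d0
        phi_g = np.sqrt(-2.0 * np.log(0.05)) / d0
        print(d0,
              min_n_singular(pts, lambda d: np.exp(-phi_e * d)),
              min_n_singular(pts, lambda d: np.exp(-0.5 * (phi_g * d) ** 2)))
```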

The near singularity in the limiting Gaussian case is related to the near predictability of the associated spatial processes. In the limiting Gaussian case, it is possible to predict \(Y(\varvec{s}^\prime )\) for any \(\varvec{s}^\prime \) in the same spatial domain upon observing the same spatial process \(Y(\varvec{s})\) at any location \(\varvec{s}\), see, e.g., Banerjee et al. (2015, p. 62). Such deterministic behaviour leads to the near singularity of covariance matrices, which in turn leads to non-convergence. Indeed, Stein (1999, p. 70) explicitly recommends not using the Gaussian correlation function to model physical processes. The investigation here confirms this view by pointing to non-convergence of the MCMC fitting algorithms for smooth and highly correlated processes for large values of n. This also allows us to conclude that increasing n in an infill asymptotic sense (Zhang 2004), in the presence of high spatial correlation, will lead to near singularity of the covariance matrices, which in turn will lead to non-convergence under either of the two parameterisations. This asymptotic result, however, does not spell disaster for the CP and NCP in practical problems where the strength of the spatial correlation is such that the resulting effective range (\(d_0\) in the exponential case) is significantly less than the maximum distance (\(\sqrt{2}\) in the unit square) between any two points in a closed spatial domain. Such a situation will allow modelling of a reasonably large number of spatial observations (e.g. low 100s) using the centring parameterisations.

3.4 Effect of introducing geometric anisotropy

The class of Matérn correlation functions is isotropic. This means that the correlation between the random variables at any two points, \(\varvec{s}_{i}\) and \(\varvec{s}_{j}\), depends on the distance between them \(d_{ij}=\Vert \varvec{s}_{i}-\varvec{s}_{j}\Vert \) (and parameters \(\phi \) and \(\nu \)), and hence, the contours of iso-correlation are circular. The assumption that spatial dependence is the same in all directions is not always appropriate and therefore we may seek an anisotropic specification for the correlation structure.

Anisotropic correlation functions are widely used and have been employed to model, for example, scallop abundance in the North Atlantic (Ecker and Gelfand 1999), extreme precipitation in Western Australia (Apputhurai and Stephenson 2013) and the phenotypic traits of trees in northern Sweden (Banerjee et al. 2010).

Fig. 5 Correlation surface for \(\beta (\varvec{s}^*)\), \(\varvec{s}^*=(0.5,0.5)^{ \mathrm {\scriptscriptstyle T} }\), for exponential anisotropic correlation functions with transformation matrix \(\varvec{G}\) given in (22). Panels are given an alpha-numeric label. Numbers 1, 2 and 3 refer to the three values of \(\alpha =0.5,1,2\). Letters (a), (b), and (c) refer to the three values of \(\psi =0,\pi /4,\pi /2\)

Different forms of anisotropy exist, see Zimmerman (1993), but we consider only geometric anisotropy. Geometric anisotropic correlation functions can be constructed from isotropic correlation functions by taking a linear transformation of the lag vector \(\varvec{s}_{i}-\varvec{s}_{j}\). Let

\[ d^{*}_{ij}=\Vert \varvec{G}(\varvec{s}_{i}-\varvec{s}_{j})\Vert , \tag{21} \]

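where \(\varvec{G}\) is a \(2\times 2\) transformation matrix. In Euclidean space, (21) is equivalent to

\[ d^{*}_{ij}=\sqrt{(\varvec{s}_{i}-\varvec{s}_{j})^{ \mathrm {\scriptscriptstyle T} }\varvec{H}(\varvec{s}_{i}-\varvec{s}_{j})}, \]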

where \(\varvec{H}=\varvec{G}^{ \mathrm {\scriptscriptstyle T} }\varvec{G}\). The matrix \(\varvec{H}\) must be positive definite, i.e. \(d^{*}_{ij}>0\) for \(\varvec{s}_{i}\ne \varvec{s}_{j}\), which is ensured if \(\varvec{G}\) is non-singular, see Harville (1997, Corollary 14.2.14). By replacing \(d_{ij}\) with \(d^{*}_{ij}\) in (2), we have a geometric anisotropic Matérn correlation function with elliptical contours of iso-correlation.

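As an example, we follow Schabenberger and Gotway (2004, Chap. 4) and let

\[ \varvec{G}=\begin{pmatrix} \alpha & 0 \\ 0 & 1 \end{pmatrix}\begin{pmatrix} \cos \psi & \sin \psi \\ -\sin \psi & \cos \psi \end{pmatrix}, \tag{22} \]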

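and hence, the axes are rotated anti-clockwise through an angle \(\psi \) and then stretched in the direction of the x-axis by a factor \(1/\alpha >0\). The determinant of \(\varvec{G}\) is \(\alpha \) and so it is non-singular for \(\alpha \ne 0\), and hence, \(\varvec{H}\) is positive definite as required. For \(\varvec{G}\) given in (22), we have

\[ \varvec{H}=\begin{pmatrix} \alpha ^2\cos ^2\psi +\sin ^2\psi & (\alpha ^2-1)\sin \psi \cos \psi \\ (\alpha ^2-1)\sin \psi \cos \psi & \alpha ^2\sin ^2\psi +\cos ^2\psi \end{pmatrix}. \]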

If \(\alpha =1\), then \(\varvec{H}\) is the identity matrix and isotropy is recovered. If \(\psi =0\pm 2\pi m\), \(m=1,2,\ldots \), then

\[ \varvec{H}=\begin{pmatrix} \alpha ^2 & 0 \\ 0 & 1 \end{pmatrix}, \]

which is equivalent to just a stretch of the x-axis by \(1/\alpha \).
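The properties of \(\varvec{G}\) and \(\varvec{H}\) stated above can be checked numerically. This is a small sketch that assumes \(\varvec{G}\) is the product of a stretch \(\text {diag}(\alpha ,1)\) and an axis rotation through \(\psi \), a form consistent with the distances quoted later in this section; the function names are hypothetical.

```python
import numpy as np

def transformation_matrix(alpha, psi):
    """Assumed form of G: rotate the axes through psi, then rescale the x-axis."""
    rotation = np.array([[np.cos(psi), np.sin(psi)],
                         [-np.sin(psi), np.cos(psi)]])
    stretch = np.diag([alpha, 1.0])
    return stretch @ rotation

def anisotropy_matrix(alpha, psi):
    """H = G^T G, the matrix defining the anisotropic distance."""
    G = transformation_matrix(alpha, psi)
    return G.T @ G

def anisotropic_distance(s_i, s_j, alpha, psi):
    """d* = sqrt(lag^T H lag) for the lag vector s_i - s_j."""
    lag = np.asarray(s_i, dtype=float) - np.asarray(s_j, dtype=float)
    return float(np.sqrt(lag @ anisotropy_matrix(alpha, psi) @ lag))

G = transformation_matrix(0.5, np.pi / 3)
print(np.linalg.det(G))                  # determinant equals alpha
print(anisotropy_matrix(1.0, 0.7))       # alpha = 1 recovers the identity
print(anisotropy_matrix(2.0, 0.0))       # psi = 0 gives diag(alpha^2, 1)
```

Writing the distance through \(\varvec{H}\) rather than \(\varvec{G}\) makes the positive-definiteness requirement easy to verify from the eigenvalues.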

Fig. 6 Convergence rates for the CP of model (6) with an anisotropic exponential correlation function for different values of \(\alpha \). a \(\delta _{0}=0.01\), b \(\delta _{0}=0.1\), c \(\delta _{0}=1\), d \(\delta _{0}=10\), e \(\delta _{0}=100\)

Fig. 7 Convergence rates for the NCP of model (6) with an anisotropic exponential correlation function for different values of \(\alpha \). a \(\delta _{0}=0.01\), b \(\delta _{0}=0.1\), c \(\delta _{0}=1\), d \(\delta _{0}=10\), e \(\delta _{0}=100\)

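To illustrate the effect of the transformation matrix \(\varvec{G}\), we consider \(\alpha =0.5,1,2\) and \(\psi =0,\pi /4,\pi /2\) with an anisotropic exponential correlation function such that

\[ \text {corr}\{\beta (\varvec{s}_{i}),\beta (\varvec{s}_{j})\}=\exp (-\phi d^{*}_{ij}). \tag{23} \]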

We take the point \(\varvec{s}^*=(0.5,0.5)^{ \mathrm {\scriptscriptstyle T} }\) in the unit square and fix decay parameter \(\phi =1\). We then compute the correlation between \(\varvec{s}^*\) and all points on a \(20\times 20\) grid, according to the correlation function given in (23). The values are then smoothed to produce a correlation surface. This is repeated for each of the nine combinations of \(\alpha \) and \(\psi \) and displayed in Fig. 5.

We can see that setting \(\alpha =0.5\) strengthens correlation in the x-direction. This is because, for the purposes of computing correlation, the separation of two points in the x-direction is halved. When \(\alpha =1\), the angle of rotation \(\psi \) does not affect the contours as they are circular. Clearly, setting \(\alpha =2\) has the effect of weakening correlation in the x-direction.
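The construction of the correlation surfaces can be sketched as follows. The code assumes an exponential correlation \(\exp (-\phi d^{*})\) with \(\phi =1\) and the stretch-after-rotation form of \(\varvec{G}\); it evaluates the raw correlations on a \(20\times 20\) grid and omits the smoothing step. All function and variable names are hypothetical.

```python
import numpy as np

def anisotropic_exp_corr(lags, alpha, psi, phi=1.0):
    """exp(-phi * d*) with d* = ||G lag|| and G = diag(alpha, 1) @ rotation(psi)."""
    c, s = np.cos(psi), np.sin(psi)
    G = np.diag([alpha, 1.0]) @ np.array([[c, s], [-s, c]])
    d_star = np.linalg.norm(np.atleast_2d(lags) @ G.T, axis=1)
    return np.exp(-phi * d_star)

grid = np.linspace(0.0, 1.0, 20)
sites = np.array([(x, y) for x in grid for y in grid])
s_star = np.array([0.5, 0.5])

# One 20 x 20 surface per (alpha, psi) pair; entry [i, j] is the
# correlation between s* and the site (grid[i], grid[j]).
surfaces = {
    (alpha, psi): anisotropic_exp_corr(sites - s_star, alpha, psi).reshape(20, 20)
    for alpha in (0.5, 1.0, 2.0)
    for psi in (0.0, np.pi / 4, np.pi / 2)
}

# Correlation at a lag of 0.4 along the x-axis when psi = 0.
x_lag = np.array([0.4, 0.0])
x_direction = {
    alpha: float(anisotropic_exp_corr(x_lag, alpha, 0.0)[0])
    for alpha in (0.5, 1.0, 2.0)
}
print(x_direction)
```

With \(\psi =0\), the correlation at a fixed lag along the x-axis decreases as \(\alpha \) increases, matching the description of the panels above.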

To assess the impact of anisotropy on the convergence rates for the CP and the NCP, we return to model (6). We consider an anisotropic exponential correlation function for the spatial process and so

\[ (\varvec{R}_{0})_{ij}=\exp (-\phi _{0}d^{*}_{ij}), \]

where \(d^{*}_{ij}\) is given by Eq. (21). We begin by fixing \(\psi =0\) and letting \(\alpha =0.5,1,2\). This corresponds to panels 1(a), 2(a), and 3(a), in Fig. 5. We use five values for \(\delta _{0}=\sigma ^2_{0}/\sigma ^2_{\epsilon }=0.01,0.1,1,10,100\) and vary \(\phi _{0}\) such that \(3/\phi _{0}\in (0,\surd {2}]\). Here, the effective range is direction dependent so we no longer refer to \(3/\phi _{0}\) as the effective range.

We compute convergence rates for the CP and the NCP and plot results in Figs. 6 and 7 respectively. As \(\alpha \) is reduced, we increase the strength of correlation in the x-direction. The result is faster convergence for the CP and slower convergence for the NCP. This is consistent with the results of Sect. 3.2, which show that increasing the effective range of an isotropic exponential correlation function, thus strengthening the correlation in all directions, helps the CP and hinders the NCP.

We now look at the effect of rotating the axis. If \(\alpha =1\) then a rotation has no impact on the correlation function as \(\varvec{H}\) is the identity. We consider four combinations of \(\alpha =0.5,2\) and \(\psi =\pi /4,\pi /2\). These values correspond to panels 1(b) and 1(c) for \(\alpha =0.5\), and 3(b) and 3(c) for \(\alpha =2\) in Fig. 5. Again, we let \(\delta _{0}=0.01,0.1,1,10,100\) and vary \(\phi _{0}\) such that \(3/\phi _{0}\in (0,\surd {2}]\).

The results for the CP and the NCP are given in Figs. 8 and 9 respectively. We can see that changing \(\psi \) has very little effect on the convergence rates of either parameterisation. This is not because \(d^{*}_{ij}\) is free of \(\psi \): because we apply the rotation matrix first, the subsequent stretch effectively acts in a different direction, that direction depending on \(\psi \), and the resulting values for \(d^{*}_{ij}\) may be different. Take the example we used here. Let \(\varvec{s}=(s_{1},s_{2})^{ \mathrm {\scriptscriptstyle T} }\) and \(\varvec{s}_{i}-\varvec{s}_{j}=(s_{i1}-s_{j1},s_{i2}-s_{j2})^{ \mathrm {\scriptscriptstyle T} }=(l_{1},l_{2})^{ \mathrm {\scriptscriptstyle T} }\). For \(\psi =\pi /4\), \(\displaystyle d^{*}_{ij}=\sqrt{0.5[(l_1-l_2)^2+\alpha ^2(l_1+l_2)^2]}\), whereas if \(\psi =\pi /2\) then \(\displaystyle d^{*}_{ij}=\sqrt{l^2_1+\alpha ^2 l^2_2}\). The different values of \(\displaystyle d^{*}_{ij}\) may in principle lead to different rates of convergence, but for the randomly selected locations used here the effect is small. Further investigation is needed to determine whether similar results hold for patterned sampling locations.

Fig. 8 Convergence rates for the CP of model (6) with an anisotropic exponential correlation function for different values of \(\alpha \) and \(\psi \). a \(\delta _{0}=0.01\), b \(\delta _{0}=0.1\), c \(\delta _{0}=1\), d \(\delta _{0}=10\), e \(\delta _{0}=100\)

Fig. 9 Convergence rates for the NCP of model (6) with an anisotropic exponential correlation function for different values of \(\alpha \) and \(\psi \). a \(\delta _{0}=0.01\), b \(\delta _{0}=0.1\), c \(\delta _{0}=1\), d \(\delta _{0}=10\), e \(\delta _{0}=100\)

3.5 Effect of introducing tapered covariance matrices

When spatial association is modelled as a Gaussian process, the resulting covariance matrices are dense and inverting them can be slow or even infeasible for large n. One strategy to deal with this is covariance tapering (Furrer et al. 2006; Kaufman et al. 2008). The idea is to force to zero the entries in the covariance matrix that correspond to pairs of locations that are separated by a distance greater than a predetermined range. This results in sparse matrices that can be inverted more quickly than the original. In this section, we investigate the effect of covariance tapering on the convergence rates for the CP and the NCP. We take model (6) with an exponential correlation function for \(\varvec{R}_{0}\) and compare the convergence rates found in Sect. 3.2 with those computed when we use a tapered covariance matrix.

The tapered correlation matrix, \(\varvec{R}_{Tap}\), is the element-wise product of the original correlation matrix \(\varvec{R}_{0}\) and the tapering correlation matrix \(\varvec{T}\), where \(\varvec{T}\) is a sparse matrix with ijth entry equal to zero if \(d_{ij}\) is greater than some threshold distance. Positive definiteness of \(\varvec{R}_{Tap}\) is assured if \(\varvec{T}\) is positive definite (Horn and Johnson 2012, Theorem 7.5.3).

Given that our original correlation function is an exponential one, we follow Furrer et al. (2006) and use a spherical tapering function such that

\[ (\varvec{T})_{ij}=\{\max (0,1-\chi d_{ij})\}^{2}\left( 1+\frac{\chi d_{ij}}{2}\right) , \]

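with decay parameter \(\chi \), where \(1/\chi \) is equal to the effective range, so that here we have \(\chi =-\phi _{0}/\log (0.05).\) Therefore,

\[ (\varvec{R}_{Tap})_{ij}=\exp (-\phi _{0}d_{ij})\{\max (0,1-d_{ij}/d_{0})\}^{2}\left( 1+\frac{d_{ij}}{2d_{0}}\right) , \]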

where \(d_{0}=-\log (0.05)/\phi _{0}\) is the effective range.
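The tapering step can be illustrated with a short sketch. It assumes the spherical taper in the form given by Furrer et al. (2006), \((1-d/d_{0})^{2}_{+}(1+d/(2d_{0}))\), with taper range equal to the effective range; the locations, the value \(d_{0}=0.3\) and all names are illustrative choices rather than the settings used for Fig. 10.

```python
import numpy as np

def spherical_taper(d, d0):
    """Spherical taper (1 - d/d0)_+^2 (1 + d/(2 d0)); exactly zero for d >= d0."""
    r = np.clip(d / d0, 0.0, 1.0)
    return (1.0 - r) ** 2 * (1.0 + 0.5 * r)

rng = np.random.default_rng(0)
sites = rng.uniform(size=(50, 2))            # hypothetical locations in the unit square
d = np.linalg.norm(sites[:, None, :] - sites[None, :, :], axis=-1)

d0 = 0.3                                     # illustrative effective range
phi0 = -np.log(0.05) / d0                    # decay parameter implied by d0
R0 = np.exp(-phi0 * d)                       # original exponential correlation
T = spherical_taper(d, d0)                   # tapering correlation matrix
R_tap = R0 * T                               # element-wise (Schur) product

sparsity = np.mean(R_tap == 0.0)             # proportion of entries forced to zero
min_eig = np.linalg.eigvalsh(R_tap).min()    # positive if R_tap is positive definite
print(f"zero entries: {sparsity:.2f}, smallest eigenvalue: {min_eig:.4f}")
```

Every off-diagonal entry of the tapered matrix is no larger than the corresponding entry of \(\varvec{R}_{0}\), which is the weakening of correlation that drives the convergence results discussed in this section.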

We let \(\delta _{0}=0.01,0.1,1,10\) and 100 and vary \(d_{0}\) between 0 and \(\surd {2}\). The convergence rates for the CP and the NCP are given in Fig. 10. The dashed lines represent the use of the tapered correlation matrix. The solid lines, given for comparison, are the rates achieved using the original correlation matrix \(\varvec{R}_{0}\) and are identical to those given in Fig. 1. Convergence rates are slowed by tapering for the CP and hastened for the NCP. Intuitively, we can say that under the CP stronger correlation is desirable and tapering reduces that, with the opposite being true for the NCP.

We can illustrate this effect by considering a spatial model with just two locations \(\varvec{s}_{1}\) and \(\varvec{s}_{2}\) such that \(\varvec{s}_{1}\ne \varvec{s}_{2}\). Let \(0\le corr(\beta (\varvec{s}_{1}),\beta (\varvec{s}_{2}))=\rho <1\). Suppose that we use a tapering function that takes values \(\rho ^*\) if \(d_{12}<d_{0}\) and zero otherwise, where \(0\le \rho ^*<1\). The tapered correlation is

\[ \rho _{Tap}={\left\{ \begin{array}{ll} \rho \rho ^* & \text {if } d_{12}<d_{0}, \\ 0 & \text {otherwise.} \end{array}\right. } \]

Therefore, \(\rho _{Tap}\le \rho \), with equality attained only when \(\rho =0\). We know from Eqs. (16) and (17) that for equi-correlated random effects if \(\rho \) decreases, \(\lambda _{c}\) is increased and \(\lambda _{nc}\) is decreased. In other words, when \(n=2,\) tapering can only worsen the performance of the CP and improve the performance of the NCP.

3.6 Effect of covariates

In this section, we investigate the effect of the covariates upon the rate of convergence. We consider the following model

which may be found by letting \(k=1,\ldots ,p-1,\) and \(p=2\) in model (1). Recalling that \(\tilde{\varvec{\beta }}_{1}=\{\tilde{\beta }_{1}(\varvec{s}_{1}),\ldots ,\tilde{\beta }_{1}(\varvec{s}_{n})\}^{ \mathrm {\scriptscriptstyle T} }\), where \(\tilde{\beta }_{1}(\varvec{s}_{i})=\beta _{1}(\varvec{s}_{i})+\theta _{1}\), and \(\varvec{x}_{1}=\{x_{1}(\varvec{s}_{1}),\ldots ,x_{1}(\varvec{s}_{n})\}^{ \mathrm {\scriptscriptstyle T} }\) and \(\varvec{D}_{1}=\text {diag}(\varvec{x}_{1})\), we can write model (24) in the following form

We consider only one covariate and so in the rest of this section we drop the subscript from \(\varvec{D}_{1}\) and \(\varvec{x}_{1}\).

First suppose that random effects are independent. This can be considered the limiting case for weakening spatial correlation. For the sake of notational clarity, under the assumption of spatial independence we write \(x(\varvec{s}_{i})=x_{i}\ (i=1,\ldots ,n)\). The convergence rate for the CP is

If we let \(1/v_{1}=0\), thus implying an improper prior for \(\theta _{1}\), we can write \(\lambda _{c}\) as

We introduce the variable \(\delta _{1}=\sigma ^2_{1}/\sigma ^2_{\epsilon }\). For fixed \(\varvec{x}\), we can see that as \(\delta _{1}\) tends to zero, the convergence rate for the CP of model (24) tends to one. As \(\delta _{1}\) gets larger, the convergence rate goes to zero. To see the effect of the scale of \(\varvec{x}\), we introduce variables \(u_{i}\), where

\[ u_{i}=\frac{x_{i}-\bar{\varvec{x}}}{sd_{x}},\quad i=1,\ldots ,n, \tag{27} \]

and \(\bar{\varvec{x}}\) and \(sd_{x}\) are the sample mean and sample standard deviation of \(\varvec{x}\) respectively. Substituting Eq. (27) into Eq. (26) we have

We suppose that the \(x_{i}\)’s have already been centred on zero and so \(\bar{\varvec{x}}=0\). For fixed variance parameters, the effect of the scale of \(\varvec{x}\) is clear; an increase in \(sd_{x}\) results in a decrease in the convergence rate and vice versa.

For the NCP, the convergence rate is

Letting \(1/v_{1}=0\), we can write \(\lambda _{nc}\) as

For fixed \(\varvec{x}\), if \(\sigma ^2_{\epsilon }/\sigma ^2_{1}\) goes to zero then \(\lambda _{nc}\) goes to one. In contrast, as the data variance dominates that of the random effects, the convergence rate falls. Note that in general \(\lambda _{c} + \lambda _{nc} \ne 1\).

To see the effect of the scale of \(\varvec{x}\) upon \(\lambda _{nc}\) we substitute Eq. (27) into equation (28). Then we have

Again, assuming \(\bar{\varvec{x}}=0\), we get

Fixing \(\sigma ^2_{\epsilon }\) and \(\sigma ^2_{1}\), as \(sd_{x}\) tends to infinity, \(\lambda _{nc}\) tends to 1, as \(sd_{x}\) tends to zero, \(\lambda _{nc}\) tends to 0.

Now we investigate the effect that increasing the strength of correlation between realisations of the slope surface has upon the performance of the CP and the NCP. We let \(\displaystyle (\varvec{R}_{1})_{ij}=\exp (-\phi _{1} d_{ij})\) and so the effective range is \(d_{1}=-\log (0.05)/\phi _{1}\). To generate the values of \(\varvec{x}\), we select a point \(\varvec{s}_{x}\), which we may imagine to be the site of a source of pollution. We assume that the value for the observed covariate at site \(\varvec{s}\) decays exponentially at a rate \(\phi _{x}\) with increasing separation from \(\varvec{s}_{x}\), so that

\[ x(\varvec{s})=\exp (-\phi _{x}\Vert \varvec{s}-\varvec{s}_{x}\Vert ). \]

The spatial decay parameter \(\phi _{x}\) is chosen such that there is an effective spatial range of \(\surd {2}/2\), i.e. if \(\Vert \varvec{s}-\varvec{s}_{x}\Vert =\surd {2}/2\) then \(x(\varvec{s})=0.05\). The values of \(\varvec{x}\) are standardised by subtracting their sample mean and dividing by their sample standard deviation.
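A sketch of how such a covariate might be generated is given below. The source location \(\varvec{s}_{x}\), the number of sites and the random seed are hypothetical, since they are not stated here; only the exponential decay, the effective range of \(\surd {2}/2\) and the standardisation follow the description above.

```python
import numpy as np

rng = np.random.default_rng(1)
sites = rng.uniform(size=(40, 2))        # hypothetical sampling locations
s_x = np.array([0.5, 0.5])               # hypothetical pollution source

# Decay chosen so that x(s) = 0.05 at a separation of sqrt(2)/2 from s_x.
effective_range_x = np.sqrt(2.0) / 2.0
phi_x = -np.log(0.05) / effective_range_x

separation = np.linalg.norm(sites - s_x, axis=1)
x_raw = np.exp(-phi_x * separation)

# Standardise with the sample mean and the sample standard deviation.
x = (x_raw - x_raw.mean()) / x_raw.std(ddof=1)
```

After standardisation \(\bar{\varvec{x}}=0\) and \(sd_{x}=1\), so any effect of the covariate scale is removed from the comparison.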

We compute the convergence rate for the CP and the NCP for model (25) for five values of \(\delta _{1}=0.01,0.1,1,10,100\), and for an effective range \(d_{1}\) between 0 and \(\surd {2}\). Results are given in Fig. 11. We see that for the CP for a fixed \(d_{1}\), increasing \(\delta _{1}\) achieves faster convergence. If we fix \(\delta _{1}\), the performance of the CP is improved as the effective range is increased. The opposite is seen for the NCP, whose performance is improved by decreasing \(\delta _{1}\) or shortening the effective range. Therefore, the variance ratio \(\delta _{1}\) and the decay parameter \(\phi _{1}\) have the same influence on the convergence rates of the CP and NCP as \(\delta _{0}\) and \(\phi _{0}\).

4 Practical examples with unknown covariance parameters

In this section, we focus on the practical implementation of the Gibbs sampler for the CP and the NCP for spatially varying coefficient models. The joint posterior distribution is unaffected by hierarchical centring and so inferential statements are the same under either parameterisation. However, what is affected is the efficiency of the Gibbs sampler used to make those statements.

In Sect. 3, the CP and the NCP are compared in terms of the exact convergence rates of the associated Gibbs samplers. The key assumption needed to compute these rates is that the joint posterior distribution is Gaussian with known precision matrix. Here we allow for the more common scenario that the precision matrix is known only up to a set of covariance parameters. In this case, we cannot compute the exact convergence rate. Therefore, we use the MCMC samples to assess the efficiency of the Gibbs samplers induced by the CP and the NCP. The full conditional distributions needed to construct the Gibbs samplers are given in Appendix 2.

We employ two diagnostic statistics to compare parameterisations. The first statistic we use is based on the multivariate potential scale reduction factor (MPSRF) (Brooks and Gelman 1998). We define the \(\hbox {MPSRF}_{M}(1.1)\) to be the number of iterations required for the MPSRF to fall below 1.1. To compute the \(\hbox {MPSRF}_{M}(1.1)\), we run five chains of length 25,000 from widely dispersed starting values. In particular, we take values that are outside of the intervals described by pilot chains. Moreover, the same starting values are used for both the CP and the NCP. The MPSRF is calculated at every fifth iteration, and the number of iterations required for its value to first drop below 1.1 is the value that we record. The second statistic we use is the effective sample size (ESS) of the model parameters (Robert and Casella 2004). The ESS is computed using all 125,000 MCMC samples and gives us a measure of the Markovian dependence between successive MCMC iterates, with values of 125,000 indicating independence. There is a negligible difference in the run times for the CP and the NCP and so we do not adjust these measures by computation time.
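The first diagnostic can be sketched as follows, using the MPSRF as defined by Brooks and Gelman (1998): \((n-1)/n+\{(m+1)/m\}\lambda _{1}\), where \(\lambda _{1}\) is the largest eigenvalue of \(\varvec{W}^{-1}\varvec{B}/n\) for \(m\) chains of length \(n\). The function names and the toy chains are hypothetical and stand in for Gibbs sampler output; the ESS is not covered by this sketch.

```python
import numpy as np

def mpsrf(chains):
    """Multivariate PSRF for an array of shape (m chains, n iterations, p parameters)."""
    m, n, p = chains.shape
    chain_means = chains.mean(axis=1)                       # shape (m, p)
    centred = chains - chain_means[:, None, :]
    # Pooled within-chain covariance W.
    W = np.einsum("mnp,mnq->pq", centred, centred) / (m * (n - 1))
    # Between-chain covariance of the chain means, B / n.
    B_over_n = np.cov(chain_means, rowvar=False, ddof=1).reshape(p, p)
    lam = np.max(np.linalg.eigvals(np.linalg.solve(W, B_over_n)).real)
    return (n - 1) / n + (m + 1) / m * lam

def iterations_to_threshold(chains, threshold=1.1, every=5):
    """First iteration (checked every `every` steps) at which the MPSRF is below threshold."""
    m, n, p = chains.shape
    for t in range(every, n + 1, every):
        if m * (t - 1) < p:          # W would be singular with so few draws
            continue
        if mpsrf(chains[:, :t, :]) < threshold:
            return t
    return None

# Toy chains: dispersed starting values whose influence decays geometrically.
rng = np.random.default_rng(42)
m, n, p = 5, 2000, 3
starts = rng.normal(scale=20.0, size=(m, 1, p))
decay = 0.9 ** np.arange(n)[None, :, None]
chains = starts * decay + rng.normal(size=(m, n, p))

n_iter = iterations_to_threshold(chains)
print(n_iter)
```

Recomputing the statistic from scratch at every fifth iteration is wasteful for long chains; running sums of the within-chain moments would avoid this, but the direct form is kept here for clarity.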

4.1 A simulation study

We simulate data from model (6) for \(n=40\) randomly chosen locations across the unit square assuming an exponential correlation function for the spatial process. We set \(\theta _{0}=0\) and generate data with five variance parameter ratios such that \(\delta _{0}=\sigma ^2_{0}/\sigma ^2_{\epsilon }=0.01,0.1,1,10,100\). This is done by letting \(\sigma ^2_{0}=1\) and varying \(\sigma ^2_{\epsilon }\) accordingly. For each of the five levels of \(\delta _{0}\), we have four values of the decay parameter \(\phi _{0}\), chosen such that there is an effective range of 0, \(\surd {2}/3\), \(2\surd {2}/3\) and \(\surd {2}\), where \(\surd {2}\) is the maximum possible separation of two points in the unit square. Hence, there are 20 combinations of \(\sigma ^2_{0}\), \(\sigma ^2_{\epsilon }\) and \(\phi _{0}\) in all. Each of these combinations is used to simulate 20 datasets, and so there are 400 datasets in total.
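The simulation design can be sketched as below. The code assumes that model (6) has the form \(Y(\varvec{s}_{i})=\theta _{0}+\beta _{0}(\varvec{s}_{i})+\epsilon (\varvec{s}_{i})\) with \(\varvec{\beta }_{0}\sim N(\varvec{0},\sigma ^2_{0}\varvec{R}_{0})\), and that one set of locations is shared by all datasets; both are assumptions, and the function name and seed are hypothetical.

```python
import itertools
import numpy as np

def simulate_dataset(sites, delta0, effective_range, rng, theta0=0.0, sigma2_0=1.0):
    """Draw one dataset from the assumed form of model (6)."""
    n = len(sites)
    d = np.linalg.norm(sites[:, None, :] - sites[None, :, :], axis=-1)
    if effective_range == 0:
        R0 = np.eye(n)                              # independent random effects
    else:
        phi0 = -np.log(0.05) / effective_range      # decay implied by the effective range
        R0 = np.exp(-phi0 * d)
    sigma2_eps = sigma2_0 / delta0                  # sigma2_0 fixed, sigma2_eps varied
    beta0 = rng.multivariate_normal(np.zeros(n), sigma2_0 * R0)
    eps = rng.normal(scale=np.sqrt(sigma2_eps), size=n)
    return theta0 + beta0 + eps

rng = np.random.default_rng(2016)
sites = rng.uniform(size=(40, 2))                   # n = 40 random locations

deltas = (0.01, 0.1, 1.0, 10.0, 100.0)
ranges = (0.0, np.sqrt(2) / 3, 2 * np.sqrt(2) / 3, np.sqrt(2))
n_replicates = 20

datasets = {
    (delta0, d0, rep): simulate_dataset(sites, delta0, d0, rng)
    for delta0, d0 in itertools.product(deltas, ranges)
    for rep in range(n_replicates)
}
print(len(datasets))
```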

We fix the decay parameter at its known value and sample from the marginal posterior distributions of \(\theta _{0}\), \(\sigma ^2_{0}\) and \(\sigma ^2_{\epsilon }\). We let hyperparameters \(m_{0}=0\) and \(v_{0}=10^4\). Recall that the variance parameters are given inverse gamma prior distributions with \(\pi (\sigma ^2_{0})=IG(a_{0},b_{0})\) and \(\pi (\sigma ^2_{\epsilon })=IG(a_{\epsilon },b_{\epsilon })\). We let \(a_{0}=a_{\epsilon }=2\) and \(b_{0}=b_{\epsilon }=1\), implying a prior mean of one and infinite prior variance for \(\sigma ^2_{0}\) and \(\sigma ^2_{\epsilon }\). These are common hyperparameters for inverse gamma prior distributions, see Sahu et al. (2007, 2010) and Gelfand et al. (2003).
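For reference, and assuming the shape-scale form of the inverse gamma distribution with density proportional to \(x^{-(a+1)}\exp (-b/x)\), the prior moments of a variance parameter \(\sigma ^2\sim IG(a,b)\) are

\[ E(\sigma ^2)=\frac{b}{a-1}\ \ (a>1),\qquad \text {Var}(\sigma ^2)=\frac{b^2}{(a-1)^2(a-2)}\ \ (a>2). \]

With \(a=2\) and \(b=1\) the mean is \(1/(2-1)=1\), while the variance is not finite because the condition \(a>2\) fails.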

Figure 12 shows boxplots of the \(\hbox {MPSRF}_{M}\)(1.1) (top row) and the ESS of \(\theta _{0}\) (bottom row) for the CP. Each panel contains the results for a fixed value of \(\delta _{0}\), increasing from 0.01 on the left to 100 on the right. Each panel contains four boxplots corresponding to the four effective ranges of 0, x / 3, 2x / 3, and x, where \(x=\surd {2}\). As the effective range increases, we have stronger spatial correlation between the random effects. Each boxplot is produced from the 20 values obtained for a given combination of \(\delta _{0}\) and \(\phi _{0}\). We can see that the performance of the CP improves with increasing \(\delta _{0}\) and also with increasing strength of correlation between the random effects.

The equivalent plot for the NCP is given in Fig. 13. We can see a reverse of the pattern displayed by the CP. The performance of the NCP is worsened as \(\delta _{0}\) increases and the detrimental effect of increasing the strength of correlation between the random effects is also clearly evident. Therefore, \(\delta _{0}\) and the effective range \(d_{0}\) have the same influence on the CP and the NCP as we saw for the exact convergence rates when the variance parameters were assumed to be known in Sect. 3.2.

Figure 14 gives the ESS of \(\sigma ^2_{0}\) (top row) and the ESS of \(\sigma ^2_{\epsilon }\) (bottom row) for the CP. We can see a general increasing trend in the ESS of \(\sigma ^2_{0}\) for increasing \(\delta _{0}\), but a downward trend is seen for \(\sigma ^2_{\epsilon }\). However, for a fixed value of \(\delta _{0}\) we can see an improvement as the effective range increases, particularly in \(\sigma ^2_{\epsilon }\). This is because for the case when there is zero effective range, marginally the data variance is \((\sigma ^2_{0}+\sigma ^2_{\epsilon })\varvec{I}\), and so increasing the effective range moves us away from the unidentifiable case which can result in poor mixing of the chains.

Figure 15 shows the ESS of \(\sigma ^2_{0}\) and \(\sigma ^2_{\epsilon }\) for the NCP. We see that the ESS of \(\sigma ^2_{0}\) is stable under changes in \(\delta _{0}\) and \(d_{0}\), with the exception being the case where \(\delta _{0}=0.1\) and \(d_{0}=0\). In this case, the results are again explained by the lack of identifiability of the variance parameters for independent random effects. The ESS of \(\sigma ^2_{\epsilon }\) is reduced by increasing \(\delta _{0}\). For a fixed value of \(\delta _{0}\), we can see an improvement in the ESS as \(d_{0}\) increases. This was also observed for \(\sigma ^2_{\epsilon }\) under the CP and is similarly explained.

4.2 Real-data example

In this section, we compare the sampling efficiency of the CP and the NCP when they are fitted to a real dataset. We have annual PM10 concentrations, in micrograms per cubic metre (\(\mu \)g/m\(^3\)), taken from 70 monitoring sites in Greater London, UK. We use data from 50 sites for model fitting, leaving out data from 20 sites for model validation, see Fig. 16. The mean and standard deviation for the 50 data sites is 26.47 and 5.00 \(\upmu \)g/m\(^3\) respectively.

In addition to the observed data, we have output from the Air Quality Unified Model (AQUM), a numerical model giving air pollution predictions at 1-km grid cell resolution (Savage et al. 2013). The AQUM is used as a covariate in the model, where \(x(\varvec{s})\) is the AQUM output for the grid cell containing \(\varvec{s}\). Therefore, we use a downscaler model as employed by Berrocal et al. (2010).

To stabilise the variance, we model the data on the square root scale. However, in order not to underestimate their variability, predictions are obtained on the original scale.

We fit model (1) with \(p=2\) and so we have two processes, an intercept and a slope process. Each process has a corresponding global variance and decay parameters and so \(\varvec{\theta }=(\theta _{0},\theta _{1})^{ \mathrm {\scriptscriptstyle T} }\), \(\varvec{\sigma ^2}=(\sigma ^2_{0},\sigma ^2_{1})^{ \mathrm {\scriptscriptstyle T} }\) and \(\varvec{\phi }=(\phi _{0},\phi _{1})^{ \mathrm {\scriptscriptstyle T} }\). In addition, we have the data variance, \(\sigma ^2_{\epsilon }\). For the prior distribution of \(\varvec{\theta }, \) we let \(\varvec{m}=(0,0)^{ \mathrm {\scriptscriptstyle T} }\) and \(v_{0}=v_{1}=10^4\). We let \(a_{0}=a_{1}=a_{\epsilon }=2\) and \(b_{0}=b_{1}=b_{\epsilon }=1\), so that each variance parameter is assigned an IG(2, 1) prior distribution.

We begin by estimating the decay parameters using an empirical Bayes method by performing a grid search over a small number of values, then choosing those values that minimise a prediction-error criterion. This is a common approach adopted by many authors, e.g., Sahu et al. (2007, 2011) and Berrocal et al. (2010), since Zhang (2004) showed that it is not possible to consistently estimate these in a model with Matérn covariance function in the presence of other unknown parameters. In our Bayesian inference setting, this will imply weak identifiability in the posterior distribution when non-informative prior distributions are assumed.

The greatest distance between any two of the 70 monitoring stations is 96.2 kilometres (km), and so we select values of \(\phi _{0}\) and \(\phi _{1}\) corresponding to effective ranges of 5, 10, 25, 50 and 100 km. Predictions are made at the 20 validation sites and we compute the values of three measures of prediction error: the mean absolute prediction error (MAPE), the root mean square prediction error (RMSPE) and the continuous ranked probability score (CRPS), see for example Gneiting et al. (2007).
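The three criteria can be computed from posterior predictive draws along the following lines. This is a sketch under stated assumptions: the point prediction is taken to be the posterior predictive mean, and the CRPS is estimated from the draws through the identity \(E|X-y|-\tfrac{1}{2}E|X-X^\prime |\) and averaged over validation sites; the function names are hypothetical.

```python
import numpy as np

def mean_abs_pairwise(samples):
    """Estimate E|X - X'| per column using the order-statistics identity."""
    n = samples.shape[0]
    ordered = np.sort(samples, axis=0)
    weights = 2.0 * np.arange(1, n + 1) - n - 1.0
    return 2.0 * (weights[:, None] * ordered).sum(axis=0) / n ** 2

def prediction_scores(samples, y):
    """samples: (n_draws, n_sites) predictive draws; y: (n_sites,) held-out values."""
    samples = np.asarray(samples, dtype=float)
    y = np.asarray(y, dtype=float)
    errors = samples.mean(axis=0) - y            # assumed point prediction: the mean
    crps_site = np.mean(np.abs(samples - y), axis=0) - 0.5 * mean_abs_pairwise(samples)
    return {
        "MAPE": float(np.mean(np.abs(errors))),
        "RMSPE": float(np.sqrt(np.mean(errors ** 2))),
        "CRPS": float(np.mean(crps_site)),
    }

# Illustrative use with synthetic draws for 20 validation sites.
rng = np.random.default_rng(7)
y_valid = rng.normal(loc=26.0, scale=5.0, size=20)
draws = y_valid + rng.normal(scale=3.0, size=(1000, 20))
print(prediction_scores(draws, y_valid))
```

Smaller values indicate better predictions for all three measures, so the grid search simply selects the pair of effective ranges with the smallest scores.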

For each of the 25 pairs of spatial decay parameters, we generate a single chain of 25,000 iterations and discard the first 5,000. The values of the MAPE, RMSPE and CRPS are given in Table 1. Recall that \(d_{0}\) and \(d_{1}\) denote the effective ranges implied by \(\phi _{0}\) and \(\phi _{1}\) respectively. By all three measures, the prediction error is minimised when \(d_{0}=5\) and \(d_{1}=50\), and so our estimates for the spatial decay parameters are

\[ \hat{\phi }_{0}=-\log (0.05)/5\quad \text {and}\quad \hat{\phi }_{1}=-\log (0.05)/50. \]

We now compare the sampling efficiencies of the CP and NCP for the London PM10 data when the values of the decay parameters are fixed at the above optimal values. For each parameterisation, we generate five Markov chains of length 25,000 from the same widely dispersed starting values. The \(\hbox {MPSRF}_{M}(1.1)\) and the ESS of \(\varvec{\theta }=(\theta _{0},\theta _{1})^\prime \), \(\varvec{\sigma ^2}=(\sigma ^2_{0},\sigma ^2_{1})^\prime \) and \(\sigma ^2_{\epsilon }\) are computed and given in Table 2. We can see that the CP requires far fewer iterations for the MPSRF to drop below 1.1 than the NCP: 275 versus 1985. The ESS of the mean parameters is greater for the CP than the NCP, especially for \(\theta _{1}\) reflecting the stronger spatial correlation for the slope process and the estimate for \(\delta _{1}\). For the variance parameters \(\sigma ^2_{0}\) and \(\sigma ^2_{1}\), the ESS for the CP is nearly double that of the NCP. The NCP achieves better mixing in the \(\sigma ^2_{\epsilon }\) coordinate than does the CP.

For making inference, we generate a single long chain of 50,000 iterations and discard the first 10,000. Parameter estimates and their 95% credible intervals are given in Table 3. An estimate for the global intercept \(\theta _{0}\) of 5.148 reflects that the data are modelled on the square root scale. The global regression parameter \(\theta _{1}\) for the AQUM output is not significant given the inclusion in the model of the regression process. Of particular interest to us are the estimates of the variance parameters. Weak spatial correlation in the intercept process means that the estimate for \(\sigma ^2_{0}\) is the smallest of the three variance parameters. More of the spatial variability is explained by the slope process, and so the estimate for \(\sigma ^2_{1}\) is the greatest of the three variance parameters. We also include estimates and 95% credible intervals for the variance ratios: \(\delta _{0}=\sigma ^2_{0}/\sigma ^2_{\epsilon }\) and \(\delta _{1}=\sigma ^2_{1}/\sigma ^2_{\epsilon }\).

5 Conclusion

We have compared the efficiencies of the CP and the NCP of spatial models. We find that in addition to the ratio of the variance parameters, the correlation structure between the random effects plays a key role in determining the rate of convergence.

For known variance and correlation parameters, the exact rate of convergence has been examined. We have shown that for spatial models with an exponential correlation function, increasing the variance of the random effects relative to that of the data, as well as increasing the strength of correlation, works to hasten the convergence of the CP but slows the convergence of the NCP. However, when the covariance matrix is tapered to remove long-range correlation, convergence for the CP is hindered, but convergence for the NCP is favoured. Introducing geometric anisotropy to strengthen the correlation in one direction has, for randomly selected locations, a similar effect to strengthening it in all directions; the CP is favoured and the NCP hindered. Both these results are consistent with the notion that the performance of the CP is improved in the presence of greater spatial correlation but the performance of the NCP is worsened. We have seen that, as the smoothness parameter in the Matérn correlation function is increased, both the CP and the NCP are slower to converge, and in the presence of high spatial correlation, both parameterisations will fail to converge when the sample size is large enough.

When the variance parameters are unknown, the sampling efficiencies of the parameterisations are compared via the \(\hbox {MPSRF}_{M}(1.1)\) and the ESS of the unknown model parameters. We have seen that the relationships between the sampling efficiencies of the respective parameterisations, and the ratio of the variance parameters and the strength of spatial correlation, still hold for unknown variance parameters. The CP performs better when the data precision is relatively high and when the correlation is strong. In contrast to this, the NCP performs best when the data are less informative and the correlation is weak.

References

Abramowitz, M., Stegun, I.A.: Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables. Courier Dover Publications, New York (1972)

Apputhurai, P., Stephenson, A.G.: Spatiotemporal hierarchical modelling of extreme precipitation in Western Australia using anisotropic Gaussian random fields. Environ. Ecol. Stat. 20(4), 667–677 (2013)

Banerjee, S., Gelfand, A.E., Finley, A.O., Sang, H.: Gaussian predictive process models for large spatial data sets. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 70(4), 825–848 (2008)

Banerjee, S., Finley, A.O., Waldmann, P., Ericsson, T.: Hierarchical spatial process models for multiple traits in large genetic trials. J. Am. Stat. Assoc. 105(490), 506–521 (2010)

Banerjee, S., Carlin, B.P., Gelfand, A.E.: Hierarchical Modeling and Analysis for Spatial Data, 2nd edn. CRC Press, Boca Raton (2015)

Bass, M.R., Sahu, S.K.: Dynamically updated spatially varying parameterizations of hierarchical Bayesian models for spatially correlated data. Submitted (2016)

Berrocal, V.J., Gelfand, A.E., Holland, D.M.: A spatio-temporal downscaler for output from numerical models. J. Agric. Biol. Environ. Stat. 15(2), 176–197 (2010)

Brooks, S.P., Gelman, A.: General methods for monitoring convergence of iterative simulations. J. Comput. Graph. Stat. 7(4), 434–455 (1998)

Cressie, N., Johannesson, G.: Fixed rank kriging for very large spatial data sets. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 70(1), 209–226 (2008)

Ecker, M.D., Gelfand, A.E.: Bayesian modeling and inference for geometrically anisotropic spatial data. Math. Geol. 31(1), 67–83 (1999)

Filippone, M., Zhong, M., Girolami, M.: A comparative evaluation of stochastic-based inference methods for Gaussian process models. Mach. Learn. 93(1), 93–114 (2013)

Furrer, R., Genton, M.G., Nychka, D.: Covariance tapering for interpolation of large spatial datasets. J. Comput. Graph. Stat. 15(3), 502–523 (2006)

Gelfand, A.E., Smith, A.F.: Sampling-based approaches to calculating marginal densities. J. Am. Stat. Assoc. 85(410), 398–409 (1990)

Gelfand, A.E., Sahu, S.K., Carlin, B.P.: Efficient parameterisations for normal linear mixed models. Biometrika 82(3), 479–488 (1995)

Gelfand, A.E., Sahu, S.K., Carlin, B.P.: Efficient parameterizations for generalized linear mixed models, (with discussion). In: Bernardo, J.M., Berger, J.O., Dawid, A.P., Smith, A.F.M. (eds.) Bayesian Statistics 5, pp. 165–180. Oxford University Press, Oxford (1996)

Gelfand, A.E., Kim, H.-J., Sirmans, C., Banerjee, S.: Spatial modeling with spatially varying coefficient processes. J. Am. Stat. Assoc. 98(462), 387–396 (2003)

Gelman, A., Rubin, D.B.: Inference from iterative simulation using multiple sequences. Stat. Sci. 7(4), 457–472 (1992)

Gneiting, T., Balabdaoui, F., Raftery, A.E.: Probabilistic forecasts, calibration and sharpness. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 69(2), 243–268 (2007)

Handcock, M.S., Stein, M.L.: A Bayesian analysis of kriging. Technometrics 35(4), 403–410 (1993)

Harville, D.A.: Matrix Algebra from a Statistician’s Perspective. Springer, New York (1997)

Horn, R.A., Johnson, C.R.: Matrix Analysis. Cambridge University Press, Cambridge (2012)

Huerta, G., Sansó, B., Stroud, J.R.: A spatiotemporal model for Mexico City ozone levels. J. R. Stat. Soc. Ser. C (Appl. Stat.) 53(2), 231–248 (2004)

Imai, K., van Dyk, D.: A Bayesian analysis of the multinomial probit model using marginal data augmentation. J. Econom. 124, 311–334 (2005)

Kaufman, C.G., Schervish, M.J., Nychka, D.W.: Covariance tapering for likelihood-based estimation in large spatial data sets. J. Am. Stat. Assoc. 103(484), 1545–1555 (2008)

Liu, J.S., Wu, Y.N.: Parameter expansion for data augmentation. J. Am. Stat. Assoc. 94, 1264–1274 (1999)

Matérn, B.: Spatial Variation, 2nd edn. Springer, Berlin (1986)

Meyn, S.P., Tweedie, R.L.: Markov Chains and Stochastic Stability. Springer, London (1993)

Papaspiliopoulos, O., Roberts, G.: Stability of the Gibbs sampler for Bayesian hierarchical models. Ann. Stat. 36(1), 95–117 (2008)

Papaspiliopoulos, O., Roberts, G.O., Sköld, M.: Non-centered parameterisations for hierarchical models and data augmentation (with discussion). In: Bernardo, J.M., Bayarri, M.J., Berger, J.O., Dawid, A.P., Heckerman, D., Smith, A.F.M., West, M. (eds.) Bayesian Statistics 7: Proceedings of the Seventh Valencia International Meeting, pp. 307–326. Oxford University Press, USA (2003)

Rasmussen, C.E., Williams, C.K.I.: Gaussian Processes for Machine Learning. MIT Press, Cambridge, MA (2006)

Robert, C.P., Casella, G.: Monte Carlo Statistical Methods, 2nd edn. Springer, New York (2004)

Roberts, G.O., Sahu, S.K.: Updating schemes, correlation structure, blocking and parameterization for the Gibbs sampler. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 59(2), 291–317 (1997)

Sahu, S.K., Roberts, G.O.: On convergence of the EM algorithm and the Gibbs sampler. Stat. Comput. 9, 55–64 (1999)

Sahu, S.K., Gelfand, A.E., Holland, D.M.: High resolution space-time ozone modeling for assessing trends. J. Am. Stat. Assoc. 102(480), 1221–1234 (2007)

Sahu, S.K., Gelfand, A.E., Holland, D.M.: Fusing point and areal level space-time data with application to wet deposition. J. R. Stat. Soc. Ser. C (Appl. Stat.) 59(1), 77–103 (2010)

Sahu, S.K., Yip, S., Holland, D.M.: A fast Bayesian method for updating and forecasting hourly ozone levels. Environ. Ecol. Stat. 18(1), 185–207 (2011)

Savage, N., Agnew, P., Davis, L., Ordóñez, C., Thorpe, R., Johnson, C., O’Connor, F., Dalvi, M.: Air quality modelling using the Met Office Unified Model (AQUM OS24-26): model description and initial evaluation. Geosci. Model Dev. 6(2), 353–372 (2013)

Schabenberger, O., Gotway, C.A.: Statistical Methods for Spatial Data Analysis. CRC Press, Boca Raton (2004)

Stein, M.L.: Interpolation of Spatial Data: Some Theory for Kriging. Springer, New York (1999)

van Dyk, D., Meng, X.-L.: The art of data augmentation (with discussion). J. Comput. Graph. Stat. 10, 1–50 (2001)

Yu, Y., Meng, X.-L.: To center or not to center: That is not the question: an Ancillarity-Sufficiency Interweaving Strategy (ASIS) for boosting MCMC efficiency. J. Comput. Graph. Stat. 20(3), 531–570 (2011)

Zhang, H.: Inconsistent estimation and asymptotically equal interpolations in model-based geostatistics. J. Am. Stat. Assoc. 99(465), 250–261 (2004)

Zimmerman, D.L.: Another look at anisotropy in geostatistics. Math. Geol. 25(4), 453–470 (1993)

Appendices

Appendix 1: Computing the exact convergences for the CP and NCP

For Gibbs samplers with Gaussian target distributions with known precision matrices, we have analytic results for the exact convergence rate (Roberts and Sahu 1997). We let \(\varvec{\xi }\) denote the set of all mean parameters in the model: \(\varvec{\xi }=(\varvec{\beta }^{ \mathrm {\scriptscriptstyle T} },\varvec{\theta }^{ \mathrm {\scriptscriptstyle T} })^{ \mathrm {\scriptscriptstyle T} }\). Suppose that \(\varvec{\xi }\mid \varvec{y}\sim N(\varvec{\mu },\varvec{\Sigma })\), and let \(\varvec{Q}=\varvec{\Sigma }^{-1}\) be the posterior precision matrix (PPM). Further suppose that \(\varvec{\xi }\) is partitioned into l blocks for updating within the Gibbs sampler. To compute the convergence rate, first partition \(\varvec{Q}\) according to the l blocks, where the ijth block is denoted by \(\varvec{Q}_{ij}\), \(i, j=1, \ldots , l\).

Let \(\varvec{A}=\varvec{I}-\text {diag}(\varvec{Q}^{-1}_{11},\ldots ,\varvec{Q}^{-1}_{ll})\varvec{Q}\) and \(\varvec{F}=(\varvec{I}-\varvec{L})^{-1}\varvec{U}\), where \(\varvec{L}\) is the block lower triangular matrix of \(\varvec{A}\), and \(\varvec{U}=\varvec{A}-\varvec{L}\). Roberts and Sahu (1997) show that the Markov chain induced by the Gibbs sampler with components block updated according to the above blocking scheme, has a Gaussian transition density with mean \(E\{\varvec{\xi }^{(t+1)}|\varvec{\xi }^{(t)}\}=\varvec{F}\varvec{\xi }^{(t)}+\varvec{f},\) where \(\varvec{f}=(\varvec{I}-\varvec{F})\varvec{\mu }\) and covariance matrix \(\varvec{\Sigma }-\varvec{F}\varvec{\Sigma }\varvec{F}^{ \mathrm {\scriptscriptstyle T} }\). Their observation leads to the following theorem:

Theorem 5.1

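(Roberts and Sahu 1997, Theorem 1) A Markov chain with transition density

$$\begin{aligned} \varvec{\xi }^{(t+1)}|\varvec{\xi }^{(t)}\sim N\left( \varvec{F}\varvec{\xi }^{(t)}+\varvec{f},\ \varvec{\Sigma }-\varvec{F}\varvec{\Sigma }\varvec{F}^{ \mathrm {\scriptscriptstyle T} }\right) \end{aligned}$$

has a convergence rate equal to the maximum modulus eigenvalue of \(\varvec{F}\).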
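The construction of \(\varvec{F}\) can be sketched numerically. The following is a minimal illustration in Python with NumPy, not the authors' code: the function name `gibbs_convergence_rate` and the argument `block_sizes` are assumptions introduced here. It builds \(\varvec{A}\), \(\varvec{L}\) and \(\varvec{U}\) as defined above and returns the maximum modulus eigenvalue of \(\varvec{F}\).

```python
import numpy as np

def gibbs_convergence_rate(Q, block_sizes):
    """Maximum modulus eigenvalue of F = (I - L)^{-1} U for a blocked Gibbs sampler.

    Q           : posterior precision matrix (symmetric positive definite)
    block_sizes : sizes of the l consecutive blocks (hypothetical argument name)
    """
    Q = np.asarray(Q, dtype=float)
    d = Q.shape[0]
    edges = np.concatenate(([0], np.cumsum(block_sizes)))
    assert edges[-1] == d, "block sizes must sum to the dimension of Q"

    # A = I - diag(Q_11^{-1}, ..., Q_ll^{-1}) Q, built one block row at a time.
    A = np.eye(d)
    for start, stop in zip(edges[:-1], edges[1:]):
        A[start:stop, :] -= np.linalg.solve(Q[start:stop, start:stop], Q[start:stop, :])

    # L holds the blocks of A below the block diagonal; U = A - L holds the rest.
    L = np.zeros_like(A)
    for start, stop in zip(edges[:-1], edges[1:]):
        L[start:stop, :start] = A[start:stop, :start]
    U = A - L

    F = np.linalg.solve(np.eye(d) - L, U)
    return np.max(np.abs(np.linalg.eigvals(F)))

# Toy example: a bivariate normal with correlation rho, one coordinate per block.
rho = 0.9
Sigma = np.array([[1.0, rho], [rho, 1.0]])
rate = gibbs_convergence_rate(np.linalg.inv(Sigma), [1, 1])
```

For this toy target, \(\varvec{F}\) has eigenvalues 0 and \(\rho ^2\), so `rate` equals 0.81 up to rounding; a rate closer to one indicates slower convergence.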

Corollary 5.2

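If we update \(\varvec{\xi }\) in two blocks so that \(l=2\), then

$$\begin{aligned} \varvec{F}=\left( \begin{array}{cc} \varvec{0} &{} -\varvec{Q}^{-1}_{11}\varvec{Q}_{12}\\ \varvec{0} &{} \varvec{Q}^{-1}_{22}\varvec{Q}_{21}\varvec{Q}^{-1}_{11}\varvec{Q}_{12} \end{array}\right) , \end{aligned}$$

and the convergence rate is the maximum modulus eigenvalue of

$$\begin{aligned} \varvec{Q}^{-1}_{22}\varvec{Q}_{21}\varvec{Q}^{-1}_{11}\varvec{Q}_{12}. \end{aligned}$$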
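For two blocks, working through the definitions of \(\varvec{A}\), \(\varvec{L}\) and \(\varvec{U}\) gives a first block column of \(\varvec{F}\) equal to zero, so only the lower-right block \(\varvec{Q}^{-1}_{22}\varvec{Q}_{21}\varvec{Q}^{-1}_{11}\varvec{Q}_{12}\) contributes non-zero eigenvalues. The sketch below, in Python with NumPy, checks this shortcut against the general construction on an arbitrary positive definite matrix; the names `two_block_rate` and `full_F` are illustrative assumptions, and the test matrix is random rather than a PPM from the model.

```python
import numpy as np

def two_block_rate(Q, n1):
    """Max modulus eigenvalue of Q22^{-1} Q21 Q11^{-1} Q12; the first block has n1 entries."""
    Q = np.asarray(Q, dtype=float)
    Q11, Q12 = Q[:n1, :n1], Q[:n1, n1:]
    Q21, Q22 = Q[n1:, :n1], Q[n1:, n1:]
    reduced = np.linalg.solve(Q22, Q21 @ np.linalg.solve(Q11, Q12))
    return np.max(np.abs(np.linalg.eigvals(reduced)))

def full_F(Q, n1):
    """F = (I - L)^{-1} U from the general two-block construction, for comparison."""
    d = Q.shape[0]
    A = np.eye(d)
    A[:n1, :] -= np.linalg.solve(Q[:n1, :n1], Q[:n1, :])
    A[n1:, :] -= np.linalg.solve(Q[n1:, n1:], Q[n1:, :])
    L = np.zeros_like(A)
    L[n1:, :n1] = A[n1:, :n1]          # the only block below the block diagonal
    return np.linalg.solve(np.eye(d) - L, A - L)

rng = np.random.default_rng(0)
M = rng.standard_normal((5, 5))
Q = M @ M.T + 5.0 * np.eye(5)          # arbitrary symmetric positive definite matrix
rate_short = two_block_rate(Q, n1=2)
rate_full = np.max(np.abs(np.linalg.eigvals(full_F(Q, n1=2))))
```

The two rates agree up to numerical error. The shortcut only requires solves with the two diagonal blocks, which is convenient when one block (for example the global effects) is small.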

We use Theorem 5.1 to compare the convergence rates of Gibbs samplers associated with the CP and the NCP. First, we must compute the PPM for each parameterisation. To identify the PPM for the CP write

where the last equation only includes the terms containing both \(\tilde{\varvec{\beta }}\) and \(\varvec{\theta }\). Therefore, the posterior precision matrix for the CP is given by

By Corollary 5.2, if we update all random effects as one block and all global effects as another, then the convergence rate for the CP is the maximum modulus eigenvalue of

Similarly for the NCP, we find the PPM by writing

and hence, we have

By Corollary 5.2, the convergence rate of the Gibbs sampler for the NCP is the maximum modulus eigenvalue of

Appendix 2: Full conditional distributions

1.1 Posterior distributions for the CP

In this section, we give the joint posterior and full conditional distributions for the CP of model (1). We denote by \(\varvec{\sigma ^2}=(\sigma ^2_{0},\ldots ,\sigma ^2_{p-1})^{ \mathrm {\scriptscriptstyle T} }\) the vector containing the variance parameters of the random effects and let \(\varvec{\phi }=(\phi _{0},\ldots ,\phi _{p-1})^{ \mathrm {\scriptscriptstyle T} }\) contain the decay parameters. We let \(\varvec{\xi }=(\tilde{\varvec{\beta }}^{ \mathrm {\scriptscriptstyle T} },\varvec{\theta }^{ \mathrm {\scriptscriptstyle T} },\varvec{\sigma ^2}^{ \mathrm {\scriptscriptstyle T} },\sigma ^2_{\epsilon },\varvec{\phi }^{ \mathrm {\scriptscriptstyle T} })^{ \mathrm {\scriptscriptstyle T} }\) contain all np random effects, p global effects, \(p+1\) variance parameters and p decay parameters. The joint posterior distribution of \(\varvec{\xi }\) is given by

where \(\varvec{D}_{0}\) is defined to be the identity matrix \(\varvec{I}\).

We use Gibbs sampling to sample from \(\pi (\varvec{\xi }|\varvec{y})\) for the CP, given in (29). We assume that the random effects will be block updated according to their process, i.e. we jointly update the n-dimensional vector \(\tilde{\varvec{\beta }}_{k}\), for \(k=0,\ldots ,p-1\). All other parameters in \(\varvec{\xi }\) are updated as single univariate components. The full conditional distributions that we need for the CP are given below:

- The full conditional distribution for the centred spatially correlated random effects \(\tilde{\varvec{\beta }}_{k}\), \(k=0,\ldots ,p-1\), is

$$\begin{aligned} \tilde{\varvec{\beta }}_{k}|\tilde{\varvec{\beta }}_{-k},\varvec{\theta },\varvec{\sigma ^2},\sigma ^2_{\epsilon },\varvec{\phi },\varvec{y}\sim N(\varvec{m}_{k}^{*},\varvec{\Sigma }^{*}_{k}), \end{aligned}$$

where we denote by \(\tilde{\varvec{\beta }}_{-k}\) the vector of all random effects \(\tilde{\varvec{\beta }}\) without the realisations of the kth process \(\tilde{\varvec{\beta }}_{k}\) and

$$\begin{aligned} \varvec{\Sigma }^{*}_{k}= & {} \left( \dfrac{1}{\sigma ^2_{\epsilon }}\varvec{D}^{ \mathrm {\scriptscriptstyle T} }_{k}\varvec{D}_{k}+ \varvec{\Sigma }^{-1}_{k}\right) ^{-1},\\ \varvec{m}^{*}_{k}= & {} \varvec{\Sigma }^{*}_{k}\left[ \frac{1}{\sigma ^2_{\epsilon }}\varvec{D}^{ \mathrm {\scriptscriptstyle T} }_{k}\left( \varvec{y}-\sum ^{p-1}_{\begin{array}{c} j=0 \\ j\ne k \end{array}}\varvec{D}_{j}\tilde{\varvec{\beta }}_{j}\right) +\varvec{\Sigma }^{-1}_{k}\theta _{k}\varvec{1}\right] . \end{aligned}$$

- The full conditional distribution for the global effects \(\theta _{k}\), \(k=0,\ldots ,p-1\), for the CP is

$$\begin{aligned} \theta _{k}|\tilde{\varvec{\beta }},\varvec{\theta }_{-k},\varvec{\sigma ^2},\sigma ^2_{\epsilon },\varvec{\phi },\varvec{y}\sim N(m_{k}^{*},v^{*}_{k}), \end{aligned}$$

where

$$\begin{aligned} v^{*}_{k}= & {} \left( \varvec{1}^{{ \mathrm {\scriptscriptstyle T} }}\varvec{\Sigma }^{-1}_{k}\varvec{1}+\frac{1}{\sigma ^2_{k}v_{k}}\right) ^{-1},\\ m^{*}_{k}= & {} v^{*}_{k}\left( \varvec{1}^{ \mathrm {\scriptscriptstyle T} }\varvec{\Sigma }^{-1}_{k}\tilde{\varvec{\beta }}_{k}+ \frac{m_{k}}{\sigma ^2_{k}v_{k}}\right) . \end{aligned}$$

- The full conditional distribution for the random effects variance \(\sigma ^2_{k}\), \(k=0,\ldots ,p-1\), for the CP is

$$\begin{aligned}&\sigma ^2_{k}|\tilde{\varvec{\beta }},\varvec{\theta },\varvec{\sigma ^2}_{-k},\sigma ^2_{\epsilon },\varvec{\phi },\varvec{y}\sim IG\bigg \{\dfrac{n+1}{2}+a_{k},\\&\quad \dfrac{1}{2}\Big [ \left( \tilde{\varvec{\beta }}_{k}-\theta _{k}\varvec{1}\right) ^{ \mathrm {\scriptscriptstyle T} }\varvec{R}^{-1}_{k}\left( \tilde{\varvec{\beta }}_{k}-\theta _{k}\varvec{1}\right) +\dfrac{(\theta _{k}-m_{k})^2}{v_{k}}+2b_{k}\Big ]\bigg \}. \end{aligned}$$

- The full conditional distribution for the data variance \(\sigma ^2_{\epsilon }\) for the CP is

$$\begin{aligned}&\sigma ^2_{\epsilon }|\tilde{\varvec{\beta }},\varvec{\theta },\varvec{\sigma ^2},\varvec{\phi },\varvec{y}\sim IG\Bigg \{ \frac{n}{2}+a_{\epsilon },\\&\quad \frac{1}{2}\Bigg [ \left( \varvec{y}-\sum ^{p-1}_{k=0}\varvec{D}_{k}\tilde{\varvec{\beta }}_{k}\right) ^{ \mathrm {\scriptscriptstyle T} }\left( \varvec{y}-\sum ^{p-1}_{k=0}\varvec{D}_{k}\tilde{\varvec{\beta }}_{k}\right) +2b_{\epsilon }\Bigg ] \Bigg \} . \end{aligned}$$
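The CP updates listed above can be sketched as follows. This is a minimal Python/NumPy illustration under stated assumptions, not the authors' implementation: all function names are hypothetical, \(IG(a,b)\) is taken to be the shape-scale inverse gamma with density proportional to \(x^{-a-1}e^{-b/x}\), and \(\varvec{\Sigma }_{k}=\sigma ^2_{k}\varvec{R}_{k}\) is assumed. The functions return the parameters of each full conditional so that they can be checked by hand.

```python
import numpy as np

def cp_beta_conditional(k, y, D, beta_tilde, theta, Sigma_inv, sigma2_eps):
    """Mean and covariance of beta_tilde_k given all other quantities (CP)."""
    n = y.shape[0]
    # Residual after removing the contributions of the other processes j != k.
    resid = y - sum(D[j] @ beta_tilde[j] for j in range(len(D)) if j != k)
    cov = np.linalg.inv(D[k].T @ D[k] / sigma2_eps + Sigma_inv[k])
    mean = cov @ (D[k].T @ resid / sigma2_eps + Sigma_inv[k] @ (theta[k] * np.ones(n)))
    return mean, cov

def cp_theta_conditional(k, beta_tilde, Sigma_inv, sigma2, m, v):
    """Mean and variance of theta_k given all other quantities (CP)."""
    one = np.ones(beta_tilde[k].shape[0])
    prior_prec = 1.0 / (sigma2[k] * v[k])
    var = 1.0 / (one @ Sigma_inv[k] @ one + prior_prec)
    mean = var * (one @ Sigma_inv[k] @ beta_tilde[k] + m[k] * prior_prec)
    return mean, var

def cp_sigma2_k_params(k, beta_tilde, theta, R_inv, m, v, a, b):
    """Shape and scale of the inverse gamma full conditional of sigma2_k (CP)."""
    dev = beta_tilde[k] - theta[k]
    shape = (dev.shape[0] + 1) / 2 + a[k]
    scale = 0.5 * (dev @ R_inv[k] @ dev + (theta[k] - m[k]) ** 2 / v[k] + 2 * b[k])
    return shape, scale

def draw_inverse_gamma(shape, scale, rng):
    """If G ~ Gamma(shape, 1), then scale / G ~ IG(shape, scale)."""
    return scale / rng.gamma(shape)

# Toy check with a single process (p = 1), D_0 = I and Sigma_0 = R_0 = I.
n = 3
y = np.array([1.0, 2.0, 3.0])
D, Sigma_inv, R_inv = [np.eye(n)], [np.eye(n)], [np.eye(n)]
theta, beta_tilde = np.array([2.0]), [y.copy()]
mean_b, cov_b = cp_beta_conditional(0, y, D, beta_tilde, theta, Sigma_inv, sigma2_eps=1.0)
mean_t, var_t = cp_theta_conditional(0, beta_tilde, Sigma_inv, sigma2=[1.0], m=[0.0], v=[1.0])
shape, scale = cp_sigma2_k_params(0, beta_tilde, theta, R_inv, m=[0.0], v=[1.0], a=[2.0], b=[1.0])
sigma2_draw = draw_inverse_gamma(shape, scale, np.random.default_rng(1))
```

In this toy case the conditional mean of \(\tilde{\varvec{\beta }}_{0}\) is the average of \(\varvec{y}\) and \(\theta _{0}\varvec{1}\), as expected when the data and prior precisions are equal. A draw from a Gaussian full conditional could then be obtained with `rng.multivariate_normal(mean_b, cov_b)`; for large n a Cholesky-based solve would usually be preferred to the explicit inverse used here for readability.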

1.2 Posterior distributions for the NCP

We now look at the joint posterior and full conditional distributions of the model parameters for the NCP. For the NCP, we have \(\varvec{\xi }=(\varvec{\beta }^{ \mathrm {\scriptscriptstyle T} },\varvec{\theta }^{ \mathrm {\scriptscriptstyle T} },\varvec{\sigma ^2}^{ \mathrm {\scriptscriptstyle T} },\sigma ^2_{\epsilon },\varvec{\phi }^{ \mathrm {\scriptscriptstyle T} })^{ \mathrm {\scriptscriptstyle T} }\), and

where we define \(\varvec{x}_{0}\) to be the vector of ones.

The full conditional distributions for the NCP are given below:

- The full conditional distribution for the non-centred spatially correlated random effects \(\varvec{\beta }_{k}\), \(k=0,\ldots ,p-1\), is

$$\begin{aligned} \varvec{\beta }_{k}|\varvec{\beta }_{-k},\varvec{\theta },\varvec{\sigma ^2},\sigma ^2_{\epsilon },\varvec{\phi },\varvec{y}\sim N\left( \varvec{m}_{k}^{*},\varvec{\Sigma }^{*}_{k}\right) , \end{aligned}$$

where

$$\begin{aligned} \varvec{\Sigma }^{*}_{k}= & {} \left( \frac{1}{\sigma ^2_{\epsilon }}\varvec{D}^{ \mathrm {\scriptscriptstyle T} }_{k}\varvec{D}_{k}+ \varvec{\Sigma }^{-1}_{k}\right) ^{-1},\\ \varvec{m}^{*}_{k}= & {} \varvec{\Sigma }^{*}_{k}\left[ \frac{1}{\sigma ^2_{\epsilon }}\varvec{D}^{ \mathrm {\scriptscriptstyle T} }_{k} \left( \varvec{y}-\sum ^{p-1}_{\begin{array}{c} j=0 \\ j\ne k \end{array}}\varvec{D}_{j}\varvec{\beta }_{j}-\sum ^{p-1}_{j=0}\varvec{x}_{j}\theta _{j}\right) \right] . \end{aligned}$$

- The full conditional distribution for the global effects \(\theta _{k}\), \(k=0,\ldots ,p-1\), for the NCP is

$$\begin{aligned} \theta _{k}|\varvec{\beta },\varvec{\theta }_{-k},\varvec{\sigma ^2},\sigma ^2_{\epsilon },\varvec{\phi },\varvec{y}\sim N\left( m_{k}^{*},v^{*}_{k}\right) , \end{aligned}$$

where

$$\begin{aligned} v^{*}_{k}= & {} \left( \frac{1}{\sigma ^2_{\epsilon }}\varvec{x}_{k}^{{ \mathrm {\scriptscriptstyle T} }}\varvec{x}_{k}+\frac{1}{\sigma ^2_{k}v_{k}}\right) ^{-1},\\ m^{*}_{k}= & {} v^{*}_{k}\left[ \frac{1}{\sigma ^2_{\epsilon }}\varvec{x}_{k}^{ \mathrm {\scriptscriptstyle T} }\left( \varvec{y}-\sum ^{p-1}_{j=0}\varvec{D}_{j}\varvec{\beta }_{j}-\sum ^{p-1}_{\begin{array}{c} j=0 \\ j\ne k \end{array}}\varvec{x}_{j}\theta _{j}\right) + \frac{m_{k}}{\sigma ^2_{k}v_{k} }\right] . \end{aligned}$$

- The full conditional distribution for the random effects variance \(\sigma ^2_{k}\), \(k=0,\ldots ,p-1\), for the NCP is

$$\begin{aligned}&\sigma ^2_{k}|\varvec{\beta },\varvec{\theta },\varvec{\sigma ^2}_{-k},\sigma ^2_{\epsilon },\varvec{\phi },\varvec{y}\sim IG\bigg \{ \frac{n+1}{2}+a_{k},\\&\quad \frac{1}{2}\left( \varvec{\beta }_{k}^{ \mathrm {\scriptscriptstyle T} }\varvec{R}^{-1}_{k}\varvec{\beta }_{k}+\frac{(\theta _{k}-m_{k})^2}{v_{k}}+2b_{k}\right) \bigg \} . \end{aligned}$$

- The full conditional distribution for the data variance \(\sigma ^2_{\epsilon }\) for the NCP is

$$\begin{aligned}&\sigma ^2_{\epsilon }|\varvec{\beta },\varvec{\theta },\varvec{\sigma ^2},\varvec{\phi },\varvec{y}\sim IG\Bigg \{\frac{n}{2}+a_{\epsilon },\\&\quad \frac{1}{2}\Bigg [ \left( \varvec{y}-\sum ^{p-1}_{k=0}\Big (\varvec{D}_{k}\varvec{\beta }_{k}+\varvec{x}_{k}\theta _{k}\Big )\right) ^{ \mathrm {\scriptscriptstyle T} }\\&\quad \left( \varvec{y}-\sum ^{p-1}_{k=0}\Big (\varvec{D}_{k}\varvec{\beta }_{k}+\varvec{x}_{k}\theta _{k}\Big )\right) +2b_{\epsilon }\Bigg ] \Bigg \} . \end{aligned}$$
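The Gaussian NCP updates above can be sketched in the same way. Again this is a hedged Python/NumPy illustration with hypothetical function names rather than the authors' code. The residual in the update for \(\varvec{\beta }_{k}\) is premultiplied by \(\varvec{D}_{k}^{ \mathrm {\scriptscriptstyle T} }\), which keeps \(\varvec{m}^{*}_{k}\) an n-dimensional vector.

```python
import numpy as np

def ncp_beta_conditional(k, y, D, x, beta, theta, Sigma_inv, sigma2_eps):
    """Mean and covariance of beta_k given all other quantities (NCP)."""
    p = len(D)
    # Remove all global effects and the other processes j != k from the data.
    resid = y - sum(x[j] * theta[j] for j in range(p))
    resid = resid - sum(D[j] @ beta[j] for j in range(p) if j != k)
    cov = np.linalg.inv(D[k].T @ D[k] / sigma2_eps + Sigma_inv[k])
    mean = cov @ (D[k].T @ resid / sigma2_eps)
    return mean, cov

def ncp_theta_conditional(k, y, D, x, beta, theta, sigma2, sigma2_eps, m, v):
    """Mean and variance of theta_k given all other quantities (NCP)."""
    p = len(D)
    # Remove all random effects and the other global effects j != k from the data.
    resid = y - sum(D[j] @ beta[j] for j in range(p))
    resid = resid - sum(x[j] * theta[j] for j in range(p) if j != k)
    prior_prec = 1.0 / (sigma2[k] * v[k])
    var = 1.0 / (x[k] @ x[k] / sigma2_eps + prior_prec)
    mean = var * (x[k] @ resid / sigma2_eps + m[k] * prior_prec)
    return mean, var

# Toy check with a single process (p = 1): D_0 = I, x_0 = vector of ones.
n = 3
y = np.array([1.0, 2.0, 3.0])
D, x, Sigma_inv = [np.eye(n)], [np.ones(n)], [np.eye(n)]
beta, theta = [np.zeros(n)], np.array([2.0])
mean_b, cov_b = ncp_beta_conditional(0, y, D, x, beta, theta, Sigma_inv, sigma2_eps=1.0)
mean_t, var_t = ncp_theta_conditional(
    0, y, D, x, beta, theta, sigma2=[1.0], sigma2_eps=1.0, m=[0.0], v=[1.0]
)
```

Unlike the CP, the NCP update for \(\theta _{k}\) uses the data \(\varvec{y}\) directly, while the update for \(\varvec{\beta }_{k}\) is centred on zero a priori. In the toy case the conditional mean of \(\varvec{\beta }_{0}\) is half the residual \(\varvec{y}-\theta _{0}\varvec{1}\).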

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article