Abstract

Bayesian inference for Markov processes has become increasingly relevant in recent years. Problems of this type often have intractable likelihoods and prior knowledge about model rate parameters is often poor. Markov Chain Monte Carlo (MCMC) techniques can lead to exact inference in such models but in practice can suffer performance issues including long burn-in periods and poor mixing. On the other hand approximate Bayesian computation techniques can allow rapid exploration of a large parameter space but yield only approximate posterior distributions. Here we consider the combined use of approximate Bayesian computation and MCMC techniques for improved computational efficiency while retaining exact inference on parallel hardware.

Similar content being viewed by others

1 Introduction

Stochastic kinetic models describe the probabilistic evolution of a dynamical system. Motivated by the need to incorporate intrinsic stochasticity in the underlying mechanics of the systems, stochastic models are increasingly used to model a wide range of real-world problems, particularly, but not limited to, computational systems biology, predator–prey population models, and single species population development (Kitano 2002; Boys et al. 2008; Gillespie and Golightly 2010). Systems are governed by a network of reactions which change the state of the system by a discrete amount, and hence are most naturally represented by a continuous time Markov process on a discrete state space. Typically, exact realisations from the model are obtained using the direct method (Gillespie 1977). There are a number of faster, but approximate algorithms, such as the diffusion approximation, moment closure, or hybrid simulation strategies (Gillespie 2000, 2009; Salis and Kaznessis 2005).

Our goal is to perform statistical inference for the parameters that govern the dynamics, where data are partially missing and prior knowledge of reaction rates may be poor. Likelihood functions for such problems are rarely analytically tractable but it is possible to leverage particle Markov chain Monte Carlo (pMCMC) methods to perform exact inference (Golightly and Wilkinson 2011; Andrieu et al. 2010). Typically, pMCMC algorithms exhibit a high computational cost, since at each iteration we run a sequential Monte Carlo (SMC) filter, which requires multiple forward simulations. This cost can become overly prohibitive if convergence is slow.

Approximate Bayesian computation (ABC) techniques, allow posterior inference to be made for problems where evaluation of the likelihood function is unavailable, relying heavily on the ability to simulate from the model (Tavare et al. 1997; Pritchard et al. 1999; Beaumont et al. 2002). An approximation to the true posterior distribution of interest is made via samples of parameter vectors, \(\theta \), that yield simulated data deemed “close” to the observed data. Here the definition of close is controlled by a tolerance, \(\epsilon \), and distance function \(\rho (\cdot )\), hence retained samples are from \(\pi (\theta |\rho (\mathcal {D},\mathcal {D}^{*}) \le \epsilon )\). The simple rejection algorithm typically leads to high numbers of proposed parameter vectors being rejected for meaningful tolerance values (Pritchard et al. 1999). Further developments within this framework lead to MCMC schemes which avoid calculation of the likelihood, as well as sequential Monte Carlo algorithms, which typically exhibit better acceptance rates than the simple rejection sampler (Marjoram et al. 2003; Del Moral et al. 2006; Sisson et al. 2007; Toni et al. 2009). Such techniques have successfully been applied to stochastic kinetic models for approximate inference (Drovandi and Pettitt 2011; Fearnhead and Prangle 2012).

In this article, we explore a scenario where we desire exact posterior inference for model rate parameters, while exploiting parallel hardware. By coupling scalable, approximate and exact techniques, we are able to increase computational efficiency of posterior sampling via parallel particle MCMC and improve convergence properties of chains that suffer expensive burn–in periods to further increase efficiency.

2 Stochastic kinetic models

Consider a network of reactions involving a set of \(u\) species \(\mathcal{X}_{1}, \ldots , \mathcal{X}_{u}\) and \(v\) reactions \(\mathbf {R}_{1}, \ldots , \mathbf {R}_{v}\) where each reaction \(\mathbf {R}_{i}\) is given by

Let \(P\) be the \(v \times u\) matrix of pre–reaction coefficients of reactants \(p_{i,j}\), similarly \(Q\), the \(v \times u\) matrix of post–reaction coefficients of products. The stoichiometry matrix, defined as

is a useful way to encode the structure of the reaction network. Also define \(X_{t}\) to be the vector \((X_{1,t},\ldots ,X_{u,t})\) denoting the number of species \(\mathcal{X}\) present at time \(t\).

Each reaction \(\mathbf {R}_{i}\) is assumed to have an associated rate constant, \(\theta _{i}\), and a hazard function \(h_{i}(X_{t},\theta _{i})\) which gives the overall propensity for a type \(i\) reaction to occur. The form of \(h_{i}(X_{t},\theta _{i})\) in many cases can be considered as arising from interactions between components in a well mixed population. This leads to reactions following the law of mass action kinetics where the hazard function for a reaction of type \(i\) takes the form

If \(\theta = (\theta _{1}, \ldots , \theta _{v})\) and \(h(X_{t},\theta ) = (h_{1}(X_{t},\theta ),\ldots ,\) \(h_{v}(X_{t},\theta _{v}))\) then values for \(\theta \) and \(X_{0}\) complete full specification of this Markov process.

2.1 The direct method

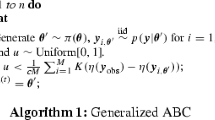

Exact simulations of the evolution of such a system can be obtained via the Direct method (Gillespie 1977). Algorithm 1 describes the procedure for exact forward simulation of a stochastic kinetic model, given its stoichiometry matrix, \(S\), a vector of reaction rates, \(\theta \), an initial state vector \(X_{0}\) and an associated hazard function \(h(X_{t},\theta )\). Reactions simulated to occur via the Direct method incorporate the discrete and stochastic nature of the system since each reaction, which increments or decrements species levels by discrete amounts, are chosen probabilistically.

Less computationally expensive simulation algorithms such as the chemical Langevin equation (CLE) relax the restriction imposed by the discrete state space but retain the stochasticity of the underlying mechanics, giving approximate realisations of the progression of the species (Gillespie 2000). For the purpose of exact inference and this article we consider only exact realisations of the reaction network via the direct method. For a more in depth background into stochastic kinetic modelling see Wilkinson (2011).

We anticipate data, \(\mathcal {D} = (d_{0},d_{1},\ldots ,d_{T})\), to be a collection of noisy, possibly partial observations of the system \(X = (X_{0},X_{1},\ldots ,X_{T})\) at discrete time intervals.

3 Methods for Bayesian inference in intractable likelihood problems

Methods for likelihood-free Bayesian inference have become popular due to the increasing use of models in which the likelihood function is either deemed too computationally expensive to compute, or analytically unavailable. In particular, approximate Bayesian computation (ABC), and particle filtering methods have proven to be two classes of algorithms in which one can proceed with inference in a scenario where evaluation of the likelihood is unavailable, commonly termed likelihood–free inference.

3.1 Approximate Bayesian computation

The goal of approximate Bayesian computation techniques is to obtain a collection of samples from the posterior distribution \(\pi (\theta |\mathcal {D})\) where the likelihood function \(\pi (\mathcal {D}|\theta )\) is unavailable, due to either high computational cost or analytical intractability. Typically we rely on the assumption that simulation from the model given a set of parameters, \(\theta \), is relatively straightforward, as in Algorithm 1. Given a set of proposed parameter vectors we would ideally keep any such vectors which yield data simulations that are equal to the observations that we have. In reality however, we will typically match the simulated data to the observations perfectly with low probability. To avoid rejecting all proposals, we instead keep parameters that yield simulations deemed sufficiently close to the observations. We characterise a set of simulated data, \(\mathcal {D}^{*}\) as sufficiently close if, for a given metric, \(\rho (\cdot )\), the distance between \(\mathcal {D}^{*}\) and observed data \(\mathcal {D}\) is below a tolerance threshold, \(\epsilon \). The simple rejection sampler is described in Algorithm 2.

Rather than leading to a sample from the exact posterior distribution, the samples instead have the distribution \(\pi (\theta |\rho (\mathcal {D},\mathcal {D}^{*}) < \epsilon )\). As \(\epsilon \rightarrow 0\) this tends to the true posterior distribution if \(\rho (\cdot )\) is a properly defined metric on sufficient statistics. Conversely as \(\epsilon \rightarrow \infty \) one recovers the prior distribution on the parameters. Since it is usually the case that sufficient statistics are not available in this type of problem, further approximation can be made by choosing a set of summary statistics, \(S(\cdot )\), which are not sufficient, but it is hoped describe the data well. A set of summary statistics is often used over the observed data in problems in which the dimension of data is large. This is to combat the well–known curse of dimensionality that leads to intolerably poor acceptance rates in this type of scheme. In this case we replace step 3 of Algorithm 2 with a step that first calculates \(S(\mathcal {D}^{*})\) then assigns distance according to \(\rho (S(\mathcal {D}),S(\mathcal {D}^{*}))\). The criteria for forming a good choice of a set of informative summary statistics is beyond the scope of this article and we refer the reader to the following paper which summarises the current research in this area, Blum et al. (2013). Throughout this article we use a Euclidean metric on the simulated data points as we found that this gave sufficient acceptance rates. If a large value of \(\epsilon \) is chosen, a large number of proposed candidates are accepted, but little is learnt about the posterior distribution. Choosing a small tolerance yields a much better approximation to \(\pi (\theta |\mathcal {D})\) but at the expense of poorer acceptance rates and hence increased computational cost. This rejection based approach has been included in a number of sampling techniques.

Within the context of this article we consider a sequential approach to ABC inference. An example of such a scheme, based on importance sampling, is described in Algorithm 3, see Toni et al. (2009) for details.

Algorithm 3 as described here allows us choice of an essentially arbitrary perturbation kernel \(K_{t}(.)\) for preventing degeneracy of particles, and the sequence of tolerances \(\epsilon _{0},\ldots ,\epsilon _{T}\). In practice the choice of \(K_{t}(.)\) will have an important effect on the overall efficiency of the algorithm. A perturbation which gives small moves will typically allow acceptance rates to remain higher, whilst exploring the space slowly. Conversely making large moves allows better exploration of the parameter space, but usually at the cost of reduced acceptance rates. We shall use the ‘optimal’ choice of a random walk perturbation kernel for this algorithm as detailed in Filippi et al. (2013). For a multivariate Gaussian random walk kernel at population \(t\), we choose \(\varSigma ^{(t)}\) as

where \(N\) is the number of weighted samples we have from the previous population \(\pi (\theta |\rho (\mathcal {D},\mathcal {D}^{*}) < \epsilon _{t-1})\) with weights \(\omega ,\, N_{0}\) is the number of those samples, \(\tilde{\theta }\), that satisfy \(\pi (\theta |\rho (\mathcal {D},\mathcal {D}^{*}) < \epsilon _{t})\) with associated normalised weights \(\tilde{\omega }\). This builds on the work of Beaumont et al. (2009).

The choice of sequence of tolerances \(\epsilon _{0},\ldots ,\epsilon _{T}\) also has a large effect on the efficiency of the algorithm. A sequence which decreases too slowly will yield high acceptance rates but convergence to the posterior will be slow. Whereas a rapidly decreasing sequence typically yields poor acceptance rates. We will, throughout this article, use an adaptive choice of \(\epsilon _{t}\) determined by taking a quantile of the distances at \((t-1)\). It should be noted that Silk et al. (2013) observe that there can be issues with convergence when using an adaptive tolerance based on quantiles. However the authors are unaware of a generally accepted solution to this problem.

3.1.1 ABC methods in parallel

Non MCMC–based ABC techniques, such as those described in Algorithms 2 and 3 are often amenable to parallelisation. Since all proposed parameters are independent samples from the same distribution, and the acceptance step for each does not depend on the previously accepted particle, the bulk of computation for a sequential ABC scheme can be run in parallel, greatly reducing overall CPU time required to obtain a sample from the target. Some thread communication is necessary as we move from one distribution in the sequence to the next, something that would be avoided if using the simple rejection sampler, but typically this is small in comparison to the work done in forward simulation.

Coding of a parallel ABC algorithm adds little complexity over a non-parallelised version. This is particularly true for the simple rejection sampler where one effectively just runs a sampler on each of N processors and no communication between threads is needed.

3.2 Markov chain Monte Carlo

Suppose interest lies in \(\pi (\theta |\mathcal {D})\), and that we wish to construct a MCMC algorithm whose stationary distribution is exactly this posterior. Using an appropriate proposal function \(q(\theta ^{*}|\theta )\) we can construct a Metropolis Hastings algorithm to do this as described in Algorithm 4. This can often be impractical due to unavailability of the likelihood term, \(\pi (\mathcal {D}|\theta )\).

In practice one normally chooses a proposal function \(q(\theta ^*|\theta )\) such that proposed moves from \(\theta \) to \(\theta ^*\) are distributed symmetrically about \(\theta \) giving rise to random walk Metropolis Hastings. Typical choices include a uniform \(\mathcal {U}(\theta - \sigma , \theta + \sigma )\) or Gaussian \(\mathcal {N}(\theta ,\varSigma )\) distribution. In this article we shall consider Gaussian innovations for random walk proposals. It has been shown that under various assumptions about the target, the optimal scaling for a random walk Metropolis algorithm using a Gaussian proposal, \(\varSigma _q\), for a \(d\) dimensional distribution is

where \(\varSigma \) is the posterior covariance (Roberts et al. 1997; Roberts and Rosenthal 2001). Typically, however, the posterior covariance is unavailable.

3.2.1 Parallel MCMC

MCMC algorithms are somewhat less amenable to parallelisation than rejection sampling algorithms. Essentially there are two options to be considered, parallelisation of a single MCMC chain or the construction of parallel chains. For an in depth discussion see Wilkinson (2006). Parallelisation of a single chain in many scenarios is somewhat difficult due to the inherently iterative nature of a Markov chain sampler and will not be discussed in detail here. Running parallel chains, however, is straightforward.

3.2.2 Parallel chains

In practice, chains initialised with an arbitrary starting point will be, after an appropriate burn-in period, essentially sampling from the target. In the context of a serial implementation there is still some debate over whether it is better to run a single long chain, or many shorter chains. The benefits of the single chain are that any burn in period is only suffered once, whereas the argument for multiple shorter chains is that one may better diagnose convergence to the stationary distribution. The argument changes when considering parallel implementation. Indeed, if burn-in periods are relatively short, running independent chains on separate processors can be a very time efficient way of learning about the distribution of interest.

Burn–in is still a potential limiter on the scaling of the performance to be had when employing parallel chains with the number of processors. The greater the burn–in period of a chain, the more time each processor has to waste computing samples that will eventually be thrown away. The theoretical speed up calculation given \(N\) processors to obtain \(n\) stored samples with a burn–in period \(b\) is

which is clearly limited for any \(b>0\), as \(N \rightarrow \infty \) (Wilkinson 2006). A “perfect” parallelisation of multiple chains then is one in which we have no burn–in period, i.e initialise each chain with an independent draw from the target. The performance gain when run on \(N\) processors is then of factor \(N\). Clearly this “perfect” situation will not be possible to implement in practice since we typically are unable to sample from the target.

3.2.3 Particle MCMC

Re-consider the Metropolis Hastings MCMC algorithm from Sect. 3.2. The ability to construct such a scheme relies on the evaluation of the likelihood function \(\pi (\mathcal {D}|\theta )\) among others. Crucially, the problems of interest discussed in this article are such that the likelihood term \(\pi (\mathcal {D}|\theta )\) is unavailable. However Andrieu and Roberts (2009) proposed a pseudo marginal MCMC approach to this problem. In the acceptance ratio of the MCMC scheme, replace the intractable likelihood, \(\pi (\mathcal {D}|\theta )\), with a non–negative Monte Carlo estimate. It can be shown that, provided \(E[\hat{\pi }(\mathcal {D}|\theta )] = \pi (\mathcal {D}|\theta )\), the stationary distribution of the resulting Markov chain is exactly the distribution of interest. In the context of inference for state space models, it is natural to make use of sequential Monte Carlo techniques through a bootstrap particle filter, (Doucet et al. 2001), to obtain estimates of the likelihood \(\hat{\pi }(\mathcal {D}|\theta )\). The bootstrap particle filter for application to Markov processes is described in Algorithm 5.

Substituting the estimate of \(\hat{\pi }(\mathcal {D}|\theta )\) in place of the likelihood function yields a MH algorithm with acceptance probability

It turns out that the particle filter’s estimate of marginal likelihood is unbiased, giving rise to an “exact approximate” pseudo-marginal MCMC algorithm. This use of a SMC sampler within a pseudo-marginal algorithm is a special case of the particle marginal Metropolis Hastings (PMMH) algorithm described in Andrieu et al. (2010), which in general can be used to target the full joint posterior on the state and rate \(\pi (\theta ,X|\mathcal {D})\). In this article we consider only the pseudo-marginal special case. As discussed in section 3.2.1 this type of scheme does not lend itself well to parallelisation unless we have the opportunity to initialise chains with a sample from the posterior distribution \(\pi (\theta |\mathcal {D})\). We recognise that rather sophisticated parallel particle filter algorithms which have the potential for increasing the speed of moves within a single chain are available. However the speed up is limited by necessary communication and synchronisation between processors and typically do not scale well.

The pseudo–marginal MCMC scheme requires specification of a number of particles, \(N\), to be used in the boot–strap filter. It was noted, (Andrieu and Roberts 2009), that the efficiency of the scheme decreases as the variance of the estimated marginal likelihood increases. This can be overcome by increasing the value of \(N\), albeit at an increased computational cost. An optimal choice of \(N\) was the subject of Pitt et al. (2012) and Doucet et al. (2012). The former suggest that \(N\) should be chosen such that the variance in the estimated log–likelihood is around 1 while the latter show that the efficiency penalty is small for values between 0.25 and 2.25.

3.3 Combined use of ABC and pMCMC

Since it is typically not possible to initialise a MCMC chain with a draw from the desired target, we propose an approach to parallel MCMC by choosing initial parameter vectors according to samples from an approximate posterior distribution. The intuition is that if we have a reasonable approximation to the target of interest, samples from the approximation will closely match those from \(\pi (\theta |\mathcal {D})\). Because of this we expect that after a very short burn–in we are sampling from the desired target. Hence we are approaching the scenario of near perfect parallelisation of MCMC. Clearly the better the approximation the shorter the burn-in for each chain will be.

If we first run an ABC scheme targeting an approximation to this distribution, we can exploit parallel hardware to eliminate a large region of prior parameter space very quickly. Then take a set of \(N\) independent samples from \(\pi (\theta |\rho (\mathcal {D},\mathcal {D}^{*}) < \epsilon )\) to initialise \(N\) independent, parallel, pMCMC chains each targeting the exact posterior distribution \(\pi (\theta |\mathcal {D})\).

We can in some sense consider this process of obtaining a sample from an ABC posterior as an artificial burn–in period. Crucially however, since the ABC algorithm yields a large set of samples from \(\pi (\theta |\rho (\mathcal {D},\mathcal {D}^{*}) \le \epsilon )\) the computational price of performing the artificial burn–in has only to be paid once and can be parallelised. With a set of samples we can initialise an arbitrary number of MCMC chains each with an independent parameter vector \(\theta _{0}\) which comes from a distribution close to the target \(\pi (\theta |\mathcal {D})\).

Since ABC is being used only for initialisation, it is not used as a prior for the MCMC algorithm, nor does it form part of the proposal. Hence, the ABC approximation does not affect the exactness of the pMCMC target.

3.4 Random walk pMCMC using ABC

We now refer back to the optimal choice of Gaussian random walk kernel of Roberts and Rosenthal (2001) mentioned in Sec. 3.2. Since we hope that we start with a reasonable approximation to the true posterior, we likewise consider that the covariance of a sample from \(\pi (\theta |\rho (\mathcal {D},\mathcal {D}^{*}) < \epsilon )\) denoted here \(\varSigma _\mathtt{ABC }\), will be close to the covariance of true posterior samples. This allows us to use the approximate posterior covariance structure as an informative tool for calibrating the random walk innovations. In most MCMC applications, since the true posterior variance is unknown, \(\varSigma _{q}\) requires tuning, typically from pilot runs. In the case of using \(\varSigma _\mathtt{ABC }\) we hope that we can also remove the necessity to tune \(\varSigma _{q}\). In practice we take

In addition we use our approximation to automatically tune the number of particles required for the particle filter. In practice we take the average of the approximate distribution, \(\bar{\theta }_\mathtt{ABC }\) to calculate the number of particles required to satisfy

in line with Sherlock et al. (2013). We do this by running the particle filter a number of times with varying numbers of particles until the condition is satisfied. The hybrid ABC pMCMC algorithm is outlined in Algorithm 6.

4 Applications

4.1 The Lotka–Volterra system

The Lotka–Volterra predator–prey system is a simple stochastic kinetic model (Lotka 1952; Volterra 1926). The system is characterised by a set of three reactions detailing interactions between two species; prey \(X\) and predators \(Y\):

The reactions \(\mathbf {R}_{1},\, \mathbf {R}_{2}\) and \(\mathbf {R}_{3}\) can be thought of as prey birth, an interaction resulting in a prey death and predator birth, and predator death respectively. Whilst this is a relatively simple example of a model in this context, it highlights many of the difficulties associated with more complex systems. We summarise the system by its stoichiometry matrix, \(S\) and hazard function \(h(\mathbf {X}_{t},\theta )\):

Figure 1(a) is a set of synthetic, noisy observations of the two species simulated using the Gillespie algorithm with true parameter values \(\log (\theta ) = (0,-5.30,-0.51)\) together with a Gaussian measurement error structure, \(d_{j,t} \sim \mathcal {N}(X_{j,t},10^{2})\).

4.1.1 pMCMC for Lotka–Volterra

Investigating computational issues with pMCMC for the Lotka–Volterra model defined in Sect. 4.1. a The true underlying synthetic data set. Species are observed at discrete time points and corrupted with \(N(0, 10^{2})\) noise. b Twelve trace plots of \(\theta _{1}\) from pMCMC chains initialised with random draws from the prior (see expression 13). The chains fail to explore the space. c shows the median and 95% interval for estimates of the log-likelihood from the particle filter for varying \(\theta _{1}\) close to the true value, for \(\theta _{2}\) and \(\theta _{3}\) fixed at the true values. d shows that the variance of log-likelihood estimates increases away from the true values

Exact parameter inference is possible for this system using a pMCMC scheme; provided the chain is initialised near the posterior mode (Wilkinson 2011). However under poor initialisation, a pMCMC scheme will perform very badly.

Suppose we have little prior knowledge on the rate parameters,

We take a prior distribution for the initial state \(X_{0}\) on each individual species as Poisson distributions with rate parameter equal to the true initial conditions,

Further we assume that the variance, \(\sigma ^{2}\), of the Gaussian measurement error is known.

Using a Gaussian random walk proposal on \(\log (\theta )\), \(q(\log (\theta )^{*}|\log (\theta ))\) we construct a MH algorithm using a bootstrap particle filter, targeting \(\pi (\theta |\mathcal {D})\) as in Algorithm 4. Figure 1(b) shows that when initialising the chain using random draws from the weakly informative prior, the chains do not explore the space, failing to converge. Further investigation shows why this is happening. Figure 1c, d show that away from the true values, the variance of the log–likelihood estimates from the bootstrap particle filter increases sharply. Figure 1 (c) shows the \(95\%\) interval of log–likelihood estimates over 100 runs of a particle filter using 150 particles. Figure 1 (d) shows that even close to the true value, \(\theta _{1} = 1\), the variance of the log-likelihood estimates increases dramatically with a small move. In both Fig. 1c, d the other two parameters were kept fixed at the true values. When we are in a region with neligible likelihood the chain has a tendency to stick as a result of the variability in the likelihood estimates. On top of this, small proposed moves can lead to large variation in the estimated likelihood, leading to poor exploration of the tails in the posterior distribution. Whilst we are guaranteed to eventually converge to the stationary distribution, the required computational cost, without carefully thought out initialisation, could be very high. We note that this is not a failure of the theory or algorithm, but a consequence of the sensitivity to initialisation of parameter values experienced in this type of model.

We therefore apply the proposed ABC initialisation for a “perfect” parallel pMCMC scheme.

4.1.2 Results for the Lotka–Volterra model using a hybrid approach

We use a sequence of seven distributions \(\pi (\theta |\rho (\mathcal {D},\mathcal {D}^{*}) < \epsilon _{t}),\, t = 0,\ldots ,6\) to obtain our approximation to the posterior. For each population \(t\) we take \(\epsilon _{t}\) as the 0.3 quantile of the distribution of distances from the samples at \(t-1\) and propose candidates until we reach a sample of 1,000 for each \(t\). At each stage we perform the forward simulation of the model given proposed parameter values in parallel. Figure 2 (a) shows summaries for log of the reaction 1 rate parameter \(\log (\theta _{1})\) for each of the distributions in the series through the sequential ABC. The distributions quickly remove a large region of space that, had we sampled from the prior distribution to initialise the chain, are likely to have been poor starting points. The scheme converges around the true value \(\log (\theta _{1}) = 0\). Given the sample from \(\pi (\theta |\rho (\mathcal {D},\mathcal {D}^{*}) < \epsilon _{6})\) we initialise eight MCMC chains on separate processors with random draws. The results in Fig. 2(b, c, d) are then, the 20,000 pooled samples from the eight independent parallel chains, each of which has been thinned by a factor of 100 to give 2,500 samples. Each chain is sampling from the same stationary distribution as seen in the trace plot for \(\theta _{1}\), Fig. 2 (c), and mixing is good, Fig. 2 (b). Further the true parameter values, \(\log (\theta ) = (0,-5.30,-0.51)\), used to simulate the data are well identified within the posterior densities, Fig. 2 (d).

Analysis of results for the synthetic data for the Lotka–Volterra model. a are successive distributions of \(\log (\theta _{1})\) in the sequential ABC scheme, Algorithm 3. b shows autocorrelations for chain 1, representative of each of the parallel chains, and c are traces of eight parallel MCMC chains for \(\log (\theta _{1})\). Note that each chain is sampling from the same stationary distribution and mixing appears good. d are the posterior densities for \(\log (\theta )\), each chain leads to a posterior density plot that is very close to that of every other chain. True values \(\log (\theta ) = (0.0,-5.30,-0.51)\) are well identified

4.2 Real-data problem: aphid model

Next we consider a model of aphid population dynamics as proposed in Matis et al. (2007). The system can be represented by the following reactions:

and summarised in terms of its stoichiometry matrix \(S\), and hazard function \(h(\mathbf {X}_{t},\theta )\),

\(\mathbf {X}_{0} = (N_{0},C_{0}),\, \theta = (\lambda ,\mu )\).

\(N_{t}\) and \(C_{t}\) are the numbers of aphids alive at time \(t\) and the cumulative number of aphids that have lived up until time \(t\) respectively. In the first reaction, when a new aphid is introduced into the system we get an increase in both the current number of aphids and the cumulative count. When an aphid is removed the number of aphids decreases by one but the cumulative count remains the same. Aphid death is considered to be proportional not only to the number of aphids currently in the system, but also to the cumulative count, representing the idea that over time they are making their own environment less habitable.

Given initial conditions \(\mathbf {X}_{0} = (N_{0},C_{0})\) and a set rate of parameters \(\theta \), we can simulate from the model. However the difficulty in practice with the model presented here is that we will never have observations of the cumulative count. Observations then are noisy, discrete time measurements of just a single species of the system.

4.2.1 Aphid data

We now consider the data described in Matis et al. (2008) consisting of cotton-aphid counts for twenty seven treatment-block combinations. The treatments consisted of three nitrogen levels (blanket, variable and none), three irrigation levels (low, medium and high) and three blocks. The sampling times of the data are \(t = 0, 1.14, \,2.29,3.57,4.57\) weeks, or every seven to eight days. We restrict our investigation to a single treatment combination, three data sets with blanket nitrogen level and low irrigation. If we denote the block by \(i \in \{1,2,3\}\) then the data \(\mathcal {D}_{i}\) is the number of aphids, \(N\), in block \(i\) at each time \(t\). The data are plotted in Fig. 3(a).

We make the assumption that the counts are observed with error such that

and use a set of weakly informative priors on the rate parameters \(\theta \)

We place a prior of the form

to reflect the fact that we are unable to measure \(C_{0}\).

We treat the three sets of observations as repeats of the same experiment. Likelihood estimates are obtained by running a particle filter for each of the three data sets and taking the product of the individual estimates. A full treatment of all twenty-seven data sets using a fixed effects model can be found in Gillespie and Golightly (2010). We consider the initial aphid counts to be the true values, as in Gillespie and Golightly (2010), on the basis that there should be no error in counting such small populations.

Analysis of the real data for the aphid growth model. a There are the three data sets of aphid counts each with of five observations. b The posterior predictive model fit given a sample from the collection of posterior densities. c, d output from each MCMC chain, highlight that we are sampling from the same stationary distribution

4.2.2 Results for the aphid growth model

We use the same criteria for the choice of \(\epsilon _{t}\) for the ABC section of the inference as with the Lotka–Volterra model in 4.1.2, namely the 0.3 quantile of the distribution of distances. A sequence of five distributions gives us 1000 samples from \(\pi (\theta |\rho (\mathcal {D},\mathcal {D}^{*}) < \epsilon _{4})\) which we use to initialise eight parallel chains. We record 20,000 samples from the exact target posterior \(\pi (\theta |\mathcal {D})\) after appropriate thinning. Figure 3(c and d) shows the analysis of the MCMC chains. Again we find that each chain is sampling from the same target and posterior densities are very close from all eight chains. Figure 3(b) shows posterior predictive quantiles given a sample from the pooled posterior distribution. Model fit appears to be reasonable. The results are consistent with those seen in Gillespie and Golightly (2010) where they assume that observations are made without error and make use of an approximate simulation algorithm for realisations of the model.

4.3 Gene expression

Finally, we consider a simple gene regulation model characterised by three species (DNA, mRNA, denoted R, and protein, P) and four reactions. The reactions represent transcription, mRNA degradation, translation and protein degradation respectively. The system has been analysed by Komorowski et al. (2009) and Golightly et al. (2014) among others:

with stoichiometry matrix \(\mathbf {S}\), and hazard function \(h(\mathbf {X}_{t},\theta )\)

where \(\mathbf {X}_{t} = (R_{t}, P_{t})\) and \(\theta = (\gamma _{R}, \kappa _{P}, \gamma _{P}, b_{0}, b_{1}, b_{2}, b_{3})\) where we note that, as in Komorowski et al. (2009), we take \(\kappa _{R,T}\) to be the time dependent transcription rate. Specifically,

such that the transcription rate increases for \(t < b_{2}\) and tends towards a baseline value, \(b_{3}\), for \(t > b_{2}\). As above the goal is inference on the unknown parameter vector, \(\theta \). In keeping with inference in Komorowski et al. (2009) we create a data poor scenario, 100 observations of synthetic data simulated given initial conditions \(X_{0} = (10,150)\) and parameter values \((0.44,0.52,10,15,0.4,7,3)\) corrupted with measurement error, \(Y_{t} \sim \mathcal {N}(X_{t},I\sigma ^{2}),\, \sigma = 10\), with observations on the mRNA discarded. The data is shown in Fig. 4(a).

a is the noisy pseudo-data for the Protein levels in the model. The other plots show the individual densities from pMCMC chains after appropriate thinning having been initialised via an ABC run as described in Sect. 3.4. The plots clearly show that each of the chains are in agreement with regard to sampling from the stationary distribution

We follow Komorowski et al. (2009) by assuming the same prior distributions, including informative priors for the degradation rates to ensure identifiably. Specifically

where \(\varGamma (a,b)\) is the gamma distribution with mean \(a/b\) and \(\mathrm{Exp }(a)\) is the Exponential distribution with mean 1/a.

For simplicity we assume that both the initial state, \(X_{0} = (10,150)\), and the measurement error standard deviation, \(\sigma = 10\), are known.

4.3.1 Results for gene expression data

We follow the same procedure as with the two examples above. Using a sequential ABC run to obtain a sample of 1,000 parameters vectors distributed according to the approximate posterior. We then use eight random draws from the final ABC sample to initialise the parallel pMCMC chains with tuning parameters chosen as described in Sect. 3.4. The posterior densities, a sample of 4000 from each chain having been subjected to appropriate thinning, are shown in Fig. 4. It is clear that each of the chains is sampling from the same target giving us confidence in the resulting densities. The posteriors obtained are consistent with those in Golightly et al. (2014) and true parameter values are well identified. Figure 5 shows that the sample from the final iteration of the sequential ABC algorithm is markedly different from that in the pMCMC algorithm. There is a measurable improvement in using this type of scheme over using solely ABC in this way. We characterise the difference shown here as an improvement due to the fact that we know that pMCMC is asymptotically exact.

A comparison between the final sample using the ABC SMC algorithm and the pMCMC for the gene regulation model for the four parameters in the time dependent hazard. The plot shows that their is a distinct difference between the two posterior samples. Plots for the other three parameters show similar but are omitted here

5 Discussion

We have proposed an approach to inference for Markov processes that is asymptotically exact and combines the relative strengths of ABC and pMCMC methodology to increase computational efficiency through use of parallel hardware. Through use of an approximation to the posterior distribution of interest, obtained via a sequential ABC algorithm which is easy to parallelise, we can set up a parallel implementation of pMCMC which has numerous desirable properties. By enabling the construction of independent parallel chains initialised close to the stationary distribution, this enables fast convergence and sampling from an exact posterior distribution that scales well with available computational resources. Throughout our analyses we have made use of parallel computation, however we believe that the proposed approach will also be of interest in situations where parallel hardware is not available, as it still addresses the pMCMC initialisation and tuning problem. Algorithmic tuning parameters required for pMCMC, such as the variance of Gaussian random walk proposals and numbers of particles for the particle filter can be chosen without the need for additional pilot runs, as a consequence of having a sample from an ABC posterior. In addition, independent parallel chains allow verification of convergence and the computational saving in burn–in times extends to repeat MCMC analyses.

We have demonstrated this approach by applying it to three stochastic kinetic models. With the Lotka–Volterra predator prey system, a relatively simple model in which both species can be observed, we highlighted clear issues with practical implementation of a pseudo-marginal approach in a scenario in which prior information on reaction rate parameters is poor. This issue can be alleviated by first obtaining a sample from an approximation to the posterior, then using it to guide an exact pMCMC scheme. The approach discussed performed similarly well in the application to a set of real data for a model for aphid growth dynamics in which one of the species in the system can never be observed, where we again imposed weak prior conditions on the rate parameters governing the system and had access to repeat data. Finally we applied the scheme to a gene regulation model in which we had partially observed data and rate parameters were not all time homogeneous. The analyses of results show that we can verify that we are sampling from the same target distribution adding to our belief that we have converged to the true posterior of interest in each case.

References

Andrieu, C., Roberts, G.O.: The pseudo-marginal approach for efficient Monte Carlo computations. Ann. Stat. 37(2), 697–725 (2009)

Andrieu, C., Doucet, A., Holenstein, R.: Particle Markov chain Monte Carlo methods. J. Royal Stat. Soc. 72(3), 269–342 (2010)

Beaumont, M.A., Zhang, W., Balding, D.J.: Approximate Bayesian computation in population genetics. Genetics 162(4), 2025–2035 (2002)

Beaumont, M.A., Cornuet, J.M., Marin, J.M., Robert, C.P.: Adaptive approximate Bayesian computation. Biometrika 96(4), 983–990 (2009)

Blum, M.G., Nunes, M.A., Prangle, D., Sisson, S.A., et al.: A comparative review of dimension reduction methods in approximate bayesian computation. Stat. Sci. 28(2), 189–208 (2013)

Boys, R.J., Wilkinson, D.J., Kirkwood, T.B.: Bayesian inference for a discretely observed stochastic kinetic model. Stat. Comput. 18(2), 125–135 (2008)

Del Moral, P., Doucet, A., Jasra, A.: Sequential Monte Carlo samplers. J. Royal Stat. Soc. 68(3), 411–436 (2006)

Doucet, A., de Freitas, N., Gordon, N.: Sequential Monte Carlo Methods in Practice. Springer, New York (2001)

Doucet, A., Pitt, M., Kohn, R.: Efficient implementation of Markov chain Monte Carlo when using an unbiased likelihood estimator (2012). arXiv:12101871.

Drovandi, C.C., Pettitt, A.N.: Estimation of parameters for macroparasite population evolution using approximate Bayesian computation. Biometrics 67(1), 225–233 (2011)

Fearnhead, P., Prangle, D.: Constructing summary statistics for approximate Bayesian computation: semi-automatic approximate Bayesian computation. J. Royal Stat. Soc. 74(3), 419–474 (2012)

Filippi, S., Barnes, C.P., Cornebise, J., Stumpf, M.P.: On optimality of kernels for approximate bayesian computation using sequential monte carlo. Stat. Appl. Genet. Mol. Biol. 12(1), 87–107 (2013)

Gillespie, C.S.: Moment-closure approximations for mass-action models. IET Syst. Biol. 3(1), 52–58 (2009). doi:10.1049/iet-syb:20070031

Gillespie, C.S., Golightly, A.: Bayesian inference for generalized stochastic population growth models with application to aphids. J. Royal Stat. Soc. 59(2), 341–357 (2010)

Gillespie, D.T.: Exact stochastic simulation of coupled chemical reactions. J. Phys. Chem. 81(25), 2340–2361 (1977)

Gillespie, D.T.: The chemical Langevin equation. J. Chem. Phys. 113(1), 297–306 (2000)

Golightly, A., Wilkinson, D.J.: Bayesian parameter inference for stochastic biochemical network models using particle Markov chain Monte Carlo. Interface Focus 1(6), 807–820 (2011)

Golightly, A., Henderson, D., Sherlock, C.: Delayed acceptance particle mcmc for exact inference in stochastic kinetic models. Stat. Comput. pp 1–17 (2014). doi:10.1007/s11222-014-9469-x

Kitano, H.: Computational systems biology. Nature 420(6912), 206–210 (2002)

Komorowski, M., Finkenstädt, B., Harper, C.V., Rand, D.A.: Bayesian inference of biochemical kinetic parameters using the linear noise approximation. BMC Bioinform 10(1), 343 (2009)

Lotka, A.J.: Elements of Physical Biology. Williams & Wilkins Baltimore, Baltimore (1925)

Marjoram, P., Molitor, J., Plagnol, V., Tavaré, S.: Markov chain Monte Carlo without likelihoods. Proceedings of the National Academy of Sciences 100(26):15,324–15,328 (2003)

Matis, J.H., Kiffe, T.R., Matis, T.I., Stevenson, D.E.: Stochastic modeling of aphid population growth with nonlinear, power-law dynamics. Math. Biosci. 208(2), 469–494 (2007)

Matis, T.I., Parajulee, M.N., Matis, J.H., Shrestha, R.B.: A mechanistic model based analysis of cotton aphid population dynamics data. Agric. For. Entomol. 10(4), 355–362 (2008)

Pitt, M.K., RdS, Silva: On some properties of Markov chain Monte Carlo simulation methods based on the particle filter. J. Econ. 171(2), 134–151 (2012)

Pritchard, J.K., Seielstad, M.T., Perez-Lezaun, A., Feldman, M.W.: Population growth of human y chromosomes: a study of y chromosome microsatellites. Mol. Biol. Evol. 16(12), 1791–1798 (1999)

Roberts, G.O., Rosenthal, J.S.: Optimal scaling for various Metropolis-Hastings algorithms. Stat. Sci. 16(4), 351–367 (2001)

Roberts, G.O., Gelman, A., Gilks, W.R.: Weak convergence and optimal scaling of random walk metropolis algorithms. Ann. Appl. Probab. 7(1), 110–120 (1997)

Salis, H., Kaznessis, Y.: Accurate hybrid stochastic simulation of a system of coupled chemical or biochemical reactions. J. Chem. Phys. 122(054), 103 (2005)

Sherlock, C., Thiery, AH., Roberts, GO., Rosenthal, JS.: On the efficiency of pseudo-marginal random walk Metropolis algorithms. arXiv:13097209 (2013)

Silk, D., Filippi, S., Stumpf, M.P.: Optimizing threshold-schedules for sequential approximate Bayesian computation: applications to molecular systems. Stat Appl Genet Mol Biol 12(5), 603–618 (2013)

Sisson, S., Fan, Y., Tanaka, M.M.: Sequential Monte Carlo without likelihoods. Proc. Natl. Acad. Sci. 104(6), 1760–1765 (2007)

Tavare, S., Balding, D.J., Griffiths, R., Donnelly, P.: Inferring coalescence times from dna sequence data. Genetics 145(2), 505–518 (1997)

Toni, T., Welch, D., Strelkowa, N., Ipsen, A., Stumpf, M.P.: Approximate Bayesian computation scheme for parameter inference and model selection in dynamical systems. J. Royal Soc. Interface 6(31), 187–202 (2009)

Volterra, V.: Fluctuations in the abundance of a species considered mathematically. Nature 118, 558–560 (1926)

Wilkinson, D.J.: Parallel Bayesian computation, Statistics Textbooks and Monographs, vol 184. MARCEL DEKKER AG (2006)

Wilkinson DJ (2011) Stochastic modelling for systems biology, Chapman & Hall/CRC mathematical biology and medicine series, vol 44, 2nd edn. CRC Press

Acknowledgments

The authors gratefully acknowledge the referees for their detailed and constructive comments. JO was supported by an EPSRC studentship.

Author information

Authors and Affiliations

Corresponding author

Additional information

R-package: https://github.com/csgillespie/abcpmcmc.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

About this article

Cite this article

Owen, J., Wilkinson, D.J. & Gillespie, C.S. Scalable inference for Markov processes with intractable likelihoods. Stat Comput 25, 145–156 (2015). https://doi.org/10.1007/s11222-014-9524-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11222-014-9524-7