Abstract

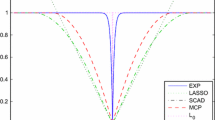

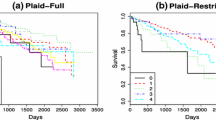

In high-dimensional data analysis, penalized likelihood estimators are shown to provide superior results in both variable selection and parameter estimation. A new algorithm, APPLE, is proposed for calculating the Approximate Path for Penalized Likelihood Estimators. Both convex penalties (such as LASSO) and folded concave penalties (such as MCP) are considered. APPLE efficiently computes the solution path for the penalized likelihood estimator using a hybrid of the modified predictor-corrector method and the coordinate-descent algorithm. APPLE is compared with several well-known packages via simulation and analysis of two gene expression data sets.



Acknowledgements

The authors thank the editor, the associate editor, and referees for their constructive comments. The authors thank Diego Franco Saldaña for proofreading.


Appendices

Appendix A: Logistic regression

A.1 LASSO

In logistic regression, we assume \((\boldsymbol{x}_{i}, y_{i})\), \(i=1,\ldots,n\), are i.i.d. with \(\mathbb{P}(y_{i}=1|\boldsymbol{x}_{i})=p_{i}=\exp(\boldsymbol{\beta}'\boldsymbol{x}_{i})/(1+\exp(\boldsymbol{\beta}'\boldsymbol{x}_{i}))\). Then the target function for the LASSO penalized logistic regression is defined as
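As a minimal sketch, assuming the target function takes the usual form of an averaged negative log-likelihood plus an ℓ1 penalty on the non-intercept coefficients, the objective and its smooth gradient could be evaluated as follows. The function names, the 1/n scaling, and the convention that each row of `X` starts with a 1 (so that `beta[0]` is an unpenalized intercept) are assumptions of this illustration, not definitions taken from the paper.

```python
import math

def log1pexp(t):
    """Numerically stable log(1 + exp(t))."""
    return max(t, 0.0) + math.log1p(math.exp(-abs(t)))

def logistic_lasso_objective(beta, X, y, lam):
    """Averaged negative log-likelihood plus lam * sum_{j>=1} |beta_j|.

    Assumes each row of X starts with a 1, so beta[0] is an
    unpenalized intercept (an assumption of this sketch).
    """
    n = len(y)
    nll = 0.0
    for x_i, y_i in zip(X, y):
        eta = sum(b * v for b, v in zip(beta, x_i))  # beta' x_i
        nll += log1pexp(eta) - y_i * eta
    return nll / n + lam * sum(abs(b) for b in beta[1:])

def logistic_score(beta, X, y):
    """Gradient of the averaged negative log-likelihood:
    -(1/n) * sum_i (y_i - p_i) * x_i."""
    n = len(y)
    grad = [0.0] * len(beta)
    for x_i, y_i in zip(X, y):
        eta = sum(b * v for b, v in zip(beta, x_i))
        p_i = math.exp(eta - log1pexp(eta))  # P(y_i = 1 | x_i), overflow-safe
        for j, v in enumerate(x_i):
            grad[j] -= (y_i - p_i) * v / n
    return grad
```

At \(\boldsymbol{\beta}=\boldsymbol{0}\) every \(p_{i}\) equals 1/2, so the unpenalized part of this objective equals \(\log 2\) regardless of the data, which gives a quick sanity check.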

The KKT conditions are given as follows.

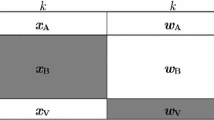
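Conditions of this kind can be checked numerically. The sketch below assumes the standard subgradient form for an ℓ1-penalized smooth loss: the gradient vanishes for the unpenalized intercept, equals \(-\lambda\,\mathrm{sign}(\beta_{j})\) on nonzero coefficients, and is bounded by \(\lambda\) in absolute value on zero coefficients. The function name and the intercept-at-index-0 convention are hypothetical choices made for illustration.

```python
def lasso_kkt_violation(beta, grad, lam):
    """Largest violation of the LASSO stationarity conditions.

    beta[0] is treated as an unpenalized intercept (assumption);
    grad is the gradient of the smooth loss evaluated at beta.
    """
    worst = abs(grad[0])                   # intercept: gradient must vanish
    for b_j, g_j in zip(beta[1:], grad[1:]):
        if b_j != 0.0:
            sign = 1.0 if b_j > 0 else -1.0
            v = abs(g_j + lam * sign)      # active: g_j = -lam * sign(beta_j)
        else:
            v = max(abs(g_j) - lam, 0.0)   # inactive: |g_j| <= lam
        worst = max(worst, v)
    return worst
```

A return value of zero (up to numerical tolerance) indicates that `beta` satisfies the assumed conditions at penalty level `lam`. Under the same assumptions, the smallest `lam` for which the all-zero slope vector is optimal is the largest absolute gradient entry among the penalized coordinates.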

We define the active set \(A_{k}\) as

To update, we define

then \(\boldsymbol{s}^{(k)} = (0, \boldsymbol{s}^{(k)'}_{-0})'\), \(\boldsymbol{d}^{(k)} = (0, \boldsymbol{d}^{(k)'}_{-0})'\), where

To correct,

A.2 MCP

For MCP penalized logistic regression, we define the target function as
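For orientation, the minimax concave penalty in its usual parameterization is \(\lambda|t| - t^{2}/(2\gamma)\) for \(|t|\le\gamma\lambda\) and the constant \(\gamma\lambda^{2}/2\) beyond that point, with derivative \((\lambda - |t|/\gamma)_{+}\) in \(|t|\). The sketch below implements that form; the function names are hypothetical, and whether the paper applies any additional scaling to the penalty is not assumed here.

```python
def mcp_penalty(t, lam, gamma):
    """MCP value at t: lam*|t| - t^2/(2*gamma) up to |t| = gamma*lam,
    then constant at gamma*lam^2/2."""
    a = abs(t)
    if a <= gamma * lam:
        return lam * a - a * a / (2.0 * gamma)
    return 0.5 * gamma * lam * lam

def mcp_derivative(t, lam, gamma):
    """Derivative of the penalty with respect to |t|: (lam - |t|/gamma)_+."""
    return max(lam - abs(t) / gamma, 0.0)
```

The derivative starts at `lam` (the LASSO rate) at zero and decays linearly to zero at `gamma * lam`, so large coefficients are not shrunk, which is the sense in which the penalty is folded concave.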

The KKT conditions are given as follows.

For a given \(\lambda_{k}\), define the active set \(A_{k}\) as

where

and

To perform adaptive rescaling on γ, define

To update, the derivatives are defined as follows,

and

To correct we use

where

and

Appendix B: Poisson regression

B.3 LASSO

In Poisson regression, we assume \((\boldsymbol{x}_{i}, y_{i})\), \(i=1,\ldots,n\), are i.i.d. with \(\mathbb{P}(Y=y_{i})=e^{-\lambda_{i}}\lambda_{i}^{y_{i}}/y_{i}!\), where \(\log\lambda_{i}=\boldsymbol{\beta}'\boldsymbol{x}_{i}\). Then the criterion for the LASSO penalized Poisson regression is defined as
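As a minimal sketch, assuming the criterion is the averaged Poisson negative log-likelihood (with the constant \(\log y_{i}!\) dropped) plus an ℓ1 penalty on the non-intercept coefficients, it could be evaluated as below. The function names, the 1/n scaling, and the leading column of ones in `X` are assumptions of this illustration rather than the paper's definitions.

```python
import math

def poisson_lasso_objective(beta, X, y, lam):
    """Averaged Poisson negative log-likelihood (up to a constant)
    plus lam * sum_{j>=1} |beta_j|; beta[0] is an unpenalized intercept."""
    n = len(y)
    nll = 0.0
    for x_i, y_i in zip(X, y):
        eta = sum(b * v for b, v in zip(beta, x_i))  # log of the Poisson mean
        nll += math.exp(eta) - y_i * eta             # log(y_i!) omitted
    return nll / n + lam * sum(abs(b) for b in beta[1:])

def poisson_score(beta, X, y):
    """Gradient of the averaged negative log-likelihood:
    -(1/n) * sum_i (y_i - exp(beta' x_i)) * x_i."""
    n = len(y)
    grad = [0.0] * len(beta)
    for x_i, y_i in zip(X, y):
        mu = math.exp(sum(b * v for b, v in zip(beta, x_i)))
        for j, v in enumerate(x_i):
            grad[j] -= (y_i - mu) * v / n
    return grad
```

Compared with the logistic case, only the mean function changes: the fitted probability is replaced by the fitted Poisson mean `exp(eta)`, so the same path-following machinery applies with a different gradient.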

The KKT conditions are given as follows.

For a given \(\lambda_{k}\), we define the active set \(A_{k}\) as follows.

To update, we define

then

and

To correct,

B.4 MCP

For MCP penalized Poisson regression, we define the target function as

The KKT conditions are given as follows.

For a given \(\lambda_{k}\), the active set is defined as

where

and

To update, the derivatives are defined as follows,

and

To correct we use

where

and


Cite this article

Yu, Y., Feng, Y. APPLE: approximate path for penalized likelihood estimators. Stat Comput 24, 803–819 (2014). https://doi.org/10.1007/s11222-013-9403-7


DOI: https://doi.org/10.1007/s11222-013-9403-7