Abstract

In this study, we analysed the statistical association between e-journal use and research output at the institution level in South Korea through comparative, diachronic and field-level analyses. The datasets were compiled from four sources: national reports on research output indicators in science fields, two public statistics databases on higher education institutions, and e-journal usage statistics generated by 47 major publishers. Because the data came from different sources, our datasets contained a considerable number of missing values, and various mapping issues had to be corrected before the analysis. Two techniques for handling missing data were applied, and the impact of each is discussed. To compile institutional data by field, journals were first mapped to subject fields, and the statistics were then summarized by field. E-journal use correlated more strongly with the number of publications and the times cited than did the numbers of undergraduates, graduates and faculty members or the amount of research funds, regardless of the NA handling method or author type. The difference between the maximum correlation of external research funding with the two average indicators and the corresponding correlation for e-journal use was not significant. Statistically, the extent to which e-journal use accounts for the average times cited per article and the average JIF was quite similar to that of external research funds. The number of e-journal articles used had a strong positive correlation (Pearson’s r > 0.9, p < 0.05) with the number of articles published in SCI(E) journals and the times cited, regardless of author type, NA handling method or time period.
We also observed that the top five institutions in South Korea, in terms of the number of publications in SCI(E) journals, were generally balanced across a range of academic activities, producing substantial research output while making extensive use of published material. Finally, we confirmed that the association of e-journal use with the two quantitative research indicators is strongly positive in the analyses by field as well, with the exception of Arts and Humanities.


Introduction

Usage statistics by themselves are not a new phenomenon (Matthews 2009). Libraries have traditionally kept statistics on gate counts, circulation, inter-library loans and information services (NISO 2007). Academic libraries also frequently gather statistics on reserve items and report them to government agencies, parent institutions and library boards. Such information can be used to understand workflow and improve library services. With the rapid emergence of electronic resources and the explosion of online services available through the internet, many institutions have recognized the benefits of investing in the collection, reporting and analysis of usage statistics (Welch 2005). Usage statistics for online resources have also become a primary source of analytical data for public and private library consortia. The Virtual Library of Virginia consortium has undertaken a project to streamline, automate and standardize statistical data collection and reporting (Matthews 2009). The Joint Information Systems Committee (JISC) has been developing the Journal Usage Statistics Portal, which provides a ‘one-stop service’ for librarians to view, download and analyze their usage reports. Since 2009, the Korea Institute of Science and Technology Information (KISTI) has consolidated the usage statistics of member institutions, both to provide members with integrated usage data linked to subscription and bibliographic information and to monitor the use of e-journal packages distributed through the Korea Electronic Site Licensing Initiative (KESLI) consortium (Jung et al. 2013).

Recently, a number of researchers have attempted to measure scientific or research impact from usage data available online from publishers, aggregators, electronic resource management systems and link resolvers (Bollen et al. 2009). Larsen and Von Ins (2010) argued that the coverage of SCI has declined with the rapid growth of scientific publications. Existing citation databases such as WoS, Scopus and Google Scholar have limitations in coverage and depth for non-English language journals. Zainab et al. (2012) and Choi et al. (2013) argue that WoS and Scopus are comprehensive databases for English journals but do not adequately cover most national journals published in developing countries.

Usage data such as PDF downloads, HTML views and SNS saves are considered valuable complements to traditional citation-based assessment metrics. The PLoS Medicine Editors (2006) predicted that new measures, including the number of downloads, coverage in mainstream media and references in policy documents, might be useful for measuring the impact of rarely cited but influential articles. “Altmetrics”, which build on information from social media use, have been suggested to provide a nuanced, multidimensional view of research impact over time when combined with traditional citation-based metrics (Priem and Piwowar 2012). Bollen et al. (2009) analyzed and compared citation and usage networks, as well as various social network and hybrid measures, to identify the most suitable methods for expressing and interpreting scientific impact. Among the 39 measures examined, ‘Usage Closeness Centrality’ was deemed the best candidate for a ‘consensus’ view of scientific impact. Priem and Piwowar (2012) demonstrated that PDF downloads and HTML views correlate at moderate to high levels with almost all other altmetrics; in particular, they reported a moderately strong relationship between citation counts and PDF/HTML download counts for the three journals analyzed.

Brody and Harnad (2005) postulated that if citations and downloads are correlated, a higher download rate in the first year after an article's publication could be used to predict a higher number of eventual citations. They found an overall correlation of 0.4 between citation counts and download impact for physics and mathematics articles archived in the UK mirror of arXiv.org. Moed (2005) found that during the first 3 months after an article was cited, its downloads increased by 25 % compared with what would have been expected had it not been cited. In contrast, CIBER Research Limited (2011) reported no relation between the usage factor and two measures of citation impact, the Journal Impact Factor and Elsevier’s Source Normalized Impact per Paper (SNIP). Nevertheless, that report concluded that the new usage-based metric opens up new ways of looking at scholarly communication, with different journals occupying very different niches within a complex ecosystem. Brody and Harnad (2005) highlighted two reasons why download impact matters: (1) the portion of download variance that correlates with citation counts provides an early estimate of probable citation impact, which can be tracked from the moment an article is made Open Access and attains its maximum predictive power after 6 months; and (2) the portion of download variance that does not correlate with citation counts provides a second, partly independent estimate of an article's impact, sensitive to a form of research usage that citation counts do not reflect. Along the same lines, efforts to develop and validate usage-based metrics have been undertaken by several research groups (Bollen and Van de Sompel 2008; Gorraiz and Gumpenberger 2010) and by the Counting Online Usage of NeTworked Electronic Resources (COUNTER) group (Shepherd 2011).

It has generally been assumed that economic factors such as Gross Domestic Product (GDP) and Gross Domestic Expenditure on Research and Development (GERD), as well as human resources, have a linear or exponential relationship with research performance at the national level (Yoon 2007; Vinkler 2010). According to Wood (1990), personal characteristics, differences in research style, research processes and techniques, and funding availability are the most important factors influencing the research productivity of academics, whereas heavy teaching loads were generally seen as a distraction from research. In particular, for most science departments in academic institutions, the extent and continuity of funding are critical to research, and funding restrictions cause many serious problems. However, to the best of our knowledge, no previous study has investigated the statistical relationship between the production and the use of research publications at the national or institutional level. Although using research publications is a daily necessity for researchers, the relationship between their use and the production of research output at the institutional level has received little attention compared with studies based on journal-specific metrics.

In this study, our objective is to explore the relationship between research performance and the usage of publications at the level of institutions by performing comparative, diachronic analyses and an analysis by field. Specifically, we address the following three research questions:

1. Which is most highly correlated with research output at the institutional level: human resources, economic factors or the use of research publications?
2. Does the correlation of e-journal use with four research output measures differ between long-term data and a short-term perspective?
3. What is the statistical relationship between the use of research publications and research productivity at the institutional level, by field?

Data, methodology and limitations

Dataset

To address our research questions, we referred to SCI Analysis Research, an annual report on national science and technology indicators covering a range of scientific fields, research institutions, journals and regions in South Korea, published by the National Science and Technology Commission (NSTC). The reports are compiled every year from searches and analyses of the Web of Science (WoS) and NSI databases. As research performance indicators for each institution, they include the number of articles published in Science Citation Index [SCI(E)] journals, times cited, average times cited per article and average journal impact factor (JIF; Footnote 1) (NSTC 2011). The institutional statistics are calculated according to author type in three ways: (1) counting every affiliation present in the author list of each article (Vinkler 2010), (2) counting only the affiliation of the first author, and (3) counting only the affiliation of the corresponding author.
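The three author-type counting schemes can be illustrated with a minimal Python sketch. It assumes a hypothetical article record holding the affiliations in author order plus the corresponding author's affiliation; the class, field names and sample institutions are invented for illustration. We also assume that under the co-author scheme each institution receives at most one credit per article (whole counting), which is our reading of "every affiliation present in the author list" rather than a documented NSTC rule.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Article:
    # Hypothetical record: affiliations in author order, plus the
    # affiliation of the corresponding author.
    affiliations: list[str]
    corresponding: str


def count_by_author_type(articles: list[Article]) -> dict[str, Counter]:
    """Institution-level article counts under three author-type schemes."""
    co_author, first_author, corresponding = Counter(), Counter(), Counter()
    for art in articles:
        # (1) Co-author basis (assumed whole counting): each distinct
        # affiliation in the author list gets one credit for this article.
        co_author.update(set(art.affiliations))
        # (2) First-author basis: only the first listed affiliation counts.
        first_author[art.affiliations[0]] += 1
        # (3) Corresponding-author basis: only that author's affiliation counts.
        corresponding[art.corresponding] += 1
    return {"co_author": co_author, "first_author": first_author,
            "corresponding": corresponding}


# Invented example data.
articles = [
    Article(["Univ A", "Univ B", "Univ A"], corresponding="Univ B"),
    Article(["Univ B"], corresponding="Univ B"),
    Article(["Univ C", "Univ A"], corresponding="Univ C"),
]
counts = count_by_author_type(articles)
# Fewer institutions appear under the stricter schemes.
print({scheme: len(c) for scheme, c in counts.items()})
```

Stricter schemes can only shrink the set of credited institutions, which is consistent with the number of publishing institutions falling as the author type changes.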

Neither the NSTC reports nor the NSI databases include institutional statistics on human resources, research funding or journal use. We therefore collected the relevant institutional data from several other sources. For human resources and research funds, we used two publicly available websites: Statistics of Korean Universities, managed by the Korea University Accreditation Institute (KUAI; Footnote 2), which provides the numbers of undergraduate and graduate students and the average internal/external research funding per faculty member; and the Korea Educational Statistics Service (KESS; Footnote 3), operated by the Korea Education Development Institute (KEDI), which records the number of faculty members at Korean academic institutions.

Usage statistics for e-journals at academic institutions are generated and managed by each content provider and are not publicly available. KISTI manages the KESLI consortium, the largest library consortium in South Korea, and has developed a system that automatically collects e-journal usage statistics from 47 major content providers on behalf of member libraries (Jung and Kim 2013). The publisher-generated statistics for 522 institutions are collected monthly; the content providers include Elsevier, Springer, Wiley, Nature Publishing Group, the American Association for the Advancement of Science (AAAS), the American Chemical Society, the Institute of Electrical and Electronics Engineers, the American Institute of Physics, the American Physical Society, the Institute of Physics and others. The data follow Release 3 of the COUNTER Code of Practice for e-journals, Journal Report 1 (JR1), in which ‘total use’ is the sum of HTML views and PDF downloads (COUNTER 2008; Footnote 4).
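As a minimal sketch of how monthly JR1 data roll up into the annual institutional figures used here, the code below sums HTML views and PDF downloads per institution and year. The flattened `JR1Row` record is a hypothetical simplification: actual JR1 reports are spreadsheets with one row per journal and monthly columns, and the institutions and journals shown are invented.

```python
from collections import defaultdict
from typing import NamedTuple


class JR1Row(NamedTuple):
    # Hypothetical flattened record from one monthly JR1 report.
    institution: str
    journal: str
    year: int
    month: int
    html: int
    pdf: int


def annual_total_use(rows: list[JR1Row]) -> dict[tuple[str, int], int]:
    """Sum 'total use' (HTML views + PDF downloads) per institution and year."""
    totals: dict[tuple[str, int], int] = defaultdict(int)
    for r in rows:
        totals[(r.institution, r.year)] += r.html + r.pdf
    return dict(totals)


rows = [
    JR1Row("Univ A", "Journal X", 2010, 1, html=120, pdf=300),
    JR1Row("Univ A", "Journal Y", 2010, 2, html=10, pdf=40),
    JR1Row("Univ B", "Journal X", 2010, 1, html=5, pdf=15),
]
print(annual_total_use(rows))
# {('Univ A', 2010): 470, ('Univ B', 2010): 20}
```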

Most major content providers supply COUNTER-compliant usage statistics to their clients; however, it is uncommon for a publisher to provide usage data going back many years. Publishers with larger output and readership necessarily incur greater costs in maintaining extensive records of past usage. The International Coalition of Library Consortia (ICOLC) recommends that publishers maintain a minimum of 3 years of historical usage data, ideally made available in separate files containing specified data elements that can be downloaded and manipulated locally (ICOLC 2006). Most major publishers follow this recommendation and provide several years of past usage data. Because KISTI has collected e-journal usage data automatically on behalf of most academic institutions in South Korea since 2009, usage data for several publishers, including AAAS, the National Academy of Sciences and Berkeley Electronic Press, are available from 2000, and past usage data for most content providers are available from 2007 onwards (Jung and Kim 2013).

The data obtained from the four sources were merged using the NSTC reports as the base: only institutions appearing in the NSTC reports were included in the analysis. According to the NSTC report, Korean researchers authored 41,114 articles in 2010 and 270,420 articles over the 10 years from 2001 to 2010. The number of institutions that published at least one article in an SCI(E) journal decreased from 292 to 202 depending on the author type used for counting. The three datasets generated according to author type were used to address the first and second research questions. In the corresponding author dataset, only the number of publications in SCI(E) journals and the times cited are available as research output indicators. Table 1 presents descriptive statistics for the data used in the 2010 analysis, by author type.
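Merging "based on the NSTC reports" amounts to a left join: every NSTC institution is kept, institutions found only in other sources are dropped, and absent values become NA. A minimal sketch, assuming institution names have already been normalized to a common key; the dictionaries and values are invented for illustration.

```python
from typing import Optional

# Hypothetical per-source lookups keyed by a normalized institution name.
nstc = {"Univ A": {"pubs": 1200, "cites": 5400},
        "Univ B": {"pubs": 40, "cites": 90}}
kuai = {"Univ A": {"graduates": 3100}}                  # Univ B not covered
kess = {"Univ A": {"faculty": 950}, "Univ B": {"faculty": 120}}
kisti = {"Univ A": {"ejournal_use": 820000}, "Univ Z": {"ejournal_use": 5000}}


def merge_on_nstc(base: dict[str, dict],
                  *others: dict[str, dict]) -> dict[str, dict[str, Optional[int]]]:
    """Left join on the NSTC institutions; fields absent for an institution become None (NA)."""
    # Every field name that any auxiliary source provides.
    fields = {f for src in others for rec in src.values() for f in rec}
    merged = {}
    for inst, rec in base.items():
        row = dict(rec)
        for f in fields:
            row.setdefault(f, None)          # start each field as NA
        for src in others:
            row.update(src.get(inst, {}))    # fill in whatever the source has
        merged[inst] = row
    return merged


table = merge_on_nstc(nstc, kuai, kess, kisti)
print(table["Univ B"])
```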

The number of publications in SCI(E) journals, times cited and e-journal use for the 47 content providers are all highly right-skewed. Only average JIF is close to a normal distribution, with skewness and kurtosis of 0.45 and 3.41, respectively, in the co-author basis data. Times cited has the most skewed and most sharply peaked distribution, followed by the number of articles published in SCI(E) journals. Table 1 shows that the number of publications in SCI(E) journals, times cited and e-journal use follow Pareto-like distributions, as is empirically observed for many natural phenomena. For all author types, most publications in SCI(E) journals and most citations come from only a few institutions. Likewise, most of the use of articles distributed by the 47 major publishers is concentrated in a small number of institutions.
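Skewness and kurtosis can be computed from sample moments. The sketch below assumes the moment-based forms g1 = m3/m2^(3/2) and b2 = m4/m2^2, under which a normal distribution has kurtosis 3 (consistent with reading the reported 3.41 as near-normal); the exact estimator used in the study is not stated, and statistical packages may apply small-sample corrections. As described for this study, missing values are dropped first.

```python
from typing import Optional, Sequence


def _central_moment(xs: Sequence[float], mean: float, k: int) -> float:
    """k-th central moment with divisor n."""
    return sum((x - mean) ** k for x in xs) / len(xs)


def skewness_kurtosis(values: Sequence[Optional[float]]) -> tuple[float, float]:
    """Moment-based skewness (g1) and kurtosis (b2, normal = 3), ignoring NAs."""
    xs = [v for v in values if v is not None]   # omit missing values
    mean = sum(xs) / len(xs)
    m2 = _central_moment(xs, mean, 2)
    m3 = _central_moment(xs, mean, 3)
    m4 = _central_moment(xs, mean, 4)
    return m3 / m2 ** 1.5, m4 / m2 ** 2


print(skewness_kurtosis([1, 2, 3, 4, 5, None]))   # (0.0, 1.7)
print(skewness_kurtosis([1, 1, 1, 2, 10]))        # positive skewness
```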

Because the data came from different sources, a considerable number of values are missing, as shown in Table 1. To address this, techniques for handling missing data were applied in the analysis (described in the following section). There were fewer missing values in the institutional statistics compiled by KISTI than in the institutional data compiled by KUAI and KEDI.

Table 2 details the dataset used to address the second research question, namely the long-term correlation analysis between research output and e-journal use by institutions (Footnote 5). Only research output indicators based on co-author counting were used for the long-term analysis.

The distributions of the five long-term variables differ from those of the short-term variables. Over the 10 years, the number of publications in SCI(E) journals and times cited for 355 institutions, and the e-journal usage statistics for 260 institutions, were right-skewed, and the central peaks of these three variables became sharper. Although more institutions have become involved in producing research output over time, a few outstanding institutions have been responsible for most of the research output in the long term. The means of the number of publications, times cited and e-journal use are higher than their third quartiles, whereas the medians fall around the first quartiles; as in the short-term data, a few very large values strongly influence the means. The two average variables, average times cited per article and average JIF, are quite close to a normal distribution in the long-term dataset. The average times cited per article over 10 years is normally distributed, whereas the average times cited per article in 2010 is moderately skewed. The median, mean, first and third quartiles, and maximum of average JIF over 10 years are all lower than those for 2010, suggesting that Korean researchers now target journals with higher impact factors than in the past. The two average variables are heavily affected by institutions that publish few articles. For example, of the top five institutions by average times cited per article over 10 years, all except Pohang University of Science and Technology (POSTECH) published fewer than 50 articles and were cited fewer than 600 times, although their average times cited per article exceeded 11.6.
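A mean lying above the third quartile is a typical signature of strong right skew: one or two very large values pull the mean far above where most observations sit. The short sketch below illustrates this with invented counts (not the study's data) using Python's standard `statistics` module.

```python
import statistics

# Invented, strongly right-skewed publication counts: one dominant institution.
pubs = [1, 2, 2, 3, 3, 4, 5, 6, 8, 200]

q1, q2, q3 = statistics.quantiles(pubs, n=4)   # default 'exclusive' method
mean = statistics.mean(pubs)
print(f"Q1={q1}, median={q2}, Q3={q3}, mean={mean}")
# Q1=2.0, median=3.5, Q3=6.5, mean=23.4
```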

To address our third research question, we conducted a field-specific analysis using the 2010 institutional statistics for articles co-authored by Korean researchers, as shown in Table 3.

The high-performance fields in South Korea are Chemistry, Engineering, Materials Science, and Molecular Biology and Genetics (three bold marks), followed by Biology and Biochemistry, Clinical Medicine, Neuroscience and Behavior, Physics, and Space Science (two bold marks). The subjects whose articles are most used by Korean researchers are Engineering, Chemistry, Materials Science, Clinical Medicine, Multidisciplinary and Physics. The most-used fields largely coincide with the fields in which Korean academic institutions produced the most research output. Table 3 is thus consistent with the age-old notion that ‘the more you read, the better you write’; the following sections examine the statistical relationship between e-journal use and research performance at the institution level in more detail through comparative and diachronic analyses and correlation analysis by field.

Methodology and limitations

We encountered a number of issues, as the datasets used for the study were derived from four different data sources. Each issue was dealt with as follows.

Identification and mapping of institution names

Because the institutional data came from different sources, the institution names first had to be identified, and the data then had to be aligned to a common level for analysis. The institutional data from KUAI and KEDI and the COUNTER JR1 reports are generated at the campus level, whereas the research output data in the NSTC reports are not. The campus-level statistics were therefore summed to derive institutional data. For example, the usage statistics for the Seoul Campus, Wonju Campus and Medical College of Yonsei University were combined to obtain the total usage statistics for Yonsei University. In the datasets from KUAI, KEDI and the NSTC reports, institution names were written in Korean, whereas in the JR1 reports generated by overseas publishers they were written in English. In addition, some institutions had changed their names, or had closed, by the time the statistics were compiled. KISTI has built a database of paired English and Korean institution names for integrating KESLI consortium information with overseas publishers' data, and it also holds authority data on changes to institution names. These data were used to merge the four data sources. If a name was not found in KISTI’s authority data, we searched the internet to identify the current institution.
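The name identification and campus aggregation steps can be sketched as a lookup in an authority table followed by a sum. The `AUTHORITY` table below is hypothetical and merely stands in for KISTI's authority data, whose actual structure is not described here; unknown names pass through unchanged so that they can be checked manually, mirroring the fallback internet search.

```python
from collections import defaultdict

# Hypothetical authority table: variant name (campus, Korean or English form,
# former name) -> canonical institution. Entries are illustrative only.
AUTHORITY = {
    "Yonsei University Seoul Campus": "Yonsei University",
    "Yonsei University Wonju Campus": "Yonsei University",
    "Yonsei University College of Medicine": "Yonsei University",
    "연세대학교": "Yonsei University",
}


def canonical(name: str) -> str:
    """Map a raw name to its canonical institution; unknown names pass
    through unchanged so they can be reviewed manually."""
    key = name.strip()
    return AUTHORITY.get(key, key)


def aggregate_to_institution(usage: dict[str, int]) -> dict[str, int]:
    """Sum campus-level usage into institution-level totals."""
    totals: dict[str, int] = defaultdict(int)
    for raw_name, count in usage.items():
        totals[canonical(raw_name)] += count
    return dict(totals)


campus_usage = {
    "Yonsei University Seoul Campus": 500_000,
    "Yonsei University Wonju Campus": 40_000,
    "Yonsei University College of Medicine": 160_000,
    "Unknown College": 1_000,
}
print(aggregate_to_institution(campus_usage))
# {'Yonsei University': 700000, 'Unknown College': 1000}
```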

Handling of missing data

As shown in Tables 1, 2 and 3, a considerable number of values were missing from the datasets. Howell (2007) argued that the only way to obtain an unbiased estimate is to use a model that accounts for the missing data; such a model could then be incorporated into a more complex model for estimating the missing values. To understand the nature of the missing values in our dataset, we therefore examined why values were missing from the institutional usage statistics.

For an exhaustive analysis, the usage statistics of all 292 institutions that published at least one article in SCI(E) journals in 2010 would ideally be used to estimate the relationships among the variables. However, usage statistics were available for only 182 of the 292 institutions. As described in section “Dataset”, the number of publications, times cited and e-journal use are heavily right-skewed. As shown in Table 4, only 113 institutions achieved publication authorship exceeding 5 % in 2010, and usage data were available for 111 of these 113 (98.23 %). For the long-term analysis, usage data were acquired for all 112 institutions whose authorship in SCI(E) publications exceeded 5 %.

In general, institutions whose usage data were unavailable did not subscribe to journals from the 47 listed publishers, so no usage statistics were generated for them. As shown in Table 4, institutions that do not subscribe to content from the major publishers generally rank low in research output. The institutions with missing values in the KUAI and KEDI data likewise ranked low, since these two public statistics sources on higher education institutions cover the major institutions in South Korea. This implies that most missing values in our dataset were not random but were concentrated in the tail of the highly right-skewed distributions. In this study, missing data were handled using conventional techniques: listwise deletion and substitution of a representative value (the mean or median). Missing values were omitted when calculating skewness and kurtosis to identify the distributions of the variables. For the correlation analysis between the four research output indicators and the human resources, research funds and e-journal use variables, we applied both listwise deletion and mean/median substitution. Missing values of the right-skewed variables were either omitted or substituted with the median. Missing values were also observed for average JIF; because they were few and the distribution of average JIF is close to normal, they were either omitted or substituted with the mean.
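The two NA handling methods can be compared with a minimal sketch: listwise deletion keeps only institutions observed on both variables, whereas substitution fills each gap with the median (right-skewed variables) or the mean (near-normal variables such as average JIF) before computing Pearson's r. The data below are invented, and the helper functions are illustrative rather than the study's code.

```python
import math
import statistics
from typing import Optional, Sequence

Value = Optional[float]


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson's product-moment correlation coefficient."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def listwise(x: Sequence[Value], y: Sequence[Value]) -> tuple[list, list]:
    """Listwise deletion: keep only institutions observed on both variables."""
    pairs = [(a, b) for a, b in zip(x, y) if a is not None and b is not None]
    return [a for a, _ in pairs], [b for _, b in pairs]


def impute(v: Sequence[Value], how: str = "median") -> list[float]:
    """Replace NAs with the median (skewed variables) or the mean (near-normal)."""
    observed = [a for a in v if a is not None]
    fill = statistics.median(observed) if how == "median" else statistics.fmean(observed)
    return [fill if a is None else a for a in v]


# Invented example: publications (complete) and e-journal use (NAs in the tail).
pubs = [1500, 900, 400, 120, 30, 12, 5, 2]
use = [900_000, 610_000, 200_000, 80_000, None, 9_000, None, None]

r_listwise = pearson_r(*listwise(pubs, use))
r_median = pearson_r(pubs, impute(use, "median"))
print(f"listwise r={r_listwise:.3f}, median-substituted r={r_median:.3f}")
```

Because median substitution places every imputed value at a single point, it can noticeably change r when, as here, the missing values sit in the tail of a skewed distribution.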

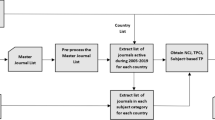

Mapping journal titles for subject analysis

To analyse the statistical relationship between research output indicators and e-journal use by field, institutional data by field were needed. The NSTC reports are derived from the NSI databases and use the WoS standard fields as their subject classification system. COUNTER JR1 reports, however, assign no subject classification to journals. Although the Dewey Decimal Classification (DDC) numbers assigned to journals by Ulrich's or the British Library are provided to KESLI members through KISTI’s usage statistics service, there is no mapping table between the WoS standard fields and DDC. We therefore mapped the journals in the JR1 reports to the journals appearing in the NSTC reports, using title, print ISSN (P-ISSN) and publisher name as mapping keys, and summarized the usage statistics of the matched journals by field at the institutional level.
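The journal mapping can be sketched as a keyed lookup followed by aggregation. The source states only that title, P-ISSN and publisher name were used as keys; the matching order below (P-ISSN first, then normalized title plus publisher) and the normalization rules are our assumptions, and the journal list is invented.

```python
from collections import defaultdict
from typing import Optional


def norm_issn(issn: str) -> str:
    return issn.replace("-", "").strip().upper()


def norm_title(text: str) -> str:
    return " ".join(text.lower().split())


# Hypothetical journal -> WoS field list derived from the NSTC reports.
NSTC_JOURNALS = [
    {"title": "Journal of Chemical Examples", "p_issn": "1234-5678",
     "publisher": "Example Press", "field": "Chemistry"},
    {"title": "Engineering Letters Sample", "p_issn": "8765-4321",
     "publisher": "Sample Society", "field": "Engineering"},
]
BY_ISSN = {norm_issn(j["p_issn"]): j["field"] for j in NSTC_JOURNALS}
BY_TITLE_PUB = {(norm_title(j["title"]), norm_title(j["publisher"])): j["field"]
                for j in NSTC_JOURNALS}


def field_of(title: str, p_issn: Optional[str], publisher: str) -> Optional[str]:
    """Match on P-ISSN first, then on (title, publisher); None if unmapped."""
    if p_issn and norm_issn(p_issn) in BY_ISSN:
        return BY_ISSN[norm_issn(p_issn)]
    return BY_TITLE_PUB.get((norm_title(title), norm_title(publisher)))


def usage_by_field(jr1_rows) -> dict[tuple[str, str], int]:
    """Sum JR1 total use per (institution, WoS field); unmapped titles are skipped."""
    totals: dict[tuple[str, str], int] = defaultdict(int)
    for inst, title, p_issn, publisher, total_use in jr1_rows:
        field = field_of(title, p_issn, publisher)
        if field is not None:
            totals[(inst, field)] += total_use
    return dict(totals)


rows = [
    ("Univ A", "Journal of Chemical Examples", "12345678", "Example Press", 300),
    ("Univ A", "engineering letters  sample", None, "Sample Society", 120),
    ("Univ A", "Unlisted Journal", "0000-0000", "Other", 50),
]
print(usage_by_field(rows))
# {('Univ A', 'Chemistry'): 300, ('Univ A', 'Engineering'): 120}
```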

Long-term analysis

To perform the long-term analysis, two sets of institutional data were compiled: (1) research performance data for 2010 with e-journal usage statistics for 2008, 2009 and 2010; and (2) research performance data and e-journal usage statistics for the 10 years from 2001 to 2010. Because it is difficult to estimate how long each researcher spends reviewing existing articles when writing a new one, we assumed that researchers use existing articles one or two years before the publication year of the resulting article. The statistics for article use in 2008, 2009 and 2010 for each institution were used to investigate whether research output in 2010 was affected by e-journal use during the previous two years. Cumulative 10-year usage statistics for each institution were used to analyse the relationship between research output and e-journal use from a long-term perspective.
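The time windows and the cumulative 10-year figure can be derived from annual usage totals as in the sketch below. How the study treated years without usage data inside a window is not stated; here we assume such years are skipped, and a window with no observed year yields None (NA). The annual figures are invented.

```python
from typing import Optional


def window_use(annual: dict[int, int], end_year: int, length: int) -> Optional[int]:
    """Total use over the `length` years ending at `end_year` (inclusive).

    Assumption: years with no data are skipped; if no year in the window
    has data, the result is None (treated as NA downstream).
    """
    years = range(end_year - length + 1, end_year + 1)
    observed = [annual[y] for y in years if y in annual]
    return sum(observed) if observed else None


annual_use = {2008: 150_000, 2009: 180_000, 2010: 210_000}
windows = {k: window_use(annual_use, 2010, k) for k in (1, 2, 3)}
print(windows)                              # {1: 210000, 2: 390000, 3: 540000}
print(window_use(annual_use, 2010, 10))     # 540000 (2001-2007 absent)
print(window_use({}, 2010, 3))              # None
```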

Limitations

COUNTER JR1 reflects only accesses per title in the current calendar year and provides no information about the publication years of the accessed articles, as summarized by Gumpenberger et al. (2012). COUNTER JR5, which reports usage by publication year, was not available for this study because only a small number of publishers currently provide JR5 reports.

Results and discussion

Pearson’s correlation coefficient (r) is used to measure the strength of the correlation between variables in the following sections.
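For reference, the sample Pearson correlation between two institutional variables x and y over n institutions is given below, together with the t statistic commonly used to test whether r differs from zero. The source does not state which significance test produced its p-values, so the test shown is an assumption.

```latex
r = \frac{\sum_{i=1}^{n}(x_i-\bar{x})(y_i-\bar{y})}
         {\sqrt{\sum_{i=1}^{n}(x_i-\bar{x})^{2}}\;\sqrt{\sum_{i=1}^{n}(y_i-\bar{y})^{2}}},
\qquad
t = r\sqrt{\frac{n-2}{1-r^{2}}} \sim t_{n-2}\ \text{under } H_0\colon \rho = 0 .
```

As an illustrative calculation only, r = 0.9 with n = 111 gives t = 0.9 × sqrt(109/0.19) ≈ 21.6, far beyond the two-sided 5 % critical value of about 1.98 for 109 degrees of freedom.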

Comparison of e-journal usage with human resources and research funds

To analyse the statistical relationship between e-journal use and research performance at academic institutions, e-journal use should be compared with other institutional factors. We therefore also compared the amounts of internal, external and total research funds and the numbers of undergraduates, graduates and full-time faculty members at each institution. As explained in section “Data, methodology and limitations”, a considerable number of values were missing from the institutional data, so two NA handling methods were applied to assess their influence. For the corresponding author basis data, only the number of publications in SCI(E) journals and times cited were available for comparing the strength of correlation with the seven variables.

Tables 5, 6 and 7 show the correlations of three groups of factors (e-journal use; internal, external and total research funds; and the numbers of undergraduates, graduates and full-time faculty members) with the 2010 research output indicators based on co-author, first author and corresponding author data, respectively.

External funds correlate more strongly with research output than internal funds do, because academic institutions with better research performance generally tend to receive more funding from government or industry. Unexpectedly, the number of graduate students had a stronger statistical relationship than the number of faculty members with the two quantitative research performance indicators and ‘average cites per article’, regardless of author type and NA handling method. The number of faculty members correlated more strongly with ‘average JIF’ than the number of graduates did only in the co-author basis data with missing data omitted, and even there the difference is very small (0.003) and does not appear to be significant. E-journal use and the numbers of graduates and full-time faculty members show strong relationships with the number of publications in SCI(E) journals and times cited, whereas the average external/total funding per faculty member and the number of undergraduates show moderate correlations with these two indicators.

For the two qualitative indicators, ‘average cites per article’ and ‘average JIF’, the total amount of research funds had the highest correlation in the co-author and first author basis data when missing data were omitted.

The first author basis data (Table 6) showed results similar to those of the co-author basis data, except that when missing values were substituted with mean or median values, all four research performance indicators correlated most strongly with e-journal use.

As explained in section “Data, methodology and limitations”, only two research performance indicators were available for the correlation analysis of the corresponding author basis data, as presented in Table 7.

The associations of the seven independent variables with these two indicators in the corresponding author data did not differ from those observed for the other two author types.

E-journal use shows the highest correlation with the two quantitative performance indicators, ‘# Publication’ and ‘Times cited’, regardless of NA handling method and author type, as shown in Tables 5, 6 and 7. In other words, at the level of academic institutions, the number of articles published in SCI(E)-indexed journals and the times cited correlate more strongly with e-journal use than with economic factors or the number of researchers. For the average times cited per article, e-journal use has the highest correlation only in the first author basis data when NAs are substituted with mean or median values. For the average JIF, e-journal use shows the highest correlation in the co-author and first author basis data, again only when NAs are substituted with mean or median values. However, for these two average indicators, the differences between the maximum correlations and the correlations with e-journal use were not significant. Statistically, the extent to which e-journal use accounts for the average times cited per article and the average JIF is close to that of total research funds. Moreover, in two of the author data types, e-journal use accounts for these two qualitative indicators better than the number of graduates or the number of faculty members does. Figure 1 presents the pairs plot of the four outcome indicators and the seven variables with missing data omitted, and Figure 2 presents the plot with mean/median substitution for the co-author basis data.

As seen in Fig. 1, e-journal use shows some correlation with the two average research output indicators when missing data are omitted, so the chosen NA handling method influenced the correlations with the two average variables. As described in section “Methodology and limitations”, e-journal usage statistics are missing for many institutions that published fewer articles than average. Section “Dataset” showed that the average times cited per article and average JIF are heavily influenced by institutions that publish relatively few articles. Listwise deletion removes many of these institutions, which results in stronger correlations between article use and the two average indicators among the institutions that remain in the analysis.

Long-term relationship between article use and research performance

We investigated the statistical relationship between the research output indicators and the article use indicator at the institutional level over an extended period of time. As explained in section “Data, methodology and limitations”, missing values were observed in the average JIF and in the institutional e-journal usage statistics. Six institutions have no published average JIF data for 2010, and ten institutions have no average JIF data for the 10-year period. There are 118, 114, 110 and 95 institutions that did not release e-journal use statistics for 2008, 2009, 2010 and the 10-year period, respectively. When the mean/median substitution technique is applied, missing average JIF values are replaced with the mean, and missing e-journal use values are replaced with the median.
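The substitution rule just described (mean for average JIF, median for e-journal use) can be sketched as a single helper applied column by column. The function name `substitute` and the five-institution data are hypothetical. One plausible rationale for the split, which the text does not state, is that usage counts are highly right-skewed, where the median is more robust, while average JIF is close to normally distributed.

```python
# Hypothetical sketch of the mean/median substitution rule described above:
# missing average JIF -> mean of observed values; missing e-journal use -> median.
from statistics import mean, median


def substitute(values, how):
    """Return a copy of values with None replaced by the observed mean or median."""
    observed = [v for v in values if v is not None]
    if how == "mean":
        fill = mean(observed)
    elif how == "median":
        fill = median(observed)
    else:
        raise ValueError("how must be 'mean' or 'median'")
    return [fill if v is None else v for v in values]


# Five hypothetical institutions; None marks a missing value.
avg_jif = [2.8, 3.1, None, 1.9, 2.2]
ejournal_use = [52_000, None, 8_000, None, 15_000]

avg_jif_filled = substitute(avg_jif, "mean")               # None -> 2.5
ejournal_use_filled = substitute(ejournal_use, "median")   # None -> 15000
print(avg_jif_filled)
print(ejournal_use_filled)
```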

Correlation between e-journal use in 2008, 2009, 2010 and four research output indicators in 2010

We examined the association between the use of scholarly information in previous years and current research performance indicators. Because it is difficult to estimate how long each researcher spends reviewing existing articles when writing a new journal article, we assumed that researchers use existing articles one or two years before the year in which the new article is published. The e-journal use statistics for 2008, 2009 and 2010 for each institution were used to investigate whether research output in 2010 was associated with e-journal use in the preceding years as well as in 2010 itself. Table 8 presents the correlations of e-journal use in each time window (1 year, 2 years, 3 years) with the four research output indicators, calculated for the three author types under the two NA handling methods.

When missing e-journal usage values are replaced with medians, the correlations with the two count variables increase slightly, both for each single year and for the 2- and 3-year time windows, compared with listwise deletion. Conversely, the correlations between e-journal use and the two average research output variables are slightly stronger when listwise deletion is applied.

Contrary to our assumption, e-journal use in the one or two preceding years did not show a stronger association with research output in 2010. E-journal use in 2010 exhibited the strongest correlation with the four research output indicators in 2010, regardless of the NA handling method used.

Correlation between total use and four research output indicators for 10 years

The association between e-journal use and the four research performance indicators of each institution was also examined over an extended period. Only co-author basis data on research output for the 10 years are used. Pearson’s correlation coefficients (r) between the four research output indicators and the article use indicator from 2001 to 2010 are presented in Table 9.

The analysis of the long-term data shows that the correlations of e-journal use with the number of publications in SCI(E) journals and with the times cited are weaker than in the short-term data, whereas the r values between e-journal use and the average cites per article are higher in the long-term data, regardless of the NA handling method. The NA handling method makes little difference to the correlations in the long-term data, whereas in the shorter-term data it affected the correlations of article use with the two average variables.

In conclusion, from a long-term perspective, e-journal usage retains a strong positive correlation (r > 0.9, p < 0.05) with the number of articles published in SCI(E) journals and with the times cited. In addition, the r values between e-journal use and the average cites per article are higher in the long-term data than in the short-term data, regardless of the NA handling method used.
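A statement such as "r > 0.9, p < 0.05" rests on the standard t test of H0: ρ = 0, with t = r·√(n − 2)/√(1 − r²) and n − 2 degrees of freedom. The sketch below evaluates it for r = 0.9; the sample size n = 30 is a hypothetical placeholder, not the number of institutions in the study, and the critical value 2.048 is the usual two-tailed 5% value for 28 degrees of freedom from t tables.

```python
# Sketch of the standard t test for H0: rho = 0 behind statements such as
# "r > 0.9, p < 0.05". The sample size n is a hypothetical placeholder.
import math


def pearson_t(r, n):
    """t statistic for Pearson's r with n paired observations (df = n - 2)."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)


n = 30                    # hypothetical number of institutions
CRITICAL_T_DF28 = 2.048   # two-tailed 5% critical value for df = 28 (t tables)

t = pearson_t(0.9, n)
print(f"t = {t:.2f}, significant at 5%: {abs(t) > CRITICAL_T_DF28}")
```

Because t grows with √(n − 2), the p-value mainly confirms that r differs from zero; the magnitude r > 0.9 is what conveys the strength of the association.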

Star plots of five indicators for institutions in 2010 and for the years 2001–2010 (10 years)

Star plots for four research output indicators derived from the co-author basis data and e-journal usage at the institutional level are illustrated in Fig. 3, detailing scholarly activities for the 1-year and 10-year periods. Each star represents a single institution in South Korea. The stars are arranged in descending order of the number of publications in SCI(E) journals.

As seen in Fig. 3, the top five institutions produce star plots in the shape of full pentagons. From the sixth to the tenth institution, the stars become smaller, with one axis receding disproportionately. The remaining stars lose their pentagonal shape, and some become triangles or single lines. The top five institutions thus perform evenly across all five dimensions: they publish articles in SCI(E) journals with high IFs, these articles are highly cited, and they use a considerable number of existing publications. Specific statistics for the five variables over 10 years for the top-ten institutions are shown in Table 10.
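The shapes described above follow from the geometry of a star plot: each indicator is drawn on its own axis, scaled relative to the other institutions, so a value that is tiny compared with the leaders collapses toward the centre and the pentagon degenerates. The sketch below computes the five vertices under the assumption that each axis is scaled by its column maximum; the text does not state the scaling actually used, and the indicator names, maxima and values are hypothetical.

```python
# Hypothetical sketch of star-plot geometry: each indicator is scaled by its
# maximum across institutions and drawn on one of five equally spaced axes.
import math

INDICATORS = ["publications", "times_cited", "avg_cites", "avg_jif", "ejournal_use"]


def star_vertices(values, maxima):
    """Return (x, y) vertices; a value equal to the column maximum reaches radius 1."""
    k = len(values)
    points = []
    for i, (value, top) in enumerate(zip(values, maxima)):
        radius = value / top if top else 0.0
        angle = 2 * math.pi * i / k  # axis i, counter-clockwise from +x
        points.append((radius * math.cos(angle), radius * math.sin(angle)))
    return points


maxima = [12_000, 150_000, 14.0, 3.5, 2_500_000]  # hypothetical column maxima
strong = star_vertices([11_000, 140_000, 12.0, 3.2, 2_300_000], maxima)
weak = star_vertices([40, 100, 2.5, 1.1, 0], maxima)  # zero usage -> vertex at the centre
for name, (x, y) in zip(INDICATORS, weak):
    print(f"{name:13s} ({x:+.3f}, {y:+.3f})")
```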

The institutions listed in Table 10 are also regarded as the top South Korean universities in terms of the number of qualified faculty and the size of research funds.

Relationship between article use and research output by subject

We analyzed, by field, the statistical relationship between e-journal use and the research output indicators, namely the number of publications in SCI(E) journals, times cited and average JIF. Table 11 presents the correlations of e-journal usage with these three research output indicators in 23 WoS standard fields.

The correlation coefficients for e-journal use with the research performance indicators differ by field. The r value for e-journal use with the number of publications varies dramatically, from −0.06 to 0.96. Computer Science and Social Sciences, general are the fields with the highest correlation between e-journal use and the number of publications in SCI(E) journals, and Arts and Humanities has the lowest. Clinical Medicine, Immunology and Social Sciences, general have the highest r values between e-journal use and times cited. The highest correlation between e-journal use and average JIF, 0.52, is found in Physics. The weakest relationship between e-journal use and average JIF was found in Computer Science, the field with the strongest association between e-journal usage and publications in SCI(E) journals. The degree of association between e-journal use and research output at each institution by field did not correlate with the strength of research performance by field. Nevertheless, e-journal use had a strong positive correlation with the number of publications in SCI(E) journals and the times cited in every WoS standard field except Arts and Humanities, as illustrated in Fig. 4.

In this study, we observed that measures of research article use had a strong positive relationship with the two quantitative research output indicators and roughly medium correlations with the two average indicators in our institutional dataset, regardless of the time period or the subject field. In the comparative analysis, e-journal use was more strongly associated with the number of publications in SCI(E) journals and the times cited than were the measures of human resources or research funds. For the two average indicators of research output quality, the r values for e-journal use did not differ significantly from those for external research funds per faculty member, which had the highest values.
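The text does not state which test was used to judge that two correlations "did not differ significantly". Because both correlations share the same outcome variable and are measured on the same institutions, one standard option is Williams's t for dependent correlations, with n − 3 degrees of freedom. The sketch below implements that test as an assumed choice; the function name and all input values are hypothetical.

```python
# Sketch (assumed test, not stated in the study): Williams's t for comparing two
# correlations that share one variable, e.g. r(avg JIF, e-journal use) versus
# r(avg JIF, external funds), measured on the same n institutions.
import math


def williams_t(r_jk, r_jh, r_kh, n):
    """Williams's t for H0: rho_jk = rho_jh; df = n - 3.

    r_jk, r_jh: correlations of the shared variable j with k and with h;
    r_kh: correlation between k and h.
    """
    det_r = 1 - r_jk**2 - r_jh**2 - r_kh**2 + 2 * r_jk * r_jh * r_kh
    r_bar = (r_jk + r_jh) / 2
    denominator = 2 * (n - 1) / (n - 3) * det_r + r_bar**2 * (1 - r_kh) ** 3
    return (r_jk - r_jh) * math.sqrt((n - 1) * (1 + r_kh) / denominator)


# Hypothetical values: usage r = 0.55, funds r = 0.60, usage-funds r = 0.70, n = 100
t = williams_t(0.55, 0.60, 0.70, 100)
print(f"t = {t:.2f} with df = 97")  # |t| well below ~1.98, so not significant at 5%
```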

Miller (1992) concluded that the combination of organizational and bibliometric indicators offered a valid option for assessing the quality of research produced by research organizations. We suggest that e-journal use statistics by institution may be included among such organizational data or used as indicators for assessing institutions. We expect that the number of articles used may serve as a more direct and reliable indicator for estimating research performance at each institution.

Conclusions and further work

In this study, we explored the statistical relationship between research output and e-journal usage at institutions in South Korea by performing comparative and diachronic analyses as well as an analysis by field. Three sets of data, one for each author type, were compiled for the comparative and diachronic analyses. Because different data sources were used, a considerable number of missing values appeared in our datasets, and various mapping issues had to be resolved prior to the analysis. Two techniques for handling missing data were applied and the effect of each technique was discussed. To analyse the institutional data by field, journals were first mapped to subject fields, and the statistics were then summarized by field.

We found that the distributions of the number of times cited, the number of articles published in SCI(E) journals and the number of articles used by institutions were highly skewed to the right, whereas average JIF values exhibited an almost normal distribution. The distribution of average times cited per article was slightly skewed to the right in the 1-year data; however, the distribution of the same variable in the 10-year dataset was reasonably close to normal. In addition, we investigated the statistical relationship between research output indicators and article use with short- and long-term datasets. Although the considerable amount of missing data was problematic, we identified the missing data and applied two NA handling methods to calculate the correlations between the four research output indicators and article use. We observed that e-journal usage showed a stronger correlation with the number of publications and the times cited than did the number of undergraduates, graduates and faculty members and the amount of research funding, regardless of NA handling method or author type. For the two average indicators, the differences between the maximum correlation values, obtained for average external research funding per full-time faculty member, and the correlation values for e-journal usage were not significant. Statistically, the accountability of e-journal usage for the average times cited per article and the average JIF was quite close to that of the amount of external research funding. The statistics for article use exhibited a strong positive correlation with the number of articles published in SCI(E) journals and the times cited regardless of author type, time period, subject category and NA handling method. The average times cited per article and the average JIF are heavily influenced by institutions that publish fewer articles.
This has resulted in differences in the correlations between the total number of articles used and the two average variables, depending on the time period and the NA handling method employed. For the short-term data, the correlations of usage numbers with the average times cited per article and the average JIF are relatively weak under median substitution and stronger under listwise deletion. In the long-term data, however, the differences due to the NA handling method in the correlations of article use with the two average variables were not significant. We observed that the top-five institutions in South Korea, with respect to the number of publications in SCI(E) journals, generally maintain a balance across the types of academic activity, producing outstanding research output while using existing publications. Finally, we confirmed that the association of e-journal use with the two quantitative research indicators is strong in the analysis by field, with the exception of Arts and Humanities. These results may be used to predict trends in research and development at the institutional and national levels.
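The contrast drawn in these conclusions between right-skewed count variables and a nearly normal average JIF can be quantified with a skewness coefficient. The sketch below uses the moment coefficient g1 = m3 / m2^1.5 on invented data; it is one common measure, and the text does not say which diagnostic the study relied on.

```python
# Hypothetical sketch: moment coefficient of skewness, g1 = m3 / m2**1.5, as
# one way to quantify "skewed to the right" versus "almost normal".
from statistics import fmean


def skewness(values):
    """Population-moment skewness; > 0 means a longer right tail."""
    n = len(values)
    m = fmean(values)
    m2 = sum((v - m) ** 2 for v in values) / n
    m3 = sum((v - m) ** 3 for v in values) / n
    return m3 / m2**1.5


times_cited = [5200, 900, 400, 150, 80, 40, 10]  # invented: a few very large institutions
avg_jif = [1.8, 2.2, 2.5, 2.8, 3.2]              # invented: roughly symmetric
print(f"times cited: g1 = {skewness(times_cited):.2f}")
print(f"average JIF: g1 = {skewness(avg_jif):.2f}")
```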

From the viewpoint of science policy studies, trends in research are of considerable importance (Vinkler 2010). Identifying and predicting emerging or declining research fields by tracking article use by subject may provide informative tools for science policy-makers. We intend to address this question in a further study at the journal level using the same data sources. Furthermore, it would be of interest to explore the relationship between research output and article use at other levels, such as those of the individual, research group, nation or region, taking into account various indicators and data sources. If a strong relationship between research output and article use is found in general, usage data could conceivably contribute to a better understanding of scholarly communication, activities and impact.

Notes

The average JIF for each institution is based on the Journal Citation Reports published by the WoS for the corresponding publication year, and is compiled by the NSTC.

Historical data on the numbers of undergraduates, graduates and faculty members and on the average internal/external research funds per faculty member were not available for the long-term study. Institutional statistics from KUAI and KESS for years before 2010 are currently not available to the public.

References

Bollen, J., & Van de Sompel, H. (2008). Usage impact factor: the effects of sample characteristics on usage-based impact metrics. Journal of the American Society for Information Science and Technology, 59(1), 136–149.

Bollen, J., Van de Sompel, H., Hagberg, A., & Chute, R. (2009). A principal component analysis of 39 scientific impact measures. PLoS One, 4(6), e6022. doi:10.1371/journal.pone.0006022.

Brody, T., & Harnad, S. (2005). Earlier web usage statistics as predictors of later citation impact. http://arxiv.org/abs/cs/0503020. Accessed October 30, 2012.

Choi, H., Kim, B., Jung, Y., & Choi, S. (2013). Korean scholarly information analysis based on Korea Science Citation Database (KSCD). Collnet Journal of Scientometrics and Information Management, 7(1), 1–33. doi:10.1080/09737766.2013.802625.

CIBER Research Ltd. (2011). The journal usage factor: Exploratory data analysis. Stage 2 Final Report. http://www.projectcounter.org/documents/CIBER_final_report_July.pdf. Accessed May 23, 2013.

COUNTER. (2008). Release 3 of the COUNTER code of practice for journals and databases. http://www.projectcounter.org/code_practice.html. Accessed November 8, 2010.

Gorraiz, J., & Gumpenberger, C. (2010). Going beyond citation: SERUM—A new tool provided by a network of libraries. Liber Quarterly, 20(1), 80–93.

Gumpenberger, C., Wernisch, A., & Gorraiz, J. (2012). Reality-check: Cost-related journal assessment from a practical point of view. Journal of Scientometric Research, 1(1), 35–43.

Howell, D. C. (2007). The analysis of missing data. In W. Outhwaite & S. Turner (Eds.), Handbook of social science methodology. London: Sage.

International Coalition of Library Consortia. (2006). Revised guidelines for statistical measures of usage of web-based information resources. http://icolc.net/statement/revised-guidelines-statistical-measures-usage-web-based-information-resources. Accessed November 6, 2012.

Jung, Y., & Kim, J. (2013). Hybrid standard platform for e-journal usage statistics management. Lecture Notes in Electrical Engineering, 215, 1105–1115. doi:10.1007/978-94-007-5860-5_132.

Jung, Y., Kim, J., & Kim, H. (2013). STM e-journal use analysis by utilizing KESLI usage statistics consolidation platform. Collnet Journal of Scientometrics and Information Management, 7(2), 205–215. doi:10.1080/09737766.2013.832903.

Larsen, P. O., & Von Ins, M. (2010). The rate of growth in scientific publication and the decline in coverage provided by Science Citation Index. Scientometrics, 84(3), 575–603. http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=2909426&tool=pmcentrez&rendertype=abstract. Accessed November 6, 2012.

Matthews, T. E. (2009). Improving usage statistics processing for a library consortium: The virtual library of Virginia’s experience. Journal of Electronic Resources Librarianship, 21(1), 37–47. doi:10.1080/19411260902858573.

Miller, R. (1992). The influence of primary task on R&D laboratory evaluation: A comparative bibliometric analysis. R&D Management, 22, 3–20. doi:10.1111/j.1467-9310.1992.tb00785.x.

Moed, H. F. (2005). Statistical relationships between downloads and citations at the level of individual documents within a single journal. Journal of the American Society for Information Science and Technology, 56(10), 1088–1097. doi:10.1002/asi.20200.

National Science and Technology Commission. (2011). SCI Analysis Research. Daejeon: National Science and Technology Commission.

NISO. (2007). Standardized usage statistics harvesting initiative (SUSHI): Z39.93. http://www.niso.org/apps/group_public/download.php. Accessed November 8, 2010.

Priem, J., Piwowar, H. A., & Hemminger, B. M. (2012). Altmetrics in the wild: Using social media to explore scholarly impact. http://arxiv.org/html/1204.4745v1. Accessed May 4, 2012.

Shepherd, P. (2011). The journal usage factor project: Results, recommendations and next steps. http://www.projectcounter.org/documents/Journal_Usage_Factor_extended_report_July.pdf. Accessed December 7, 2011.

The PLoS Medicine Editors. (2006). The impact factor game: It is time to find a better way to assess the scientific literature. PLoS Medicine, 3(6), e291. doi:10.1371/journal.pmed.0030291.

Vinkler, P. (2010). Scientometric assessments: Application of scientometrics for the purposes of science policy. The evaluation of research by scientometric indicators. Oxford, UK: Chandos Publishing.

Welch, J. M. (2005). Who says we’re not busy? Library web page usage as a measure of public service activity. Reference Services Review, 33(4), 371–379. doi:10.1108/00907320510631526.

Wood, F. (1990). Factors influencing research performance of university academic staff. Higher Education, 19, 81–100.

Yoon, H. (2007). Correlation analysis between national competitiveness and national research competitiveness in OECD countries. Journal of Korean Society for Library and Information Science, 41(1), 105–123.

Zainab, A. N., Abrizah, A., Husna, M. Z. N., Raj, R. G., Aruna, T., Dzul Nizam, M. P., & Zulfadhli, M. Z. (2012). Adding value to Malaysian scholarly journals through MyCite, Malaysian citation indexing system. In Proceedings of international conference on journal citation systems in Asia Pacific countries (pp. 1–16).

Acknowledgments

This work was supported by a KISTI Grant funded by the Korea government (No.: K-15-L02-C01-S01).

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

About this article

Cite this article

Jung, Y., Kim, J., So, M. et al. Statistical relationships between journal use and research output at academic institutions in South Korea. Scientometrics 103, 751–777 (2015). https://doi.org/10.1007/s11192-015-1563-0

DOI: https://doi.org/10.1007/s11192-015-1563-0