Abstract

Purpose

To evaluate the dimensionality and measurement invariance of the aphasia communication outcome measure (ACOM), a self- and surrogate-reported measure of communicative functioning in aphasia.

Methods

Responses to a large pool of items describing communication activities were collected from 133 community-dwelling persons with aphasia of ≥ 1 month post-onset and their associated surrogate respondents. These responses were evaluated using confirmatory and exploratory factor analysis. Chi-square difference tests of nested factor models were used to evaluate patient–surrogate measurement invariance and the equality of factor score means and variances. Association and agreement between self- and surrogate reports were examined using correlation and scatterplots of pairwise patient–surrogate differences.

Results

Three single-factor scales (Talking, Comprehension, and Writing) approximating patient–surrogate measurement invariance were identified. The variance of patient-reported scores on the Talking and Writing scales was lower than surrogate-reported variances on these scales. Correlations between self- and surrogate reports were moderate-to-strong, but there were significant disagreements in a substantial number of individual cases.

Conclusions

Despite minimal bias and relatively strong association, surrogate reports of communicative functioning in aphasia are not reliable substitutes for self-reports by persons with aphasia. Furthermore, although measurement invariance is necessary for direct comparison of self- and surrogate reports, the costs of obtaining invariance in terms of scale reliability and content validity may be substantial. Development of non-invariant self- and surrogate report scales may be preferable for some applications.

Introduction

Aphasia is an acquired neurogenic impairment of language performance, usually resulting from focal brain damage involving the dominant (usually left) hemisphere [1]. In most cases, communication deficits are present in all input and output modalities (i.e., speaking, understanding, reading, and writing), and they are disproportionate to any other cognitive impairments that may be present [1]. The term aphasia specifically excludes motor speech disorders resulting from muscle weakness or incoordination (e.g., dysarthria), as well as communication impairments resulting from dementia, delirium, coma, or sensory loss [1]. Stroke is the most common cause of aphasia [2], and approximately 20 % of stroke survivors have persisting aphasia [3]. The worldwide incidence and prevalence of aphasia are not known, but there are currently estimated to be more than 1 million people living with the condition in the United States [4]. The negative consequences of aphasia include psychosocial difficulties, reduced functional independence, and diminished vocational opportunities.

The measurement of communication outcomes is critical to the care of patients with aphasia and to the evaluation of stroke rehabilitation programs. In addition to traditional performance-based and clinical indicators of communication functioning, increasing emphasis has been placed on patient-centered assessments. Several patient-reported stroke outcome assessments include sub-scales of communication functioning [5–7], and additional scales have been developed specifically for patients with aphasia [8–12].

One issue that has concerned developers and users of these and related scales is the extent to which stroke survivors in general, and stroke survivors with aphasia specifically, can provide valid self-reports of their own functioning [13–21]. This concern has led to the collection of proxy (Footnote 1) reports and their direct comparison with patients’ self-reports [13–20]. It has also been noted that proxy reports may constitute a valid perspective in their own right, regardless of their correspondence with patients’ ratings [22, 23].

Stroke-specific studies that have included participants with aphasia are in agreement with the more general literature that patient and proxy respondents demonstrate higher agreement on ratings of more directly observable domains (e.g., physical function vs. energy) and that proxies tend to rate patients as more limited than patients rate themselves [16–18]. In these studies, the strength of association between patient and proxy reports, expressed as intraclass correlation coefficients, has ranged from 0.50 to 0.70 for language and communication scales. Studies specific to patients with aphasia have produced similar findings [13–15]. Some researchers in this area have concluded that, in cases where patients with aphasia are unable to give valid self-reports, substitution with proxy reports is appropriate [13, 16]. Others have been more cautious [14, 17].

One limitation of these patient–proxy comparison studies is that they have not evaluated whether the scales in question have invariant measurement properties in the two groups. Investigation of measurement invariance asks whether a scale measures the same construct in the same way in two different populations. Questions of measurement invariance may be addressed using latent variable modeling approaches to psychological measurement. Within this framework, observed responses to test items are taken as indicators of unobserved (latent) constructs that are the actual objects of study [24]. Thus, a model relating the observed scores to the underlying latent construct is necessary, and when group comparisons are made, it must be shown that this model is structured similarly for the groups involved [25, 26]. Without demonstration of invariance, between-group comparisons of means, variances, and covariances may be confounded [25, 27, 28]. While investigations of measurement invariance in patient-reported health-status assessment have frequently focused on cultural, ethnic, gender, and age differences [29–35], the issue is equally applicable to potential differences in how patients and their proxies use self-report scales.

A related issue concerns the underlying conceptual structure of communication functioning. In order to evaluate measurement invariance, the structure of the latent variable in question must first be established within a reference population. Among the many instruments that have been developed to assess various aspects of functional communication in aphasia [7–12, 22, 36–43], there is a general lack of a unifying conceptual structure [40] and much variability in how the construct has been operationalized [44]. Some instruments propose multiple subdomains of communication functioning that may be assessed individually or in combination [36, 41], others provide only an overall score [22, 39], and still others have chosen to measure communication as an undifferentiated aspect of general cognition [33, 34].

In this context, we have begun to develop a new self- and surrogate-reported (Footnote 2) instrument for measuring communication functioning in persons with aphasia: the aphasia communication outcome measure (ACOM). Initial steps in developing the ACOM item pool were reported in a prior paper [45]. In the present study, we asked the following questions: (1) Do items describing self- and surrogate-reported communication functioning in aphasia reflect a single unidimensional scale? We plan to develop one or more communication functioning item banks calibrated to an item response theory model [43]. Because the most easily applied item response theory models assume unidimensionality, the present paper is focused on defining valid single-factor scales. (2) Do self- and surrogate ratings of communication functioning demonstrate measurement invariance? That is, can they be interpreted and directly compared using a common scale? (3) To what extent do self- and surrogate ratings of communication functioning agree? (4) Are persons with severe aphasia able to provide meaningful self-reports about their own communication functioning?

Methods

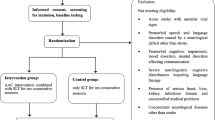

Participants were 133 persons with aphasia (PWAs) and 133 surrogate respondents. PWAs met the following inclusion criteria: diagnosis of aphasia ≥ 1 month post-onset; community dwelling; self-reported normal pre-morbid speech-language function; pre-morbid literacy with English as a first language; negative self-reported history of progressive neurological disease, psychopathology, and substance abuse; ≥ 0.6 delayed/immediate ratio on Arizona Battery for Communication Disorders of Dementia Story Retell [46]; ≤ 5 self-reported depressive symptoms on the 15-item Geriatric Depression Rating Scale [47]; and Boston Diagnostic Aphasia Exam severity rating ≥ 1. Surrogate (SUR) respondents met similar criteria, except for the diagnosis of aphasia, and reported weekly or more frequent contact with their respective PWA both prior to and after aphasia onset. A subset of the PWAs (n = 116) was also administered the Porch Index of Communicative Ability [48], a performance-based test of communication impairment. Demographic and clinical characteristics of the sample are summarized in Tables 1 and 2.

The initial ACOM item pool comprised 177 items describing various communication activities. The content of the items is presented in Appendices A and B. Participants were asked to rate on a 4-point scale (not at all, somewhat, mostly, completely) how effectively the PWA performs each activity. “Effectively” was defined as “accomplishing what you want to, without help, and without too much time or effort.” Respondents were also permitted to indicate that they had no basis for rating a particular item or that the PWA did not do the activity in question for some reason other than his/her aphasia, in which cases the responses were coded as missing data. For example, many surrogates indicated that they had no basis for rating the item “get help in an emergency” because they had never observed their partner do this, and many PWAs responded similarly because they had not experienced any emergencies since the onset of their aphasia.

Responses from PWAs and surrogates were collected separately by trained research staff using an interviewer-assisted administration format. Each item was displayed on a computer screen in large font along with the stem “How effectively do you…” (for PWAs) or “How effectively does your partner…” (for surrogates). The examiner read each item aloud and also permitted the respondent to read it. The computer screen also displayed a vertical bar representing the response categories with text labels. Participants were permitted to give their responses verbally, by pointing to the screen, or a combination. In cases where there was any uncertainty about the validity of the response, the examiner verified the response by verbally repeating the item and the response back to the participant and also indicating the chosen category on the screen.

Analyses and results

Item reduction

To address our research questions, we took a factor-analytic approach, using Mplus version 5.2 [49] with the weighted least squares mean-and-variance-adjusted estimator. We began the analysis by collapsing item response categories with < 10 observed responses in either the PWA or SUR data with adjacent categories. For example, if the response category “completely” was used for a particular item by fewer than ten PWA, we collapsed “completely” with “mostly” and treated these two responses as the same for this particular item. Also, we excluded items with ≥ 5 % missing responses for either the PWA or SUR. Missing data were handled with pairwise deletion. Items retained in the analyses (n = 101) described below are presented in Online Resource 1. Items excluded by the missing data criterion (n = 76) are presented in Online Resource 2.
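The two screening rules described above (merging sparse response categories and dropping items with too much missing data) can be sketched as follows. This is a minimal illustration in Python, not the code used in the study. The function names `collapse_map` and `keep_item`, the integer coding of the four response categories (0 to 3, with `None` for missing), and the choice to merge a sparse category into its lower neighbour (or upward for the lowest category) are assumptions; the text specifies only that sparse categories were merged with adjacent ones.

```python
from collections import Counter

MIN_COUNT = 10      # categories with fewer observed responses are merged (from the text)
MAX_MISSING = 0.05  # items at or above this missing rate are excluded (from the text)


def collapse_map(pwa, sur, n_categories=4, min_count=MIN_COUNT):
    """Map each original category to its collapsed category for one item.

    A category is treated as sparse if it has fewer than `min_count`
    responses in EITHER the PWA or the SUR data.
    """
    counts = [Counter(r for r in group if r is not None) for group in (pwa, sur)]
    blocks = [[c] for c in range(n_categories)]  # each block is a set of merged categories

    def sparse(block):
        return any(sum(cnt[c] for c in block) < min_count for cnt in counts)

    while len(blocks) > 1:
        idx = next((i for i, b in enumerate(blocks) if sparse(b)), None)
        if idx is None:
            break
        # Assumed rule: merge into the lower neighbour, or upward for the lowest block.
        other = idx - 1 if idx > 0 else idx + 1
        lo, hi = sorted((idx, other))
        blocks[lo:hi + 1] = [blocks[lo] + blocks[hi]]
    return {c: new for new, block in enumerate(blocks) for c in block}


def missing_rate(responses):
    """Proportion of responses coded as missing (None)."""
    return sum(r is None for r in responses) / len(responses)


def keep_item(pwa, sur, max_missing=MAX_MISSING):
    """Retain an item only if both sources of report are below the missing-data cutoff."""
    return max(missing_rate(pwa), missing_rate(sur)) < max_missing
```

Applied to an item for which fewer than ten PWAs chose "completely" (coded 3), `collapse_map` would recode 3 to the same value as "mostly" (coded 2) for both sources of report, which matches the example given in the text.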

An initial attempt to fit the 101 retained items to a single-factor model yielded poor fit for both the PWA and SUR data [comparative fit index (CFI) < 0.9, Tucker–Lewis Index (TLI) < 0.95, and root mean square error of approximation (RMSEA) > 0.10]. Next, we performed separate exploratory factor analyses on the PWA and SUR data. A three-factor model provided marginally adequate fit for both PWA (CFI = 0.949, TLI = 0.971, RMSEA = 0.074) and SUR (CFI = 0.949, TLI = 0.979, RMSEA = 0.081).

The factors identified in these exploratory models defined coherent groupings of item content and were predominantly consistent across the two sources of report. The item content and salient loadings (> 0.4) are presented in Online Resource 1. For both groups, the items that loaded onto the first factor were primarily related to verbal expression (talking), with the second and third factors related to writing (including typing) and comprehension (both auditory and written), respectively. The factor correlation matrix, presented in Table 3, was similar across the PWA and SUR samples.

Based on the above analysis, we selected three item subsets, henceforth referred to as domains, based on the content groupings identified by the three factors that the PWA and SUR participants had in common: Talking, Comprehension, and Writing. The subsequent analysis steps were carried out separately for each domain and included: item reduction, testing of measurement invariance, and analysis of patient–surrogate agreement.

First, we fit a series of unidimensional confirmatory factor models separately for the PWA and SUR items within each domain. When a one-factor model demonstrated poor fit, an exploratory model was estimated and items with non-salient loadings on the primary factor were excluded until adequate fit to a unidimensional model was achieved. We also inspected the model modification indices provided by Mplus and excluded items that contributed substantially to model misfit. We considered a model to have adequate fit when the following criteria were met: CFI > 0.95, TLI > 0.95, RMSEA < 0.08, and weighted root mean square residual (WRMR) < 1.0 [47] (Footnote 3). In excluding items based on the factor analysis results, we also attempted to retain the largest possible groups of items with the most directly related content.
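The adequacy rule stated above is a conjunction of four cutoffs, which the following sketch makes explicit. The function name `adequate_fit` is hypothetical, and the fit values would in practice be read from the model output. No values from the study are reproduced here.

```python
def adequate_fit(cfi, tli, rmsea, wrmr):
    """Return True only when all four cutoffs from the text are met.

    Cutoffs: CFI > 0.95, TLI > 0.95, RMSEA < 0.08, WRMR < 1.0.
    """
    return cfi > 0.95 and tli > 0.95 and rmsea < 0.08 and wrmr < 1.0
```

Because the criteria are strict inequalities applied jointly, a model that misses any single cutoff, even narrowly, is classed as not adequately fitting under this rule.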

Starting with an initial set of 50 Talking items, we retained 24 items that fit a unidimensional model for both sources of report. The content of the retained items was primarily related to verbal conversation and social interaction, for example, “tell people about yourself” and “start a conversation with other people.” By contrast, much of the excluded item content related to de-contextualized verbal performance, for example, “say the names of clothing items,” and basic communication, for example, “say your name.” Item reduction for the Comprehension domain began with 29 items. Ten items were retained in the final model, all of which described auditory comprehension activities, for example, “follow group conversation,” and “follow tv shows.” For the Writing domain, item reduction began with 18 items. Fourteen items were retained in the final factor model, including “write down a phone message” and “write your name.”

Measurement invariance

To evaluate measurement invariance for each scale, we tested a series of nested confirmatory factor models [24, 25, 28], using the theta parameterization option in Mplus and the DIFFTEST option for Chi-square difference testing of nested models. Because of the potential dependency between the PWA and SUR item pairs with identical content, we did not conduct a traditional multiple group analysis, but instead treated the paired PWA and SUR responses as a single case [50]. We specified a series of 2-factor models in which the PWA responses loaded on the first factor and the SUR responses loaded on the second. In order to model the PWA–SUR dependency, the errors for each item pair were permitted to covary. The first model tested in each domain evaluated configural invariance, which requires that items respond to the same factor(s) in both groups [24]. This model permitted item thresholds, factor loadings, and factor variances to vary across the two groups [49]. Next, we evaluated weak and strong factorial invariance in a single step. Weak invariance requires that factor loadings be equal across groups and permits valid comparisons of estimated factor variances and covariances. Strong invariance adds the constraint that item thresholds are equal for both groups and supports valid comparison of estimated group means [24]. In this second step, we tested a model in which the factor loadings and thresholds for each PWA–SUR item pair were constrained to be equal. Finally, we evaluated strict factorial invariance, which adds the additional constraint that the residual variance for each item must be equivalent in the two groups. When strict factorial invariance is met, observed score variances and covariances may be validly compared, and additional support for the validity of group mean comparisons is provided as well [24]. In each case, we used Chi-square difference testing to evaluate whether the added model constraints significantly (p < 0.05) worsened fit.
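The logic of nested-model comparison can be illustrated with the ordinary Chi-square difference test, sketched below. One caveat matters: with the mean-and-variance-adjusted weighted least squares estimator used here, the difference between two model Chi-square values is not itself Chi-square distributed, which is why the Mplus DIFFTEST option is required. The sketch therefore shows only the simpler textbook case in which the plain difference is valid (for example, unadjusted maximum likelihood statistics). The function names `chi2_sf` and `chi_square_difference` are hypothetical.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability of a Chi-square variate with integer df."""
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        # Closed form for even df: exp(-x/2) * sum_{k < df/2} (x/2)^k / k!
        term = math.exp(-half)
        total = term
        for k in range(1, df // 2):
            term *= half / k
            total += term
        return total
    # Odd df: start from erfc and add half-integer gamma terms.
    total = math.erfc(math.sqrt(half))
    term = math.exp(-half) * math.sqrt(half) / math.gamma(1.5)
    for k in range(1, (df - 1) // 2 + 1):
        total += term
        term *= half / (k + 0.5)
    return total


def chi_square_difference(chi2_restricted, df_restricted, chi2_free, df_free, alpha=0.05):
    """Test whether added constraints significantly worsen model fit."""
    delta_chi2 = chi2_restricted - chi2_free
    delta_df = df_restricted - df_free
    if delta_df <= 0:
        raise ValueError("the restricted model must have more degrees of freedom")
    p = chi2_sf(delta_chi2, delta_df)
    return {"delta_chi2": delta_chi2, "delta_df": delta_df, "p": p, "reject": p < alpha}
```

A significant result (`reject` is true) indicates that the more constrained model, for example one with equal loadings and thresholds across PWA and SUR, fits significantly worse than the less constrained model, so that level of invariance is not supported.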

As shown in Table 4, the strong invariance model for the Talking scale was rejected. Modification indices showed that the constraints on the factor loadings for two items, “speak to family members and friends on the phone” and “ask questions to get information,” were the largest contributors to the significant Chi-square difference test. We estimated a model in which these constraints were relaxed, permitting the loadings for these items to be freely estimated across patients and surrogates. This partial invariance model [24, 28] was tenable. Table 5 presents the results of measurement invariance testing for the Comprehension scale. The strong and strict invariance models were both tenable. For the Writing scale, shown in Table 6, the strong invariance model was rejected. Modification indices showed that the constraints on the thresholds for the item “dial a telephone number” were the strongest contributors to misfit. A model that estimated separate PWA and SUR thresholds for this item provided support for partial strong invariance. A partial strict invariance model that maintained free estimation of the thresholds for this item also showed adequate fit and a non-significant Chi-square difference test.

Patient–surrogate agreement

Having established measurement invariance for the three scales, we evaluated agreement between self- and surrogate reports in three ways. First, we inspected the correlations between the PWA and SUR factor scores for each scale. The correlations were 0.71, 0.50, and 0.89 for Talking, Comprehension, and Writing, respectively, suggesting moderate-to-strong relationships between self- and surrogate reports in each domain.

Second, we further constrained the restricted invariance factor models described above to test the equality of the means and variances between self- and surrogate reports. For the Talking and Writing scales, the models specifying equal PWA and SUR means were tenable, but the models specifying equal variances were not (see Tables 4, 6). In both cases, the SUR distribution had higher variance. For the Comprehension scale, there were no significant differences between PWA and SUR means or variances.

Third, to evaluate the magnitude of individual PWA–SUR differences and their relationship to overall level of reported functioning, we constructed Bland–Altman plots for each domain [51]. These plots, displayed in Fig. 1, show the PWA–SUR difference as a function of the average of the PWA and SUR scores, which serves as an estimate of the true level of functioning. For the Talking and Writing scales, there was a weak, but statistically significant negative correlation between the PWA–SUR difference and the average. This suggests that for PWA with lower reported functioning, SUR participants tended to underestimate ability relative to PWA, and for PWA with higher reported functioning, SUR participants tended to overestimate ability relative to PWA. We also used the estimated reliability for each scale (Talking: 0.94; Comprehension: 0.86; Writing: 0.93) to compute the 95 % CI about the assumption of a null difference between individual PWA and SUR score pairs. These confidence intervals are shown in Fig. 1. Cases falling outside these intervals showed statistically significant disagreement at p < 0.05. Thirty-three percent of PWA–SUR differences were significant on the Talking scale, 26 % were significant on the Comprehension scale, and 15 % were significant on the Writing scale.
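The quantities behind a Bland–Altman analysis of this kind can be sketched as follows. The text does not give the formula used for the interval about a null difference, so the sketch assumes the classical test theory standard error of measurement, SEM = SD × √(1 − reliability), applied to each source of report and combined as the square root of the sum of squared SEMs. That formula, the function names, and the use of a single reliability value for both sources are assumptions made for illustration.

```python
import math
import statistics


def pearson(x, y):
    """Pearson correlation computed from sums of squares."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def bland_altman(pwa_scores, sur_scores, reliability, z=1.96):
    """Pairwise differences, pair averages, and a null-difference interval."""
    diffs = [p - s for p, s in zip(pwa_scores, sur_scores)]
    means = [(p + s) / 2 for p, s in zip(pwa_scores, sur_scores)]
    # Assumed: SEM = SD * sqrt(1 - reliability) for each source of report.
    sem_pwa = statistics.stdev(pwa_scores) * math.sqrt(1 - reliability)
    sem_sur = statistics.stdev(sur_scores) * math.sqrt(1 - reliability)
    half_width = z * math.sqrt(sem_pwa ** 2 + sem_sur ** 2)
    return {
        "diffs": diffs,
        "means": means,
        "half_width": half_width,
        # A pair disagrees significantly if its difference falls outside the interval.
        "significant": [abs(d) > half_width for d in diffs],
        # A negative value means the more extreme scores come from SUR.
        "diff_mean_correlation": pearson(diffs, means),
    }
```

With differences defined as PWA minus SUR, surrogate scores that are more extreme than patient scores at both ends of the scale produce a negative correlation between the difference and the average, which is the pattern reported for Talking and Writing.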

Fig. 1 Bland–Altman plots for each ACOM scale. The plots for Talking and Writing demonstrate a weak but significant tendency for SUR respondents to give more extreme scores than PWA respondents. The dashed lines in each plot mark the 95 % CI about the assumption of null PWA–SUR difference. Points outside these lines indicate significant disagreement at p < 0.05.

Effects of comprehension impairment on patient responses

Finally, in order to evaluate whether comprehension impairment negatively affected PWAs’ ability to provide meaningful responses, we conducted an additional series of factor analyses. We included in these analyses only the 116 participants for whom we had PICA scores, and we began by stratifying this sample into two sub-groups based on comprehension performance. Specifically, we divided the sample into groups with severe (n = 39) and mild or moderate (n = 77) comprehension impairments based on the average of their raw scores on the PICA auditory and reading comprehension subtests.

We then evaluated measurement invariance between the severely impaired sub-sample and the remaining participants, using an approach similar to that described above. This analysis was motivated by the hypothesis that if comprehension impairment prevented participants with severe aphasia from understanding and validly responding to the questions, this should be reflected in non-invariant parameter estimates for the severe group compared with the rest of the sample. Put differently, if participants with severe aphasia were responding based on incorrect understanding of the items, the items’ positions relative to one another on the latent trait scale and the relative strength of their relationships to the latent trait should be affected. The major difference between the present analyses and the analyses of PWA–SUR invariance described above was that in this case the sub-samples were independent, permitting us to conduct traditional multiple group analyses in which only one factor for each scale was specified. Also, for these analyses, we tested only configural, weak, and strong invariance, because tests of strict invariance are not particularly relevant for this question.

The results of these analyses are presented in Table 7. For the Talking and Comprehension scales, the Chi-square difference tests were not significant, suggesting that severity of comprehension impairment was not associated with reliable differences in factor loadings or intercepts. For the Writing scale, the test was significant (p = 0.048). Inspection of the modification indices revealed that the constrained intercepts for the item “communicate by email” were the single largest contributor to model misfit. Participants with severe comprehension impairment found this item to be harder (relative to the other items in the Writing scale) than did the participants with mild-to-moderate comprehension impairment. With this constraint relaxed, the Chi-square difference test was no longer significant.

Discussion

This is the first investigation of agreement between patient and proxy reports of communication functioning in aphasia that has demonstrated measurement invariance of the scales in question, a necessary precondition for making the comparison. The first aim of this study was to evaluate whether self- and surrogate-reported communication functioning can be measured on the same unidimensional scale. We conducted a series of exploratory and confirmatory factor analyses to reduce a large initial item pool to form three single-factor scales: Talking, Comprehension, and Writing. The Comprehension scale demonstrated full strict measurement invariance between self- and surrogate reports. The Talking and Writing scales demonstrated partial strict invariance, after relaxing cross-group equality constraints on a small number of parameters in each model.

The second aim of this study was to evaluate the level of agreement between self- and surrogate-reported communication functioning. Correlations between PWA and SUR factor scores for Talking (0.71) and Comprehension (0.50) were moderately strong, while the correlation between Writing scores was stronger (0.89). This replicates the previous finding, noted above [13, 16, 17], that patients and proxies show better agreement on reports of functioning in more directly observable domains. Finally, we evaluated whether aphasic comprehension impairment prevented participants with severe aphasia from responding meaningfully to the items. Factor analyses of the ACOM scales using participant sub-samples stratified by severity of comprehension impairment suggested that even the participants with the most severe aphasia understood the questions sufficiently well to provide meaningful and coherently related responses.

Regarding self- and surrogate agreement, testing of nested confirmatory factor models in each domain further suggested that there was no average bias for surrogates to over- or under-report functioning relative to PWA. This finding contrasts with prior reports that proxies are generally biased to report lower functioning and/or well-being [13, 14, 17]. We also found that surrogate-reported scores had higher variance than self-reported scores in two domains, Talking and Writing. The Bland–Altman plots presented in Fig. 1 offer perspective on this finding. They show a weak but significant tendency for surrogates to assign more extreme scores than PWA in both domains. Thus, for PWA with lower ability in a given domain, SUR reports tended to result in lower score estimates and for PWA with higher ability, SUR reports tended to result in higher score estimates.

The plots in Fig. 1 also show that, despite the moderate-to-strong relationships between self- and surrogate reports, there was statistically significant disagreement in a substantial number of individual cases. Although the present analyses do not establish the clinical meaningfulness of the observed differences, we do note that for the Talking and Comprehension scales, the standard deviation of the PWA–SUR differences (0.83 and 0.94, respectively) was comparable to the standard deviation of the PWA scale scores (0.93 and 0.89, respectively). Thus, despite the lack of overall bias and moderately strong association between self- and surrogate reports, we conclude that substituting the latter for the former is inadvisable.

The construction of invariant scales is necessary for direct comparisons of self- and surrogate reports and is fundamental to any research directed at understanding the disagreements between patients and their surrogate raters. However, invariant scales will in most cases be shorter than scales that are not subject to this requirement. For example, the current Talking scale contained 24 items demonstrating configural factorial invariance across self- and surrogate reports, out of the initial 50-item pool for the Talking domain. Had we not required invariance, an additional 9 items would have been retained in the single-factor model for the SUR data. Likewise, a unidimensional Comprehension scale based solely on the PWA data would have retained all 29 items identified with that factor in the initial exploratory analysis.

This exclusion of item content in the service of measurement invariance has two potential negative effects [52]. First, it reduces reliability. On first consideration, this might not seem like a pressing concern, given the adequate reliability for group measurement of the three invariant scales reported here. However, the ACOM scales are also intended for clinical use with individual patients, which requires a minimum reliability of 0.90, or more preferably 0.95 [53]. Also, it is our intention to make the ACOM available in a computerized adaptive testing (CAT) format where larger item banks are desirable. Second, item exclusion may reduce content validity. This concern is particularly relevant to the ACOM Comprehension scale. Although the initial exploratory factor analyses suggested that auditory and reading comprehension were associated with a single factor for both PWA and SUR respondents, exclusion of all reading comprehension items was necessary to obtain configural invariance. Thus, if the goal is not to directly compare self- and surrogate reports, but instead to measure outcomes for the purpose of evaluating an intervention or a service delivery model, then the costs of achieving measurement invariance may not be justified. Self- and surrogate reports on non-invariant scales could still be obtained and used as alternative perspectives on outcome that are not directly comparable to one another.

One limitation of this study concerns the exploratory nature of the analyses used to derive the scales. In developing the ACOM, we cautiously proposed that a large item pool with relatively diverse item content might nevertheless approximate unidimensionality [45]. This hypothesis was based on prior work with patient- and surrogate-reported scales of communication functioning in aphasia [54–56] and factor-analytic studies of performance-based language functioning in aphasia [57, 58]. However, initial analyses of the current data set clearly disconfirmed this hypothesis. We elected therefore to pursue construction of modality-based scales for the domains of Talking, Comprehension, and Writing. This inconsistency with prior results may be due in part to the fact that the present investigation employed more rigorous tests of dimensionality. In any case, the fact that each of the ACOM domain scales reported here was constructed from larger initial item pools through exploratory analyses means that their good fit to the measurement models tested here may have resulted from particular characteristics of the participant sample and may not generalize to other samples. Thus, it will be important to cross-validate these results in an independent sample.

Two other limitations concern the question of whether participants with severe aphasia were able to understand the questions sufficiently well to provide meaningful responses. First, in order to evaluate this question, it was necessary to split the sample into smaller sub-samples, resulting in increased estimation error for model parameters and lower power for detecting differences between models. For this reason, our findings related to this question should be taken as preliminary rather than definitive. Second, while the current sample did include individuals with severe comprehension impairments, individuals with profound comprehension impairments (i.e., with BDAE severity ratings of 0, indicating no usable speech or comprehension) were excluded from the study. Thus, we do not claim based on our results that all persons with aphasia can provide meaningful self-reports about their own communication functioning, but rather that significant comprehension impairments do not necessarily prevent persons with aphasia from responding meaningfully to well-constructed and administered questions.

A final limitation of the present study concerns the heterogeneity of the participant sample with respect to time post-onset and frequency of contact between patients and surrogates. Either of these variables could conceivably affect the factor structure of the instrument and/or PWA–surrogate agreement. However, constraining our participant selection criteria with respect to these variables would have made it difficult or impossible to obtain the sample size necessary to address the questions of primary interest. Nevertheless, post hoc analyses of measurement invariance with respect to time post-onset (≤ 36 vs. > 36 months) suggested that factor loadings and intercepts were consistent for all three scales (all Chi-square difference test p values > 0.09). As with the analyses of comprehension severity, these results should be interpreted cautiously because of the small sample size. Also, time post-onset did not correlate significantly with signed or absolute patient–surrogate agreement for any of the scales (Pearson r’s ranged from −0.25 to 0.14, all ps > 0.12). Likewise, separate analysis of the PWA–surrogate pairs reporting daily or more frequent contact produced results that were not materially different from the full analyses reported above, and frequency of contact was not significantly correlated with PWA–surrogate agreement. In any case, these issues remain important avenues for further investigation.

Despite these limitations, the current findings have important implications for the development of patient- and surrogate-reported measures of communication functioning in aphasia. First, it is clear from the present results that patient and surrogate reports represent distinct perspectives and are not interchangeable. A second, related conclusion is that attempts to develop interchangeable scales that are equivalent for patients and surrogates may result in scales with restricted item content that may fail to capture the full range of relevant behavior and are too brief to provide reliable measurement [52]. It is therefore likely that future work on the ACOM will de-emphasize efforts to develop parallel scales for patients and surrogates. Instead, our focus will be on developing maximally reliable and valid scales for each source of report, without requiring them to be directly comparable to one another.

Notes

The term “proxy” has been used with two distinct meanings in the literature. Some authors have used the term to refer to a person close to the patient who responds as he or she believes that the patient would respond [9, 16]. Others have used the term to refer to a person close to the patient who provides his or her own assessment, without considering how the patient might respond [12, 14]. In still other cases, the meaning is not clearly specified [13].

We use the term “surrogate” here to specify the second meaning of the word “proxy” discussed above in Footnote 1, i.e., a person close to the patient who provides his or her own assessment, without trying to respond as he or she thinks that the patient would respond.

The CFI and TLI are measures of incremental or relative fit that compare the tested model to a null model, which assumes that there are no relationships between any of the observed variables. They both adjust for model complexity, the CFI with an expression that subtracts the model degrees of freedom from the model Chi-square value, while the TLI is based on the ratio of the Chi-square to its degrees of freedom. CFI and TLI values of zero indicate worst possible fit, while values close to 1 indicate relatively good fit. The RMSEA is a badness-of-fit measure where a value of zero indicates best possible fit. It is based on the model Chi-square, its degrees of freedom, and the sample size. The WRMR is a newer statistic that measures the weighted average difference between the observed and model-estimated population variances and covariances.
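For readers who prefer formulas, the verbal descriptions above correspond to the following commonly cited textbook expressions. This is only a sketch under stated assumptions: the symbols are notation introduced here, not taken from the article, with \chi^2_M and df_M denoting the Chi-square and degrees of freedom of the tested model, \chi^2_B and df_B those of the null (baseline) model, and N the sample size. The exact expressions implemented in a given software package may differ, particularly for weighted least squares estimators with categorical data (for example, some programs use N rather than N - 1 in the RMSEA). The WRMR is not reproduced here.

```latex
% Assumed notation (not from the article): M = tested model, B = null/baseline model
\begin{align}
\mathrm{CFI}   &= 1 - \frac{\max\left(\chi^2_M - df_M,\; 0\right)}
                           {\max\left(\chi^2_M - df_M,\; \chi^2_B - df_B,\; 0\right)} \\[6pt]
\mathrm{TLI}   &= \frac{\chi^2_B / df_B \;-\; \chi^2_M / df_M}
                       {\chi^2_B / df_B \;-\; 1} \\[6pt]
\mathrm{RMSEA} &= \sqrt{\frac{\max\left(\chi^2_M - df_M,\; 0\right)}
                             {df_M \,(N - 1)}}
\end{align}
```

Under these expressions, a tested model whose Chi-square does not exceed its degrees of freedom yields CFI = 1 and RMSEA = 0, which matches the interpretation of these values as indicating good fit.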

Abbreviations

- ACOM: Aphasia communication outcome measure
- CFI: Comparative fit index
- PWA: Person with aphasia
- RMSEA: Root mean square error of approximation
- SUR: Surrogate
- TLI: Tucker–Lewis index
- WRMR: Weighted root mean square residual

References

Darley, F. L. (1982). Aphasia. Philadelphia, PA: W.B. Saunders.

Chapey, R., & Hallowell, B. (2001). Introduction to language intervention strategies in adult aphasia. In R. Chapey (Ed.), Language intervention strategies in aphasia and related neurogenic communication disorders (pp. 3–17). Baltimore, MD: Lippincott Williams and Wilkins.

Kauhanen, M. L., Korpelainen, J. T., Hiltunen, P., et al. (2000). Aphasia, depression, and non-verbal cognitive impairment in ischaemic stroke. Cerebrovascular Diseases, 10, 455–461.

Code, C. (2010). Aphasia. In J. S. Damico, N. Muller, & M. J. Ball (Eds.), The handbook of speech and language disorders (pp. 317–338). West Sussex, UK: Wiley-Blackwell.

Williams, L. S., Weinberger, M., Harris, L. E., et al. (1999). Development of a stroke-specific quality of life scale. Stroke, 30, 1362–1369.

Duncan, P. W., Wallace, D., Lai, S. M., et al. (1999). The stroke impact scale version 2.0: Evaluation of reliability, validity, and sensitivity to change. Stroke, 30, 2131–2140.

Doyle, P. J., McNeil, M. R., Mikolic, J. M., et al. (2004). The Burden of Stroke Scale (BOSS) provides valid and reliable score estimates of functioning and well-being in stroke survivors with and without communication disorders. Journal of Clinical Epidemiology, 57, 997–1007.

Hilari, K., Byng, S., Lamping, D. L., et al. (2003). Stroke and Aphasia Quality of Life Scale-39 (SAQOL-39): evaluation of acceptability, reliability, and validity. Stroke, 34, 1944–1950.

Long, A., Hesketh, A., Paszek, G., et al. (2008). Development of a reliable self-report outcome measure for pragmatic trials of communication therapy following stroke: the Communication Outcome after Stroke (COAST) scale. Clinical Rehabilitation, 22, 1083–1094.

Chue, W. L., & Rose, M. L. (2010). The reliability of the Communication Disability Profile: A patient-reported outcome measure for aphasia. Aphasiology, 24, 940–956.

Glueckauf, R. L., Blonder, L. X., Ecklund-Johnson, E., et al. (2003). Functional outcome questionnaire for aphasia: Overview and preliminary psychometric evaluation. NeuroRehabilitation, 18, 281–290.

Paul, D. R., Frattali, C. M., Holland, A. L., et al. (2004). Quality of communication life scale. Rockville, MD: The American Speech-Language-Hearing Association.

Hilari, K., Owen, S., & Farrelly, S. J. (2007). Proxy and self-report agreement on the Stroke and Aphasia Quality of Life Scale-39. Journal of Neurology Neurosurgery and Psychiatry, 78, 1072–1075.

Cruice, M., Worrall, L., Hickson, L., et al. (2005). Measuring quality of life: Comparing family members’ and friends’ ratings with those of their aphasic partners. Aphasiology, 19, 111–129.

Hesketh, A., Long, A., & Bowen, A. (2010). Agreement on outcome: Speaker, carer, and therapist perspectives on functional communication after stroke. Aphasiology, 25, 291–308.

Duncan, P. W., Lai, S. M., Tyler, D., et al. (2002). Evaluation of proxy responses to the Stroke Impact Scale. Stroke, 33, 2593–2599.

Williams, L. S., Bakas, T., Brizendine, E., et al. (2006). How valid are family proxy assessments of stroke patients’ health-related quality of life? Stroke, 37, 2081–2085.

Sneeuw, K. C., Aaronson, N. K., de Haan, R. J., et al. (1997). Assessing quality of life after stroke. The value and limitations of proxy ratings. Stroke, 28, 1541–1549.

Rautakoski, P., Korpijaakko-Huuhka, A.-M., & Klippi, A. (2008). People with severe and moderate aphasia and their partners as estimators of communicative skills: A client-centred evaluation. Aphasiology, 22, 1269–1293.

Skolarus, L. E., Sanchez, B. N., Morgenstern, L. B., et al. (2010). Validity of proxies and correction for proxy use when evaluating social determinants of health in stroke patients. Stroke, 41, 510–515.

Doyle, P. J., McNeil, M. R., Hula, W. D., et al. (2003). The Burden of Stroke Scale (BOSS): Validating patient-reported communication difficulty and associated psychological distress in stroke survivors. Aphasiology, 17, 291–304.

Lomas, J., Pickard, L., Bester, S., et al. (1989). The communicative effectiveness index: Development and psychometric evaluation of a functional communication measure for adult aphasia. Journal of Speech and Hearing Disorders, 54, 113–124.

Long, A., Hesketh, A., & Bowen, A. (2009). Communication outcome after stroke: A new measure of the carer’s perspective. Clinical Rehabilitation, 23, 846–856.

Meredith, W., & Teresi, J. A. (2006). An essay on measurement and factorial invariance. Medical Care, 44, S69–S77.

Meredith, W. (1993). Measurement invariance, factor analysis and factorial invariance. Psychometrika, 58, 525–543.

Borsboom, D. (2006). The attack of the psychometricians. Psychometrika, 71, 425–440.

Vandenberg, R. J., & Lance, C. E. (2000). A review and synthesis of the measurement invariance literature: Suggestions, practices, and recommendations for organizational research. Organizational Research Methods, 3, 4–70.

Gregorich, S. E. (2006). Do self-report instruments allow meaningful comparisons across diverse population groups? Testing measurement invariance using the confirmatory factor analysis framework. Medical Care, 44, S78–S94.

Baas, K. D., Cramer, A. O., Koeter, M. W., et al. (2011). Measurement invariance with respect to ethnicity of the Patient Health Questionnaire-9 (PHQ-9). Journal of Affective Disorders, 129, 229–235.

Sousa, R. M., Dewey, M. E., Acosta, D., et al. (2010). Measuring disability across cultures: The psychometric properties of the WHODAS II in older people from seven low- and middle-income countries. The 10/66 Dementia Research Group population-based survey. International Journal of Methods in Psychiatric Research, 19, 1–17.

Rivera-Medina, C. L., Caraballo, J. N., Rodriguez-Cordero, E. R., et al. (2010). Factor structure of the CES-D and measurement invariance across gender for low-income Puerto Ricans in a probability sample. Journal of Consulting and Clinical Psychology, 78, 398–408.

Heckman, B. D., Berlin, K. S., Watakakosol, R., et al. (2011). Psychosocial headache measures in Caucasian and African American headache patients: psychometric attributes and measurement invariance. Cephalalgia, 31, 222–234.

Coster, W. J., Haley, S. M., Ludlow, L. H., et al. (2004). Development of an applied cognition scale to measure rehabilitation outcomes. Archives of Physical Medicine and Rehabilitation, 85, 2030–2035.

Haley, S. M., Coster, W. J., Andres, P. L., et al. (2004). Activity outcome measurement for postacute care. Medical Care, 42, I49–I61.

Zhang, B., Fokkema, M., Cuijpers, P., et al. (2011). Measurement invariance of the Center for Epidemiological Studies Depression Scale (CES-D) among Chinese and Dutch elderly. BMC Medical Research Methodology, 11, 74.

Taylor, M. L. (1965). A measurement of functional communication in aphasia. Archives of Physical Medicine and Rehabilitation, 46, 101–107.

Blomert, L., Kean, M.-L., Koster, C., et al. (1994). Amsterdam-Nijmegen everyday language test: Construction, reliability and validity. Aphasiology, 8, 381–407.

Lincoln, N. B. (1982). The speech questionnaire: An assessment of functional language ability. International Rehabilitation Medicine, 4, 114–117.

Holland, A. L., Frattali, C., & Fromm, D. (1999). Communication activities of daily living (2nd ed.). Austin, TX: Pro-Ed.

Bayles, K. A., & Tomoeda, C. K. (1994). Functional linguistic communication inventory. Phoenix: Canyonlands.

Frattali, C. M., Thompson, C. K., Holland, A. L., et al. (1995). The American Speech-Language-Hearing Association functional assessment of communication skills for adults (ASHA FACS). Rockville, MD: American Speech-Language-Hearing Association.

Holland, A. L. (1980). Communicative activities in daily living. Baltimore: University Park Press.

Wirz, S., Skinner, C., & Dean, E. (1990). Revised Edinburgh Functional Communication Profile. Tucson, AZ: Communication Skill Builders.

Frattali, C. M. (1992). Functional assessment of communication: Merging public policy with clinical views. Aphasiology, 6, 63–83.

Doyle, P. J., McNeil, M. R., Le, K., et al. (2008). Measuring communicative functioning in community dwelling stroke survivors: Conceptual foundation and item development. Aphasiology, 22, 718–728.

Bayles, K. A., & Tomoeda, C. K. (1993). Arizona Battery for Communication Disorders of Dementia. Tucson, AZ: Canyonlands Publishing, Inc.

Sheikh, J. I., & Yesavage, J. A. (1986). Geriatric Depression Scale (GDS) Recent Evidence and Development of a Shorter Version. In T. L. Brink (Ed.), Clinical Gerontology: A Guide to Assessment and Intervention (pp. 165–173). New York: Hawthorn Press.

Porch, B. (2001). Porch index of communicative ability. Albuquerque, NM: PICA Programs.

Muthén, L. K., & Muthén, B. O. (2007). Mplus User’s Guide (5th ed.). Los Angeles, CA: Muthén & Muthén.

South, S. C., Krueger, R. F., & Iacono, W. G. (2009). Factorial Invariance of the Dyadic Adjustment Scale across Gender. Psychological Assessment, 21, 622–628.

Bland, J. M., & Altman, D. G. (1986). Statistical methods for assessing agreement between two methods of clinical measurement. Lancet, 1, 307–310.

McHorney, C. A., & Fleishman, J. A. (2006). Assessing and understanding measurement equivalence in health outcome measures. Issues for further quantitative and qualitative inquiry. Medical Care, 44, S205–S210.

Nunnally, J. C., & Bernstein, I. H. (1994). Psychometric theory (3rd ed.). New York: McGraw-Hill.

Doyle, P. J., Hula, W. D., McNeil, M. R., et al. (2005). An application of Rasch analysis to the measurement of communicative functioning. Journal of Speech, Language, and Hearing Research, 48, 1412–1428.

Donovan, N. J., Rosenbek, J. C., Ketterson, T. U., et al. (2006). Adding meaning to measurement: Initial Rasch analysis of the ASHA FACS Social Communication Subtest. Aphasiology, 20, 362–373.

Rodriguez, A., Donovan, N. J., Velozo, C. A., et al. (2007). Measurement properties of the functional outcomes questionnaire for aphasia. Paper presented at the Clinical Aphasiology Conference, Scottsdale, AZ.

Schuell, H., Jenkins, J. J., & Carroll, J. B. (1962). A factor analysis of the Minnesota test for the differential diagnosis of aphasia. Journal of Speech and Hearing Research, 5, 350–369.

Clark, C., Crockett, D. J., & Klonoff, H. (1979). Factor analysis of the Porch index of communication ability. Brain and Language, 7, 1–7.

Acknowledgments

The authors gratefully acknowledge the assistance of Beth Friedman, Jessica Rapier, Mary Sullivan, Brooke Swoyer, Neil Szuminsky, and Sandra Wright.

Cite this article

Doyle, P.J., Hula, W.D., Austermann Hula, S.N. et al. Self- and surrogate-reported communication functioning in aphasia. Qual Life Res 22, 957–967 (2013). https://doi.org/10.1007/s11136-012-0224-5

DOI: https://doi.org/10.1007/s11136-012-0224-5