Abstract

For Markov chains with a finite, partially ordered state space, we show strong stationary duality under the condition of Möbius monotonicity of the chain. We give examples of dual chains in this context which have no downward transitions. We illustrate the general theory by an analysis of nonsymmetric random walks on the cube, with an interpretation for unreliable networks of queues.


1 Introduction

The motivation of this paper stems from a study on the speed of convergence to stationarity for unreliable queueing networks, as in Lorek and Szekli [13]. Bounding the speed of convergence for networks is a rather complex problem, related to the transient analysis of Markov processes, spectral analysis, coupling or duality constructions, drift properties, and monotonicity properties, among others (for more details see Dieker and Warren [9], Aldous [1], Lorek and Szekli [13]). In order to give bounds on the speed of convergence for some unreliable queueing networks, it is necessary to study the availability vector of unreliable network processes. This vector is a Markov chain whose state space, the power set of the set of nodes, records which stations are down and which are up (a typical state is the set of broken nodes). Such a chain is at the same time a random walk on the vertices of a finite-dimensional cube. In this paper we are concerned with walks on the vertices of the finite-dimensional cube which are up–down in the natural (inclusion) ordering of the power set. This study is a special case of a general duality construction for monotone Markov chains.

To be more precise, we study strong stationary duality (SSD), a probabilistic approach to the problem of the speed of convergence to stationarity for Markov chains. SSD was introduced by Diaconis and Fill [7]. This approach involves strong stationary times (SST), introduced earlier by Aldous and Diaconis [2, 3], who gave a number of examples showing useful bounds on the total variation distance to stationarity in cases where other techniques, based on eigenvalues or coupling, were not easily applicable. A strong stationary time for a Markov chain \((X_n)\) is a stopping time T for this chain such that \(X_T\) has the stationary distribution π and is independent of T. Diaconis and Fill [7] constructed an absorbing dual Markov chain whose absorption time is a strong stationary time T for \((X_n)\). In general, there is no recipe for constructing particular dual chains. However, a few cases are known and tractable. One of the most basic and interesting ones is given by Diaconis and Fill [7] (Theorem 4.6) for a linearly ordered state space. In this case, under the assumption of stochastic monotonicity for the time reversed chain, and under the condition that the initial distribution ν satisfies \(\nu\le_{\mathrm{mlr}}\pi\) (that is, \({\nu(k_1)\over\pi(k_1)}\le{\nu(k_2)\over\pi(k_2)}\) for any \(k_1>k_2\)), it is possible to construct a dual chain on the same state space. A special case is a stochastically monotone birth-and-death process, for which the strong stationary time has the same distribution as the time to absorption in the dual chain, which turns out to be again a birth-and-death process on the same state space. Times to absorption are usually more tractable objects for direct analysis than times to stationarity.
In particular, a well-known theorem, usually attributed to Keilson, states that for an irreducible continuous-time birth-and-death chain on \(\mathbb {E}=\{0,\ldots ,M\}\), the passage time from state 0 to state M is distributed as a sum of M independent exponential random variables. Fill [11] uses the theory of strong stationary duality to give a stochastic proof of an analogous result for discrete-time birth-and-death chains and geometric random variables, and relates the parameters of these distributions to the eigenvalues of the chain. The dual obtained there is a pure birth chain. A similar structure holds for more general chains. An (upward) skip-free Markov chain with the set of nonnegative integers as a state space is a chain whose upward jumps can only be of unit size; there is no restriction on downward jumps. Brown and Shao [5] determined, for an irreducible continuous-time skip-free chain and any M, the passage time distribution from state 0 to state M. When the eigenvalues of the generator are all real, their result states that the passage time is distributed as the sum of M independent exponential random variables with rates equal to the eigenvalues. Fill [12] gives another proof of this theorem. In the case of birth-and-death chains, this proof leads to an explicit representation of the passage time as a sum of independent exponential random variables. Diaconis and Miclo [8] recently obtained such a representation using an involved duality construction; for some recent references related to duality and stationarity, see their paper.

Our main result is an SSD construction which generalizes the above mentioned construction of Diaconis and Fill [7]. We consider a partially ordered state space instead of a linearly ordered one and use Möbius monotonicity instead of the usual stochastic monotonicity. This construction opens new ways to study particular Markov chains by a dual approach and is of independent interest. A special feature is that the dual state space coincides with the state space of the original chain, as in SSD for birth-and-death processes. Moreover, we show that the dual chain can have an upward drift, in the sense that it has no downward transitions. We formulate the main result in Sect. 3, after explaining the needed notation and definitions in detail in Sect. 2. We elaborate on the topic of Möbius monotonicity because it has received very little attention in the literature. The only papers we are aware of are the following two: Massey [14] recalls Möbius monotonicity as considered earlier by Adrianus Kester in his PhD thesis, and proves that Möbius monotonicity implies a weak stochastic monotonicity; the second paper is by Falin [10], where a result similar to Massey's can be found. We introduce two versions of Möbius monotonicity, and we define a new notion of Möbius monotone functions, which appear naturally in our main result on SSD. We characterize Möbius monotonicity by an invariance property on the set of Möbius monotone functions. Using Möbius monotonicity involves the general problem of inverting a sum ranging over a partially ordered set, which appears in many combinatorial contexts; see, for example, Rota [15]. The inversion can be carried out by defining an analog of the difference operator relative to a given partial ordering. Such an operator is the Möbius function, and the analog of the fundamental theorem of calculus obtained in this context is the Möbius inversion formula on a partially ordered set, which we recall in Sect. 2.

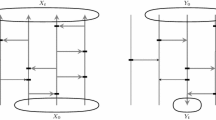

In Sect. 3 we present our main result on SSD with a proof and give some corollaries which show other possible duals, including an alternative dual for linearly ordered state spaces (Corollary 3.2). In Sect. 4 we show an SSD result for nonsymmetric nearest neighbor walks on the finite-dimensional cube. It gives additional insight into the structure of the eigenvalues of this chain. Interestingly, the dual (absorbing) chain here only jumps upwards to neighboring states or stays at the same state. This structure of the dual chain allows us to read all eigenvalues of the transition matrix P and of its dual \(\mathbf P^*\) from the diagonal of \(\mathbf P^*\), since \(\mathbf P^*\) is upper-triangular. The symmetric walk was considered by Diaconis and Fill [7], who used the symmetry to reduce the problem of the speed of convergence to a birth-and-death chain setting. The speed of convergence to stationarity in the nonsymmetric case was studied by Brown [4], where the eigenvalues were identified by a different method. Finally, it is worth mentioning that Möbius monotonicity of nonsymmetric nearest neighbor walks is a stronger property than the usual stochastic monotonicity for this chain.

2 SSD, Möbius monotonicity

2.1 Time to stationarity and strong stationary duality

Let P be an irreducible aperiodic transition matrix on a finite, partially ordered state space \((\mathbb {E}, \preceq)\). We enumerate the states as \(\mathbf e_1,\ldots,\mathbf e_M\) in such a way that \(\mathbf e_i\preceq\mathbf e_j\) implies \(i\le j\). We regard each distribution ν on \(\mathbb {E}\) as a row vector, and ν P denotes the usual vector times matrix multiplication.

Consider a Markov chain \(\mathbf X=(X_n)_{n\ge0}\) with transition matrix P, initial distribution ν, and (unique) stationary distribution π. One way of measuring the distance to stationarity is the separation distance (see Aldous and Diaconis [3]), given by \(s(\nu\mathbf P^{n},\pi)=\max_{\mathbf e\in\mathbb E}\bigl(1-\nu\mathbf P^{n}(\mathbf e)/\pi(\mathbf e)\bigr)\). Separation distance provides an upper bound on the total variation distance: \(s(\nu\mathbf P^{n},\pi)\ge d(\nu\mathbf P^{n},\pi):=\max_{B\subset\mathbb E}|\nu\mathbf P^{n}(B)-\pi(B)|\).
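
As a small numerical illustration of the inequality \(s\ge d\), the following Python sketch (assuming NumPy; the 3-state chain is a hypothetical example, not taken from this paper) computes both distances along the first few steps of a chain started in one state. It uses the standard identity that the maximum over B of \(|\mu(B)-\pi(B)|\) equals half the \(\ell_1\) distance.

```python
import numpy as np

def separation(mu, pi):
    """Separation distance s(mu, pi) = max_e (1 - mu(e) / pi(e))."""
    return float(np.max(1.0 - mu / pi))

def total_variation(mu, pi):
    """Total variation d(mu, pi) = max_B |mu(B) - pi(B)| = 0.5 * sum_e |mu(e) - pi(e)|."""
    return 0.5 * float(np.abs(mu - pi).sum())

def stationary(P):
    """Left eigenvector of P for eigenvalue 1, normalised to a probability vector."""
    w, v = np.linalg.eig(P.T)
    x = np.real(v[:, np.argmin(np.abs(w - 1.0))])
    return x / x.sum()

# Hypothetical lazy birth-and-death chain on three states.
P = np.array([[0.5, 0.5, 0.0],
              [0.25, 0.5, 0.25],
              [0.0, 0.5, 0.5]])
pi = stationary(P)
nu = np.array([1.0, 0.0, 0.0])          # start in the first state

for n in range(1, 6):
    mu_n = nu @ np.linalg.matrix_power(P, n)
    print(n, round(separation(mu_n, pi), 4), round(total_variation(mu_n, pi), 4))
```

Separation is the more demanding of the two distances, which is why a bound on \(P(T>n)\) for an SST T controls total variation as well.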

A random variable T is a Strong Stationary Time (SST) if it is a randomized stopping time for \(\mathbf X=(X_n)_{n\ge0}\) such that T and \(X_T\) are independent and \(X_T\) has distribution π. SSTs were introduced by Aldous and Diaconis in [2, 3]. In [3], they prove that \(s(\nu\mathbf P^{n},\pi)\le P(T>n)\) (T implicitly depends on ν). Diaconis [6] gives some examples of bounds on the rates of convergence to stationarity via an SST. However, the method for finding an SST is specific to each example.

Diaconis and Fill [7] introduced the so-called Strong Stationary Dual (SSD) chains. Such chains have a special feature: the SST for the original process has the same distribution as the time to absorption in the SSD chain.

To be more specific, let \(\mathbf X^*\) be a Markov chain with transition matrix \(\mathbf P^*\) and initial distribution \(\nu^*\) on a state space \(\mathbb {E}^{*}\). Assume that \(\mathbf {e}^{*}_{a}\) is an absorbing state for \(\mathbf X^*\). Let \(\varLambda \equiv \varLambda (\mathbf {e}^{*},\mathbf {e})\), \(\mathbf {e}^{*}\in \mathbb {E}^{*}\), \(\mathbf {e}\in \mathbb {E}\), be a stochastic kernel, called a link, such that \(\varLambda (\mathbf {e}_{a}^{*},\cdot )=\pi\). Diaconis and Fill [7] prove that if \((\nu^*,\mathbf P^*)\) is an SSD of \((\nu,\mathbf P)\) with respect to Λ in the sense that

$$\nu=\nu^{*}\varLambda,\qquad \varLambda\mathbf P=\mathbf P^{*}\varLambda, \tag{2.1}$$

then there exists a bivariate Markov chain \((\mathbf X,\mathbf X^*)\) with the following marginal properties:

- \(\mathbf X\) is Markov with the initial distribution ν and the transition matrix P,
- \(\mathbf X^*\) is Markov with the initial distribution \(\nu^*\) and the transition matrix \(\mathbf P^*\),
- the absorption time \(T^*\) of \(\mathbf X^*\) is an SST for \(\mathbf X\).

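Recall that \(\overleftarrow {\mathbf{X}}=(\overleftarrow{X}_{n})_{n\ge0}\) is the time reversed process if its transition matrix is given by

$$\overleftarrow{\mathbf P}=\bigl(\mathrm{diag}(\pi)\bigr)^{-1}\mathbf P^{T}\,\mathrm{diag}(\pi),\quad\text{i.e.,}\quad \overleftarrow{\mathbf P}(\mathbf e_i,\mathbf e_j)=\frac{\pi(\mathbf e_j)}{\pi(\mathbf e_i)}\mathbf P(\mathbf e_j,\mathbf e_i),$$

where diag(π) denotes the diagonal matrix with the stationary vector π on the diagonal.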
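
The reversal formula is a one-liner in matrix form. The sketch below (Python with NumPy; the function name and both example chains are hypothetical) computes \(\overleftarrow{\mathbf P}\) for a non-reversible lazy cycle, whose reversal runs around the cycle backwards, and for a reversible birth-and-death chain, whose reversal is the chain itself.

```python
import numpy as np

def time_reversal(P, pi):
    """Reversed kernel diag(pi)^{-1} P^T diag(pi), entrywise pi(e_j) P(e_j, e_i) / pi(e_i)."""
    D = np.diag(pi)
    return np.linalg.inv(D) @ P.T @ D

# Hypothetical non-reversible chain: a lazy cycle on three states (uniform pi).
P_cycle = np.array([[0.2, 0.8, 0.0],
                    [0.0, 0.2, 0.8],
                    [0.8, 0.0, 0.2]])
pi_cycle = np.full(3, 1.0 / 3.0)
P_cycle_rev = time_reversal(P_cycle, pi_cycle)   # equals P_cycle.T here
print(P_cycle_rev)
```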

The following theorem (Diaconis and Fill [7], Theorem 4.6) gives an SSD chain for linearly ordered state spaces under a stochastic monotonicity assumption. In the formulation below, we set g(M+1)=0 and \(\overleftarrow{P}(M+1,\{1,\ldots,i\})=0\) for all \(i\in \mathbb {E}\).

Theorem 1

Let X∼(ν,P) be an ergodic Markov chain on a finite state space \(\mathbb {E}=\{1,\ldots,M\}\), linearly ordered by ≤, having initial distribution ν, and stationary distribution π. Assume that

- (i) \(g(i)={\nu(i)\over\pi(i)}\) is non-increasing,
- (ii) \(\overleftarrow{\mathbf {X}}\) is stochastically monotone.

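Then there exists a Strong Stationary Dual chain \(\mathbf X^*\sim(\nu^*,\mathbf P^*)\) on \(\mathbb {E}^{*}=\mathbb {E}\) with the following link kernel

$$\varLambda(j,i)=\mathbb I(i\le j)\frac{\pi(i)}{H(j)},$$

where \(H(j)=\sum_{k:k\le j}\pi(k)\). Moreover, the SSD chain is uniquely determined by

$$\nu^{*}(i)=H(i)\bigl(g(i)-g(i+1)\bigr),\qquad \mathbf P^{*}(i,j)=\frac{H(j)}{H(i)}\Bigl(\overleftarrow P(j,\{1,\ldots,i\})-\overleftarrow P(j+1,\{1,\ldots,i\})\Bigr).$$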

Theorem 2 is our main result on SSD chains. It is an extension of Theorem 1 to Markov chains on partially ordered state spaces by replacing monotonicity in condition (i) and stochastic monotonicity in condition (ii) with Möbius monotonicity. We state this theorem in Sect. 3, after introducing required definitions and background material. Theorem 2 reveals the role of Möbius functions in finding SSD chains. Consequently, it is possible to reformulate Theorem 1 in terms of the corresponding Möbius function (in a similar way as in Corollary 3.2).

2.2 Möbius monotonicities

Consider a finite, partially ordered set \(\mathbb {E}=\{\mathbf {e}_{1},\ldots,\mathbf {e}_{M}\}\), and denote a partial order on \(\mathbb {E}\) by ⪯. We select the above enumeration of \(\mathbb {E}\) to be consistent with the partial order, i.e., \(\mathbf e_i\preceq\mathbf e_j\) implies \(i\le j\).

Let X=(X n ) n≥0∼(ν,P) be a time homogeneous Markov chain with an initial distribution ν and transition function P on the state space \(\mathbb {E}\). We identify the transition function with the corresponding matrix written for the fixed enumeration of the state space. Suppose that X is ergodic with the stationary distribution π.

We shall use ∧ for the meet (greatest lower bound) and ∨ for the join (least upper bound) in \(\mathbb {E}\). If \(\mathbb {E}\) is a lattice, it has unique minimal and maximal elements, denoted by \(\mathbf {e}_{1}:=\hat{\mathbf{0}}\) and \(\mathbf {e}_{M}:=\hat{\mathbf{1}}\), respectively.

Recall that the zeta function ζ of the partially ordered set \(\mathbb {E}\) is defined by \(\zeta(\mathbf e_i,\mathbf e_j)=1\) if \(\mathbf e_i\preceq\mathbf e_j\) and \(\zeta(\mathbf e_i,\mathbf e_j)=0\) otherwise. If the states are enumerated in such a way that \(\mathbf e_i\preceq\mathbf e_j\) implies \(i\le j\) (as assumed in this paper), then ζ can be represented by an upper-triangular, 0–1 valued matrix C with ones on the diagonal, which is therefore invertible. It is well known that ζ is an element of the incidence algebra (see Rota [15], p. 344), that it is invertible in this algebra, and that its inverse, denoted by μ, is called the Möbius function. Using the enumeration which defines C, the matrix describing μ is the usual matrix inverse \(\mathbf C^{-1}\).
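
To make the matrix picture concrete, the following Python sketch (NumPy assumed; the helper names are ours) builds C for the Boolean lattice \(\{0,1\}^3\) with the coordinatewise order, using an enumeration sorted by the number of ones (one possible enumeration with \(\mathbf e_i\preceq\mathbf e_j\Rightarrow i\le j\)), and obtains μ as \(\mathbf C^{-1}\). For this lattice the result can be compared with the known formula \(\mu(\mathbf e,\mathbf e')=(-1)^{|\mathbf e'|-|\mathbf e|}\) for \(\mathbf e\preceq\mathbf e'\).

```python
import itertools
import numpy as np

def zeta_matrix(states, leq):
    """C[i, j] = 1 if states[i] precedes states[j] in the partial order, else 0."""
    return np.array([[1.0 if leq(a, b) else 0.0 for b in states] for a in states])

def coordinatewise_leq(a, b):
    """Product (inclusion) order on 0-1 vectors."""
    return all(x <= y for x, y in zip(a, b))

d = 3
# Sorting by the number of ones gives an enumeration with e_i <= e_j  =>  i <= j.
states = sorted(itertools.product((0, 1), repeat=d), key=lambda e: (sum(e), e))
C = zeta_matrix(states, coordinatewise_leq)
mu = np.linalg.inv(C)       # the Moebius function, as the matrix C^{-1}
print(np.rint(mu).astype(int))
```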

Throughout the paper, μ will denote the Möbius function of the corresponding ordering.

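For the state space \(\mathbb {E}=\{\mathbf {e}_{1},\ldots,\mathbf {e}_{M}\}\) with the partial ordering ⪯, we define the following summation operators acting on all functions \(f:\mathbb {E}\to \mathbb {R}\):

$$F(\mathbf e_i):=S_{\downarrow}f(\mathbf e_i)=\sum_{\mathbf e:\mathbf e\preceq\mathbf e_i}f(\mathbf e) \tag{2.2}$$

and

$$\bar F(\mathbf e_i):=S_{\uparrow}f(\mathbf e_i)=\sum_{\mathbf e:\mathbf e_i\preceq\mathbf e}f(\mathbf e). \tag{2.3}$$

In the matrix notation, we use the corresponding bold letters for functions, and we have \(\mathbf F=\mathbf f\mathbf C\), \(\bar{\mathbf{F}}=\mathbf{f}\mathbf {C}^{T}\), where \(\mathbf f=(f(\mathbf e_1),\ldots,f(\mathbf e_M))\), \(\mathbf F=(F(\mathbf e_1),\ldots,F(\mathbf e_M))\), and \(\bar{\mathbf{F}}=(\bar{F}(\mathbf {e}_{1}),\ldots,\bar{F}(\mathbf {e}_{M}))\).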

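The following difference operators \(D_{\downarrow}\) and \(D_{\uparrow}\) are the inverse operators to the summation operators \(S_{\downarrow}\) and \(S_{\uparrow}\), respectively,

$$g(\mathbf e_i):=D_{\downarrow}f(\mathbf e_i)=\sum_{\mathbf e:\mathbf e\preceq\mathbf e_i}\mu(\mathbf e,\mathbf e_i)f(\mathbf e)$$

and

$$h(\mathbf e_i):=D_{\uparrow}f(\mathbf e_i)=\sum_{\mathbf e:\mathbf e_i\preceq\mathbf e}\mu(\mathbf e_i,\mathbf e)f(\mathbf e).$$

In the matrix notation, we have \(\mathbf g=\mathbf f\mathbf C^{-1}\) and \(\mathbf h=\mathbf f(\mathbf C^{T})^{-1}\).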

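If, for example, the relations (2.2) and (2.3) hold, then the Möbius inversion formulas read

$$f(\mathbf e_i)=\sum_{\mathbf e:\mathbf e\preceq\mathbf e_i}\mu(\mathbf e,\mathbf e_i)F(\mathbf e)\qquad\text{and}\qquad f(\mathbf e_i)=\sum_{\mathbf e:\mathbf e_i\preceq\mathbf e}\mu(\mathbf e_i,\mathbf e)\bar F(\mathbf e), \tag{2.4}$$

respectively.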
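
A small example may help with the operators and with (2.4) in matrix form. The following sketch (Python with NumPy; the four-element “diamond” poset \(\hat 0\prec a,b\prec\hat 1\) and the function values are hypothetical) computes \(S_\downarrow f\) and \(S_\uparrow f\) as \(\mathbf f\mathbf C\) and \(\mathbf f\mathbf C^T\), and recovers f by multiplying with \(\mathbf C^{-1}\) and \((\mathbf C^T)^{-1}\).

```python
import numpy as np

# Hypothetical four-element "diamond" poset 0 < a, b < 1 with a, b incomparable,
# enumerated consistently with the order as 0, a, b, 1.
states = ["0", "a", "b", "1"]
down_set = {"0": {"0"}, "a": {"0", "a"}, "b": {"0", "b"}, "1": {"0", "a", "b", "1"}}
C = np.array([[1.0 if s in down_set[t] else 0.0 for t in states] for s in states])  # zeta matrix

f = np.array([2.0, -1.0, 3.0, 0.5])        # an arbitrary function, as a row vector
F = f @ C                                  # S_down f(e) = sum of f over {e' : e' <= e}
F_bar = f @ C.T                            # S_up f(e)   = sum of f over {e' : e <= e'}
f_from_F = F @ np.linalg.inv(C)            # D_down undoes S_down (first formula in (2.4))
f_from_F_bar = F_bar @ np.linalg.inv(C.T)  # D_up undoes S_up (second formula in (2.4))
mu = np.linalg.inv(C)                      # Moebius function of the diamond
print(F, F_bar)
```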

Definition 2.1

For a Markov chain X with the transition function P, we say that P (or alternatively that X) is

- ↓-Möbius monotone if \(\mathbf {C}^{-1}\mathbf {P}\mathbf {C}\ge0\),
- ↑-Möbius monotone if \(\bigl(\mathbf {C}^T\bigr)^{-1}\mathbf {P}\mathbf {C}^T \ge0\),

where P is the matrix of the transition probabilities written using the enumeration which defines C, and ≥0 means that each entry of a matrix is nonnegative.
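
Definition 2.1 translates directly into a matrix test. The sketch below (Python with NumPy; the function names and the two 3-state example chains are ours, chosen for illustration) checks both conditions for the linear order 1<2<3, where both versions reduce to ordinary stochastic monotonicity; a small tolerance absorbs round-off from the matrix inverse.

```python
import numpy as np

def is_down_moebius_monotone(P, C, tol=1e-12):
    """Definition 2.1: C^{-1} P C >= 0 entrywise (up to round-off tol)."""
    return bool(np.all(np.linalg.inv(C) @ P @ C >= -tol))

def is_up_moebius_monotone(P, C, tol=1e-12):
    """Definition 2.1: (C^T)^{-1} P C^T >= 0 entrywise (up to round-off tol)."""
    Ct = C.T
    return bool(np.all(np.linalg.inv(Ct) @ P @ Ct >= -tol))

C = np.triu(np.ones((3, 3)))   # zeta matrix of the linear order 1 < 2 < 3
P_mono = np.array([[0.5, 0.5, 0.0],
                   [0.25, 0.5, 0.25],
                   [0.0, 0.5, 0.5]])   # stochastically monotone
P_flip = np.array([[0.0, 1.0, 0.0],
                   [0.5, 0.0, 0.5],
                   [0.0, 1.0, 0.0]])   # not stochastically monotone
print(is_down_moebius_monotone(P_mono, C), is_up_moebius_monotone(P_mono, C))
print(is_down_moebius_monotone(P_flip, C), is_up_moebius_monotone(P_flip, C))
```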

Definition 2.2

A function \(f: \mathbb {E}\to \mathbb {R}\) is

- ↓-Möbius monotone if \(\mathbf f(\mathbf C^{T})^{-1}\ge0\),
- ↑-Möbius monotone if \(\mathbf f\mathbf C^{-1}\ge0\).

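For example, in terms of the Möbius function μ and the transition probabilities, ↓-Möbius monotonicity of P means that for all \(\mathbf e_i,\mathbf e_j\in\mathbb E\)

$$\sum_{\mathbf e:\mathbf e_i\preceq\mathbf e}\mu(\mathbf e_i,\mathbf e)\,P(\mathbf e,\{\mathbf e_j\}^{\downarrow})\ge0,$$

where P(⋅,⋅) denotes the corresponding transition kernel, i.e., \(P(\mathbf {e}_{i},\{\mathbf {e}_{j}\}^{\downarrow })=\sum_{\mathbf {e}:\mathbf {e}\preceq \mathbf {e}_{j}} {\mathbf {P}}(\mathbf {e}_{i},\mathbf {e})\), and \(\{\mathbf e_j\}^{\downarrow}=\{\mathbf e:\mathbf e\preceq\mathbf e_j\}\). In order to check such a condition, an explicit formula for μ is needed. Note that Definition 2.2 can be rewritten as follows: f is ↓-Möbius monotone iff \(\mathbf f=\mathbf m\mathbf C^{T}\) for some nonnegative vector \(\mathbf m\ge0\), and f is ↑-Möbius monotone iff \(\mathbf f=\mathbf m\mathbf C\) for some \(\mathbf m\ge0\). The last equality means that f is a nonnegative linear combination of the rows of the matrix C, each of which is the indicator of an upper set \(\{\mathbf e:\mathbf e_i\preceq\mathbf e\}\). Hence ↑-Möbius monotonicity implies that f is non-decreasing in the usual sense (f non-decreasing means: \(\mathbf e_i\preceq\mathbf e_j\) implies \(f(\mathbf e_i)\le f(\mathbf e_j)\)); similarly, ↓-Möbius monotonicity implies that f is non-increasing.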

Proposition 2.1

P is ↑-Möbius monotone iff, for every ↑-Möbius monotone f, the function \(\mathbf P\mathbf f^{T}\) is ↑-Möbius monotone.

Proof

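Suppose that P is ↑-Möbius monotone, that is, \((\mathbf C^{T})^{-1}\mathbf P\mathbf C^{T}\ge0\). Take an arbitrary ↑-Möbius monotone f, i.e., \(\mathbf f=\mathbf m\mathbf C\) for some \(\mathbf m\ge0\). Then \((\mathbf C^{T})^{-1}\mathbf P\mathbf C^{T}\mathbf m^{T}\ge0\), which (by transposition) is equivalent to \(\mathbf f\mathbf P^{T}\mathbf C^{-1}\ge0\); by definition, this means that \(\mathbf P\mathbf f^{T}\) is ↑-Möbius monotone. Conversely, suppose that \(\mathbf P\mathbf f^{T}\) is ↑-Möbius monotone for every ↑-Möbius monotone f. Then for all \(\mathbf f=\mathbf m\mathbf C\) with \(\mathbf m\ge0\) we have \(\mathbf f\mathbf P^{T}\mathbf C^{-1}\ge0\), that is, \((\mathbf C^{T})^{-1}\mathbf P\mathbf C^{T}\mathbf m^{T}\ge0\). Taking for m the unit vectors gives \((\mathbf C^{T})^{-1}\mathbf P\mathbf C^{T}\ge0\). □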

Many examples can be produced using the fact that the set of Möbius monotone matrices is a convex subset of the set of transition matrices. We shall give some basic examples in Sect. 4. These examples can be used to build up a large class of Möbius monotone matrices.

Proposition 2.2

- (i) If \(\mathbf P_1\) and \(\mathbf P_2\) are ↑-Möbius monotone (↓-Möbius monotone), then \(\mathbf P_1\mathbf P_2\) is ↑-Möbius monotone (↓-Möbius monotone).
- (ii) If P is ↑-Möbius monotone (↓-Möbius monotone), then \(\mathbf P^{k}\) is ↑-Möbius monotone (↓-Möbius monotone) for each k∈ℕ.
- (iii) If \(\mathbf P_1\) and \(\mathbf P_2\) are ↑-Möbius monotone (↓-Möbius monotone), then \(p\mathbf {P}_1+(1-p)\mathbf {P}_2\) is ↑-Möbius monotone (↓-Möbius monotone) for all p∈(0,1).

Proof

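(i) Since \((\mathbf C^{T})^{-1}\mathbf P_1\mathbf C^{T}\ge0\) and \((\mathbf C^{T})^{-1}\mathbf P_2\mathbf C^{T}\ge0\), one has

$$(\mathbf C^{T})^{-1}\mathbf P_1\mathbf P_2\mathbf C^{T}=\bigl((\mathbf C^{T})^{-1}\mathbf P_1\mathbf C^{T}\bigr)\bigl((\mathbf C^{T})^{-1}\mathbf P_2\mathbf C^{T}\bigr)\ge0;$$

the ↓-Möbius monotone case is analogous, with C in place of \(\mathbf C^{T}\). Statement (ii) follows from (i) by induction on k, and (iii) follows from the linearity of the maps \(\mathbf P\mapsto(\mathbf C^{T})^{-1}\mathbf P\mathbf C^{T}\) and \(\mathbf P\mapsto\mathbf C^{-1}\mathbf P\mathbf C\). □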
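
Proposition 2.2 can be spot-checked numerically. The sketch below (Python with NumPy; P1 and P2 are hypothetical stochastically monotone matrices for the linear order 1<2<3, which are both ↑- and ↓-Möbius monotone) forms a product, a power, and a mixture, and tests Definition 2.1 for each.

```python
import numpy as np

def down_mm(P, C, tol=1e-12):
    """Definition 2.1, down version: C^{-1} P C >= 0."""
    return bool(np.all(np.linalg.inv(C) @ P @ C >= -tol))

def up_mm(P, C, tol=1e-12):
    """Definition 2.1, up version: (C^T)^{-1} P C^T >= 0."""
    return bool(np.all(np.linalg.inv(C.T) @ P @ C.T >= -tol))

C = np.triu(np.ones((3, 3)))   # zeta matrix of the linear order 1 < 2 < 3
P1 = np.array([[0.5, 0.5, 0.0], [0.25, 0.5, 0.25], [0.0, 0.5, 0.5]])
P2 = np.array([[0.6, 0.3, 0.1], [0.3, 0.4, 0.3], [0.1, 0.3, 0.6]])

candidates = {
    "P1 P2": P1 @ P2,                           # Proposition 2.2 (i)
    "P1^5": np.linalg.matrix_power(P1, 5),      # Proposition 2.2 (ii)
    "0.3 P1 + 0.7 P2": 0.3 * P1 + 0.7 * P2,     # Proposition 2.2 (iii)
}
for name, Q in candidates.items():
    print(name, down_mm(Q, C), up_mm(Q, C))
```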

3 Main result: SSD for Möbius monotone chains

Now we are prepared to state our main result on SSD.

Theorem 2

Let X∼(ν,P) be an ergodic Markov chain on a finite state space \(\mathbb {E}=\{\mathbf {e}_{1},\ldots,\mathbf {e}_{M}\}\), partially ordered by ⪯, with a unique maximal state e M , and with stationary distribution π. Assume that

- (i) \(g(\mathbf {e})={\nu(\mathbf {e})\over\pi(\mathbf {e})}\) is ↓-Möbius monotone,
- (ii) \(\overleftarrow{\mathbf {X}}\) is ↓-Möbius monotone.

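Then there exists a Strong Stationary Dual chain \(\mathbf X^*\sim(\nu^*,\mathbf P^*)\) on \(\mathbb {E}^{*}=\mathbb {E}\) with the following link kernel

$$\varLambda(\mathbf e_j,\mathbf e_i)=\zeta(\mathbf e_i,\mathbf e_j)\frac{\pi(\mathbf e_i)}{H(\mathbf e_j)}=\mathbb I(\mathbf e_i\preceq\mathbf e_j)\frac{\pi(\mathbf e_i)}{H(\mathbf e_j)},$$

where \(H(\mathbf {e}_{j})=S_{\downarrow} \pi(\mathbf {e}_{j})=\sum_{\mathbf {e}:\mathbf {e}\preceq \mathbf {e}_{j}}\pi(\mathbf {e})\) (in matrix notation \(\mathbf H=\pi\mathbf C\)). Moreover, the SSD chain is uniquely determined by

$$\nu^{*}(\mathbf e_i)=H(\mathbf e_i)\sum_{\mathbf e:\mathbf e_i\preceq\mathbf e}\mu(\mathbf e_i,\mathbf e)\,g(\mathbf e),$$

$$\mathbf P^{*}(\mathbf e_i,\mathbf e_j)=\frac{H(\mathbf e_j)}{H(\mathbf e_i)}\sum_{\mathbf e:\mathbf e_j\preceq\mathbf e}\mu(\mathbf e_j,\mathbf e)\,\overleftarrow P(\mathbf e,\{\mathbf e_i\}^{\downarrow}).$$

The corresponding matrix formulas are given by

$$\varLambda=\mathrm{diag}(\mathbf H)^{-1}\mathbf C^{T}\mathrm{diag}(\pi),\qquad \nu^{*}=\mathbf g(\mathbf C^{T})^{-1}\mathrm{diag}(\mathbf H),\qquad \mathbf P^{*}=\mathrm{diag}(\mathbf H)^{-1}\bigl(\mathbf C^{-1}\overleftarrow{\mathbf P}\mathbf C\bigr)^{T}\mathrm{diag}(\mathbf H),$$

where \(\mathbf g=(g(\mathbf e_1),\ldots,g(\mathbf e_M))\) (row vector).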

Proof of Theorem 2

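We have to check the conditions (2.1). The first condition in (2.1), \(\nu=\nu^{*}\varLambda\), reads for arbitrary \(\mathbf {e}_{i}\in \mathbb {E}\) as

$$\nu(\mathbf e_i)=\sum_{\mathbf e\in\mathbb E}\nu^{*}(\mathbf e)\varLambda(\mathbf e,\mathbf e_i)=\sum_{\mathbf e:\mathbf e_i\preceq\mathbf e}\nu^{*}(\mathbf e)\frac{\pi(\mathbf e_i)}{H(\mathbf e)},$$

which is equivalent to

$$g(\mathbf e_i)=\frac{\nu(\mathbf e_i)}{\pi(\mathbf e_i)}=\sum_{\mathbf e:\mathbf e_i\preceq\mathbf e}\frac{\nu^{*}(\mathbf e)}{H(\mathbf e)}.$$

From the Möbius inversion formula (2.4), we get

$$\frac{\nu^{*}(\mathbf e_i)}{H(\mathbf e_i)}=\sum_{\mathbf e:\mathbf e_i\preceq\mathbf e}\mu(\mathbf e_i,\mathbf e)\,g(\mathbf e),$$

which gives the required formula. From the assumption that \(g={\nu\over \pi}\) is ↓-Möbius monotone, it follows that \(\nu^*\ge0\). Moreover, since \(\nu=\nu^{*}\varLambda\) and Λ is a transition kernel, \(\nu^*\) is a probability vector.

The second condition in (2.1), \(\varLambda\mathbf P=\mathbf P^{*}\varLambda\), means that for all \(\mathbf {e}_{i},\mathbf {e}_{j}\in \mathbb {E}\)

$$\sum_{\mathbf e\in\mathbb E}\varLambda(\mathbf e_i,\mathbf e)\mathbf P(\mathbf e,\mathbf e_j)=\sum_{\mathbf e\in\mathbb E}\mathbf P^{*}(\mathbf e_i,\mathbf e)\varLambda(\mathbf e,\mathbf e_j).$$

Taking the proposed Λ, we have to check that

$$\sum_{\mathbf e:\mathbf e\preceq\mathbf e_i}\frac{\pi(\mathbf e)}{H(\mathbf e_i)}\mathbf P(\mathbf e,\mathbf e_j)=\sum_{\mathbf e:\mathbf e_j\preceq\mathbf e}\mathbf P^{*}(\mathbf e_i,\mathbf e)\frac{\pi(\mathbf e_j)}{H(\mathbf e)},$$

that is,

$$\frac{1}{H(\mathbf e_i)}\sum_{\mathbf e:\mathbf e\preceq\mathbf e_i}\frac{\pi(\mathbf e)}{\pi(\mathbf e_j)}\mathbf P(\mathbf e,\mathbf e_j)=\sum_{\mathbf e:\mathbf e_j\preceq\mathbf e}\frac{\mathbf P^{*}(\mathbf e_i,\mathbf e)}{H(\mathbf e)}.$$

Using \({\pi(\mathbf {e})\over\pi(\mathbf {e}_{j})}\mathbf {P}(\mathbf {e},\mathbf {e}_{j})=\overleftarrow {\mathbf {P}}(\mathbf {e}_{j},\mathbf {e})\), we have

$$\frac{1}{H(\mathbf e_i)}\overleftarrow P(\mathbf e_j,\{\mathbf e_i\}^{\downarrow})=\sum_{\mathbf e:\mathbf e_j\preceq\mathbf e}\frac{\mathbf P^{*}(\mathbf e_i,\mathbf e)}{H(\mathbf e)}. \tag{3.1}$$

For each fixed \(\mathbf e_i\) we treat \({1\over H(\mathbf {e}_{i})} \overleftarrow{P}(\mathbf {e}_{j}, \{\mathbf {e}_{i}\}^{\downarrow })\) as a function of \(\mathbf e_j\) and again use the Möbius inversion formula (2.4) to get from (3.1)

$$\frac{\mathbf P^{*}(\mathbf e_i,\mathbf e_j)}{H(\mathbf e_j)}=\frac{1}{H(\mathbf e_i)}\sum_{\mathbf e:\mathbf e_j\preceq\mathbf e}\mu(\mathbf e_j,\mathbf e)\,\overleftarrow P(\mathbf e,\{\mathbf e_i\}^{\downarrow}).$$

In the matrix notation, since \(\overleftarrow P(\mathbf e,\{\mathbf e_i\}^{\downarrow})=(\overleftarrow{\mathbf P}\mathbf C)(\mathbf e,\mathbf e_i)\), we have

$$\mathbf P^{*}\,\mathrm{diag}(\mathbf H)^{-1}=\mathrm{diag}(\mathbf H)^{-1}\bigl(\mathbf C^{-1}\overleftarrow{\mathbf P}\mathbf C\bigr)^{T},$$

therefore,

$$\mathbf P^{*}=\mathrm{diag}(\mathbf H)^{-1}\bigl(\mathbf C^{-1}\overleftarrow{\mathbf P}\mathbf C\bigr)^{T}\mathrm{diag}(\mathbf H).$$

Since, by assumption (ii), \(\mathbf {C}^{-1}\overleftarrow {\mathbf {P}}\mathbf {C}\ge0\), we have \(\mathbf P^*\ge0\). Finally, \(\varLambda\mathbf P=\mathbf P^{*}\varLambda\) implies that \(\mathbf P^*\) is a transition matrix: with 1 the all-ones column vector, \(\mathbf P^{*}\mathbf 1=\mathbf P^{*}\varLambda\mathbf 1=\varLambda\mathbf P\mathbf 1=\mathbf 1\). □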
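
The matrix formulas of Theorem 2 translate almost line by line into code. The following sketch (Python with NumPy; `ssd_dual` is our name, and the chain is a hypothetical nearest neighbor walk on \(\{0,1\}^2\) of the type studied in Sect. 4, with \(\alpha_i\) taken as the 0→1 and \(\beta_i\) as the 1→0 probabilities) computes Λ, \(\nu^*\) and \(\mathbf P^*\) and checks the two duality conditions (2.1). Note that the identities \(\nu=\nu^*\varLambda\) and \(\varLambda\mathbf P=\mathbf P^*\varLambda\) hold for any P; the Möbius monotonicity assumptions are what make \(\nu^*\) and \(\mathbf P^*\) nonnegative.

```python
import numpy as np

def ssd_dual(P, pi, nu, C):
    """Matrix formulas of Theorem 2 (sketch); returns (Lam, nu_star, P_star)."""
    H = pi @ C                                      # H = S_down pi
    g = nu / pi                                     # g = nu / pi
    Lam = np.diag(1.0 / H) @ C.T @ np.diag(pi)      # Lam(e_j, e_i) = 1{e_i <= e_j} pi(e_i) / H(e_j)
    P_rev = np.diag(1.0 / pi) @ P.T @ np.diag(pi)   # time-reversed kernel
    nu_star = g @ np.linalg.inv(C.T) @ np.diag(H)
    P_star = np.diag(1.0 / H) @ (np.linalg.inv(C) @ P_rev @ C).T @ np.diag(H)
    return Lam, nu_star, P_star

# Hypothetical walk on {0,1}^2, states ordered (0,0), (1,0), (0,1), (1,1).
alpha, beta = np.array([0.2, 0.1]), np.array([0.3, 0.15])
states = [(0, 0), (1, 0), (0, 1), (1, 1)]
P = np.zeros((4, 4))
for a, e in enumerate(states):
    for i in range(2):
        f = list(e)
        f[i] = 1 - e[i]                              # flip coordinate i
        P[a, states.index(tuple(f))] = alpha[i] if e[i] == 0 else beta[i]
    P[a, a] = 1.0 - P[a].sum()                       # holding probability
C = np.array([[1.0 if all(x <= y for x, y in zip(s, t)) else 0.0 for t in states]
              for s in states])
pi = np.array([np.prod(np.where(np.array(e) == 1, alpha, beta) / (alpha + beta))
               for e in states])
nu = np.array([1.0, 0.0, 0.0, 0.0])                  # start in the minimal state (0,0)

Lam, nu_star, P_star = ssd_dual(P, pi, nu, C)
print(np.round(P_star, 4))
```

Starting in the minimal state, the computed \(\nu^*\) is again the point mass at \(\mathbf e_1\), and the last row of Λ is π, so \(\mathbf e_M\) plays the role of the absorbing state.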

Note that in the context of Theorem 2, if the original chain starts with probability 1 in the minimal state, i.e., \(\nu=\delta_{\mathbf {e}_{1}}\), then \(\nu^{*}=\delta_{\mathbf {e}_{1}}\).

For \(\mathbb {E}=\{1,\ldots,M\}\), with linear ordering ≤, the Möbius function is given by μ(k,k)=1,μ(k−1,k)=−1, and μ equals 0 otherwise. In this case, the link is given by \(\varLambda (j,i)=\mathbb{I}(i\le j) {\pi(i)\over H(j)}\), and we obtain from Theorem 2 (as a special case) Theorem 1, which is a reformulation of Theorem 4.6 from Diaconis and Fill [7].
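
For the linear order, the same construction can be written entrywise. The sketch below (NumPy assumed; `theorem1_dual` is our name and the 4-state birth-and-death chain is hypothetical) implements the formulas of Theorem 1 with the conventions g(M+1)=0 and \(\overleftarrow P(M+1,\cdot)=0\), and checks the duality conditions. For a birth-and-death chain started at state 1, the resulting dual is again tridiagonal, i.e., a birth-and-death chain, in line with the remark in the Introduction.

```python
import numpy as np

def theorem1_dual(P, pi, nu):
    """Entrywise formulas of Theorem 1 on {1,...,M} (0-based indices in code)."""
    M = len(pi)
    H = np.cumsum(pi)                              # H(j) = sum_{k <= j} pi(k)
    g = np.append(nu / pi, 0.0)                    # convention g(M+1) = 0
    P_rev = (P.T * pi[None, :]) / pi[:, None]      # P_rev(i, j) = pi(j) P(j, i) / pi(i)
    # cum[j, i] = P_rev(j, {1..i}); the extra zero row encodes P_rev(M+1, .) = 0
    cum = np.vstack([np.cumsum(P_rev, axis=1), np.zeros(M)])
    Lam = np.array([[pi[i] / H[j] if i <= j else 0.0 for i in range(M)] for j in range(M)])
    nu_star = H * (g[:-1] - g[1:])
    P_star = np.array([[H[j] / H[i] * (cum[j, i] - cum[j + 1, i]) for j in range(M)]
                       for i in range(M)])
    return Lam, nu_star, P_star

# Hypothetical stochastically monotone birth-and-death chain on {1,2,3,4}.
P = np.array([[0.6, 0.4, 0.0, 0.0],
              [0.3, 0.4, 0.3, 0.0],
              [0.0, 0.3, 0.4, 0.3],
              [0.0, 0.0, 0.4, 0.6]])
pi = np.array([3.0, 4.0, 4.0, 3.0]) / 14.0
nu = np.array([1.0, 0.0, 0.0, 0.0])
Lam, nu_star, P_star = theorem1_dual(P, pi, nu)
print(np.round(P_star, 4))
```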

In a similar way, we construct an analogous SSD chain for ↑-Möbius monotone P. We omit the corresponding matrix formulation and the proof. This analogous SSD chain will be used in Corollary 3.2 to give an alternative to the SSD chain of Theorem 1.

Corollary 3.1

Let X∼(ν,P) be an ergodic Markov chain on a finite state space \(\mathbb {E}=\{\mathbf {e}_{1},\ldots,\mathbf {e}_{M}\}\), partially ordered by ⪯, with a unique minimal state e 1, and with the stationary distribution π. Assume that

- (i) \(g(\mathbf {e})={\nu(\mathbf {e})\over\pi(\mathbf {e})}\) is ↑-Möbius monotone,
- (ii) \(\overleftarrow{\mathbf {X}}\) is ↑-Möbius monotone.

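Then there exists a Strong Stationary Dual chain \(\mathbf X^{\bullet}\sim(\nu^{\bullet},\mathbf P^{\bullet})\) on \(\mathbb {E}^{\bullet}=\mathbb {E}\) with the following link

$$\varLambda(\mathbf e_j,\mathbf e_i)=\mathbb I(\mathbf e_j\preceq\mathbf e_i)\frac{\pi(\mathbf e_i)}{\bar H(\mathbf e_j)},$$

where \(\bar{H}(\mathbf {e}_{j})=S_{\uparrow } \pi(\mathbf {e}_{j})=\sum_{\mathbf e:\mathbf e_j\preceq\mathbf e}\pi(\mathbf e)\). Moreover, the SSD is uniquely determined by

$$\nu^{\bullet}(\mathbf e_i)=\bar H(\mathbf e_i)\sum_{\mathbf e:\mathbf e\preceq\mathbf e_i}\mu(\mathbf e,\mathbf e_i)\,g(\mathbf e),\qquad \mathbf P^{\bullet}(\mathbf e_i,\mathbf e_j)=\frac{\bar H(\mathbf e_j)}{\bar H(\mathbf e_i)}\sum_{\mathbf e:\mathbf e\preceq\mathbf e_j}\mu(\mathbf e,\mathbf e_j)\,\overleftarrow P(\mathbf e,\{\mathbf e_i\}^{\uparrow}),$$

where \(\{\mathbf e_i\}^{\uparrow}=\{\mathbf e:\mathbf e_i\preceq\mathbf e\}\).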

Note that in the setting of Corollary 3.1, if the original chain starts with probability 1 in the maximal state, i.e., \(\nu=\delta_{\mathbf {e}_{M}}\), then \(\nu^{\bullet}=\delta_{\mathbf {e}_{M}}\).

From Corollary 3.1 we obtain an alternative dual for linearly ordered spaces, assuming that \({\nu(i)\over\pi(i)}\) is non-decreasing. Roughly speaking, Theorem 1 and Corollary 3.2 describe two complementary situations: when the initial distribution of the original chain is smaller (respectively, bigger) than the stationary distribution in the mlr ordering, one can construct and use the dual chain of Theorem 1 (respectively, of Corollary 3.2).

Corollary 3.2

Let X∼(ν,P) be an ergodic Markov chain on a finite state space \(\mathbb {E}=\{1,\ldots,M\}\), linearly ordered by ≤, with stationary distribution π. Assume that

- (i) \(g(i)={\nu(i)\over\pi(i)}\) is non-decreasing,
- (ii) \(\overleftarrow{\mathbf {X}}\) is stochastically monotone.

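Then there exists a Strong Stationary Dual chain \(\mathbf X^{\bullet}\sim(\nu^{\bullet},\mathbf P^{\bullet})\) on \(\mathbb {E}^{\bullet}=\mathbb {E}\) with the following link kernel

$$\varLambda(j,i)=\mathbb I(j\le i)\frac{\pi(i)}{\bar H(j)},$$

where \(\bar{H}(j)=\sum_{k:k\ge j}\pi(k)\). Moreover, the SSD is uniquely determined by

$$\nu^{\bullet}(i)=\bar H(i)\bigl(g(i)-g(i-1)\bigr),\qquad \mathbf P^{\bullet}(i,j)=\frac{\bar H(j)}{\bar H(i)}\Bigl(\overleftarrow P(j,\{i,\ldots,M\})-\overleftarrow P(j-1,\{i,\ldots,M\})\Bigr),$$

with the conventions g(0)=0 and \(\overleftarrow P(0,\{i,\ldots,M\})=0\) for all \(i\in\mathbb E\).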
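
A matching sketch for Corollary 3.2 (Python with NumPy; `corollary32_dual` is our name, and the birth-and-death chain is the same hypothetical example as above, now started at state M so that ν/π is non-decreasing) uses the conventions g(0)=0 and \(\overleftarrow P(0,\cdot)=0\). Here the absorbing state of the dual is the minimal state 1, and \(\varLambda(1,\cdot)=\pi\).

```python
import numpy as np

def corollary32_dual(P, pi, nu):
    """Entrywise formulas of Corollary 3.2 on {1,...,M} (0-based indices in code)."""
    M = len(pi)
    H_bar = np.cumsum(pi[::-1])[::-1]                  # H_bar(j) = sum_{k >= j} pi(k)
    g = np.concatenate(([0.0], nu / pi))               # g[k + 1] = g(k); convention g(0) = 0
    P_rev = (P.T * pi[None, :]) / pi[:, None]          # P_rev(i, j) = pi(j) P(j, i) / pi(i)
    tail = np.cumsum(P_rev[:, ::-1], axis=1)[:, ::-1]  # tail[j, i] = P_rev(j, {i, ..., M})
    tail = np.vstack([np.zeros(M), tail])              # row j + 1 <-> state j; row 0 = convention
    Lam = np.array([[pi[i] / H_bar[j] if j <= i else 0.0 for i in range(M)] for j in range(M)])
    nu_b = H_bar * (g[1:] - g[:-1])
    P_b = np.array([[H_bar[j] / H_bar[i] * (tail[j + 1, i] - tail[j, i]) for j in range(M)]
                    for i in range(M)])
    return Lam, nu_b, P_b

P = np.array([[0.6, 0.4, 0.0, 0.0],
              [0.3, 0.4, 0.3, 0.0],
              [0.0, 0.3, 0.4, 0.3],
              [0.0, 0.0, 0.4, 0.6]])
pi = np.array([3.0, 4.0, 4.0, 3.0]) / 14.0
nu = np.array([0.0, 0.0, 0.0, 1.0])      # start in the maximal state M
Lam, nu_b, P_b = corollary32_dual(P, pi, nu)
print(np.round(P_b, 4))
```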

4 Nearest neighbor Möbius monotone walks on a cube

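Consider the discrete time Markov chain \(\mathbf X=\{X_n,n\ge0\}\) with the state space \(\mathbb {E}=\{0,1\}^{d}\) and transition matrix P given by

$$\mathbf P(\mathbf e,\mathbf e')=\begin{cases}\alpha_i & \text{if } \mathbf e'=\mathbf e+\mathbf s_i \text{ and } e_i=0,\\ \beta_i & \text{if } \mathbf e'=\mathbf e-\mathbf s_i \text{ and } e_i=1,\\ 1-\sum_{i:e_i=0}\alpha_i-\sum_{i:e_i=1}\beta_i & \text{if } \mathbf e'=\mathbf e,\\ 0 & \text{otherwise},\end{cases}\tag{4.1}$$

where \(\mathbf {e}=(e_{1},\ldots,e_{d})\in \mathbb {E}\), \(e_{i}\in\{0,1\}\), and \(\mathbf s_i=(0,\ldots,0,1,0,\ldots,0)\) with 1 at the ith coordinate.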

We use the following partial order:

$$\mathbf e\preceq\mathbf e'\iff e_i\le e_i'\quad\text{for all } i=1,\ldots,d.$$

To make our presentation simpler, we assume that \(\nu=\delta_{(0,\ldots,0)}\) (this assumption can be relaxed).

For example, such a Markov chain is a model for a set of working unreliable servers where the repairs and breakdowns of servers are independent for different servers and only one server can be broken or repaired at a transition time.

Assume that all α i and β i are positive and that there exists at least one state e such that P(e,e)>0. Then the chain is ergodic.

Theorem 3

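For the Markov chain \(\mathbf X=\{X_n,n\ge0\}\) on \(\mathbb {E}=\{0,1\}^{d}\) with the transition matrix P given by (4.1) and \(\nu=\delta_{(0,\ldots,0)}\), assume that \(\sum_{i=1}^{d}(\alpha_{i}+\beta_{i})\leq1\). Then there exists a dual chain on \(\mathbb {E}^{*}=\mathbb {E}\) given by \(\nu^*=\nu\) and

$$\mathbf P^{*}(\mathbf e,\mathbf e')=\begin{cases}\alpha_i+\beta_i & \text{if } \mathbf e'=\mathbf e+\mathbf s_i \text{ and } e_i=0,\\ 1-\sum_{k:e_k=0}(\alpha_k+\beta_k) & \text{if } \mathbf e'=\mathbf e,\\ 0 & \text{otherwise.}\end{cases}$$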

Proof

A direct check shows that X is time-reversible with stationary distribution

$$\pi(\mathbf e)=\prod_{i=1}^{d}\frac{\alpha_i^{e_i}\beta_i^{1-e_i}}{\alpha_i+\beta_i}.$$

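Let \(|\mathbf {e}|=\sum_{i=1}^{d} e_{i}\). Note that \(\mathbb {E}\) with ⪯ is a Boolean lattice, and the corresponding Möbius function is given by

$$\mu(\mathbf e,\mathbf e')=\begin{cases}(-1)^{|\mathbf e'|-|\mathbf e|} & \text{if } \mathbf e\preceq\mathbf e',\\ 0 & \text{otherwise.}\end{cases}$$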

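The assumption \(\sum_{i=1}^{d}(\alpha_{i}+\beta_{i})\leq1\) implies ↓-Möbius monotonicity. Indeed, calculating

$$\bigl(\mathbf C^{-1}\mathbf P\mathbf C\bigr)(\mathbf e,\mathbf e')=\sum_{\mathbf e'':\mathbf e\preceq\mathbf e''}\mu(\mathbf e,\mathbf e'')\,\mathbf P(\mathbf e'',\{\mathbf e'\}^{\downarrow}),$$

we find that its only nonzero entries are \(\bigl(\mathbf C^{-1}\mathbf P\mathbf C\bigr)(\mathbf e,\mathbf e-\mathbf s_k)=\beta_k\) for \(e_k=1\), together with the diagonal entries. These give the conditions for nonnegativity, and hence for ↓-Möbius monotonicity of the chain and of its time reversed chain (which coincides with the chain, by reversibility):

$$\bigl(\mathbf C^{-1}\mathbf P\mathbf C\bigr)(\mathbf e,\mathbf e)=1-\sum_{k:e_k=0}(\alpha_k+\beta_k)\ge1-\sum_{k=1}^{d}(\alpha_k+\beta_k),$$

which is nonnegative (because we assumed that \(\sum_{i=1}^{d}(\alpha_{i}+\beta_{i})\leq1\)).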

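Fix \(\mathbf s_i=(0,\ldots,0,1,0,\ldots,0)\) with 1 in position i. By Theorem 2, and since \(\overleftarrow{\mathbf P}=\mathbf P\), for every state e with \(e_i=0\)

$$\mathbf P^{*}(\mathbf e,\mathbf e+\mathbf s_i)=\frac{H(\mathbf e+\mathbf s_i)}{H(\mathbf e)}\sum_{\mathbf e':\mathbf e+\mathbf s_i\preceq\mathbf e'}\mu(\mathbf e+\mathbf s_i,\mathbf e')\,\mathbf P(\mathbf e',\{\mathbf e\}^{\downarrow}).$$

For each state of the form \(\mathbf e^{i}=(e_1,\ldots,e_{i-1},0,e_{i+1},\ldots,e_d)\), only \(\mathbf e'=\mathbf e^{i}+\mathbf s_i\) contributes to this sum, since from any other \(\mathbf e'\succeq\mathbf e^{i}+\mathbf s_i\) the set \(\{\mathbf e^{i}\}^{\downarrow}\) cannot be reached in one step; hence

$$\sum_{\mathbf e':\mathbf e^{i}+\mathbf s_i\preceq\mathbf e'}\mu(\mathbf e^{i}+\mathbf s_i,\mathbf e')\,\mathbf P(\mathbf e',\{\mathbf e^{i}\}^{\downarrow})=\mathbf P(\mathbf e^{i}+\mathbf s_i,\mathbf e^{i})=\beta_i.$$

Denote by \(z(\mathbf e)=\{k:e_k=0\}\) the index set of zero coordinates. We shall compute \({H(\mathbf {e}^{i}+\mathbf {s}_{i})\over H(\mathbf {e}^{i})}\). Let \(G=\prod_{j=1}^{d}(\alpha_{j}+\beta_{j})\). Summing π over the down-set of e, we get

$$H(\mathbf e)=\sum_{\mathbf e':\mathbf e'\preceq\mathbf e}\pi(\mathbf e')=\frac{1}{G}\prod_{k\in z(\mathbf e)}\beta_k\prod_{k\notin z(\mathbf e)}(\alpha_k+\beta_k).$$

And thus

$$\frac{H(\mathbf e^{i}+\mathbf s_i)}{H(\mathbf e^{i})}=\frac{\alpha_i+\beta_i}{\beta_i}$$

and

$$\mathbf P^{*}(\mathbf e^{i},\mathbf e^{i}+\mathbf s_i)=\frac{\alpha_i+\beta_i}{\beta_i}\,\beta_i=\alpha_i+\beta_i.$$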

Now, fix some \({}^{i}\mathbf e=(e_1,\ldots,e_{i-1},1,e_{i+1},\ldots,e_d)\).

Fix \(j\in\{1,\ldots,d\}\setminus z({}^{i}\mathbf e)\). The following cases are possible:

Summing up all possibilities, we get

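For each e, we have

$$\mathbf P^{*}(\mathbf e,\mathbf e)=\bigl(\mathbf C^{-1}\mathbf P\mathbf C\bigr)(\mathbf e,\mathbf e)=1-\sum_{k\in z(\mathbf e)}(\alpha_k+\beta_k).$$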

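Therefore, we get

$$\mathbf P^{*}(\mathbf e,\mathbf e')=\begin{cases}\alpha_i+\beta_i & \text{if } \mathbf e'=\mathbf e+\mathbf s_i,\ i\in z(\mathbf e),\\ 1-\sum_{k\in z(\mathbf e)}(\alpha_k+\beta_k) & \text{if } \mathbf e'=\mathbf e,\\ 0 & \text{otherwise.}\end{cases}$$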

□
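
The statement of Theorem 3 can be checked numerically. The sketch below (Python with NumPy; parameter values are hypothetical, and \(\alpha_i\) is taken as the 0→1 probability in coordinate i, \(\beta_i\) as the 1→0 probability, as in (4.1)) builds the walk, applies the matrix formula for \(\mathbf P^*\) from Theorem 2, and compares it with the closed form in Theorem 3. It also checks that \(\mathbf C^{-1}\mathbf P\mathbf C\) is entrywise nonnegative, which is the ↓-Möbius monotonicity used in the proof.

```python
import itertools
import numpy as np

def cube_walk(alpha, beta):
    """Walk (4.1) on {0,1}^d: coordinate i flips 0 -> 1 w.p. alpha[i], 1 -> 0 w.p. beta[i]."""
    alpha, beta = np.asarray(alpha, float), np.asarray(beta, float)
    d = len(alpha)
    states = sorted(itertools.product((0, 1), repeat=d), key=lambda e: (sum(e), e))
    index = {e: k for k, e in enumerate(states)}
    P = np.zeros((len(states), len(states)))
    for e in states:
        for i in range(d):
            f = list(e)
            f[i] = 1 - e[i]
            P[index[e], index[tuple(f)]] = alpha[i] if e[i] == 0 else beta[i]
        P[index[e], index[e]] = 1.0 - P[index[e]].sum()
    C = np.array([[1.0 if all(x <= y for x, y in zip(s, t)) else 0.0 for t in states]
                  for s in states])
    pi = np.array([np.prod(np.where(np.array(e) == 1, alpha, beta) / (alpha + beta))
                   for e in states])
    return states, P, C, pi

def theorem2_dual(P, pi, C):
    """K = C^{-1} P_rev C and P* = diag(H)^{-1} K^T diag(H), with H = pi C."""
    H = pi @ C
    P_rev = np.diag(1.0 / pi) @ P.T @ np.diag(pi)
    K = np.linalg.inv(C) @ P_rev @ C
    return K, np.diag(1.0 / H) @ K.T @ np.diag(H)

def theorem3_dual(states, alpha, beta):
    """Closed form: e -> e + s_i w.p. alpha_i + beta_i for each zero coordinate i."""
    index = {e: k for k, e in enumerate(states)}
    Q = np.zeros((len(states), len(states)))
    for e in states:
        zeros = [i for i, ei in enumerate(e) if ei == 0]
        for i in zeros:
            f = list(e)
            f[i] = 1
            Q[index[e], index[tuple(f)]] = alpha[i] + beta[i]
        Q[index[e], index[e]] = 1.0 - sum(alpha[i] + beta[i] for i in zeros)
    return Q

alpha = np.array([0.10, 0.05, 0.20])   # hypothetical; sum(alpha + beta) = 0.85 <= 1
beta = np.array([0.15, 0.10, 0.25])
states, P, C, pi = cube_walk(alpha, beta)
K, P_star = theorem2_dual(P, pi, C)
print(np.allclose(P_star, theorem3_dual(states, alpha, beta)))
```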

It is worth mentioning that the condition for ↓-Möbius monotonicity (i.e., \(\sum_{i=1}^{d}(\alpha_{i}+\beta_{i})\le1\)) is equivalent to the condition that all eigenvalues of P are nonnegative.
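
The equivalence can be illustrated numerically. Since P is reversible, its eigenvalues can be computed from the symmetric matrix \(D^{1/2}\mathbf PD^{-1/2}\) with \(D=\mathrm{diag}(\pi)\). The sketch below (NumPy assumed; parameters hypothetical) compares them with the values \(1-\sum_{i\in S}(\alpha_i+\beta_i)\), \(S\subseteq\{1,\ldots,d\}\), which are exactly the diagonal entries of the upper-triangular dual \(\mathbf P^*\), and checks that doubling the parameters, so that the sum exceeds 1, produces both a negative eigenvalue and a negative entry of \(\mathbf C^{-1}\mathbf P\mathbf C\).

```python
import itertools
import numpy as np

def cube_walk(alpha, beta):
    """Walk (4.1) on {0,1}^d with alpha[i]: 0 -> 1 and beta[i]: 1 -> 0 in coordinate i."""
    alpha, beta = np.asarray(alpha, float), np.asarray(beta, float)
    d = len(alpha)
    states = sorted(itertools.product((0, 1), repeat=d), key=lambda e: (sum(e), e))
    index = {e: k for k, e in enumerate(states)}
    P = np.zeros((len(states), len(states)))
    for e in states:
        for i in range(d):
            f = list(e)
            f[i] = 1 - e[i]
            P[index[e], index[tuple(f)]] = alpha[i] if e[i] == 0 else beta[i]
        P[index[e], index[e]] = 1.0 - P[index[e]].sum()
    C = np.array([[1.0 if all(x <= y for x, y in zip(s, t)) else 0.0 for t in states]
                  for s in states])
    pi = np.array([np.prod(np.where(np.array(e) == 1, alpha, beta) / (alpha + beta))
                   for e in states])
    return P, C, pi

def spectrum(P, pi):
    """Sorted eigenvalues of a reversible P, via the symmetric D^{1/2} P D^{-1/2}."""
    s = np.sqrt(pi)
    return np.sort(np.linalg.eigvalsh(s[:, None] * P / s[None, :]))

def predicted_spectrum(alpha, beta):
    """Sorted values 1 - sum_{i in S} (alpha_i + beta_i) over all subsets S."""
    gamma = np.asarray(alpha, float) + np.asarray(beta, float)
    d = len(gamma)
    return np.sort([1.0 - gamma[list(S)].sum()
                    for r in range(d + 1) for S in itertools.combinations(range(d), r)])

def is_down_moebius_monotone(P, C, tol=1e-12):
    return bool(np.all(np.linalg.inv(C) @ P @ C >= -tol))

alpha = np.array([0.10, 0.05, 0.20])   # hypothetical; sum(alpha + beta) = 0.85
beta = np.array([0.15, 0.10, 0.25])
P, C, pi = cube_walk(alpha, beta)
print(spectrum(P, pi).min(), is_down_moebius_monotone(P, C))
```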

The time to absorption of the above defined dual chain has the following “balls and bins” interpretation. Consider n multinomial trials with cell probabilities \(p_i=\alpha_i+\beta_i\), i=1,…,d, and \(p_{d+1}=1-\sum_{i=1}^{d}(\alpha_{i}+\beta_{i})\). Then the time to absorption of the dual chain \(\mathbf P^*\) is equal in distribution to the waiting time until all cells 1,…,d are occupied (a trial that lands in cell d+1 or in an already occupied cell leaves the dual chain where it is). To be more specific, let T be the waiting time until all cells 1,…,d are occupied, and let \(A_n\) be the event that, after n trials, at least one of these cells is empty. Then, since T is distributed as the absorption time \(T^*\), which is an SST for P, we have

$$s(\nu\mathbf P^{n},\pi)\le P(T^{*}>n)=P(T>n)=P(A_n).$$
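
The balls-and-bins description can be checked against the dual chain directly. In the sketch below (NumPy assumed; p is a hypothetical vector of \(p_i=\alpha_i+\beta_i\), and the function names are ours), \(P(T^*>n)\) is computed from powers of the dual matrix of Theorem 3 started at (0,…,0), and \(P(A_n)\) by inclusion–exclusion over the sets of empty cells among 1,…,d.

```python
import itertools
import numpy as np

def dual_tail(p, n):
    """P(T* > n) for the dual of Theorem 3 started at (0,...,0), via matrix powers."""
    d = len(p)
    states = sorted(itertools.product((0, 1), repeat=d), key=lambda e: (sum(e), e))
    index = {e: k for k, e in enumerate(states)}
    Q = np.zeros((len(states), len(states)))
    for e in states:
        zeros = [i for i in range(d) if e[i] == 0]
        for i in zeros:
            f = list(e)
            f[i] = 1                       # cell i becomes occupied
            Q[index[e], index[tuple(f)]] = p[i]
        Q[index[e], index[e]] = 1.0 - sum(p[i] for i in zeros)
    start = np.zeros(len(states))
    start[index[(0,) * d]] = 1.0
    return 1.0 - (start @ np.linalg.matrix_power(Q, n))[index[(1,) * d]]

def empty_cell_prob(p, n):
    """P(A_n): some cell among 1..d is still empty after n trials (inclusion-exclusion)."""
    d = len(p)
    total = 0.0
    for r in range(1, d + 1):
        for S in itertools.combinations(range(d), r):
            total += (-1) ** (r + 1) * (1.0 - sum(p[i] for i in S)) ** n
    return total

p = [0.25, 0.15, 0.45]   # hypothetical p_i = alpha_i + beta_i; here p_{d+1} = 0.15
for n in (1, 5, 10, 20):
    print(n, dual_tail(p, n), empty_cell_prob(p, n))
```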

In particular, for \(\alpha_{i}=\beta_{i}={1\over2d}\), we have P(e,e)=1/2 and \(\mathbf {P}^{*}(\mathbf {e},\mathbf {e})={|\mathbf {e}|\over d}\). Moreover, T is equal in distribution to \(\sum_{i=1}^{d} N_{i}\), where the \(N_i\) are independent and \(N_i\) has the geometric distribution with parameter \({i\over d}\). In the “balls and bins” scheme, \(p_{i}={1\over d}\), i=1,…,d, and \(p_{d+1}=0\). From the solution of the coupon collector's problem, we recover a well known bound

$$s(\nu\mathbf P^{n},\pi)\le P(T>n)\le d\Bigl(1-\frac{1}{d}\Bigr)^{n}\le e^{-c}\qquad\text{for } n\ge d\log d+cd,\ c>0.$$
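
For the symmetric case, the following sketch (standard-library Python only; the function names are ours) evaluates \(P(T>n)\) exactly by inclusion–exclusion and compares it with the union bound \(d(1-1/d)^n\le d\,e^{-n/d}\), which is at most \(e^{-c}\) once \(n\ge d\log d+cd\).

```python
import math

def coupon_tail(d, n):
    """Exact P(T > n) when each trial hits one of d cells uniformly (inclusion-exclusion)."""
    return sum((-1) ** (k + 1) * math.comb(d, k) * (1.0 - k / d) ** n for k in range(1, d + 1))

def union_bound_at(d, c):
    """n = ceil(d log d + c d) and the union bound d (1 - 1/d)^n at that n."""
    n = math.ceil(d * math.log(d) + c * d)
    return n, d * (1.0 - 1.0 / d) ** n

for d in (5, 10, 20):
    n, bound = union_bound_at(d, c=1.0)
    print(d, n, coupon_tail(d, n), bound, math.exp(-1.0))
```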

4.1 Further research

The problem of finding SSD chains for walks on the cube, which are not nearest neighbor walks, is open and seems to be a difficult one. Moreover, a more difficult task is to find SSD chains which have an upper triangular form (potentially useful for finding bounds on times to absorption). We have some observations for three dimensional cubes which might be of some interest. Consider the random walk on the three-dimensional cube, \(\mathbb {E}=\{0,1\}^{3}\), which is a special case of the random walk given in (4.1). We define on \(\mathbb {E}\) the partial ordering: for all \(\mathbf {e}=(e_{1},e_{2},e_{3})\in \mathbb {E}\), \(\mathbf {e}'=(e'_{1},e'_{2},e'_{3})\in \mathbb {E}\), e⪯e′ iff \(e_{1}\leq e_{1}', e_{2}\leq e_{2}', e_{3}\leq e'_{3}\).

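We consider the transition matrix P under the state space enumeration \(\mathbf e_1=(0,0,0)\), \(\mathbf e_2=(1,0,0)\), \(\mathbf e_3=(0,1,0)\), \(\mathbf e_4=(0,0,1)\), \(\mathbf e_5=(1,1,0)\), \(\mathbf e_6=(1,0,1)\), \(\mathbf e_7=(0,1,1)\), \(\mathbf e_8=(1,1,1)\), of the form

with the dual \(\mathbf P^*\)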

One way to extend the model to allow up–down jumps beyond neighboring states is to take powers of the nearest neighbor transition matrix P, that is, to look at the two-step chain. The matrix \(\mathbf P^2\) is again Möbius monotone (by Proposition 2.2(ii)), and it has a dual with an upper-triangular form if α=β.

Another way to modify the nearest neighbor walk is to transform some rows of P to get distributions bigger in the supermodular ordering. To be more precise, recall that two random elements X,Y of \(\mathbb {E}\) are supermodular stochastically ordered (written \(X\prec_{sm}Y\) or \(Y\succ_{sm}X\)) if \(Ef(X)\le Ef(Y)\) for all supermodular functions f, i.e., functions such that, for all \(x,y\in \mathbb {E}\),

$$f(x\wedge y)+f(x\vee y)\ge f(x)+f(y).$$

A simple sufficient criterion for the \(\prec_{sm}\) order when \(\mathbb {E}\) is a discrete (countable) lattice is given as follows.

Lemma 4.1

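Let \(P_1\) be a probability measure on a discrete lattice-ordered space \(\mathbb {E}\) and assume that for two incomparable points \(x\neq y\in \mathbb {E}\) we have \(P_1(x)\ge\kappa\) and \(P_1(y)\ge\kappa\) for some κ>0. Define a new probability measure \(P_2\) on \(\mathbb {E}\) by

$$P_2(z)=\begin{cases}P_1(z)-\kappa & \text{if } z\in\{x,y\},\\ P_1(z)+\kappa & \text{if } z\in\{x\wedge y,\,x\vee y\},\\ P_1(z) & \text{otherwise}.\end{cases}\tag{4.3}$$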

Then \(P_1\prec_{sm}P_2\).
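
The transformation (4.3) is easy to experiment with on the cube \(\{0,1\}^3\). The sketch below (standard-library Python; the function names, the uniform starting measure, the pair x, y and κ are all hypothetical choices) moves mass κ from two incomparable points to their meet and join, and checks that the expectation of a supermodular function does not decrease; indeed it changes by exactly \(\kappa\,(f(x\wedge y)+f(x\vee y)-f(x)-f(y))\ge0\).

```python
import itertools

def meet(x, y):
    return tuple(min(a, b) for a, b in zip(x, y))

def join(x, y):
    return tuple(max(a, b) for a, b in zip(x, y))

def is_supermodular(f, points, tol=1e-12):
    """Brute-force check of f(x ^ y) + f(x v y) >= f(x) + f(y) on a finite lattice."""
    return all(f(meet(x, y)) + f(join(x, y)) >= f(x) + f(y) - tol
               for x in points for y in points)

def pairwise_transform(P1, x, y, kappa):
    """Transformation (4.3): move mass kappa from x and from y to x ^ y and to x v y."""
    if P1[x] < kappa or P1[y] < kappa:
        raise ValueError("need P1(x) >= kappa and P1(y) >= kappa")
    P2 = dict(P1)
    P2[x] -= kappa
    P2[y] -= kappa
    P2[meet(x, y)] += kappa
    P2[join(x, y)] += kappa
    return P2

def expectation(P, f):
    return sum(prob * f(z) for z, prob in P.items())

points = list(itertools.product((0, 1), repeat=3))      # the cube {0,1}^3
P1 = {z: 1.0 / len(points) for z in points}             # hypothetical starting measure
x, y, kappa = (1, 0, 0), (0, 1, 0), 0.1                  # incomparable pair, hypothetical kappa
P2 = pairwise_transform(P1, x, y, kappa)

def f_super(z):
    return z[0] * z[1] + z[1] * z[2]                     # sum of products: supermodular

print(expectation(P1, f_super), expectation(P2, f_super))
```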

If in Lemma 4.1 the state space \(\mathbb {E}\) is the set of all subsets of a finite set (i.e., the cube), then the transformation described in (4.3) is called, following Xu and Li [16], a pairwise \(g^{+}\) transform, and Lemma 4.1 then specializes to their Proposition 5.5.

If we modify rows numbered 1, 3, 6, 8 by such a transformation (notice that \(\mathbf e_1,\mathbf e_3,\mathbf e_6,\mathbf e_8\) lie on a symmetry axis), that is, we consider an up–down walk which allows jumps not only to the nearest neighbors, then it can be checked that it is Möbius monotone, and the dual matrix again has an upper-triangular form, for an appropriate selection of α and κ.

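The diagonal entries of an upper-triangular dual matrix are its eigenvalues; for the dual of Theorem 2 they are also the eigenvalues of P, because the link Λ is then an invertible square matrix and \(\mathbf P^{*}=\varLambda\mathbf P\varLambda^{-1}\). Through the distribution of \(T^*\), they can be used to find bounds on the speed of convergence to stationarity via

$$s(\nu\mathbf P^{n},\pi)\le P(T^{*}>n).$$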

If \(\nu=\delta_{\mathbf {e}_{1}}\), \(\mathbf P^*\) is upper-triangular, and in addition its only positive off-diagonal entries lie directly above the diagonal, then \(T^{*}=\sum_{i=1}^{M-1} N_{i}\), where the \(N_i\) are independent geometric random variables with parameters \(1-\lambda_i\), i=1,…,M−1, and \(\lambda_1,\ldots,\lambda_{M-1},\lambda_M=1\) denote the diagonal entries of \(\mathbf P^*\). This case corresponds to a skip-free structure to the right, as described, for example, by Fill [12]. In other cases, it is possible to bound \(P(T^*>n)\) by \(P(T'>n)\), where T′ is the time to absorption in a reduced chain representing the stochastically maximal passage time from \(\mathbf e_1\) to \(\mathbf e_M\). We omit the details of this approach and give only an example which illustrates the idea. Consider \(\mathbf P^*\) given above in (4.2). Analyzing all possible paths from \(\mathbf e_1\) to \(\mathbf e_M\), we see that \(T^*\) is stochastically bounded by T′, the time to absorption in a chain with the following transition matrix

$$\begin{pmatrix}1-3(\alpha+\beta) & 3(\alpha+\beta) & 0 & 0\\ 0 & 1-2(\alpha+\beta) & 2(\alpha+\beta) & 0\\ 0 & 0 & 1-(\alpha+\beta) & \alpha+\beta\\ 0 & 0 & 0 & 1\end{pmatrix}.$$

We have \(T'=\sum_{i=1}^{3} N_{i}\), where the \(N_i\) are independent geometric random variables with parameters \(i(\alpha+\beta)\), i=1,2,3. The expected time to absorption is \(ET'={11\over6(\alpha+\beta)}\), and using Markov's inequality we have (for any c>0)

$$s(\delta_{\mathbf e_1}\mathbf P^{n},\pi)\le P(T^{*}>n)\le P(T'>n)\le\frac{ET'}{n}=\frac{1}{c}$$

for \(n=c\cdot{11\over6(\alpha+\beta)}\).
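
The bound can be evaluated numerically. The sketch below (NumPy assumed; `gamma` stands for α+β and its value is hypothetical, with 3γ ≤ 1; the reduced chain is taken to be the pure-birth chain on four states that leaves level k with probability (3−k)γ) computes ET′ from the fundamental matrix \((I-Q)^{-1}\) of the transient part and compares \(P(T'>n)\) with 1/c at \(n=\lceil c\cdot ET'\rceil\).

```python
import numpy as np

def absorption_stats(P_full, n):
    """Absorbing chain started in state 0 (absorbing state last): E T' and P(T' > n)."""
    Q = P_full[:-1, :-1]                                   # transient part
    N = np.linalg.inv(np.eye(len(Q)) - Q)                  # fundamental matrix
    mean = N[0].sum()                                      # expected absorption time from state 0
    tail = 1.0 - np.linalg.matrix_power(P_full, n)[0, -1]  # P(T' > n)
    return mean, tail

gamma = 0.3    # hypothetical value of alpha + beta (needs 3 * gamma <= 1)
P_red = np.array([[1 - 3 * gamma, 3 * gamma, 0.0, 0.0],
                  [0.0, 1 - 2 * gamma, 2 * gamma, 0.0],
                  [0.0, 0.0, 1 - gamma, gamma],
                  [0.0, 0.0, 0.0, 1.0]])
c = 4.0
n = int(np.ceil(c * 11 / (6 * gamma)))   # n >= c * E T'
mean, tail = absorption_stats(P_red, n)
print(mean, 11 / (6 * gamma), tail, 1 / c)
```

Markov's inequality is crude here; the exact tail from the matrix power is typically far below 1/c, which is one reason the diagonal (eigenvalue) information of an upper-triangular dual is useful.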

There are several other examples of chains which are Möbius monotone on some other state spaces. We shall study this topic in a subsequent paper.

References

[1] Aldous, D.J.: Finite-time implications of relaxation times for stochastically monotone processes. Probab. Theory Relat. Fields 77, 137–145 (1988)

[2] Aldous, D.J., Diaconis, P.: Shuffling cards and stopping times. Am. Math. Mon. 93, 333–348 (1986)

[3] Aldous, D.J., Diaconis, P.: Strong uniform times and finite random walks. Adv. Appl. Math. 8, 69–97 (1987)

[4] Brown, M.: Consequences of monotonicity for Markov transition functions. Technical report, City College, CUNY (1990)

[5] Brown, M., Shao, Y.S.: Identifying coefficients in the spectral representation for first passage time distributions. Probab. Eng. Inf. Sci. 1, 69–74 (1987)

[6] Diaconis, P.: Group Representations in Probability and Statistics. IMS, Hayward (1988)

[7] Diaconis, P., Fill, J.A.: Strong stationary times via a new form of duality. Ann. Probab. 18, 1483–1522 (1990)

[8] Diaconis, P., Miclo, L.: On times to quasi-stationarity for birth and death processes. J. Theor. Probab. 22, 558–586 (2009)

[9] Dieker, A.B., Warren, J.: Series Jackson networks and non-crossing probabilities. Math. Oper. Res. 35, 257–266 (2010)

[10] Falin, G.I.: Monotonicity of random walks in partially ordered sets. Russ. Math. Surv. 43, 167–168 (1988)

[11] Fill, J.A.: The passage time distribution for a birth-and-death chain: Strong stationary duality gives a first stochastic proof. J. Theor. Probab. 22, 543–557 (2009)

[12] Fill, J.A.: On hitting times and fastest strong stationary times for skip-free and more general chains. J. Theor. Probab. 22, 587–600 (2009)

[13] Lorek, P., Szekli, R.: On the speed of convergence to stationarity via spectral gap: queueing networks with breakdowns and repairs (submitted to JAP). arXiv:1101.0332 [math.PR]

[14] Massey, W.A.: Stochastic ordering for Markov processes on partially ordered spaces. Math. Oper. Res. 12, 350–367 (1987)

[15] Rota, G.C.: On the foundations of combinatorial theory I. Theory of Möbius functions. Z. Wahrscheinlichkeitstheor. 2, 340–368 (1964)

[16] Xu, S.H., Li, H.: Majorization of weighted trees: A new tool to study correlated stochastic systems. Math. Oper. Res. 25, 298–323 (2000)

Acknowledgements

Work supported by NCN Research Grant UMO-2011/01/B/ST1/01305 (first author) and by MNiSW Research Grant N N201 394137 (second author).

Open Access

This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.


About this article

Cite this article

Lorek, P., Szekli, R. Strong stationary duality for Möbius monotone Markov chains. Queueing Syst 71, 79–95 (2012). https://doi.org/10.1007/s11134-012-9284-z


Keywords

- Strong stationary times

- Strong stationary duals

- Speed of convergence

- Random walk on cube

- Möbius function

- Möbius monotonicity