Abstract

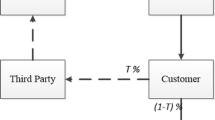

This paper proposes a decentralized closed-loop supply chain network model consisting of raw material suppliers, manufacturers, retailers, and recovery centers. We assume that the demands for the product and the corresponding returns are random and price-sensitive. Retailers and recovery centers face penalties associated with demand shortages and supply shortages, respectively. We derive the optimality conditions of the various decision-makers, and establish that the governing equilibrium conditions can be formulated as a finite-dimensional variational inequality problem. The qualitative properties of the solution to the variational inequality are discussed. Numerical examples are provided to illustrate the effects of demand and return uncertainties on quantity shipments and prices.

Appendices

Appendix A: Proof of Lemma 1

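Knowing that \(y_{j}^{\prime}(p_{j})<0\), one sees that \(-(p_{j}+\delta_{j})y_{j}^{\prime}(p_{j})r_{j}(z_{j})\ge \frac{1}{2}\) is equivalent to \(M_{j}(s_{j},p_{j})\le 0\), where \(M_{j}(s_{j},p_{j})=\frac{1}{r_{j}(z_{j})}+2(p_{j}+\delta_{j})y_{j}^{\prime}(p_{j})\). Since \(z_{j}=s_{j}-y_{j}(p_{j})\) in the additive case, it is easy to verify that
\[\frac{\partial^{2}M_{j}}{\partial s_{j}^{2}}=\frac{2(r_{j}^{\prime}(z_{j}))^{2}-r_{j}(z_{j})r_{j}^{\prime\prime}(z_{j})}{r_{j}(z_{j})^{3}},\qquad \frac{\partial^{2}M_{j}}{\partial s_{j}\partial p_{j}}=-y_{j}^{\prime}(p_{j})\frac{\partial^{2}M_{j}}{\partial s_{j}^{2}},\]
\[\frac{\partial^{2}M_{j}}{\partial p_{j}^{2}}=(y_{j}^{\prime}(p_{j}))^{2}\frac{\partial^{2}M_{j}}{\partial s_{j}^{2}}+\left(\frac{r_{j}^{\prime}(z_{j})}{r_{j}(z_{j})^{2}}+4\right)y_{j}^{\prime\prime}(p_{j})+2(p_{j}+\delta_{j})y_{j}^{\prime\prime\prime}(p_{j}).\]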

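Note that \(\frac{\partial^{2}M_{j}}{\partial s_{j}^{2}}\ge 0\) if \(2(r_{j}^{\prime}(z_{j}))^{2}-r_{j}(z_{j})r_{j}^{\prime\prime}(z_{j})\ge 0\). The determinant of the Hessian of \(M_{j}\), \(\Delta_{j}=\frac{\partial^{2}M_{j}}{\partial s_{j}^{2}}\frac{\partial^{2}M_{j}}{\partial p_{j}^{2}}-\left(\frac{\partial^{2}M_{j}}{\partial s_{j}\partial p_{j}}\right)^{2}\), simplifies to
\[\Delta_{j}=\frac{2(r_{j}^{\prime})^{2}-r_{j}r_{j}^{\prime\prime}}{r_{j}^{3}}\left[\frac{r_{j}^{\prime}}{r_{j}^{2}}\,y_{j}^{\prime\prime}+2\left(2y_{j}^{\prime\prime}+(p_{j}+\delta_{j})y_{j}^{\prime\prime\prime}\right)\right],\]
where the argument \(z_{j}\) of \(r_{j}\) and the argument \(p_{j}\) of \(y_{j}\) are omitted.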

From Assumptions 1 and 2a, \(2(r_{j}^{\prime}(z_{j}))^{2}-r_{j}(z_{j})r_{j}^{\prime\prime}(z_{j})\ge 0\), \(r_{j}^{\prime}(z_{j})\ge 0\), \(2y_{j}^{\prime\prime}(p_{j})+p_{j}y_{j}^{\prime\prime\prime}(p_{j})\ge 0\) and \(y_{j}^{\prime\prime}(p_{j})\ge 0\). Therefore \(\Delta_{j}\) is nonnegative whenever \(2y_{j}^{\prime\prime}(p_{j})+(p_{j}+\delta_{j})y_{j}^{\prime\prime\prime}(p_{j})\ge 0\). If \(y_{j}^{\prime\prime\prime}(p_{j})\ge 0\), this inequality holds because \(y_{j}^{\prime\prime}(p_{j})\ge 0\) and \(p_{j}+\delta_{j}\ge p_{j}-\lambda_{j}^{+}\ge 0\). If \(y_{j}^{\prime\prime\prime}(p_{j})\le 0\), it holds because \(2y_{j}^{\prime\prime}(p_{j})+p_{j}y_{j}^{\prime\prime\prime}(p_{j})\ge 0\) by Assumption 1, and \(\delta_{j}y_{j}^{\prime\prime\prime}(p_{j})\ge 0\) since \(\delta_{j}\le 0\) by definition. Hence \(\Delta_{j}\ge 0\). This implies that the function \(M_{j}\) is convex, which in turn implies that the set \({\Gamma}^{1}_{j}\) is convex.
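The closed form of \(\Delta_j\) can be sanity-checked numerically. The sketch below is only an illustration. It assumes the additive relation \(z_j=s_j-y_j(p_j)\) and uses hypothetical primitives \(r_j(z)=e^{z}\), \(y_j(p)=e^{-p}\) and \(\delta_j=-0.5\); none of these come from the paper. It compares the determinant of a central finite-difference Hessian of \(M_j\) with the closed form obtained by differentiating \(M_j\) twice, which is written out in the code.

```python
import math

DELTA = -0.5  # hypothetical value of delta_j (delta_j <= 0)

# Hypothetical primitives, chosen only so that all derivatives are easy to write down.
def r(z): return math.exp(z)
def dr(z): return math.exp(z)
def d2r(z): return math.exp(z)
def y(p): return math.exp(-p)
def dy(p): return -math.exp(-p)
def d2y(p): return math.exp(-p)
def d3y(p): return -math.exp(-p)


def M(s, p):
    """M_j(s, p) = 1/r(z) + 2(p + delta) y'(p) with the additive z = s - y(p)."""
    z = s - y(p)
    return 1.0 / r(z) + 2.0 * (p + DELTA) * dy(p)


def delta_closed_form(s, p):
    """Hessian determinant of M_j from the analytic second derivatives."""
    z = s - y(p)
    m_ss = (2.0 * dr(z) ** 2 - r(z) * d2r(z)) / r(z) ** 3
    bracket = (dr(z) / r(z) ** 2) * d2y(p) + 2.0 * (2.0 * d2y(p) + (p + DELTA) * d3y(p))
    return m_ss * bracket


def delta_finite_difference(s, p, h=1e-4):
    """Same determinant from central finite differences of M_j."""
    m_ss = (M(s + h, p) - 2.0 * M(s, p) + M(s - h, p)) / h ** 2
    m_pp = (M(s, p + h) - 2.0 * M(s, p) + M(s, p - h)) / h ** 2
    m_sp = (M(s + h, p + h) - M(s + h, p - h)
            - M(s - h, p + h) + M(s - h, p - h)) / (4.0 * h ** 2)
    return m_ss * m_pp - m_sp ** 2


for s0, p0 in [(1.0, 1.0), (0.5, 1.5)]:
    print(s0, p0, delta_closed_form(s0, p0), delta_finite_difference(s0, p0))
```

At both test points \(2y_j''+(p_j+\delta_j)y_j'''=(2-p_j-\delta_j)e^{-p_j}>0\). The determinant therefore comes out positive, as the lemma predicts.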

Appendix B: Proof of Theorem 1

First, without loss of generality, assume that \(p_{Ij}^{*}=\min_{i}\{p_{ij}^{*}\}\). Optimization problem (6) can then be reformulated as the maximization of \({\Pi}_{j}^{1}(s_{j},p_{j})+{\Pi}_{j}^{2}(\tilde{Q}_{j})\), where \(\tilde{Q}_{j}=(q_{ij})_{i=1}^{I-1}\), \({\Pi}_{j}^{1}(s_{j},p_{j})=p_{j}y_{j}(p_{j})-(p_{j}+\lambda_{j}^{-})e_{j}^{-}(z_{j})+\lambda_{j}^{+}e_{j}^{+}(z_{j})-c_{j}s_{j}-p_{Ij}^{*}s_{j}\) and \({\Pi}_{j}^{2}(\tilde{Q}_{j})=-\sum_{i=1}^{I-1}(p_{ij}^{*}-p_{Ij}^{*})q_{ij}\).

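Clearly, the function \({\Pi}_{j}^{2}(\tilde{Q}_{j})\) is concave, since it is linear. We need to prove that \({\Pi}_{j}^{1}(s_{j},p_{j})\) is concave. One easily verifies that the first derivatives of \({\Pi}_{j}^{1}\) with respect to \(s_{j}\) and \(p_{j}\) are given by
\[\frac{\partial{\Pi}_{j}^{1}}{\partial s_{j}}=(p_{j}+\lambda_{j}^{-})(1-F_{j}(z_{j}))+\lambda_{j}^{+}F_{j}(z_{j})-c_{j}-p_{Ij}^{*},\]
\[\frac{\partial{\Pi}_{j}^{1}}{\partial p_{j}}=y_{j}(p_{j})-e_{j}^{-}(z_{j})+y_{j}^{\prime}(p_{j})\left[(p_{j}+\lambda_{j}^{-}-\lambda_{j}^{+})F_{j}(z_{j})-\lambda_{j}^{-}\right].\]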

Straightforward computations show that the Hessian matrix \(H_{j}\) associated with \({\Pi}_{j}^{1}(s_{j},p_{j})\) is
\[H_{j}=\begin{pmatrix}H_{s_{j}s_{j}}&H_{s_{j}p_{j}}\\H_{p_{j}s_{j}}&H_{p_{j}p_{j}}\end{pmatrix},\]

with \(H_{s_{j}s_{j}}=-(p_{j}+\lambda_{j}^{-}-\lambda_{j}^{+})f_{j}(z_{j})\), \(H_{p_{j}s_{j}}=H_{s_{j}p_{j}}=y_{j}^{\prime}(p_{j})(p_{j}+\lambda_{j}^{-}-\lambda_{j}^{+})f_{j}(z_{j})+1-F_{j}(z_{j})\) and \(H_{p_{j}p_{j}}=-(y_{j}^{\prime}(p_{j}))^{2}(p_{j}+\lambda_{j}^{-}-\lambda_{j}^{+})f_{j}(z_{j})+2y_{j}^{\prime}(p_{j})F_{j}(z_{j})+y_{j}^{\prime\prime}(p_{j})\left[(p_{j}+\lambda_{j}^{-}-\lambda_{j}^{+})F_{j}(z_{j})-\lambda_{j}^{-}\right]\).

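We will show that the matrix \(-H_{j}\) is positive semidefinite. Clearly, \(-H_{s_{j}s_{j}}=(p_{j}+\lambda_{j}^{-}-\lambda_{j}^{+})f_{j}(z_{j})>0\). If we show that \(\det(-H_{j})\ge 0\), then \(-H_{p_{j}p_{j}}\ge\frac{(H_{p_{j}s_{j}})^{2}}{-H_{s_{j}s_{j}}}\ge 0\). The determinant \(\det(-H_{j})\) is calculated as:
\[\begin{aligned}\det(-H_{j})={}&-(p_{j}+\lambda_{j}^{-}-\lambda_{j}^{+})f_{j}(z_{j})F_{j}(z_{j})\left[2y_{j}^{\prime}(p_{j})+p_{j}y_{j}^{\prime\prime}(p_{j})\right]\\&+(p_{j}+\lambda_{j}^{-}-\lambda_{j}^{+})f_{j}(z_{j})\,y_{j}^{\prime\prime}(p_{j})\left[\lambda_{j}^{-}(1-F_{j}(z_{j}))+\lambda_{j}^{+}F_{j}(z_{j})\right]\\&-\left[2(p_{j}+\lambda_{j}^{-}-\lambda_{j}^{+})f_{j}(z_{j})\,y_{j}^{\prime}(p_{j})(1-F_{j}(z_{j}))+(1-F_{j}(z_{j}))^{2}\right].\end{aligned}\]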

The first term in the above is nonnegative because \(2y_{j}^{\prime}(p_{j})+p_{j}y_{j}^{\prime\prime}(p_{j})\le 0\). The second term is also nonnegative because \(y_{j}^{\prime\prime}(p_{j})\ge 0\). The last term is nonnegative since it can be written as \(2(1-F_{j}(z_{j}))^{2}\left[\eta^{1}_{j}(s_{j},p_{j})-1/2\right]+2(1-F_{j}(z_{j}))^{2}\max(\lambda_{j}^{-}-\lambda_{j}^{+},0)(-y_{j}^{\prime}(p_{j})r_{j}(z_{j}))\) and \(\eta^{1}_{j}(s_{j},p_{j})\ge 1/2\) on \({\Gamma}^{1}_{j}\). Hence \(\det(-H_{j})\ge 0\). Together with \(-H_{s_{j}s_{j}}>0\), this implies that the matrix \(-H_{j}\) is positive semidefinite. Therefore, \({\Pi}_{j}^{1}\) is concave on the set \({\Gamma}^{1}_{j}\).
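As a numerical illustration of this proof, the sketch below evaluates \(\Pi^1_j\) on a hypothetical instance. The noise is zero-mean uniform on \([A_j,B_j]=[-10,10]\), and the additive relation \(z_j=s_j-y_j(p_j)\) is used. The definitions \(e_j^-(z)=E[(\epsilon-z)^+]\) and \(e_j^+(z)=E[(z-\epsilon)^+]\) are assumed. Demand is exponential, \(y_j(p)=200e^{-0.05p}\), and the costs and penalties are made up. The code compares the stated entries \(H_{s_js_j}\), \(H_{s_jp_j}\), \(H_{p_jp_j}\) with central finite differences. It also checks that the three terms of \(\det(-H_j)\) are nonnegative and sum to the determinant at the chosen point.

```python
import math

# Hypothetical instance (all numbers illustrative, not from the paper).
A, B = -10.0, 10.0            # support of the zero-mean uniform noise
LAM_MINUS, LAM_PLUS = 5.0, 1.0
C, P_STAR = 3.0, 10.0         # unit cost c_j and cheapest supplier price p*_{Ij}
f = 1.0 / (B - A)             # uniform density on [A, B]

def F(z): return (z - A) * f
def e_minus(z): return (B - z) ** 2 * f / 2   # E[(eps - z)^+] for uniform noise
def e_plus(z): return (z - A) ** 2 * f / 2    # E[(z - eps)^+] for uniform noise
def y(p): return 200.0 * math.exp(-0.05 * p)
def dy(p): return -0.05 * y(p)
def d2y(p): return 0.0025 * y(p)


def profit(s, p):
    """Pi^1_j(s, p) in the additive case, valid while z = s - y(p) stays in [A, B]."""
    z = s - y(p)
    return (p * y(p) - (p + LAM_MINUS) * e_minus(z) + LAM_PLUS * e_plus(z)
            - C * s - P_STAR * s)


def hessian_closed_form(s, p):
    z = s - y(p)
    k = p + LAM_MINUS - LAM_PLUS
    hss = -k * f
    hsp = dy(p) * k * f + 1 - F(z)
    hpp = -dy(p) ** 2 * k * f + 2 * dy(p) * F(z) + d2y(p) * (k * F(z) - LAM_MINUS)
    return hss, hsp, hpp


def hessian_fd(s, p, h=1e-3):
    g = profit
    hss = (g(s + h, p) - 2 * g(s, p) + g(s - h, p)) / h ** 2
    hpp = (g(s, p + h) - 2 * g(s, p) + g(s, p - h)) / h ** 2
    hsp = (g(s + h, p + h) - g(s + h, p - h) - g(s - h, p + h) + g(s - h, p - h)) / (4 * h ** 2)
    return hss, hsp, hpp


def det_three_terms(s, p):
    """The three terms of det(-H_j) discussed in the proof."""
    z = s - y(p)
    k = p + LAM_MINUS - LAM_PLUS
    first = -k * f * F(z) * (2 * dy(p) + p * d2y(p))
    second = k * f * d2y(p) * (LAM_MINUS * (1 - F(z)) + LAM_PLUS * F(z))
    last = -2 * k * f * dy(p) * (1 - F(z)) - (1 - F(z)) ** 2
    return first, second, last


p0 = 20.0
s0 = y(p0) + 2.0              # gives z = 2, inside [A, B]
hss, hsp, hpp = hessian_closed_form(s0, p0)
det_neg_h = hss * hpp - hsp ** 2   # for a 2x2 matrix, det(-H) = det(H)
print(hessian_closed_form(s0, p0), hessian_fd(s0, p0), det_three_terms(s0, p0), det_neg_h)
```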

Appendix C: Proof of Proposition 1

We need to show that the optimal vector \((s_{j}^{*},p_{j}^{*})\) belongs to the set \({{\Gamma }^{1}_{j}}\). In other words, we will show that \({\eta _{j}^{1}}(s_{j},p_{j})\ge 1/2\) when \(\frac {{\partial {\Pi }_{j}^{1}}}{\partial p_{j}}=0\).

Since \(y_{j}^{\prime}(p_{j})\le 0\) and \(y_{j}(p_{j})-e_{j}^{-}(z_{j})\ge 0\), the first-order condition \(\frac{\partial{\Pi}_{j}^{1}}{\partial p_{j}}=0\) implies that \((p_{j}+\lambda_{j}^{-}-\lambda_{j}^{+})F_{j}(z_{j})-\lambda_{j}^{-}>0\).

Combining this with the first-order condition, one checks that \(\eta^{1}_{j}(s_{j},p_{j})\ge 1/2\) holds if \(y_{j}(p_{j})+\kappa_{j}(z_{j})\ge 0\), where \(\kappa_{j}(z_{j})=-e^{-}_{j}(z_{j})-\frac{F_{j}(z_{j})}{2r_{j}(z_{j})}\). Note that \(\kappa_{j}(A_{j})=A_{j}\) and \(\kappa_{j}^{\prime}(z_{j})=\frac{1-F_{j}(z_{j})}{2}+\frac{r_{j}^{\prime}(z_{j})F_{j}(z_{j})}{2r_{j}(z_{j})^{2}}\ge 0\) because \(r_{j}^{\prime}(z_{j})\ge 0\). This yields \(\kappa_{j}(z_{j})\ge A_{j}\) for all \(z_{j}\in[A_{j},B_{j}]\). Then \(y_{j}(p_{j})+\kappa_{j}(z_{j})\ge y_{j}(\bar{p}_{j})+A_{j}=0\). Therefore, \(\eta^{1}_{j}(s_{j},p_{j})\ge 1/2\) when \(\frac{\partial{\Pi}_{j}^{1}}{\partial p_{j}}=0\).
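Take, as an illustrative assumption, zero-mean uniform noise on \([A_j,B_j]=[-10,10]\). Then \(r_j(z)=1/(B_j-z)\) and \(e_j^-(z)=(B_j-z)^2/(2(B_j-A_j))\), and a short computation gives \(\kappa_j(z)=(z-B_j)/2\). So \(\kappa_j(A_j)=A_j\), and \(\kappa_j\) increases with slope \(1/2\). The sketch below checks this on a grid. It also checks \(y_j(p)+\kappa_j(z)\ge 0\) for the hypothetical linear demand \(y_j(p)=100-2p\), for which \(\bar p_j=45\) solves \(y_j(\bar p_j)+A_j=0\).

```python
A, B = -10.0, 10.0   # hypothetical zero-mean uniform noise on [A, B]
f = 1.0 / (B - A)    # uniform density
P_BAR = 45.0         # solves y(P_BAR) + A = 0 for the demand below


def F(z):
    return (z - A) * f


def r(z):
    """Hazard rate f / (1 - F); equals 1 / (B - z) for the uniform law."""
    return f / (1.0 - F(z))


def e_minus(z):
    """Expected shortage E[(eps - z)^+] for the uniform law."""
    return (B - z) ** 2 * f / 2.0


def kappa(z):
    return -e_minus(z) - F(z) / (2.0 * r(z))


def y(p):
    return 100.0 - 2.0 * p   # hypothetical linear demand


zs = [A + k * (B - A) / 200 for k in range(200)]   # grid on [A, B); r is unbounded at B
ks = [kappa(z) for z in zs]
worst = min(y(P_BAR * i / 50) + kv for i in range(51) for kv in ks)
print(kappa(A), min(ks), worst)
```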

Appendix D: Proof of Lemma 2

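Knowing that \(z_{j}=s_{j}/y_{j}(p_{j})\ge 0\), one sees that \(\frac{-(p_{j}+\delta_{j})y_{j}^{\prime}(p_{j})g_{j}(z_{j})}{y_{j}(p_{j})}\ge\frac{1}{2}\) is equivalent to \(N_{j}(s_{j},p_{j})\le 0\), where \(N_{j}(s_{j},p_{j})=\frac{y_{j}(p_{j})}{g_{j}(z_{j})}+2(p_{j}+\delta_{j})y_{j}^{\prime}(p_{j})\). It is easy to check that
\[\frac{\partial^{2}N_{j}}{\partial s_{j}^{2}}=\frac{2(g_{j}^{\prime}(z_{j}))^{2}-g_{j}(z_{j})g_{j}^{\prime\prime}(z_{j})}{y_{j}(p_{j})g_{j}(z_{j})^{3}},\qquad \frac{\partial^{2}N_{j}}{\partial s_{j}\partial p_{j}}=-z_{j}y_{j}^{\prime}(p_{j})\frac{\partial^{2}N_{j}}{\partial s_{j}^{2}},\]
\[\frac{\partial^{2}N_{j}}{\partial p_{j}^{2}}=z_{j}^{2}(y_{j}^{\prime}(p_{j}))^{2}\frac{\partial^{2}N_{j}}{\partial s_{j}^{2}}+\left(\frac{1}{g_{j}(z_{j})}+\frac{z_{j}g_{j}^{\prime}(z_{j})}{g_{j}(z_{j})^{2}}+4\right)y_{j}^{\prime\prime}(p_{j})+2(p_{j}+\delta_{j})y_{j}^{\prime\prime\prime}(p_{j}).\]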

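Note that \(\frac{\partial^{2}N_{j}}{\partial s_{j}^{2}}\ge 0\) if \(2(g_{j}^{\prime}(z_{j}))^{2}-g_{j}(z_{j})g_{j}^{\prime\prime}(z_{j})\ge 0\). The determinant of the Hessian of \(N_{j}\) is given by
\[\Theta_{j}=\frac{2(g_{j}^{\prime})^{2}-g_{j}g_{j}^{\prime\prime}}{y_{j}g_{j}^{3}}\left[\left(\frac{1}{g_{j}}+\frac{z_{j}g_{j}^{\prime}}{g_{j}^{2}}\right)y_{j}^{\prime\prime}+2\left(2y_{j}^{\prime\prime}+(p_{j}+\delta_{j})y_{j}^{\prime\prime\prime}\right)\right],\]
where the argument \(z_{j}\) of \(g_{j}\) and the argument \(p_{j}\) of \(y_{j}\) are omitted.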

From Assumptions 1 and 2b, \(2(g_{j}^{\prime}(z_{j}))^{2}-g_{j}(z_{j})g_{j}^{\prime\prime}(z_{j})\ge 0\), \(g_{j}^{\prime}(z_{j})\ge 0\), \(2y_{j}^{\prime\prime}(p_{j})+p_{j}y_{j}^{\prime\prime\prime}(p_{j})\ge 0\) and \(y_{j}^{\prime\prime}(p_{j})\ge 0\). Since also \(z_{j}\ge 0\), \(\Theta_{j}\) is nonnegative whenever \(2y_{j}^{\prime\prime}(p_{j})+(p_{j}+\delta_{j})y_{j}^{\prime\prime\prime}(p_{j})\ge 0\). If \(y_{j}^{\prime\prime\prime}(p_{j})\ge 0\), this inequality holds because \(y_{j}^{\prime\prime}(p_{j})\ge 0\) and \(p_{j}+\delta_{j}\ge p_{j}-\lambda_{j}^{+}\ge 0\). If \(y_{j}^{\prime\prime\prime}(p_{j})\le 0\), it holds because \(2y_{j}^{\prime\prime}(p_{j})+p_{j}y_{j}^{\prime\prime\prime}(p_{j})\ge 0\) by Assumption 1, and \(\delta_{j}y_{j}^{\prime\prime\prime}(p_{j})\ge 0\) since \(\delta_{j}\le 0\) by definition. Hence \(\Theta_{j}\ge 0\), implying that the function \(N_{j}\) is convex. We can then conclude that the set \({\Gamma}^{2}_{j}\) is convex.
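The multiplicative counterpart can be checked in the same way. The sketch below assumes \(z_j=s_j/y_j(p_j)\) and uses the hypothetical primitives \(g_j(z)=e^{z}\), \(y_j(p)=e^{-p}\) and \(\delta_j=-0.5\), which are not taken from the paper. It compares the determinant of a finite-difference Hessian of \(N_j\) with the closed form obtained by differentiating \(N_j\) twice, written out in the code.

```python
import math

DELTA = -0.5  # hypothetical delta_j <= 0

# Hypothetical primitives with easy derivatives.
def g(z): return math.exp(z)
def dg(z): return math.exp(z)
def d2g(z): return math.exp(z)
def y(p): return math.exp(-p)
def dy(p): return -math.exp(-p)
def d2y(p): return math.exp(-p)
def d3y(p): return -math.exp(-p)


def N(s, p):
    """N_j(s, p) = y(p)/g(z) + 2(p + delta) y'(p) with the multiplicative z = s / y(p)."""
    z = s / y(p)
    return y(p) / g(z) + 2.0 * (p + DELTA) * dy(p)


def theta_closed_form(s, p):
    """Hessian determinant of N_j from the analytic second derivatives."""
    z = s / y(p)
    n_ss = (2.0 * dg(z) ** 2 - g(z) * d2g(z)) / (y(p) * g(z) ** 3)
    bracket = ((1.0 / g(z) + z * dg(z) / g(z) ** 2) * d2y(p)
               + 2.0 * (2.0 * d2y(p) + (p + DELTA) * d3y(p)))
    return n_ss * bracket


def theta_finite_difference(s, p, h=1e-4):
    """Same determinant from central finite differences of N_j."""
    n_ss = (N(s + h, p) - 2.0 * N(s, p) + N(s - h, p)) / h ** 2
    n_pp = (N(s, p + h) - 2.0 * N(s, p) + N(s, p - h)) / h ** 2
    n_sp = (N(s + h, p + h) - N(s + h, p - h)
            - N(s - h, p + h) + N(s - h, p - h)) / (4.0 * h ** 2)
    return n_ss * n_pp - n_sp ** 2


for s0, p0 in [(0.2, 1.0), (0.5, 0.8)]:
    print(s0, p0, theta_closed_form(s0, p0), theta_finite_difference(s0, p0))
```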

Appendix E: Proof of Theorem 2

As in the additive case, optimization problem (8) can be reformulated as the maximization of \({\Pi}_{j}^{1}(s_{j},p_{j})+{\Pi}_{j}^{2}(\tilde{Q}_{j})\), where \(\tilde{Q}_{j}=(q_{ij})_{i=1}^{I-1}\), \({\Pi}_{j}^{1}(s_{j},p_{j})=p_{j}y_{j}(p_{j})(1-e_{j}^{-}(z_{j}))+y_{j}(p_{j})\left(\lambda_{j}^{+}e_{j}^{+}(z_{j})-\lambda_{j}^{-}e_{j}^{-}(z_{j})\right)-c_{j}s_{j}-p_{Ij}^{*}s_{j}\) and \({\Pi}_{j}^{2}(\tilde{Q}_{j})=-\sum_{i=1}^{I-1}(p_{ij}^{*}-p_{Ij}^{*})q_{ij}\).

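Clearly, the function \({\Pi}_{j}^{2}(\tilde{Q}_{j})\) is concave, since it is linear. We need to prove that \({\Pi}_{j}^{1}(s_{j},p_{j})\) is concave. The first derivatives of \({\Pi}_{j}^{1}\) with respect to \(s_{j}\) and \(p_{j}\) are
\[\frac{\partial{\Pi}_{j}^{1}}{\partial s_{j}}=(p_{j}+\lambda_{j}^{-})(1-F_{j}(z_{j}))+\lambda_{j}^{+}F_{j}(z_{j})-c_{j}-p_{Ij}^{*},\]
\[\frac{\partial{\Pi}_{j}^{1}}{\partial p_{j}}=y_{j}(p_{j})\left[\int_{A_{j}}^{z_{j}}xf_{j}(x)\,dx+z_{j}(1-F_{j}(z_{j}))\right]+y_{j}^{\prime}(p_{j})\left[(p_{j}+\lambda_{j}^{-}-\lambda_{j}^{+})\int_{A_{j}}^{z_{j}}xf_{j}(x)\,dx-\lambda_{j}^{-}\right].\]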

Straightforward computations show that the Hessian matrix \(H_{j}\) associated with \({\Pi}_{j}^{1}(s_{j},p_{j})\) is
\[H_{j}=\begin{pmatrix}H_{s_{j}s_{j}}&H_{s_{j}p_{j}}\\H_{p_{j}s_{j}}&H_{p_{j}p_{j}}\end{pmatrix},\]

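with \(H_{s_{j}s_{j}}=-\frac{1}{y_{j}(p_{j})}(p_{j}+\lambda_{j}^{-}-\lambda_{j}^{+})f_{j}(z_{j})\), \(H_{p_{j}s_{j}}=H_{s_{j}p_{j}}=\frac{z_{j}}{y_{j}(p_{j})}y_{j}^{\prime}(p_{j})(p_{j}+\lambda_{j}^{-}-\lambda_{j}^{+})f_{j}(z_{j})+1-F_{j}(z_{j})\) and
\[H_{p_{j}p_{j}}=-\frac{z_{j}^{2}(y_{j}^{\prime}(p_{j}))^{2}}{y_{j}(p_{j})}(p_{j}+\lambda_{j}^{-}-\lambda_{j}^{+})f_{j}(z_{j})+2y_{j}^{\prime}(p_{j})\int_{A_{j}}^{z_{j}}xf_{j}(x)\,dx+y_{j}^{\prime\prime}(p_{j})\left[(p_{j}+\lambda_{j}^{-}-\lambda_{j}^{+})\int_{A_{j}}^{z_{j}}xf_{j}(x)\,dx-\lambda_{j}^{-}\right].\]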

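We will show that the matrix \(-H_{j}\) is positive semidefinite. Clearly, \(-H_{s_{j}s_{j}}=\frac{1}{y_{j}(p_{j})}(p_{j}+\lambda_{j}^{-}-\lambda_{j}^{+})f_{j}(z_{j})\) is positive. If \(\det(-H_{j})\ge 0\), then \(-H_{p_{j}p_{j}}\ge\frac{(H_{p_{j}s_{j}})^{2}}{-H_{s_{j}s_{j}}}\ge 0\). The determinant \(\det(-H_{j})\) is calculated as:
\[\begin{aligned}\det(-H_{j})={}&-\frac{(p_{j}+\lambda_{j}^{-}-\lambda_{j}^{+})f_{j}(z_{j})}{y_{j}(p_{j})}\left[2y_{j}^{\prime}(p_{j})+p_{j}y_{j}^{\prime\prime}(p_{j})\right]\int_{A_{j}}^{z_{j}}xf_{j}(x)\,dx\\&+\frac{(p_{j}+\lambda_{j}^{-}-\lambda_{j}^{+})f_{j}(z_{j})}{y_{j}(p_{j})}\,y_{j}^{\prime\prime}(p_{j})\left[\lambda_{j}^{-}\left(1-\int_{A_{j}}^{z_{j}}xf_{j}(x)\,dx\right)+\lambda_{j}^{+}\int_{A_{j}}^{z_{j}}xf_{j}(x)\,dx\right]\\&-\left[\frac{2z_{j}(p_{j}+\lambda_{j}^{-}-\lambda_{j}^{+})f_{j}(z_{j})}{y_{j}(p_{j})}\,y_{j}^{\prime}(p_{j})(1-F_{j}(z_{j}))+(1-F_{j}(z_{j}))^{2}\right].\end{aligned}\]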

The first term in the above is nonnegative because \(2y_{j}^{\prime}(p_{j})+p_{j}y_{j}^{\prime\prime}(p_{j})\le 0\). The second term is also nonnegative because \(y_{j}^{\prime\prime}(p_{j})\ge 0\). The last term is nonnegative since it can be written as \(2(1-F_{j}(z_{j}))^{2}\left[\eta^{2}_{j}(s_{j},p_{j})-1/2\right]+2(1-F_{j}(z_{j}))^{2}\max(\lambda_{j}^{-}-\lambda_{j}^{+},0)\frac{-y_{j}^{\prime}(p_{j})g_{j}(z_{j})}{y_{j}(p_{j})}\) and \(\eta^{2}_{j}(s_{j},p_{j})\ge 1/2\) on \({\Gamma}^{2}_{j}\). Hence \(\det(-H_{j})\ge 0\). Together with \(-H_{s_{j}s_{j}}>0\), this implies that the matrix \(-H_{j}\) is positive semidefinite. Therefore, \({\Pi}_{j}^{1}\) is concave on the set \({\Gamma}^{2}_{j}\).
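The Hessian in the multiplicative case can be checked numerically in the same spirit as the additive one. The sketch below rests on several assumptions. It takes \(z_j=s_j/y_j(p_j)\), uniform noise on \([A_j,B_j]=[0.5,1.5]\) (mean one), \(e_j^-(z)=E[(\epsilon-z)^+]\) and \(e_j^+(z)=E[(z-\epsilon)^+]\). Demand is a hypothetical linear \(y_j(p)=100-2p\), so \(y_j''=0\), and the remaining parameters are made up. The expression coded for \(H_{p_jp_j}\) comes from differentiating \(\partial\Pi^1_j/\partial p_j\) under these assumptions: \(-\frac{z_j^2(y_j')^2}{y_j}(p_j+\lambda_j^--\lambda_j^+)f_j+2y_j'\int_{A_j}^{z_j}xf_j(x)\,dx+y_j''\left[(p_j+\lambda_j^--\lambda_j^+)\int_{A_j}^{z_j}xf_j(x)\,dx-\lambda_j^-\right]\). The last term vanishes here.

```python
# Hypothetical instance (illustrative numbers only).
A, B = 0.5, 1.5               # uniform noise with mean one
LAM_MINUS, LAM_PLUS = 5.0, 1.0
C, P_STAR = 3.0, 10.0
Y0, SLOPE = 100.0, 2.0        # y(p) = Y0 - SLOPE * p, so y'' = 0
DY = -SLOPE
f = 1.0 / (B - A)

def F(z): return (z - A) * f
def e_minus(z): return (B - z) ** 2 * f / 2     # E[(eps - z)^+]
def e_plus(z): return (z - A) ** 2 * f / 2      # E[(z - eps)^+]
def int_xf(z): return (z * z - A * A) * f / 2   # integral of x f(x) over [A, z]
def y(p): return Y0 - SLOPE * p


def profit(s, p):
    """Pi^1_j(s, p) in the multiplicative case, valid while z = s / y(p) stays in [A, B]."""
    z = s / y(p)
    return (p * y(p) * (1 - e_minus(z))
            + y(p) * (LAM_PLUS * e_plus(z) - LAM_MINUS * e_minus(z))
            - C * s - P_STAR * s)


def hessian_closed_form(s, p):
    z = s / y(p)
    k = p + LAM_MINUS - LAM_PLUS
    hss = -k * f / y(p)
    hsp = z / y(p) * DY * k * f + 1 - F(z)
    hpp = -(z * DY) ** 2 / y(p) * k * f + 2 * DY * int_xf(z)   # y'' term is zero here
    return hss, hsp, hpp


def hessian_fd(s, p, h=1e-3):
    g = profit
    hss = (g(s + h, p) - 2 * g(s, p) + g(s - h, p)) / h ** 2
    hpp = (g(s, p + h) - 2 * g(s, p) + g(s, p - h)) / h ** 2
    hsp = (g(s + h, p + h) - g(s + h, p - h) - g(s - h, p + h) + g(s - h, p - h)) / (4 * h ** 2)
    return hss, hsp, hpp


p0, s0 = 20.0, 66.0           # y(20) = 60, so z = 1.1
hss, hsp, hpp = hessian_closed_form(s0, p0)
det_neg_h = hss * hpp - hsp ** 2   # for a 2x2 matrix, det(-H) = det(H)
print(hessian_closed_form(s0, p0), hessian_fd(s0, p0), det_neg_h)
```

With these numbers the closed form gives \((H_{s_js_j},H_{s_jp_j},H_{p_jp_j})=(-0.4,-0.48,-3.856)\) and \(\det(-H_j)=1.312\).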

Appendix F: Proof of Proposition 2

We need to show that the optimal vector \((s_{j}^{*},p_{j}^{*})\) belongs to the set \({{\Gamma }^{2}_{j}}\). In other words, we need to show that \({\eta _{j}^{2}}(s_{j},p_{j})\ge 1/2\) when \(\frac {{\partial {\Pi }_{j}^{1}}}{\partial p_{j}}=0\).

Since \(y_{j}^{\prime}(p_{j})\le 0\) and \(\int_{A_{j}}^{z_{j}}xf_{j}(x)\,dx+z_{j}(1-F_{j}(z_{j}))\ge 0\), the first-order condition \(\frac{\partial{\Pi}_{j}^{1}}{\partial p_{j}}=0\) implies that \((p_{j}+\lambda_{j}^{-}-\lambda_{j}^{+})\int_{A_{j}}^{z_{j}}xf_{j}(x)\,dx-\lambda_{j}^{-}>0\).

Combining this with the first-order condition, one checks that \(\eta^{2}_{j}(s_{j},p_{j})\ge 1/2\) holds if \(\psi_{j}(z_{j})\ge 0\), where \(\psi_{j}(z_{j})=\int_{A_{j}}^{z_{j}}xf_{j}(x)\,dx+z_{j}(1-F_{j}(z_{j}))-\frac{\int_{A_{j}}^{z_{j}}xf_{j}(x)\,dx}{2g_{j}(z_{j})}\). Note that \(\psi_{j}(A_{j})=A_{j}\) and \(\psi_{j}^{\prime}(z_{j})=\frac{1-F_{j}(z_{j})}{2}+\frac{g_{j}^{\prime}(z_{j})\int_{A_{j}}^{z_{j}}xf_{j}(x)\,dx}{2g_{j}(z_{j})^{2}}\ge 0\) because \(g_{j}^{\prime}(z_{j})\ge 0\). This yields \(\psi_{j}(z_{j})\ge A_{j}\) for all \(z_{j}\in[A_{j},B_{j}]\). Then \(\psi_{j}(z_{j})\ge 0\). Therefore, \(\eta^{2}_{j}(s_{j},p_{j})\ge 1/2\) when \(\frac{\partial{\Pi}_{j}^{1}}{\partial p_{j}}=0\).
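For a concrete feel for \(\psi_j\), take uniform noise on \([A_j,B_j]=[0.5,1.5]\), an illustrative mean-one choice. Then \(f_j=1\) and \(\int_{A_j}^{z}xf_j(x)\,dx=(z^2-A_j^2)/2\). The sketch also assumes that \(g_j\) is the generalized failure rate \(g_j(z)=zf_j(z)/(1-F_j(z))\), which is consistent with the expression for \(\psi_j'\) given in the proof. Under these assumptions it checks on a grid that \(\psi_j(A_j)=A_j\), that \(\psi_j\) is nondecreasing, and that \(\psi_j\ge 0\).

```python
A, B = 0.5, 1.5      # hypothetical uniform noise with mean one
f = 1.0 / (B - A)


def F(z):
    return (z - A) * f


def int_xf(z):
    """Integral of x f(x) over [A, z] for the uniform law."""
    return (z * z - A * A) * f / 2.0


def g(z):
    """Assumed generalized failure rate z f(z) / (1 - F(z))."""
    return z * f / (1.0 - F(z))


def psi(z):
    return int_xf(z) + z * (1.0 - F(z)) - int_xf(z) / (2.0 * g(z))


zs = [A + k * (B - A) / 200 for k in range(200)]   # grid on [A, B); g is unbounded at B
vals = [psi(z) for z in zs]
print(psi(A), min(vals), max(vals))
```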

Appendix G: Proof of Theorem 3

As in the retailer case, without loss of generality, we assume that \(p_{mI}^{*}=\max_{i}\{p_{mi}^{*}\}\). Optimization problem (11) can then be reformulated as the maximization of \({\Pi}_{m}^{1}(q_{m},p_{m})+{\Pi}_{m}^{2}(\tilde{Q}_{m})\), where \(\tilde{Q}_{m}=(q_{mi})_{i=1}^{I-1}\), \({\Pi}_{m}^{1}(q_{m},p_{m})=p_{mI}^{*}q_{m}+s^{rec}y_{m}(p_{m})-\left(c_{m}^{u}+c^{u}\bar{\chi}_{m}\right)q_{m}-p_{m}y_{m}(p_{m})+\lambda_{m}^{+}e_{m}^{+}(z_{m})-\lambda_{m}^{-}e_{m}^{-}(z_{m})\) and \({\Pi}_{m}^{2}(\tilde{Q}_{m})=\sum_{i=1}^{I-1}(p_{mi}^{*}-p_{mI}^{*})q_{mi}\).

Clearly, the function \({\Pi}_{m}^{2}(\tilde{Q}_{m})\) is concave, since it is linear. We need to show that \({\Pi}_{m}^{1}(q_{m},p_{m})\) is concave. The first derivatives of \({\Pi}_{m}^{1}\) with respect to \(q_{m}\) and \(p_{m}\) follow by direct differentiation.

Straightforward computations show that the Hessian matrix \(H_{m}\) associated with \({\Pi}_{m}^{1}(q_{m},p_{m})\) is
\[H_{m}=\begin{pmatrix}H_{q_{m}q_{m}}&H_{q_{m}p_{m}}\\H_{p_{m}q_{m}}&H_{p_{m}p_{m}}\end{pmatrix},\]

with \(H_{q_{m}q_{m}}=-\frac{1}{\chi_{m}^{2}}(\lambda_{m}^{-}-\lambda_{m}^{+})f_{m}(z_{m})\), \(H_{p_{m}q_{m}}=H_{q_{m}p_{m}}=\frac{y_{m}^{\prime}(p_{m})}{\chi_{m}}(\lambda_{m}^{-}-\lambda_{m}^{+})f_{m}(z_{m})\) and \(H_{p_{m}p_{m}}=-(y_{m}^{\prime}(p_{m}))^{2}(\lambda_{m}^{-}-\lambda_{m}^{+})f_{m}(z_{m})-2y_{m}^{\prime}(p_{m})+y_{m}^{\prime\prime}(p_{m})\left[(\lambda_{m}^{-}-\lambda_{m}^{+})F_{m}(z_{m})+s^{rec}-p_{m}+\lambda_{m}^{+}\right]\).

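We will show that the matrix \(-H_{m}\) is positive semidefinite. Clearly, \(-H_{q_{m}q_{m}}=\frac{1}{\chi_{m}^{2}}(\lambda_{m}^{-}-\lambda_{m}^{+})f_{m}(z_{m})>0\). If \(\det(-H_{m})\ge 0\), then \(-H_{p_{m}p_{m}}\ge\frac{(H_{p_{m}q_{m}})^{2}}{-H_{q_{m}q_{m}}}\ge 0\). A direct computation from the entries above gives
\[\det(-H_{m})=\frac{(\lambda_{m}^{-}-\lambda_{m}^{+})f_{m}(z_{m})}{\chi_{m}^{2}}\left\{\left[2y_{m}^{\prime}(p_{m})+p_{m}y_{m}^{\prime\prime}(p_{m})\right]-y_{m}^{\prime\prime}(p_{m})\left[(\lambda_{m}^{-}-\lambda_{m}^{+})F_{m}(z_{m})+s^{rec}+\lambda_{m}^{+}\right]\right\}.\]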

The first part of the last term is nonnegative because \(2y_{m}^{\prime}(p_{m})+p_{m}y_{m}^{\prime\prime}(p_{m})\ge 0\). The second part is also nonnegative because \(y_{m}^{\prime\prime}(p_{m})\le 0\). Hence \(\det(-H_{m})\ge 0\). Together with \(-H_{q_{m}q_{m}}>0\), this implies that the matrix \(-H_{m}\) is positive semidefinite. Therefore, \({\Pi}_{m}^{1}\) is concave.
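The factorization of \(\det(-H_m)\) follows from the Hessian entries of Theorem 3 by direct algebra: \(\det(-H_m)=\frac{(\lambda_m^--\lambda_m^+)f_m}{\chi_m^2}\{[2y_m'+p_my_m'']-y_m''[(\lambda_m^--\lambda_m^+)F_m+s^{rec}+\lambda_m^+]\}\). The sketch below checks this identity on randomly drawn, purely hypothetical parameter values with \(\lambda_m^->\lambda_m^+\), \(y_m''\le 0\) and \(2y_m'+p_my_m''\ge 0\). It also confirms that the determinant is then nonnegative.

```python
import random


def hessian_entries(dy, d2y, f, F, chi, lam_minus, lam_plus, s_rec, p):
    """Entries of H_m for the additive recovery-center problem, as stated in the proof."""
    b = lam_minus - lam_plus
    hqq = -b * f / chi ** 2
    hqp = dy / chi * b * f
    hpp = -dy ** 2 * b * f - 2 * dy + d2y * (b * F + s_rec - p + lam_plus)
    return hqq, hqp, hpp


def det_factored(dy, d2y, f, F, chi, lam_minus, lam_plus, s_rec, p):
    """(b f / chi^2) * {[2y' + p y''] - y'' [b F + s_rec + lam_plus]} with b = lam_minus - lam_plus."""
    b = lam_minus - lam_plus
    return b * f / chi ** 2 * ((2 * dy + p * d2y) - d2y * (b * F + s_rec + lam_plus))


rng = random.Random(0)
max_gap, min_det = 0.0, float("inf")
for _ in range(1000):
    p = rng.uniform(0.0, 10.0)
    d2y = -rng.uniform(0.0, 1.0)                 # y'' <= 0
    dy = rng.uniform(0.0, 5.0) - p * d2y / 2     # makes 2y' + p y'' >= 0
    f, F = rng.uniform(0.01, 1.0), rng.uniform(0.0, 1.0)
    chi = rng.uniform(0.1, 2.0)
    lam_plus = rng.uniform(0.0, 5.0)
    lam_minus = lam_plus + rng.uniform(0.01, 5.0)   # lambda^- > lambda^+
    s_rec = rng.uniform(0.0, 5.0)
    args = (dy, d2y, f, F, chi, lam_minus, lam_plus, s_rec, p)
    hqq, hqp, hpp = hessian_entries(*args)
    det_direct = hqq * hpp - hqp ** 2            # for a 2x2 matrix, det(-H) = det(H)
    det_f = det_factored(*args)
    max_gap = max(max_gap, abs(det_direct - det_f) / (1.0 + abs(det_f)))
    min_det = min(min_det, det_f)
print(max_gap, min_det)
```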

Appendix H: Proof of Theorem 4

Similar to the additive case, optimization problem (13) can be reformulated as the maximization of \({\Pi}_{m}^{1}(q_{m},p_{m})+{\Pi}_{m}^{2}(\tilde{Q}_{m})\), where \(\tilde{Q}_{m}=(q_{mi})_{i=1}^{I-1}\), \({\Pi}_{m}^{1}(q_{m},p_{m})=p_{mI}^{*}q_{m}+s^{rec}y_{m}(p_{m})-\left(c_{m}^{u}+c^{u}\bar{\chi}_{m}\right)q_{m}-p_{m}y_{m}(p_{m})+y_{m}(p_{m})\left(\lambda_{m}^{+}e_{m}^{+}(z_{m})-\lambda_{m}^{-}e_{m}^{-}(z_{m})\right)\) and \({\Pi}_{m}^{2}(\tilde{Q}_{m})=\sum_{i=1}^{I-1}(p_{mi}^{*}-p_{mI}^{*})q_{mi}\).

Clearly, the function \({\Pi}_{m}^{2}(\tilde{Q}_{m})\) is concave, since it is linear. We need to show that \({\Pi}_{m}^{1}(q_{m},p_{m})\) is concave. The first derivatives of \({\Pi}_{m}^{1}\) with respect to \(q_{m}\) and \(p_{m}\) follow by direct differentiation.

Straightforward computations show that the Hessian matrix \(H_{m}\) associated with \({\Pi}_{m}^{1}(q_{m},p_{m})\) is
\[H_{m}=\begin{pmatrix}H_{q_{m}q_{m}}&H_{q_{m}p_{m}}\\H_{p_{m}q_{m}}&H_{p_{m}p_{m}}\end{pmatrix},\]

with \(H_{q_{m}q_{m}}=-\frac{1}{\chi_{m}^{2}y_{m}(p_{m})}(\lambda_{m}^{-}-\lambda_{m}^{+})f_{m}(z_{m})\), \(H_{q_{m}p_{m}}=H_{p_{m}q_{m}}=\frac{y_{m}^{\prime}(p_{m})z_{m}}{\chi_{m}y_{m}(p_{m})}(\lambda_{m}^{-}-\lambda_{m}^{+})f_{m}(z_{m})\) and \(H_{p_{m}p_{m}}=-\frac{z_{m}^{2}(y_{m}^{\prime}(p_{m}))^{2}}{y_{m}(p_{m})}(\lambda_{m}^{-}-\lambda_{m}^{+})f_{m}(z_{m})-2y_{m}^{\prime}(p_{m})+y_{m}^{\prime\prime}(p_{m})\left[s^{rec}-p_{m}+\lambda_{m}^{-}-(\lambda_{m}^{-}-\lambda_{m}^{+})\left((1+e_{m}^{-}(z_{m}))-z_{m}F_{m}(z_{m})\right)\right]\).

We will show that the matrix \(-H_{m}\) is positive semidefinite. Clearly, \(-H_{q_{m}q_{m}}=\frac{1}{\chi_{m}^{2}y_{m}(p_{m})}(\lambda_{m}^{-}-\lambda_{m}^{+})f_{m}(z_{m})>0\). If \(\det(-H_{m})\ge 0\), then \(-H_{p_{m}p_{m}}\ge\frac{(H_{p_{m}q_{m}})^{2}}{-H_{q_{m}q_{m}}}\ge 0\).

The first part of the last term is nonnegative because \(2y_{m}^{\prime}(p_{m})+p_{m}y_{m}^{\prime\prime}(p_{m})\ge 0\). The second part is also nonnegative because \(y_{m}^{\prime\prime}(p_{m})\le 0\). Hence \(\det(-H_{m})\ge 0\). Together with \(-H_{q_{m}q_{m}}>0\), this implies that the matrix \(-H_{m}\) is positive semidefinite. Therefore, \({\Pi}_{m}^{1}\) is concave.

Appendix I: Proof of Theorem 9

We will only consider the case with additive demand and returns; the proof in the multiplicative case is similar. After some algebraic simplification, the inequality \(\langle\mathcal{F}(X^{\prime})-\mathcal{F}(X^{\prime\prime}),X^{\prime}-X^{\prime\prime}\rangle\ge 0\) is equivalent to (I)+(II)+(III)+(IV)+(V)+(VI)+(VII) ≥ 0, where (I) through (VII) denote the groups of terms obtained from this simplification.

By the convexity of the cost functions \(f^{r}_{n}\), \(f^{r}_{i}\), \(f^{u}_{i}\), \(c_{ni}\), \(c_{mi}\) and \(c_{ij}\), one has (I) ≥ 0, (II) ≥ 0 and (III) ≥ 0. The inequality (IV)+(V) ≥ 0 follows from Theorem 1, since the function \(\pi_{j}\) is concave for each \(j=1,\ldots,J\). Similarly, (VI)+(VII) ≥ 0 under the assumptions of Theorem 3. Therefore \(\mathcal{F}(X)\) is monotone.
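The convexity argument for terms (I) through (III) rests on the fact that the gradient of a differentiable convex function is a monotone mapping. The sketch below illustrates this with a hypothetical separable convex cost \(c(x)=\sum_k(\alpha_kx_k^2+\beta_kx_k^4)\), \(\alpha_k,\beta_k\ge 0\); this is not one of the paper's cost functions. It checks \(\langle\nabla c(x')-\nabla c(x''),x'-x''\rangle\ge 0\) on random pairs of points.

```python
import random


def grad_cost(x, alpha, beta):
    """Gradient of the hypothetical convex cost sum_k alpha_k x_k^2 + beta_k x_k^4."""
    return [2 * a * xi + 4 * b * xi ** 3 for xi, a, b in zip(x, alpha, beta)]


def monotonicity_gap(x1, x2, alpha, beta):
    """<grad c(x1) - grad c(x2), x1 - x2>, which is nonnegative when c is convex."""
    g1, g2 = grad_cost(x1, alpha, beta), grad_cost(x2, alpha, beta)
    return sum((u - v) * (a - b) for u, v, a, b in zip(g1, g2, x1, x2))


rng = random.Random(1)
n = 5
alpha = [rng.uniform(0.0, 2.0) for _ in range(n)]
beta = [rng.uniform(0.0, 1.0) for _ in range(n)]
gaps = []
for _ in range(1000):
    x1 = [rng.uniform(-5.0, 5.0) for _ in range(n)]
    x2 = [rng.uniform(-5.0, 5.0) for _ in range(n)]
    gaps.append(monotonicity_gap(x1, x2, alpha, beta))
print(min(gaps))
```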

Cite this article

Hamdouch, Y., Qiang, Q.P. & Ghoudi, K. A Closed-Loop Supply Chain Equilibrium Model with Random and Price-Sensitive Demand and Return. Netw Spat Econ 17, 459–503 (2017). https://doi.org/10.1007/s11067-016-9333-y
