Abstract

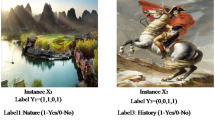

A multi-attribute network is network data with multiple attribute views and a relational view. Semi-supervised collective classification has been investigated extensively, but little attention has been paid to this kind of network data. In this paper, we study and solve the semi-supervised learning problem for multi-attribute networks. Two important challenges arise: (1) how to extract effective information from the rich multi-attribute and relational information; (2) how to make use of the unlabeled data in the network. We propose a new generative model with network regularization, called MARL, which addresses both challenges. In this approach, a generative model based on probabilistic latent semantic analysis (PLSA) is developed to leverage the attribute information, and a network regularizer is incorporated to smooth the label probabilities using relational information and unlabeled data. Comprehensive experiments on various data sets demonstrate the effectiveness of MARL, and the results show that our approach outperforms existing collective classification methods and multi-view classification methods in terms of accuracy.
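The abstract says that MARL's network regularizer smooths label probabilities using relational information. The exact regularizer is defined in the body of the paper and is not reproduced here. The sketch below is an assumption: it uses the common graph-Laplacian smoothness penalty, where row i of a hypothetical matrix `P` holds node i's class probabilities and `W` is a symmetric adjacency matrix. The penalty is computed in pairwise form and again in the equivalent trace form. The function names are illustrative only.

```python
import numpy as np

def network_regularizer(P: np.ndarray, W: np.ndarray) -> float:
    """Pairwise form: 0.5 * sum_ij W_ij * ||P_i - P_j||^2 (assumed penalty, not MARL's exact term).

    P: (n_nodes, n_classes) label-probability matrix (hypothetical layout).
    W: (n_nodes, n_nodes) symmetric, non-negative adjacency matrix.
    """
    diff = P[:, None, :] - P[None, :, :]          # diff[i, j] = P_i - P_j, shape (n, n, c)
    return 0.5 * float(np.sum(W * np.sum(diff ** 2, axis=2)))

def network_regularizer_laplacian(P: np.ndarray, W: np.ndarray) -> float:
    """Equivalent trace form tr(P^T L P) with graph Laplacian L = D - W."""
    L = np.diag(W.sum(axis=1)) - W
    return float(np.trace(P.T @ L @ P))

# Path graph 0 - 1 - 2 with two classes.
W = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float)
P_smooth = np.array([[0.9, 0.1], [0.9, 0.1], [0.9, 0.1]])  # neighbours agree
P_rough = np.array([[0.9, 0.1], [0.1, 0.9], [0.9, 0.1]])   # neighbours disagree

print(network_regularizer(P_smooth, W), network_regularizer(P_rough, W))
print(network_regularizer_laplacian(P_rough, W))
```

Connected nodes with identical label distributions incur no penalty. Adding such a term to a likelihood therefore pulls linked nodes, labeled or not, toward agreement, and this is one way unlabeled nodes can influence the fit.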



Acknowledgments

This research was supported in part by NSFC under Grant Nos. 61572158, 61272538 and 61562027, Shenzhen Science and Technology Program under Grant No. JCYJ20140417172417128, Shenzhen Strategic Emerging Industries Program under Grant No. JCYJ20130329142551746 and Social Science Planning Project of Jiangxi Province under Grant No. 15XW12. Raymond Y.K. Lau’s work was supported by a grant from the Research Grants Council of the Hong Kong Special Administrative Region, China (Project: CityU11502115), and the Shenzhen Municipal Science and Technology R&D Funding–Basic Research Program (Project No. JCYJ20140419115614350).


Appendix


Definition 1

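\({\mathcal {U}}(\varTheta , {\varTheta }^{\prime })\) is an auxiliary function for \({\mathcal {O}}(\varTheta )\) if the following conditions are satisfied:

\[{\mathcal {U}}(\varTheta , {\varTheta }^{\prime }) \le {\mathcal {O}}(\varTheta ), \qquad {\mathcal {U}}(\varTheta , \varTheta ) = {\mathcal {O}}(\varTheta ).\]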

Lemma 1

If \({\mathcal {U}}\) is an auxiliary function for \({\mathcal {O}}\), then \({\mathcal {O}}\) is non-decreasing under the update

\[{\varTheta }^{r+1} = \arg \max _{\varTheta } {\mathcal {U}}(\varTheta , {\varTheta }^{r}).\]

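Proof

Because \({\varTheta }^{r+1}\) maximizes \({\mathcal {U}}(\cdot , {\varTheta }^{r})\), and \({\mathcal {U}}\) lower-bounds \({\mathcal {O}}\) while coinciding with it at \({\varTheta }^{r}\), we have

\[{\mathcal {O}}({\varTheta }^{r+1}) \ge {\mathcal {U}}({\varTheta }^{r+1}, {\varTheta }^{r}) \ge {\mathcal {U}}({\varTheta }^{r}, {\varTheta }^{r}) = {\mathcal {O}}({\varTheta }^{r}).\]

\(\square \)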
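Lemma 1 is the minorize-maximize (MM) principle. The sketch below is a self-contained illustration that is unrelated to MARL's actual objective. It maximizes the log-likelihood of a one-dimensional logistic model without an intercept. The auxiliary function is a quadratic lower bound built from the curvature bound |O''(θ)| ≤ Σx²/4. It satisfies both conditions of an auxiliary function, so each step can only raise the objective. The data and the names `objective`, `gradient` and `mm_step` are hypothetical.

```python
import numpy as np

def objective(theta: float, x: np.ndarray, y: np.ndarray) -> float:
    """Illustrative O(theta): logistic log-likelihood, sum(y*z - log(1 + e^z)) with z = theta*x."""
    z = theta * x
    return float(np.sum(y * z - np.logaddexp(0.0, z)))

def gradient(theta: float, x: np.ndarray, y: np.ndarray) -> float:
    """dO/dtheta = sum((y - sigmoid(theta*x)) * x)."""
    p = 1.0 / (1.0 + np.exp(-theta * x))
    return float(np.sum((y - p) * x))

def mm_step(theta_r: float, x: np.ndarray, y: np.ndarray) -> float:
    """Maximize U(theta, theta_r) = O(theta_r) + g*(theta - theta_r) - (c/2)*(theta - theta_r)^2.

    With c = sum(x^2)/4 >= |O''| everywhere, U <= O and U(theta_r, theta_r) = O(theta_r),
    and the maximizer of this quadratic in closed form is theta_r + g / c.
    """
    c = float(np.sum(x ** 2)) / 4.0
    return theta_r + gradient(theta_r, x, y) / c

x = np.array([-2.0, -1.0, 0.5, 1.0, 2.0, 3.0])
y = np.array([0.0, 1.0, 0.0, 1.0, 1.0, 1.0])
theta = 0.0
history = [objective(theta, x, y)]
for _ in range(20):
    theta = mm_step(theta, x, y)
    history.append(objective(theta, x, y))
print(theta, history[0], history[-1])
```

The recorded objective values form a non-decreasing sequence, which is exactly the chain of inequalities in the proof.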

Lemma 2

Function

is an auxiliary function for the objective function \({\mathcal {O}}(\varTheta )\) in Eq. (5), where

is the set of model parameters,

Proof

According to Jensen’s inequality, we have

It is also easy to verify that

Hence, the result follows. \(\square \)
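The displays in this proof did not survive extraction. For orientation only, the generic Jensen step for a latent-variable model is shown below. It rests on several assumptions: observed pairs \((d,w)\) with counts \(n(d,w)\), a latent variable \(z\), and the previous-iterate posterior \(q_{dwz} = P(z \mid d,w;\varTheta^{r})\). The MARL-specific factors of Eq. (5) are not reproduced. A regularizer that depends only on \(\varTheta\) would pass through both sides unchanged.

```latex
\begin{aligned}
\log \sum_{z} P(d,w,z;\varTheta)
  &= \log \sum_{z} q_{dwz}\,\frac{P(d,w,z;\varTheta)}{q_{dwz}} \\
  &\ge \sum_{z} q_{dwz}\,\log \frac{P(d,w,z;\varTheta)}{q_{dwz}},
  \qquad q_{dwz} = P(z \mid d,w;\varTheta^{r}).
\end{aligned}
```

Multiplying by \(n(d,w)\) and summing over all pairs gives \(\mathcal{U}(\varTheta,\varTheta^{r}) \le \mathcal{O}(\varTheta)\). At \(\varTheta = \varTheta^{r}\), the ratio \(P(d,w,z;\varTheta^{r})/q_{dwz} = P(d,w;\varTheta^{r})\) does not depend on \(z\). The bound is therefore tight there, which gives \(\mathcal{U}(\varTheta^{r},\varTheta^{r}) = \mathcal{O}(\varTheta^{r})\).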

Lemma 3

Maximizing

is equivalent to performing the maximization in the update of Eq. (16), where \({\mathcal {Q}}(\varTheta )\) is the expected complete-data log-likelihood in Eq. (7).

Proof

The second term does not depend on \(\varTheta \), so it can be treated as a constant. Thus, maximizing the expected complete-data log-likelihood \({\mathcal {Q}}(\varTheta )\) is equivalent to maximizing \({\mathcal {U}}(\varTheta , {\varTheta }^{r})\).

\(\square \)
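The split that Lemma 3 relies on can be written out under generic assumptions: observed pairs \((d,w)\), counts \(n(d,w)\), and posterior \(q_{dwz} = P(z \mid d,w;\varTheta^{r})\). These are not the exact MARL expressions. Any \(\varTheta\)-dependent regularizer would join the first term.

```latex
\mathcal{U}(\varTheta,\varTheta^{r})
  = \underbrace{\sum_{d,w} n(d,w) \sum_{z} q_{dwz} \log P(d,w,z;\varTheta)}_{\text{expected complete-data log-likelihood}}
  \;-\; \underbrace{\sum_{d,w} n(d,w) \sum_{z} q_{dwz} \log q_{dwz}}_{\text{independent of } \varTheta}
```

Only the first term varies with \(\varTheta\), so both maximizations have the same maximizer.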

Next, we give the proof of Theorem 1.

Proof

By Lemma 2, \({\mathcal {U}}(\varTheta , \varTheta ^r)\) is an auxiliary function for \({\mathcal {O}}(\varTheta )\). By Lemma 1, iteratively maximizing \({\mathcal {U}}(\varTheta , \varTheta ^r)\) therefore ensures that \({{\mathcal {O}}}(\varTheta )\) is non-decreasing. By Lemma 3, maximizing \({\mathcal {U}}(\varTheta , \varTheta ^r)\) is equivalent to maximizing \({{\mathcal {Q}}}(\varTheta )\). Since Eqs. (8) and (14) are the exact update rules for maximizing \({\mathcal {Q}}(\varTheta )\), \({\mathcal {O}}(\varTheta )\) is also non-decreasing under these two update rules. The result follows. \(\square \)
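Theorem 1 follows the standard EM monotonicity argument. The sketch below illustrates that argument under a strong simplifying assumption. It runs EM for plain PLSA with a single attribute view, no network regularizer and no label variables, so it is much simpler than MARL. At each iteration it records the log-likelihood of the current parameters. The function `plsa_em`, the count matrix and the topic count are hypothetical.

```python
import numpy as np

def plsa_em(n_dw: np.ndarray, n_topics: int, n_iter: int = 50, seed: int = 0):
    """Plain PLSA fitted by EM (not MARL). Returns P(z|d), P(w|z) and the log-likelihood trace.

    n_dw: (n_docs, n_words) non-negative count matrix; every document needs a positive total count.
    """
    rng = np.random.default_rng(seed)
    n_docs, n_words = n_dw.shape
    p_z_d = rng.random((n_docs, n_topics))
    p_z_d /= p_z_d.sum(axis=1, keepdims=True)        # row d holds P(z | d)
    p_w_z = rng.random((n_topics, n_words))
    p_w_z /= p_w_z.sum(axis=1, keepdims=True)        # row z holds P(w | z)
    trace = []
    for _ in range(n_iter):
        # E-step: joint[d, w, z] = P(z | d) P(w | z); normalizing over z gives P(z | d, w).
        joint = p_z_d[:, None, :] * p_w_z.T[None, :, :]
        p_w_d = joint.sum(axis=2)                                # P(w | d)
        trace.append(float(np.sum(n_dw * np.log(p_w_d))))        # log-likelihood of current parameters
        post = joint / p_w_d[:, :, None]
        # M-step: re-estimate both distributions from expected counts n(d, w) P(z | d, w).
        expected = n_dw[:, :, None] * post
        p_w_z = expected.sum(axis=0).T
        p_w_z /= p_w_z.sum(axis=1, keepdims=True)
        p_z_d = expected.sum(axis=1)
        p_z_d /= p_z_d.sum(axis=1, keepdims=True)
    return p_z_d, p_w_z, trace

counts = np.array([[4, 2, 0, 0],
                   [3, 3, 1, 0],
                   [0, 1, 3, 4],
                   [0, 0, 2, 5]], dtype=float)
p_z_d, p_w_z, trace = plsa_em(counts, n_topics=2, n_iter=30)
print(trace[0], trace[-1])
```

The trace is non-decreasing up to floating-point rounding, which is the behavior Theorem 1 establishes for MARL's own update rules.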


Cite this article

Wang, S., Ye, Y., Li, X. et al. Semi-supervised Collective Classification in Multi-attribute Network Data. Neural Process Lett 45, 153–172 (2017). https://doi.org/10.1007/s11063-016-9517-y


DOI: https://doi.org/10.1007/s11063-016-9517-y