Abstract

Many recent studies have proposed methods for the classification of dynamic textures (DT). A method based on local binary patterns on three orthogonal planes (LBP-TOP) has shown promising results and generated considerable interest. However, LBP-TOP and most of its variants suffer from drawbacks caused by the accumulation process in the TOP technique. This process uses features from all frames in the DT sequence, including irrelevant frames, and thus disregards the distinct characteristics of each frame. To overcome this problem, we propose a codebook-based DT descriptor that aggregates salient features on three orthogonal planes. Given a DT sequence, only those frame features that are highly correlated with each cluster are selected and aggregated from the perspective of visual words. The proposed DT descriptor removes the features of outlier frames that appear suddenly or rarely in a particular context, thereby emphasizing the salient features. Experimental results on public DT and dynamic scene datasets demonstrate the superiority of the proposed method over the compared approaches. The proposed method also yields outstanding results compared with state-of-the-art DT representations.
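The selection-and-aggregation idea described above can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not specify the correlation measure, the selection rule, or the aggregation formula, so the sketch assumes Euclidean distance to the cluster center as the relevance score, a hypothetical `keep_ratio` parameter for selecting frames, and a VLAD-style sum of residuals for aggregation on a single plane. The function name `aggregate_salient` is likewise hypothetical.

```python
import numpy as np

def aggregate_salient(frame_feats, codebook, keep_ratio=0.5):
    """Aggregate only the frames closest to each visual word.

    frame_feats: (T, D) array, one feature vector per frame (assumed input).
    codebook:    (K, D) array of cluster centers (visual words).
    keep_ratio:  hypothetical fraction of frames kept per visual word.
    Returns an L2-normalized descriptor of length K * D.
    """
    frame_feats = np.asarray(frame_feats, dtype=float)
    codebook = np.asarray(codebook, dtype=float)
    K, D = codebook.shape

    # Distance from every frame to every visual word, shape (T, K).
    dists = np.linalg.norm(frame_feats[:, None, :] - codebook[None, :, :], axis=2)
    assign = dists.argmin(axis=1)  # nearest visual word per frame

    desc = np.zeros((K, D))
    for k in range(K):
        idx = np.flatnonzero(assign == k)
        if idx.size == 0:
            continue
        # Assumption: frames far from the center are treated as outliers
        # and dropped; only the closest fraction is aggregated.
        n_keep = max(1, int(np.ceil(keep_ratio * idx.size)))
        kept = idx[np.argsort(dists[idx, k])[:n_keep]]
        # VLAD-style residual sum (an assumed aggregation rule).
        desc[k] = (frame_feats[kept] - codebook[k]).sum(axis=0)

    desc = desc.ravel()
    norm = np.linalg.norm(desc)
    return desc / norm if norm > 0 else desc
```

With `keep_ratio=1.0` the sketch reduces to aggregating every frame, which corresponds to the accumulate-everything behavior the abstract criticizes; lower values discard the frames that are least similar to their visual word.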

References

Almaev, T. R., & Valstar, M. F. (2013). Local gabor binary patterns from three orthogonal planes for automatic facial expression recognition. In 2013 Humaine association conference on affective computing and intelligent interaction (ACII), IEEE (pp. 356–361).

Arandjelovic, R., & Zisserman, A. (2013). All about VLAD. In 2013 IEEE conference on computer vision and pattern recognition (CVPR), IEEE (pp. 1578–1585).

Arthur, D., & Vassilvitskii, S. (2007). k-means++: The advantages of careful seeding. In Proceedings of the eighteenth annual ACM-SIAM symposium on Discrete algorithms, Society for Industrial and Applied Mathematics (pp. 1027–1035).

Bruna, J., & Mallat, S. (2013). Invariant scattering convolution networks. IEEE Transactions on Pattern Analysis and Machine Intelligence, 35(8), 1872–1886.

Chen, J., Zhao, G., Salo, M., Rahtu, E., & Pietikäinen, M. (2013). Automatic dynamic texture segmentation using local descriptors and optical flow. IEEE Transactions on Image Processing, 22(1), 326–339.

Cortes, C., & Vapnik, V. (1995). Support-vector networks. Machine Learning, 20(3), 273–297.

Csurka, G., Dance, C., Fan, L., Willamowski, J., & Bray, C. (2004). Visual categorization with bags of keypoints. Workshop on statistical learning in computer vision, ECCV, Prague (Vol. 1, pp. 1–2).

de Freitas Pereira, T., Anjos, A., De Martino, J. M., & Marcel, S. (2013). LBP-TOP based countermeasure against face spoofing attacks. In Computer vision-ACCV 2012, Springer (pp. 121–132).

Doretto, G., Chiuso, A., Wu, Y. N., & Soatto, S. (2003). Dynamic textures. International Journal of Computer Vision, 51(2), 91–109.

Douze, M., Jégou, H., Schmid, C., & Pérez, P. (2010). Compact video description for copy detection with precise temporal alignment. In Computer vision–ECCV 2010, Springer (pp. 522–535).

Douze, M., Revaud, J., Schmid, C., & Jégou, H. (2013). Stable hyper-pooling and query expansion for event detection. In 2013 IEEE international conference on computer vision (ICCV), IEEE (pp. 1825–1832).

Dubois, S., Péteri, R., & Ménard, M. (2015). Characterization and recognition of dynamic textures based on the 2D+T curvelet transform. Signal, Image and Video Processing, 9(4), 819–830.

FFMPEG. (2016). FFMPEG thumbmail filter. https://ffmpeg.org/ffmpeg-filters.html#thumbnail.

Garg, V., Vempati, S., & Jawahar, C. (2011). Bag of visual words: A soft clustering based exposition. In: 2011 Third national conference on computer vision, pattern recognition, image processing and graphics (NCVPRIPG), IEEE (pp. 37–40).

Ghanem, B., & Ahuja, N. (2010). Maximum margin distance learning for dynamic texture recognition. In Computer vision–ECCV 2010, Springer (pp. 223–236).

Guo, Z., Zhang, L., & Zhang, D. (2010). Rotation invariant texture classification using LBP variance (LBPV) with global matching. Pattern Recognition, 43(3), 706–719.

Harandi, M., Sanderson, C., Shen, C., & Lovell, B. C. (2013). Dictionary learning and sparse coding on grassmann manifolds: An extrinsic solution. In 2013 IEEE international conference on computer vision (ICCV), IEEE (pp. 3120–3127).

Jain, M., Jégou, H., & Bouthemy, P. (2013). Better exploiting motion for better action recognition. In 2013 IEEE conference on computer vision and pattern recognition (CVPR), IEEE (pp. 2555–2562).

Jégou, H., Douze, M., Schmid, C., & Pérez, P. (2010). Aggregating local descriptors into a compact image representation. In 2010 IEEE conference on computer vision and pattern recognition (CVPR), IEEE (pp. 3304–3311).

Ji, H., Yang, X., Ling, H., & Xu, Y. (2013). Wavelet domain multifractal analysis for static and dynamic texture classification. IEEE Transactions on Image Processing, 22(1), 286–299.

Jiang, B., Valstar, M. F., & Pantic, M. (2011). Action unit detection using sparse appearance descriptors in space-time video volumes. In 2011 IEEE international conference on automatic face & gesture recognition and workshops (FG), IEEE (pp. 314–321).

Kellokumpu, V., Zhao, G., & Pietikäinen, M. (2008). Human activity recognition using a dynamic texture based method. In Proceedings of the British machine vision conference (BMVC) (Vol. 1, p. 2).

Kellokumpu, V., Zhao, G., Li, S.Z., & Pietikäinen, M. (2009). Dynamic texture based gait recognition. In Advances in biometrics, Springer (pp. 1000–1009).

Kim, T. E., & Kim, M. H. (2015). Improving the search accuracy of the vlad through weighted aggregation of local descriptors. Journal of Visual Communication and Image Representation, 31, 237–252.

Mattivi, R., & Shao, L. (2009). Human action recognition using LBP-TOP as sparse spatio-temporal feature descriptor. In Computer analysis of images and patterns, Springer (pp. 740–747).

Mironică, I., Duţă, I. C., Ionescu, B., & Sebe, N. (2015). A modified vector of locally aggregated descriptors approach for fast video classification. Multimedia Tools and Applications (pp. 1–28).

Nanni, L., Brahnam, S., & Lumini, A. (2011). Local ternary patterns from three orthogonal planes for human action classification. Expert Systems with Applications, 38(5), 5125–5128.

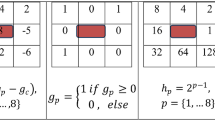

Ojala, T., Pietikäinen, M., & Mäenpää, T. (2002). Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Transactions on Pattern Analysis and Machine Intelligence, 24(7), 971–987.

Oliva, A., & Torralba, A. (2001). Modeling the shape of the scene: A holistic representation of the spatial envelope. International Journal of Computer Vision, 42(3), 145–175.

Peng, X., Wang, L., Qiao, Y., & Peng, Q. (2014). Boosting VLAD with supervised dictionary learning and high-order statistics. In Computer vision–ECCV 2014, Springer (pp. 660–674).

Perronnin, F., Sánchez, J., & Mensink, T. (2010). Improving the fisher kernel for large-scale image classification. In Computer vision–ECCV 2010, Springer (pp. 143–156).

Péteri, R., Fazekas, S., & Huiskes, M. J. (2010). Dyntex: A comprehensive database of dynamic textures. Pattern Recognition Letters, 31(12), 1627–1632.

Quan, Y., Huang, Y., & Ji, H. (2015). Dynamic texture recognition via orthogonal tensor dictionary learning. In Proceedings of the IEEE international conference on computer vision (pp. 73–81).

Ravichandran, A., Chaudhry, R., & Vidal, R. (2009). View-invariant dynamic texture recognition using a bag of dynamical systems. In IEEE conference on computer vision and pattern recognition, 2009, CVPR 2009, IEEE (pp. 1651–1657).

Ren, J., Jiang, X., Yuan, J., & Wang, G. (2014). Optimizing LBP structure for visual recognition using binary quadratic programming. IEEE Signal Processing Letters, 21(11), 1346–1350.

Rivera, A. R., & Chae, O. (2015). Spatiotemporal directional number transitional graph for dynamic texture recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence, 37(10), 2146–2152.

Ryu, J., Hong, S., & Yang, H. S. (2015). Sorted consecutive local binary pattern for texture classification. IEEE Transactions on Image Processing, 24(7), 2254–2265.

Shan, C., Gong, S., & McOwan, P. W. (2009). Facial expression recognition based on local binary patterns: A comprehensive study. Image and Vision Computing, 27(6), 803–816.

Shi, M., Avrithis, Y., & Jégou, H. (2015). Early burst detection for memory-efficient image retrieval. In 2015 IEEE conference on computer vision and pattern recognition (CVPR).

Shroff, N., Turaga, P., & Chellappa, R. (2010). Moving vistas: Exploiting motion for describing scenes. In 2010 IEEE conference on computer vision and pattern recognition (CVPR), IEEE (pp. 1911–1918).

Sifre, L., & Mallat, S. (2013). Rotation, scaling and deformation invariant scattering for texture discrimination. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 1233–1240).

Subramanian, (2015). Key frame extraction from video using videoreader. https://www.mathworks.com/matlabcentral/fileexchange/51238-key-frame-extraction-from-video-using-videoreader/

Sun ,Y., Xu, Y., & Quan, Y. (2015). Characterizing dynamic textures with space-time lacunarity analysis. In 2015 IEEE international conference on multimedia and expo (ICME), IEEE (pp. 1–6).

Uijlings, J., Duta, I., Sangineto, E., & Sebe, N. (2014). Video classification with Densely extracted HOG/HOF/MBH features: An evaluation of the accuracy/computational efficiency trade-off. International Journal of Multimedia Information Retrieval, 4(1), 33–44.

Vedaldi, A., & Fulkerson, B. (2008). VLFeat: An open and portable library of computer vision algorithms. http://www.vlfeat.org/.

Wang, L., Liu, H., & Sun, F. (2016). Dynamic texture video classification using extreme learning machine. Neurocomputing, 174, 278–285.

Wang, Y., See, J., Phan, R. C. W., & Oh, Y. H. (2015). LBP with six intersection points: Reducing redundant information in LBP-TOP for micro-expression recognition. In Computer vision–ACCV 2014, Springer (pp. 525–537).

Wang, Z., Di, W., Bhardwaj, A., Jagadeesh, V., & Piramuthu, R. (2014). Geometric VLAD for large scale image search.

Woolfe, F., & Fitzgibbon, A. (2006). Shift-invariant dynamic texture recognition. In European conference on computer vision, Springer (pp. 549–562).

Xie, Y., Ho, J., & Vemuri, B. (2013). On a nonlinear generalization of sparse coding and dictionary learning. In Machine learning: Proceedings of the International Conference. International Conference on Machine Learning, NIH Public Access (p. 1480).

Xu, H., Zha, H., & Davenport, M. A. (2014). Manifold based dynamic texture synthesis from extremely few samples. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 3019–3026).

Xu, Y., Huang, S., Ji, H., & Fermüller, C. (2012). Scale-space texture description on sift-like textons. Computer Vision and Image Understanding, 116(9), 999–1013.

Xu, Y., Quan, Y., Zhang, Z., Ling, H., & Ji, H. (2015). Classifying dynamic textures via spatiotemporal fractal analysis. Pattern Recognition, 48(10), 3239–3248.

Yang, F., Xia, G. S., Liu, G., Zhang, L., & Huang, X. (2016). Dynamic texture recognition by aggregating spatial and temporal features via ensemble svms. Neurocomputing, 173, 1310–1321.

Zhao, G. (2007). Guoying zhao homepage. http://www.ee.oulu.fi/~gyzhao/.

Zhao, G., & Pietikainen, M. (2007). Dynamic texture recognition using local binary patterns with an application to facial expressions. IEEE Transactions on Pattern Analysis and Machine Intelligence, 29(6), 915–928.

Zhao, G., & Pietikäinen, M. (2007). Improving rotation invariance of the volume local binary pattern operator. In Machine vision applications (MVA) (pp. 327–330).

Zhao, G., Ahonen, T., Matas, J., & Pietikainen, M. (2012). Rotation-invariant image and video description with local binary pattern features. IEEE Transactions on Image Processing, 21(4), 1465–1477.

Zhuang, Y., Rui, Y., Huang, T. S., & Mehrotra, S. (1998). Adaptive key frame extraction using unsupervised clustering. In 1998 International conference on image processing, 1998. ICIP 98. Proceedings, IEEE (Vol. 1, pp. 866–870).

Acknowledgements

This work was supported by the ICT R&D program of MSIP/IITP [14-811-12-002, Development of personalized and creative learning tutoring system based on participational interactive contents and collaborative learning technology]. The contents of this paper are the results of the research project of the Ministry of Oceans and Fisheries of Korea (A fundamental research on maritime accident prevention—phase 2).

Cite this article

Hong, S., Ryu, J. & Yang, H.S. Not all frames are equal: aggregating salient features for dynamic texture classification. Multidim Syst Sign Process 29, 279–298 (2018). https://doi.org/10.1007/s11045-016-0463-7

DOI: https://doi.org/10.1007/s11045-016-0463-7