Abstract

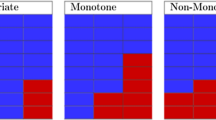

Real-world classification tasks may involve high-dimensional missing data. The traditional approach to handling missing data is to impute the missing values first and then apply standard classification algorithms to the imputed data. Imputation assumes that the data follow some distribution or exhibit relations among features, and then estimates the missing items from the observed values, so accurate imputation depends on that assumption being reasonable. In high-dimensional data sets, however, the distribution or feature relations are often complex or even impossible to capture, which leads to inaccurate imputation. In this paper, we propose a complete-case projection subspace ensemble framework, in which two alternative partition strategies, bootstrap subspace partition and missing pattern-sensitive subspace partition, are developed for incomplete data sets with even and uneven missing patterns, respectively. Multiple component classifiers are then trained separately in these subspaces, and a final ensemble classifier is constructed by a weighted majority vote of the component classifiers. In the experiments, we demonstrate the effectiveness of the proposed framework on eight high-dimensional UCI data sets, and we apply the two partition strategies to data sets with different missing patterns. The results indicate that the proposed algorithm significantly outperforms existing imputation methods in most cases.
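The framework summarized above can be illustrated with a small sketch. It is a hypothetical illustration under stated assumptions, not the authors' implementation: missing entries are assumed to be `None`; "bootstrap" subspaces are assumed to be drawn by sampling feature indices with replacement and dropping duplicates; a nearest-centroid model stands in for the component classifier; and each member's vote is weighted by its accuracy on its own complete cases. At prediction time, a member is assumed to vote only when the test sample is fully observed on that member's features. All names (`train_subspace_ensemble`, `predict`, `NearestCentroid`) are illustrative, and the missing pattern-sensitive partition is not shown.

```python
import random
from collections import Counter, defaultdict


def is_missing(value):
    # Assumption: missing entries are encoded as None.
    return value is None


class NearestCentroid:
    """Hypothetical stand-in component classifier; the paper's base learner may differ."""

    def fit(self, X, y):
        dim = len(X[0])
        sums = defaultdict(lambda: [0.0] * dim)
        counts = Counter(y)
        for row, label in zip(X, y):
            acc = sums[label]
            for j, v in enumerate(row):
                acc[j] += v
        # Per-class mean vector over the (fully observed) training rows.
        self.centroids = {c: [s / counts[c] for s in sums[c]] for c in counts}
        return self

    def predict_one(self, x):
        # Class whose centroid is closest in squared Euclidean distance.
        return min(self.centroids,
                   key=lambda c: sum((a - b) ** 2 for a, b in zip(x, self.centroids[c])))


def train_subspace_ensemble(X, y, n_members=10, subspace_size=2, seed=0):
    rng = random.Random(seed)
    d = len(X[0])
    members = []
    for _ in range(n_members):
        # Bootstrap-style subspace (assumption): draw indices with replacement, drop duplicates.
        feats = sorted(set(rng.choices(range(d), k=subspace_size)))
        # Complete-case projection: keep only rows fully observed on these features.
        rows = [i for i, r in enumerate(X) if not any(is_missing(r[j]) for j in feats)]
        if len({y[i] for i in rows}) < 2:
            continue  # skip subspaces whose complete cases cover fewer than two classes
        Xs = [[X[i][j] for j in feats] for i in rows]
        ys = [y[i] for i in rows]
        clf = NearestCentroid().fit(Xs, ys)
        # Vote weight (assumption): accuracy on the member's own complete cases.
        weight = sum(clf.predict_one(r) == t for r, t in zip(Xs, ys)) / len(ys)
        members.append((feats, clf, weight))
    return members


def predict(members, x):
    votes = Counter()
    for feats, clf, weight in members:
        # A member votes only if x is fully observed on its subspace; nothing is imputed.
        if any(is_missing(x[j]) for j in feats):
            continue
        votes[clf.predict_one([x[j] for j in feats])] += weight
    # Weighted majority vote; None if no member can see x.
    return votes.most_common(1)[0][0] if votes else None


if __name__ == "__main__":
    X = [
        [1.0, 1.1, None, 0.9],
        [0.9, None, 1.0, 1.2],
        [1.2, 1.0, 1.1, None],
        [5.0, 5.2, 4.9, None],
        [None, 4.8, 5.1, 5.0],
        [5.1, 5.0, None, 4.9],
    ]
    y = [0, 0, 0, 1, 1, 1]
    members = train_subspace_ensemble(X, y, n_members=20, subspace_size=2, seed=1)
    print(predict(members, [1.0, None, 1.0, 1.0]))
    print(predict(members, [5.0, 5.0, None, 5.0]))
```

Because each member sees only the rows that are fully observed on its own features, no value is ever imputed: a test sample receives votes only from members whose subspaces it fully covers, and `predict` returns `None` when no member can vote.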

Acknowledgments

This work is supported by the Major State Basic Research Development Program of China (973 Program) under Grant No. 2014CB340303, and by the Natural Science Foundation under Grant No. 61402490.

About this article

Cite this article

Gao, H., Jian, S., Peng, Y. et al. A subspace ensemble framework for classification with high dimensional missing data. Multidim Syst Sign Process 28, 1309–1324 (2017). https://doi.org/10.1007/s11045-016-0393-4

DOI: https://doi.org/10.1007/s11045-016-0393-4