Abstract

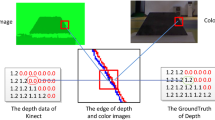

Kinect v2 adopts a time-of-flight (ToF) depth sensing mechanism, which causes different types of depth artifacts compared to the original Kinect v1. The goal of this paper is to propose a depth completion method designed specifically for the depth artifacts of the Kinect v2. Observing the specific types of depth errors in the Kinect v2, such as thin hole-lines along object boundaries and a new type of hole in the image corners, we exploit the positions of the color edges extracted from the Kinect v2 sensor to guide accurate hole-filling around object boundaries. Since our approach requires precise registration between the color and depth images, we also introduce a transformation matrix that yields point-to-point correspondence with pixel accuracy. Experimental results demonstrate the effectiveness of the proposed depth image completion algorithm for the Kinect v2 in terms of completion accuracy and execution time.
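The abstract does not spell out the filling rule, so the following is only a minimal, hypothetical sketch of the general idea of color-edge-guided hole filling. It is not the authors' algorithm. It assumes that a color edge map (for example, from a Canny detector) has already been registered to the depth grid and that a depth value of 0 marks a hole. It uses a simple nearest-valid-neighbor rule along four scan directions, where a color edge blocks propagation so that depth does not leak across an object boundary. The function names and input conventions are illustrative assumptions.

```python
from typing import List, Optional, Tuple

# Hypothetical input conventions (assumptions, not from the paper):
# depth[r][c] == 0 marks a hole; edges[r][c] is True where a color edge
# was detected after the color image was registered to the depth grid.
Depth = List[List[int]]
EdgeMap = List[List[bool]]

# Scan directions: left, right, up, down.
DIRECTIONS: Tuple[Tuple[int, int], ...] = ((0, -1), (0, 1), (-1, 0), (1, 0))


def nearest_valid(depth: Depth, edges: EdgeMap, r: int, c: int,
                  dr: int, dc: int) -> Optional[Tuple[int, int]]:
    """Walk from (r, c) in direction (dr, dc).

    Returns (distance, depth) of the first valid depth pixel, or None if a
    color-edge pixel or the image border is reached first.
    """
    h, w = len(depth), len(depth[0])
    rr, cc, step = r + dr, c + dc, 1
    while 0 <= rr < h and 0 <= cc < w:
        if edges[rr][cc]:
            return None  # a color edge separates the hole from this side
        if depth[rr][cc] > 0:
            return step, depth[rr][cc]
        rr, cc, step = rr + dr, cc + dc, step + 1
    return None


def fill_holes_edge_guided(depth: Depth, edges: EdgeMap) -> Depth:
    """Fill each hole from the nearest valid pixel not separated by an edge.

    Only original (unfilled) depth values are used as sources, so the result
    does not depend on scan order. Holes with no reachable source stay 0.
    """
    out = [row[:] for row in depth]
    for r, row in enumerate(depth):
        for c, value in enumerate(row):
            if value > 0:
                continue
            candidates = []
            for dr, dc in DIRECTIONS:
                hit = nearest_valid(depth, edges, r, c, dr, dc)
                if hit is not None:
                    candidates.append(hit)
            if candidates:
                # Smallest distance wins; ties go to the smaller depth value.
                out[r][c] = min(candidates)[1]
    return out


if __name__ == "__main__":
    row = [[100, 0, 0, 0, 0, 200]]
    edge = [[False, True, False, False, False, False]]
    print(fill_holes_edge_guided(row, edge))  # [[100, 100, 200, 200, 200, 200]]
```

In the usage example, the edge at column 1 stops the foreground value 100 from spreading into columns 2 and 3 even though it is closer to them than the value 200. Without the edge map, those two pixels would be split between the two depths purely by distance. A practical implementation would likely add a weighted filter or an iterative scheme on top of this blocking rule; the single-pass nearest-neighbor choice here is kept only for readability.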

Acknowledgments

This work was supported by the MSIP (Ministry of Science, ICT and Future Planning), Korea, under the ITRC support program (IITP-2016-H8501-16-1014) supervised by the IITP, and by the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (NRF-2015R1D1A1A01057269). C.S. Won was supported by the research program of Dongguk University, 2016.

About this article

Cite this article

Song, W., Le, A.V., Yun, S. et al. Depth completion for kinect v2 sensor. Multimed Tools Appl 76, 4357–4380 (2017). https://doi.org/10.1007/s11042-016-3523-y