Abstract

In this work we lower bound the individual sequence anytime regret of a large family of online algorithms. This bound depends on the quadratic variation of the sequence, \(Q_T\), and the learning rate. Nevertheless, we show that any learning rate that guarantees a regret upper bound of \(O(\sqrt{Q_T})\) necessarily implies an \(\varOmega (\sqrt{Q_T})\) anytime regret on any sequence with quadratic variation \(Q_T\). The algorithms we consider are online linear optimization forecasters whose weight vector at time \(t+1\) is the gradient of a concave potential function of cumulative losses at time t. We show that these algorithms include all linear Regularized Follow the Leader algorithms. We prove our result for the case of potentials with negative definite Hessians, and potentials for the best expert setting satisfying some natural regularity conditions. In the best expert setting, we give our result in terms of the translation-invariant relative quadratic variation. We apply our lower bounds to Randomized Weighted Majority and to linear cost Online Gradient Descent. We show that our analysis can be generalized to accommodate diverse measures of variation besides quadratic variation. We apply this generalized analysis to Online Gradient Descent with a regret upper bound that depends on the variance of losses.


1 Introduction

For any sequence of losses, it is trivial to tailor an algorithm that has no regret on that particular sequence. The challenge and the great success of regret minimization algorithms lie in achieving low regret for every sequence. This bound may still depend on a measure of sequence smoothness, such as the quadratic variation or variance. The optimality of such regret upper bounds may be demonstrated by proving the existence of some “difficult” loss sequences. Their existence may be implied, for example, by stochastically generating sequences and proving a lower bound on the expected regret of any algorithm (see, e.g., Cesa-Bianchi and Lugosi 2006). This type of argument leaves open the possibility that the difficult sequences are, in some way, atypical or irrelevant to actual user needs. In this work we address this question by proving lower bounds on the regret of any individual sequence, in terms of its quadratic variation.

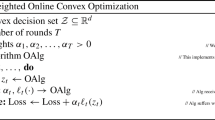

We first consider the related task of characterizing algorithms that have individual sequence non-negative regret. We focus our attention on linear forecasters that determine their next weight vector as a function of current cumulative losses. More specifically, if \({\mathbf {L}}_t\in {{\mathbb {R}}}^N\) is the cumulative loss vector at time t, and \({\mathbf {x}}_{t+1}\in {{\mathcal {K}}}\) is the next weight vector, then \({\mathbf {x}}_{t+1}=g({\mathbf {L}}_t)\) for some continuous g. The algorithm then incurs a loss of \({\mathbf {x}}_{t+1}\cdot {\mathbf {l}}_{t+1}\), where \({\mathbf {l}}_{t}\) is the loss vector at time t. We show that such algorithms have individual sequence non-negative regret if and only if g is the gradient of a concave potential function. We then show that this characteristic is shared by all linear cost Regularized Follow the Leader (RFTL) regret minimization algorithms, which include Randomized Weighted Majority (RWM) and linear cost Online Gradient Descent (OGD).

As our main result, we prove a trade-off between the upper bound on an algorithm’s regret and a lower bound on its anytime regret, namely, its maximal regret for any prefix of the loss sequence. In particular, if the algorithm has a regret upper bound of \(O(\sqrt{Q})\) for any sequence with quadratic variation Q, then it must have an \(\varOmega (\sqrt{Q})\) anytime regret on any sequence with quadratic variation \(\varTheta (Q)\).

We prove our result for two separate classes of continuously twice-differentiable potentials. One class has negative definite Hessians in a neighborhood of \({\mathbf {L}}={\mathbf {0}}\), and includes OGD. The other comprises potentials for the best expert setting whose Hessians in a neighborhood of \({\mathbf {L}}={\mathbf {0}}\) have positive off-diagonal entries; in other words, such potentials increase the weights of experts as their performance relatively improves, which is a natural property of regret minimization algorithms. For the first class, we use the usual Euclidean quadratic variation, or \(\sum _{t=1}^T\Vert {\mathbf {l}}_t\Vert _2^2\). For the best expert setting, however, we use the more appropriate relative quadratic variation, \(\sum _{t=1}^T(\max _i\{l_{i,t}\}-\min _i\{l_{i,t}\})^2\).

Our proof proceeds in several steps. We add a learning rate \(\eta \) to any potential \(\varPhi \) by defining a new potential \(\varPhi _{\eta }({\mathbf {L}})=(1/\eta )\varPhi (\eta {\mathbf {L}})\). We give an exact expression for the regret using the Taylor expansion of the potential, and use it to prove an \(\varOmega (\min \{1/\eta ,\eta Q\})\) lower bound on the anytime regret of any sequence with quadratic variation Q. In addition, we construct two specific loss sequences with variation Q, one with an \(\varOmega (1/\eta )\) regret, and the other with \(\varOmega (\eta Q)\) regret. Thus, we must have \(\eta =\varTheta (1/\sqrt{Q})\) to ensure a regret of \(O(\sqrt{Q})\) for every sequence with variation Q, and our lower bound on the anytime regret becomes \(\varOmega (\sqrt{Q})\). We demonstrate our result on RWM, as an example of a best expert potential, and on linear cost OGD, as an example of a potential with a negative definite Hessian.

We show that our analysis may be generalized to accommodate diverse notions of variation instead of quadratic variation. Our lower bounds on the anytime regret and our trade-off result in fact apply to any notion of variation V that is upper bounded for any loss sequence by a constant times the quadratic variation, namely \(V\le \beta Q\). This condition is satisfied by key notions of variation featured in the regret minimization literature, notably Hazan and Kale (2010), Chiang et al. (2012). We apply this generalized analysis to the OGD algorithm in the online linear optimization setting, with V taken as the variance-like measure \({\text {Var}}_T = \sum _{t=1}^T \Vert {{\mathbf {l}}}_t-\varvec{\mu }_T\Vert _2^2\) (where \(\varvec{\mu }_T\) is the mean of the losses). We obtain in particular that a learning rate that guarantees a regret upper bound of \(O(\sqrt{V})\) for any sequence with \({\text {Var}}_T\le V\) necessarily implies an \(\varOmega (\sqrt{{\text {Var}}_T})\) anytime regret for any sequence with \({\text {Var}}_T = \varTheta (V)\).

We remark that our lower bounds are not applicable to every online learning algorithm or scenario. For instance, in the best expert setting, algorithms that set their learning rate as a function of the cumulative loss of the best expert may circumvent our lower bounds. In Sects. 3 and 4 we give a precise definition of the relevant setting and in Sect. 7.1 we further discuss the scope of our results.

Finally, we consider extensions to our results and highlight two applications that demonstrate the usability of our work. We will briefly mention here the first of these applications, which was included in the conference version of this work, and pertains to the financial problem of option pricing. Specifically, by leveraging our lower bounds on the anytime regret of RWM it is possible to obtain a lower bound on the price of “at the money” European call options in an arbitrage-free market (see Footnote 1). The price lower bound obtained for such options is \(\exp (0.1\sqrt{Q})-1\approx 0.1\sqrt{Q}\) for \(Q<0.5\), where Q is the assumed quadratic variation of the stock’s log price ratios. This bound has the same asymptotic behavior as the Black-Scholes price, which is approximately \(\sqrt{Q}/\sqrt{2\pi }\approx 0.4\sqrt{Q}\), and improves on a previous result by DeMarzo et al. (2006), who gave a \(Q/10\) lower bound for \(Q<1\). For an in-depth treatment of the financial aspect of this work, see Gofer and Mansour (2012), Gofer (2014b).

1.1 Related work

There are numerous results providing worst case upper bounds for regret minimization algorithms and showing their optimality (see Cesa-Bianchi and Lugosi 2006). In the best expert setting, RWM has been shown to have an optimal regret of \(O(\sqrt{T\log N})\). In the online convex optimization paradigm (Zinkevich 2003), upper bounds of \(O(\sqrt{T})\) have been shown, along with \(\varOmega (\sqrt{T})\) lower bounds for linear cost functions (Hazan 2006). In both cases, regret lower bounds are proved by invoking a stochastic adversary and lower bounding the expected regret. Upper bounds based on various notions of quadratic variation and variance are given in Cesa-Bianchi et al. (2007) for the expert setting, in Hazan and Kale (2010) for online linear optimization and the expert setting in particular, and in Chiang et al. (2012) for the more general setting of online convex optimization.

A trade-off result is given in Even-Dar et al. (2008), where it is shown that a best expert algorithm with \(O(\sqrt{T})\) regret must have a worst case \(\varOmega (\sqrt{T})\) regret to any fixed average of experts. To the best of our knowledge, there are no results that lower bound the regret on loss sequences in terms of their individual quadratic variation.

1.2 Outline

In Sect. 2 we provide notation and definitions. Section 3 characterizes algorithms with non-negative individual sequence regret, proves they include linear cost RFTL, and provides basic regret lower bounds. Section 4 presents our main result on the trade-off between upper bounds on regret and lower bounds on individual sequence anytime regret. In Sect. 5 we apply our bounds to linear cost OGD and to RWM. In Sect. 6 we go beyond quadratic variation and generalize our analysis to other popular notions of variation. In Sect. 7 we consider extensions and describe applications of our results.

A shorter version of this work was published in the proceedings of The Twenty-Third International Conference on Algorithmic Learning Theory (ALT 2012). The present version contains new additions that are given in Sects. 6 and 7. A key new contribution is the generalization of previous analysis to diverse notions of variation beyond quadratic variation.

2 Preliminaries

2.1 Regret minimization

In the best expert setting, there are N available experts, and at each time step \(1\le t\le T\), an online algorithm A selects a distribution \({\mathbf {p}}_{t}\) over the N experts. After the choice is made, an adversary selects a loss vector \({\mathbf {l}}_{t}=(l_{1,t},\ldots ,l_{N,t})\in {\mathbb {R}}^N\), and the algorithm experiences a loss of \(l_{A,t}={\mathbf {p}}_{t}\cdot {\mathbf {l}}_{t}\). We write \(L_{i,t}=\sum _{\tau =1}^{t}l_{i,\tau }\) for the cumulative loss of expert i at time t, \({\mathbf {L}}_{t}=(L_{1,t},\ldots ,L_{N,t})\), and \(L_{A,t}=\sum _{\tau =1}^{t}l_{A,\tau }\) for the cumulative loss of A at time t. The regret of A at time T is \(R_{A,T}=L_{A,T}-\min _{j}\{L_{j,T}\}\). The aim of a regret minimization algorithm is to achieve small regret regardless of the loss vectors chosen by the adversary. The anytime regret of A is the maximal regret over time, namely, \(\max _t\{R_{A,t}\}\). We will sometimes use the notation \(m(t)=\arg \min _{i}\{L_{i,t}\}\), where we take the smallest such index in case of a tie. Similarly, we write M(t) for the expert with the maximal cumulative loss at time t. We also use the notation \(\delta ({\mathbf {v}})=\max _{i}\{v_{i}\}-\min _{i}\{v_{i}\}\) for any \({\mathbf {v}}\in {\mathbb {R}}^{N}\), so \(\delta ({\mathbf {L}}_t)=L_{M(t),t}-L_{m(t),t}\).
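The definitions of regret and anytime regret above can be made concrete with a minimal Python sketch. The function name `regret_trajectory` and the toy loss sequence are hypothetical, chosen only for illustration; the forecaster here simply plays uniform weights.

```python
from typing import List, Sequence


def regret_trajectory(weights: Sequence[Sequence[float]],
                      losses: Sequence[Sequence[float]]) -> List[float]:
    """Return R_{A,t} = L_{A,t} - min_j L_{j,t} for t = 1..T."""
    n = len(losses[0])
    cumulative = [0.0] * n   # L_t, cumulative loss of each expert
    alg_loss = 0.0           # L_{A,t}, cumulative loss of the algorithm
    regrets = []
    for p, l in zip(weights, losses):
        alg_loss += sum(pi * li for pi, li in zip(p, l))
        cumulative = [c + li for c, li in zip(cumulative, l)]
        regrets.append(alg_loss - min(cumulative))
    return regrets


# Toy example (hypothetical data): two experts, uniform weights at every step.
losses = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
weights = [[0.5, 0.5]] * 3
regrets = regret_trajectory(weights, losses)
anytime_regret = max(regrets)  # max_t R_{A,t}
```

In this example the regret at the final step and the anytime regret coincide, but in general the anytime regret may be attained at an earlier prefix.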

The best expert setting is a special case of the more general setting of online linear optimization. In this setting, at time t the algorithm, or linear forecaster, chooses a weight vector \({\mathbf {x}}_{t}\in {\mathbb {R}}^N\), and incurs a loss of \({\mathbf {x}}_{t}\cdot {\mathbf {l}}_{t}\). In this paper we assume that the weight vectors are chosen from a compact and convex set \({\mathcal {K}}\). The regret of a linear forecaster A is then defined as \(R_{A,T}=L_{A,T}- \min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}_{T}\}\). The best expert setting is simply the case where \({\mathcal {K}}=\varDelta _{N}\), the probability simplex over N elements.

2.1.1 Quadratic variation

We define the quadratic variation of the loss sequence \({\mathbf {l}}_1,\ldots ,{\mathbf {l}}_T\) as \(Q_T=\sum _{t=1}^{T}\Vert {\mathbf {l}}_{t} \Vert _2 ^{2}\). For the best expert setting, we will use the slightly different notion of relative quadratic variation, defined as \(q_{T}=\sum _{t=1}^{T}\delta ({\mathbf {l}}_t)^{2}\). We write Q for a known lower bound on \(Q_T\) and q for a known lower bound on \(q_T\).
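As a small illustration of the two notions, the following Python sketch computes \(Q_T\) and \(q_T\) for a toy sequence and for the same sequence with a constant added to every loss. The function names and the data are assumptions made for this example only. Shifting changes \(Q_T\) but leaves \(q_T\) unchanged, which is the translation invariance that motivates the relative notion in the best expert setting.

```python
def quadratic_variation(losses):
    """Q_T: sum over t of the squared Euclidean norm of l_t."""
    return sum(sum(x * x for x in l) for l in losses)


def relative_quadratic_variation(losses):
    """q_T: sum over t of delta(l_t)^2, where delta(v) = max_i v_i - min_i v_i."""
    return sum((max(l) - min(l)) ** 2 for l in losses)


# Hypothetical toy data: the same losses, before and after adding 5 to every entry.
losses = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
shifted = [[x + 5.0 for x in l] for l in losses]

Q = quadratic_variation(losses)
q = relative_quadratic_variation(losses)
Q_shifted = quadratic_variation(shifted)
q_shifted = relative_quadratic_variation(shifted)
```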

2.2 Convex functions

We mention here some basic facts about convex and concave functions that we will require. For more on convex analysis, see Rockafellar (1970), Boyd and Vandenberghe (2004), and Nesterov (2004), among others.

We will discuss functions defined on \({\mathbb {R}}^N\). A function \(f:C\rightarrow {\mathbb {R}}\) is convex if C is a convex set and if for every \(\lambda \in [0,1]\) and \({\mathbf {x}},{\mathbf {y}}\in C\), \(f(\lambda {\mathbf {x}}+(1-\lambda ){\mathbf {y}}) \le \lambda f({\mathbf {x}})+(1-\lambda )f({\mathbf {y}})\). The function f is concave if \(-f\) is convex. The function f is strictly convex if the inequality is strict for \({\mathbf {x}}\ne {\mathbf {y}}\) and \(\lambda \in (0,1)\). The function f is strongly convex with parameter \(\alpha >0\) if for every \({\mathbf {x}},{\mathbf {y}}\in C\) and \(\lambda \in [0,1]\), \(f(\lambda {\mathbf {x}}+(1-\lambda ){\mathbf {y}}) \le \lambda f({\mathbf {x}})+(1-\lambda )f({\mathbf {y}})-(\alpha /2) \lambda (1-\lambda )\Vert {\mathbf {x}}-{\mathbf {y}}\Vert _{2}^{2}\). If f is differentiable on a convex set C, then f is convex iff for every \({\mathbf {x}},{\mathbf {y}}\in C\), \(\nabla f({\mathbf {y}})\cdot ({\mathbf {y}}-{\mathbf {x}})\ge f({\mathbf {y}})-f({\mathbf {x}})\ge \nabla f({\mathbf {x}})\cdot ({\mathbf {y}}-{\mathbf {x}})\); f is strictly convex iff the above inequalities are strict for \({\mathbf {x}}\ne {\mathbf {y}}\). If f is twice differentiable, then it is convex iff its Hessian is positive semi-definite: for every \({\mathbf {x}}\in C\), \(\nabla ^2f({\mathbf {x}}) \succeq 0\). The convex conjugate of f (defined on \(\text {dom}f\)) is the function \(f^{*}({\mathbf {y}})=\sup _{{\mathbf {x}}\in \text {dom}f}\{{\mathbf {x}}\cdot {\mathbf {y}}-f({\mathbf {x}})\}\), which is convex, and its effective domain is \(\text {dom}f^{*}=\{{\mathbf {y}}:f^{*}({\mathbf {y}})<\infty \}\).

2.3 Seminorms

A seminorm on \({\mathbb {R}}^{N}\) is a function \(\Vert \cdot \Vert :{\mathbb {R}}^{N}\rightarrow {\mathbb {R}}\) with the following properties:

- Positive homogeneity: for every \(a\in {\mathbb {R}}\) and \({\mathbf {x}}\in {\mathbb {R}}^{N}\), \(\Vert a{\mathbf {x}}\Vert =|a|\Vert {\mathbf {x}}\Vert \).

- Triangle inequality: for every \({\mathbf {x}},{\mathbf {x}}'\in {\mathbb {R}}^{N}\), \(\Vert {\mathbf {x}}+{\mathbf {x}}'\Vert \le \Vert {\mathbf {x}}\Vert +\Vert {\mathbf {x}}'\Vert \).

Clearly, every norm is a seminorm. A seminorm satisfies \(\Vert {\mathbf {x}}\Vert \ge 0\) for every \({\mathbf {x}}\), and \(\Vert {\mathbf {0}}\Vert =0\). However, unlike a norm, \(\Vert {\mathbf {x}}\Vert =0\) does not imply \({\mathbf {x}}={\mathbf {0}}\). We will not deal with the trivial all-zero seminorm. Thus, there always exists a vector with non-zero seminorm, and by homogeneity, there exists a vector with seminorm a for any \(a\in {\mathbb {R}}^{+}\).

2.4 Miscellaneous notation

For \({\mathbf {x}},{\mathbf {y}}\in {\mathbb {R}}^{N}\), we write \([{\mathbf {x}},{\mathbf {y}}]\) for the line segment between \({\mathbf {x}}\) and \({\mathbf {y}}\), namely, \(\{a{\mathbf {x}}+(1-a){\mathbf {y}}:0\le a\le 1\}\). We use the notation \(\text {conv}(A)\) for the convex hull of a set \(A\subseteq {\mathbb {R}}^N\), that is, \(\text {conv}(A)=\{\sum _{i=1}^k\lambda _i {\mathbf {x}}_i : {\mathbf {x}}_i\in A, \; \lambda _i\ge 0, \; i=1,\ldots ,k, \sum _{i=1}^k\lambda _i=1, k\in {\mathbb {N}}\}\).

3 Non-negative individual sequence regret

Our ultimate goal is to prove strictly positive individual sequence regret lower bounds for a variety of algorithms. In this section, we will characterize algorithms for which this goal is achievable, and prove some basic regret lower bounds. This will be done by considering the larger family of algorithms that have non-negative regret for any loss sequence. This family, it turns out, can be characterized exactly, and includes the important class of linear cost RFTL algorithms.

We focus on linear forecasters whose vector at time t is determined as \({\mathbf {x}}_{t}=g({\mathbf {L}}_{t-1}),\) for \(1\le t\le T\), where \(g:{\mathbb {R}}^{N}\rightarrow {\mathcal {K}}\subset {\mathbb {R}}^{N}\) is continuous and \({\mathcal {K}}\) is compact and convex. For such algorithms we can write \(L_{A,T}=\sum _{t=1}^{T}g({\mathbf {L}}_{t-1})\cdot ({\mathbf {L}}_{t}-{\mathbf {L}}_{t-1})\).

A non-negative-regret algorithm satisfies that \(L_{A,T}\ge \min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}} \cdot ({\mathbf {L}}_{T}-{\mathbf {L}}_{0})\}\) for every \({\mathbf {L}}_{0},\ldots ,{\mathbf {L}}_{T}\in {\mathbb {R}}^{N}\). We point out that we allow both positive and negative losses. Synonymously, we will also say that g has non-negative regret. Note that if \({\mathbf {L}}_{0}={\mathbf {L}}_{T}\) (a closed cumulative loss path), then for any \({\mathbf {u}}\in {\mathcal {K}}\), it holds that \({\mathbf {u}}\cdot ({\mathbf {L}}_{T}-{\mathbf {L}}_{0})=0\), and non-negative regret implies that \(\sum _{t=1}^{T}g({\mathbf {L}}_{t-1}) \cdot ({\mathbf {L}}_{t}-{\mathbf {L}}_{t-1})\ge 0\).

The following theorem gives an exact characterization of non-negative-regret forecasters as the gradients of concave potentials (see Footnote 2).

Theorem 1

A linear forecaster based on a continuous function g has individual sequence non-negative regret iff there exists a concave potential function \(\varPhi :{\mathbb {R}}^{N}\rightarrow {\mathbb {R}}\) s.t. \(g=\nabla \varPhi \).

Proof

Let g have non-negative regret. For any path \(\gamma :[0,1]\rightarrow {\mathbb {R}}^{N}\) with finite length, we denote by \(\int _{\gamma }\sum _{j=1}^{N}g_{j}dL_{j}\) the path integral of the vector field g along \(\gamma \). For a closed path \(\gamma \), we have that

$$\begin{aligned} \int _{\gamma }\sum _{j=1}^{N}g_{j}dL_{j}\ge \min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot (\gamma (1)-\gamma (0))\}=0\;, \end{aligned}$$

where the inequality is true because g has non-negative regret (the path integral is a limit of sums of the form \(\sum _{t}g({\mathbf {L}}_{t-1})\cdot ({\mathbf {L}}_{t}-{\mathbf {L}}_{t-1})\) over increasingly fine discretizations of \(\gamma \)), and the last equality is true because \(\gamma (1)=\gamma (0)\). For the reverse path \(\gamma _{r}\), defined by \(\gamma _{r}(t)=\gamma (1-t)\), we have \(-\int _{\gamma }\sum _{j=1}^{N}g_{j}dL_{j}=\int _{\gamma _{r}}\sum _{j=1}^{N}g_{j}dL_{j}\ge 0\), where the inequality is true by the fact that \(\gamma _{r}\) is also a closed path. We thus have that \(\int _{\gamma }\sum _{j=1}^{N}g_{j}dL_{j}=0\) for any closed path, and therefore, g is a conservative vector field. As such, it has a potential function \(\varPhi \) s.t. \(g=\nabla \varPhi \).

Next, consider the case \({\mathbf {L}}_{0}={\mathbf {L}},{\mathbf {L}}_{1}={\mathbf {L}}',{\mathbf {L}}_{2}={\mathbf {L}}\), for some arbitrary \({\mathbf {L}},{\mathbf {L}}'\in {\mathbb {R}}^{N}\). Since g has non-negative regret, we have that \(0\le g({\mathbf {L}})\cdot ({\mathbf {L}}'-{\mathbf {L}})+g({\mathbf {L'}}) \cdot ({\mathbf {L}}-{\mathbf {L'}})=(g({\mathbf {L}})-g({\mathbf {L}}'))\cdot ({\mathbf {L}}'-{\mathbf {L}})\). Therefore, \((\nabla \varPhi ({\mathbf {L}})-\nabla \varPhi ({\mathbf {L}}'))\cdot ({\mathbf {L}}-{\mathbf {L}}')\le 0\) for any \({\mathbf {L}},{\mathbf {L}}'\in {\mathbb {R}}^{N}\), which means that \(\varPhi \) is concave (see, e.g., Nesterov 2004, Theorem 2.1.3).

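In the other direction, let \({\mathbf {L}}_{0},\ldots ,{\mathbf {L}}_{T}\in {\mathbb {R}}^{N}\). We have that

$$\begin{aligned} \sum _{t=1}^{T}g({\mathbf {L}}_{t-1})\cdot ({\mathbf {L}}_{t}-{\mathbf {L}}_{t-1})&=\sum _{t=1}^{T}\nabla \varPhi ({\mathbf {L}}_{t-1})\cdot ({\mathbf {L}}_{t}-{\mathbf {L}}_{t-1}) \ge \sum _{t=1}^{T}\left( \varPhi ({\mathbf {L}}_{t})-\varPhi ({\mathbf {L}}_{t-1})\right) \\&=\varPhi ({\mathbf {L}}_{T})-\varPhi ({\mathbf {L}}_{0}) \ge \nabla \varPhi ({\mathbf {L}}_{T})\cdot ({\mathbf {L}}_{T}-{\mathbf {L}}_{0}) \ge \min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot ({\mathbf {L}}_{T}-{\mathbf {L}}_{0})\}\;, \end{aligned}$$

where the first two inequalities are by the concavity of \(\varPhi \), and the last holds because \(\nabla \varPhi ({\mathbf {L}}_{T})=g({\mathbf {L}}_{T})\in {\mathcal {K}}\). \(\square \)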

As a by-product of the proof, we get the following:

Corollary 1

If algorithm A uses a non-negative-regret function \(g({\mathbf {L}})=\nabla \varPhi ({\mathbf {L}})\), then it holds that \(R_{A,T}\ge \varPhi ({\mathbf {L}}_{T})-\varPhi ({\mathbf {L}}_{0})- \min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}} \cdot ({\mathbf {L}}_{T}-{\mathbf {L}}_{0})\}\ge 0\).
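The chain of inequalities in Corollary 1 can be checked numerically. The sketch below is an illustration under stated assumptions, not part of the formal development: it takes the best expert setting with the exponential-weights potential \(\varPhi _{\eta }({\mathbf {L}})=-(1/\eta )\ln ((1/N)\sum _{i}e^{-\eta L_{i}})\), whose gradient is the familiar weight vector \(p_{i}\propto e^{-\eta L_{i}}\). The function names, the random loss sequence, and the value of \(\eta \) are all hypothetical.

```python
import math
import random


def potential(L, eta):
    """Phi_eta(L) = -(1/eta) * ln((1/N) * sum_i exp(-eta * L_i)), computed stably."""
    m = min(L)  # shift by the minimum to avoid overflow in exp
    return m - math.log(sum(math.exp(-eta * (x - m)) for x in L) / len(L)) / eta


def weights(L, eta):
    """Gradient of Phi_eta at L: p_i proportional to exp(-eta * L_i)."""
    m = min(L)
    w = [math.exp(-eta * (x - m)) for x in L]
    s = sum(w)
    return [x / s for x in w]


def regret_and_first_order_term(losses, eta):
    n = len(losses[0])
    L = [0.0] * n            # L_0 = 0
    alg_loss = 0.0
    for l in losses:
        p = weights(L, eta)  # x_t = grad Phi_eta(L_{t-1})
        alg_loss += sum(pi * li for pi, li in zip(p, l))
        L = [a + b for a, b in zip(L, l)]
    regret = alg_loss - min(L)
    first_order = potential(L, eta) - potential([0.0] * n, eta) - min(L)
    return regret, first_order


# Hypothetical data: 50 steps, 3 experts, losses of both signs.
rng = random.Random(0)
losses = [[rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(50)]
regret, first_order = regret_and_first_order_term(losses, eta=0.3)
```

On any loss sequence, `regret` should be at least `first_order`, which in turn should be non-negative, up to floating-point error.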

If \(\varPhi \) is continuously twice-differentiable, its second order Taylor expansion may be used to derive a similar lower bound, which now includes a non-negative quadratic regret term.

Theorem 2

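Let A be an algorithm using a non-negative-regret function \(g=\nabla \varPhi \), and let \({\mathbf {L}}_{0},\ldots ,{\mathbf {L}}_{T}\in {\mathbb {R}}^{N}\). If \(\varPhi \) is continuously twice-differentiable on the set \(\text {conv}(\{{\mathbf {L}}_{0},\ldots ,{\mathbf {L}}_{T}\})\), then

$$\begin{aligned} R_{A,T}=\varPhi ({\mathbf {L}}_{T})-\varPhi ({\mathbf {L}}_{0})-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot ({\mathbf {L}}_{T}-{\mathbf {L}}_{0})\}-\frac{1}{2}\sum _{t=1}^{T}{\mathbf {l}}_{t}^{\top }\nabla ^{2}\varPhi ({\mathbf {z}}_{t}){\mathbf {l}}_{t}\;, \end{aligned}$$

where \({\mathbf {z}}_{t}\in [{\mathbf {L}}_{t-1},{\mathbf {L}}_{t}]\).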

Proof

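For every \(1\le t\le T\), we have \(\varPhi ({\mathbf {L}}_{t})-\varPhi ({\mathbf {L}}_{t-1}) =\nabla \varPhi ({\mathbf {L}}_{t-1})\cdot {\mathbf {l}}_{t}+(1/2) {\mathbf {l}}_{t}^{\top }\nabla ^{2}\varPhi ({\mathbf {z}}_{t}){\mathbf {l}}_{t}\) for some \({\mathbf {z}}_{t}\in [{\mathbf {L}}_{t-1},{\mathbf {L}}_{t}]\). Summing over \(1\le t\le T\), we get

$$\begin{aligned} \varPhi ({\mathbf {L}}_{T})-\varPhi ({\mathbf {L}}_{0})=\sum _{t=1}^{T}\nabla \varPhi ({\mathbf {L}}_{t-1})\cdot {\mathbf {l}}_{t}+\frac{1}{2}\sum _{t=1}^{T}{\mathbf {l}}_{t}^{\top }\nabla ^{2}\varPhi ({\mathbf {z}}_{t}){\mathbf {l}}_{t} =L_{A,T}+\frac{1}{2}\sum _{t=1}^{T}{\mathbf {l}}_{t}^{\top }\nabla ^{2}\varPhi ({\mathbf {z}}_{t}){\mathbf {l}}_{t}\;. \end{aligned}$$

Therefore,

$$\begin{aligned} R_{A,T}&=L_{A,T}-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot ({\mathbf {L}}_{T}-{\mathbf {L}}_{0})\}\\&=\varPhi ({\mathbf {L}}_{T})-\varPhi ({\mathbf {L}}_{0})-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot ({\mathbf {L}}_{T}-{\mathbf {L}}_{0})\}-\frac{1}{2}\sum _{t=1}^{T}{\mathbf {l}}_{t}^{\top }\nabla ^{2}\varPhi ({\mathbf {z}}_{t}){\mathbf {l}}_{t}\;. \end{aligned}$$

\(\square \)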

Note that the regret is the sum of two non-negative terms. We will sometimes refer to the first one, \(\varPhi ({\mathbf {L}}_{T})-\varPhi ({\mathbf {L}}_{0})-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot ({\mathbf {L}}_{T}-{\mathbf {L}}_{0})\}\), as the first order regret term, and to the second, \(-\frac{1}{2}\sum _{t=1}^{T}{\mathbf {l}}_{t}^{\top }\nabla ^{2}\varPhi ({\mathbf {z}}_{t}){\mathbf {l}}_{t}\), as the second order regret term. The second order term is non-negative by the concavity of \(\varPhi \). These two terms play a key role in our bounds.
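The decomposition into first and second order terms can be verified exactly in a simple case. The following Python sketch assumes, purely for illustration, the quadratic potential \(\varPhi ({\mathbf {L}})=-(\eta /2)\Vert {\mathbf {L}}\Vert _2^2\) with \({\mathcal {K}}\) the Euclidean unit ball, restricted to loss paths with \(\eta \Vert {\mathbf {L}}_{t}\Vert _2\le 1\) so that the weights \(-\eta {\mathbf {L}}_{t}\) stay in \({\mathcal {K}}\). Its Hessian is the constant matrix \(-\eta I\), so the second order term equals \((\eta /2)\sum _{t}\Vert {\mathbf {l}}_{t}\Vert _2^2\) regardless of the points \({\mathbf {z}}_{t}\). The function name and data are hypothetical.

```python
import math
import random


def quadratic_potential_regret(losses, eta):
    """Return (regret, first_order, second_order) for Phi(L) = -(eta/2) * ||L||^2."""
    n = len(losses[0])
    L = [0.0] * n  # L_0 = 0
    alg_loss = 0.0
    for l in losses:
        x = [-eta * c for c in L]            # x_t = grad Phi(L_{t-1})
        assert sum(c * c for c in x) <= 1.0  # weights must stay in the unit ball K
        alg_loss += sum(a * b for a, b in zip(x, l))
        L = [a + b for a, b in zip(L, l)]
    norm_L = math.sqrt(sum(c * c for c in L))
    regret = alg_loss + norm_L  # min over the unit ball of u . L_T equals -||L_T||
    first_order = -0.5 * eta * norm_L ** 2 + norm_L
    second_order = 0.5 * eta * sum(sum(c * c for c in l) for l in losses)
    return regret, first_order, second_order


# Hypothetical data: small losses so that eta * ||L_t|| <= 1 is guaranteed.
rng = random.Random(1)
losses = [[rng.uniform(-0.1, 0.1) for _ in range(2)] for _ in range(40)]
regret, first_order, second_order = quadratic_potential_regret(losses, eta=0.1)
```

Here `regret` should equal `first_order + second_order` up to rounding, with both terms non-negative.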

3.1 Relation to Regularized Follow the Leader

The class of concave potential algorithms contains the important class of linear cost RFTL algorithms. (For an in-depth discussion of the RFTL algorithm, see Hazan 2011.) Following Hazan (2011), let \({\mathcal {K}}\subset {\mathbb {R}}^{N}\) be compact, non-empty, and convex. \(RFTL(\eta ,{\mathcal {R}})\) is a linear forecaster with two parameters: the learning rate, \(\eta >0\), and a strongly convex and continuously twice-differentiable regularizing function, \({\mathcal {R}}:{\mathcal {K}}\rightarrow {\mathbb {R}}\). \(RFTL(\eta ,{\mathcal {R}})\) determines its weights according to the rule \({\mathbf {x}}_{t+1}=g({\mathbf {L}}_{t})=\arg \min _{{\mathbf {x}}\in {\mathcal {K}}}\{{\mathbf {x}}\cdot {\mathbf {L}}_{t}+{\mathcal {R}}({\mathbf {x}})/\eta \}\).

The next theorem shows that linear RFTL is a concave potential algorithm, with a potential function that is directly related to the convex conjugate of the regularizing function. These are known properties (see, e.g., Lemma 15 in Shalev-Shwartz 2007), and a proof, which uses basic calculus, is given in Appendix 2 for completeness.

Theorem 3

If \({\mathcal {R}}:{\mathcal {K}}\rightarrow {\mathbb {R}}\) is continuous and strongly convex and \(\eta >0\), then \(\varPhi ({\mathbf {L}})=(-1/\eta ){\mathcal {R}}^{*}(-\eta {\mathbf {L}})\) is concave and continuously differentiable on \({\mathbb {R}}^{N}\), and for every \({\mathbf {L}}\in {\mathbb {R}}^{N}\), it holds that \(\nabla \varPhi ({\mathbf {L}})=\arg \min _{{\mathbf {x}}\in {\mathcal {K}}}\{{\mathbf {x}}\cdot {\mathbf {L}}+{\mathcal {R}}({\mathbf {x}})/\eta \}\) and \(\varPhi ({\mathbf {L}})=\min _{{\mathbf {x}}\in {\mathcal {K}}} \{{\mathbf {x}}\cdot {\mathbf {L}}+{\mathcal {R}}({\mathbf {x}})/\eta \}\).
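Theorem 3 can be sanity-checked numerically in a case where the minimizer has a closed form. The sketch below assumes \({\mathcal {K}}\) is the Euclidean unit ball and \({\mathcal {R}}({\mathbf {x}})=\frac{1}{2}\Vert {\mathbf {x}}\Vert _2^2\), for which the minimizer of \({\mathbf {x}}\cdot {\mathbf {L}}+{\mathcal {R}}({\mathbf {x}})/\eta \) is the Euclidean projection of \(-\eta {\mathbf {L}}\) onto the ball. It then compares a central finite-difference gradient of \(\varPhi \) with that minimizer. Function names, test points, and the step size `h` are illustrative assumptions.

```python
import math


def rftl_argmin(L, eta):
    """argmin over the unit ball of x . L + ||x||^2 / (2 * eta): project -eta * L."""
    x = [-eta * c for c in L]
    norm = math.sqrt(sum(c * c for c in x))
    if norm > 1.0:
        x = [c / norm for c in x]
    return x


def potential(L, eta):
    """Phi(L) = min over the unit ball of x . L + ||x||^2 / (2 * eta)."""
    x = rftl_argmin(L, eta)
    return sum(a * b for a, b in zip(x, L)) + sum(a * a for a in x) / (2.0 * eta)


def numerical_gradient(L, eta, h=1e-6):
    """Central finite-difference approximation of grad Phi at L."""
    grad = []
    for i in range(len(L)):
        up = list(L)
        up[i] += h
        down = list(L)
        down[i] -= h
        grad.append((potential(up, eta) - potential(down, eta)) / (2.0 * h))
    return grad


# One point where -eta * L lies inside the ball, and one where it is projected.
inside, outside = [0.3, -0.4], [3.0, -4.0]
x_inside, g_inside = rftl_argmin(inside, 1.0), numerical_gradient(inside, 1.0)
x_outside, g_outside = rftl_argmin(outside, 1.0), numerical_gradient(outside, 1.0)
```

In both regimes the finite-difference gradient should agree with the minimizer to within the discretization error.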

It is now possible to lower bound the regret of \(RFTL(\eta ,{\mathcal {R}})\) by applying the lower bounds of Corollary 1 and Theorem 2.

Theorem 4

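The regret of \(RFTL(\eta ,{\mathcal {R}})\) satisfies

$$\begin{aligned} R_{RFTL(\eta ,{\mathcal {R}}),T}\ge \frac{1}{\eta }\left( {\mathcal {R}}({\mathbf {x}}_{T+1})-{\mathcal {R}}({\mathbf {x}}_{1})\right) +{\mathbf {x}}_{T+1}\cdot {\mathbf {L}}_{T}-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}_{T}\}\ge 0\;, \end{aligned}$$

and if \({\mathcal {R}}^{*}\) is continuously twice-differentiable on \(\text {conv}(\{-\eta {\mathbf {L}}_{0},\ldots ,-\eta {\mathbf {L}}_{T}\})\), then

$$\begin{aligned} R_{RFTL(\eta ,{\mathcal {R}}),T}=\frac{1}{\eta }\left( {\mathcal {R}}({\mathbf {x}}_{T+1})-{\mathcal {R}}({\mathbf {x}}_{1})\right) +{\mathbf {x}}_{T+1}\cdot {\mathbf {L}}_{T}-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}_{T}\}+\frac{\eta }{2}\sum _{t=1}^{T}{\mathbf {l}}_{t}^{\top }\nabla ^{2}{\mathcal {R}}^{*}(-\eta {\mathbf {z}}_{t}){\mathbf {l}}_{t}\;, \end{aligned}$$

where \({\mathbf {z}}_{t}\in [{\mathbf {L}}_{t-1},{\mathbf {L}}_{t}]\).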

Proof

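Let \(\varPhi \) be the potential function of \(RFTL(\eta ,{\mathcal {R}})\) according to Theorem 3. We have that \(\varPhi ({\mathbf {L}}_{t})={\mathbf {x}}_{t+1}\cdot {\mathbf {L}}_{t}+{\mathcal {R}}({\mathbf {x}}_{t+1})/\eta \) and \({\mathbf {x}}_{t+1}=\nabla \varPhi ({\mathbf {L}}_{t})\) for every t. Therefore,

$$\begin{aligned} \varPhi ({\mathbf {L}}_{T})-\varPhi ({\mathbf {L}}_{0})={\mathbf {x}}_{T+1}\cdot {\mathbf {L}}_{T}+\frac{1}{\eta }\left( {\mathcal {R}}({\mathbf {x}}_{T+1})-{\mathcal {R}}({\mathbf {x}}_{1})\right) \;, \end{aligned}$$

where we used the fact that \({\mathbf {L}}_{0}={\mathbf {0}}\). Therefore,

$$\begin{aligned} R_{RFTL(\eta ,{\mathcal {R}}),T}&\ge \varPhi ({\mathbf {L}}_{T})-\varPhi ({\mathbf {L}}_{0})-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}_{T}\}\\&=\frac{1}{\eta }\left( {\mathcal {R}}({\mathbf {x}}_{T+1})-{\mathcal {R}}({\mathbf {x}}_{1})\right) +{\mathbf {x}}_{T+1}\cdot {\mathbf {L}}_{T}-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}_{T}\}\ge 0\;, \end{aligned}$$

where the first inequality is by Corollary 1, and the second inequality is by the fact that \({\mathbf {x}}_1=\arg \min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathcal {R}}({\mathbf {u}})\}\) and \({\mathbf {x}}_{T+1}\in {\mathcal {K}}\). This concludes the first part of the proof.

By Theorem 3, \(\varPhi ({\mathbf {L}})=-(1/\eta ){\mathcal {R}}^{*}(-\eta {\mathbf {L}})\). Thus, \(\varPhi \) is continuously twice-differentiable on \(\text {conv}(\{{\mathbf {L}}_{0},\ldots ,{\mathbf {L}}_{T}\})\), and we have \(\nabla ^{2}\varPhi ({\mathbf {L}})=-\eta \nabla ^{2}{\mathcal {R}}^{*}(-\eta {\mathbf {L}})\). By the first part and by Theorem 2, for some \({\mathbf {z}}_{t}\in [{\mathbf {L}}_{t-1},{\mathbf {L}}_{t}]\),

$$\begin{aligned} R_{RFTL(\eta ,{\mathcal {R}}),T}=\frac{1}{\eta }\left( {\mathcal {R}}({\mathbf {x}}_{T+1})-{\mathcal {R}}({\mathbf {x}}_{1})\right) +{\mathbf {x}}_{T+1}\cdot {\mathbf {L}}_{T}-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}_{T}\}+\frac{\eta }{2}\sum _{t=1}^{T}{\mathbf {l}}_{t}^{\top }\nabla ^{2}{\mathcal {R}}^{*}(-\eta {\mathbf {z}}_{t}){\mathbf {l}}_{t}\;, \end{aligned}$$

concluding the second part of the proof. \(\square \)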

Note that the first order regret term is split into two new non-negative terms, namely, \(\left( {\mathcal {R}}({\mathbf {x}}_{T+1})-{\mathcal {R}}({\mathbf {x}}_{1})\right) /\eta \) and \({\mathbf {x}}_{T+1}\cdot {\mathbf {L}}_{T}-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}_{T}\}\).

The first order regret lower bound given in Theorem 4 may be proved directly by extending the “follow the leader, be the leader” (FTL–BTL) lemma (Kalai and Vempala 2005, see also Hazan 2011). The extension and proof are given in Appendix 3.

4 Strictly positive individual sequence anytime regret

In this section we give lower bounds on the anytime regret for two classes of potentials. These are the class of potentials with negative definite Hessians, and a rich class of best expert potentials that includes RWM.

We start by describing our general argument, which is based on the lower bounds of Theorem 2 and Corollary 1. Note first that these bounds hold for any \(1\le T'\le T\). Now, let C be some convex set on which \(\varPhi \) is continuously twice-differentiable. If \({\mathbf {L}}_{0},\ldots ,{\mathbf {L}}_{T}\in C\), then we may lower bound the regret by the second order term of Theorem 2, and further lower bound that term over any \({\mathbf {L}}_{0},\ldots ,{\mathbf {L}}_{T}\in C\). Otherwise, there is some \(1\le T' \le T\) s.t. \({\mathbf {L}}_{T'}\notin C\), and we may lower bound the regret at time \(T'\) by the first order term, using Corollary 1. We further lower bound that term over any \({\mathbf {L}}_{T'}\notin C\). The minimum of those two lower bounds gives a lower bound on the anytime regret. By choosing C properly, we will be able to prove that these two lower bounds are strictly positive.

We now present our analysis in more detail. Observe that for every \(\eta >0\) and concave potential \(\varPhi :{\mathbb {R}}^{N}\rightarrow {\mathbb {R}}\), the function \(\varPhi _{\eta }( {\mathbf {L}})=(1/\eta )\varPhi (\eta {\mathbf {L}})\) is also concave, with \(\nabla \varPhi _{\eta }({\mathbf {L}})=\nabla \varPhi (\eta {\mathbf {L}})\). Let \(\Vert \cdot \Vert \) be a non-trivial seminorm on \({\mathbb {R}}^{N}\). In addition, let \(a>0\) be such that \(\varPhi \) is continuously twice-differentiable on the set \(\{\Vert {\mathbf {L}}\Vert \le a\}\), and let \({\mathbf {L}}_0={\mathbf {0}}\).

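Suppose algorithm A uses \(\varPhi _{\eta }\) and encounters the loss path \({\mathbf {L}}_{0},\ldots ,{\mathbf {L}}_{T}\). If there is some \(T'\) s.t. \(\Vert {\mathbf {L}}_{T'}\Vert >a/\eta \), then applying Corollary 1 to \(\varPhi _{\eta }\), we get

$$\begin{aligned} R_{A,T'}\ge \varPhi _{\eta }({\mathbf {L}}_{T'})-\varPhi _{\eta }({\mathbf {0}})-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}_{T'}\} =\frac{1}{\eta }\left( \varPhi (\eta {\mathbf {L}}_{T'})-\varPhi ({\mathbf {0}})-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot \eta {\mathbf {L}}_{T'}\}\right) \;. \end{aligned}$$

Defining \(\rho _{1}(a)=\inf {}_{\Vert {\mathbf {L}}\Vert \ge a}\{\varPhi ({\mathbf {L}})-\varPhi ({\mathbf {0}})-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}\}\}\), and noting that \(\Vert \eta {\mathbf {L}}_{T'}\Vert >a\), we have that \(R_{A,T'}\ge \rho _{1}(a)/\eta \).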

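We next assume that \(\Vert {\mathbf {L}}_{t}\Vert \le a/\eta \) for every t. It is easily verified that the set \(\{\Vert {\mathbf {L}}\Vert \le a/\eta \}\) is convex, and since it contains \({\mathbf {L}}_{t}\) for every t, it also contains \(\text {conv}(\{{\mathbf {L}}_{0},\ldots ,{\mathbf {L}}_{T}\})\). This means that \(\varPhi _{\eta }({\mathbf {L}})=(1/\eta )\varPhi (\eta {\mathbf {L}})\) is continuously twice-differentiable on \(\text {conv}(\{{\mathbf {L}}_{0},\ldots ,{\mathbf {L}}_{T}\})\). Applying Theorem 2 to \(\varPhi _{\eta }\) and dropping the non-negative first order term, we have

$$\begin{aligned} R_{A,T}\ge -\frac{1}{2}\sum _{t=1}^{T}{\mathbf {l}}_{t}^{\top }\nabla ^{2}\varPhi _{\eta }({\mathbf {z}}_{t}){\mathbf {l}}_{t} =-\frac{\eta }{2}\sum _{t=1}^{T}{\mathbf {l}}_{t}^{\top }\nabla ^{2}\varPhi (\eta {\mathbf {z}}_{t}){\mathbf {l}}_{t}\;, \end{aligned}$$

where \({\mathbf {z}}_{t}\in [{\mathbf {L}}_{t-1},{\mathbf {L}}_{t}]\). We now define \(\rho _{2}(a)=\inf {}_{\Vert {\mathbf {L}}\Vert \le a,\Vert {\mathbf {l}}\Vert =1}\{-{\mathbf {l}}^{\top }\nabla ^{2}\varPhi ({\mathbf {L}}){\mathbf {l}}\}\). If \(\Vert {\mathbf {l}}_{t}\Vert \ne 0\), then

$$\begin{aligned} -{\mathbf {l}}_{t}^{\top }\nabla ^{2}\varPhi (\eta {\mathbf {z}}_{t}){\mathbf {l}}_{t} =-\Vert {\mathbf {l}}_{t}\Vert ^{2}\left( \frac{{\mathbf {l}}_{t}}{\Vert {\mathbf {l}}_{t}\Vert }\right) ^{\top }\nabla ^{2}\varPhi (\eta {\mathbf {z}}_{t})\left( \frac{{\mathbf {l}}_{t}}{\Vert {\mathbf {l}}_{t}\Vert }\right) \ge \rho _{2}(a)\Vert {\mathbf {l}}_{t}\Vert ^{2}\;, \end{aligned}$$

where we used the fact that \( \Vert \eta {\mathbf {z}}_{t}\Vert \le a\), which holds since \({\mathbf {z}}_{t} \in \text {conv}(\{{\mathbf {L}}_{0},\ldots ,{\mathbf {L}}_{T}\})\). Otherwise, \(-{\mathbf {l}}_{t}^{\top }\nabla ^{2}\varPhi (\eta {\mathbf {z}}_{t}){\mathbf {l}}_{t}\ge 0=\rho _{2}(a)\Vert {\mathbf {l}}_{t}\Vert ^{2}\), so in any case,

$$\begin{aligned} -{\mathbf {l}}_{t}^{\top }\nabla ^{2}\varPhi (\eta {\mathbf {z}}_{t}){\mathbf {l}}_{t}\ge \rho _{2}(a)\Vert {\mathbf {l}}_{t}\Vert ^{2}\;. \end{aligned}$$

Thus, we have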

Lemma 1

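If \(\Vert {\mathbf {L}}_{t}\Vert \le a/\eta \) for every t, then \(R_{A,T}\ge \frac{\eta }{2}\rho _{2}(a)\sum _{t=1}^{T}\Vert {\mathbf {l}}_{t}\Vert ^{2}\). Otherwise, for any t s.t. \(\Vert {\mathbf {L}}_{t}\Vert > a/\eta \), \(R_{A,t}\ge \rho _{1}(a)/\eta \). Therefore,

$$\begin{aligned} \max _{t}\{R_{A,t}\}\ge \min \left\{ \frac{\rho _{1}(a)}{\eta },\frac{\eta }{2}\rho _{2}(a)\sum _{t=1}^{T}\Vert {\mathbf {l}}_{t}\Vert ^{2}\right\} \;. \end{aligned}$$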

Note that \(\rho _{1}(a)\) is non-decreasing, \(\rho _{2}(a)\) is non-increasing, and \(\rho _{1}(a),\rho _{2}(a)\ge 0\). Lemma 1 therefore highlights a trade-off in the choice of a.

It still remains to bound \(\rho _1\) and \(\rho _2\) away from zero, and that will be done in two different ways for the cases of negative definite Hessians and best expert potentials. Nevertheless, the next technical lemma, which is instrumental in bounding \(\rho _1\) away from zero, still holds in general. Essentially, it says that in the definition of \(\rho _1(a)\), it suffices to take the infimum over \(\{\Vert {\mathbf {L}}\Vert =a\}\) instead of \(\{\Vert {\mathbf {L}}\Vert \ge a\}\).

Lemma 2

It holds that \(\rho _{1}(a)=\inf {}_{\Vert {\mathbf {L}}\Vert =a} \{\varPhi ({\mathbf {L}})-\varPhi ({\mathbf {0}})- \min _{{\mathbf {u}}\in {\mathcal {K}}} \{{\mathbf {u}}\cdot {\mathbf {L}}\}\}\).

Proof

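Let \(\Vert {\mathbf {L}}\Vert \ge a\), and define \({\mathbf {L}}'=a{\mathbf {L}}/\Vert {\mathbf {L}}\Vert \), so \(\Vert {\mathbf {L}}'\Vert =a\). Since \(\varPhi \) is concave, we have that \(\varPhi ({\mathbf {L}})\ge \varPhi ({\mathbf {L}}')+ \nabla \varPhi ({\mathbf {L}})\cdot ({\mathbf {L}}-{\mathbf {L}}')\), and thus

$$\begin{aligned} \varPhi ({\mathbf {L}})-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}\}&\ge \varPhi ({\mathbf {L}}')+\nabla \varPhi ({\mathbf {L}})\cdot ({\mathbf {L}}-{\mathbf {L}}')-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}\}\\&=\varPhi ({\mathbf {L}}')-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}'\}+\left( \frac{\Vert {\mathbf {L}}\Vert }{a}-1\right) \left( \nabla \varPhi ({\mathbf {L}})\cdot {\mathbf {L}}'-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}'\}\right) \\&\ge \varPhi ({\mathbf {L}}')-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}'\}\;, \end{aligned}$$

where the equality uses \({\mathbf {L}}=(\Vert {\mathbf {L}}\Vert /a){\mathbf {L}}'\), and the last inequality uses the fact that \(\nabla \varPhi ({\mathbf {L}})\cdot {\mathbf {L}}'-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}'\}\ge 0\). Subtracting \(\varPhi ({\mathbf {0}})\), the result follows. \(\square \)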

We now present the main result of this section, assuming we have shown \(\rho _1,\rho _2>0\). In what follows we write \(Q_{T}=Q_{T}({\mathbf {l}}_{1},\ldots ,{\mathbf {l}}_{T}) =\sum _{t=1}^{T}\Vert {\mathbf {l}}_{t}\Vert ^{2}\) for the generic quadratic variation w.r.t. the seminorm \(\Vert \cdot \Vert \) (not to be confused with specific notions of quadratic variation). We denote by \(Q>0\) a given lower bound on \(Q_{T}\).

Theorem 5

Let \(a>0\) satisfy \(\rho _{1}(a),\rho _{2}(a)>0\).

- (i) For every \(\eta >0\), \(\max _{t}\{R_{A,t}\}\ge \min \{\frac{\rho _{1}(a)}{\eta },\frac{\eta }{2}\rho _{2}(a)Q\}\), and for \(\eta =\sqrt{\frac{2\rho _{1}(a)}{\rho _{2}(a)Q}}\),

$$\begin{aligned} \max _{t}\{R_{A,t}\} \ge \sqrt{\rho _{1}(a)\rho _{2}(a)/2}\cdot \sqrt{Q}\;. \end{aligned}$$

- (ii) If for any sequence with quadratic variation \(Q_{T}\le Q'\) we have \(R_{A,T}\le c\sqrt{Q'}\), then for any such sequence,

$$\begin{aligned} \max _{t}\{R_{A,t}\}\ge \frac{\rho _{1}(a)\rho _{2}(a)}{2c}\cdot \frac{Q_T}{\sqrt{Q'}}\;. \end{aligned}$$

In particular, if \(Q_T=\varTheta (Q')\), then \(\max _{t}\{R_{A,t}\}=\varOmega (\sqrt{Q_T})\).

Proof

(i) By Lemma 1,

$$\begin{aligned} \max _{t}\{R_{A,t}\}&\ge \min \left\{ \frac{\rho _{1}(a)}{\eta },\frac{\eta }{2}\rho _{2}(a)\sum _{t=1}^{T}\Vert {\mathbf {l}}_{t}\Vert ^{2}\right\} \ge \min \left\{ \frac{\rho _{1}(a)}{\eta },\frac{\eta }{2}\rho _{2}(a)Q\right\} \;. \end{aligned}$$Picking \(\eta =\sqrt{\frac{2\rho _{1}(a)}{\rho _{2}(a)Q}}\) implies that \(\frac{\rho _{1}(a)}{\eta }=\frac{\eta }{2}\rho _{2}(a)Q=\sqrt{\frac{1}{2}Q\rho _{1}(a)\rho _{2}(a)}\).

(ii) Given a and \(\eta \), let \(0<\epsilon <a/\eta \) be such that \(T=Q'/\epsilon ^2\) is an integer. In addition, let \({\mathbf {x}}\in {\mathbb {R}}^{N}\) be such that \(\Vert {\mathbf {x}}\Vert =1\). The loss sequence \({\mathbf {l}}_{t}=(-1)^{t+1}\epsilon {\mathbf {x}}\), for \(1\le t\le T\), satisfies \(Q_{T}=\epsilon ^{2}T=Q'\) and \(\Vert {\mathbf {L}}_{t}\Vert =\Vert (1-(-1)^{t})\epsilon {\mathbf {x}}/2\Vert \le \epsilon <a/\eta \) for every t. Therefore,

$$\begin{aligned} c\sqrt{Q'} \ge R_{A,T}\ge \frac{\eta }{2}\rho _{2}(a)\sum _{t=1}^{T}\Vert {\mathbf {l}}_{t}\Vert ^{2}=\frac{\eta }{2}\rho _{2}(a)Q'\;, \end{aligned}$$where the second inequality is by Lemma 1. This implies that \(\eta \le \frac{2c}{\rho _{2}(a)\sqrt{Q'}}\).

On the other hand, let \(T>\frac{a^{2}}{\eta ^{2}Q'}\), define \(\epsilon =\sqrt{Q'/T}\), and consider the loss sequence \({\mathbf {l}}_{t}=\epsilon {\mathbf {x}}\). Then \(Q_{T}=\epsilon ^{2}T=Q'\), and \(\Vert {\mathbf {L}}_{T}\Vert =\epsilon T=\sqrt{Q'T}>a/\eta \). Thus, again using Lemma 1, we have \(c\sqrt{Q'} \ge R_{A,T}\ge \rho _{1}(a)/\eta \), which means that \(\eta \ge \frac{\rho _{1}(a)}{c\sqrt{Q'}}\). Together, we have that \(\frac{\rho _{1}(a)}{c\sqrt{Q'}}\le \eta \le \frac{2c}{\rho _{2}(a)\sqrt{Q'}}\), implying that \(c\ge \sqrt{\rho _{1}(a)\rho _{2}(a)/2}\).Footnote 3 Given any sequence with \(Q_T\le Q'\), we have that \(\frac{\rho _{1}(a)}{\eta }\ge \frac{\rho _{1}(a)\rho _{2}(a)\sqrt{Q'}}{2c}\) and \(\frac{\eta }{2}\rho _{2}(a)Q_T\ge \frac{\rho _{1}(a)\rho _{2}(a)Q_T}{2c\sqrt{Q'}}\), so by Lemma 1, \(\max _{t}\{R_{A,t}\}\ge \frac{\rho _{1}(a)\rho _{2}(a)}{2c}\cdot \frac{Q_T}{\sqrt{Q'}}\), concluding the proof.\(\square \)
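The trade-off in part (i) of Theorem 5 can be illustrated numerically. The following is a minimal sketch, not part of the original analysis: the function names and the values of `rho1`, `rho2`, and `Q` are hypothetical and chosen only for illustration. It evaluates the bound \(\min \{\rho _1/\eta ,(\eta /2)\rho _2 Q\}\) and the learning rate that balances its two terms.

```python
import math

def anytime_regret_lower_bound(rho1, rho2, eta, Q):
    """Evaluate min{rho1/eta, (eta/2)*rho2*Q}, the bound of Theorem 5(i)."""
    return min(rho1 / eta, 0.5 * eta * rho2 * Q)

def balancing_eta(rho1, rho2, Q):
    """Learning rate at which the two terms of the bound coincide."""
    return math.sqrt(2.0 * rho1 / (rho2 * Q))

# Illustrative (assumed) values; they do not come from any specific potential.
rho1, rho2, Q = 0.5, 1.0, 100.0
eta_star = balancing_eta(rho1, rho2, Q)
bound_at_eta_star = anytime_regret_lower_bound(rho1, rho2, eta_star, Q)
```

For any other learning rate one of the two terms is smaller, so the bound is largest at `eta_star`, where it equals \(\sqrt{\rho _1\rho _2/2}\cdot \sqrt{Q}\).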

4.1 Potentials with negative definite Hessians

For this case, we pick \(\Vert \cdot \Vert _{2}\) as our seminorm. Let \(a>0\) and let \(\nabla ^{2}\varPhi ({\mathbf {L}})\prec 0\) for \({\mathbf {L}}\) s.t. \(\Vert {\mathbf {L}}\Vert _{2}\le a\). In this setting, the infima in the definitions of \(\rho _{1}(a)\) and \(\rho _{2}(a)\) are attained, and may thus be replaced with minima, by continuity and the compactness of Euclidean balls and spheres.

Lemma 3

If \(\Vert \cdot \Vert =\Vert \cdot \Vert _{2}\), then \(\rho _{1}(a)=\min _{\Vert {\mathbf {L}}\Vert =a}\{\varPhi ({\mathbf {L}}) -\varPhi ({\mathbf {0}})-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}\}\}\) and \(\rho _{2}(a)=\min _{\Vert {\mathbf {L}}\Vert \le a,\Vert {\mathbf {l}}\Vert =1}\{-{\mathbf {l}}^{\top }\nabla ^{2}\varPhi ({\mathbf {L}}){\mathbf {l}}\}\).

Proof

By Lemma 2, \(\rho _{1}(a)=\inf _{\Vert {\mathbf {L}}\Vert =a}\{\varPhi ({\mathbf {L}})-\varPhi ({\mathbf {0}})-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}\}\}\). The function \(h({\mathbf {L}})=\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}\}\) is continuous on \({\mathbb {R}}^{N}\) (Lemma 10). Therefore, \(\varPhi ({\mathbf {L}})-\varPhi ({\mathbf {0}})-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}\}\) is continuous on the compact set \(\{\Vert {\mathbf {L}}\Vert =a\}\) and attains a minimum there. The second statement follows from the fact that \(-{\mathbf {l}}^{\top }\nabla ^{2}\varPhi ({\mathbf {L}}){\mathbf {l}}\) is a continuous function on the compact set \(\{\Vert {\mathbf {L}}\Vert \le a\}\times \{\Vert {\mathbf {l}}\Vert =1\}\), and attains a minimum there. \(\square \)

By Lemma 3, \(\rho _{2}(a)=\min _{\Vert {\mathbf {L}}\Vert \le a,\Vert {\mathbf {l}}\Vert =1}\{-{\mathbf {l}}^{\top }\nabla ^{2}\varPhi ({\mathbf {L}}){\mathbf {l}}\}>0\), where the inequality is true since the Hessians are negative definite, so we are taking the minimum of positive values. In addition, if \({\mathbf {L}}\ne {\mathbf {0}}\), then \(\varPhi ({\mathbf {L}})-\varPhi ({\mathbf {0}})-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}\}>\nabla \varPhi ({\mathbf {L}})\cdot {\mathbf {L}}-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}\}\ge 0\), since \(\varPhi \) is strictly concave. Thus, again by Lemma 3, \(\rho _{1}(a)=\min _{\Vert {\mathbf {L}}\Vert =a}\{\varPhi ({\mathbf {L}})-\varPhi ({\mathbf {0}})-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}\}\}>0\). The following statement is an immediate consequence of Theorem 5:

Theorem 6

If \(\nabla ^{2}\varPhi ({\mathbf {L}})\prec 0\) for every \({\mathbf {L}}\) s.t. \(\Vert {\mathbf {L}}\Vert _{2}\le a\), for some \(a>0\), then

(i) For every \(\eta >0\), it holds that \(\max _{t}\{R_{A,t}\}\ge \min \{\frac{\rho _{1}(a)}{\eta },\frac{\eta }{2}\rho _{2}(a)Q\}\), and for \(\eta =\sqrt{\frac{2\rho _{1}(a)}{\rho _{2}(a)Q}}\), \(\max _{t}\{R_{A,t}\}\ge \sqrt{\rho _{1}(a)\rho _{2}(a)/2}\cdot \sqrt{Q}\).

(ii) If for any sequence with quadratic variation \(Q_{T}\le Q'\) we have \(R_{A,T}\le c\sqrt{Q'}\), then for any such sequence, \(\max _{t}\{R_{A,t}\}\ge \frac{\rho _{1}(a)\rho _{2}(a)}{2c}\cdot \frac{Q_T}{\sqrt{Q'}}\). In particular, if \(Q_T=\varTheta (Q')\), then \(\max _{t}\{R_{A,t}\}=\varOmega (\sqrt{Q_T})\).

4.2 The best expert setting

In the best expert setting, where \({\mathcal {K}} = \varDelta _{N}\), potentials can never be strictly concave, let alone have negative definite Hessians. To see that, let \({\mathbf {L}}\in {\mathbb {R}}^{N},c\in {\mathbb {R}}\), and define \({\mathbf {L}}'={\mathbf {L}}+c\cdot {\mathbf {1}}\), where \({\mathbf {1}}\) is the all-one vector. We will say that \({\mathbf {L}}'\) is a uniform translation of \({\mathbf {L}}\). Then

$$\begin{aligned} c=\nabla \varPhi ({\mathbf {L}}')\cdot ({\mathbf {L}}'-{\mathbf {L}})\le \varPhi ({\mathbf {L}}')-\varPhi ({\mathbf {L}})\le \nabla \varPhi ({\mathbf {L}})\cdot ({\mathbf {L}}'-{\mathbf {L}})=c\;, \end{aligned}$$

where we use the concavity of \(\varPhi \), the fact that \(\nabla \varPhi \) is a probability vector, and the fact that \({\mathbf {L}}'-{\mathbf {L}}=c\cdot {\mathbf {1}}\). For a strictly concave \(\varPhi \), the above inequalities would be strict if \(c\ne 0\), but instead, they are equalities. Thus, the conditions for strict concavity are not fulfilled at any point \({\mathbf {L}}\).

We will replace the negative definite assumption with the assumption that for every \(i\ne j,\frac{\partial ^{2}\varPhi }{\partial L_{i}\partial L_{j}}>0\). This condition is natural for regret minimization algorithms, because \(\frac{\partial ^{2}\varPhi ({\mathbf {L}})}{\partial L_{i}\partial L_{j}}=\frac{\partial p_{i}({\mathbf {L}})}{\partial L_{j}}\), where \(p_{i}\) is the weight of expert i. Thus, we simply require that an increase in the cumulative loss of expert j results in an increase in the weight of every other expert (and hence a decrease in its own weight). A direct implication of this assumption is that \(\frac{\partial \varPhi ({\mathbf {L}})}{\partial L_{i}}>0\) for every i and \({\mathbf {L}}\). To see that, observe that \(p_{i}({\mathbf {L}})=1-\sum _{j\ne i}p_{j}({\mathbf {L}})\), so \(\frac{\partial p_{i}({\mathbf {L}})}{\partial L_{i}}=-\sum _{j\ne i}\frac{\partial p_{j}({\mathbf {L}})}{\partial L_{i}}<0\). Since \(p_{i}({\mathbf {L}})\ge 0\) and it is strictly decreasing in \(L_{i}\), it follows that \(p_{i}({\mathbf {L}})>0\), or \(\frac{\partial \varPhi ({\mathbf {L}})}{\partial L_{i}}>0\).

Using the above assumption we proceed to bound \(\rho _{1}\) and \(\rho _{2}\) away from zero. We first state some general properties of best expert potentials.

Lemma 4

(i) Every row and column of \(\nabla ^{2}\varPhi \) sum up to zero. (ii) \(\varPhi ({\mathbf {L}})-\varPhi ({\mathbf {0}})-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}\}\) is invariant w.r.t. a uniform translation of \({\mathbf {L}}\). (iii) \({\mathbf {l}}^{\top }\nabla ^{2}\varPhi ({\mathbf {L}}){\mathbf {l}}\) is invariant w.r.t. a uniform translation of either \({\mathbf {l}}\) or \({\mathbf {L}}\).

Proof

(i) Defining \(h({\mathbf {L}})=\sum _{i=1}^{N}\frac{\partial \varPhi ({\mathbf {L}})}{\partial L_{i}}\), we have that \(h\equiv 1\), and thus \(0=\frac{\partial h({\mathbf {L}})}{\partial L_{j}}=\sum _{i=1}^{N}\frac{\partial ^{2}\varPhi ({\mathbf {L}})}{\partial L_{i}\partial L_{j}}\) for every j.

(ii) Let \({\mathbf {L}}'={\mathbf {L}}+c\cdot {\mathbf {1}}\). As already seen, \(\varPhi ({\mathbf {L}}')-\varPhi ({\mathbf {L}})=c\), and we also have that

$$\begin{aligned} \min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}'\}&=\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}+c\cdot {\mathbf {u}}\cdot {\mathbf {1}}\}=\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}\}+c\;. \end{aligned}$$Therefore,

$$\begin{aligned} \varPhi ({\mathbf {L}}')-\varPhi ({\mathbf {0}})-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}'\} =\varPhi ({\mathbf {L}})-\varPhi ({\mathbf {0}})-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}\}\;. \end{aligned}$$

(iii) Note that in this context, \({\mathbf {l}},{\mathbf {L}}\in {\mathbb {R}}^{N}\) are unrelated vectors.

$$\begin{aligned} ({\mathbf {l}}+c{\mathbf {1}})^{\top }\nabla ^{2}\varPhi ({\mathbf {L}})({\mathbf {l}}+c{\mathbf {1}})&={\mathbf {l}}{}^{\top }\nabla ^{2}\varPhi ({\mathbf {L}}){\mathbf {l}}+c{\mathbf {1}}{}^{\top }\nabla ^{2}\varPhi ({\mathbf {L}})({\mathbf {l}}+c{\mathbf {1}})+{\mathbf {l}}{}^{\top }\nabla ^{2}\varPhi ({\mathbf {L}})(c{\mathbf {1}})\\&={\mathbf {l}}{}^{\top }\nabla ^{2}\varPhi ({\mathbf {L}}){\mathbf {l}}\;, \end{aligned}$$where we have that \({\mathbf {1}}{}^{\top }\nabla ^{2}\varPhi ({\mathbf {L}})={\mathbf {0}}^{\top }\) and \(\nabla ^{2}\varPhi ({\mathbf {L}}){\mathbf {1}}={\mathbf {0}}\) by (i). To show the invariance for \({\mathbf {L}}\), recall that for \({\mathbf {L}}'={\mathbf {L}}+c\cdot {\mathbf {1}},\) \(\varPhi ({\mathbf {L}}')-\varPhi ({\mathbf {L}})=c\), and thus, \(\nabla ^{2}\varPhi ({\mathbf {L}}')=\nabla ^{2}\varPhi ({\mathbf {L}})\). \(\square \)

We now consider \(\rho _{1}(a)\) and \(\rho _{2}(a)\) where we use the seminorm \(\Vert {\mathbf {v}}\Vert =\delta ({\mathbf {v}})\). (The fact that \(\delta ({\mathbf {v}})=\max _{i}\{v_{i}\}-\min _{i}\{v_{i}\}\) is a seminorm is proved in Lemma 11 in Appendix 1.) Under this seminorm, \(\sum _{t=1}^{T}\Vert {\mathbf {l}}_{t}\Vert ^{2}\) becomes \(q_{T}\), the relative quadratic variation. Note that \(\delta ({\mathbf {v}})\) is invariant to uniform translation. In particular, for every \({\mathbf {v}}\in {\mathbb {R}}^{N}\) we may consider its “normalized” version, \(\hat{{\mathbf {v}}}={\mathbf {v}}-\min _i\{v_i\}\cdot {\mathbf {1}}\). We have that \(\delta (\hat{{\mathbf {v}}})=\delta ({\mathbf {v}}), \hat{{\mathbf {v}}}\in [0,\delta ({\mathbf {v}})]^{N}\), and there exist entries i and j s.t. \(\hat{{\mathbf {v}}}_{i}=0\) and \(\hat{{\mathbf {v}}}_{j}=\delta ({\mathbf {v}})\). Writing \({\mathcal {{N}}}(a)\) for the set of normalized vectors with seminorm a, we thus have that \({\mathcal {{N}}}(a)=\{{\mathbf {v}}\in [0,a]^{N}:\exists i,j \text { s.t. } v_i=a,\; v_j=0\}\). The set \({\mathcal {N}}(a)\) is bounded and also closed, as a finite union and intersection of closed sets.
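As a small illustration of the seminorm \(\delta \) and of normalization, the following sketch (with hypothetical helper names `delta`, `normalize`, and `relative_quadratic_variation`, and arbitrary example vectors) computes \(\delta ({\mathbf {v}})\), the normalized vector \(\hat{{\mathbf {v}}}\), and \(q_T\) for a list of single-period loss vectors.

```python
def delta(v):
    """Seminorm delta(v) = max_i v_i - min_i v_i."""
    return max(v) - min(v)

def normalize(v):
    """Uniformly translate v so that its smallest entry is 0."""
    m = min(v)
    return [x - m for x in v]

def relative_quadratic_variation(losses):
    """q_T: the sum of delta(l_t)^2 over the single-period loss vectors."""
    return sum(delta(l) ** 2 for l in losses)

v = [3.0, 1.0, 2.5]          # arbitrary example vector
v_hat = normalize(v)         # lies in [0, delta(v)]^N, with entries 0 and delta(v)
```

The normalized vector has the same seminorm as the original one, which is the invariance the subsequent lemmas rely on.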

Using invariance to uniform translation, we can now show that the infima in the expressions for \(\rho _1\) and \(\rho _2\) may be taken over compact sets, and thus be replaced with minima. Using the requirement that \(\frac{\partial ^{2}\varPhi }{\partial L_{i}\partial L_{j}}>0\) for every \(i\ne j\), we can then show that the expressions inside the minima are positive. This is summarized in the next lemma.

Lemma 5

For the best expert setting, it holds that \(\rho _{1}(a)=\min {}_{{\mathbf {L}}\in {\mathcal {N}}(a)}\{\varPhi ({\mathbf {L}})\}-\varPhi ({\mathbf {0}})>0\) and \(\rho _{2}(a)=\min {}_{{\mathbf {L}}\in [0,a]^N,{\mathbf {l}}\in {\mathcal {N}}(1)}\{-{\mathbf {l}}^{\top }\nabla ^{2}\varPhi ({\mathbf {L}}){\mathbf {l}}\}>0\).

Proof

We start with \(\rho _1(a)\). By Lemma 2, we have that \(\rho _{1}(a)=\inf {}_{\Vert {\mathbf {L\Vert }}=a}\{\varPhi ({\mathbf {L}})-\varPhi ({\mathbf {0}})-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}\}\}\). By part (ii) of Lemma 4, \(\varPhi ({\mathbf {L}})-\varPhi ({\mathbf {0}})-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}\}\) is invariant to uniform translation, so \(\rho _{1}(a)=\inf {}_{{\mathbf {L}}\in {\mathcal {N}}(a)}\{\varPhi ({\mathbf {L}})-\varPhi ({\mathbf {0}})-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}\}\}\). For every normalized vector \({\mathbf {L}}\), \(\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}\}=0\), by placing all the weight of \({\mathbf {u}}\) on the zero entry of \({\mathbf {L}}\). In addition, as already observed, \(\varPhi ({\mathbf {L}})-\varPhi ({\mathbf {0}})-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}\}\) is continuous, and by the compactness of \({\mathcal {N}}(a),\rho _{1}(a)=\min {}_{{\mathbf {L}}\in {\mathcal {N}}(a)}\{\varPhi ({\mathbf {L}})-\varPhi ({\mathbf {0}})-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}\}\}=\min {}_{{\mathbf {L}}\in {\mathcal {N}}(a)}\{\varPhi ({\mathbf {L}})-\varPhi ({\mathbf {0}})\}\). Now, \(\varPhi ({\mathbf {L}})-\varPhi ({\mathbf {0}})\ge \nabla \varPhi ({\mathbf {L}})\cdot {\mathbf {L=}}\sum _{i=1}^{N}p_{i}({\mathbf {L}})L_{i}\) by the concavity of \(\varPhi \). Recall that \(p_{i}({\mathbf {L}})>0\), and for \({\mathbf {L}}\in {\mathcal {N}}(a)\), we have that \(L_{i}\ge 0\) for every i, and there is at least one index with \(L_{i}>0\). Thus, \(\varPhi ({\mathbf {L}})-\varPhi ({\mathbf {0}})>0\), proving the statement for \(\rho _1\).

We now move on to \(\rho _2\). By Lemma 4, \(-{\mathbf {l}}^{\top }\nabla ^{2}\varPhi ({\mathbf {L}}){\mathbf {l}}\) is invariant to a uniform translation of either \({\mathbf {l}}\) or \({\mathbf {L}}\). Therefore, \(\rho _{2}(a)=\inf {}_{{\mathbf {L}}\in [0,a]^N,{\mathbf {l}}\in {\mathcal {N}}(1)}\{-{\mathbf {l}}^{\top }\nabla ^{2}\varPhi ({\mathbf {L}}){\mathbf {l}}\}\). Since \(-{\mathbf {l}}^{\top }\nabla ^{2}\varPhi ({\mathbf {L}}){\mathbf {l}}\) is continuous on the compact set \([0,a]^N\times {\mathcal {N}}(1),\rho _{2}(a)=\min {}_{{\mathbf {L}}\in [0,a]^N,{\mathbf {l}}\in {\mathcal {N}}(1)}\{-{\mathbf {l}}^{\top }\nabla ^{2}\varPhi ({\mathbf {L}}){\mathbf {l}}\}\). To show that \(\rho _{2}(a)>0\), it therefore suffices to show that \(-{\mathbf {l}}^{\top }\nabla ^{2}\varPhi ({\mathbf {L}}){\mathbf {l}}>0\) for every \({\mathbf {L}}\in [0,a]^N\) and \({\mathbf {l}}\in {\mathcal {N}}(1)\). Fix \({\mathbf {L}}\), and denote \(A=-\nabla ^{2}\varPhi ({\mathbf {L}})\). For any \({\mathbf {l}}\in {\mathcal {N}}(1)\), one entry is 1, another is 0, and the rest are in [0, 1]. If \(N=2\), we have that \(-{\mathbf {l}}^{\top }\nabla ^{2}\varPhi ({\mathbf {L}}){\mathbf {l}}\) is either \(-\nabla ^{2}\varPhi ({\mathbf {L}})_{1,1}\) or \(-\nabla ^{2}\varPhi ({\mathbf {L}})_{2,2}\), and in both cases positive. We next assume \(N\ge 3\) and minimize \(f({\mathbf {l}}){\mathbf {=l}}^{\top }A{\mathbf {l=}}\sum _{i,j}a_{i,j}l_{i}l_{j}\) for \(l_{k}\in [0,1],k\ge 3\), where \(l_{1}=1,l_{2}=0\). For every k,

$$\begin{aligned} \frac{\partial f}{\partial l_{k}}=2\sum _{i=1}^{N}a_{i,k}l_{i}=2a_{k,k}l_{k}+2\sum _{i\ne k}a_{i,k}l_{i}=2\sum _{i\ne k}a_{i,k}(l_{i}-l_{k})\;, \end{aligned}$$

where the last equality is by part (i) of Lemma 4. Note that \(a_{i,k}<0\) if \(i\ne k\) and \(a_{k,k}>0\). Thus, for \(l_{k}=0,\frac{\partial f}{\partial l_{k}}<0\), and for \(l_{k}=1,\frac{\partial f}{\partial l_{k}}>0\), so by the linearity of \(\frac{\partial f}{\partial l_{k}}\), there is \(l_{k}^{*}\in [0,1]\) satisfying \(\frac{\partial f}{\partial l_{k}}=0\), and it is a minimizing choice for f. Thus, at a minimum point, it holds that \(\frac{\partial f}{\partial l_{k}}=0\), or \(\sum _{i\ne k}a_{i,k}l_{i}=-a_{k,k}l_{k}\) for every \(k\ge 3\). We have that

$$\begin{aligned} f({\mathbf {l}})=\sum _{k=1}^{N}l_{k}\sum _{i=1}^{N}a_{i,k}l_{i}=\sum _{i=1}^{N}a_{i,1}l_{i}=a_{1,1}+\sum _{i\ge 3}a_{i,1}l_{i}\ge a_{1,1}+\sum _{i\ge 3}a_{i,1}=-a_{2,1}\;. \end{aligned}$$

Here the second equality holds because \(l_{1}=1\), \(l_{2}=0\), and the inner sum vanishes for every \(k\ge 3\); the inequality holds because \(a_{i,1}<0\) and \(l_{i}\le 1\) for \(i\ge 3\); and the last equality is by part (i) of Lemma 4.

Since our choice of \(l_{1}=1\) and \(l_{2}=0\) was arbitrary, we have that \(f({\mathbf {l}})\ge \min _{i\ne j}\{-a_{i,j}\}>0\). Thus, \(-{\mathbf {l}}^{\top }\nabla ^{2}\varPhi ({\mathbf {L}}){\mathbf {l}}>0\), completing the proof. \(\square \)

We can now apply Theorem 5 to the best expert setting.

Theorem 7

If \(\frac{\partial ^{2}\varPhi }{\partial L_{i}\partial L_{j}}>0\) for every \(i\ne j\) and every \({\mathbf {L}}\) s.t. \(\delta ({\mathbf {L}})\le a\), then

-

(i)

For every \(\eta >0\), it holds that \(\max _{t}\{R_{A,t}\}\ge \min \{\frac{\rho _{1}(a)}{\eta },\frac{\eta }{2}\rho _{2}(a)q\}\), and for \(\eta =\sqrt{\frac{2\rho _{1}(a)}{\rho _{2}(a)q}},\max _{t}\{R_{A,t}\} \ge \sqrt{\rho _{1}(a)\rho _{2}(a)/2}\cdot \sqrt{q}\).

-

(ii)

If for any sequence with relative quadratic variation \(q_{T}\le q'\) we have \(R_{A,T}\le c\sqrt{q'}\), then for any such sequence, \(\max _{t}\{R_{A,t}\}\ge \frac{\rho _{1}(a)\rho _{2}(a)}{2c}\cdot \frac{q_T}{\sqrt{q'}}\). In particular, if \(q_T=\varTheta (q')\), then \(\max _{t}\{R_{A,t}\}=\varOmega (\sqrt{q_T})\).

5 Application to specific regret minimization algorithms

5.1 Online Gradient Descent with linear costs

In this subsection, we deal with the Lazy Projection variant of the OGD algorithm (Zinkevich 2003) with a fixed learning rate \(\eta \) and linear costs. In this setting, for each t, OGD selects a weight vector according to the rule \({\mathbf {x}}_{t+1}=\arg \min _{{\mathbf {x}}\in {\mathcal {K}}} \{\Vert {\mathbf {x}}+\eta {\mathbf {L}}_{t}\Vert _2\}\), where \({\mathcal {K}}\subset {\mathbb {R}}^N\) is compact and convex. As observed in Hazan and Kale (2010) and Hazan (2011), this algorithm is equivalent to \(RFTL(\eta ,{\mathcal {R}})\), where \({\mathcal {R}}({\mathbf {x}})=\frac{1}{2}\Vert {\mathbf {x}}\Vert _{2}^{2}\), namely, setting \({\mathbf {x}}_{t+1}=\arg \min _{{\mathbf {x}} \in {\mathcal {K}}}\{{\mathbf {x}}\cdot {\mathbf {L}}_{t}+(1/2\eta ) \Vert {\mathbf {x}}\Vert _{2}^{2}\}\). In what follows we will make the assumption that \({\mathcal {K}}\supseteq B({\mathbf {0}},a)\), where \(B({\mathbf {0}},a)\) is the closed ball with radius a centered at \({\mathbf {0}}\), for some \(a>0\).

Note that solving the above minimization problem without the restriction \({\mathbf {x}}\in {\mathcal {K}}\) yields \({\mathbf {x}}'_{t+1}=-\eta {\mathbf {L}}_t\). However, if \(\Vert {\mathbf {L}}_t\Vert _2\le a/\eta \), then \({\mathbf {x}}'_{t+1}\in {\mathcal {K}}\), and then, in fact, \({\mathbf {x}}_{t+1}=-\eta {\mathbf {L}}_t\). By Theorem 3,

Thus, if \(\Vert {\mathbf {L}}\Vert _2\le a\), then \(\varPhi ({\mathbf {L}})=-(1/2)\Vert {\mathbf {L}}\Vert ^2_2\) and also \(\nabla ^2\varPhi ({\mathbf {L}})=-I\), where I is the identity matrix. By Lemma 3,

$$\begin{aligned} \rho _{1}(a)=\min _{\Vert {\mathbf {L}}\Vert _{2}=a}\{\varPhi ({\mathbf {L}})-\varPhi ({\mathbf {0}})-\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}\}\}\ge \min _{\Vert {\mathbf {L}}\Vert _{2}=a}\left\{ -\frac{1}{2}\Vert {\mathbf {L}}\Vert _{2}^{2}+\Vert {\mathbf {L}}\Vert _{2}^{2}\right\} =\frac{a^{2}}{2}\;, \end{aligned}$$

where we used the fact that \(-{\mathbf {L}}\in {\mathcal {K}}\) if \(\Vert {\mathbf {L}}\Vert _{2}=a\). In addition, by Lemma 3,

$$\begin{aligned} \rho _{2}(a)=\min _{\Vert {\mathbf {L}}\Vert _{2}\le a,\Vert {\mathbf {l}}\Vert _{2}=1}\{-{\mathbf {l}}^{\top }\nabla ^{2}\varPhi ({\mathbf {L}}){\mathbf {l}}\}=\min _{\Vert {\mathbf {l}}\Vert _{2}=1}\{{\mathbf {l}}^{\top }I{\mathbf {l}}\}=1\;. \end{aligned}$$

By Theorem 6, we have that \(\max _{t}\{R_{A,t}\}\ge \min \{a^2/(2\eta ),(\eta /2)Q\}\), and for \(\eta =\frac{a}{\sqrt{Q}},\max _{t}\{R_{A,t}\}\ge \frac{a}{2}\sqrt{Q}\).
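The bound above can be checked on concrete sequences. The following sketch simulates lazy-projection OGD under the assumption that \({\mathcal {K}}\) is exactly the ball \(B({\mathbf {0}},a)\) (the text only requires \({\mathcal {K}}\supseteq B({\mathbf {0}},a)\)), with regret measured against the best fixed point of \({\mathcal {K}}\). The function names, the parameter values, and the two test sequences are illustrative choices, not taken from the original.

```python
import math

def lazy_ogd_max_regret(losses, eta, a):
    """Maximal regret over time of lazy-projection OGD on K = B(0, a)."""
    n = len(losses[0])
    L = [0.0] * n                  # cumulative loss vector L_t
    alg_loss = 0.0
    max_regret = -math.inf
    for l in losses:
        # Unconstrained minimizer -eta * L, projected onto the ball of radius a.
        x = [-eta * Li for Li in L]
        norm = math.sqrt(sum(xi * xi for xi in x))
        if norm > a:
            x = [xi * a / norm for xi in x]
        alg_loss += sum(xi * li for xi, li in zip(x, l))
        L = [Li + li for Li, li in zip(L, l)]
        # The best fixed point in the ball is -a * L / ||L||, with loss -a * ||L||.
        best = -a * math.sqrt(sum(Li * Li for Li in L))
        max_regret = max(max_regret, alg_loss - best)
    return max_regret

def ogd_lower_bound(losses, eta, a):
    """min{a^2/(2*eta), (eta/2)*Q_T} with Q_T the Euclidean quadratic variation."""
    Q = sum(sum(li * li for li in l) for l in losses)
    return min(a * a / (2.0 * eta), 0.5 * eta * Q)

eps, T, eta, a = 0.1, 100, 0.5, 1.0
# Index t runs from 0 here, so (-1)**t matches (-1)^{t+1} for t = 1, ..., T.
alternating = [[(-1) ** t * eps, 0.0] for t in range(T)]
constant = [[eps, 0.0] for _ in range(T)]
```

On the alternating sequence the cumulative loss stays small and the regret grows like \((\eta /2)Q_T\), while on the constant sequence the cumulative loss leaves the ball of radius \(a/\eta \) and the regret exceeds \(a^2/(2\eta )\).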

5.2 Randomized Weighted Majority

RWM is the most notable regret minimization algorithm for the expert setting. We have \({\mathcal {K}}=\varDelta _{N}\), and the algorithm gives a weight \(p_{i,t+1}=\frac{p_{i,0}e^{-\eta L_{i,t}}}{\sum _{j=1}^{N}p_{j,0}e^{-\eta L_{j,t}}}\) to expert i at time \(t+1\), where the initial weights \(p_{i,0}\) and the learning rate \(\eta \) are parameters.

It is easy to see that for the potential \(\varPhi _{\eta }({\mathbf {L}})=-(1/\eta )\ln (\sum _{i=1}^{N}p{}_{i,0}e^{-\eta L_{i}})\), we have that \({\mathbf {{p}}}=(p_{1},\ldots ,p_{N})=\nabla \varPhi _{\eta }({\mathbf {L}})\). The Hessian \(\nabla ^2\varPhi _\eta \) has the following simple form:

Lemma 6

Let \({\mathbf {L}}\in {\mathbb {R}}^{N}\) and denote \({\mathbf {{p}}}=\nabla \varPhi _\eta ({\mathbf {L}})\). Then \(\nabla ^{2}\varPhi _{\eta }({\mathbf {L}})=\eta \cdot ({\mathbf {p}}{\mathbf {p}}^{\top }-diag({\mathbf {{p}}}))\preceq 0 \), where \(diag({\mathbf {{p}}})\) is the diagonal matrix with \({\mathbf {{p}}}\) as its diagonal.

The proof is given in Appendix 2. We will assume \(p_{1,0}=\ldots =p_{N,0}=1/N\), and write \(RWM(\eta )\) for RWM with parameters \(\eta \) and the uniform distribution. Thus, \(\varPhi ({\mathbf {L}})=-\ln ((1/N)\sum _{i=1}^{N}e^{-L_{i}})\), and we have by Lemma 6 that \(\frac{\partial ^{2}\varPhi }{\partial L_{i}\partial L_{j}}>0\) for every \(i\ne j\). Therefore, by Lemma 5, \(\rho _1,\rho _2>0\). We now need to calculate \(\rho _1\) and \(\rho _2\). This is straightforward in the case of \(\rho _1\), but for \(\rho _2\) we give the value only for \(N=2\).
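The properties of the RWM potential used above can be verified numerically. The sketch below is illustrative only: the function names and the example vector `L` are assumptions, and the initial weights are taken to be uniform. It computes the weights, the potential, and the Hessian in the form given by Lemma 6.

```python
import math

def rwm_weights(L, eta=1.0):
    """RWM weights with uniform initial weights (softmax of -eta * L)."""
    m = min(L)  # shifting by a constant leaves the weights unchanged
    w = [math.exp(-eta * (Li - m)) for Li in L]
    s = sum(w)
    return [wi / s for wi in w]

def potential(L, eta=1.0):
    """Phi_eta(L) = -(1/eta) * ln((1/N) * sum_i exp(-eta * L_i))."""
    N = len(L)
    return -(1.0 / eta) * math.log(sum(math.exp(-eta * Li) for Li in L) / N)

def hessian(L, eta=1.0):
    """Hessian in the form eta * (p p^T - diag(p)) stated in Lemma 6."""
    p = rwm_weights(L, eta)
    N = len(p)
    return [[eta * (p[i] * p[j] - (p[i] if i == j else 0.0)) for j in range(N)]
            for i in range(N)]

L = [0.3, 1.1, 0.0]   # arbitrary cumulative losses
p = rwm_weights(L)
H = hessian(L)
```

In this example the off-diagonal Hessian entries are positive and every row sums to zero, in line with Lemma 4(i) and the assumption of Sect. 4.2.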

Lemma 7

For any \(N\ge 2,\rho _1(a)=\ln \frac{N}{N-1+e^{-a}}\). For \(N=2\), it holds that \(\rho _2(a)=\left( e^{a/2}+e^{-a/2}\right) ^{-2}\).

Proof

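By Lemma 5,

$$\begin{aligned} \rho _{1}(a)=\min _{{\mathbf {L}}\in {\mathcal {N}}(a)}\{\varPhi ({\mathbf {L}})\}-\varPhi ({\mathbf {0}})=\min _{{\mathbf {L}}\in {\mathcal {N}}(a)}\left\{ -\ln \left( \frac{1}{N}\sum _{i=1}^{N}e^{-L_{i}}\right) \right\} =\ln \frac{N}{N-1+e^{-a}}\;, \end{aligned}$$

since \(\varPhi ({\mathbf {0}})=0\) and \(\sum _{i=1}^{N}e^{-L_{i}}\) is maximized over \({\mathcal {N}}(a)\) when exactly one entry of \({\mathbf {L}}\) is a and the rest are 0.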

In addition, \(\rho _{2}(a)=\min {}_{{\mathbf {L}}\in [0,a]^N,{\mathbf {l}}\in {\mathcal {N}}(1)}\{-{\mathbf {l}}^{\top }\nabla ^{2}\varPhi ({\mathbf {L}}){\mathbf {l}}\}>0\). For \(N=2\), we have \({\mathcal {N}}(1)=\{(1,0),(0,1)\}\). Therefore, \(-{\mathbf {l}}^{\top }\nabla ^{2}\varPhi ({\mathbf {L}}){\mathbf {l}}\) is either \(-\nabla ^{2}\varPhi ({\mathbf {L}})_{1,1}\) or \(-\nabla ^{2}\varPhi ({\mathbf {L}})_{2,2}\). Denoting \({\mathbf {p}}={\mathbf {p}}({\mathbf {L}})=\nabla \varPhi ({\mathbf {L}})\), we have that \(-\nabla ^{2}\varPhi ({\mathbf {L}})_{1,1}=p_{1}-p_{1}^2\) and \(-\nabla ^{2}\varPhi ({\mathbf {L}})_{2,2}=p_{2}-p_{2}^2\) by Lemma 6. Since \(p_1=1-p_2\), we have \(p_{1}-p_{1}^2=p_{2}-p_{2}^2\). Now,

$$\begin{aligned} p_{1}-p_{1}^{2}=p_{1}p_{2}=\frac{e^{-L_{1}}e^{-L_{2}}}{(e^{-L_{1}}+e^{-L_{2}})^{2}}=\left( e^{(L_{1}-L_{2})/2}+e^{-(L_{1}-L_{2})/2}\right) ^{-2}\;. \end{aligned}$$

The function \((e^x+e^{-x})^{-2}\) is decreasing for \(x\ge 0\), so the smallest value is attained for \(|L_1-L_2|=a\). Thus, for \(N=2,\rho _{2}(a)=(e^{a/2}+e^{-a/2})^{-2}\). \(\square \)
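For concreteness, the closed forms of Lemma 7 can be evaluated directly. The following sketch uses hypothetical function names and evaluates \(\rho _1\), \(\rho _2\) (for \(N=2\)), and the resulting constant \(\sqrt{\rho _1(a)\rho _2(a)/2}\) of Theorem 7(i) at \(a=1.2\).

```python
import math

def rho1(a, N):
    """rho_1(a) = ln(N / (N - 1 + exp(-a))) for RWM with uniform initial weights."""
    return math.log(N / (N - 1 + math.exp(-a)))

def rho2_two_experts(a):
    """rho_2(a) = (exp(a/2) + exp(-a/2))^(-2), valid for N = 2 only."""
    return (math.exp(a / 2) + math.exp(-a / 2)) ** -2

a = 1.2
# Multiplier of sqrt(q) in the lower bound; roughly 0.1955 for N = 2.
constant = math.sqrt(rho1(a, 2) * rho2_two_experts(a) / 2)
```

Since \(\rho _1\) increases with a while \(\rho _2\) decreases, the constant reflects the trade-off in the choice of a noted after Lemma 1.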

Picking \(a=1.2\), we have by Theorem 7 that

Theorem 8

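For \(N=2\), there exists \(\eta \) s.t.

$$\begin{aligned} \max _{t}\{R_{{RWM}(\eta ),t}\}\ge \sqrt{\rho _{1}(1.2)\rho _{2}(1.2)/2}\cdot \sqrt{q}\ge 0.195\sqrt{q}\;. \end{aligned}$$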

For a general N we may still lower bound \(\max _{t}\{R_{{RWM}(\eta ),t}\}\) by providing a lower bound for \(\rho _{2}(a)\). We use the fact that the term \(-{\mathbf {l}}^{\top }\nabla ^{2}\varPhi ({\mathbf {L}}){\mathbf {l}}\), which is minimized in the definition of \(\rho _{2}(a)\), may be interpreted as the variance of a certain discrete and bounded random variable. Thus, the key element that will be used is a general lower bound on the variance of such variables. This bound, possibly of separate interest, is given in the following lemma, whose proof can be found in Appendix 2.

Lemma 8

Let \(x_{1}<\ldots <x_{N}\in {\mathbb {R}},0<p_{1},\ldots ,p_{N}<1\), and \(\sum _{k=1}^{N}p_{k}=1\), and let X be a random variable that obtains the value \(x_{k}\) with probability \(p_{k}\), for every \(1\le k\le N\). Then for every \(1\le i<j\le N,Var(X)\ge \frac{p_{i}p_{j}}{p_{i}+p_{j}}\cdot (x_{i}-x_{j})^{2}\), and equality is attained iff \(N=2\), or \(N=3\) with \(i=1,j=3\), and \(x_{2}=\frac{p_{1}x_{1}+p_{3}x_{3}}{p_{1}+p_{3}}\). In particular, \(Var(X)\ge \frac{p_{1}p_{N}}{p_{1}+p_{N}}\cdot (x_{N}-x_{1})^{2} \ge \frac{1}{2}\min _{k}\{p_{k}\}(x_{N}-x_{1})^{2}\).

We comment that for the special case \(p_{1}=\ldots =p_{N}=1/N\), Lemma 8 yields the inequality \(Var(X)\ge (x_{N}-x_{1})^{2}/(2N)\), sometimes referred to as the Szőkefalvi Nagy inequality (von Szökefalvi Nagy 1918).
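The inequality of Lemma 8 is easy to test on small examples. The sketch below uses hypothetical helper names and arbitrary example distributions. It compares the variance of a discrete random variable with the pairwise lower bound, including the \(N=3\) equality case described in the lemma.

```python
def variance(xs, ps):
    """Variance of a random variable taking value xs[k] with probability ps[k]."""
    mean = sum(p * x for x, p in zip(xs, ps))
    return sum(p * (x - mean) ** 2 for x, p in zip(xs, ps))

def pair_bound(xs, ps, i, j):
    """Lower bound p_i p_j / (p_i + p_j) * (x_i - x_j)^2 from Lemma 8."""
    return ps[i] * ps[j] / (ps[i] + ps[j]) * (xs[i] - xs[j]) ** 2

ps = [0.2, 0.5, 0.3]
xs_generic = [0.0, 0.4, 1.0]
# Middle value set to (p_1 x_1 + p_3 x_3) / (p_1 + p_3), the equality case for i=1, j=3.
xs_equality = [0.0, 0.6, 1.0]
```

In the generic example the variance strictly exceeds the bound for the extreme pair, while in the second example the two coincide.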

We now proceed to lower bound \(\rho _2(a)\) for any N.

Lemma 9

For any \(N\ge 2\), it holds that \(\rho _2(a)\ge \frac{1}{2(N-1)e^{a}+2}\).

Proof

Recall that \(\rho _{2}(a)=\min {}_{{\mathbf {L}}\in [0,a]^N,{\mathbf {l}}\in {\mathcal {N}}(1)}\{-{\mathbf {l}}^{\top }\nabla ^{2}\varPhi ({\mathbf {L}}){\mathbf {l}}\}\). By Lemma 6, \(\nabla ^{2}\varPhi ({\mathbf {L}})={\mathbf {p}}{\mathbf {p}}^{\top }-diag({\mathbf {{p}}})\), where \({\mathbf {{p}}}={\mathbf {p}}({\mathbf {L}})=\nabla \varPhi ({\mathbf {L}})\), and thus,

$$\begin{aligned} -{\mathbf {l}}^{\top }\nabla ^{2}\varPhi ({\mathbf {L}}){\mathbf {l}}={\mathbf {l}}^{\top }diag({\mathbf {p}}){\mathbf {l}}-({\mathbf {p}}\cdot {\mathbf {l}})^{2}=\sum _{i=1}^{N}p_{i}l_{i}^{2}-\left( \sum _{i=1}^{N}p_{i}l_{i}\right) ^{2}\;. \end{aligned}$$

Assume for now that \(l_1,\ldots ,l_N\) are distinct. Then \(-{\mathbf {l}}^{\top }\nabla ^{2}\varPhi ({\mathbf {L}}){\mathbf {l}} = Var(X)\), where X is a random variable obtaining the value \(l_i\) with probability \(p_i,i=1,\ldots ,N\). By Lemma 8, \(Var(X)\ge \frac{1}{2}\min _{k}\{p_{k}\}(\max _i\{l_i\}-\min _i\{l_{i}\})^{2}\). For \({\mathbf {l}}\in {\mathcal {N}}(1)\) it holds that \(\max _i\{l_i\}=1\) and \(\min _i\{l_{i}\}=0\). In addition, for \({\mathbf {L}}\in [0,a]^N\), the smallest value for \(\min _{k}\{p_{k}\}\) is obtained when one entry of \({\mathbf {L}}\) is a and the rest are 0, yielding that \(\min _{k}\{p_{k}\}\ge \frac{e^{-a}}{N-1+e^{-a}}\). Together we have that \(-{\mathbf {l}}^{\top }\nabla ^{2}\varPhi ({\mathbf {L}}){\mathbf {l}} \ge \frac{1}{2}\cdot \frac{e^{-a}}{N-1+e^{-a}}=\frac{1}{2(N-1)e^{a}+2}\). Since the expression \(-{\mathbf {l}}^{\top }\nabla ^{2}\varPhi ({\mathbf {L}}){\mathbf {l}}\) is continuous in \({\mathbf {l}}\), this inequality holds even if the assumption that the entries of \({\mathbf {l}}\) are distinct numbers is dropped. The result follows.\(\square \)

Theorem 9

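For any \(0<\alpha <1/N\) and \(\eta >0\), it holds that

$$\begin{aligned} \max _{t}\{R_{{RWM}(\eta ),t}\}\ge \min \left\{ \frac{1}{\eta }\ln \frac{N(1-\alpha )}{N-1},\frac{1}{4}\eta \alpha q\right\} \;, \end{aligned}$$

and for \(\alpha =1/(2N)\) and \(\eta =\sqrt{(8N/q)\ln \frac{2N-1}{2N-2}}\),

$$\begin{aligned} \max _{t}\{R_{{RWM}(\eta ),t}\}\ge \sqrt{\frac{q}{8N}\ln \frac{2N-1}{2N-2}}\ge \sqrt{\frac{q}{8N(2N-1)}}\;. \end{aligned}$$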

Proof

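By Theorem 7, for every \(\eta >0\), it holds that

$$\begin{aligned} \max _{t}\{R_{{RWM}(\eta ),t}\}\ge \min \left\{ \frac{\rho _{1}(a)}{\eta },\frac{\eta }{2}\rho _{2}(a)q\right\} \;. \end{aligned}$$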

We fix a, observing that any \(a\in (0,\infty )\) may be used, and denote \(\alpha = \frac{1}{(N-1)e^{a}+1}\). Note that \(\alpha \) may thus obtain any value in (0, 1 / N). By Lemma 9, we have that \(\rho _2(a)\ge \alpha /2\). By Lemma 7, \(\rho _1(a)=\ln \frac{N}{N-1+e^{-a}}\), and since

$$\begin{aligned} N-1+e^{-a}=N-1+\frac{\alpha (N-1)}{1-\alpha }=\frac{N-1}{1-\alpha }\;, \end{aligned}$$

we have that \(\rho _1(a)=\ln \frac{N(1-\alpha )}{N-1}\). Thus, \(\max _{t}\{R_{{RWM}(\eta ),t}\}\ge \min \{\frac{1}{\eta }\ln \frac{N(1-\alpha )}{N-1},\frac{1}{4}\eta \alpha q \}\). We may maximize the r.h.s. by picking \(\eta = \sqrt{\frac{4}{\alpha q}\ln \frac{N(1-\alpha )}{N-1}}\), yielding \(\max _{t}\{R_{{RWM}(\eta ),t}\}\ge \sqrt{\frac{1}{4} \alpha q \ln \frac{N(1-\alpha )}{N-1}}\). We may further pick a s.t. \(\alpha = 1/(2N)\) and obtain

$$\begin{aligned} \max _{t}\{R_{{RWM}(\eta ),t}\}\ge \sqrt{\frac{q}{8N}\ln \frac{2N-1}{2N-2}}\ge \sqrt{\frac{q}{8N(2N-1)}}\;, \end{aligned}$$

where the second inequality uses the fact that \(\ln (1+x)\ge \frac{x}{x+1}\) for \(x\in (-1,\infty )\). \(\square \)
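The parameter choices in this proof can be traced numerically. The sketch below is illustrative: the function name and the values of `N` and `q` are assumptions. It picks a so that \(\alpha =1/(2N)\), sets \(\eta \) as in the theorem, and evaluates the two terms of the bound \(\min \{\frac{1}{\eta }\rho _1(a),\frac{1}{4}\eta \alpha q\}\).

```python
import math

def rwm_bound_terms(N, q, a, eta):
    """Return alpha and the two terms of min{rho_1(a)/eta, (1/4)*eta*alpha*q}."""
    alpha = 1.0 / ((N - 1) * math.exp(a) + 1.0)
    rho1 = math.log(N / (N - 1 + math.exp(-a)))
    return alpha, rho1 / eta, 0.25 * eta * alpha * q

N, q = 5, 10.0                                   # illustrative values
a = math.log((2 * N - 1) / (N - 1))              # chosen so that alpha = 1/(2N)
eta = math.sqrt((8 * N / q) * math.log((2 * N - 1) / (2 * N - 2)))
alpha, first, second = rwm_bound_terms(N, q, a, eta)
lower_bound = min(first, second)
```

With this \(\eta \) the two terms coincide, which is why the minimum equals \(\sqrt{\frac{1}{4}\alpha q\ln \frac{N(1-\alpha )}{N-1}}\) at \(\alpha =1/(2N)\).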

6 Beyond quadratic variation

Up until now we have considered only quadratic variation as a measure for the smoothness of the loss sequence. In this section we will extend our results to more general measures, which include other notions of variation discussed in the literature (Hazan and Kale 2010; Chiang et al. 2012).

In what follows, we will consider some general quantity \(V_t\) that measures the variation of the loss sequence at time t, and denote by \(V'\) a known upper bound on \(V_T\) and by \(V>0\) a known lower bound on \(V_T\). We will further assume that for every loss sequence \({{\mathbf {l}}}_1,\ldots ,{{\mathbf {l}}}_T\in {{\mathbb {R}}}^N\) it holds that \(Q_T\ge \beta V_T\) for some \(\beta =\beta (N)>0\), where \(Q_T\) is the generic quadratic variation w.r.t. some seminorm (not to be confused with the definition for a specific seminorm). This restriction will still allow us to apply our results to the most interesting notions of variation, as will be shown later.

We now proceed to extend our original result, given in Theorem 5, to accommodate more general variation. Recall that we assume that \(a>0\) satisfies \(\rho _{1}(a),\rho _{2}(a)>0\), and let \(\eta >0\). The first part of Theorem 5 states that for a concave potential algorithm A, it holds that \(\max _{t}\{R_{A,t}\}\ge \min \{\frac{\rho _{1}(a)}{\eta },\frac{\eta }{2}\rho _{2}(a)Q\}\), where Q is a known lower bound on \(Q_T\). In our present context we may clearly set \(Q=\beta V\). It immediately follows that for \(\eta =\sqrt{\frac{2\rho _{1}(a)}{\rho _{2}(a)\beta V}}\), it holds that \(\max _{t}\{R_{A,t}\} \ge \sqrt{\rho _{1}(a)\rho _{2}(a)\beta /2}\cdot \sqrt{V}\).

The proof of the second part of the theorem in the context of general variation is also quite similar to the original one and will be given for completeness. Here we assume that for any loss sequence satisfying \(V_{T}\le V'\), the algorithm A further guarantees \(R_{A,T}\le c\sqrt{V'}\) for some \(c>0\).

For given a and \(\eta \), there exists \(0<\epsilon <a/\eta \) s.t. \(T=\beta V'/\epsilon ^2\) is an integer. In addition, let \({\mathbf {x}}\in {\mathbb {R}}^{N}\) be such that \(\Vert {\mathbf {x}}\Vert =1\). For the loss sequence \({\mathbf {l}}_{t}=(-1)^{t+1}\epsilon {\mathbf {x}},1\le t\le T\), it holds that \(\beta V_T\le Q_{T}=\epsilon ^{2}T=\beta V'\) and that \(\Vert {\mathbf {L}}_{t}\Vert =\Vert (1-(-1)^{t})\epsilon {\mathbf {x}}/2\Vert \le \epsilon <a/\eta \) for every t. Therefore,

$$\begin{aligned} c\sqrt{V'}\ge R_{A,T}\ge \frac{\eta }{2}\rho _{2}(a)\sum _{t=1}^{T}\Vert {\mathbf {l}}_{t}\Vert ^{2}=\frac{\eta }{2}\rho _{2}(a)\beta V'\;, \end{aligned}$$

where the second inequality is by Lemma 1. This implies that \(\eta \le \frac{2c}{\rho _{2}(a)\beta \sqrt{V'}}\).

Next, let \(T>\frac{a^{2}}{\eta ^{2}\beta V'}\), define \(\epsilon =\sqrt{\beta V'/T}\), and consider the loss sequence \({\mathbf {l}}_{t}=\epsilon {\mathbf {x}}\). Then \(\beta V_T\le Q_{T}=\epsilon ^{2}T=\beta V'\), and \(\Vert {\mathbf {L}}_{T}\Vert =\epsilon T=\sqrt{\beta V'T}>a/\eta \). Therefore, again using Lemma 1, we have \(c\sqrt{V'} \ge R_{A,T}\ge \rho _{1}(a)/\eta \), and thus \(\eta \ge \frac{\rho _{1}(a)}{c\sqrt{V'}}\). Together, we obtain that \(\frac{\rho _{1}(a)}{c\sqrt{V'}}\le \eta \le \frac{2c}{\rho _{2}(a)\beta \sqrt{V'}}\), implying that \(c\ge \sqrt{\rho _{1}(a)\rho _{2}(a)\beta /2}\). (Note that it is therefore impossible to guarantee a regret upper bound of \(c\sqrt{V'}\) for \(c<\sqrt{\rho _{1}(a)\rho _{2}(a)\beta /2}\).) For any sequence with \(V_T\le V'\), we have that \(\frac{\rho _{1}(a)}{\eta }\ge \frac{\rho _{1}(a)\rho _{2}(a)\beta \sqrt{V'}}{2c}\) and

$$\begin{aligned} \frac{\eta }{2}\rho _{2}(a)Q_{T}\ge \frac{\eta }{2}\rho _{2}(a)\beta V_{T}\ge \frac{\rho _{1}(a)\rho _{2}(a)\beta }{2c}\cdot \frac{V_{T}}{\sqrt{V'}}\;, \end{aligned}$$

so by Lemma 1, \(\max _{t}\{R_{A,t}\}\ge \frac{\rho _{1}(a)\rho _{2}(a)\beta }{2c}\cdot \frac{V_T}{\sqrt{V'}}\). We may therefore generalize Theorem 5 as follows:

Theorem 10

Let \(a>0\) satisfy \(\rho _{1}(a),\rho _{2}(a)>0\).

(i) For every \(\eta >0\), \(\max _{t}\{R_{A,t}\}\ge \min \{\frac{\rho _{1}(a)}{\eta },\frac{\eta }{2}\rho _{2}(a)\beta V\}\), and for \(\eta =\sqrt{\frac{2\rho _{1}(a)}{\rho _{2}(a)\beta V}}\),

$$\begin{aligned} \max _{t}\{R_{A,t}\}&\ge \sqrt{\rho _{1}(a)\rho _{2}(a)\beta /2}\cdot \sqrt{V}\;. \end{aligned}$$

(ii) If for any sequence with variation \(V_{T}\le V'\) we have \(R_{A,T}\le c\sqrt{V'}\), then for any such sequence,

$$\begin{aligned} \max _{t}\{R_{A,t}\}\ge \frac{\rho _{1}(a)\rho _{2}(a)\beta }{2c}\cdot \frac{V_T}{\sqrt{V'}}\;. \end{aligned}$$

In particular, if \(V_T=\varTheta (V')\), then \(\max _{t}\{R_{A,t}\}=\varOmega (\sqrt{V_T})\).

6.1 Application to specific notions of variation

The work of Hazan and Kale (2010) defines several types of variation for a loss sequence. In the context of online linear optimization they define \({\text {Var}}_T = \sum _{t=1}^T \Vert {{\mathbf {l}}}_t-\varvec{\mu }_T\Vert _2^2\), where \(\varvec{\mu }_T = \frac{1}{T}\sum _{t=1}^T{{\mathbf {l}}}_t\). In addition, for the best expert setting they define \({\text {Var}}_T^\infty = \max _{1\le i \le N}\{\sum _{t=1}^T |l_{i,t}-\mu _{i,T}|^2\}\), where \(\varvec{\mu }_T\) is as before; they further define \({\text {Var}}_T^{\max } = \max _{t \le T}\{{\text {Var}}_t(m(t))\}\), where m(t) is the best expert up to time t and \({\text {Var}}_t(i)\) is the variation of the losses of expert i up to time t. It is clear that \({\text {Var}}_T^{\max }\le {\text {Var}}_T^\infty \le {\text {Var}}_T\), and furthermore, \({\text {Var}}_T \le Q_T\), where the seminorm is taken to be the Euclidean norm. Thus, in the context of Theorem 10 we may choose \(\beta =1\) for all these notions of variation.

The work of Chiang et al. (2012) considers the \(L_p\)-deviation of a loss sequence, defined in the context of online linear optimization by \(D_p = \sum _{t=1}^T \Vert {{\mathbf {l}}}_t - {{\mathbf {l}}}_{t-1}\Vert _p^2\), where \({{\mathbf {l}}}_0={\mathbf {0}}\). Since for every \({\mathbf {x}}, {\mathbf {y}}\in {{\mathbb {R}}}^N\) we have that \(\Vert {\mathbf {x}}-{\mathbf {y}}\Vert _p^2 \le (\Vert {\mathbf {x}}\Vert _p+\Vert {\mathbf {y}}\Vert _p)^2 \le 2(\Vert {\mathbf {x}}\Vert _p^2+\Vert {\mathbf {y}}\Vert _p^2)\), it follows that \(D_p \le 4\sum _{t=1}^T \Vert {{\mathbf {l}}}_t\Vert _p^2\). Thus, we have that for the \(L_p\)-deviation we may take \(\beta =4\) (where \(Q_T\) is defined w.r.t. the \(L_p\) norm).
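For completeness, the summation step behind the constant 4 may be written out as follows; the reindexing of the second sum uses \({{\mathbf {l}}}_0={\mathbf {0}}\).

$$\begin{aligned} D_p = \sum _{t=1}^T \Vert {{\mathbf {l}}}_t - {{\mathbf {l}}}_{t-1}\Vert _p^2&\le 2\sum _{t=1}^T \left( \Vert {{\mathbf {l}}}_t\Vert _p^2+\Vert {{\mathbf {l}}}_{t-1}\Vert _p^2\right) \\&= 2\sum _{t=1}^T \Vert {{\mathbf {l}}}_t\Vert _p^2 + 2\sum _{t=1}^{T-1} \Vert {{\mathbf {l}}}_t\Vert _p^2 \\&\le 4\sum _{t=1}^T \Vert {{\mathbf {l}}}_t\Vert _p^2 = 4Q_T\;. \end{aligned}$$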

It is important to note, however, that in order to satisfy the conditions of Theorem 10, one requires a relevant concave potential algorithm. In particular, for the second part of the theorem one needs an algorithm with a suitable regret minimization guarantee. For \({\text {Var}}_{T}\) such a choice exists in the form of OGD. As shown by Hazan and Kale (2010), if \({\text {Var}}_{T}\) is upper bounded by \(V'\ge 12\) and (w.l.o.g.) \({\mathcal {K}}\subseteq B({\mathbf {0}},1)\), then for bounded single-period losses (w.l.o.g. \(\Vert {{\mathbf {l}}}_t\Vert _2\le 1\) for every t), the regret of OGD is upper bounded by \(15\sqrt{V'}\). In addition, if \({\mathcal {K}}\supseteq B({\mathbf {0}},a)\) for some \(a>0\), then \(\rho _{1}(a), \rho _{2}(a) > 0\) (as already shown in Sect. 5.1) and Theorem 10 may be meaningfully applied (see Note 4).

A second algorithm, Variation MW, given in Hazan and Kale (2010) specifically for the best expert setting, is not a concave potential algorithm. This is evident from the fact that its weights have an asymmetric dependence on single-period losses from different times. (This cannot be the case when the weight is a function of the cumulative loss.) The same is true for the algorithm of Chiang et al. (2012) when applied to the best expert setting. It is not immediately clear if there are interesting cases for which their algorithm is equivalent to a concave potential algorithm.

We summarize the results of this subsection in the next two corollaries.

Corollary 2

Theorem 10 may be applied to the following types of variation:

(i) To \({\text {Var}}_T^{\max },{\text {Var}}_T^\infty \), and \({\text {Var}}_T\), with \(Q_T = \sum _{t=1}^T\Vert {{\mathbf {l}}}_t\Vert _2^2\) and \(\beta =1\).

(ii) To the \(L_p\)-deviation, with \(Q_T = \sum _{t=1}^T\Vert {{\mathbf {l}}}_t\Vert _p^2\) and \(\beta =4\).

For OGD in particular we may apply Theorem 10 in combination with the bound of Hazan and Kale (2010) and the results of Sect. 5.1 (see Note 5). We obtain the following:

Corollary 3

Let \(B({\mathbf {0}},a)\subseteq {\mathcal {K}} \subseteq B({\mathbf {0}},1)\) for some \(a>0\). Then taking OGD as algorithm A, it holds that

(i) If \({\text {Var}}_T\ge V\), then for every \(\eta >0\), \(\max _{t}\{R_{A,t}\}\ge \min \{\frac{a^2}{2\eta },\frac{1}{2}\eta V\}\), and for \(\eta =\frac{a}{\sqrt{V}}\),

$$\begin{aligned} \max _{t}\{R_{A,t}\} \ge \frac{a}{2}\cdot \sqrt{V}\;. \end{aligned}$$

(ii) If \(\sqrt{V'}\ge 12\), then for any sequence with \({\text {Var}}_T\le V'\), it holds that

$$\begin{aligned} \max _{t}\{R_{A,t}\}\ge \frac{a^2}{60}\cdot \frac{{\text {Var}}_T}{\sqrt{V'}}\;. \end{aligned}$$

In particular, if \({\text {Var}}_T=\varTheta (V')\), then \(\max _{t}\{R_{A,t}\}=\varOmega (\sqrt{{\text {Var}}_T})\).
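Part (i) of Corollary 3 can be illustrated with a small simulation. The sketch below rests on assumptions that are ours rather than taken from Sect. 5.1: OGD is implemented in its lazy-projection form, \({{\mathbf {x}}}_{t+1}\) being the Euclidean projection of \(-\eta {{\mathbf {L}}}_t\) onto \({\mathcal {K}}\); the decision set is \({\mathcal {K}}=B({\mathbf {0}},a)\) with \(a=1\); and the loss sequence alternates between \(+s\) and \(-s\) along one coordinate. All names and numeric values are hypothetical.

```python
import math


def project_to_ball(v, radius):
    """Euclidean projection of v onto the ball of given radius around 0."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm <= radius:
        return list(v)
    return [x * radius / norm for x in v]


def anytime_regret_lazy_ogd(losses, eta, radius):
    """Return max_t R_t for x_{t+1} = Proj_K(-eta * L_t), K = B(0, radius)."""
    n = len(losses[0])
    cumulative = [0.0] * n  # cumulative loss vector L_t
    algo_loss = 0.0
    max_regret = 0.0
    for l in losses:
        x = project_to_ball([-eta * c for c in cumulative], radius)
        algo_loss += sum(xi * li for xi, li in zip(x, l))
        cumulative = [c + li for c, li in zip(cumulative, l)]
        # The best fixed point in the ball incurs loss -radius * ||L_t||_2.
        best_loss = -radius * math.sqrt(sum(c * c for c in cumulative))
        max_regret = max(max_regret, algo_loss - best_loss)
    return max_regret


def variance(losses):
    """Var_T: sum of squared Euclidean deviations from the mean loss."""
    T, n = len(losses), len(losses[0])
    mean = [sum(l[i] for l in losses) / T for i in range(n)]
    return sum((l[i] - mean[i]) ** 2 for l in losses for i in range(n))


# Hypothetical alternating sequence in two dimensions.
s, T, a = 0.5, 200, 1.0
losses = [[s if t % 2 == 0 else -s, 0.0] for t in range(T)]

V = variance(losses)
eta = a / math.sqrt(V)
observed = anytime_regret_lazy_ogd(losses, eta, radius=a)
bound = min(a * a / (2 * eta), 0.5 * eta * V)
```

On this particular sequence the regret at even rounds grows as \(\eta Q_t/2\), so the observed anytime regret is close to the bound \(\frac{a}{2}\sqrt{V}\); other sequences may exceed it by a wider margin.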

7 Extensions and applications

We have shown lower bounds on the anytime regret of concave potential algorithms. We have further proved a trade-off between upper bounds on the regret of such algorithms and lower bounds on their anytime regret. These results were shown in the setting of online linear optimization with full information, which includes the best expert setting. In this work we did not consider the more general setting of online convex optimization or the bandit setting. Whether or not results in a similar spirit may be obtained for those settings is an interesting open question.

7.1 The scope of our results

Our results apply to algorithms whose weight vector is defined as the gradient of a concave function of the cumulative loss. This mechanism is further modulated by using a fixed learning rate. As has already been argued, this algorithmic family contains the important and widely used class of RFTL algorithms. Nevertheless, it is clear that some important algorithms lie outside it. As explained in the previous section, this holds in particular for the Variation MW algorithm of Hazan and Kale (2010) and at least for some instances of the algorithm of Chiang et al. (2012). It remains an interesting open question whether the bound obtained by the Variation MW algorithm may be achieved by means of concave potential algorithms. The same is true for the bounds given in Chiang et al. (2012). We note, however, that those authors show that if the decision set \({\mathcal {K}}\) contains a ball centered at the origin, then the regret bound of the OGD algorithm in particular is inferior to the regret bound of their algorithm (Chiang et al. 2012, Lemma 9). Specifically, for any learning rate the regret bound of OGD is \(\varOmega (\min \{D_2,\sqrt{T}\})\), compared with the \(O(\sqrt{D_2})\) regret bound of their algorithm.

7.1.1 The use of fixed learning rates

Learning rates are employed as a means of parameterizing regret minimization algorithms in terms of some quantity of interest, such as the variation of the loss sequence. When an upper bound on that quantity is known in advance, the learning rate is commonly fixed as a function of that upper bound. Thus, in such a scenario, our assumption of a fixed learning rate seems like a minor restriction.

When no upper bound is given, variable learning rates are normally employed, with the so-called “doubling trick” being a special case. It is natural to consider adjusting the analysis leading to Lemma 1 to a setting where \({{\mathbf {x}}}_t = \nabla \varPhi _{\eta _t}({{\mathbf {L}}}_{t-1})\), for some non-increasing learning rates \(\eta _1,\ldots , \eta _T\). This may be done using the Taylor expansion of \(\varPhi \) as a function of both the learning rate and the cumulative loss. As a result, the regret, which formerly comprised the non-negative first and second order regret terms (see Theorem 2), now contains an additional non-positive term that depends on the changes in the learning rate. (This term disappears if the learning rate is fixed.) It is not clear how to bound the negative contribution of this new term in a way that would yield an interesting modification of the previous analysis.

7.2 Direct and indirect applications

We conclude with two examples that illustrate the applicability of our results.

The first, which was already mentioned in the introduction, concerns lower bounds on the price of financial instruments. Specifically, our results are used to derive a lower bound on the price of “at the money” European call options in an arbitrage-free market. The lower bound obtained for the price of such options is \(\exp (0.1\sqrt{Q})-1\approx 0.1\sqrt{Q}\) for \(Q<0.5\), where Q is the assumed quadratic variation of the stock’s log price ratios. This bound has the same asymptotic behavior as the Black-Scholes price and an upper bound on the price given by DeMarzo et al. (2006).

As the core element of this derivation, one requires an algorithm for the best expert setting whose regret is lower bounded in terms of the relative quadratic variation. By our results, the RWM algorithm may guarantee such a lower bound on the regret at some time t (see Sect. 5.2). A further modification to RWM moves all the weight to the best expert at time t, serving to “lock in” the regret until the final round. As a result, the lower bound on the anytime regret of RWM also holds for the final regret of the modified algorithm. For more details, see Gofer and Mansour (2012) and Gofer (2014b).
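The "lock in" modification can be sketched in a few lines. This is an illustrative sketch and not the construction of Gofer and Mansour (2012): the function name, the regret threshold that triggers the switch, and the alternating two-expert loss sequence are all hypothetical. Once all weight sits on the expert that was best at the switching time, the algorithm's loss tracks that expert's loss, so the final regret cannot fall below the regret at the switch.

```python
import math


def rwm_with_lock_in(losses, eta, threshold):
    """Run RWM; once regret reaches threshold, put all weight on the leader.

    Returns (final_regret, locked_regret); locked_regret is None if the
    threshold was never reached.
    """
    n = len(losses[0])
    cumulative = [0.0] * n
    algo_loss = 0.0
    locked_expert = None
    locked_regret = None
    for l in losses:
        if locked_expert is None:
            # Exponential weights; subtracting the minimum avoids underflow.
            m = min(cumulative)
            w = [math.exp(-eta * (c - m)) for c in cumulative]
            total = sum(w)
            p = [wi / total for wi in w]
        else:
            p = [1.0 if i == locked_expert else 0.0 for i in range(n)]
        algo_loss += sum(pi * li for pi, li in zip(p, l))
        cumulative = [c + li for c, li in zip(cumulative, l)]
        regret = algo_loss - min(cumulative)
        if locked_expert is None and regret >= threshold:
            locked_expert = min(range(n), key=lambda i: cumulative[i])
            locked_regret = regret
    return algo_loss - min(cumulative), locked_regret


# Hypothetical example: two experts with alternating unit losses.
losses = [[1.0, 0.0] if t % 2 == 0 else [0.0, 1.0] for t in range(40)]
final_regret, locked_regret = rwm_with_lock_in(losses, eta=0.5, threshold=1.0)
```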

A second application is in the study of online linear optimization with switching costs, namely, where additional costs (and regret) are incurred for switching between weight vectors. It was shown by Gofer (2014a) that for switching costs that are lower bounded by a norm of the difference between weights, regret cannot be upper bounded given only some upper bound on the quadratic variation of the loss sequence, \(\sum _t\Vert {{\mathbf {l}}}_t\Vert _2^2\). The same claim also holds for relative quadratic variation and for the notions of variation considered by Hazan and Kale (2010) and Chiang et al. (2012). In particular, a non-oblivious adversary that knows the expectation of a learner’s next action may force unbounded expected regret. The same is true for an oblivious adversary vs. a deterministic learner. This result holds for general classes of the online linear optimization setting, including the best expert setting and any decision set that contains a ball around the origin.

For proving the above result, an instance of a concave potential algorithm is employed by the adversary to adaptively construct a loss sequence that is difficult for a given learning algorithm A. Our results for concave potential algorithms (and in particular RWM and OGD) are used in showing that A incurs either arbitrarily high switching costs and non-negative regret, or arbitrarily high regret along with the non-negative switching costs. This application demonstrates that regret lower bounds established for concave potential algorithms may facilitate the derivation of regret lower bounds that hold for every algorithm.

Notes

1. A European call option is a financial instrument that pays its holder at time T the sum of \(\max \{S_T-K,0\}\), where \(S_t\) is the price of a given stock at time t, and K is a set price. The option is termed “at the money” if \(K=S_0\). A market is arbitrage-free if no algorithm trading in financial assets can guarantee profit without any risk of losing money.

2. See the closely related Theorem 24.8 in Rockafellar (1970) regarding cyclically monotone mappings. The proof we give is different in that it involves the regret and relates the loss to the path integral.

3. Note that this means we cannot guarantee a regret upper bound of \(c\sqrt{Q'}\) for \(c<\sqrt{\rho _{1}(a)\rho _{2}(a)/2}\).

4. We note that even though OGD may be applied to the best expert setting as a special case, it may be shown that \(\rho _1(a)=0\) for \({\mathcal {K}}=\varDelta _{N}\) or any translation of \(\varDelta _{N}\) by a constant vector.

5. Note a very slight change from the original theorem in the second part. This change is needed since the regret upper bound of OGD holds only if \(\sqrt{V'}\ge 12\). We also point out that the second part of Theorem 10 still holds if the regret guarantee of the algorithm requires bounded single-period losses.

References

Boyd, S., & Vandenberghe, L. (2004). Convex optimization. Cambridge: Cambridge University Press.

Cesa-Bianchi, N., & Lugosi, G. (2006). Prediction, learning, and games. Cambridge: Cambridge University Press.

Cesa-Bianchi, N., Mansour, Y., & Stoltz, G. (2007). Improved second-order bounds for prediction with expert advice. Machine Learning, 66(2–3), 321–352.

Chiang, C. K., Yang, T., Lee, C. J., Mahdavi, M., Lu, C. J., Jin, R., et al. (2012). Online optimization with gradual variations. Journal of Machine Learning Research: Proceedings Track, 23, 6.1–6.20.

DeMarzo, P., Kremer, I., & Mansour, Y. (2006). Online trading algorithms and robust option pricing. In Proceedings of the 38th annual ACM symposium on theory of computing (pp. 477–486).

Even-Dar, E., Kearns, M., Mansour, Y., & Wortman, J. (2008). Regret to the best vs. regret to the average. Machine Learning, 72(1–2), 21–37.

Gofer, E. (2014a). Higher-order regret bounds with switching costs. Journal of Machine Learning Research: Proceedings Track, 35, 210–243.

Gofer, E. (2014b). Machine learning algorithms with applications in finance. PhD thesis, Tel Aviv University.

Gofer, E., & Mansour, Y. (2012). Lower bounds on individual sequence regret. In N. H. Bshouty, G. Stoltz, N. Vayatis, & T. Zeugmann (Eds.), Algorithmic learning theory (pp. 275–289). Heidelberg: Springer.

Hazan, E. (2006). Efficient algorithms for online convex optimization and their applications. PhD thesis, Princeton University.

Hazan, E. (2011). The convex optimization approach to regret minimization. In S. Sra, S. Nowozin, & S. J. Wright (Eds.), Optimization for machine learning. Cambridge: MIT Press.

Hazan, E., & Kale, S. (2010). Extracting certainty from uncertainty: Regret bounded by variation in costs. Machine Learning, 80(2–3), 165–188.

Kalai, A., & Vempala, S. (2005). Efficient algorithms for online decision problems. Journal of Computer and System Sciences, 71(3), 291–307.

Nesterov, Y. (2004). Introductory lectures on convex optimization: A basic course. Dordrecht: Kluwer Academic Publishers.

Rockafellar, R. T. (1970). Convex analysis. Princeton: Princeton University Press.

Shalev-Shwartz, S. (2007). Online learning: Theory, algorithms, and applications. PhD thesis, The Hebrew University.

von Szökefalvi Nagy, J. (1918). Über algebraische Gleichungen mit lauter reellen Wurzeln. Jahresbericht der Deutschen Mathematiker-Vereinigung, 27, 37–43.

Zinkevich, M. (2003). Online convex programming and generalized infinitesimal gradient ascent. In ICML (pp. 928–936).

Acknowledgments

This research was supported in part by the Google Inter-university center for Electronic Markets and Auctions, by a grant from the Israel Science Foundation, by a grant from United States-Israel Binational Science Foundation (BSF), by a grant from the Israeli Ministry of Science (MoS), and by The Israeli Centers of Research Excellence (I-CORE) program (Center No. 4/11). This work is partly based on Ph.D. thesis research carried out by the first author at Tel Aviv University.

Additional information

Editor: Gabor Lugosi.

Appendices

Appendix 1: Additional claims

Lemma 10

If \({\mathcal {K}}\subset {\mathbb {R}}^{N}\) is compact, then \(h({\mathbf {L}})=\min _{{\mathbf {u}}\in {\mathcal {K}}}\{{\mathbf {u}}\cdot {\mathbf {L}}\}\) is continuous on \({\mathbb {R}}^{N}\).

Proof