Abstract

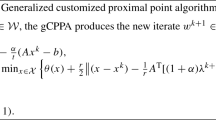

The augmented Lagrangian method is a classic and efficient method for solving constrained optimization problems. However, its efficiency still depends, to a large extent, on how efficiently the subproblem can be solved. When an accurate solution to the subproblem is computationally expensive, it is more practical to relax the subproblem. Specifically, when the objective function has a certain favorable structure, the relaxed subproblem admits a closed-form solution that can be computed efficiently. However, the resulting algorithm usually suffers from a slower convergence rate than the augmented Lagrangian method. In this paper, based on the relaxed subproblem, we propose a new algorithm with a faster convergence rate. Numerical results using the proposed approach are reported for three specific applications. The results are compared with those of state-of-the-art algorithms, and the proposed method is shown to be more efficient than the existing approaches.
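To make the idea of a relaxed subproblem with a closed-form solution concrete, the following is a minimal sketch of a standard linearized augmented Lagrangian iteration for the basis pursuit problem, minimizing \(\Vert x\Vert _1\) subject to \(Ax=b\). It is not the algorithm proposed in this paper; it only illustrates the baseline being relaxed. The function names, the sign convention for the multiplier, and the parameters `beta` and `tau` are assumptions made for this illustration. Linearizing the quadratic penalty term turns the x-subproblem into a soft-thresholding step.

```python
def soft_threshold(v, t):
    """Closed-form minimizer of t*||x||_1 + 0.5*||x - v||^2 (componentwise shrinkage)."""
    return [max(abs(vi) - t, 0.0) * (1.0 if vi > 0 else -1.0) for vi in v]


def linearized_alm_l1(A, b, beta=1.0, tau=None, iters=500):
    """Illustrative linearized augmented Lagrangian iteration for
    min ||x||_1  s.t.  Ax = b, with A given as a list of rows.

    Assumed convention: L(x, lam) = ||x||_1 - lam^T (Ax - b) + (beta/2) ||Ax - b||^2.
    """
    m, n = len(A), len(A[0])
    if tau is None:
        # The squared Frobenius norm bounds the largest eigenvalue of A^T A,
        # so this choice satisfies the usual condition tau > beta * ||A^T A||.
        tau = 1.1 * beta * sum(a * a for row in A for a in row)
    x = [0.0] * n
    lam = [0.0] * m
    for _ in range(iters):
        # Residual of the equality constraint at the current iterate.
        r = [sum(A[i][j] * x[j] for j in range(n)) - b[i] for i in range(m)]
        # Gradient of the smooth part of L with respect to x: A^T (beta*r - lam).
        w = [beta * r[i] - lam[i] for i in range(m)]
        g = [sum(A[i][j] * w[i] for i in range(m)) for j in range(n)]
        # Relaxed subproblem: a gradient step followed by shrinkage.
        x = soft_threshold([x[j] - g[j] / tau for j in range(n)], 1.0 / tau)
        # Multiplier update using the new residual.
        r = [sum(A[i][j] * x[j] for j in range(n)) - b[i] for i in range(m)]
        lam = [lam[i] - beta * r[i] for i in range(m)]
    return x, lam


if __name__ == "__main__":
    # Toy instance: minimize |x1| + |x2| subject to x1 + 2*x2 = 2.
    x, lam = linearized_alm_l1([[1.0, 2.0]], [2.0])
    print(x, lam)
```

On the toy instance, the unique minimizer is \(x=(0,1)\), and the iterates approach it. Each iteration costs only two matrix-vector products and one shrinkage, which is the trade-off the abstract describes: cheap steps in exchange for a slower convergence rate than the exact augmented Lagrangian method.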

Acknowledgments

The author’s research was supported by the National Natural Science Foundation of China under Grant 11401295, Natural Science Foundation of Jiangsu Province under Grant BK20141007, Major Program of the National Social Science Foundation of China under Grant 12&ZD114, National Social Science Foundation of China under Grant 15BGL58, Social Science Foundation of Jiangsu Province under Grant 14EUA001, and Qinglan Project of Jiangsu Province. The author would like to thank Min Tao from Nanjing University for her valuable comments. The author would also like to thank the editors who helped improve the quality of the present paper.

Additional information

Communicated by Roland Glowinski.

About this article

Cite this article

Shen, Y., Wang, H. New Augmented Lagrangian-Based Proximal Point Algorithm for Convex Optimization with Equality Constraints. J Optim Theory Appl 171, 251–261 (2016). https://doi.org/10.1007/s10957-016-0991-1

DOI: https://doi.org/10.1007/s10957-016-0991-1

Keywords

- Convex optimization

- Proximal point algorithm

- Augmented Lagrangian

- Sparse optimization

- Compressed sensing