Abstract

Over the last 15 years, tumor anti-angiogenesis has become an active area of research in medicine and in mathematical biology, and several models of the dynamics and optimal control of angiogenesis have been described. We use the Hamilton–Jacobi approach to analyze numerically computed approximate optimal solutions to some of these models, which were earlier analyzed from the point of view of necessary optimality conditions in a series of papers by Ledzewicz and Schättler.

1 Introduction

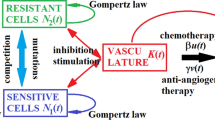

The search for therapy approaches that would avoid drug resistance is of paramount importance in medicine. Two approaches that are currently being pursued in their experimental stages are immunotherapy and anti-angiogenic treatments. Immunotherapy tries to coax the body’s immune system to take action against the cancerous growth. Tumor anti-angiogenesis is a cancer therapy approach that targets the vasculature of a growing tumor. Contrary to traditional chemotherapy, anti-angiogenic treatment does not target the quickly duplicating and genetically unstable cancer cells, but instead the genetically stable normal cells. It was observed in experimental cancer that there is no resistance to the angiogenic inhibitors [1]. For this reason, tumor anti-angiogenesis has been called a therapy “resistant to resistance” that provides new hope for the treatment of tumor-type cancers [2]. In the last 15 years, tumor anti-angiogenesis has become an active area of research not only in medicine [3, 4], but also in mathematical biology [5–7], and several models of the dynamics of angiogenesis have been described, e.g., by Hahnfeldt et al. [5] and d’Onofrio [6, 7]. In a sequence of papers [8–11], Ledzewicz and Schättler completely described and solved the corresponding optimal control problems from the point of view of geometric optimal control theory. Most of the mentioned papers present numerical calculations of approximate solutions. However, none of them proves that the numerically calculated solutions are actually close to the optimal one. The aim of this paper is to analyze the optimal control problem from the Hamilton–Jacobi–Bellman point of view, i.e., using a dynamic programming approach, and to prove that, for numerically calculated solutions, the functional considered takes a value that approximates the optimal one with a given accuracy.

2 Formulation of the Problem

In the paper, the following free terminal time T problem

over all Lebesgue measurable functions u:[0,T]→[0,a]=U that satisfy a constraint on the total amount of anti-angiogenic inhibitors to be administered

subject to

is investigated. The term \(\int_{0}^{T}u(t)\,dt\) is viewed as a measure of the cost of the treatment or of its side effects. The upper limit a in the definition of the control set U=[0,a] is the maximum dose at which inhibitors can be given. Note that the time T does not correspond to a therapy period; instead, the functional (1) attempts to balance the effectiveness of the treatment against cost and side effects through the positive weight κ on this integral. The state variables p and q are, respectively, the primary tumor volume and the carrying capacity of the vasculature. Tumor growth is modelled by a Gompertzian growth function with carrying capacity q, by (3), where ξ denotes a tumor growth parameter. The dynamics of the endothelial support are described by (4), where bp models the stimulation of the endothelial cells by the tumor and the term \(dp^{\frac{2}{3}}q\) models the endogenous inhibition of the tumor. The coefficients b and d are growth constants. The terms μq and Guq describe, respectively, the loss of carrying capacity through natural causes (death of endothelial cells, etc.) and the loss due to extra outside inhibition. The variable u represents the control in the system and corresponds to the angiogenic dose rate, while G is a constant that represents the anti-angiogenic killing parameter. More details on the description and the discussion of the parameters in (1)–(4) can be found in [9, 10]. There, Ledzewicz and Schättler analyzed the above problem using the first-order necessary conditions for optimality of a control u given by the Pontryagin maximum principle, second-order conditions (the so-called strengthened Legendre–Clebsch condition), and geometric methods of optimal control theory.
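The displayed formulas (1)–(4) are not reproduced in this text. The block below is a reconstruction that is consistent with the verbal description above (terminal tumor volume plus a κ-weighted dose integral, a bound A on the total dose, Gompertzian growth, and the four terms of the vasculature dynamics). It is an assumption and should be checked against [9, 10]; in particular, the initial conditions \(p_{0}\), \(q_{0}\) and the exact form of the objective are inferred, not quoted.

```latex
\begin{align}
  &\text{minimize}\quad J(p,q,u) = p(T) + \kappa \int_{0}^{T} u(t)\,dt, \tag{1}\\
  &\int_{0}^{T} u(t)\,dt \le A, \tag{2}\\
  &\dot{p} = -\xi\, p \ln\!\left(\frac{p}{q}\right), \qquad p(0)=p_{0}, \tag{3}\\
  &\dot{q} = b\,p - \left(\mu + d\,p^{\frac{2}{3}}\right) q - G\,u\,q, \qquad q(0)=q_{0}. \tag{4}
\end{align}
```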

3 Hamilton–Jacobi Approach

For problem (1)–(4), we can construct the following Hamiltonian:

where \(y=(y_{1},y_{2})\). The true Hamiltonian is

Although \(\hat{H}\) is linear in u, the control may not be determined by the minimum condition (a detailed discussion is given in [8]). Following [8], to exclude discussions about the structure of optimal controls in regions where the model does not represent the underlying biological problem, we confine ourselves to the biologically realistic domain

where \(\bar{p}= ( \frac{b-\mu }{d} )^{3/2}\), \(\bar{q}=\bar{p}\). Thus, the Hamiltonian H is defined in \(D\times R^{2}\). We shall call trajectories admissible iff they satisfy (3), (4) (with control u∈[0,a]) and lie in D. Along any admissible trajectory defined on [0,T] and satisfying the necessary conditions of the Pontryagin maximum principle [12], we have

However, since problem (1)–(4) has a free final time, we cannot apply the dynamic programming approach directly. The main reason is that, in the dynamic programming formulation, the value function must satisfy the Hamilton–Jacobi equation (a first-order PDE) together with an end condition at the final time. Thus, the final time should be fixed, or at least it should belong to a fixed end manifold. To this end, we follow the trick of Maurer [12] and transform problem (1)–(4) into a problem with fixed final time \(\tilde{T}=1\). The transformation proceeds by augmenting the state dimension and by introducing the free final time as an additional state variable. Define the new time variable τ∈[0,1] by

We shall use the same notation p(τ):=p(τ⋅T), q(τ):=q(τ⋅T) and u(τ):=u(τ⋅T) for the state and the control variable with respect to the new time variable τ. The augmented state

satisfies the differential equations

As a consequence, we shall consider the following augmented control problem (P̃) on the fixed time interval [0,1]:

s.t.

We shall call the trajectories \((\tilde{p},\tilde{q})\) (with control u∈[0,a]) admissible if they satisfy (5), (6), and (p,q) lie in D. The transformed problem (\(\tilde{\mathrm{P}}\)) on the fixed interval [0,1] falls into the category of control problems treated in [13]. Thus, we can apply the dynamic programming method developed therein.
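The effect of the time transformation can be checked numerically. The sketch below assumes the scaling τ = t/T, so that the augmented system on [0,1] has the original right-hand sides multiplied by T and a third state T with zero derivative. The dynamics follow the verbal description in Sect. 2; the parameter values, the horizon T = 5, and all function names are illustrative assumptions that do not appear in this text. The initial values and the dose u = 10 are those of Example 4.1.

```python
import math

# Illustrative parameter values (assumptions; they are not given in this text).
XI, B, D_COEF, MU, G = 0.084, 5.85, 0.00873, 0.02, 0.15

def rhs(p, q, u):
    """Tumor volume p and carrying capacity q dynamics as described in Sect. 2."""
    dp = -XI * p * math.log(p / q)
    dq = B * p - (MU + D_COEF * p ** (2.0 / 3.0)) * q - G * u * q
    return dp, dq

def rk4_step(f, x, h):
    """One classical Runge-Kutta step for the autonomous system x' = f(x)."""
    k1 = f(x)
    k2 = f([xi + 0.5 * h * ki for xi, ki in zip(x, k1)])
    k3 = f([xi + 0.5 * h * ki for xi, ki in zip(x, k2)])
    k4 = f([xi + h * ki for xi, ki in zip(x, k3)])
    return [xi + h * (a + 2.0 * b + 2.0 * c + d) / 6.0
            for xi, a, b, c, d in zip(x, k1, k2, k3, k4)]

def integrate(f, x0, t_end, n_steps):
    """Integrate x' = f(x) from 0 to t_end with n_steps equal RK4 steps."""
    x = list(x0)
    h = t_end / n_steps
    for _ in range(n_steps):
        x = rk4_step(f, x, h)
    return x

def original_field(u):
    """Vector field in the original time t, for a constant dose rate u."""
    return lambda x: list(rhs(x[0], x[1], u))

def augmented_field(u):
    """Vector field in the scaled time tau; the final time T is a third state."""
    def f(x):
        p, q, T = x
        dp, dq = rhs(p, q, u)
        return [T * dp, T * dq, 0.0]
    return f

def compare(p0=15502.0, q0=15500.0, u=10.0, T=5.0, n=2000):
    """End states of the original problem on [0, T] and the augmented one on [0, 1]."""
    direct = integrate(original_field(u), [p0, q0], T, n)
    scaled = integrate(augmented_field(u), [p0, q0, T], 1.0, n)
    return direct, scaled
```

Calling `compare()` returns the two end states; their p and q components should coincide up to rounding, and the third component of the augmented state should remain equal to T.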

The Hamiltonian for the problem (\(\tilde{\mathrm{P}}\)) becomes

where \(\tilde{y}=(y_{1},y_{2},y_{3})\).

Define \(\tilde{D}:=[0,1]\times D\) and on it define the value function for the problem (\(\tilde{\mathrm{P}}\))

where the infimum is taken over all admissible trajectories starting at \((\tau ,p,q)\in \tilde{D}\). If it happens that S is of class \(C^{1}\), then it satisfies the Hamilton–Jacobi–Bellman equation [13]:

with a terminal value

Since problems of the type (8)–(9) are difficult to solve explicitly, the so-called ε-value function was introduced in [14]. For any ε>0, we call a function \((\tau ,p,q)\rightarrow S_{\varepsilon }(\tau ,p,q)\), defined in \(\tilde{D}\), an ε-value function iff

It is also well known that there exists a Lipschitz continuous ε-value function and that it satisfies the Hamilton–Jacobi inequality:

Conversely [14]:

Each Lipschitz continuous function w(τ,p,q) satisfying

is an ε-value function; i.e., it satisfies (10)–(11).

In the next section, we describe a numerical construction of a function w satisfying (12).

4 Numerical Approximation of Value Function

This section is an adaptation of the method developed by Pustelnik in his Ph.D. thesis [15] for the numerical approximation of the value function for the Bolza problem from optimal control theory. The main idea of that method is to approximate the Hamilton–Jacobi expression from (12) by a piecewise constant function. However, we do not know the function w. The essential part of the method is that we start with a quite arbitrary smooth function g and then shift the Hamilton–Jacobi expression with g by a piecewise constant function to get an inequality similar to (12). Thus, let \(\tilde{D}\ni (\tau ,p,q)\rightarrow g(\tau ,p,q)\) be an arbitrary function of class \(C^{2}\) in \(\tilde{D}\), such that g(1,p,q)=p, (p,q)∈D. For a given function g, we define in \(\tilde{D}\times R^{+}\times U\) the function \((\tau ,\tilde{p},\tilde{q},u)\rightarrow G_{g}(\tau ,\tilde{p},\tilde{q},u)\) as

Next, we define the function \((\tau ,\tilde{p},\tilde{q})\rightarrow F_{g}(\tau ,\tilde{p},\tilde{q})\) as

Note that the function \(F_{g}\) is continuous in \(\tilde{D}\) and even Lipschitz continuous in \(\tilde{D}\), and denote its Lipschitz constant by \(M_{F_{g}}\). By the continuity of \(F_{g}\) and compactness of \(\overline{\tilde{D}}\), there exist \(k_{d}\) and \(k_{g}\) such that

The first step of the approximation is to construct a piecewise constant function \(h^{\eta ,g}\) which approximates the function \(F_{g}\). To this end, we need some notation.

4.1 Definition of Covering of \(\tilde{D}\)

Let η>0 be fixed and \(\{q_{j}^{\eta }\}_{j\in \mathbb{Z}}\) be a sequence of real numbers such that \(q_{j}^{\eta }=j\eta \), j∈ℤ (ℤ—set of integers). Denote

i.e.

Next, let us divide the set \(\tilde{D}\) into the sets \(P_{j}^{\eta ,g}\), j∈J, as follows:

As a consequence, we have, for all i,j∈J, i≠j, \(P_{i}^{\eta ,g}\cap P_{j}^{\eta ,g}=\varnothing \) and \(\bigcup_{j\in J}P_{j}^{\eta ,g}=\tilde{D}\), together with the following obvious proposition.

Proposition 4.1

For each \((\tau ,p,q)\in \tilde{D}\), there exists an ε>0 such that the ball with center (τ,p,q) and radius ε is either contained in exactly one set \(P_{j}^{\eta ,g}\), j∈J, or contained in the union of two sets \(P_{j_{1}}^{\eta ,g}\), \(P_{j_{2}}^{\eta ,g}\), \(j_{1},j_{2}\in J\). In the latter case, \(|j_{1}-j_{2}|=1\).

4.2 Discretization of \(F_{g}\)

Define in \(\tilde{D}\times R^{+}\) a piecewise constant function

Then, by the construction of the covering of \(\tilde{D}\), we have

i.e., the function (15) approximates \(F_{g}\) with accuracy η.
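The idea of replacing a continuous function by a piecewise constant one on level bands of width η can be sketched as follows. This is a minimal illustration under stated assumptions: the bands are taken to be \(\{j\eta \le F < (j+1)\eta \}\), the surrogate takes the lower level jη on each band, and `F_demo` is a hypothetical smooth stand-in rather than the function \(F_{g}\) of the text. The sign convention of the piecewise constant function in the original construction may differ.

```python
import math

def band_index(value, eta):
    """Index j of the band [j*eta, (j+1)*eta) that contains value."""
    return math.floor(value / eta)

def make_piecewise_constant(F, eta):
    """Return h with h(x) = j*eta on the band where j*eta <= F(x) < (j+1)*eta."""
    def h(*point):
        return band_index(F(*point), eta) * eta
    return h

def F_demo(tau, p, q):
    # Hypothetical smooth stand-in for F_g; not the function from the text.
    return math.sin(3.0 * tau) + 0.001 * p - 0.002 * q

def sample_points(n=10):
    """A small grid of (tau, p, q) points used only for this demonstration."""
    return [(i / n, 100.0 * j, 50.0 * k)
            for i in range(n + 1) for j in range(n + 1) for k in range(n + 1)]

def max_gap(F, h, points):
    """Largest observed distance between F and its piecewise constant surrogate."""
    return max(abs(F(*x) - h(*x)) for x in points)
```

By construction the surrogate never exceeds the function and stays within η of it, which is the kind of two-sided bound the covering is designed to deliver.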

The next step is to estimate the integral of \(h^{\eta ,g}\) along any admissible trajectory by a finite sum of elements with values from the set {−η,0,η} multiplied by \(1-\tau_{i}\), \(0\le \tau_{i}<1\). Let \((\tilde{p}(\cdot ),\tilde{q}(\cdot ),u(\cdot ))\) be any admissible trajectory starting at the point \((0,p_{0},q_{0})\in \tilde{D}\). We show that there exists an increasing sequence of m points \(\{\tau_{i}\}_{i=1,\ldots ,m}\), \(\tau_{1}=0\), \(\tau_{m}=1\), such that, for \(\tau \in [\tau_{i},\tau_{i+1}]\),

Indeed, this is a direct consequence of two facts: the absolute continuity of \(\tilde{p}(\cdot )\), \(\tilde{q}(\cdot )\) and the continuity of \(F_{g}\). From (17), we infer that, for each i∈{1,…,m−1}, if \((\tau_{i},\tilde{p}(\tau_{i}),\tilde{q}(\tau_{i}))\in P_{j}^{\eta ,g}\) for a certain j∈J, then we have, for \(\tau \in [\tau_{i},\tau_{i+1})\),

Thus, for \(\tau \in [\tau_{i},\tau_{i+1}]\) along the trajectory \(\tilde{p}(\cdot )\), \(\tilde{q}(\cdot )\),

and so, for i∈{2,…,m−1},

where \(\eta_{\tilde{p}(\cdot ),\tilde{q}(\cdot )}^{i}\) is equal to −η or 0 or η. Integrating (18), we obtain, for each i∈{1,…,m−1},

and in consequence,

Now, we will present the expression

in a different, more useful form. By performing simple calculations, we get the two following equalities:

From (20), we get

and next, we obtain

and, taking into account (19), we infer that

We would like to stress that (21) is very useful from a numerical point of view: we can estimate the integral of \(h^{\eta ,g}(\cdot ,\cdot ,\cdot )\) along any trajectory \(\tilde{p}(\cdot ),\tilde{q}(\cdot )\) as a sum of a finite number of values, where each value consists of a number from the set {−η,0,η} multiplied by \(\tau_{m}-\tau_{i}\).
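The rearrangement behind this kind of formula is a summation by parts. The sketch below assumes a piecewise constant function that takes a value on \([\tau_{1},\tau_{2})\) and changes by a jump from {−η,0,η} at each interior point \(\tau_{2},\ldots ,\tau_{m-1}\). It then compares the direct interval-by-interval integral with the sum of the first value times \(\tau_{m}-\tau_{1}\) and of each jump times \(\tau_{m}-\tau_{i}\). The function names are hypothetical, and the identity is meant to illustrate the structure described above rather than to reproduce (21) verbatim.

```python
def values_from_jumps(first_value, jumps):
    """Values on consecutive intervals, obtained by accumulating the jumps."""
    values = [first_value]
    for jump in jumps:
        values.append(values[-1] + jump)
    return values

def integral_direct(taus, values):
    """Sum of value * interval length over the intervals [tau_i, tau_{i+1})."""
    return sum(v * (taus[i + 1] - taus[i]) for i, v in enumerate(values))

def integral_from_jumps(taus, first_value, jumps):
    """first_value * (tau_m - tau_1) + sum of jump_i * (tau_m - tau_i)."""
    tau_end = taus[-1]
    total = first_value * (tau_end - taus[0])
    # The jumps sit at the interior points tau_2, ..., tau_{m-1}.
    for tau_i, jump in zip(taus[1:-1], jumps):
        total += jump * (tau_end - tau_i)
    return total

def demo(eta=0.1):
    """Small example with m = 5 points and jumps taken from {-eta, 0, eta}."""
    taus = [0.0, 0.2, 0.5, 0.7, 1.0]
    jumps = [eta, 0.0, -eta]
    first_value = 0.3
    values = values_from_jumps(first_value, jumps)
    return (integral_direct(taus, values),
            integral_from_jumps(taus, first_value, jumps))
```

Both expressions agree, so only the first value, the partition points, and the sequence of jumps are needed to evaluate the integral.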

The last step is to reduce the infinite number of admissible trajectories to a finite one. To this end, we say that for two different trajectories \(( \tilde{p}_{1}(\cdot ),\tilde{q}_{1}(\cdot ) ) \), \(( \tilde{p}_{2}(\cdot ),\tilde{q}_{2}(\cdot ) ) \), the expressions

and

are identical iff

and

This means that in the set B of all trajectories \(\tilde{p}(\cdot ),\tilde{q}(\cdot )\) we can introduce an equivalence relation r: we say that two trajectories \(( \tilde{p}_{1}(\cdot ),\tilde{q}_{1}(\cdot ) ) \) and \(( \tilde{p}_{2}(\cdot ),\tilde{q}_{2}(\cdot ) ) \) are equivalent iff they satisfy (22) and (23). We denote the set of all disjoint equivalence classes by \(B_{r}\). The cardinality of \(B_{r}\), denoted by \(\Vert B_{r}\Vert \), is finite and bounded from above by \(3^{m+1}\).

Define

It is easy to see that the cardinality of X is finite.

Remark 4.1

One may wonder whether the reduction of an infinite number of admissible trajectories to a finite one makes computational sense, especially if the finite number may be as large as \(3^{m+1}\). In the theorems below, we can always take the infimum and supremum over all admissible trajectories, and the assertions remain true. However, from a computational point of view, there are examples in which it is easier and more efficient to calculate the infimum over finite sets.

The considerations above allow us to estimate the approximation of the value function.

Theorem 4.1

Let \(( \tilde{p}(\cdot ),\tilde{q}(\cdot) ) \) be any admissible pair such that

Then we have the following estimate:

Proof

By inequality (16),

we have

Integrating the last inequality along any \(( \tilde{p}(\cdot ),\tilde{q} (\cdot ) ) \) in the interval \([\tau_{1},\tau_{m}]\), we get

Thus, by a well-known theorem [16],

Hence, we get two inequalities:

and

Both inequalities imply that

As a consequence of the above, we get

and thus the assertion of the theorem follows. □

Now, we use the definition of equivalence class to reformulate the theorem above in a way that is more useful in practice. To this effect, let us note that, by definition of the equivalence relation r, we have

and

Taking into account (21), we get

and a similar formula for supremum. Applying that to the result of the theorem above, we obtain the following estimation:

Thus, we come to the main theorem of this section, which allows us to reduce an infinite dimensional problem to the finite dimensional one.

Theorem 4.2

Let η>0 be given. Assume that there is θ>0, such that

Then

is an ε-optimal value at \((\tau_{1},p_{0},q_{0})\) for ε=2η+θ.

Proof

From the formulae (24), (25), we infer

Then, using the definition of value function (7), we get (26). □

Example 4.1

Let the total amount of anti-angiogenic inhibitors in (2) be A=300 mg. Take the initial values \(p_{0}=15502\) for the tumor and \(q_{0}=15500\) for the vasculature, and κ=1. With u=10 (we assume that the maximum dose at which inhibitors can be given is a=75 mg), taking an approximate solution of (3), (4), we jump to the singular arc [8] and then follow it (in a discrete way) until all available inhibitors are used up; the final values are then p=9283.647 and q=5573.07. We construct a function g numerically such that \(g(0,p_{0},q_{0})=9283.647\) and assumption (25) is satisfied with θ=0, η=0.1 and \(h^{\eta ,g}(\tau_{1}, \tilde{p}(\tau_{1}),\tilde{q}(\tau_{1}))=0\).

5 Conclusions

The paper treats the free final-time optimal control problem in cancer therapy through the ε-dynamic programming approach, in which every Lipschitz solution of the Hamilton–Jacobi inequality is an ε-optimal value of the cost of the treatment. A numerical construction of the ε-optimal value is then presented and, as a final result, a computational formula for the ε-optimal value is given.

References

1. Boehm, T., Folkman, J., Browder, T., O’Reilly, M.S.: Antiangiogenic therapy of experimental cancer does not induce acquired drug resistance. Nature 390, 404–407 (1997)

2. Kerbel, R.S.: A cancer therapy resistant to resistance. Nature 390, 335–336 (1997)

3. Kerbel, R.S.: Tumor angiogenesis: past, present and near future. Carcinogenesis 21, 505–515 (2000)

4. Klagsbrun, M., Soker, S.: VEGF/VPF: the angiogenesis factor found? Curr. Biol. 3, 699–702 (1993)

5. Hahnfeldt, P., Panigrahy, D., Folkman, J., Hlatky, L.: Tumor development under angiogenic signaling: a dynamical theory of tumor growth, treatment response, and postvascular dormancy. Cancer Res. 59, 4770–4775 (1999)

6. D’Onofrio, A.: Rapidly acting antitumoral anti-angiogenic therapies. Phys. Rev. E, Stat. Nonlinear Soft Matter Phys. 76(3), 031920 (2007)

7. D’Onofrio, A., Gandolfi, A.: Tumour eradication by antiangiogenic therapy: analysis and extensions of the model by Hahnfeldt et al. (1999). Math. Biosci. 191, 159–184 (2004)

8. Ledzewicz, U., Schättler, H.: Optimal bang-bang controls for a 2-compartment model in cancer chemotherapy. J. Optim. Theory Appl. 114, 609–637 (2002)

9. Ledzewicz, U., Schättler, H.: Anti-angiogenic therapy in cancer treatment as an optimal control problem. SIAM J. Control Optim. 46(3), 1052–1079 (2007)

10. Ledzewicz, U., Schättler, H.: Optimal and suboptimal protocols for a class of mathematical models of tumor anti-angiogenesis. J. Theor. Biol. 252, 295–312 (2008)

11. Ledzewicz, U., Oussa, V., Schättler, H.: Optimal solutions for a model of tumor anti-angiogenesis with a penalty on the cost of treatment. Appl. Math. 36(3), 295–312 (2009)

12. Maurer, H., Oberle, J.: Second order sufficient conditions for optimal control problems with free final time: the Riccati approach. SIAM J. Control Optim. 41(2), 380–403 (2002)

13. Fleming, W.H., Rishel, R.W.: Deterministic and Stochastic Optimal Control. Springer, New York (1975)

14. Nowakowski, A.: ε-Value function and dynamic programming. J. Optim. Theory Appl. 138(1), 85–93 (2008)

15. Pustelnik, J.: Approximation of optimal value for Bolza problem. Ph.D. Thesis (2009). (in Polish)

16. Castaing, C., Valadier, M.: Convex Analysis and Measurable Multifunctions. Lecture Notes in Math., vol. 580. Springer, New York (1977)

Open Access

This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

Additional information

Communicated by A. d’Onofrio.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License (https://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Nowakowski, A., Popa, A. A Dynamic Programming Approach for Approximate Optimal Control for Cancer Therapy. J Optim Theory Appl 156, 365–379 (2013). https://doi.org/10.1007/s10957-012-0137-z

DOI: https://doi.org/10.1007/s10957-012-0137-z