Abstract

We derive thermodynamic functionals for spatially inhomogeneous magnetization on a torus in the context of an Ising spin lattice model. We calculate the corresponding free energy and pressure (by applying an appropriate external field using a quadratic Kac potential) and show that they are related via a modified Legendre transform. The local properties of the infinite volume Gibbs measure, related to whether a macroscopic configuration is realized as a homogeneous state or as a mixture of pure states, are also studied by constructing the corresponding Young-Gibbs measures.

1 Introduction

In continuum mechanics, to describe the properties of a material, one studies the minimization of a given free energy functional with respect to an appropriate order parameter. The physical properties of the system are encoded in this functional which, in accordance with the second law of thermodynamics, is a convex function. Of particular interest is the regime of phase transition between pure states, which corresponds to a linear segment in the graph of the above functional with respect to the order parameter. In such a case, the solution of the minimization problem can be realized as a fine mixture of the two pure phases of the system. This is how microstructures arise, a phenomenon observed in materials and with significant technological implications. The percentage of each phase in this mixture has been successfully described by the use of Young measures. For an overview, one can look at [12] and the references therein. On the other hand, from an atomistic viewpoint and at finite temperature, there is a well-developed rigorous theory of phase transitions. For example, in the case of the Ising model, each pure phase is described via an extremal Gibbs measure and mixtures via convex combinations of the extremal ones. In this paper, we connect the two descriptions and derive a macroscopic continuum mechanics theory for a scalar order parameter starting from statistical mechanics. In this context, we study the appearance of microstructures in our model by constructing Young-Gibbs measures, as they were introduced by Kotecký and Luckhaus in [10] for the case of elasticity. For more analogues of Young measures in the analysis of the collective behaviour in interacting particle systems, see [14].

To fix ideas, we consider the Ising model with nearest-neighbour ferromagnetic interaction as reference Hamiltonian. To allow spatially inhomogeneous macroscopic magnetization profiles, we have to patch together such Ising models for each given macroscopic magnetization. To obtain the desired profile, we can either impose a canonical constraint directly or add an external magnetic field to the Hamiltonian. We follow the second strategy and implement it by using a Kac potential acting at an intermediate scale and penalizing deviations from an associated average magnetization (in other words, fixing the magnetization in a weaker sense than the canonical constraint). We study the Lebowitz-Penrose limit of the corresponding free energy and pressure and show equivalence of ensembles. As a result, for every macroscopic magnetization, there is a unique external field that can produce it. Note that this fact is not true for the nearest-neighbour Ising model in the phase transition regime at zero external field. Indeed, thanks to the Kac term, we are able to fix a given value of the magnetization at large scales, but this is still not possible at smaller ones. In fact, what we observe at these smaller scales is the persistence of the two pure states of the Ising model with a percentage determined by the overall macroscopic magnetization.

It is worth mentioning that, for the case of the canonical ensemble with homogeneous magnetization, the actual geometry of the location of the pure states has been investigated in the celebrated result of the construction of the Wulff shape for the Ising model, [3]. In an inhomogeneous set-up, the equivalent problem would be to further investigate how such shapes corresponding to two neighbouring macroscopic points are connected, but this is a challenging question beyond the scope of our paper.

To summarize, the presence of the Kac term in the Hamiltonian produces the phenomenon of microstructure as the result of the competition between the Ising factor, which favours aligned spins, and the long range averages, which tend to keep the average fixed as induced by the Kac term. As a consequence, modulated patterns made out of the pure states are created and macroscopic values of the magnetization are realized in this manner. The percentage of each pure state in such a mixture is captured by the Young measure. However, it would be desirable to study more detailed properties such as the geometric shape of such structures. At zero temperature, there have been several studies at both the mesoscopic-macroscopic scale (without claim of being exhaustive, we refer to [1, 2, 11] for a rigorous analysis) and the microscopic scale for lattice models, as in a recent series of works by Giuliani, Lebowitz and Lieb, see [8] and the references therein. It would be of fundamental importance to develop such a theory at finite temperature, as one would like to incorporate fluctuation-driven phenomena. However, this is still beyond the available techniques.

The paper is organized as follows: in Sect. 2, we present the model and the main theorems. The proof of the limiting free energy and pressure is given in Sect. 3. This is a standard result that essentially follows after putting together the results for the homogeneous case, which are recalled in Appendix 1. In Sect. 4, we prove equivalence of ensembles. In Sect. 5, as a corollary of the large deviations, we show that spin averages in domains larger than the Kac scale converge in probability to the fixed macroscopic configuration. The second part of the paper investigates what happens when we take such averages in domains smaller than the Kac scale. We see that, in the phase transition regime, local averages converge in probability to averages with respect to a mixture of the pure states which, in accordance with the theory of the deterministic case, we call Young-Gibbs measure. The relevant proofs are given in Sect. 6 with some details left for Appendix 2.

2 Notation and Results

Let \(\mathbb {T}:= \left[ -\frac{1}{2}, \frac{1}{2} \right) ^d\) be the d-dimensional unit torus. For \(q\in \mathbb N\), we consider a small scaling parameter \(\varepsilon \) of the form \(2^{-q}\). In this case, \(\lim _{\varepsilon \rightarrow 0}\) stands for \(\lim _{q\rightarrow \infty }\). The microscopic version of \({\mathbb {T}}\) is the lattice \(\Lambda _{\varepsilon } := \left( \varepsilon ^{-1}{\mathbb {T}}\right) \cap {\mathbb Z}^d\). For a non-empty subset \(A\subset {\mathbb Z}^d\), let \(\Omega _{A} := \left\{ -1,1\right\} ^{A}\) be the set of configurations \(\sigma \) in A that give the value of the spin \(\sigma (x)\in \{-1,1\}\) in each lattice point \(x\in A\). Whenever needed, we also use the notation \(\sigma _A\).

Given a scalar function \(\alpha \in {C}({\mathbb {T}},{\mathbb R})\), which plays the role of an inhomogeneous external field, we define the Hamiltonian \(H_{\Lambda _{\varepsilon },\gamma , \alpha }:\Omega _{\Lambda _{\varepsilon }}\rightarrow {\mathbb R}\) as follows:

The first part \(H_{\Lambda _\varepsilon }^{ nn }:\Omega _{\Lambda _\varepsilon }\rightarrow \mathbb R\) is defined by

where \(x\sim y\) means that x and y are nearest-neighbour sites, assuming periodic boundary conditions in the box \(\Lambda _\varepsilon \). The second part is

where

is an average of the configuration \(\sigma \) around a vertex \(x\in \Lambda _\varepsilon \). We introduce another small parameter \(\gamma >0\) and the Kac interaction \(J_{\gamma }:\Lambda _\varepsilon \times \Lambda _\varepsilon \rightarrow {\mathbb R}\) defined by

Here \(\phi \in C^2({\mathbb R}^d, [0,\infty ))\) is an even function that vanishes outside the unit ball \(\{ r\in \mathbb R^d:|r|< 1 \}\) and integrates to 1. The difference \(x-y\) appearing in the right-hand side of (2.5) is a difference modulo \(\Lambda _\varepsilon \). Hence, the second term enforces the averages of spin configurations to follow \(\alpha \). Given (2.1), the associated finite volume Gibbs measure is defined by

where \(Z_{\Lambda _{\varepsilon }, \gamma , \alpha }\) is the normalizing constant. Note that throughout this paper, we omit the dependence on \(\beta \) from the notation.

To study inhomogeneous magnetizations, we assume that locally in the macroscopic scale (i.e. the scale of the torus \(\mathbb {T}\)) we have obtained a given value of the magnetization, which can however vary slowly as we move from one point to another. To describe what “locally” and “varying slowly” mean, we introduce an intermediate scale l of the form \(2^{-p}\), \(p\in \mathbb N\). Again, \(\lim _{l\rightarrow 0}\) stands for \(\lim _{p\rightarrow \infty }\). Let \(\{C_{l,1},\dots ,C_{l,N_l}\}\) be the natural partition \(\mathscr {C}_l\) of \(\mathbb {T}\) into \(N_l=l^{-d}\) cubes of side-length l, and let \(\{\Delta _{\varepsilon ^{-1}l,1},\ldots ,\Delta _{\varepsilon ^{-1}l,N_l}\}\) be its microscopic version, denoted by \(\mathscr {D}_{\varepsilon ^{-1}l}\). Its elements are given by \(\Delta _{\varepsilon ^{-1}l,i} := (\varepsilon ^{-1}C_{l,i})\cap \mathbb {Z}^d\) for every \(i=1,\ldots ,N_l\). With an abuse of notation, for every i, we identify the set \(\Delta _{\varepsilon ^{-1}l,i}\subset \mathbb {Z}^d\) with the set \(\cup _{x\in \Delta _{\varepsilon ^{-1}l,i}} \Delta _{1}(x)\) in \(\mathbb R^d\), where \(\Delta _1(x)\) is the cube of side-length 1 centered at x. Accordingly, \(|\Delta _{\varepsilon ^{-1}l}|\) denotes both the volume of the set \(\Delta _{\varepsilon ^{-1}l}\) and the number of points of \(\mathbb Z^{d}\) within it. Given \(u\in {C}({\mathbb {T}}, \left( -1,1\right) )\), let \(u^{(l)}:\mathbb {T}\rightarrow (-1,1)\) be the piece-wise constant approximation of u at scale l: for all \(r\in C_{l,i}\),

$$\begin{aligned} u^{(l)}(r) := \frac{1}{|C_{l}|}\int _{C_{l,i}} u(r')\,dr'. \end{aligned}$$(2.7)

Here \(|C_{l}|=l^d\) denotes the volume of any of the cubes \(C_{l,1},\ldots ,C_{l,N_l}\). For A and B non-empty subsets of \({\mathbb Z}^d\) such that \(A\subset B\), and for \(\sigma \in \Omega _B\), we define the average magnetization of \(\sigma \) in A by

$$\begin{aligned} m_A(\sigma ) := \frac{1}{|A|}\sum _{x\in A}\sigma (x). \end{aligned}$$(2.8)

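For \(n\in \mathbb N\), we define the set

$$\begin{aligned} I_n := \left\{ -1,-1+\frac{2}{n},\ldots ,1-\frac{2}{n},1\right\} . \end{aligned}$$(2.9)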

Observe that, under this definition, \(I_{ \left| A\right| }\) is the set of all possible (discrete) values that \(m_A\) can assume. For \(t\in [-1,1]\), let \(\lceil t \rceil _n\) be the value in \(I_{n}\) corresponding to the best approximation of t from above:

$$\begin{aligned} \lceil t \rceil _n := \min \left\{ m\in I_n: m\ge t\right\} . \end{aligned}$$(2.10)
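
The discrete set \(I_n\) and the rounding \(\lceil t \rceil _n\) can be made concrete with a short sketch. The function names `magnetization_values` and `ceil_n` are hypothetical, and exact rational arithmetic is our choice for illustration (it avoids floating-point ties); none of this is taken from the paper.

```python
from fractions import Fraction
import math


def magnetization_values(n):
    """All values the average of n spins in {-1, +1} can take: (2k - n)/n, k = 0..n."""
    return [Fraction(2 * k - n, n) for k in range(n + 1)]


def ceil_n(t, n):
    """Smallest element of I_n that is >= t, for t in [-1, 1]."""
    # (2k - n)/n >= t  is equivalent to  k >= n (t + 1) / 2.
    k = math.ceil(Fraction(n) * (Fraction(t) + 1) / 2)
    return Fraction(2 * k - n, n)
```

For instance, with \(n=4\) the admissible values are \(-1,-\frac{1}{2},0,\frac{1}{2},1\), and `ceil_n(0.3, 4)` returns `Fraction(1, 2)`. The rounding error \(\lceil t \rceil _n-t\) is always smaller than \(2/n\).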

Furthermore, we consider the set

of all configurations whose locally averaged magnetization \(m_{\Delta _{\varepsilon ^{-1}l,i}}\) is close to the average of u in the corresponding macroscopic coarse grained box \(C_{l,i}\) [see (2.7)], for every \(i=1\ldots ,N_l\). We have:

Theorem 2.1

(Free energy and pressure) For \(u\in {C}({\mathbb {T}}, \left( -1,1\right) )\) and \(\alpha \in {C}({\mathbb {T}},{\mathbb R})\), we have

This limit gives the infinite volume free energy associated to the Hamiltonian (2.1). Here \(f_\beta \) is the infinite volume free energy associated to the Hamiltonian (2.2) (see Theorem 6.7). Similarly, we obtain the infinite volume pressure

Moreover, given \(I_{ \alpha }: {C}({\mathbb {T}}, \left( -1,1\right) )\rightarrow {\mathbb R}\) defined by

we obtain the following Large Deviations limit:

where the set \(\Omega _{\Lambda _\varepsilon ,l}(u)\) is defined in (2.11).

The proof is given in Sect. 3.

Remark 2.2

The minimization problem in Theorem 2.1 can be easily solved; indeed, since \(f_\beta \) is convex, symmetric with respect to the origin and \(\lim _{t \rightarrow \pm 1} f_\beta '(t)=\pm \infty \), the associated Euler-Lagrange equation

$$\begin{aligned} f_\beta '(u)+2(u-\alpha )=0 \end{aligned}$$(2.16)

has a unique solution \(u := \tilde{u}(\alpha )\) for every number \(\alpha \in \mathbb R\). On the other hand, for a given \(u\in (-1,1)\), if we choose \(\tilde{\alpha }(u) := u+\frac{1}{2} f_\beta '(u)\), then we can say that the Hamiltonian \(H_{\Lambda _\varepsilon ,\gamma , \alpha }\) with \(\alpha =\tilde{\alpha }(u)\) fixes the magnetization profile u in the sense of large deviations. The same is true point-wisely for functions, namely, \(x\mapsto \tilde{u}(\alpha (x))\) is the minimizer of \(F_{\alpha }\) in \(C(\mathbb {T},(-1,1))\).
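
The correspondence between \(\alpha \) and u in this remark can be illustrated numerically. The sketch below is not the paper's computation: since \(f_\beta \) has no simple closed form, it uses a hypothetical stand-in free energy with the same qualitative properties (convex, even, derivative diverging at \(\pm 1\)), namely the entropy-like function whose derivative is \(\mathrm {arctanh}(u)/\beta \). The names `f_prime`, `alpha_tilde`, `u_tilde` and the value of `BETA` are assumptions made for illustration. The equation solved is \(f'(u)+2(u-\alpha )=0\), which is the relation equivalent to \(\tilde{\alpha }(u)=u+\frac{1}{2}f_\beta '(u)\).

```python
import math

BETA = 1.0  # hypothetical inverse temperature used only by the stand-in below


def f_prime(u):
    """Derivative of a stand-in free energy (NOT the Ising f_beta).

    f(u) = [(1+u)/2 * log((1+u)/2) + (1-u)/2 * log((1-u)/2)] / BETA,
    so f'(u) = atanh(u) / BETA: odd, increasing, diverging as u -> +-1.
    """
    return math.atanh(u) / BETA


def alpha_tilde(u):
    """External field that fixes the magnetization u: alpha = u + f'(u)/2."""
    return u + 0.5 * f_prime(u)


def u_tilde(alpha, tol=1e-13):
    """Solve f'(u) + 2 (u - alpha) = 0 on (-1, 1) by bisection."""
    g = lambda u: f_prime(u) + 2.0 * (u - alpha)
    lo, hi = -1.0 + 1e-12, 1.0 - 1e-12
    if g(lo) > 0 or g(hi) < 0:
        # Only happens for very large |alpha| with this simple bracket.
        raise ValueError("alpha outside the range handled by this bracket")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if g(mid) < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

Because the left-hand side of the equation is strictly increasing in u, bisection finds the unique root, and `u_tilde(alpha_tilde(u))` recovers u up to the tolerance. With the true \(f_\beta \), which is flat on \([-m_\beta ,m_\beta ]\), the quadratic term alone would still make the map strictly increasing.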

In Remark 2.2, we have established a relation between a fixed macroscopic magnetization u and the way to obtain it by imposing an appropriate external field \(\tilde{\alpha }(u)\) via a grand canonical ensemble with Hamiltonian \(H_{\Lambda _\varepsilon ,\gamma , \tilde{\alpha }(u)}\). There is, however, an important difference with respect to the case of the Ising model with homogeneous external magnetic field: in the case of homogeneous magnetization, the correspondence between values of the external field and values of the magnetization is not one-to-one due to the fact that \(f_\beta \) is constant on the interval \([-m_\beta ,m_\beta ]\) (to be specified later). On the contrary, in our model, we obtain such a one-to-one correspondence because of the presence of the Kac term which, acting at an intermediate scale, assigns a value to the magnetization according to the external field. This is manifested by a new quadratic term appearing in the free energy.

In the following theorem, we prove a duality relation between the free energy that corresponds to the Ising part of the Hamiltonian (2.2) and the pressure \(P(\alpha )\), obtained through a modified Legendre transform with the external field action given by (2.3).

Theorem 2.3

(Equivalence of ensembles) For \(\alpha \in {C} \left( {\mathbb {T}}, \mathbb {R}\right) \), the following identity holds:

Conversely, for \(u\in {C} \left( {\mathbb {T}}, \left( -1,1\right) \right) \),

The proof is given in Sect. 4. As we mentioned before, the Kac potential acts at an intermediate scale \(\gamma ^{-1}\) and tends to fix the average of the spin values in any box larger than \(\gamma ^{-1}\). To state this result properly, we recall the empirical magnetization defined in (2.8) and, with a slight abuse of notation, we extend it to a function from \(\mathbb {T}\) to \([-1,1]\) given by \(r\mapsto m_{B_{R}(\varepsilon ^{-1}r)}\) in such a way that it is constant in each small cube of side-length \(\varepsilon \). Here \(B_R(x)\) is the ball of radius R with center x, taking into consideration the periodicity in \(\Lambda _\varepsilon \). The first result asserts that, for \(\alpha \in C(\mathbb {T},\mathbb R)\) and \(R_\gamma \gg \gamma ^{-1}\), empirical averages converge in probability to the magnetization profile \(u=\tilde{u}(\alpha )\). Formally, we define the test operator \(L_{\omega ,g}:L^1(\mathbb {T},[-1,1])\rightarrow \mathbb R\), depending on a function \(\omega \in C(\mathbb {T},\mathbb R)\) and on a Lipschitz function \(g:[-1,1]\rightarrow \mathbb R\), by

Under this definition, the following theorem asserts that the operator applied to the empirical average

converges to \(L_{\omega ,g}(u)\) in \(\mu _{\Lambda _\varepsilon ,\gamma ,\alpha }\)-probability. Note that this convergence differs slightly from the usual convergence in probability, since the measure \(\mu _{\Lambda _\varepsilon ,\gamma ,\alpha }\) changes as \(\varepsilon \rightarrow 0\).

Theorem 2.4

Let \(u \in {C} \left( {\mathbb {T}},(-1,1)\right) \) and choose \(\alpha := \tilde{\alpha }(u)\) as in Remark 2.2. Then, for \(L_{\omega ,g}\) given in (2.19), \(R_\gamma \gg \gamma ^{-1}\) and \(\delta >0\), we have

The proof is given in Sect. 5. As will be evident from the proof, in the above case \(R_{\gamma }\gg \gamma ^{-1}\), the test function g is not relevant.

A different scenario is observed when considering a smaller scale R: the value of the random sequence \(m_{B_{R}(x)}(\sigma )\) may oscillate and, as a consequence, its limiting value may not be just the average. In this case, we study more detailed properties of the underlying microscopic magnetizations. We refer to these as the “microscopic” spin statistics of the measure \(\mu _{\Lambda _{\varepsilon }, \gamma , \alpha }\) (as opposed to the “macroscopic” statistics given by large deviations). More precisely, we investigate how the limiting value u(r) in (2.21) is realized at intermediate scales, as a homogeneous state or as a mixture of the pure states, and how one can retain such information in the limit. This is reminiscent of the theory of Young measures as applied to describe microstructure; see [12] for an overview. In fact, in order to describe it in our case, we will construct the appropriate Young measure.

Definition 2.5

(Young measure) A Young measure is a map

such that, for every continuous function \(g:[-1,1] \rightarrow \mathbb {R}\), the map \(r \mapsto \langle \nu (r), g \rangle \) is measurable. Here \(\mathcal P([-1,1])\) is the space of probability measures on \([-1,1]\), and \(\langle \nu (r),g \rangle \) indicates the expected value of g with respect to the probability \(\nu (r)\).

To state the main result, we need to recall some background. For an external field \(h\in {\mathbb R}\), let \(H^{ nn }_{\Lambda _\varepsilon , h}:\Omega _{\Lambda _{\varepsilon }}\rightarrow {\mathbb R}\) be the Hamiltonian defined by

and let \(\mu ^{ nn }_{\Lambda _\varepsilon ,h}\) be the associated finite volume measure

It is known that the set \(\mathcal G(\beta ,h)\) of infinite volume Gibbs measures associated to (2.22) is a non-empty, weakly compact, convex set of probability measures on \(\Omega _{\mathbb Z^d}\). More specifically, in \(d=2\) and for any pair \((\beta ,h)\), the set \(\mathcal G(\beta ,h)\) is the convex hull of two extremal elements \(\mu ^{ nn }_{h,\pm }\), the infinite volume limits of (2.23) with ± boundary conditions. Any non-extremal Gibbs measure can be uniquely expressed as a convex combination of these two elements: if \(G\in \mathcal G(\beta ,h)\), then there exists unique \(\lambda _G\in [0,1]\) such that

We define the magnetization at the origin as the expectation

The function \(\varphi :\mathbb R\rightarrow (-1,1)\) is odd, strictly increasing, continuous in every point \(h\ne 0\), and satisfies

There exists a critical value \(\beta _c>0\) such that the limit

is positive if and only if \(\beta >\beta _c\); it is the so-called spontaneous magnetization. Note that it also coincides with the magnetization associated to \(\mu ^{ nn }_{0,+}\): \(m_\beta =\int \sigma _0 \mu ^{ nn }_{0,+}(d\sigma )\). For \(\beta \le \beta _c\), we have \(m_\beta =0\). In this case, for every \(m\in (-1,1)\), there exists a unique value \(h=h(m)\in \mathbb R\) such that \(\varphi (h)=m\). If \(m_\beta >0\), the same is true for values of the magnetization such that \(|m|>m_\beta \). But what happens if \(|m|\le m_\beta \)? This has been investigated in [6], where the canonical infinite volume Gibbs measure has been constructed. Since every magnetization \(u\in [-m_\beta ,m_\beta ]\) can be uniquely written as a convex combination

$$\begin{aligned} u=\lambda _u m_\beta +(1-\lambda _u)(-m_\beta ) \end{aligned}$$(2.28)

with \(\lambda _u\in [0,1]\), u is the magnetization associated to the probability

$$\begin{aligned} \lambda _u\mu ^{ nn }_{0,+}+(1-\lambda _u)\mu ^{ nn }_{0,-}. \end{aligned}$$(2.29)

Hence, although “macroscopically” one observes the value u of the magnetization, in intermediate (still diverging) scales, one observes mixtures of the \(m_\beta \) and \(-m_\beta \) phases with a frequency given by \(\lambda _{u}\).
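
As a short worked step (our own rearrangement, assuming \(m_\beta >0\) and reading the convex combination as \(u=\lambda _u m_\beta +(1-\lambda _u)(-m_\beta )\), the form that also appears in (2.35)), the weight of the plus phase can be written explicitly:

$$\begin{aligned} u=(2\lambda _u-1)\,m_\beta \quad \Longleftrightarrow \quad \lambda _u=\frac{m_\beta +u}{2m_\beta }. \end{aligned}$$

In particular, \(u=0\) corresponds to an even mixture \(\lambda _u=\frac{1}{2}\), while \(u=\pm m_\beta \) gives the pure phases \(\lambda _u=1\) and \(\lambda _u=0\), respectively.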

The purpose of the next theorem is to investigate the above fact for inhomogeneous magnetizations, namely by “imposing” a macroscopic profile u(r) in a grand canonical fashion, as described in Remark 2.2. For low enough temperature and for \(|u(r)|<m_{\beta }\), at large scales (beyond \(\gamma ^{-1}\)), the system with Hamiltonian (2.1) tends to fix u(r) while, at smaller ones, it allows (large) fluctuations as long as their average over areas of order \(\gamma ^{-1}\) is compatible with u(r). Indeed, the result states that, in boxes of scale up to \(\gamma ^{-1}\), one of the two pure phases \(\pm m_\beta \) is observed while, at scales larger than \(\gamma ^{-1}\), we see u(r). To capture this phenomenon, we use the observable \(L_{\omega ,g}\) given by (2.19). With a slight abuse of notation, we can also view \(L_{\omega ,g}\) as acting over Young measures \(\nu (r)\in \mathcal P([-1,1])\) as follows:

Theorem 2.6

(Parametrization by Young measures) Let \(u\in C(\mathbb {T},(-1,1))\) and \(\alpha =\tilde{\alpha }(u)\in C(\mathbb T,\mathbb R)\) be its associated external field (given by the solution of (2.16)). We have the following cases:

- Case \(R_\gamma \gg \gamma ^{-1}\). For every \(\delta >0\),

$$\begin{aligned} \lim _{\gamma \rightarrow 0} \lim _{\varepsilon \rightarrow 0} \mu _{\Lambda _\varepsilon ,\gamma ,\alpha }(|L_{\omega ,g}(m_{B_{R_\gamma }(\varepsilon ^{-1}\cdot )})-L_{\omega ,g}(\nu _u)|>\delta )=0, \end{aligned}$$(2.31)

where the functional \(L_{\omega ,g}\) is defined in (2.19) and (2.30), and the Young measure is given by \(\nu _{u}(r) := \delta _{u(r)}\) for every \(r\in \mathbb {T}\). Here \(\delta _{u(r)}\) is the Dirac measure concentrated at u(r).

- Case \(R=O(1)\). Suppose \(d=2\) and let \(\beta >\log \sqrt{5}\). Then, for every \(\delta >0\),

$$\begin{aligned} \lim _{\gamma \rightarrow 0} \lim _{\varepsilon \rightarrow 0} \mu _{\Lambda _\varepsilon ,\gamma ,\alpha }(|L_{\omega ,g}(m_{B_{R}(\varepsilon ^{-1}\cdot )})-L_{\omega ,g}(\nu _{u,R})|>\delta )=0. \end{aligned}$$(2.32)

Here, for \(r\in \mathbb {T}\) and \(E\subset [-1,1]\) a Borel subset, the Young measure \(\nu _{u,R}\) is given by

$$\begin{aligned} \nu _{u,R}(r)(E) := {\left\{ \begin{array}{ll} \mu ^{ nn }_{h(u(r))}[m_{B_R(0)}\in E] &{} \text { if }|u(r)|>m_\beta \\ (\lambda _{u(r)}\mu ^{ nn }_{0,+}+(1-\lambda _{u(r)})\mu ^{ nn }_{0,-})[m_{B_R(0)}\in E] &{} \text { if }|u(r)|\le m_\beta \end{array}\right. }, \end{aligned}$$(2.33)

where \(\lambda _{u(r)}\) and h(u(r)) are given in (2.28) and the discussion preceding it, respectively.

- Case \(1\ll R \ll \gamma ^{-1}\). Under the same hypotheses as in the previous item (\(d=2\) and \(\beta >\log \sqrt{5}\)), for every \(\delta >0\),

$$\begin{aligned} \lim _{R\rightarrow \infty }\lim _{\gamma \rightarrow 0} \lim _{\varepsilon \rightarrow 0} \mu _{\Lambda _\varepsilon ,\gamma ,\alpha }(|L_{\omega ,g}(m_{B_{R}(\varepsilon ^{-1}\cdot )})-L_{\omega ,g}(\nu _{u})|>\delta )=0, \end{aligned}$$(2.34)

where

$$\begin{aligned} \nu _u(r) := {\left\{ \begin{array}{ll} \delta _{u(r)}, &{} \text { if }|u(r)|>m_\beta \\ \lambda _{u(r)}\delta _{m_\beta }+(1-\lambda _{u(r)})\delta _{-m_\beta }, &{} \text { if }|u(r)|\le m_\beta \end{array}\right. }. \end{aligned}$$(2.35)

The case \(R_\gamma \gg \gamma ^{-1}\) is only a restatement of Theorem 2.4. The proof of the case \(R=O(1)\) is given in Sect. 6. The case \(1\ll R \ll \gamma ^{-1}\) follows as a corollary of the previous case and it is briefly presented in Subsect. 6.1.
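
To see what the limiting Young measure (2.35) does to a test function g, the following sketch evaluates \(\langle \nu _u(r),g\rangle \) for a given value \(u=u(r)\). It is only an illustration: the function names are hypothetical, and the value of \(m_\beta \) is computed from the classical Onsager-Yang formula for the two-dimensional nearest-neighbour Ising model under the assumption of unit coupling with each bond counted once, a normalization that may differ from the one used in (2.2).

```python
import math


def spontaneous_magnetization(beta):
    """Onsager-Yang formula in d = 2, ASSUMING unit coupling per bond."""
    s = math.sinh(2.0 * beta)
    return (1.0 - s ** -4) ** 0.125 if s > 1.0 else 0.0


def young_expectation(u, m_beta, g):
    """Expectation of g under nu_u(r) from (2.35), where u = u(r)."""
    if abs(u) > m_beta or m_beta == 0.0:
        # Outside the coexistence interval: Dirac mass at u.
        return g(u)
    # Inside: mixture of the two pure phases with weight lambda_u.
    lam = (u + m_beta) / (2.0 * m_beta)
    return lam * g(m_beta) + (1.0 - lam) * g(-m_beta)
```

With g the identity, the expectation returns u itself in both regimes, so the macroscopic magnetization is preserved. With a nonlinear g such as \(g(t)=t^2\) and \(|u|\le m_\beta \), the result is \(m_\beta ^2\) rather than \(u^2\), which is exactly the information about the mixture that the Dirac measure \(\delta _{u(r)}\) of the case \(R_\gamma \gg \gamma ^{-1}\) does not see.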

3 Proof of Theorem 2.1

In this section we prove the limits (2.12) and (2.13). Then, the limit in (2.15) is a direct consequence.

3.1 Proof of (2.12)

We first prove it for \(\alpha \) and u constant and then for the general case.

3.1.1 Constant u and \(\alpha \).

For \(u\in I_{ \left| \Lambda _\varepsilon \right| }\), we introduce the finite volume free energy associated to the Hamiltonian (2.1) by

For a generic \(u\in (-1,1)\) we prove that

We proceed in three steps: we first show that the limit \(\lim _{\varepsilon \rightarrow 0}F_{\Lambda _{\varepsilon }, \gamma , \alpha } \left( \lceil {u}\rceil _{\Lambda _\varepsilon }\right) \) exists for every \(\gamma \); we continue with a coarse-graining approximation and conclude establishing lower and upper bounds.

Step 1: existence of the limit \({\lim _{\varepsilon \rightarrow 0}F_{\Lambda _{\varepsilon }, \gamma , \alpha } \left( \lceil {u}\rceil _{\Lambda _\varepsilon }\right) }\) for fixed \({\gamma >0}\). Since \(\varepsilon =2^{-q}\), with a slight abuse of notation we denote the volume by \(\Lambda _q\) in order to keep track of the dependence on q. We have \(|\Lambda _{q+1}|=2^d |\Lambda _q|\). We also define the sequence of magnetizations \(u_q := \lceil {u}\rceil _{\Lambda _q}\). It suffices to prove that the sequence \((F_{\Lambda _q, \gamma ,\alpha }(u_q))_q\) is bounded below and that the inequality

holds for every q, where \((a_q)_q\) is a sequence of non-negative numbers such that \(\sum _q a_q<\infty \).

The fact that the sequence \((F_{\Lambda _q, \gamma ,\alpha }(u_q))_q\) is bounded from below follows from the inequalities

and the fact that the right hand side of (3.4) converges to the pressure with zero external field; see Theorem 6.7. To show (3.3), we write:

To find an upper bound for

we use the same sub-additive argument leading to (7.6) in the proof of Theorem 6.7. Indeed, repeating the argument appearing there, it can be proved that

where C is independent of q.

On the other hand, to estimate

we use the following continuity lemma whose proof is also given in Subsect. 1.

Lemma 3.1

If t and \(t'\) are consecutive elements of \(I_{|\Lambda _q|}\), then

where C is a constant that depends only on the dimension d.

The upper bound

follows after using this lemma repeatedly: indeed, \(u_{q+1}\) can be obtained from \(u_q\) moving through consecutive elements of \(I_{|\Lambda _{q+1}|}\) in at most \(2^d\) steps. To conclude, define

and observe that \(\sum _q a_q<\infty \).
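
For completeness, here is why these two facts give convergence. This is a standard argument written under the assumption that inequality (3.3) has the almost-monotone form \(F_{q+1}\le F_q+a_q\), where we abbreviate \(F_q := F_{\Lambda _q,\gamma ,\alpha }(u_q)\); the auxiliary sequence \(b_q\) is our notation.

$$\begin{aligned} b_q := F_q-\sum _{j<q}a_j \quad \Longrightarrow \quad b_{q+1}-b_q=F_{q+1}-F_q-a_q\le 0,\qquad b_q\ge \inf _{q'}F_{q'}-\sum _{j}a_j>-\infty . \end{aligned}$$

Thus \((b_q)_q\) is non-increasing and bounded below, hence convergent, and \(F_q=b_q+\sum _{j<q}a_j\) converges because the series \(\sum _j a_j\) does.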

Step 2: approximation by coarse-graining. We consider a microscopic parameter \(L_\gamma \) of the form \(2^{m}\), \(m\in {\mathbb Z}^+\) depending on \(\gamma \) such that \(\gamma L_\gamma \rightarrow 0\) as \(\gamma \rightarrow 0\). In the sequel, in order to simplify notation we drop the dependence on \(\gamma \) from the scale L. Recall that by \({\mathscr {C}}_{\varepsilon L}= \left\{ C_{\varepsilon L,i}\right\} _{i}\) (respectively \(\mathscr {D}_L= \left\{ \Delta _{ L,i}\right\} _{i}\)), we denote a macroscopic (respectively microscopic) partition of \(\Lambda _{\varepsilon }\) consisting of \(N_{\varepsilon L} := (\varepsilon ^{-1}/ L)^d\) many elements.

We define a new coarse-grained interaction \(J_\gamma ^{(L)}\) on the new scale L. Let \(\Delta _{L,k}\), \(\Delta _{L,k'} \in \mathscr {D}_L\); then for every \(x\in \Delta _{L,k}\) and \(y\in \Delta _{L,k'}\) we define

As before, \( \left| \Delta _{L}\right| \) denotes the cardinality of a generic box \(\Delta _{L,i}\). Since it assumes constant values for all \(x\in \Delta _{L,k}\) and \(y\in \Delta _{L,k'}\) we also introduce the notation

Note that, for any k, we have

Comparing to \(J_\gamma \), we have the error

where the constant C depends on d and \(\Vert D\phi \Vert _\infty \) (the sup norm of the first derivative of \(\phi \)). For a macroscopic parameter l (to be chosen \(\varepsilon L\) in this case) and for \(r\in C_{l,k}\), we define the piece-wise constant approximation of \(\alpha \) at scale l as in (2.7):

With this definition, (3.15) implies that

Note that using the notation \(\bar{J}_\gamma ^{(L)}\) and \(\bar{\alpha }^{(\varepsilon L)}_k\) we can write

For the nearest-neighbour part of the Hamiltonian, there are \(|\partial \Delta _{L}| N_{\varepsilon L}=L^{d-1}(\varepsilon ^{-1}/L)^d=L^{-1}|\Lambda _\varepsilon |\) nearest-neighbour pairs connecting different boxes among \(\Delta _{L,1},\ldots , \Delta _{L,N_{\varepsilon L}}\); hence we have

where \(H_{\Delta _{L,i}}^{ nn }\) is considered with periodicity in the box \(\Delta _{L,i}\). Thus, to calculate (3.1), we sum over all possible values \(u_1, \ldots , u_{N_{\varepsilon L}}\) of the magnetization in the boxes \(\Delta _{L,k}\), with \(k=1,\ldots , N_{\varepsilon L}\), and obtain

with a vanishing error of the order \(|\Lambda _\varepsilon | (\gamma L +\varepsilon L + L^{-1})\), as follows from (3.17), (3.18) and (3.19). Like before, we are using the simplified notation \(N=N_{\varepsilon L}\).

In the Appendix, Theorem 6.7, we prove that the convergence to the free energy \(f_\beta \) is uniform, hence the sum

can be approximated by \(e^{-\beta L^d f_\beta \left( u_k\right) }\) with an error bounded by \(e^{\beta L^d s \left( L\right) }\), with \(s \left( L\right) \rightarrow 0\) as \(L\rightarrow \infty \). Then, the overall error is also vanishing:

Finally, we are left with

Step 3: upper and lower bounds. To obtain a lower bound of (3.23), we bound the sum in (3.23) from above by the number of its terms times the maximum contribution. Note that the number of terms gives a contribution that vanishes in the limit \(\varepsilon \rightarrow 0\) after taking the logarithm and dividing by \(\beta \left| \Lambda _\varepsilon \right| \). Then the problem reduces to studying the minimum

where \(G: \left[ -1,1\right] ^N\rightarrow {\mathbb R}\) is the function defined by

Moreover, using the convexity of \(f_{\beta }\), we have

Furthermore, from the convexity of the function \(t\mapsto \left( t-\bar{\alpha }^{(\varepsilon L)}_i\right) ^2\), using (3.14), we obtain

Thus, using (3.26) and (3.27), expression (3.24) can be bounded from below by

which converges to \(f_\beta \left( u\right) + \left( u-\alpha \right) ^2\), and the lower bound follows.

For the upper bound of (3.23), we take one particular element \(\tilde{u}_1,...,\tilde{u}_N\) that realizes the value of the lower bound. In this way, we obtain a lower bound for the sum over all possible values of u in (3.23) that leads to the desired upper bound. The idea is that these values should be as close as possible to \(\lceil {u}\rceil _{ \left| \Lambda _\varepsilon \right| }\) and satisfy

Let \(u^-\) and \(u^+\) be the best possible approximations of \(\lceil {u}\rceil _{ \left| \Lambda _\varepsilon \right| }\) in \(I_{L^d}\) from below and from above, respectively. We have:

Notice that \(u^+-u^-\le \frac{2}{L^d}\). We define

Notice that identity (3.29) is satisfied by construction; moreover, it holds that

As \(u\in \left( -1,1\right) \), we can choose \( \left[ a,b\right] \subset \left( -1,1\right) \) such that \(\lceil {u}\rceil _{ \left| \Lambda _\varepsilon \right| }\in \left[ a,b\right] \) and \(\tilde{u}_i\in \left[ a,b\right] \) for every i, with \(\varepsilon \) small enough and L large enough. As \(f'_\beta \) is bounded in \( \left[ a,b\right] \) (see Theorem 6.7) and the function \(t\mapsto t^2\) is Lipschitz over bounded subsets of \({\mathbb R}\), it follows that

Moreover, since

we conclude the proof of the upper bound and with that the proof of (3.2).

3.1.2 General u and \(\alpha \).

For a macroscopic scale l of the form \(2^{-p}\), \(p\in \mathbb N\), recall the macroscopic partition \({\mathscr {C}}_l\) of \(\mathbb {T}\) and let \(\mathscr {D}_{\varepsilon ^{-1}l}\) be its microscopic version, both with \(N=l^{-d}\) elements. Given the function \(u\in C(\mathbb {T}, (-1,1))\), we define

and \(C_{l,i}\) is the macroscopic version of \(\Delta _{\varepsilon ^{-1}l,i}\). Note that the average \(\bar{u}^{(l)}_i\) does not depend on \(\varepsilon \), whereas the upper bound \( \bar{U}^{(l)}_i\) does, because of the given discretization accuracy. Similarly, for \(\alpha \in C(\mathbb {T},\mathbb R)\), we consider its coarse-grained version \(\alpha ^{(l)}\) as in (3.16). We next apply the previous result (for constant values of u and \(\alpha \)) and pass to the limit \(l\rightarrow 0\). To implement this procedure, we approximate the Hamiltonian (2.1) by the sum of Hamiltonians over the boxes of the partitions with periodic boundary conditions. Neglecting the interactions between neighbouring boxes, for the Ising part of the Hamiltonian, we break \(N_{l}\cdot |\partial \Delta _{\varepsilon ^{-1}l}|\sim |\Lambda _\varepsilon | \varepsilon l^{-1}\) many interactions. Hence

where \(\sigma =\sigma _1\vee \ldots \vee \sigma _{N}\) and by \(\vee \) we denote the concatenation on the sub-domains \(\Delta _{\varepsilon ^{-1}l,i}\), \(i=1,\ldots , N\). Similarly, for the Kac interaction, we neglect \(O \left( |\Lambda _\varepsilon | \gamma ^{-1}\varepsilon l^{-1}\right) \) interactions and obtain

Recalling the definition (2.11) of the set \(\Omega _{\Lambda _\varepsilon ,l}\), we have

After applying the previous result for u and \(\alpha \) constant to each one of the Hamiltonians \(H_{\Delta _{l,i}, \gamma , \bar{\alpha }^{(l)}_i}\) (recall the definition (2.1)), taking the \(\log \), dividing by \(-\beta \left| \Lambda _\varepsilon \right| \) and passing to the limits \(\lim _{\gamma \rightarrow 0}\lim _{\varepsilon \rightarrow 0}\), we obtain

Take finally \(\lim _{l\rightarrow 0}\) to obtain \(\int _{\mathbb {T}} \left[ f_\beta \left( u(r)\right) + \left( u(r)-\alpha (r)\right) ^2\right] dr\) and complete the proof of (2.12).
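The passage to the limit \(l\rightarrow 0\) can be illustrated numerically. The following is a minimal sketch on the one-dimensional torus, not part of the proof: the function `toy_free_energy` is a stand-in (the free energy of non-interacting spins), since \(f_\beta \) has no closed form here, and the profiles `u_profile` and `alpha_profile` are hypothetical choices. It evaluates the coarse-grained sum \(\sum _i l\,[f(\bar{u}_i)+(\bar{u}_i-\bar{\alpha }_i)^2]\) for several dyadic scales.

```python
import math

def toy_free_energy(u, beta=1.0):
    """Stand-in convex free energy (non-interacting spins); NOT the Ising f_beta."""
    p, q = (1.0 + u) / 2.0, (1.0 - u) / 2.0
    return (p * math.log(p) + q * math.log(q)) / beta

def coarse_grained_functional(u, alpha, p, fine=4096):
    """Sum over the 2^p boxes of l * [f(ubar_i) + (ubar_i - abar_i)^2], with l = 2^-p."""
    n_boxes = 2 ** p
    per_box = fine // n_boxes  # fine-grid points used to average inside each box
    total = 0.0
    for i in range(n_boxes):
        pts = [(i * per_box + k + 0.5) / fine for k in range(per_box)]
        ubar = sum(u(r) for r in pts) / per_box      # box average of u
        abar = sum(alpha(r) for r in pts) / per_box  # box average of alpha
        total += (toy_free_energy(ubar) + (ubar - abar) ** 2) / n_boxes
    return total

def u_profile(r):
    """Hypothetical magnetization profile with values in (-1, 1)."""
    return 0.5 * math.sin(2.0 * math.pi * r)

def alpha_profile(r):
    """Hypothetical external-field profile."""
    return math.cos(2.0 * math.pi * r)

# p = 12 uses one fine-grid point per box, i.e. a midpoint rule for the integral.
reference = coarse_grained_functional(u_profile, alpha_profile, 12)
for p in (2, 4, 6):
    value = coarse_grained_functional(u_profile, alpha_profile, p)
    print(p, abs(value - reference))
```

Since the integrand is jointly convex in \((u,\alpha )\), Jensen's inequality makes the coarse-grained value increase along nested dyadic partitions, so the printed errors decrease as p grows.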

3.2 Proof of (2.13)

This is similar to the previous proof. For the case of \(\alpha \) constant, the existence of the limit \(\varepsilon \rightarrow 0\) for fixed \(\gamma \) can be proved by the same sub-additivity argument as before, without however the extra effort to keep the canonical constraint in the sequence of boxes of increasing size. Then, by the same coarse-graining argument, similarly to (3.23) we obtain

For an upper bound, given (3.26) and (3.27), we take the maximum of all choices of \(u_1, \ldots , u_N\) and bound (3.40) by

The latter quantity is further bounded by

and the upper bound follows.

For a lower bound we take one element (when all are equal) and obtain:

For a general \(\alpha \in C(\mathbb {T},\mathbb R)\), we consider the partition \(\mathscr {C}_{\varepsilon ^{-1}l}\) of \(N_l\) many elements and, in each box, we apply the previous result. We have

Note that, for every \(\alpha \in \mathbb R\), since \(f_\beta \) is convex and \(u\mapsto (u-\alpha )^2\) is strictly convex, the function \(u\mapsto f_\beta (u)+(u-\alpha )^2\) is strictly convex, so its derivative is strictly increasing. Hence equation

has only one solution \(\tilde{u}(\alpha )\). Since \(\alpha \mapsto \tilde{u}(\alpha )\) is a continuous function, taking the limit \(l\rightarrow 0\) in (3.44), by the Dominated Convergence Theorem we obtain

Since this coincides with

the result follows.
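The uniqueness of \(\tilde{u}(\alpha )\) used above can be checked numerically. The sketch below assumes that the equation in question is the first-order condition \(f'_\beta (u)+2(u-\alpha )=0\) of \(u\mapsto f_\beta (u)+(u-\alpha )^2\); the derivative `toy_fprime` is a hypothetical stand-in that vanishes on \([-m,m]\) (mimicking the phase-transition region) and diverges at \(\pm 1\), and the parameters `beta` and `m` are illustrative only.

```python
import math

def toy_fprime(u, beta=1.0, m=0.5):
    """Derivative of a toy convex free energy that is flat on [-m, m] (an assumption)."""
    if abs(u) <= m:
        return 0.0
    return math.copysign((math.atanh(abs(u)) - math.atanh(m)) / beta, u)

def solve_u_tilde(alpha, beta=1.0, m=0.5, iters=200):
    """Bisection for the root of f'(u) + 2 (u - alpha) = 0 on (-1, 1).

    The left-hand side is strictly increasing in u, so the root is unique.
    The search interval is clipped slightly inside (-1, 1) for numerical reasons,
    so this is only meant for moderate values of alpha.
    """
    def g(u):
        return toy_fprime(u, beta, m) + 2.0 * (u - alpha)

    lo, hi = -1.0 + 1e-12, 1.0 - 1e-12
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if g(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

In this toy model, \(\tilde{u}(\alpha )=\alpha \) whenever \(|\alpha |\le m\), while for larger \(|\alpha |\) the solution lies strictly between m and 1 in absolute value.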

4 Proof of Theorem 2.3

We have already proved (2.17) in Theorem 2.13, so we just need to prove (2.18). As in the proof of Theorem 2.13, equation (2.17) can be read as

where \(\tilde{u}\) has been defined in the discussion following equation (3.45). Given \(u \in {C} \left( {\mathbb {T}}, \left( -1,1\right) \right) \) and taking \(\alpha =\tilde{\alpha } \left( u\right) \), we get

From the definition of \(F_{\tilde{\alpha } \left( u\right) }\), it follows that

It remains to show that

for every \(\alpha \in {C} \left( {\mathbb {T}}, \left( -1,1\right) \right) \). Observe that

where \(K_{\Lambda _{\varepsilon },\gamma , \alpha }\) is defined in (2.3). With computations identical to the ones appearing in the proof of Theorem 2.1, it follows that

and

Taking \(-\frac{1}{\beta \left| \Lambda _\varepsilon \right| }\log \) in (4.5) and passing to the limit using (4.6) and (4.7), we obtain (4.4) and complete the proof of Theorem 2.3.
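To see in the simplest setting why the relation between pressure and free energy is a modified Legendre transform, consider constant u and \(\alpha \). The following computation is a sketch under the assumption, consistent with (2.12) and with the way (2.13) is used in the text, that the pressure for constant \(\alpha \) is \(P(\alpha )=-\inf _{u}\left[ f_\beta (u)+(u-\alpha )^2\right] \). Expanding the square gives

$$\begin{aligned} P(\alpha )+\alpha ^2&=-\inf _{u\in [-1,1]}\left[ f_\beta (u)+u^2-2\alpha u\right] \\&=\sup _{u\in [-1,1]}\left[ 2\alpha u-\left( f_\beta (u)+u^2\right) \right] . \end{aligned}$$

Under this assumption, \(\alpha \mapsto P(\alpha )+\alpha ^2\) is the ordinary Legendre transform of the convex function \(u\mapsto f_\beta (u)+u^2\), evaluated at \(2\alpha \).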

5 Proof of Theorem 2.4

We prove it first for \(\alpha \) and u constant, by taking \(\omega \) constant as well. The general case will follow by applying this case to piecewise constant approximations at an intermediate scale.

5.1 Constant u and \(\alpha \)

We first prove the following exponential bound: for every \(\delta >0\), there is a positive number \(I(\delta )\) such that

We observe that

where we used the fact that g is Lipschitz with constant K. This implies that

Now notice that, for every \(y \in \mathbb {Z}^d\), we have

where \(s(\gamma )\rightarrow 0\) when \(\gamma \rightarrow 0\), uniformly in y. As a consequence, we have

where we recall the definition of \(I_z^\gamma \) in (2.4). It follows that

The correction term \(s(\gamma )O(1)+O(\gamma ^{-1}/R_\gamma )\) vanishes when \(\gamma \rightarrow 0\). It follows that, for \(\gamma \) small enough and for every \(\delta >0\), the following estimate holds:

To estimate the latter expression, we observe that, \(\forall \delta '>0\),

If we choose \(\delta '=\delta /2\), we obtain

Thus, we have reduced the problem to the following lemma, whose proof is given in Appendix 1:

Lemma 5.1

For every \(c, \delta >0\) and \(\gamma \) small enough, we have that

where \(I_x^{\gamma }\) is defined in (2.4).

5.2 The Inhomogeneous Case.

As before, we consider a macroscopic scale characterized by the parameter l, which we take to be equal to \(2^{-p}\) for \(p\in \mathbb N\). Recall that \({\mathscr {C}}_l\) is the corresponding macroscopic partition of \(\mathbb {T}\) into \(N_l := l^{-d}\) many sets denoted by \(C_{l,k}\), \(k=1,\ldots , N_l\). We denote their microscopic versions by \(\Delta _{\varepsilon ^{-1}l,k}\). Let \(u^{(l)}\) and \(\omega ^{(l)}\) respectively be the piece-wise constant approximations of u and \(\omega \) defined as in (2.7). Since g is bounded and continuous and \(\omega \) is uniformly continuous, we have

For \(x\in \Delta _{\varepsilon ^{-1}l,i}\), let \(\tilde{m}_{B_{R_\gamma }(x)}\) be the magnetization considering periodic boundary conditions in \(\Delta _{\varepsilon ^{-1}l,i}\). Note that \(\tilde{m}_{B_{R_\gamma }(x)}\) coincides with \({m}_{B_{R_\gamma }(x)}\) if the distance between x and \(\Lambda _\varepsilon \setminus \Delta _{\varepsilon ^{-1}l,i}\) is larger than \(R_\gamma \). Then, since g is Lipschitz, we have

Replacing in (5.11) and splitting over the boxes of the partition \({\mathscr {D}}_{\varepsilon ^{-1}l}\), we obtain

Then, defining

for l and \(\varepsilon \) small enough, we have

We notice that, for \(\zeta >0\),

Choosing \(\zeta <\delta /2\) and setting \(\delta ' := \frac{1}{2 \left\| g\right\| _\infty } \left( \delta /2-\zeta \right) \), we have

To proceed, we apply the result obtained in the first step for \(\alpha \) and u constant. For this purpose, we define a new probability measure \(\tilde{\mu }_{\Lambda _{\varepsilon }, \gamma , \alpha ^{(l)}}\) defined on the union of the boxes \(\Delta _{\varepsilon ^{-1}l,i}\) with periodic boundary conditions in each of them and with external field \(\alpha ^{(l)}\) as defined in (3.16). Then, by neglecting the interactions between the boxes \(\Delta _{\varepsilon ^{-1}l,i}\), \(i=1,\ldots ,N_l\), for any set B we obtain that

Let us denote by \(\lceil \delta ' N_l \rceil \) the smallest integer not smaller than \(\delta ' N_l\). It follows from (5.1) that there exists \(I(\zeta )>0\) such that

Noting that

from (5.17), (5.18) and (5.19), we obtain the following estimate

If we choose l small enough, the coefficient of \(\varepsilon ^{-d}\) inside the exponential is negative and thus we obtain

concluding the proof of the inhomogeneous case as well as of Theorem 2.4.
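For concreteness, the local averages with periodic boundary conditions that enter this proof can be computed as follows. This is a minimal two-dimensional sketch, with assumptions stated here and in the comments: the ball \(B_R(x)\) is taken to be the cube of half-width R in the sup norm, the test function \(\omega \) is set to 1, and the names `block_magnetization` and `empirical_average` are hypothetical.

```python
def block_magnetization(sigma, R):
    """Average of sigma over the (2R+1) x (2R+1) box centred at each site.

    sigma is an n x n list of +1/-1 spins on a torus (indices wrap around).
    Assumes 2R + 1 <= n, so that no site is counted twice in a box.
    """
    n = len(sigma)
    size = (2 * R + 1) ** 2
    m = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            s = 0
            for di in range(-R, R + 1):
                for dj in range(-R, R + 1):
                    s += sigma[(i + di) % n][(j + dj) % n]
            m[i][j] = s / size
    return m

def empirical_average(sigma, R, g):
    """(1/|Lambda|) * sum over x of g(m_{B_R(x)}), i.e. the observable with omega = 1."""
    n = len(sigma)
    m = block_magnetization(sigma, R)
    return sum(g(m[i][j]) for i in range(n) for j in range(n)) / (n * n)
```

With g the identity, the empirical average reduces to the global magnetization, because on the torus every site belongs to exactly \((2R+1)^2\) boxes.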

6 Young-Gibbs Measures, Proof of Theorem 2.6

As mentioned before, the first case is just a restatement of Theorem 2.4. For the second case, it suffices to prove an exponential bound for the constant case and then the inhomogeneous case follows by the strategy in Subsect. 5.2. The last case is a direct consequence and will be given at the end of this section. Hence, for the rest of this section, we restrict ourselves to constant \(\alpha \), u and \(\omega \). We first prove the case \(|u|>m_\beta \) and then the more difficult one: \(|u|\le m_\beta \). The hypotheses on the dimension and \(\beta \) are needed only in the second case.

Case \(|u|>m_\beta \). Let f be a local function and \(f_x\) its translation by \(x\in \Lambda _\varepsilon \). For simplicity of notation, we use f instead of \(g(m_{B_R})\). Then, for \(\omega \) constant and for fixed \(\delta >0\), it suffices to prove an exponential bound for \(\mu _{\Lambda _\varepsilon ,\gamma ,\alpha }(E_{\delta })\), where

with h such that \(\mathbb E_{\mu ^{ nn }_h}(\sigma _{0})=u\), i.e., for \(h := f'_{\beta }(u)\). We expand the Hamiltonian \(H_{\Lambda _\varepsilon ,\gamma ,\alpha }\) as follows:

When considering the corresponding measure, the constant terms cancel with the normalization. Note that

Hence, recalling (2.22) with the above h, we consider the following Hamiltonian:

To treat the second term, for some parameter \(\zeta >0\), we consider the random variable

which gives the density of bad Kac averages. Then, using the inequality

and Lemma 5.1 (for appropriate choice of \(\zeta \) and \(\delta '\)), the problem reduces to finding exponential bounds for the first term. Notice that, using (5.4) and (5.5), we have

for some \(s(\gamma )\rightarrow 0\) as \(\gamma \rightarrow 0\). Then the first term on the right-hand side of (6.6) is bounded by

where \(C_{1}\) is a positive constant and where \(\hat{Z}_{\Lambda _\varepsilon ,\gamma ,\alpha }\) is the partition function associated to \(\hat{H}_{\Lambda _\varepsilon ,\gamma ,\alpha }\). For \(\sigma \) such that \(D_\zeta (\sigma )\le \delta '\), we have

so (6.8) is bounded by

From Lemma 5.1, we have that \(\mu ^{ nn }_{\Lambda _\varepsilon ,h}(D_\zeta \le \delta ')>\frac{1}{2}\), for \(\varepsilon \) small enough. Moreover, it is a standard result that there exists \(C_4(\delta )>0\) such that

for \(\varepsilon \) small enough. For the exponential bound (6.11), we refer to [5], Theorem V.6.1. Actually, this theorem gives the result for f a local magnetization, that is, for f of the form \(f(\sigma )=\frac{1}{|\Delta |}\sum _{x\in \Delta }\sigma (x)\); in our case, this is enough as every local function can be written as a linear combination of local magnetizations. Under these considerations, by appropriately choosing \(\zeta \), \(\delta '\) and for \(\gamma \) small, the right hand side of (6.10) is bounded by \(3e^{-C_5(\delta )|\Lambda _\varepsilon |}\) for some constant \(C_{5}(\delta )>0\), and the result follows.

Case \(|u|\le m_\beta \). In this case, for f a local function, we seek an exponential bound for

where \(G_u := \lambda _u\mu ^{ nn }_++(1-\lambda _u)\mu ^{ nn }_-\) with \(\lambda _u\) as in (2.29). Comparing to (6.1), we notice that, instead of the measure \(\mu ^{ nn }_{h}\) with the external field corresponding to u, we have the canonical measure \(G_{u}\). Hence, in order to work with realizations of the measure \(G_{u}\), we need to introduce a scale K and prove that, for boxes in this scale, the relevant measures are \(\mu ^{ nn }_+\) or \(\mu ^{ nn }_-\) and that they appear with a percentage that agrees with the overall fixed magnetization u. There are two main obstacles: the first is that the Kac term in the original measure cannot directly fix the magnetization via large deviations as in Theorem 2.4, since we are looking at averages in a smaller scale than \(\gamma ^{-1}\); in particular, (5.5) is not true. The second is to show that, in the smaller scale K, only the nearest-neighbour part of the Hamiltonian is effective. Hence, we introduce another scale \(L\gg K\), in which the Kac term acts on all spins in the same way. Then inside the box only the nearest-neighbour interactions are relevant.
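For orientation, the weight \(\lambda _u\) can be recovered from the requirement that the mixture \(G_u\) has magnetization u. The computation below is a sketch: it assumes that (2.29), which is not reproduced in this section, encodes exactly this requirement, and that \(\mathbb E_{\mu ^{ nn }_\pm }(\sigma _0)=\pm m_\beta \) with \(m_\beta >0\).

$$\begin{aligned} \mathbb E_{G_u}(\sigma _0)=\lambda _u m_\beta -(1-\lambda _u)m_\beta =u \quad \Longrightarrow \quad \lambda _u=\frac{m_\beta +u}{2m_\beta }\in [0,1]\quad \text {for } |u|\le m_\beta . \end{aligned}$$

In particular, \(u=m_\beta \) gives \(\lambda _u=1\) (pure plus phase), \(u=-m_\beta \) gives \(\lambda _u=0\) (pure minus phase), and \(u=0\) gives the symmetric mixture \(\lambda _u=1/2\).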

To proceed with this strategy, we fix a microscopic scale K of the form \(2^m\) and call \(\Delta _{K,1},\ldots ,\Delta _{K,N_K}\) the partition of \(\Lambda _\varepsilon \) into

boxes of side-length K. We call \(\Delta _{K,i}^0\) the boxes with the same center as \(\Delta _{K,i}\) and distance \(\sqrt{K}\) from their complement \(\Delta ^c_{K,i}\). We next introduce the notions of “circuit” and of “bad box”.

Definition 6.1

(Circuit) It is easier to define the absence of a circuit. For a sign \(\tau =\pm \), we say that a configuration \(\sigma \in \{-1,1\}^{\Lambda _\varepsilon }\) does not have a \(\tau \)-circuit in \(\Delta _{K,i}\) if there exists a path of vertices \(\{ x_1,\ldots ,x_k \}\subset \Delta _{K,i}\setminus \Delta _{K,i}^0\) such that \(d(x_1,\Delta _{K,i}^c)=1\), \(d(x_k,\Delta _{K,i}^0)=1\), \(d(x_j,x_{j+1})=1\) for every \(j=1,\ldots ,k-1\), and \(\sigma _{x_j}=-\tau \) for every \(j=1,\ldots ,k\). In other words, \(\sigma \) has a \(\tau \)-circuit in \(\Delta _{K,i}\) if the connected components of \(-\tau \) spins that intersect the boundary of \(\Delta _{K,i}\) do not intersect \(\Delta _{K,i}^0\). If we are not interested in distinguishing the sign of the circuit, we just say that \(\sigma \) has a circuit.

Observe that the existence of a \(\tau \)-circuit can be decided from the outside configuration.
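Definition 6.1 translates directly into a graph search. The following is a minimal two-dimensional sketch, not taken from the paper: the box is a \(K\times K\) grid, the inner box \(\Delta ^0_{K}\) is modelled as the sites at least `margin` layers away from the boundary (a hypothetical stand-in for the distance \(\sqrt{K}\)), and the function name `has_tau_circuit` is ours. A breadth-first search follows \(-\tau \) spins from the boundary layer through the annulus; if it reaches a site adjacent to the inner box, there is no \(\tau \)-circuit.

```python
from collections import deque

def has_tau_circuit(sigma, tau, margin):
    """Return True if the K x K configuration sigma has a tau-circuit.

    sigma: list of lists of +1/-1 spins; tau: +1 or -1;
    margin: width of the annulus (assumed 1 <= margin and 2 * margin < K).
    """
    K = len(sigma)

    def in_core(i, j):
        return margin <= i < K - margin and margin <= j < K - margin

    queue, seen = deque(), set()
    # Start from boundary-layer sites carrying the opposite sign -tau.
    for i in range(K):
        for j in range(K):
            on_boundary = i in (0, K - 1) or j in (0, K - 1)
            if on_boundary and sigma[i][j] == -tau:
                queue.append((i, j))
                seen.add((i, j))
    while queue:
        i, j = queue.popleft()
        for ni, nj in ((i + 1, j), (i - 1, j), (i, j + 1), (i, j - 1)):
            if not (0 <= ni < K and 0 <= nj < K):
                continue
            if in_core(ni, nj):
                return False  # a -tau path from the boundary touches the inner box
            if (ni, nj) not in seen and sigma[ni][nj] == -tau:
                seen.add((ni, nj))
                queue.append((ni, nj))
    return True
```

The search reads only spins in the annulus, never those of the inner box, in line with the observation that the existence of a circuit is decided by the outside configuration.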

Definition 6.2

Given some precision \(\zeta >0\), a box \(\Delta _{K,i}\) is called \(\zeta \)-bad for a configuration \(\sigma \) if

- \(\sigma \) does not have a circuit in \(\Delta _{K,i}\), or if

- \(\sigma \) has a circuit in \(\Delta _{K,i}\) but

$$\begin{aligned} \min _{\tau = \pm } \Big | \frac{1}{|\Delta _{K}|} \sum _{x\in \Delta _{K,i}} f_x- \mathbb E_{\mu ^{ nn }_{0,\tau }}(f) \Big | >\zeta . \end{aligned} \tag{6.14}$$

On the other hand, we call a box \(\zeta \)-good if it is not \(\zeta \)-bad. We can further specify it saying it is \((\zeta ,\tau )\)-good if

Let \(N^{ bad }_{K,\zeta }\) and \(N_{K,\zeta ,\tau }^{ good }\) be the numbers of \(\zeta \)-bad and \((\zeta ,\tau )\)-good boxes, respectively. To conclude the proof of the case \(|u|\le m_{\beta }\), it suffices to prove that the probability of having a large density of \(\zeta \)-bad boxes is small and that the density of \((\zeta ,+)\)-good boxes is \(\lambda _u\); this is the content of the following lemma.

Lemma 6.3

For every \(\zeta ,\delta >0\), there exists \(C(\zeta ,\delta )>0\) such that the following exponential bounds hold for every \(\varepsilon \) and \(\gamma \) small enough and K large enough:

Before giving its proof, we see how the case \(|u|\le m_{\beta }\) follows from it. For \(\zeta ,\delta '>0\) (they will later depend on \(\delta \)), (6.12) is bounded by

The exponential bound for the second term is given by Lemma 6.3. To control the first one, we decompose the average as follows:

Take \(\delta '=\frac{\delta }{4 \left\| f\right\| _\infty }\) and observe that, for \(\sigma \) such that \(\frac{N^{ bad }_{K,\zeta }(\sigma )}{N_K}\le \delta '\), we have

Then the first term of (6.18) is bounded by

Subtracting and adding \(\mathbb E_{\mu ^{ nn }_\tau }(f)\), we have

Choosing \(\zeta =\frac{\delta }{4}\), the first term in the last expression is smaller than \(\frac{\delta }{4}\), thus (6.21) is bounded by

which, by Lemma 6.3, decays exponentially.

Proof of Lemma 6.3

(i) We first notice that the criterion for a box to be “bad” is based only on the nearest-neighbour interaction part of the measure. Therefore, instead of estimating (6.16) using \(\mu _{\Lambda _\varepsilon , \gamma ,\alpha }\), we reduce ourselves to an estimate using only the Ising part. To do that, we introduce another intermediate scale L of order \(\gamma ^{-1+a}\), for \(a>0\), and we first condition over all possible values of the magnetization in this scale: we divide \(\Lambda _\varepsilon \) into boxes \(\Delta _{L,1},\ldots ,\Delta _{L,N_L}\), \(N_L=(\varepsilon L)^{-d}\) (recall (6.13)) and, in each box \(\Delta _{L,i}\), the new order parameter \(m_{\Delta _{L,i}}(\sigma )\) takes values in \(I_{|\Delta _{L,i}|}\). We denote this new configuration space by \(\mathcal M_{L} := \prod _{i=1}^{N_L}I_{|\Delta _{L,i}|}\). Then, by conditioning on a set of configurations with a given average magnetization in \(\mathcal M_L\), the Kac part of the Hamiltonian is essentially constant so we are only left with the nearest-neighbour interaction.

To proceed with this plan, we follow the coarse-graining procedure as in Sect. 2.1; recall the effective interaction \( \bar{J}_{\gamma }^{(L)}\) in the new scale L given in (3.13). For \(\eta := \{\eta _i\}_i\in \mathcal M_{L}\), recalling (3.18), we denote the new coarse-grained Hamiltonian

(note that \(\alpha \) is constant). Recalling the error (3.17), for \(L=\gamma ^{-1+a}\), we obtain that

where

Note that in the splitting in (6.25) we do not specify the boundary conditions, as with an extra lower order (surface) error we can choose them ad libitum. Hence, we have to estimate \(\mu _{\Lambda _\varepsilon ,0}^{ nn }(\{N^{ bad }_{K,\zeta }>\delta N_K\})\). We split it into a product over the measures \(\mu _{\Delta _{L,i},0,+}^{ nn }\) assuming \(+\) boundary conditions and making an error of lower order. Then, we focus on a box \(\Delta _L\) and denote by \(N_{K,L}\) (respectively \(N^{ bad }_{K,L,\zeta }\)) the number of boxes (respectively bad boxes) of size K in \(\Delta _L\). In order to conclude, it suffices to show that there is \(r(\delta , \zeta , K)>0\) such that

The proof of (6.27) is lengthy and it is outlined below, after the end of the proof of Lemma 6.3. Furthermore, this decaying estimate should win against the accumulating errors of the order \(\gamma ^a |\Lambda _\varepsilon |\) in (6.25) and (6.26). This is true since \(\gamma ^a K^d \ll r(\delta ,\zeta ,K)\), for \(\gamma \) small enough, after using the fact that \(|\Delta _L|=N_{K,L} K^d\). We also need a lower bound of (6.26). For that, it suffices to show that for every i:

The proof is given in Appendix 1, concluding the proof of item (i) of Lemma 6.3.

(ii) To prove (6.17), for u constant, in a box \(\Lambda _\varepsilon \) we have:

Moreover,

From Definition 6.2, in the good boxes we have a circuit of ± spins. Then, using (8.1), for every \(x\in \Delta ^0_{K}\) we have that

for K large and a generic box \(\Delta _K\). We consider the measure \(\mu _{\Lambda _\varepsilon ,\gamma ,\alpha }\) and use the estimate (5.1). We split the measure over the boxes \((\Delta _{K,i})_i\) like previously and, using (6.29) as well as the estimate (6.16), we obtain (6.17). This concludes the proof of Lemma 6.3. \(\square \)

In the sequel, we first prove the remaining estimate (6.27). Here we present the strategy and state the main lemmas. For the proofs we refer to the appendices. The section will conclude with the proof of (2.34).

Proof of (6.27). Given a box \(\Delta _L\), let \(I\subset \{1,\ldots , N_{K,L}\}\) denote the indices of the boxes \(\Delta _{K}\) within it.

Definition 6.4

Given \(I\subset \{1,\ldots , N_{K,L}\}\) and \(\underline{a}\in \{-,+\}^{I}\), we define \(\mathcal X'_{I,\underline{a}}\) to be the set of configurations where there is some circuit around \(\Delta _{K,i}^0\) for all \(i\in I\) and (6.14) is true. On the other hand, we define \(\mathcal X''_I\) to be the set of configurations for which there is no circuit for any of the boxes in I.

Asking for more than \(\delta N_{K,L}\), \(0<\delta <1\), many bad boxes is equivalent to the fact that at least one of the two cases described in Definition 6.4 has to occur more than \(\frac{\delta N_{K,L}}{2}\), hence:

To estimate the first contribution, we have the following lemma:

Lemma 6.5

Consider a box \(\Delta _L\) divided into \(N_{K,L}\) smaller boxes \(\Delta _K\), with \(K\ll L\). There is a positive constant c so that, for any \(I\subset \{1,\ldots ,N_{K,L}\}\) and \(i\in I\), the following is true:

where \(\zeta \) is the precision parameter in the criterion (6.14) of bad boxes.

To obtain (6.27), we need to iterate the result of Lemma 6.5 and get

which agrees with the one in the right hand side of (6.27) since \(r(\delta , \zeta , K):=-\delta \log (\zeta ^{-2}K^{-d})\) is sufficiently large by considering K large for \(\zeta \) and \(\delta \) fixed.
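To make the last claim explicit, the rate can be rewritten as follows; this is only a rearrangement of the definition of \(r(\delta ,\zeta ,K)\) given above.

$$\begin{aligned} r(\delta ,\zeta ,K)=-\delta \log \left( \zeta ^{-2}K^{-d}\right) =\delta \left( d\log K-2\log \zeta ^{-1}\right) . \end{aligned}$$

Hence \(r(\delta ,\zeta ,K)>0\) exactly when \(K^d>\zeta ^{-2}\), and r grows like \(\delta d\log K\) as \(K\rightarrow \infty \) with \(\zeta \) and \(\delta \) fixed. As an illustration with hypothetical values, \(d=2\), \(\zeta =0.1\), \(\delta =0.5\) and \(K=2^5\) give \(r\approx 1.16\).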

To find an estimate for the second contribution in (6.31), we use the random-cluster formulation. We give a complete description of the method in Appendix 1, where we also provide the proof of the following lemma:

Lemma 6.6

Suppose \(\beta >\log \sqrt{5}\). For every \(\delta >0\), there exists \(C=C(\delta )>0\) such that the exponential bound

holds for K (and L) large enough.

Note that here, for simplicity of the proof, we can use empty boundary conditions by making an extra error of smaller order. From (6.31), (6.33) and (6.34), we conclude the proof of (6.27).

6.1 Proof of (2.34)

When \(R\rightarrow \infty \), for any translation invariant measure \(\mu \) (either \(\mu ^{ nn }_{0,\pm }\) or \(\mu ^{ nn }_{h(u)}\) for some \(|u|>m_\beta \)) we have that

The same holds if R depends on \(\gamma \) and we pass to the limit simultaneously, in such a way that \(1\ll R_\gamma \ll \gamma ^{-1}\). \(\square \)

References

1. Alberti, G., Choksi, R., Otto, F.: Uniform energy distribution for an isoperimetric problem with long-range interactions. J. Am. Math. Soc. 22, 569–605 (2009)

2. Chen, X., Oshita, Y.: Periodicity and uniqueness of global minimizers of an energy functional containing a long-range interaction. SIAM J. Math. Anal. 37, 1299–1332 (2005)

3. Dobrushin, R., Kotecký, R., Shlosman, S.: Wulff Construction: A Global Shape from a Local Interaction. AMS, Providence (1992)

4. Edwards, R.G., Sokal, A.D.: Generalization of the Fortuin–Kasteleyn–Swendsen–Wang representation and the Monte Carlo algorithm. Phys. Rev. D 38, 2009–2012 (1988)

5. Ellis, R.: Entropy, Large Deviations, and Statistical Mechanics. Springer, Berlin (1985)

6. Georgii, H.-O.: Canonical Gibbs Measures. Lecture Notes in Mathematics. Springer, Berlin (1979)

7. Georgii, H.-O.: Gibbs Measures and Phase Transitions. De Gruyter, Berlin (2011)

8. Giuliani, A., Lebowitz, J., Lieb, E.: Periodic minimizers in 1D local mean field theory. Commun. Math. Phys. 286, 163–177 (2009)

9. Grimmett, G.: Percolation. In: Grundlehren der Mathematischen Wissenschaften [Fundamental Principles of Mathematical Sciences], vol. 321, 2nd edn. Springer-Verlag, Berlin (1999)

10. Kotecký, R., Luckhaus, S.: Nonlinear elastic free energies and gradient Young-Gibbs measures. Commun. Math. Phys. 326, 887–917 (2014)

11. Müller, S.: Singular perturbations as a selection criterion for periodic minimizing sequences. Calc. Var. Partial Differ. Equ. 1, 169–204 (1993)

12. Müller, S.: Variational models for microstructure and phase transitions. MIS MPG Lecture Notes no. 2 (1998)

13. Presutti, E.: Scaling Limits in Statistical Mechanics and Microstructures in Continuum Mechanics. Springer, Berlin (2000)

14. Presutti, E.: Microstructures and phase transition. Boll. Unione Mat. Ital. 9(5), 655–688 (2012)

15. Presutti, E.: From equilibrium to nonequilibrium statistical mechanics. Phase transitions and the Fourier law. Braz. J. Prob Stat. 29, 211–281 (2015)

16. Simon, B.: Statistical Mechanics of Lattice Gases. Princeton University Press, Princeton (1993)

Acknowledgments

It is a great pleasure to thank Errico Presutti for suggesting the problem to us and for his continuous advice. We also acknowledge fruitful suggestions from Roman Kotecký on an earlier version of the paper. This work was initiated when NSL and DT were visiting GSSI. NSL also acknowledges the hospitality of the University of Sussex. The visit of NSL to GSSI was partially supported by Grant 2010-MINCYT-NIO Interacting stochastic systems: fluctuations, hydrodynamics, scaling limits; the visit of NSL to the University of Sussex was partially supported by Grant 14-STIC-03 Evaluation and Optimal Control of High-dimensional stochastic network - ECHOS. NSL was also supported by a scholarship from CONICET-Argentina during the course of his PhD.

Appendices

Appendix 1: Homogeneous Magnetization

For the nearest-neighbour interaction and for \(h\in {\mathbb R}\), we define the finite volume pressure by

Moreover, for \(u\in I_{ \left| \Lambda _\varepsilon \right| }\), we define the finite volume free energy by

and extend the domain of \(f_{\Lambda _\varepsilon , \beta }\) to \( \left[ -1,1\right] \) by assigning the values that correspond to linear interpolation between the values of \(f_{\Lambda _{\varepsilon }, \beta }\) at the neighbouring points in \(I_{ \left| \Lambda _\varepsilon \right| }\). We next prove the existence of the infinite volume free energy and pressure.
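For very small volumes the finite volume free energy can be computed by brute-force enumeration. The sketch below is illustrative only and rests on assumptions not fixed by this section: it uses a one-dimensional ring of `n_sites` spins instead of a d-dimensional torus, the sign convention \(H^{ nn }(\sigma )=-\sum _{\langle x,y\rangle }\sigma (x)\sigma (y)\), and the usual canonical definition \(f(u)=-\frac{1}{\beta |\Lambda |}\log \sum _{\sigma : m(\sigma )=u}e^{-\beta H^{ nn }(\sigma )}\). Values are indexed by the number of pluses n, which corresponds to \(u=(2n-|\Lambda |)/|\Lambda |\).

```python
import math
from itertools import product

def finite_volume_free_energy(n_sites, beta):
    """Return {number of pluses: free energy} for a nearest-neighbour Ising ring.

    Enumerates all 2^n_sites configurations, so it is only meant for tiny systems.
    """
    weights = {}
    for sigma in product((-1, 1), repeat=n_sites):
        # Energy with periodic boundary conditions: one bond per site on the ring.
        energy = -sum(sigma[x] * sigma[(x + 1) % n_sites] for x in range(n_sites))
        n_plus = sigma.count(1)
        weights[n_plus] = weights.get(n_plus, 0.0) + math.exp(-beta * energy)
    return {n: -math.log(w) / (beta * n_sites) for n, w in weights.items()}
```

The spin-flip symmetry of the Hamiltonian shows up as \(f(u)=f(-u)\), and the all-plus configuration gives the value \(-1\) at \(u=1\) in this convention.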

Theorem 6.7

(Free energy and pressure) The sequence of functions \( \left( f_{\Lambda _\varepsilon ,\beta }\right) _\varepsilon \) converges point-wise to a function \(f_\beta : \left[ -1,1\right] \rightarrow {\mathbb R}\) called free energy. The function \(f_\beta \) is convex and continuous, differentiable in the interior of its domain. Its derivative \(f'_\beta \) is continuous, it satisfies \(\lim _{u\downarrow -1}f'_\beta \left( u\right) =-\infty \) and \(\lim _{u\uparrow 1}f'_\beta \left( u\right) =\infty \) and it is bounded on compact subsets of \( \left( -1,1\right) \). Moreover, the convergence \(\lim _{\varepsilon \rightarrow 0}f_{\Lambda _\varepsilon ,\beta }=f_\beta \) is uniform.

Similarly, the sequence of functions \( \left( p_{\Lambda _\varepsilon ,\beta }\right) _\varepsilon \) converges point-wise to a function \(p_\beta :{\mathbb R}\rightarrow {\mathbb R}\) called pressure and it is given by

for every \(h\in {\mathbb R}\).

Proof

This is a classical result (see e.g. [5, 13, 16]) with the exception of the uniform convergence of the free energy, which is given here. With a slight abuse of notation we use \(\Lambda _{q} := \Lambda _{\varepsilon }\) with \(\varepsilon =2^{-q}\). Observe that \(\Lambda _{q+1}\) is the disjoint union of the sub-domains \(\Lambda _{q, 1}, \ldots , \Lambda _{q, 2^d}\), each of which is a translation of \(\Lambda _{q}\). For a configuration \(\sigma \in \Omega _{\Lambda _{q+1}}\), we call \(\sigma _{i}\), \(i=1,\ldots ,2^d\) its projections over these sub-domains, i.e., \(\sigma =\sigma _1\vee \ldots \vee \sigma _{2^d}\) where by \(\vee \) we denote the concatenation on the sub-domains. Let \(u\in I_{ \left| \Lambda _{q}\right| }\). Observe that if \(m_{\Lambda _{q,i}}(\sigma _i)=u\) for all \(i=1,\ldots ,2^d\) then \(m_{\Lambda _{q+1}}(\sigma )=u\). Note also that there are \(O \left( 2^d |\partial \Lambda _q|\right) \) many edges connecting vertices of different sub-domains, where by \(\partial \Lambda _q\) we denote the boundary of the set. As a consequence, after defining

we neglect the contributions between the sub-domains, so for some \(C>0\) we obtain:

Taking logarithm and dividing by \(-\beta \left| \Lambda _{q+1}\right| \), we get

For a configuration \(\sigma \in \Omega _{\Lambda _{q+1}}\), let \(N^+ \left( \sigma \right) := \left| \left\{ x\in \Lambda _{q+1}:\sigma (x)=1\right\} \right| \) be the associated number of pluses. There is a correspondence between \(I_{ \left| \Lambda _{q+1}\right| }\) and the set \( \left[ 0, \left| \Lambda _{q+1}\right| \right] \cap {\mathbb Z}\) containing all possible numbers of pluses. Let u and \(u'\) be consecutive elements of \(I_{ \left| \Lambda _{q+1}\right| }\) such that \(u<u'\), and let n and \(n+1\) be respectively their associated numbers of pluses. Then

where \(\sigma '\ge \sigma \) means \(\sigma '(x)\ge \sigma (x)\) for every \(x\in \Lambda _{q+1}\). In the latter sum, the configurations \(\sigma '\) differ from \(\sigma \) in just one site. As every site has \(2d\) nearest neighbours, we have

Replacing in (7.7), using the fact that \( \left| \left\{ \sigma ':\sigma '\ge \sigma ,N^+ \left( \sigma '\right) =n+1\right\} \right| = \left| \Lambda _{q+1}\right| -n\) (i.e., the number of minuses in the \(\sigma \) configuration) and the bound \(\frac{ \left| \Lambda _{q+1}\right| -n}{n+1}\le \left| \Lambda _{q+1}\right| \), we get

Taking logarithm and dividing by \(-\beta \left| \Lambda _{q+1}\right| \), we get

For \(f_{\Lambda _{q+1},\beta } \left( u'\right) -f_{\Lambda _{q+1},\beta } \left( u\right) \) the same bound can be obtained by replacing the number of pluses \(N^+\) by the number of minuses \(N^-\). Then

For \(u\in I_{ \left| \Lambda _{q+1}\right| }\), let \(u_{-}\) and \(u_{+}\) be the best approximations in \(I_{ \left| \Lambda _{q}\right| }\) of u from below and from above:

For \(u\in I_{ \left| \Lambda _{q+1}\right| }\setminus I_{ \left| \Lambda _{q}\right| }\), using (7.11) repeatedly, we get

after using (7.6) and the fact that \(2^{-q}\ll \frac{\log \left| \Lambda _{q+1}\right| }{ \left| \Lambda _{q+1}\right| }\).

Let \(a_q := O \left( \frac{\log \left| \Lambda _{q+1}\right| }{ \left| \Lambda _{q+1}\right| }\right) \) and observe that \(a := \sum _qa_q\) is finite. From the above estimates and the fact that \(f_{\Lambda _q,\beta }\) is defined by linear interpolation, we obtain

for every \(u\in \left[ -1,1\right] \) and every q. Let \(g_{q} := f_{\Lambda _q,\beta }-\sum _{i=0}^{q-1} a_i\). Inequality (7.14) implies that

for every \(u\in \left[ -1,1\right] \). The point-wise convergence of the free energy guarantees the point-wise convergence of \( \left( g_{q}\right) _q\) to \(f_\beta -a\). Then \((g_{q})_q\) is a sequence of continuous functions defined on a compact set that converges point-wise and in a monotonic way to \(f_\beta -a\). Under these hypotheses, Dini’s theorem asserts that the convergence is uniform, hence concluding the uniform convergence of \(f_{\Lambda _q,\beta }\) to \(f_\beta \). \(\square \)

1.1 Proof of Lemma 3.1

We consider identity (7.7) with \(H_{\Lambda _q}^{ nn }\) replaced by \(H_{\Lambda _{q},\gamma , \alpha }\). While comparing \(H_{\Lambda _{q},\gamma , \alpha } \left( \sigma \right) \) with \(H_{\Lambda _{q},\gamma , \alpha } \left( \sigma '\right) \), the nearest-neighbour part of the Hamiltonian can be treated as in the proof of Theorem 6.7. To treat the quadratic part, observe that, as every vertex interacts with \(O \left( \gamma ^{-d}\right) \) vertices, we have

We can now repeat the arguments of the proof of Theorem 6.7: using (7.11) and (7.6) with error \(O(2^{-q}\gamma ^{-d})\), for t and \(t'\) consecutive elements of \(I_{|\Lambda _q|}\) we have that

where C is a constant that depends only on the dimension.

1.2 Proof of Lemma 5.1

Given \(c,\zeta >0\), let \(B_{\Lambda _\varepsilon ,\gamma ,\zeta , >c}\) be the set of mostly bad spin configurations:

where \(I_x^\gamma \) is defined in (2.4). Let \(u\in \left[ -m_\beta ,m_\beta \right] \). To get an upper bound of

we look for a lower bound for the Kac part of the Hamiltonian. Indeed, for \(\sigma \in B_{\Lambda _\varepsilon ,\gamma ,\zeta , >c}\) since \(\alpha =u\) (from (2.16)) we have that

which further implies that

Furthermore, from Theorem 6.7 we have that there exists an error \(s_1(\varepsilon )\rightarrow 0\) as \(\varepsilon \rightarrow 0\) such that

On the other hand, to estimate the denominator of (7.19), note that since \(u\in [-m_\beta ,m_\beta ]\), we have that \(f'_\beta \left( u\right) =0\). Then, (2.13) gives \(P \left( \alpha \right) =-f_\beta \left( u\right) \) for \(u=\alpha \); hence,

for some error \(s_2 \left( \varepsilon ,\gamma \right) \) vanishing as \(\varepsilon \) and \(\gamma \) go to zero. Thus, if we substitute (7.21), (7.22) and (7.23) into (7.19), we obtain that:

for \(\varepsilon \) and \(\gamma \) small enough.

If \(u\notin \left[ -m_\beta ,m_\beta \right] \) we have a similar strategy but for the appropriate external field. Hence, adding and subtracting u we expand the Hamiltonian as follows:

Note that for the computation of (7.19) the constant terms are irrelevant. In the case \(u\notin \left[ -m_\beta ,m_\beta \right] \), from (2.16) we have that

hence our goal is to approximate the Hamiltonian \(H_{\Lambda _{\varepsilon },\gamma ,\alpha }\) by (2.22) with external field \(h := -2 \left( u-\alpha \right) \). Additionally, recalling (5.4) and (5.5) we obtain that

for some \(s(\gamma )\rightarrow 0\) as \(\gamma \rightarrow 0\). Then, with h defined above, the third term of (7.25) gives \(-h \sum _{x\in \Lambda _\varepsilon }\sigma (x)\) with a vanishing error. For the second term we use (7.20).

For the denominator, we restrict to

for \(c', \zeta '>0\) to be chosen appropriately. Then for \(\sigma \in B_{\Lambda _\varepsilon ,\gamma ,\zeta ',\le c'}\) we have that

Thus, replacing all above estimates in (7.19), with \(c', \zeta '>0\) chosen such that \(O \left( 1\right) s \left( \gamma \right) +O \left( 1\right) c'+\zeta '^2\) is smaller than \(c\zeta ^{2}\) (also \(\gamma \) small enough) we obtain:

for some \(C>0\). Thus, to conclude it suffices to prove that the denominator is close to 1. This is the content of the next lemma:

Lemma 6.8

Let \(u\in \left[ -1,1\right] \setminus \left[ -m_\beta ,m_\beta \right] \) and let \(h=f_\beta '(u)\) be the external field that corresponds to the homogeneous magnetization u. Then, for the measure (2.23) we have that

for every \(\zeta , c>0\).

Proof of Lemma 6.8

For \(\sigma \in B_{\Lambda _\varepsilon ,\gamma ,\zeta , >c}\), we have

which implies that

By translation invariance of the measure \(\mu ^{ nn }_{\Lambda _\varepsilon ,h}\) with periodic boundary conditions, the left-hand side of (7.33) coincides with \(\mathbb {E}_{\mu ^{ nn }_{\Lambda _\varepsilon ,h}}\left[ \left| I_0^{\gamma }-u\right| \right] \). Since the random variable \( \left| I_0^{\gamma }-u\right| \) depends on a finite number of coordinates, the latter expectation converges to \( {\mathbb E}_{\mu ^{ nn }_{h}} \left[ \left| I_0^{\gamma }-u\right| \right] \) as \(\varepsilon \rightarrow 0\). Note that \(\mu ^{ nn }_h\) is the infinite volume limit of (2.23). Then, by applying the multidimensional ergodic theorem (e.g. Theorem 14.A8 of [7]), we obtain that

since \(\mathbb E_{\mu ^{ nn }_{h}}[\sigma (x)]=u\) for all \(x\in B_{\gamma ^{-1}}(0)\) and \(\gamma ^{-1}\rightarrow \infty \). \(\square \)

Appendix 2: Estimates on “Bad Boxes”.

Before proceeding with the estimates on “bad boxes”, we state a theorem for the infinite volume Gibbs measures for the Ising model:

Theorem 6.9

For \(d\ge 2\), \(h=0\) and \(\beta >\beta _c(d)\) (\(\beta _c(d)\) is the critical value of the inverse temperature in dimension d), there are two different probability measures \(\mu ^{ nn }_{0,\pm }\) on \(\{-1,1\}^{\mathbb Z^d}\) such that, for any sequence of increasing volumes \((\Lambda _n)_n\), the sequence \(\mu ^{ nn }_{\Lambda _n, 0,\pm }\) (with ± boundary conditions) converges weakly to \(\mu ^{ nn }_{0,\pm }\). More precisely, for \(\Delta \subset \Lambda \) finite subsets of \(\mathbb Z^d\) and \(f:\{-1,1\}^{\mathbb Z^d}\rightarrow \mathbb R\) a function that depends only on spins inside \(\Delta \), there exists a positive constant C such that

Furthermore, exponential decay of correlations holds: if the functions f and g depend on spins inside the finite regions \(\Delta _f\) and \(\Delta _g\), respectively, then there exist positive constants \(C_1\) and \(C_2\) such that

The proof is standard and can be found in [15], Theorem 2.5 and its proof in Section 2.6.2. See also Theorem 2.18.

1.1 Proof of Lemma 6.5.

In this section we give the following proof:

Proof of Lemma 6.5:

Let \(\Delta _K^{00}\) be the cube with the same center as \(\Delta _K^0\) and at distance \(K^{\frac{1}{2}}\) from its complement. Given a set \(S\subset \Lambda _\varepsilon \), an accuracy parameter \(\zeta \) and a radius \(R>0\) we define the following set of configurations:

We have that for any \(\tau \in \{+,-\}\) and for L large enough

Given \(i\in I\) and \(C\in \mathcal K_i\), the sets \(G_{C,i}\) and \(\mathcal X'_{I\setminus i}\) are \(C^c\)-measurable, while the set \(C_{\Delta _{K,i}^0,\zeta }\) is \(C\)-measurable. Hence, using (8.4) we obtain

where we have used the restricted measures \(\mu ^{ nn }_{C, 0,+}\) and \(\mu ^{ nn }_{C^c, 0,+}\) instead of \(\mu ^{ nn }_{\Delta _L, 0,+}\). From the exponential decay of correlations (8.2), we have that there are two positive constants \(C_1\) and \(C_2\) such that

Then, using the Chebyshev inequality and the weak convergence to an infinite volume limit (8.1) we obtain:

for some \(c>0\) and where R is such that \(R\ll K^{1/2}\). Then from (8.5) we obtain:

which gives the right-hand side of (6.32) by using the fact that the events \(G_{C,i}\) for \(C\in \mathcal K_i\) are disjoint. \(\square \)

1.2 Proof of Lemma 6.6

For completeness of the presentation, we first give a short description of the method and then proceed with the proof of the relevant Lemma 6.6. We restrict ourselves to dimension 2, but we expect that a similar result should be also true in higher dimensions. As before, we divide \(\Delta _L\) into boxes \(\Delta _{K,i}\), and call \(N_{K,L}=\frac{L^2}{K^2}\). Recall that \(\Delta _{K,i}^0\) stands for the box with the same center as \(\Delta _{K,i}\) and distance \(\sqrt{K}\) from its complement \(\Delta _{K,i}^c\).

Let \(E(\Delta _L)\) be the set of edges connecting vertices in \(\Delta _L\): \(E(\Delta _L):=\{ \left\{ x,y\right\} \subset \Delta _L: \left| x-y\right| =1\}\). The random-cluster probability for \(\omega \in \left\{ 0,1\right\} ^{E(\Delta _L)}\) is defined by

where \(p := 1-e^{-2\beta }\), \( Cl (\omega )\) is the number of connected components (or clusters) associated to \(\omega \), and \(Z'\) is the normalizing constant. The Edwards-Sokal probability Q, see [4], is defined on the product space \( \left\{ 0,1\right\} ^{E \left( \Delta _L\right) }\times \left\{ -1,1\right\} ^{\Delta _L}\) and has the random-cluster probability \(\phi \) as the first marginal and the Ising probability \(\mu _{\Delta _L,0,\emptyset }\) as the second marginal. The main property of Q is that the conditional probability \(Q(\cdot |\omega )\) is given by sampling a value of a spin independently in each cluster of \(\omega \) with probability \(\frac{1}{2}\). In this way, if \(x,y\in \Delta _L\) and \(\omega \in \left\{ 0,1\right\} ^{E(\Delta _L)}\) are such that x and y are connected by a path of edges \(e_1,\ldots ,e_k\) such that \(\omega _{e_i}=1\) for every i, then \(Q(\sigma (x)=\sigma (y)|\omega )=1\).

We can define a partial order on the probability space \( \left\{ 0,1\right\} ^{E(\Delta _L)}\) by \(\omega \preccurlyeq \omega '\) if and only if \(\omega _e\le \omega '_e\) for every \(e\in E(\Delta _L)\). A function \(f: \left\{ 0,1\right\} ^{E(\Delta _L)}\rightarrow {\mathbb R}\) is increasing (resp. decreasing) if and only if \(f(\omega )\le f(\omega ')\) (resp. \(f(\omega )\ge f(\omega ')\)) for every \(\omega ,\omega '\) such that \(\omega \preccurlyeq \omega '\); an event \(A\subset \left\{ 0,1\right\} ^{E(\Delta _L)}\) is increasing (resp. decreasing) if the indicator function \(\mathbf 1 _A\) is an increasing (resp. decreasing) function. For probabilities P and \(P'\) on \( \left\{ 0,1\right\} ^{E(\Delta _L)}\), we say that P is stochastically dominated by \(P'\), and write \(P\le _{ st }P'\), if and only if \(\int f dP\le \int f dP'\) for every increasing function f. The latter property holds if and only if \(\int f dP\ge \int f dP'\) for every decreasing function f. Let \(B_\rho \) be the Bernoulli probability on \( \left\{ 0,1\right\} ^{E(\Delta _L)}\) with parameter \(\rho := \frac{1-e^{-2\beta }}{1+e^{-2\beta }}\):

The random-cluster probability satisfies \(B_\rho \le _{st} \phi \); in particular, \(\phi (A)\le B_\rho (A)\) for every decreasing event A. We are ready now to give the proof of Lemma 6.6.
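For the reader's convenience, the implication \(\phi (A)\le B_\rho (A)\) just stated can be checked in one line. This is a worked step using only the definition of stochastic domination given above, with \(P=B_\rho \), \(P'=\phi \) and the decreasing function \(f=\mathbf 1 _A\):

\[
\phi (A)=\int \mathbf 1 _A\, d\phi \;\le \;\int \mathbf 1 _A\, dB_\rho =B_\rho (A)\qquad \text{for every decreasing event } A.
\]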

Proof of Lemma 6.6:

We need to introduce some terminology. Let \({\mathbb Z}^{2*} := {\mathbb Z}^2+ \left( \frac{1}{2},\frac{1}{2}\right) \) be the dual set of vertices of \({\mathbb Z}^2\). For an edge \(e= \left\langle xy\right\rangle \in E({\mathbb Z}^2)\), where \(E(\mathbb Z^2)\) is the set of edges of \(\mathbb Z^2\), we define its dual edge \(e^*\) as the one obtained after rotating it 90 degrees around its middle point; for an edge subset \(A\subset E({\mathbb Z}^2)\), we define \(A^* := \left\{ e^*:e\in A\right\} \). For any subset of edges E, let the support of E be the set of vertices that are endpoints of any of the edges in E. For a subset \(R\subset \Delta _L\), we define its dual set of vertices \(R^*\) as the support of \(E(R)^*\). The inner boundary of \(R^*\) is defined by \(\partial ^{\circ }R^* := \left\{ x\in R^*: \left| x-y\right| =1 \text{ for some } y\in {\mathbb Z}^{2*}\setminus R^*\right\} \). For a configuration \(\omega \in \left\{ 0,1\right\} ^{E(R)}\), we define its dual configuration \(\omega ^*\in \left\{ 0,1\right\} ^{E(R)^*}\) by \(\omega ^*_{e^*}=1-\omega _{e}\). We say that an edge \(e^*\in E(R)^*\) is \(\omega ^*\)-open if \(\omega ^*_{e^*}=1\). Associated to \(\omega ^*\), and for a fixed box \(\Delta _{K,i}\), we call \(J_{\Delta _{K,i}}(\omega ^*)\subset E(\Delta _{K,i})^*\) the set of edges “penetrating from the outside of \(\Delta _{K,i}\)”, i.e., the set consisting of the dual edges that are \(\omega ^*\)-open and are connected to \(\partial ^\circ (\Delta _{K,i}^*)\) by a path of \(\omega ^*\)-open edges. We say that \(\omega \in \left\{ 0,1\right\} ^{E(\Delta _L)}\) has a circuit of open edges in \(\Delta _{K,i}\) if \(J_{\Delta _{K,i}}(\omega ^*)\cap E(\Delta _{K,i}^0)^*=\emptyset \) (this is the formal way of saying that \(\omega \) has a self-avoiding path of open edges living in \(E(\Delta _{K,i})\) that surrounds \(\Delta _{K,i}^0\)).

Consider the random-cluster probability \(\phi \) associated to \(\mu _{\Delta _L,0,\emptyset }\) (defined in (8.9)) and the corresponding Edwards-Sokal coupling Q between \(\phi \) and \(\mu _{\Delta _L,0,\emptyset }\). The fundamental property of Q implies that, for every \(\Delta _{K,i}\),

As a consequence, if we define \(\mathcal Y''_I\) to be the set of configurations \(\omega \in \left\{ 0,1\right\} ^{E(\Delta _L)}\) that do not have a circuit of open edges for every \(i\in I\), we have \(\mu _{\Delta _L,0,\emptyset }(\cup _{I:\, |I|\ge \delta N_{K,L} }\mathcal X''_{I})\le \phi (\cup _{I:\, |I|\ge \delta N_{K,L} }\mathcal Y''_{I}) \); to conclude, we need to control this last term. Recall the Bernoulli probability \(B_\rho \) on \( \left\{ 0,1\right\} ^{E(\Delta _L)}\), given in (8.10). As \(\cup _{I:\, |I|\ge \delta N_{K,L} }\mathcal Y''_{I}\) is a decreasing event, the stochastic domination \(B_\rho \le _{st}\phi \) implies that \(\phi (\cup _{I:\, |I|\ge \delta N_{K,L} }\mathcal Y''_{I})\le B_\rho (\cup _{I:\, |I|\ge \delta N_{K,L} }\mathcal Y''_{I})\). Observe that the inequality

holds, where \(\Delta _K\) is any of the boxes \(\Delta _{K,1},\ldots ,\Delta _{K,N_{K,L}}\). Moreover, by Stirling’s formula, there is a constant \(C_1=C_1(\delta )>0\) such that \(\left( {\begin{array}{c}N_{K,L}\\ \lceil \delta N_{K,L}\rceil \end{array}}\right) \le C_1^{N_{K,L}}\) for every \(N_{K,L}\). To estimate

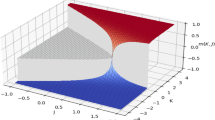

we consider its complement. Let \(R_1\), \(R_2\), \(R_3\) and \(R_4\) be the rectangles of dimension \(K\times \frac{K-K^{\frac{1}{2}}}{2}\) or \(\frac{K-K^{\frac{1}{2}}}{2}\times K\) that satisfy \(\cup _{i=1}^4 R_i=\Delta _K\setminus \Delta _K^0\) (see Fig. 1).

Let R be one of these rectangles and, without loss of generality, suppose it to be horizontal, that is of dimension \(K\times \frac{K-K^{\frac{1}{2}}}{2}\). Let \(T(R^*)\) and \(B(R^*)\) be the corresponding vertices in the top and in the bottom of the support of the (dual) set of edges \(R^*\), that is, the ones with highest and lowest second coordinate (see Fig. 2).

We say that a configuration \(\omega \in \left\{ 0,1\right\} ^{E(R)}\) is good lengthwise if its dual configuration \(\omega ^*\in \left\{ 0,1\right\} ^{E(R)^*}\) does not have any path of open edges connecting \(T(R^*)\) with \(B(R^*)\); in this case, we say \(\omega ^*\) is bad transversally. Observe that a sufficient condition for a configuration \(\omega \in \left\{ 0,1\right\} ^{E(\Delta _K)}\) to have a circuit is that, for every \(1\le i\le 4\), the projection \(\omega _{E(R_i)}\) is good lengthwise. We have

in the last inequality, we used the fact that the event \( \left\{ \omega \in \left\{ 0,1\right\} ^{E(R)}:\omega \text{ is good lengthwise} \right\} \) is increasing and that the Bernoulli probability satisfies the FKG property; see [9]. To estimate the probability of the last set, we consider its complement:

Observe that, if \(\omega ^*\) is good transversally, there exists a self-avoiding path \(\gamma \) of open edges starting in \(B(R^*)\) and such that \( \left| \gamma \right| =\lfloor \sqrt{K}\rfloor \), where \(\lfloor \sqrt{K}\rfloor \) denotes the integer part of \(\sqrt{K}\) and \( \left| \gamma \right| \) the number of edges of \(\gamma \). Then

We conclude that

Coming back to (8.13), we obtain the upper bound \( \left[ C_1 \left( 8K \left[ 3(1-\rho )\right] ^{\lfloor \sqrt{K}\rfloor }\right) ^\delta \right] ^{N_{K,L}}\). Condition \(\beta >\log \sqrt{5}\) is equivalent to \(3(1-\rho )<1\). Take K large enough to satisfy \( \left( 8K \left[ 3(1-\rho )\right] ^{\lfloor \sqrt{K}\rfloor }\right) ^\delta <1\) to conclude.
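The equivalence between \(\beta >\log \sqrt{5}\) and \(3(1-\rho )<1\) can be verified directly from the definition \(\rho := \frac{1-e^{-2\beta }}{1+e^{-2\beta }}\). The following short computation is added as a check and uses nothing beyond that definition:

\[
1-\rho =\frac{2e^{-2\beta }}{1+e^{-2\beta }},\qquad 3(1-\rho )<1\;\Longleftrightarrow \;6e^{-2\beta }<1+e^{-2\beta }\;\Longleftrightarrow \;e^{2\beta }>5\;\Longleftrightarrow \;\beta >\tfrac{1}{2}\log 5=\log \sqrt{5}.
\]

Under this condition, \(a:=3(1-\rho )\in (0,1)\), so \(8K\,a^{\lfloor \sqrt{K}\rfloor }\rightarrow 0\) as \(K\rightarrow \infty \), because the decay of \(a^{\lfloor \sqrt{K}\rfloor }\) dominates the linear factor 8K. This is why a sufficiently large K with the required property exists.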

1.3 Proof of (6.28).

Given \(\eta \in I_{|C_{l}|}\), if \(|\eta | < m_\beta \) we choose \(p<1\) such that \(\eta =pm_{\beta }-(1-p)m_{\beta }\). Supposing that \(\Delta _L=[0,L)^d\) we split it as: \(\Delta _L=\Delta _L^+\cup \Delta \cup \Delta _L^-\), where

for an appropriate \(c^*>0\) to be chosen next. The set \(\Delta _L^+\) (respectively \(\Delta _L^-\)) is the part of the domain corresponding to smaller (respectively larger) values of \(x_1\). With this definition, letting \(\tau _1=+\) and \(\tau _2=-\), there is a \(c>0\) such that

We express the set in (8.23) by the union (over all possible magnetizations \(m_i\), \(i=1,2\), with \(|m_i-\tau _i m_{\beta }|\le c |\Delta _L^{\tau _i}|^{-1/2}\)) of the set \(\{\sum _{x\in \Delta _L^{\tau _i}}\sigma (x)=m_i | \Delta _L^{\tau _i}|\}\). Then, it follows that there are two values \(m^{*}_i\), \(i=1,2\), of the magnetization with the above constraint so that

With this, we define the set

with b such that \(m^{*}_1| \Delta _L^+ |+m^{*}_2 | \Delta _L^- | + b|\Delta |= \eta |\Delta _L |\). It is easy to see that such a b exists for a \(c^*\) in (8.22) large enough.
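As a worked check of the two algebraic choices made so far (added for the reader; it only solves the relations stated above and assumes nothing beyond them), the condition \(\eta =pm_{\beta }-(1-p)m_{\beta }=(2p-1)m_\beta \) determines

\[
p=\frac{1}{2}\left( 1+\frac{\eta }{m_\beta }\right) ,
\]

which lies in \((0,1)\) precisely when \(|\eta |<m_\beta \). Likewise, the constraint defining b can be solved explicitly:

\[
b=\frac{\eta \,|\Delta _L|-m^{*}_1\,|\Delta _L^+|-m^{*}_2\,|\Delta _L^-|}{|\Delta |}.
\]

The existence claim then presumably amounts to this value being an admissible magnetization density on \(\Delta \), in particular \(|b|\le 1\).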

Thus, to get a lower bound for the left-hand side of (6.28) we restrict to \(\mathcal G_\eta \). As a consequence, in each subdomain the corresponding probabilities can be bounded as in (8.24) and we are left with only the boundary terms, which are of the order \(L^{d-1}\) (for a box \(\Delta _L=[0,L)^d\)). We have

Then we can easily conclude since

If \(|\eta |>m_\beta \), then we choose \(h := h(\eta )\) as in the discussion following (2.27) and obtain:

for an appropriate choice of c. Following the steps above, (8.28) implies (6.28) for the case \(|\eta |>m_\beta \) and concludes the proof.
