Abstract

We study three instances of log-correlated processes on the interval: the logarithm of the Gaussian unitary ensemble (GUE) characteristic polynomial, the Gaussian log-correlated potential in the presence of edge charges, and fractional Brownian motion with Hurst index \(H \rightarrow 0\) (fBm0). In previous collaborations we obtained the probability distribution function (PDF) of the value of the global minimum (equivalently, maximum) for the first two processes, using the freezing-duality conjecture (FDC). Here we study the PDF of the position of the maximum \(x_m\) through its moments. Within the replica approach, this requires calculating moments of the density of eigenvalues in the \(\beta \)-Jacobi ensemble. Using Jack polynomials, we obtain exact and explicit expressions, in terms of sums over partitions, for both positive and negative integer moments for arbitrary \(\beta >0\) and positive integer n. For positive moments, this expression agrees with a very recent independent derivation by Mezzadri and Reynolds. We check our results against a contour integral formula recently derived by Borodin and Gorin (presented in Appendix 1 by these authors). The duality necessary for the FDC to work is proved and, in our expressions, found to correspond to the exchange of partitions with their duals. Taking the limit \(n \rightarrow 0\) and continuing to negative Dyson index \(\beta \rightarrow -2\), we obtain the moments of \(x_m\) and give explicit expressions for the lowest ones. Numerical checks for the GUE polynomials, performed independently by N. Simm, show encouraging agreement. Some results are also obtained for moments in the Laguerre, Hermite-Gaussian, circular and related ensembles. The correlations between the position and the value of the field at the minimum are also analyzed.


1 Introduction

Logarithmically correlated Gaussian (LCG) random processes and fields attract growing attention in mathematical physics and probability. They play an important role in problems of statistical mechanics, quantum gravity, turbulence, financial mathematics and random matrix theory; see e.g. the recent papers [1–5] for an introduction and some background references, and [6] for an earlier review including condensed matter applications. A general lattice version of a logarithmically correlated Gaussian field is a collection of Gaussian variables \(V_{N,x} : x \in D_N\) attached to the sites of a \(d\)-dimensional box \(D_N\) of side length N (assuming unit lattice spacing), characterized by zero mean and the covariance structure

where \(\ln _+(w) = \max \left( \ln {w}, 0\right) \), \(g>0\), and both f(x) and \(\psi (x,y)\) are bounded functions far enough from the boundary of \(D_N\). One can also define continuum versions V(x) of LCG fields on various domains \(D\subset \mathbb {R}^d\). These are then necessarily random generalized functions (“random distributions”), the most famous example being the Gaussian free field in \(d=2\); see [7] for a rigorous definition. For \(d=1\), the one-dimensional LCG processes are known as 1 / f noises, see e.g. [8, 9]. They appear frequently in physics and engineering, and are also rich and important mathematical objects in their own right. Such processes emerge, for example, in constructions of conformally invariant planar random curves [10], and are relevant to random matrix theory and to studies of the Riemann zeta-function on the critical line [2].

In particular, the problem of characterizing the distribution of the global maximum \(M_N =\max _{x\in D_N}V_{N,x}\) of LCG fields and processes (or their continuum analogues) has recently attracted a lot of interest, both in physics, see [6, 11–15], and in mathematics, see [2, 16–26]. The distribution has been proved to be a Gumbel distribution with a random shift [20]. It has a universal tail predicted by renormalization group arguments in [6]. The details of the full distribution are not universal. They depend on the behaviour of the covariance (2) at the global scale \(|x - y|\sim N\), as well as on the subleading term f(x) in the variance (1). Explicit forms of the distribution of the maximum have been conjectured for a few specific models of 1 / f noises [2, 11, 12, 21].

The goal of this paper is to provide some information about the distribution of the position of the global minimum

for some examples of one-dimensional processes with logarithmic correlations, although, depending on the application, one may instead be interested in the maximum. Statistical properties of the value and position of maxima and minima are trivially related whenever \(V(x) = -V(x)\) in law.

Our first example is the modulus of the characteristic polynomial of a random GUE matrix, with the spectral parameter restricted to the interval \([-1,1]\). As is well known, in the limit of large matrix size the logarithm of that modulus is intimately related to 1 / f noises [1, 2, 21, 27, 28]. In this example the interesting quantity is obviously the statistics of the maximum. The minimum value is trivially zero, attained at every eigenvalue of the matrix. The second example is a general two-parameter variant of a log-correlated process on the interval. In the language of Coulomb gases, it has endpoint charges; it was introduced and studied in [12]. This case may include a non-random (logarithmic) background potential \(V_0(x)\), so that for the sum \(V_0(x) + V(x)\) we have

Our last example is a regularized version of the fractional Brownian motion with zero Hurst index, which is a bona fide (nonstationary) 1d LCG process [1]. Here statistics of maxima and minima are trivially related by symmetry in law.

2 Models, Method and Main Results

We now define the three models to be considered. Although we have not yet succeeded in obtaining the full probability distribution function (PDF) \(\mathcal{P}(x_m)\) of the position of the global minimum \(x_m\), we derive formulae for all positive integer moments of \(x_m\) in terms of sums over partitions. In this section we present explicit values for some low moments of \(x_m\); more results can be found in the remainder of the paper.

As is clear from [12], there is an intimate relation between the statistics of extrema in log-correlated fields on an interval and the \(\beta \)-Jacobi ensemble of random matrices [29, 30]. In the course of our calculation we present methods to calculate the moments of the eigenvalue density of the Jacobi ensemble. In this section we state a very explicit formula for these moments, which is derived in the remainder of the paper. For positive moments, our result agrees with recent results by Mezzadri and Reynolds [31]. We check our results against a contour integral representation recently derived by Borodin and Gorin (presented in Appendix 1 by these authors). Remarkably, this representation also allows one to calculate negative moments.

Finally we sketch the replica method and the application of the freezing-duality conjecture to extract the moments of \(x_m\) from the moments of the Jacobi ensemble.

2.1 Results for Log-Correlated Processes

2.1.1 GUE Characteristic Polynomials (GUE-CP)

Our first prediction concerns the lowest moments of the position of the global maximum of the modulus of the characteristic polynomial \(p_{N}(x) = \mathrm {det}(xI-H)\) of the Hermitian \(N\times N\) matrix H sampled with the probability weight

known as the Gaussian Unitary Ensemble (or GUE) [32–34]. Here the variance is chosen to ensure that asymptotically for \(N\rightarrow \infty \), the limiting mean density of the GUE eigenvalues is given by the Wigner semicircle law \(\rho (x) = (2/\pi )\sqrt{1-x^{2}}\) supported in the interval \(x\in [-1,1]\). Hence the object we want to study is \(\log |p_{N}(x)| = \sum _i \ln |x - \lambda _i|\) where the \(\lambda _i\) are the eigenvalues of the GUE matrix H. To study its fluctuations it turns out to be more convenient to subtract its mean. This leads to the following

Prediction 1

Define \(\phi _{N}(x)=2\log |p_{N}(x)|-2\mathbb {E}(\log |p_{N}(x)|)\) and consider the random variable

Then the lowest even integer moments of this random variable have the values

whereas the odd integer moments vanish by symmetry.

In particular, the kurtosis of the distribution of \(x_{m}^{(N)}\) in the large-N limit is given by

To make contact between \(|p_N(x)|\) and LCG processes we refer to [1]. That work revealed that the natural large-N limit of \(\phi _N(x)\) is given by the random Chebyshev–Fourier series

with \(T_{n}(x) = \cos (n\arccos (x))\) being Chebyshev polynomials and real \(a_n\) being independent standard Gaussian variables. A quick computation shows that the covariance structure associated with the generalized process F(x) is given by an integral operator with kernel

as long as \(x\ne y\). Such a limiting process F(x) is an example of an aperiodic 1 / f-noise.
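The logarithmic structure of the kernel (9) can be seen from a classical trigonometric sum. The sketch below rests on an assumption of ours, since the explicit coefficients of (8) are not reproduced here: we take the n-th term of the series to carry a factor proportional to \(a_n/\sqrt{n}\). The covariance is then, up to an overall constant, \(\sum_{n\ge 1} T_n(x)T_n(y)/n\). Writing \(x=\cos\theta\), \(y=\cos\varphi\) and using \(\sum_{n\ge1}\cos(nt)/n=-\ln|2\sin(t/2)|\), this sum equals \(-\tfrac12\ln(2|x-y|)\) for \(x\ne y\). The code compares a truncated sum with that closed form. The helper names are illustrative.

```python
import math


def chebyshev_t(n: int, x: float) -> float:
    """Chebyshev polynomial T_n(x) = cos(n arccos x), for x in [-1, 1]."""
    return math.cos(n * math.acos(x))


def truncated_kernel(x: float, y: float, n_terms: int) -> float:
    """Partial sum  sum_{n=1}^{n_terms} T_n(x) T_n(y) / n  (conditionally convergent)."""
    return sum(chebyshev_t(n, x) * chebyshev_t(n, y) / n
               for n in range(1, n_terms + 1))


def log_kernel(x: float, y: float) -> float:
    """Closed form of the infinite sum, valid for x != y inside (-1, 1)."""
    return -0.5 * math.log(2.0 * abs(x - y))
```

The partial sums converge slowly, with an error of order \(1/N\), and diverge as \(x\to y\). This is the numerical counterpart of the statement that the series itself only defines a generalized function.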

Note, however, that the series (8) is formal and diverges with probability one; it should be understood as a random generalized function (distribution). It makes no sense to discuss the maximum and its position for a generalized function. The problem is nevertheless well defined for the logarithm of the modulus of the characteristic polynomial, \(\log {|p_N(x)|}\), for any finite N. One therefore needs a tool that exploits its asymptotically Gaussian nature, evident in (8). This is encapsulated in the following asymptotic formula due to Krasovsky [35], which will be central to our considerations:

where \(C(\delta ) := 2^{2\delta ^{2}}\frac{G(\delta +1)^{2}}{G(2\delta +1)}\), with G(z) being the Barnes G-function. In particular, differentiating with respect to \(\delta \), we deduce that

Formula (10) suggests that, apart from the factors \(C(\delta _{j})\), which as we shall see play no role in our calculations, \(2\log |p_{N}(x_{j})|\) is faithfully described by a regularized LCG process. This process has covariance (9), position-dependent variance \(2\left( \ln {N}+\ln {\sqrt{1-x^2}}-\ln {2}\right) \) and position-dependent mean \(N(2x^2-1-2\log (2))\). We find it convenient to subtract the mean value and concentrate on the centered LCG \(\phi _N(x)\) in Prediction 1. The position-dependent logarithmic variance stems from the factors \((1-x_{j}^{2})^{\delta _{j}^{2}/2}\) in (10). We shall see that it plays an essential role in the statistics of the position of the global maximum of \(|p_{N}(x)|\), by giving rise to nontrivial “edge charges” in the corresponding Jacobi ensemble. This observation is consistent with the fact, mentioned earlier, that the subleading position-dependent term f(x) in the variance of the LCG, see (1), may modify the extreme value statistics.

2.1.2 Log-Correlated Gaussian Random Potential (LCGP) with a Background Potential

An interesting question is the position of the minimum of the sum of an LCG random potential and a deterministic background potential, i.e.

Here we obtain results when D is an interval, say \(x \in [0,1]\). The LCG random potential has correlations:

and \(C_\epsilon (0)=2 \ln (1/\epsilon )\) where \(\epsilon \) is a small scale regularization. The background potential is of the special logarithmic form:

which we will often refer to, following the Coulomb gas language, as “edge charges” at the boundary. We mainly focus on the case of repelling charges, \({\bar{a}}, {\bar{b}}>0\), although both the model, and some of our results, extend to some range of attractive charges. Some properties of this model, such as the PDF of the value of the total potential at the minimum, were studied in [12]. Here we obtain, for the two lowest moments of \(x_m\)

Prediction 2

Note that in the absence of disorder, i.e. for the background potential \(V_0(x)\) alone, and for \({\bar{a}}, {\bar{b}} >0\), the minimum is at \(x_m^0=\frac{{\bar{a}}}{{\bar{a}} + {\bar{b}}}\), that is \(x_m^0 - \frac{1}{2} = \frac{{\bar{a}}- {\bar{b}}}{2 ({\bar{a}}+{\bar{b}})}\). Hence the disorder brings the minimum, on average, closer to the midpoint \(x=\frac{1}{2}\).
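The disorder-free minimum \(x_m^0\) can be checked numerically. Because (14) is not reproduced here, the sketch assumes that the background potential is \(V_0(x)=-{\bar a}\ln x-{\bar b}\ln(1-x)\), up to an additive constant. This is consistent with the later identification \(a=\beta{\bar a}\), \(b=\beta{\bar b}\), since \(e^{-\beta V_0}=x^{a}(1-x)^{b}\). For \({\bar a},{\bar b}>0\) this potential is strictly convex on (0, 1), so a plain ternary search suffices. The function names are hypothetical.

```python
import math


def background_potential(x: float, a_bar: float, b_bar: float) -> float:
    # Assumed edge-charge potential V_0(x) = -a_bar ln x - b_bar ln(1 - x).
    return -a_bar * math.log(x) - b_bar * math.log(1.0 - x)


def argmin_ternary(f, lo: float, hi: float, iters: int = 200) -> float:
    """Ternary search for the minimiser of a strictly convex f on [lo, hi]."""
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if f(m1) < f(m2):
            hi = m2  # the minimiser cannot lie in (m2, hi]
        else:
            lo = m1  # the minimiser cannot lie in [lo, m1)
    return 0.5 * (lo + hi)


def closed_form_minimum(a_bar: float, b_bar: float) -> float:
    """x_m^0 = a_bar / (a_bar + b_bar), from V_0'(x) = -a_bar/x + b_bar/(1-x) = 0."""
    return a_bar / (a_bar + b_bar)
```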

2.1.3 Fractional Brownian Motion with Hurst Index \(H=0\) (fBm0)

The fractional Brownian motion introduced by Kolmogorov in 1940 and rediscovered in the seminal work by Mandelbrot & van Ness [38] is defined as the Gaussian process with zero mean and with the covariance structure:

where \(0<H<1\) and \(\sigma _H^2=\text{ Var }\{B_H(1)\}\). These long-range correlated processes are useful because they are self-similar and have stationary increments, properties which characterize this family of Gaussian processes uniquely. In particular, for \(H=1/2\) the fBm \(B_{1/2}(t)\equiv B(t)\) is the usual Brownian motion (Wiener process). Note, however, that naively setting \(H=0\) in (17) does not yield a well-defined process. Nevertheless, we will see below that the limit \(H\rightarrow 0\) of fractional Brownian motion can be properly defined after an appropriate regularization, and yields a Gaussian process with logarithmic correlations.
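The two properties just quoted can be checked directly on the covariance. The sketch assumes that (17) is the standard fBm covariance \(\mathbb{E}\{B_H(t)B_H(s)\}=\frac{\sigma_H^2}{2}\left(|t|^{2H}+|s|^{2H}-|t-s|^{2H}\right)\), which is consistent with \(\sigma_H^2=\text{Var}\{B_H(1)\}\). It verifies three things: the reduction to \(\min(t,s)\) for \(H=1/2\), the stationary increment variance \(\sigma_H^2|t-s|^{2H}\), and the normalization at \(t=s=1\).

```python
def fbm_covariance(t: float, s: float, hurst: float, sigma2: float = 1.0) -> float:
    """Assumed fBm covariance: sigma2/2 * (|t|^{2H} + |s|^{2H} - |t - s|^{2H})."""
    h2 = 2.0 * hurst
    return 0.5 * sigma2 * (abs(t) ** h2 + abs(s) ** h2 - abs(t - s) ** h2)


def increment_variance(t: float, s: float, hurst: float, sigma2: float = 1.0) -> float:
    """E[(B_H(t) - B_H(s))^2] = C(t,t) + C(s,s) - 2 C(t,s); depends only on |t - s|."""
    return (fbm_covariance(t, t, hurst, sigma2) + fbm_covariance(s, s, hurst, sigma2)
            - 2.0 * fbm_covariance(t, s, hurst, sigma2))
```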

Consider a family of Gaussian processes depending on two parameters: \(0\le H<1\) and a regularization \(\eta >0\) and given explicitly by the integral representation [1]

Here \(B_c(s)=B_R(s)+iB_I(s)\), with \(B_R(s)\) and \(B_I(s)\) being two independent copies of the Wiener process B(t) (the standard Brownian motion) so that B(dt) is the corresponding white noise measure, \(\mathbb {E}\left\{ B(dt)\right\} =0\) and \(\mathbb {E}\left\{ B(dt)B(dt')\right\} =\delta (t-t')dtdt'\).

The regularized process \(\{B^{(\eta )}_H(x): x\in \mathbb {R} \}\) is Gaussian, has zero mean and is characterized by the covariance structure

where

It is easy to verify that for any \(0<H<1\) one has \(\lim _{\eta \rightarrow 0}B^{(\eta )}_{H}(x)=B_{H}(x)\) which is precisely the fBm defined in (17).

As has been already mentioned the limit \(\eta \rightarrow 0\) for \(H=0\) does not yield any well-defined process. At the same time taking the limit \(H \rightarrow 0\) at fixed \(\eta \) gives

ensuring that for any \(\eta >0\) the limit of \(B^{(\eta )}_{H}(x)\) as \(H\rightarrow 0\) yields a well-defined Gaussian process \(\{ B^{(\eta )}_{0}(x): x\in \mathbb {R} \}\) with stationary increments and with the increment structure function depending logarithmically on the time separation:

We consider \(B^{(\eta )}_{0}(x)\) as the most natural extension of the standard fBm to the case of zero Hurst index \(H=0\). We will frequently refer to this process as fBm0. The process is regularized at scales \(|x_1-x_2|<2\eta \).
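The following is a heuristic sketch of where the logarithm comes from. It does not reproduce the regularized construction (20)–(22) at fixed \(\eta \). The expansion \(|x|^{2H}=e^{2H\ln|x|}=1+2H\ln|x|+O(H^2)\) shows that the power-law increment structure \(|x|^{2H}\), once its constant part is subtracted and it is rescaled by \(1/(2H)\), tends to \(\ln|x|\) as \(H\rightarrow 0\). The helper below is hypothetical. It uses `math.expm1` to avoid cancellation for tiny H.

```python
import math


def rescaled_power(x: float, hurst: float) -> float:
    """(|x|^{2H} - 1) / (2H); its limit as H -> 0 is ln|x| (heuristic illustration)."""
    return math.expm1(2.0 * hurst * math.log(abs(x))) / (2.0 * hurst)
```

For example, with \(x=3\) the values for \(H=0.1\), \(0.01\) and \(10^{-8}\) approach \(\ln 3\approx 1.0986\) from above.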

It is also worth pointing out that there is an intimate relation between \(B^{(\eta )}_0(x)\) and the behaviour of (increments of) GUE characteristic polynomials. This relation holds at a different, so-called “mesoscopic”, spectral scale [1], which is negligible in comparison with the interval \([-1,1]\). Mesoscopic intervals are those that, in the limit \(N\rightarrow \infty \), typically contain a number of eigenvalues growing with N, yet still a vanishingly small fraction of the total number N of eigenvalues. In other words, fBm0 describes the behaviour of the logarithm of the ratio of the moduli of the characteristic polynomial at two spectral parameters separated by a mesoscopic distance. More precisely, \(B^{(\eta )}_0(x)\) is, in a suitable sense, given by the \(N\rightarrow \infty \) limit of the following object:

where H is an \(N \times N\) random GUE matrix, parameter \(\eta >0\) is a regularisation ensuring that the logarithms are well defined for real x and \(d_{N}\) specifies the asymptotic scale of the spectral axis of H as \(N \rightarrow \infty \) and is chosen to be mesoscopic \(1 \ll d_{N} \ll N\) (say, \(d_{N} = N^{\gamma }\) with \(0< \gamma < 1\)).

Applying our methods for LCG processes to fBm0 yields the following predictions for the lowest moments of the position of the global minimum of the process \(B^{(\eta )}_{0}(x)\) in the interval [0, L]:

Prediction 3

Define \(V(x)=2B^{(\eta )}_{0}(x)\) and for \(\eta >0\) and \(L>0\) consider the random variable

Then the lowest even integer moments of this random variable have the values

whereas the odd integer moments can be found from the identities

One should point out an interesting difference in the application of our method to this case. It concerns the value of the minimum \(V_m = \min _{x \in [0,1]} V(x)\), and seems to be a direct consequence of the non-stationarity of fBm0. Since the process is constrained to the value \(V(0)=2B^{(\eta )}_{0}(0)=0\) at the origin, \(V_m\) is necessarily negative or zero, in contrast to the other cases studied here. As discussed in Appendix 7, this implies that the method of analytical continuation in n of Ref. [12], which works nicely for the other cases, fails to predict the PDF of \(V_m\) for fBm0. It requires modifications, which are left for future studies. We do not believe that this problem affects the moments of \(x_m\), which enjoy a nice and simple analytical continuation to \(n=0\). As we checked numerically up to large n, these moments pass the standard test for the existence of an associated positive PDF (positivity of the Hankel matrices). Conditional moments, however, i.e. moments conditioned on an atypically high value of \(V_m\), would need a more careful study, beyond the scope of this paper.
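The Hankel positivity test mentioned above can be sketched in a few lines. Given moments \(m_0,\ldots ,m_{2K-2}\), one builds the \(K\times K\) matrix \(H_{ij}=m_{i+j}\) and checks that it is positive definite, here via a Cholesky factorization. This is a necessary condition for the moments to come from a positive density. For a density supported on a bounded interval the full Hausdorff criterion also involves shifted Hankel matrices, which the sketch does not implement. The function names are illustrative.

```python
import math
from typing import List, Sequence


def hankel(moments: Sequence[float], size: int) -> List[List[float]]:
    """Hankel matrix H[i][j] = m_{i+j}; requires moments m_0 .. m_{2*size-2}."""
    if len(moments) < 2 * size - 1:
        raise ValueError("need at least 2*size - 1 moments")
    return [[moments[i + j] for j in range(size)] for i in range(size)]


def is_positive_definite(matrix: List[List[float]], tol: float = 0.0) -> bool:
    """Cholesky factorisation; returns False as soon as a pivot is <= tol."""
    n = len(matrix)
    lower = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = matrix[i][j] - sum(lower[i][k] * lower[j][k] for k in range(j))
            if i == j:
                if s <= tol:
                    return False
                lower[i][i] = math.sqrt(s)
            else:
                lower[i][j] = s / lower[j][j]
    return True


def passes_hankel_test(moments: Sequence[float], size: int) -> bool:
    return is_positive_definite(hankel(moments, size))
```

The moments \(1/(k+1)\) of the uniform density pass, whereas \((1, 0.5, 0.2)\) fails, because it would imply a negative variance.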

2.2 Moments of the Eigenvalue Density of the Jacobi Ensemble

As mentioned above, the statistics of extrema in log-correlated fields on an interval are related to the \(\beta \)-Jacobi ensemble of random matrices. We denote by \(\mathbf{y}=(y_1,\ldots ,y_n)\) the set of eigenvalues, with \(y_i \in [0,1]\), \(i=1,\ldots ,n\). The model can be defined [29, 30] by the joint distribution of eigenvalues

where the normalization constant, \(\mathcal{Z}_n\), is the famous Selberg integral [39] for which an explicit formula exists for any positive integer n

In [12] we analytically continued this formula to complex n, which allowed us to obtain the probability distribution of the height of the global minimum of the process (see also [40–43]). In the present paper we push this analysis much further in order to extract the statistics of the position of the global minimum. As we will show, this requires exact formulae for the moments of the eigenvalue density of the \(\beta \)-Jacobi ensemble for arbitrary positive integer n. Furthermore, these formulae must be explicit enough to allow for a continuation to \(n=0\).
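For orientation, the classical Selberg formula can be evaluated and checked against brute-force integration. The sketch assumes that the weight in (28) is \(\prod_i y_i^a(1-y_i)^b\prod_{i<j}|y_i-y_j|^{2\kappa}\), which is consistent with \(\kappa=-\beta^2\) for a covariance \(-2\ln|x-y|\). It also assumes that \(\mathcal{Z}_n\) carries no \(1/n!\) factor. With these conventions, \(\mathcal{Z}_n=\prod_{j=0}^{n-1}\frac{\Gamma(1+a+j\kappa)\Gamma(1+b+j\kappa)\Gamma(1+(j+1)\kappa)}{\Gamma(2+a+b+(n+j-1)\kappa)\Gamma(1+\kappa)}\). The code uses `math.gamma` rather than `math.lgamma`, so that signs survive when some arguments are negative. Poles are not handled.

```python
import math


def selberg(n: int, a: float, b: float, kappa: float) -> float:
    """Selberg integral over [0,1]^n of prod y^a (1-y)^b prod_{i<j} |y_i - y_j|^(2 kappa)."""
    value = 1.0
    for j in range(n):
        value *= (math.gamma(1 + a + j * kappa) * math.gamma(1 + b + j * kappa)
                  * math.gamma(1 + (j + 1) * kappa)
                  / (math.gamma(2 + a + b + (n + j - 1) * kappa) * math.gamma(1 + kappa)))
    return value


def selberg_n2_midpoint(a: float, b: float, kappa: float, grid: int = 400) -> float:
    """Brute-force midpoint-rule check of the n = 2 case (kappa > 0 assumed)."""
    h = 1.0 / grid
    pts = [(i + 0.5) * h for i in range(grid)]
    w = [y ** a * (1.0 - y) ** b for y in pts]
    total = 0.0
    for i, y1 in enumerate(pts):
        for j, y2 in enumerate(pts):
            total += w[i] * w[j] * abs(y1 - y2) ** (2.0 * kappa)
    return total * h * h
```

Two simple checks follow. For \(n=1\) the formula reduces to the Beta function \(B(a+1,b+1)\). For \(n=2\), \(a=b=0\), \(\kappa=1\), it gives \(\int\!\!\int (y_1-y_2)^2=1/6\).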

Let us define the average value \(<f(y)>_J\) of any function f(y) over the Jacobi density by the relation

In particular for any positive integer n the mean density of eigenvalues is defined as:

where \(\delta \) is the Dirac distribution. The moments studied here are then defined as:

The problem of calculating these moments for the \(\beta \)-Jacobi ensemble has already been addressed in the theoretical physics and mathematical literature, motivated by various applications. In full generality it turns out to be a hard problem, and only limited results were available. One method is based on a recursion in the order of the moment, as outlined in chapter 17 of Mehta’s book [33]. It was used in [44, 45] in the context of the conductance distribution in chaotic transport through mesoscopic cavities. In that approach, calculations of higher moments become technically insurmountable. Another approach, using Schur functions, was developed and gave very explicit results for these and other moments for \(\beta =1,2\) [46] (see also related work in [47] and [48]).
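For very small n the moments can simply be computed by brute force. This gives an independent check on any closed formula, although it does not scale. The sketch below treats n = 2 with a midpoint rule. It assumes the same weight as in the Selberg integral, and assumes that \(\rho _J\) is normalized to unit mass, so that \(\langle y^k\rangle_J=\langle \tfrac{1}{n}\sum_i y_i^k\rangle\). It checks the symmetry \(y\leftrightarrow 1-y\) for \(a=b\) and the decoupled limit \(\kappa=0\). The helper name is hypothetical.

```python
def density_moments_n2(ks, a, b, kappa, grid=300):
    """Moments <y^k> of the one-point density of the n = 2 beta-Jacobi ensemble.

    Midpoint rule on [0,1]^2 with weight prod y_i^a (1-y_i)^b |y1 - y2|^(2 kappa);
    the density is normalised to unit mass (assumption). ks may contain negative k.
    """
    h = 1.0 / grid
    pts = [(i + 0.5) * h for i in range(grid)]
    w = [y ** a * (1.0 - y) ** b for y in pts]
    den = 0.0
    num = [0.0] * len(ks)
    for i, y1 in enumerate(pts):
        for j, y2 in enumerate(pts):
            weight = w[i] * w[j] * abs(y1 - y2) ** (2.0 * kappa)
            den += weight
            for idx, k in enumerate(ks):
                num[idx] += weight * 0.5 * (y1 ** k + y2 ** k)
    return [x / den for x in num]
```

For \(a=b\) the symmetry implies \(\langle y\rangle=1/2\) and \(\langle y^3\rangle=(3\langle y^2\rangle-1/2)/2\). For \(\kappa=0\) the first moment reduces to the Beta value \((a+1)/(a+b+2)\).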

More recently, Borodin and Gorin proved an interesting contour integral representation for these moments, which remained unpublished. It is described in Appendix 1 of the present paper, provided by these authors. It allows a systematic calculation of the moments, including negative ones. However, evaluating these integrals again becomes a challenge for higher moments.

In the present paper we give an explicit expression for all integer moments, positive and negative, of the eigenvalue density for the \(\beta \)-Jacobi ensemble, based on a different approach, in terms of sums over partitions.

Let us further denote \(\lambda =(\lambda _1 \ge \lambda _2 \ge \cdots \ge \lambda _{\ell (\lambda )})\) a partition of length \(\ell (\lambda )\) of the integer \(k \ge 0\), with \(\lambda _i\) strictly positive integers such that \(|\lambda |=\sum _{i=1}^{\ell (\lambda )} \lambda _i=k\). Then we obtain

where the sum is over all partitions of k, and

in terms of the Pochhammer symbol \((x)_n = x (x+1) \cdots (x+n-1) = \Gamma (x+n)/\Gamma (x)\), with for positive moments

while for negative moments

This result as it stands was derived for \(n, k \in \mathbb {N}\) and \(\kappa >0\), and in range of values of a, b such that these moments exist. Later however we will study analytic continuations in these parameters.
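The combinatorial ingredients of (33)–(35) are easy to generate. The summands themselves are not reproduced here. The sketch enumerates the partitions \(\lambda\) of k as non-increasing tuples, so that \(\ell(\lambda)\) is simply the tuple length. It also computes the dual (conjugate) partition \(\lambda'_j=\#\{i:\lambda_i\ge j\}\), which exchanges rows and columns of the Young diagram. Finally it evaluates the Pochhammer symbol \((x)_n\). The function names are our own.

```python
from math import gamma, prod
from typing import Iterator, Optional, Tuple

Partition = Tuple[int, ...]


def partitions(k: int, max_part: Optional[int] = None) -> Iterator[Partition]:
    """Yield the partitions of k as non-increasing tuples of positive integers."""
    if max_part is None:
        max_part = k
    if k == 0:
        yield ()
        return
    for first in range(min(k, max_part), 0, -1):
        for rest in partitions(k - first, first):
            yield (first,) + rest


def conjugate(lam: Partition) -> Partition:
    """Dual partition: lam'_j = number of parts lam_i >= j."""
    if not lam:
        return ()
    return tuple(sum(1 for part in lam if part >= j) for j in range(1, lam[0] + 1))


def pochhammer(x: float, n: int) -> float:
    """Rising factorial (x)_n = x (x+1) ... (x+n-1) = Gamma(x+n)/Gamma(x), n >= 0."""
    return prod(x + i for i in range(n))
```

For instance, `list(partitions(4))` gives `[(4,), (3, 1), (2, 2), (2, 1, 1), (1, 1, 1, 1)]`, and `conjugate((3, 1))` gives `(2, 1, 1)`.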

For positive moments, this very explicit formula is equivalent to a result by Mezzadri and Reynolds, which appeared in [31] during the course of the present work. Our independent derivation is relatively straightforward and will be given below for both positive and negative moments.

One can check from the above expressions (33, 34) that, in the limit \(\kappa \rightarrow 0\), each partition contributes as \(\sim \kappa ^{\ell (\lambda )-1}\). Hence only the single-row partition \(\lambda =(k)\) contributes, leading to the trivial limit for the moments:

here for k of either sign, as expected, since in that limit the Jacobi measure decouples, \(\mathcal{P}_J(\mathbf{y}) = \prod _{i=1}^n P^0(y_i)\), where \(P^0(y)= \frac{\Gamma (a+b+2)}{\Gamma (a+1) \Gamma (b+1)} y^a (1-y)^b\). Other general properties of the moments, such as the identity of the moments of y and of \(1-y\) for \(a=b\), are less straightforward to see from the above formula, and are explicitly checked below for low moments.
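The decoupled limit follows directly from \(P^0(y)\). Integrating \(y^k\) against it gives \(\langle y^k\rangle=\frac{\Gamma(a+b+2)\Gamma(a+k+1)}{\Gamma(a+1)\Gamma(a+b+k+2)}\), which exists for \(a+k+1>0\). For positive k this equals \((a+1)_k/(a+b+2)_k\). The sketch evaluates both forms and checks a negative moment by hand: for \(a=2\), \(b=1\) one has \(P^0=12y^2(1-y)\), so \(\langle 1/y\rangle=12\int_0^1 y(1-y)\,dy=2\).

```python
from math import gamma, prod


def beta_moment(k: int, a: float, b: float) -> float:
    """<y^k> under P^0(y) proportional to y^a (1-y)^b, for k of either sign (a + k + 1 > 0)."""
    if a + k + 1 <= 0:
        raise ValueError("moment does not exist: need a + k + 1 > 0")
    return gamma(a + b + 2) * gamma(a + k + 1) / (gamma(a + 1) * gamma(a + b + k + 2))


def beta_moment_pochhammer(k: int, a: float, b: float) -> float:
    """Same moment for k >= 0, written as (a+1)_k / (a+b+2)_k."""
    return prod((a + 1 + i) / (a + b + 2 + i) for i in range(k))
```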

2.3 Replica Method and Freezing Duality Conjecture for Log-Correlated Processes

Our method of addressing statistics related to the global minimum of random functions (which can be trivially adapted to the global maximum) is inspired by the statistical mechanics of disordered systems. Namely, we regard any random function V(x) defined in an interval \(D\subset \mathbb {R}\) of the real axis as a one-dimensional random potential, with x playing the role of the spatial coordinate. To that end we introduce, for any \(\beta >0\) and any positive \(\beta \)-independent weight function \(\mu (x)>0\), the associated Boltzmann-Gibbs-like equilibrium measure defined by

where we have defined the associated normalization function (the “partition function”)

with \(\beta =1/T\) playing the role of inverse temperature.
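The zero-temperature mechanism invoked next can be seen on a toy example. This is a discrete, disorder-free analogue of the Gibbs measure with \(\mu \equiv 1\) on a uniform grid. The potential used in the check is a hypothetical double well, not one of the models above, and no disorder average or replica step is performed. At \(\beta=0\) the measure is simply the normalized weight \(\mu\). As \(\beta\) grows it concentrates on the grid point where V is smallest. The minimum value is subtracted before exponentiating to avoid overflow.

```python
import math
from typing import List, Sequence


def gibbs_weights(potential: Sequence[float], mu: Sequence[float], beta: float) -> List[float]:
    """Discrete Gibbs measure mu(x) exp(-beta V(x)) / Z on a grid."""
    v_min = min(potential)
    w = [m * math.exp(-beta * (v - v_min)) for v, m in zip(potential, mu)]
    z = sum(w)  # the 'partition function' Z_beta, up to the factor exp(-beta v_min)
    return [x / z for x in w]


def mean_position(grid: Sequence[float], weights: Sequence[float]) -> float:
    return sum(x * w for x, w in zip(grid, weights))
```

On a grid over [0, 1], with a potential such as \((x-0.3)^2(x-0.8)^2+0.01x\), the mean position equals 1/2 at \(\beta=0\). At \(\beta=10^7\) it lies within one grid spacing of the argmin.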

According to the basic principles of statistical mechanics, in the zero-temperature limit \(\beta \rightarrow \infty \) the Boltzmann-Gibbs measure must be dominated by the minimum of the random potential \(V_m=\min _{x\in D}\,V(x)\). This minimum is achieved at the point \(x_m\in D\), which fluctuates randomly from one realization of the potential to another. The probability density of the position of the minimum is defined as \(\mathcal{P}(x)=\overline{\delta (x-x_m)}\). From now on, the bar denotes the expectation with respect to realizations of the random process (“potential disorder”):

This leads to the fundamental relation

Therefore, calculating \(\mathcal{P}(x)\) amounts to (i) performing the disorder average of the Boltzmann-Gibbs measure (38) and (ii) evaluating its zero-temperature limit. The second step is highly non-trivial, due to a phase transition occurring at some finite value \(\beta =\beta _c\). In our previous work on decaying Burgers turbulence [49], which turns out to be a limiting case of the present problem (see Sect. 4.3), we already succeeded in implementing this program. Following the same strategy, step (i) is performed using the replica method. This is a powerful (albeit not yet mathematically rigorous) heuristic method of the theoretical physics of disordered systems. It amounts to writing \(Z_{\beta }^{-1}=\lim _{n\rightarrow 0} Z_{\beta }^{n-1}\), which, for integer \(n>1\), results in the formal identity

where

Note that \(p_{\beta ,n}(x)\) is not a probability distribution for general n, but becomes one for \(n=0\).

In the next section we show how to calculate the moments \(M_k(\beta )=\int _{D} p_{\beta ,n}(x) x^k \,dx\) for positive integer k and any integer \(n>0\) in the high-temperature phase \(\beta <\beta _{c}\) of the model. By a trivial rescaling of the potential one can always ensure \(\beta _c=1\), by setting \(g=1\) in (1); we assume such a rescaling henceforth. In the range \(\beta <1\) the formulae we obtain for the moments turn out to be easy to continue to \(n=0\). This yields the integer moments of the probability density \(\overline{p_\beta (x)}\) in that phase. There remains, however, the task of continuing those expressions to \(\beta >1\), in order to compute the limit \(\beta \rightarrow \infty \) and extract information about the distribution \(\mathcal{P} (x)\) of the argmin. To perform the continuation, we rely on the freezing transition scenario for logarithmically correlated random landscapes. The underlying idea goes back to [6] and was developed further in [11], leading to explicit predictions. In [12] it was discovered that the duality property appears to play a crucial role. This led to the freezing-duality conjecture (FDC), which was further used in [2, 9, 13, 14, 21, 49]. In brief, the FDC predicts a phase transition at the critical value \(\beta =1\) and amounts to the following principle:

Thermodynamic quantities which for \(\beta <1\) are duality-invariant functions of the inverse temperature \(\beta \), that is remain invariant under the transformation \(\beta \rightarrow \beta ^{-1}\), “freeze” in the low temperature phase, that is retain for all \(\beta >1\) the value they acquired at the point of self-duality \(\beta =1\).
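As a toy sketch of the principle just stated (not of its justification), the freezing rule can be written as an operator. It takes a function known for \(0<\beta\le 1\) and returns its continuation, which keeps the self-dual value \(f(1)\) for all \(\beta>1\). The example function is our illustrative choice. We take N sites carrying Gaussian values of variance \(2\ln N\), a normalization we assume so that \(\beta_c=1\). The annealed high-temperature free energy per \(\ln N\) is then \(-(\beta+1/\beta)\). It is duality-invariant and freezes at \(-2\).

```python
from typing import Callable, Iterable


def freeze(f: Callable[[float], float]) -> Callable[[float], float]:
    """Continue f from 0 < beta <= 1 to beta > 1 by keeping its self-dual value f(1)."""
    def frozen(beta: float) -> float:
        if beta <= 0:
            raise ValueError("beta must be positive")
        return f(beta) if beta <= 1.0 else f(1.0)
    return frozen


def is_duality_invariant(f: Callable[[float], float], betas: Iterable[float],
                         rel_tol: float = 1e-12) -> bool:
    """Check f(beta) == f(1/beta) at the given sample points."""
    return all(abs(f(b) - f(1.0 / b)) <= rel_tol * max(1.0, abs(f(b))) for b in betas)


def rem_free_energy_high_t(beta: float) -> float:
    # Toy example: annealed free energy per ln N for variance 2 ln N (assumed normalisation).
    return -(beta + 1.0 / beta)
```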

Using our explicit formula, we will indeed be able to verify that every integer moment \(M_k(\beta )=\int _{D} p_{\beta ,n=0}(x) x^k \,dx\) of the probability density \(\overline{p_\beta (x)}\) is duality-invariant in the above sense. Each moment can therefore be continued to \(\beta >1\) using the FDC, yielding the moments of the position of the global minimum. An alternative proof is based on the powerful contour integral representation for the moments of the Jacobi ensemble provided by Borodin and Gorin. We thus conjecture that not only all moments, but the whole disorder-averaged Gibbs measure \(\overline{p_\beta (x)}\) freezes at \(\beta =1\). Hence the PDF of the position of the minimum is determined as

similar to the conjecture in [49] in our study of the Burgers equation.

The FDC scenario is not yet proven mathematically in full generality, and has the status of a conjecture supported by physical arguments and available numerics. Nevertheless, a few nontrivial aspects of freezing have recently been verified by rigorous probabilistic analysis; see e.g. [20, 22, 23, 50] for progress in that direction. However, the role of duality has not yet been verified rigorously. Interesting connections to duality in Liouville and conformal field theory [51] remain to be clarified.

In the rest of the paper we give a detailed derivation of the outlined steps of our procedure and an analysis of the results.

3 Calculations Within the Replica Method

Our goal in this section is to develop the method of evaluating the required disorder average and calculating the resulting integrals explicitly in some range of inverse temperatures \(\beta \) for a few instances of the log-correlated random potentials V(x).

3.1 Connections to the \(\beta \)-Jacobi Ensemble

3.1.1 GUE Characteristic Polynomial

In that case we will follow the related earlier study in [21] and use the family of weight factors \(\mu (x) = \rho (x)^q\) on the interval \(x \in [-1,1]\), with \(\rho (x)=\frac{2}{\pi } \sqrt{1-x^2}\) and parameter \(q>0\). Such a choice of the weight is justified a posteriori by the possibility to find within this family a duality-invariant expression for the moments in the high-temperature phase which is central for our method to work. Since here we are interested in the maximum of the characteristic polynomial we will define the potential \(V(x)=- \phi _{N}(x)\) where \(\phi _{N}(x)\), defined in Prediction 1, is not strictly a Gaussian field. Nevertheless due to the Krasovsky formula (10) we can write asymptotically in the limit of large \(N\gg 1\)

with \(A_n = [ C(\beta ) (N/2)^{\beta ^2} e^{- \beta C'(0)} 2^{- \beta ^2 (n-1)} ]^n\).

3.1.2 General Scaled Model in [0, 1]

It is easy to see that by proper rescaling the evaluation of the function \(p_{\beta ,n}(x)\) from (42) amounts in the case of both characteristic GUE polynomials and the LCGP process to evaluating particular cases of the following multiple integral defined on the interval [0, 1]:

which is formally the (un-normalized) density of eigenvalues of the \(\beta \)-Jacobi ensemble (28), i.e. \(p_{\beta ,a,b,n}(y) = \mathcal{Z}_n \rho _J(y)\), but with a negative value of the parameter, \(\kappa =- \beta ^2\). In particular, the normalization factor \(\mathcal{Z}_n\) is the Selberg integral (29). Note that both integrals are well defined for \(\beta ^2 < 1\) and positive integer n. They can also be defined for larger \(\beta \) by introducing an implicit small-scale cutoff which modifies the expressions for \(|y_i-y_j|<\epsilon \). However, we will only use the high-temperature regime, and will analytically continue our moment formula to \(n=0\) and \(\beta =1\).

These integrals are associated to the statistical mechanics of a random energy model generated by a LCG field in the interval [0, 1] in presence of boundary charges of strength a and b. More precisely, they appear in the study of the continuum partition function introduced in [12]

where the correlator of V(x) was defined in (13). Here a and b are the two parameters of the model and the factor \(\epsilon ^{\beta ^2}\) ensures that the integer moments \(\overline{Z_\beta ^n}\) are \(\epsilon \)-independent in the high temperature regime. There, these moments \(\overline{Z_\beta ^n} = \mathcal{Z}_n\) are given by the Selberg integral (29). Several aspects of this model, such as freezing, duality and obtaining the PDF of the value of the minimum, were analyzed in [12]. Here we will focus instead on the calculation of the moments of the position y. In each realization of the random potential V they are defined as

and we will be interested in calculating their disorder averages \(\overline{< y^k>_{\beta ,a,b}}\).

3.1.3 Fractional Brownian Motion

For our fBm0 example we take the weight function \(\mu (x)=1\) in (38, 39) on the interval \(x \in [0,L]\), and use the rescaled fBm with \(H=0\) as the random potential: \(V(x)=2 g B^{(\eta )}_0(x)\). Exploiting the Gaussian nature of fBm0 we can easily perform the required average using (19) which yields

Further using the definitions (22, 23) we arrive at

Assuming that all \(x_i>0\) we can write in the limit of vanishing regularization \(\eta \rightarrow 0\):

For convenience we will use the particular value \(g=1\) ensuring a posteriori the critical temperature value \(\beta _c=1\).

It is easy to see how our three examples can be studied within the framework of the model (46):

- GUE characteristic polynomial. We define \(x= 1-2 y\), with \(y \in [0,1]\), and

$$p_{\beta ,n}(x)\, dx = C_n\, p_{\beta ,a,a,n}(y)\, dy, \quad a = \frac{q + \beta ^2}{2} \tag{54}$$

$$C_n = \left[ \left(\frac{2}{\pi }\right)^{q} C(\beta )\, N^{\beta ^2} e^{- \beta C'(0)}\, 2^{1+ q - 2 \beta ^2 (n-1)}\right] ^n \tag{55}$$

- LCGP plus a background potential on the interval [0, 1], defined in (13) and (14). This model is obtained by choosing \(a = \beta {\bar{a}}\) and \(b= \beta {\bar{b}}\).

- fBm0: we define \(x=L y\), with \(y \in [0,1]\), and

$$p_{\beta ,n}(x)\, dx = L^{n(n+1) \gamma + n}\, p_{\beta ,a=2 n \beta ^2,b=0,n}(y)\, dy \tag{56}$$

Although the density \(\rho _J(y)\) of the \(\beta \)-Jacobi ensemble is not known in closed form for finite n, the formulae (33, 34) displayed in the introduction provide an explicit expression for its integer moments

for positive integers k, n and \(\beta ^2<0\). The collection of indices \(\beta ,a,b,n\) now replaces the index J, and some of them may be omitted below when no confusion is possible.

We will now derive the formulae (33, 34) using methods based on Jack polynomials. In a second stage we will continue them analytically to arbitrary n, including \(n=0\), and to \(0<\beta ^2 \le 1\), to obtain moments for the random model of interest as:

3.2 Derivation of the Moment Formula in Terms of Sums over Partitions

To calculate the moments, we now turn to one of the most distinguished bases of symmetric polynomials, the Jack polynomials, named after Henry Jack. They play a central role in our study because their average with respect to the \(\beta \)-Jacobi measure is explicitly known, due to Kadell [52] (see below). As discussed in the book [53] (which contains a reprint of the original article), Jack introduced a special set of symmetric polynomials of n variables \(\mathbf{y}:=(y_1,\ldots ,y_n)\), indexed by integer partitions \(\lambda \) and depending on a real parameter \(\alpha \). He called them \(Z(\lambda )\); in modern notation they are denoted \(J^{(\alpha )}_{\lambda }(\mathbf{y})\), following I. Macdonald, who greatly developed the theory of these and related objects in the book [54]. Another important source of information is Stanley’s paper [55]. In what follows we use notations and conventions from [54] and [55].Footnote 4

Let us recall the definition of a partition \(\lambda =(\lambda _1 \ge \lambda _2 \ge \cdots \ge \lambda _{\ell (\lambda )} >0)\) of the integer k, of length \(\ell (\lambda )\), with \(\lambda _i\) strictly positive integers such that \(|\lambda |:=\sum _{i=1}^{\ell (\lambda )} \lambda _i=k\). It can be written as

from which one usually draws the Young diagram representing the partition \(\lambda \): a collection of unit-area square boxes in the plane, centered at coordinates i along the (descending, southbound) vertical and j along the (eastbound) horizontal. The dual (or conjugate) \(\lambda '\) of \(\lambda \) is the partition whose Young diagram is the transpose of that of \(\lambda \), i.e. its reflection across the (descending) diagonal \(i=j\). Hence \(\lambda '_i\) is the number of j such that \(\lambda _j \ge i\). If \(s=(i,j)\) stands for a square in the Young diagram, one defines the “arm length” \(a_{\lambda }(s)=\lambda _i-j\), which is equal to the number of squares to the east of square s, and the “leg length” \(l_{\lambda }(s)=\lambda _j'-i\), the number of squares to the south of square s. One then defines the product

which will be used later on.Footnote 5
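The combinatorial definitions above (dual partition, arm and leg lengths) are easy to get wrong by one. The following minimal Python sketch encodes them literally, with boxes indexed as \(s=(i,j)\), row i counted southbound and column j eastbound, both starting at 1. The function names are ours and purely illustrative.

```python
from typing import List, Tuple

Partition = Tuple[int, ...]   # strictly positive parts, weakly decreasing
Box = Tuple[int, int]         # s = (i, j): row i (southbound), column j (eastbound), 1-based


def conjugate(lam: Partition) -> Partition:
    """Dual partition: lambda'_i is the number of j with lambda_j >= i."""
    if not lam:
        return ()
    return tuple(sum(1 for part in lam if part >= i) for i in range(1, lam[0] + 1))


def boxes(lam: Partition) -> List[Box]:
    """All boxes of the Young diagram, listed row by row."""
    return [(i, j) for i, row in enumerate(lam, start=1) for j in range(1, row + 1)]


def arm(lam: Partition, s: Box) -> int:
    """a_lambda(s) = lambda_i - j: number of boxes east of s."""
    i, j = s
    return lam[i - 1] - j


def leg(lam: Partition, s: Box) -> int:
    """l_lambda(s) = lambda'_j - i: number of boxes south of s."""
    i, j = s
    return conjugate(lam)[j - 1] - i


lam = (3, 1)
print(conjugate(lam))                                     # (2, 1, 1)
print([(arm(lam, s), leg(lam, s)) for s in boxes(lam)])   # [(2, 1), (1, 0), (0, 0), (0, 0)]
```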

Given a partition \(\lambda \), one defines, in the theory of symmetric functions, the monomial symmetric functions \(m_\lambda (\mathbf{y})\) over n variables \(\mathbf{y}= \{y_r\}\), \(r=1,\ldots ,n\), as \(m_\lambda (\mathbf{y})=\sum _\sigma \prod _{r=1}^n y_{\sigma (r)}^{\lambda _r}\), where \(\lambda _r=0\) for \(r>\ell (\lambda )\) and the summation is over all permutations \(\sigma \) of the variables that give distinct monomials. For example, for the partition (211) of \(k=4\) and \(n=3\) variables, \(m_{(211)}(\mathbf{y})=y_1^2 y_2 y_3 + y_1 y_2^2 y_3 + y_1 y_2 y_3^2\). Another useful set of symmetric functions, of obvious importance for the calculation of moments, are the power sums:

which form a basis of the ring of symmetric functions.
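As a concrete check of the definitions of \(m_\lambda \) and \(p_\lambda \), the sketch below evaluates both numerically for a given list of variables. It assumes the standard convention of [54], \(p_\lambda =\prod _i p_{\lambda _i}\) with \(p_k(\mathbf{y})=\sum _r y_r^k\). Note that the k-th moment of the empirical density of the \(y_r\) is then simply \(p_k(\mathbf{y})/n\). The helper names are ours.

```python
from itertools import permutations
from math import prod
from typing import Sequence, Tuple


def monomial(lam: Tuple[int, ...], y: Sequence[float]) -> float:
    """m_lambda(y): sum of y^e over the distinct rearrangements e of (lambda_1, ..., 0, ..., 0)."""
    n = len(y)
    if len(lam) > n:
        return 0  # m_lambda vanishes when lambda has more parts than there are variables
    exponents = tuple(lam) + (0,) * (n - len(lam))
    return sum(
        prod(yr ** e for yr, e in zip(y, perm))
        for perm in set(permutations(exponents))  # set() keeps each distinct monomial once
    )


def power_sum(lam: Tuple[int, ...], y: Sequence[float]) -> float:
    """p_lambda(y) = prod_i p_{lambda_i}(y), with p_k(y) = sum_r y_r**k."""
    return prod(sum(yr ** part for yr in y) for part in lam)


y = (2, 3, 5)
print(monomial((2, 1, 1), y))   # 300 = y1 y2 y3 (y1 + y2 + y3)
print(power_sum((1,), y) ** 2, monomial((2,), y) + 2 * monomial((1, 1), y))  # 100 100
```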

Define the following scalar product, which depends on a real parameter \(\alpha \) as

where \(q_i=q_i(\lambda )\) is the number of rows in \(\lambda \) whose length is equal to i. Here and below we suppress the arguments of all symmetric polynomials except when explicitly needed, for example \(p_\lambda (\mathbf{y}) \rightarrow p_\lambda \). The Jack functions \(J^{(\alpha )}_\lambda \) obey the following properties: (i) orthogonality with respect to the above scalar product; (ii) a fixed coefficient of highest degree in the monomial basisFootnote 6
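The multiplicities \(q_i\) enter through the combinatorial factor \(z_\lambda =\prod _i i^{q_i} q_i!\). A minimal sketch follows. It assumes the standard Macdonald form of the scalar product on power sums, \(\langle p_\lambda ,p_\mu \rangle _\alpha =\delta _{\lambda \mu } z_\lambda \alpha ^{\ell (\lambda )}\), and uses exact rational arithmetic. The function names are ours.

```python
from collections import Counter
from fractions import Fraction
from math import factorial, prod
from typing import Tuple


def z(lam: Tuple[int, ...]) -> int:
    """z_lambda = prod_i i**q_i * q_i!, with q_i the number of rows of length i."""
    q = Counter(lam)
    return prod(i ** qi * factorial(qi) for i, qi in q.items())


def scalar_product_p(lam, mu, alpha) -> Fraction:
    """<p_lambda, p_mu>_alpha in the power-sum basis (assumed Macdonald normalization)."""
    if sorted(lam, reverse=True) != sorted(mu, reverse=True):
        return Fraction(0)  # power sums are orthogonal for distinct partitions
    return z(lam) * Fraction(alpha) ** len(lam)


print(z((2, 1, 1)))                                        # 4
print(scalar_product_p((2, 1), (2, 1), Fraction(1, 2)))    # 1/2
```

A useful sanity check is the classical identity \(\sum _{|\lambda |=k} 1/z_\lambda =1\).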

We can go from the basis of power sums to the Jack polynomial basis by the following linear transformations:

where the coefficients are in general complicated. Note that the k-th moment that we are interested in is precisely the Jacobi ensemble average

where (k) denotes the partition with only one row, of length k. Hence we need only the coefficient \(\gamma ^\lambda _{\nu =(k)}\). As we now show, this coefficient can be expressed in terms of \(\theta ^\lambda _{\nu =(k)}\). Indeed, the following scalar product can be written in two ways, first as

and also as

Hence, comparing we obtain

valid for arbitrary partitions \(\mu \) and \(\lambda \), which we apply to \(\mu =(k)\).

Now it turns out that \(\theta ^\lambda _{(k)}(\alpha )\) is known to be equal to:

if \(|\lambda |=k\) and zero otherwise, (see p 383 Ex. 1 (b) in [54] and (19) in [56]), where \(a'_\lambda (s) = j-1\) and \(l'_\lambda (s)=i-1\) are respectively called the co-arm and co-leg lengths of the partition \(\lambda \), and the product does not include the box \(s=(1,1)\).
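For concreteness, the coefficient can be evaluated as the primed product \(\theta ^\lambda _{(k)}(\alpha )=\prod '_{s} (\alpha a'_\lambda (s) - l'_\lambda (s))\) over the boxes \(s\ne (1,1)\). This is the form we take from [54], and the sketch below should be read under that assumption. Two checks follow from the known expansions \(J^{(\alpha )}_{(2)}=p_1^2+\alpha p_2\) and \(J^{(\alpha )}_{(1,1)}=p_1^2-p_2\). A further check is that at \(\alpha =1\) the coefficient must vanish for every partition that is not a hook, since \(J^{(1)}_\lambda \) is proportional to a Schur function.

```python
from fractions import Fraction
from typing import Tuple


def theta_k(lam: Tuple[int, ...], alpha) -> Fraction:
    """Coefficient of p_(k) in J_lambda^(alpha) for |lambda| = k:
    product over boxes s = (i, j) != (1, 1) of (alpha * a'(s) - l'(s)),
    with co-arm a'(s) = j - 1 and co-leg l'(s) = i - 1."""
    alpha = Fraction(alpha)
    result = Fraction(1)
    for i, row in enumerate(lam, start=1):
        for j in range(1, row + 1):
            if (i, j) == (1, 1):
                continue  # the primed product skips the corner box
            result *= alpha * (j - 1) - (i - 1)
    return result


alpha = Fraction(3, 2)
print(theta_k((2,), alpha), theta_k((1, 1), alpha))   # 3/2 -1
print(theta_k((2, 2), 1))                             # 0  (not a hook)
```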

Positive moments: this leads to the explicit result for the positive k-th moment in terms of an average of the Jack polynomial associated to the partition (k):

(see Appendix 6 for an alternative rewriting of this formula).

The problem therefore amounts to evaluating the Jacobi average of \(J^{(\alpha )}_{\lambda }(\mathbf{y})\). Such averages were evaluated for a general partition \(\lambda \) for a differently normalized set of Jack polynomials, denoted by Macdonald as \(P^{(\alpha )}_{\lambda }(\mathbf{y})\), which are related to the \(J^{(\alpha )}_{\lambda }(\mathbf{y})\) as follows

Namely, as was conjectured by Macdonald in [54], and proved by Kadell [52], there exists a closed form expression for \(P^{(\alpha )}_{\lambda }(\mathbf{y})\) integrated with the (unnormalized) Jacobi density over the hypercube \(\mathbf{y}\in [0,1]^n\) with the correspondence

It is given by

where

For the empty partition \(\lambda =(0)\) we have \(P^{(1/\kappa )}_{(0)}(\mathbf{y})=1\) and the above integral reduces to the Selberg integral (29).
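The Selberg integral (29) is the normalization used throughout. A minimal numerical sketch is given below. It assumes the unnormalized Jacobi weight \(\prod _i y_i^a(1-y_i)^b \prod _{i<j}|y_i-y_j|^{2\kappa }\) on \([0,1]^n\); the exponent \(2\kappa \) is the one consistent with the Laguerre normalization \(\mathcal{Z}^L_n\) quoted in Sect. 4.1. The sketch uses the classical product formula and works with log-Gamma to avoid overflow, so it is restricted to \(a,b>-1\) and \(\kappa >0\).

```python
from math import exp, lgamma


def selberg(n: int, a: float, b: float, kappa: float) -> float:
    """Z_n = prod_{j=0}^{n-1} Gamma(1+a+j k) Gamma(1+b+j k) Gamma(1+(j+1) k)
                              / [Gamma(2+a+b+(n+j-1) k) Gamma(1+k)],  with k = kappa.
    Assumes a, b > -1 and kappa > 0, so that every Gamma argument is positive."""
    log_z = 0.0
    for j in range(n):
        log_z += (
            lgamma(1 + a + j * kappa)
            + lgamma(1 + b + j * kappa)
            + lgamma(1 + (j + 1) * kappa)
            - lgamma(2 + a + b + (n + j - 1) * kappa)
            - lgamma(1 + kappa)
        )
    return exp(log_z)


# n = 1 reduces to the Beta function B(a+1, b+1); here B(3, 4) = 1/60
print(selberg(1, 2, 3, 0.7))
```

For \(n=2\), \(\kappa =1\) the result can be checked by hand, e.g. \(\int _{[0,1]^2}(y_1-y_2)^2\,d\mathbf{y}=1/6\).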

Recalling the definition of the Jacobi ensemble average (30) and taking the ratio of (74) to (29), we obtain the average of the \(P^{(1/\kappa )}(\mathbf{y})\) polynomial and, from it, the average of \(J^{(1/\kappa )}(\mathbf{y})\). The calculation is detailed in Appendix 5 and the final result is simple and explicit for arbitrary partition \(\lambda \)

Putting together Eqs. (70, 71) and (76) we obtain the k-th moment as

where \(\alpha \) should be replaced by \(1/\kappa \) according to (73). Using the explicit expressions for the normalization constants (211) and (212) in Appendix 4 and the above definitions of the co-arm and co-leg, one obtains the formula (33, 34, 35), for the positive moments given in Sect. 2.2.Footnote 7

We have also used:

where the prime indicates that \(i=j=1\) is excluded from the product.

Equivalently, we can rewrite the expression (77) for the moments in a “geometric” form which involves only products over boxes in the Young diagrams, see (91, 92) below.

Negative moments: these can be obtained by applying (70), before averaging, to the inverse variables (with \(k \ge 0\))

where we denote \((\frac{1}{\mathbf{y}}) \equiv (\frac{1}{y_1},\ldots ,\frac{1}{y_n})\). We now use the following relation between Jack polynomials, valid for \(n \ge \ell (\lambda )\)

see [30] p. 643, where l is (a priori) any integer \(l \ge \lambda _1\) and one denotes

the partition of length n. Using (72) in (80), inserting (81), using again (72), one can now average over the Jacobi measure as follows

where the average on the right is over a shifted Jacobi measure with parameters \(a-l,b,\kappa \), to account for the prefactor in (81). For the same reason the ratio of Selberg integrals appears, since it is the normalization of the Jacobi measure. We can now use the explicit expressions (29) and (76). One finds, after a tedious calculation, similar in spirit to the one described above for the positive moments, the formula (33), (34) for the negative moments given in Sect. 2.2 with

where a priori l is a sufficiently large integer. In practice we found that for generic values of \(\kappa \) the final result is independent of the choice of l (for each partition), hence we chose \(l=0\), which leads to the simplest expression (36) given in Sect. 2.2.

We now discuss an interesting and useful alternative representation for the (positive and negative) integer moments in terms of contour integrals.

3.3 Borodin-Gorin Contour Integral Representation of the Moments

3.3.1 Positive Moments

Recently Borodin and Gorin proved integral representations for the moments of the Jacobi \(\beta \)-ensemble. We refer to Appendix 1 for the derivation and present here a summary of the results in our notation. They call these moments \(\frac{1}{N} M_k(\theta ,N,M,\alpha )\) and the correspondence from their four parameters to ours is:

which leads to the correspondence

Translated in our parameters their moment formula for positive integer n reads:

which must be supplemented with the conditions, which we call \(C_1\): \(|u_1| \ll |u_2| \ll |u_3| \cdots \ll |u_k|\) and \(C_2\): all the contours enclose the singularities at \(u_i = - (1-n) \beta ^2\) and not at \(u_i = 2 + a + b + \beta ^2 (1-n)\). These conditions imply that one can first perform the integral on \(u_1\), only around the pole \(u_1 = - (1-n) \beta ^2\), and then iteratively on \(u_2,u_3,\ldots \).

We will investigate below the properties of this representation in the context of the models we study here.

3.3.2 Negative Moments

Remarkably, Borodin and Gorin also proved a contour integral formula for negative moments, whenever they exist. In our notations, for \(k \ge 1\), it reads

which must be supplemented with the conditions (i) \(C_1\): \(|u_1| \ll |u_2| \ll |u_3| \cdots \ll |u_k|\) and (ii) \(C_2\): all the contours enclose the singularities at 0 and not at a.

3.4 Duality

Let us now discuss an important property of these moments: duality.

3.4.1 Statement of the Duality on the Moments

For clarity let us first recall the property of duality-invariance unveiled in [12]. Consider first a thermodynamic quantity \(O_\beta \) obtained in the replica formalism in the limit \(n=0\). This quantity is said to be duality-invariant if it is well defined in the high temperature region \(\beta <1\) and its temperature dependence is given by a function \(f(\beta )\) which is known analytically and satisfies \(f(\beta '=1/\beta )=f(\beta )\). The simplest example of such quantities is the mean free energy for Gaussian log-correlated models, for which \(f(x)=x+ 1/x\). More complicated examples are presented in [12], see e.g. (13, 14) there. Note that this does not imply anything about the behaviour of \(O_\beta \) for \(\beta >1\); it is strictly a property of the high temperature phase. However, the property of duality invariance is not restricted to the replica limit \(n=0\). In [12], by considering the generating functions for the moments of the partition function, one notices that duality invariance can be extended to finite n by further requiring \(n' \beta '=n \beta \) (see (25) there, where s should be identified with \(- n \beta \)).

In the present model, there are more parameters and we can formulate the duality-invariance property for the moments as follows:

Duality-invariance property

which, again, should be understood in the sense of analytical continuation (i.e. it does not provide a mapping from the high to low temperature region as discussed above).

3.4.2 Checking and Proving Duality for Moments

The duality property (90) can be checked on the explicit formula (33–35), derived in this paper. Here we focus on positive moments, but similar considerations hold for negative ones, when they exist. To see it explicitly it is convenient to rewrite that formula in a “geometric” form involving only products over boxes in the Young diagrams, as

where

where \(\epsilon \) has been introduced only to remove box (1,1) from one of the products and the limit \(\epsilon \rightarrow 0\) is trivial. For application to the present purpose we need to set \(\kappa =-\beta ^2\).

The duality is easy to check on that formula and corresponds to the exchange of the partition \(\lambda \) with its dual \(\lambda '\). Indeed, one has

where \(s'=(j,i)\) is the box in the dual diagram conjugate to \(s=(i,j)\), which implies that arm lengths and leg lengths are also exchanged under duality. A similar observation of a duality-invariant sum over partitions was reported very recently in [14] for a related problem, concerning the value at the minimum of a log-correlated field.
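The exchange of arm and leg lengths under \(\lambda \rightarrow \lambda '\) can be checked mechanically on all partitions of small size. The sketch below, with helper names of our own choosing, verifies \(a_\lambda (s)=l_{\lambda '}(s')\) and \(l_\lambda (s)=a_{\lambda '}(s')\) for every box, with \(s'=(j,i)\). This box-level statement underlies the duality of the “geometric” formula.

```python
from typing import Dict, Iterator, Optional, Tuple

Partition = Tuple[int, ...]


def partitions(k: int, max_part: Optional[int] = None) -> Iterator[Partition]:
    """All partitions of k with parts <= max_part, in decreasing lexicographic order."""
    if max_part is None:
        max_part = k
    if k == 0:
        yield ()
        return
    for first in range(min(k, max_part), 0, -1):
        for rest in partitions(k - first, first):
            yield (first,) + rest


def conjugate(lam: Partition) -> Partition:
    """Dual partition: lambda'_i = #{j : lambda_j >= i}."""
    return tuple(sum(1 for p in lam if p >= i) for i in range(1, lam[0] + 1)) if lam else ()


def arm_leg(lam: Partition) -> Dict[Tuple[int, int], Tuple[int, int]]:
    """Map each box (i, j) to its (arm, leg) pair."""
    lam_c = conjugate(lam)
    return {
        (i, j): (lam[i - 1] - j, lam_c[j - 1] - i)
        for i, row in enumerate(lam, start=1)
        for j in range(1, row + 1)
    }


def arms_and_legs_swap(lam: Partition) -> bool:
    """True if (arm, leg) at s in lambda equals (leg, arm) at s' = (j, i) in lambda'."""
    al, al_dual = arm_leg(lam), arm_leg(conjugate(lam))
    return all(al_dual[(j, i)] == (l, a) for (i, j), (a, l) in al.items())


print(all(arms_and_legs_swap(lam) for k in range(1, 11) for lam in partitions(k)))  # True
```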

Another proof of the duality invariance property for the moments was provided in the recent work of Borodin and Gorin (BG). They proved that these moments are rational functions of their four arguments, and that the corresponding analytical continuation in these arguments satisfies an invariance property, which is equivalent to the above duality-invariance (90) under the correspondence (86).

3.4.3 Consequence of the Duality-Invariance: Freezing

Let us now examine the implications of the relation (90) for the three examples studied in this paper, showing that a freezing transition at \(\beta =1\) is expected in all cases.

For the GUE problem \(a=b=\frac{q+\beta ^2}{2}\), and one finds that the duality invariance in terms of the parameter q can be written as:

The choice \(q=1\) thus ensures duality-invariance of the moments for arbitrary \(\beta <1\) and again implies freezing at \(\beta =1\).

More generally, starting from Jacobi ensemble measure (28) one can ask how to choose a and b so that the model exhibits the duality-invariance. Consider two (otherwise arbitrary) duality-invariant functions of \(\beta \), \({\bar{a}}(\beta )\) and \({\bar{b}}(\beta )\), i.e. satisfying \({\bar{a}}(1/\beta )={\bar{a}}(\beta )\) and \({\bar{b}}(1/\beta )={\bar{b}}(\beta )\), and choose:

Then the moments are self-dual. Our second example Eqs. (13) and (14) of a log-correlated potential in presence of a background potential, corresponds to the case of temperature independent constants \({\bar{a}}\) and \({\bar{b}}\) and its moments are thus duality-invariant.

Finally, for the fBm0 one has \(a=2 n \beta ^2\) and \(b=0\). One checks from (90) that the fBm0 satisfies duality invariance for arbitrary n. In the replica limit \(n=0\) we must set \(a=b=0\), which is a self-dual point; hence for this model the moments obey the following duality-invariance for \(\beta <1\)

so that according to the FDC, one should expect them to exhibit freezing at \(\beta =1\).

3.5 \(n=0\) Limit of the Moments Formula

The moment formulae obtained above, as well as their contour integral representation, are explicit enough to allow for analytic continuation to \(n=0\).

3.5.1 Replica Limit of Sums over Partitions.

Consider the formula (33, 34) inserting \(\kappa =-\beta ^2\). The limit \(n \rightarrow 0\) is straightforward, except for one of the factors in the second line for which we use:

This leads to the following formula for the disorder averages of the moments (48) of the general scaled disordered statistical mechanics model (47)

with

This formula is valid in the high temperature phase of the model, \(\beta <1\), where all the factors are clearly finite and non-zero. We will study explicitly below a few moments and their temperature dependence. Note that in the limit \(\beta \rightarrow 0\) one recovers the moments (37) (i.e. with the weight \(P^0(y)\)).

The moments of the position of the scaled minimum of the potential as discussed above are recovered in the zero temperature limit \(\beta =+\infty \) of the statistical mechanics model. According to the FDC (see section) these moments are equal to their value at the freezing transition \(\beta =1\). Hence they can be obtained by taking as limits

of the above expression (98–100). However, in this expression one easily sees that the factor \(C^\lambda _2\) has poles at \(\beta =1\), while \(C^\lambda _1\) is regular and has a finite limit. Examination of low moments, detailed below, shows massive cancellations of these poles, leading to a well defined finite limit. As shown below, using the contour integral representation, this limit is indeed finite for any moment. Note that the poles in the limit \(\beta \rightarrow 1\) are also present for \(n>0\), so consideration of finite n does not help to handle these cancellations.

The formula (101) together with formulae (98–100) thus gives arbitrary positive integer moments of the position of the global minimum of the log-correlated process, and as such is a main result of our paper. These formulae can be used to generate the moments to high order by computer.

3.5.2 Contour Integral Representation of Moments for \(n=0\).

(i) Positive moments. To take the limit \(n=0\) in the contour integral formula (87) we rewrite

Inserting the first term in the contour integral gives zero. Next, it is easy to see that only the pole in \(u_1\) gives a non-zero residue. Hence we can insert only this term in the integral (87), where we can now safely take \(n=0\), leading to the following representation for the disorder average:

where we have shifted \(u_i \rightarrow u_i - \beta ^2\) for convenience. This again must be supplemented with the condition (i) \(C_1:\) \(|u_1| \ll |u_2| \ll |u_3| \cdots \ll |u_p|\) and (ii) \(C_2\): all the contours enclose the singularities at \(u_1 = 0\) but not at \(u_i = 2 +a+b+2 \beta ^2\). In practice the contours will run (and close) in the negative half plane \(Re(u_i)<0\) and pick up residues from poles on the negative real line. We note that one needs the condition \( 2 +a+b+2 \beta ^2 >0\) which, for \(a=b\) is precisely the one found in [12] corresponding to a binding transition to the edge (for \(a< -1 - \beta ^2\)). We will thus assume that the condition is fulfilled, which is the case for all three examples considered here.

The limit \(\beta \rightarrow 1\) can be performed explicitly leading to a contour integral representation for the positive integer moments of the position of the global extremum of the log-correlated field

with the same contour conditions \(C_1\) and \(C_2\). This formula should thus be equivalent to our main result (98–101), which we have checked for a few low order moments.

(ii) Negative moments. The same manipulation as above in the limit \(n \rightarrow 0\) gives the disorder averaged moments for \(k \ge 1\):

provided these moments exist. Taking again the limit \(\beta \rightarrow 1\) one obtains the negative integer moments of the position of the global extremum of the log-correlated field as

for k positive integer, provided they exist. In both integrals the contours obey the two conditions (i) \(C_1\): \(|u_1| \ll |u_2| \ll |u_3| \cdots \ll |u_k|\) and (ii) \(C_2\): all the contours enclose the singularities at 0 and not at a. At present there is no equivalent formula in terms of sums over partitions, hence the above formula is an important result of the paper.

We now turn to an explicit study of the low moments.

3.6 Calculation and Results for the First Moment

Let us illustrate the calculation using the contour integral (87) on the simplest example of the first moment \(k=1\)

which is equal to the residue at \(u_1=- (1-n) \beta ^2\). In terms of partitions only the partition \(\lambda =(1)\) contributes, so it is easy to see on (33, 34), and even more immediately on (91, 92) [using \(i=j=1\), \(a_\lambda =l_\lambda =0\)], that it reproduces (71). One can explicitly verify on this result that the first moment is invariant under the duality transformation (90).

The \(n=0\) limit yields the disorder averaged first moment

For the symmetric situation \(a=b\), which is the case both for the fBm \(a=b=0\) and for the GUE characteristic polynomial \(a=b=\frac{1 + \beta ^2}{2}\) the first moment is thus simply

In the second example of the LCGP with edge charges one obtains:

The duality-freezing conjecture then leads to the first moment of the position of the minimum

which is Eq. (15) in Prediction 2.

These results can be compared to the first moment in absence of the random potential and at finite inverse temperature \(\beta \), i.e. from the measure \(P^0(y)|_{a=\beta {\bar{a}}, b=\beta {\bar{b}}}\)

which reproduces the absolute minimum \(y_m^0={\bar{a}}/({\bar{a}}+{\bar{b}})\) in the absence of disorder for \(\beta =+\infty \). Comparing with (111) shows that even at the freezing temperature \(\beta =1\), disorder brings the average position closer to the midpoint \(y=\frac{1}{2}\).
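As an illustration of these statements, the sketch below takes for the first moment of the \(\beta \)-Jacobi ensemble the classical Aomoto formula \(\langle y\rangle =\frac{a+1+(n-1)\kappa }{a+b+2+2(n-1)\kappa }\), for the weight with Vandermonde exponent \(2\kappa \). We assume this is the content of (71). The sketch continues the formula to \(n=0\) with \(\kappa =-\beta ^2\) and sets \(a=\beta {\bar{a}}\), \(b=\beta {\bar{b}}\) for the LCGP with edge charges. It then checks the properties stated above: the value 1/2 for \(a=b\), invariance under \(\beta \rightarrow 1/\beta \), and the comparison with the disorder-free measure \(P^0\). The function names are ours.

```python
from fractions import Fraction


def jacobi_first_moment(n, a, b, kappa):
    """Aomoto's formula for <(1/n) sum_i y_i> in the beta-Jacobi ensemble with
    weight y^a (1-y)^b |Delta|^(2 kappa), read as a rational function of n."""
    return (a + 1 + (n - 1) * kappa) / (a + b + 2 + 2 * (n - 1) * kappa)


def disordered_first_moment(beta, a, b):
    """Replica limit n -> 0 with kappa = -beta^2 (high-temperature phase, beta <= 1)."""
    return jacobi_first_moment(0, a, b, -beta ** 2)


def lcgp_first_moment(beta, abar, bbar):
    """Edge-charge model: a = beta * abar, b = beta * bbar."""
    return disordered_first_moment(beta, beta * abar, beta * bbar)


def clean_first_moment(beta, abar, bbar):
    """No disorder: average of y over P^0(y) ~ y^a (1-y)^b, i.e. n = 1 and no Vandermonde."""
    return jacobi_first_moment(1, beta * abar, beta * bbar, 0)


one = Fraction(1)
print(lcgp_first_moment(one, 1, 0), clean_first_moment(one, 1, 0))  # 3/5 2/3
```

For \({\bar{a}}=1\), \({\bar{b}}=0\), the disordered value 3/5 at \(\beta =1\) lies closer to the midpoint than both the clean value 2/3 at the same temperature and the clean zero-temperature value \({\bar{a}}/({\bar{a}}+{\bar{b}})=1\).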

3.7 Results for Second, Third and Fourth Moments

The calculation of the second moment by the contour integral method is relatively simple, and sketched in the Appendix 2 Sect. 1 for \(n=0\). It leads to the disorder average:

This expression is also easily recovered from the sum (33, 34), or (91, 92) involving now the two partitions (2) and (1, 1). For \(\beta =0\) it reproduces (37), i.e. the trivial average with respect to the weight \(P^0(y) \sim y^a(1-y)^b\). The expression (113) is duality-invariant and we expect that it freezes at \(\beta =1\) leading to the predictions for the second moment of the position of the extremum.

Let us now detail the results for each example separately, including moments up to \(k=4\) when space permits, more detailed derivations and results being displayed in the Appendix 2.

3.7.1 Log-Correlated Potential with Edge Charges \({\bar{a}},{\bar{b}}\).

The second moment of the position of the global minimum is obtained from above asFootnote 8

This leads to the variance (16) in Prediction 2, replacing there \(x \rightarrow y\). Expressions for higher moments for general \({\bar{a}},{\bar{b}}\) are too bulky to present here, and we only display the third moment in (192) and the skewness in (193).

Two special cases are of interest:

-

(i)

only one edge charge, at \(y=0\): One sets \({\bar{b}}=0\). Let us give here the skewness in that case

$$\begin{aligned} Sk := \frac{\overline{(y_m - \overline{y_m})^3}}{\overline{(y_m - \overline{y_m})^2}^{~\frac{3}{2}}} = -\frac{{\bar{a}} ({\bar{a}}+5) ({\bar{a}} (7 {\bar{a}}+68)+164)}{\sqrt{2} \sqrt{{\bar{a}}+2} ({\bar{a}}+6)^2 (2 {\bar{a}}+9)^{3/2}} \end{aligned}$$(115)

which is negative. It can be compared with the skewness associated with the measure \(\sim y^{{\bar{a}}}\), which is

$$\begin{aligned} Sk_0 = -\frac{2 {\bar{a}} \sqrt{{\bar{a}}+3}}{\sqrt{{\bar{a}}+1} ({\bar{a}}+4)} \end{aligned}$$(116)

One finds that Sk decreases from 0 to \(-7/4\) as \({\bar{a}}\) increases, while \(Sk_0\) decreases from 0 to \(-2\); hence they are quite distinct.

-

(ii)

symmetric case \({\bar{a}}={\bar{b}}\). The variance is obtained as:

$$\begin{aligned} \overline{y_m^2} - \overline{y_m}^2 = \frac{4 {\bar{a}}+9}{4 (2 {\bar{a}}+5)^2} \end{aligned}$$(117)

where we recall that \(\overline{y_m}=\frac{1}{2}\); we have also checked explicitly that the moment formulae lead to

$$\begin{aligned} \overline{ \bigg ( y_m - \frac{1}{2} \bigg )^3 } = 0 \end{aligned}$$(118)

as expected from the symmetry \(y \rightarrow 1-y\). The fourth moment and kurtosis are obtained as (with \(a={\bar{a}}\), as appropriate at \(\beta =1\))

$$\begin{aligned}&\overline{ y_m^4 } = \frac{4 a^5+84 a^4+663 a^3+2488 a^2+4478 a+3110}{4 (a+3) (2 a+5)^2 (2 a+7)^2} \end{aligned}$$(119)

$$\begin{aligned}&\mathrm{Ku} = -\frac{2 \left( 8 a^5+248 a^4+2054 a^3+7328 a^2+12053 a+7523\right) }{(a+3) (2 a+7)^2 (4 a+9)^2} \end{aligned}$$(120)

The kurtosis can be compared to that of the measure \(y^{{\bar{a}}} (1-y)^{{\bar{a}}}\), which is:

$$\begin{aligned} \mathrm{Ku}_0 = - \frac{6}{5+2 {\bar{a}}} \end{aligned}$$(121)

Note that when \({\bar{a}}\) increases, \(\mathrm{Ku} \rightarrow -1/4\) while \(\mathrm{Ku}_0 \rightarrow 0\).
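The disorder-free comparison values (116) and (121) are the skewness and excess kurtosis of a Beta distribution. They can be checked exactly from the elementary moments \(\langle y^k\rangle =\prod _{j=0}^{k-1}\frac{a+1+j}{a+b+2+j}\) of the normalized weight \(y^a(1-y)^b\). The sketch below does this and also evaluates the disordered skewness (115) at large \({\bar{a}}\) to confirm its limit \(-7/4\). The helper names are ours.

```python
from fractions import Fraction
from math import sqrt


def beta_moment(k, a, b):
    """<y^k> for the normalized weight y^a (1-y)^b on [0, 1]."""
    m = Fraction(1)
    for j in range(k):
        m *= Fraction(a + 1 + j) / (a + b + 2 + j)
    return m


def shape(a, b):
    """(variance, third central moment, excess kurtosis) of y^a (1-y)^b."""
    m1, m2, m3, m4 = (beta_moment(k, a, b) for k in range(1, 5))
    var = m2 - m1 ** 2
    mu3 = m3 - 3 * m1 * m2 + 2 * m1 ** 3
    mu4 = m4 - 4 * m1 * m3 + 6 * m1 ** 2 * m2 - 3 * m1 ** 4
    return var, mu3, mu4 / var ** 2 - 3


def sk0(abar):
    """Eq. (116): skewness of the measure ~ y^abar."""
    return -2 * abar * sqrt(abar + 3) / (sqrt(abar + 1) * (abar + 4))


def sk_disordered(abar):
    """Eq. (115): skewness of y_m with a single edge charge abar at y = 0."""
    num = abar * (abar + 5) * (abar * (7 * abar + 68) + 164)
    den = sqrt(2) * sqrt(abar + 2) * (abar + 6) ** 2 * (2 * abar + 9) ** 1.5
    return -num / den


abar = 3
var, mu3, _ = shape(abar, 0)
print(float(mu3) / float(var) ** 1.5, sk0(abar))          # both give Sk_0 at abar = 3
print(shape(abar, abar)[2], Fraction(-6, 5 + 2 * abar))   # -6/11 -6/11
print(sk_disordered(1e6))                                 # close to -7/4
```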

3.7.2 GUE Characteristic Polynomial

For the GUE-CP we must insert \(a=b=\frac{1+\beta ^2}{2}\) in (92). The second moment for the associated statistical mechanics model in the high temperature phase \(\beta <1\), and for the position of the global minimum (obtained by setting \(\beta =1\)), are then found to be:

For completeness the expression at finite n is given in (191). In the original variable \(x \in [-1,1]\), i.e. the support of the semi-circle, using \(x=1-2 y\) and the result for the first moment we obtain:

where we now expect the PDF of the position of the maximum, \(\mathcal{P}(x)\), to be centered and symmetric around \(x=0\) (which was checked explicitly up to the fifth moment).

We give directly the fourth moment of the position of the maximum (see 1 for finite temperature expressions)

which leads to the fourth cumulant and kurtosis as:

These moments can be compared with the ones of the semi-circle density

which are:

and are found to be quite close, suggesting that \(\mathcal{P}(x)\) is distinct from, but numerically close to, the semi-circle density.
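For reference, the even moments of the semi-circle density on \([-1,1]\) are Catalan numbers divided by \(4^k\). Here \(\rho (x)\) is taken as \((2/\pi )\sqrt{1-x^2}\), which is the normalization we assume in this section. This gives \(\langle x^2\rangle _\rho =1/4\), \(\langle x^4\rangle _\rho =1/8\), a fourth cumulant \(-1/16\) and an excess kurtosis \(-1\). The sketch below computes these values exactly and cross-checks them by a midpoint quadrature after the substitution \(x=\cos \theta \).

```python
from fractions import Fraction
from math import comb, cos, pi, sin


def semicircle_even_moment(k: int) -> Fraction:
    """<x^(2k)> for rho(x) = (2/pi) sqrt(1 - x^2) on [-1, 1]: Catalan(k) / 4**k."""
    catalan = comb(2 * k, k) // (k + 1)
    return Fraction(catalan, 4 ** k)


def semicircle_average(f, n_points: int = 20000) -> float:
    """Midpoint rule for <f(x)>_rho after x = cos(t): (2/pi) * int_0^pi f(cos t) sin(t)^2 dt."""
    h = pi / n_points
    return (2 / pi) * h * sum(
        f(cos((m + 0.5) * h)) * sin((m + 0.5) * h) ** 2 for m in range(n_points)
    )


m2, m4 = semicircle_even_moment(1), semicircle_even_moment(2)
print(m2, m4, m4 - 3 * m2 ** 2, m4 / m2 ** 2 - 3)   # 1/4 1/8 -1/16 -1
print(semicircle_average(lambda x: x ** 4))          # ~0.125
```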

3.7.3 Fractional Brownian Motion

For the fBm0 we should set \(a=b=0\) in (113) leading to the following disorder average of the associated statistical mechanics model in the high temperature phase:

which is manifestly duality-invariant (see (190) for the n-dependence). The second moment of the position of the global minimum of the fBm0 is thus predicted to be

as displayed in Prediction 3. This is distinct, but numerically not very different, from what is obtained from a uniform distribution \(P^0(y)=1\) on [0, 1], namely \(<y^2>_{P^0}=\frac{1}{3}\) and \(<(y-\frac{1}{2})^2>_{P^0}=\frac{1}{12}\).

All odd moments centered around \(y=\frac{1}{2}\) are predicted to vanish, as in (118). We checked this explicitly up to the fifth moment, and expect it to be a general property. The asymmetry induced by fixing one point of the fBm0 at \(x=0\) and leaving the one at \(x=1\) free does not manifest itself in the moments of the minimum (or, at any temperature, in the statistical mechanics model). It does arise, however, at first order in n (as seen e.g. from (71)) and is detectable in the joint distribution of values and positions of the minimum (see below).

From the formula (119) specialized to \(a=0\) we obtain respectively the fourth moment, the fourth cumulant and the kurtosis \(\kappa \) for the position of the minimum of the fBm0:

These three numbers are respectively \(\frac{1}{5}\), \(- \frac{1}{120}\) and \(-1.2\) for a uniform distribution on [0, 1], hence a difference of a few percent.
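The fBm0 values follow from (117), (119) and (120) at \({\bar{a}}=0\), with \({\bar{a}}={\bar{b}}\) and \(a={\bar{a}}\) as in those formulae. The exact-arithmetic sketch below evaluates them. It also rebuilds the excess kurtosis from the moments alone, using \(\overline{y_m}=1/2\) and the vanishing third central moment (118), as an internal consistency check of (117)–(120). The function names are ours.

```python
from fractions import Fraction


def variance_sym(a):
    """Eq. (117): variance of y_m for abar = bbar = a."""
    a = Fraction(a)
    return (4 * a + 9) / (4 * (2 * a + 5) ** 2)


def fourth_moment_sym(a):
    """Eq. (119): fourth moment of y_m for abar = bbar = a."""
    a = Fraction(a)
    num = 4 * a**5 + 84 * a**4 + 663 * a**3 + 2488 * a**2 + 4478 * a + 3110
    return num / (4 * (a + 3) * (2 * a + 5) ** 2 * (2 * a + 7) ** 2)


def kurtosis_sym(a):
    """Eq. (120): excess kurtosis of y_m for abar = bbar = a."""
    a = Fraction(a)
    num = 8 * a**5 + 248 * a**4 + 2054 * a**3 + 7328 * a**2 + 12053 * a + 7523
    return -2 * num / ((a + 3) * (2 * a + 7) ** 2 * (4 * a + 9) ** 2)


def kurtosis_from_moments(a):
    """Rebuild the excess kurtosis from (117), (119), the mean 1/2 and Eq. (118)."""
    v = variance_sym(a)
    m2 = Fraction(1, 4) + v
    m3 = Fraction(3, 2) * m2 - Fraction(1, 4)   # third central moment about 1/2 vanishes
    m4 = fourth_moment_sym(a)
    mu4 = m4 - 2 * m3 + Fraction(3, 2) * m2 - Fraction(1, 4) + Fraction(1, 16)
    return mu4 / v**2 - 3


# fBm0 (abar = 0) against the uniform law on [0, 1]
print(variance_sym(0), Fraction(1, 12))        # 9/100 1/12
print(fourth_moment_sym(0), Fraction(1, 5))    # 311/1470 1/5
print(float(kurtosis_sym(0)), -6 / 5)          # about -1.264 vs -1.2
```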

3.8 Results for Negative Moments

The negative moments provide interesting additional information on the three problems under study. Let us present the (short) calculation of the first negative moment, and also give the expression for the second. Equation (89) for \(k=1\) yields

The continuation to \(n=0\) of this formula, and of the one for the second negative moment (calculated in Appendix 2, Sect. 1), leads to the following disorder averages in the statistical mechanics model for \(\beta ^2 < 1\)

Obviously, the same formulae hold upon exchanging \(y \rightarrow 1-y\) and \(a \rightarrow b\). One can check that these formulae coincide with the ones obtained from the general result (34–36).

One can check that for \(\beta =0\) and a, b fixed, i.e. in the absence of the random potential, these formulae agree with the same averages over the deterministic measure \(P^0(y) \sim y^a (1-y)^b\), i.e. Eq. (37) with \(k=-1,-2\). The effect of the disorder is thus to increase the values of the inverse moments, presumably due to events in which favorable regions of the random potential appear near the edges. We see that \(a>0\) (repulsive charge at \(y=0\)) is required for the finiteness of the first inverse moment, and \(a>1\) for the finiteness of the second. In addition, since \(y \in [0,1]\), one must have \(\overline{<y^{-1}>_\beta } \ge 1\). Hence a binding transition at \(y=1\) must occur when the charge becomes too attractive, for \(b\le b_c = - 1 - \beta ^2\). Symmetric conditions hold under the exchanges \(y \rightarrow 1-y\) and \((a,b) \rightarrow (b,a)\).
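As an illustration, the sketch below assumes the candidate form \(\langle \frac{1}{n}\sum _i y_i^{-1}\rangle =\frac{a+b+1+(n-1)\kappa }{a}\) for the \(\beta \)-Jacobi ensemble. Its continuation to \(n\rightarrow 0\), \(\kappa =-\beta ^2\), namely \((1+a+b+\beta ^2)/a\), reproduces the value 4 in (135), the value \((2+{\bar{a}}+{\bar{b}})/{\bar{a}}\) in (138) and the threshold \(b_c=-1-\beta ^2\) quoted above. This form is our reconstruction rather than a quotation of (134). The code cross-checks it against exact integration for \(n=2\), \(\kappa =1\) and small integer a, b. All function names are hypothetical.

```python
from fractions import Fraction
from math import factorial


def first_inverse_moment(n, a, b, kappa):
    """Candidate <(1/n) sum_i 1/y_i> in the beta-Jacobi ensemble (assumed form, a > 0)."""
    return (a + b + 1 + (n - 1) * kappa) / a


def disordered_inverse_moment(beta, a, b):
    """Replica limit n -> 0 with kappa = -beta^2."""
    return first_inverse_moment(0, a, b, -beta ** 2)


def beta_integral(p, q):
    """int_0^1 y^p (1-y)^q dy = p! q! / (p+q+1)! for integers p, q >= 0."""
    return Fraction(factorial(p) * factorial(q), factorial(p + q + 1))


def brute_force_n2(a, b):
    """Exact <1/y_1> for n = 2, kappa = 1: weight (y1 y2)^a ((1-y1)(1-y2))^b (y1 - y2)^2."""
    terms = [(2, 0, 1), (1, 1, -2), (0, 2, 1)]  # (y1 - y2)^2 = y1^2 - 2 y1 y2 + y2^2
    norm = sum(c * beta_integral(a + e1, b) * beta_integral(a + e2, b) for e1, e2, c in terms)
    num = sum(c * beta_integral(a - 1 + e1, b) * beta_integral(a + e2, b) for e1, e2, c in terms)
    return num / norm


print(brute_force_n2(2, 1), Fraction(first_inverse_moment(2, 2, 1, 1)))   # 5/2 5/2
beta = Fraction(1, 3)
print(disordered_inverse_moment(beta, (1 + beta**2) / 2, (1 + beta**2) / 2))  # 4 (GUE-CP)
```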

These moments give some information about the disorder-averaged Gibbs measure: if we assume a power law behavior near the edge, \(\overline{p_\beta (y)} \sim _{y \rightarrow 0} y^c\), the exponent \(c=c(a,b,\beta )\) must be such that \(c>0\) whenever \(a>0\), and \(c>1\) whenever \(a>1\). From the divergence of the inverse moments when a reaches these values, we can surmise that c also vanishes at \(a=0\) and equals 1 at \(a=1\). The simplest possible scenario then is that \(c=a\), but it remains to be confirmed.

The effect of disorder saturates at \(\beta =1\), where we predict freezing in these negative moments, which, as can be checked on (134) using (90), are duality-invariant. As discussed above, we predict that the full PDF \(\overline{p_\beta (y)}\) freezes, i.e. \(\mathcal{P}(y_m) = \overline{p_{\beta =1}(y)}\). Let us now discuss consequences for our three examples.

-

(i)

For the GUE-CP, one must set \(a=b=\frac{1+ \beta ^2}{2}\). One sees that the first inverse moment exists, but not the second, so that the exponent satisfies \(0<c <1\). One finds that the first inverse moment is temperature independent:

$$\begin{aligned} \overline{<y^{-1}>_\beta } = 4 , \quad 0 \le \beta \le 1 \end{aligned}$$(135)

which leads to the following predictions for the position of the maximum

$$\begin{aligned} \overline{y_m^{-1}} = 4, \quad \overline{(1-x_m)^{-1}} = 2 \end{aligned}$$(136)

Remarkably, this is also exactly the value of the same average with respect to the semi-circle density

$$\begin{aligned} <\frac{1}{1-x}>_\rho = 2 \end{aligned}$$(137)

This suggests that the two distributions, \(\mathcal{P}(x)\) and \(\rho (x)\), although distinct, are very similar near the edges.

-

(ii)

LCGP with edge charges: one must set \(a=\beta {\bar{a}}\), \(b= \beta {\bar{b}}\). The prediction for the first two inverse moments of the position of the global minimum is:

$$\begin{aligned} \overline{y_m^{-1}} = \frac{2+{\bar{a}}+{\bar{b}}}{{\bar{a}}} , \quad \overline{y_m^{-2}} = \frac{\left( {\bar{a}}+{\bar{b}}+2\right) \left( {\bar{a}} ({\bar{a}}+{\bar{b}})+1\right) }{{\bar{a}} ({\bar{a}}-1)^2} \end{aligned}$$(138)

where the domain of existence has been discussed above. It would be interesting to see whether the simplest scenario for the edge behavior, i.e. \(\mathcal{P}(y_m) \sim y_m^a\) near \(y=0\) and, by symmetry, \(\mathcal{P}(y_m) \sim (1-y_m)^b\) near \(y=1\), can be confirmed (or refuted) in future studies.

-

(iii)

For the fBm0 one must set \(a=b=0\) (for \(n=0\)), and neither of these moments exists. Hence the edge exponent of \(\mathcal{P}(y) \sim y^c\) satisfies \(c \le 0\) (and probably \(c=0\)).
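The identity (137) can be confirmed directly. With \(x=\cos \theta \) and \(\rho (x)=(2/\pi )\sqrt{1-x^2}\) (the normalization we assume for \(\rho \)), one has \(\langle \frac{1}{1-x}\rangle _\rho =\frac{2}{\pi }\int _0^\pi \frac{\sin ^2\theta }{1-\cos \theta }d\theta =\frac{2}{\pi }\int _0^\pi (1+\cos \theta )\,d\theta =2\). The sketch below evaluates the integral numerically with the original integrand. It also converts the prediction \(\overline{y_m^{-1}}=4\) of (136) to the variable \(x=1-2y\), for which \(1/(1-x)=1/(2y)\).

```python
from math import cos, pi, sin


def semicircle_inverse_edge_moment(n_points: int = 20000) -> float:
    """Midpoint rule for <1/(1 - x)> with rho(x) = (2/pi) sqrt(1 - x^2), using x = cos(theta)."""
    h = pi / n_points
    total = 0.0
    for m in range(n_points):
        t = (m + 0.5) * h              # midpoints avoid evaluating 0/0 at theta = 0
        total += sin(t) ** 2 / (1.0 - cos(t))
    return (2 / pi) * h * total


predicted_inv_y = 4.0                            # Eq. (136): mean of 1/y_m for the GUE-CP
predicted_inv_1_minus_x = predicted_inv_y / 2    # since 1 - x = 2 y
print(semicircle_inverse_edge_moment(), predicted_inv_1_minus_x)   # ~2.0 2.0
```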

3.9 Correlation Between Position and Value of the Minimum

Until now we used only the values of the moments at \(n=0\). However, they do exhibit a non-trivial dependence on the number of replicas n. One may thus ask what information is encoded in that dependence.

The detailed analysis is performed in footnote 9. The answer is that the knowledge of the n-dependence of all moments allows, in principle, the reconstruction of the joint distribution \(\mathcal{P}(x_m,V_m)\) of the position and value of the extremum. This is an ambitious task which is far from completed. However, in footnote 9 we give a general formula for the conditional moments, i.e. the moments of \(x_m\) conditioned on a particular value of \(V_m\).

Here we display the results for the simplest cross-correlations in the LCGP model with edge charges, the derivation and more results are given in footnote 9. We find

In the case \({\bar{a}}={\bar{b}}\) these two correlations of the first moment vanish, and so do higher ones: one shows (from the general formula in footnote 9) that the first conditional moment, \(\mathbb {E}(y_m | V_m)= \frac{1}{2}\) independently of \(V_m\). Continuing with the case \({\bar{a}}={\bar{b}}\) we further obtain

Setting \({\bar{a}}=1\) leads to the prediction for the GUE-CP as

and we recall that \(x_m=1-2 y_m\), with \(\overline{y_m}=\frac{1}{2}\) for the GUE-CP; in fact, as discussed above, the first conditional moment \(\mathbb {E}(x_m| V_m)= 0\) vanishes for any \(V_m\).
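Since \(x_m=1-2y_m\) is an affine function of \(y_m\), statements about \(x_m\) follow from those about \(y_m\) by linearity of the (conditional) expectation. For instance:

$$\begin{aligned} \mathbb {E}(x_m | V_m) = 1 - 2\, \mathbb {E}(y_m | V_m) = 1 - 2 \cdot \tfrac{1}{2} = 0, \qquad \overline{x_m V_m} - \overline{x_m}\, \overline{V_m} = -2 \left( \overline{y_m V_m} - \overline{y_m}\, \overline{V_m} \right) , \end{aligned}$$

so any correlation between \(y_m\) and \(V_m\) carries over to \(x_m\) with the opposite sign and a factor 2.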

The fBm0, as we defined it with the value fixed at \(y=0\), provides an interesting example of a process with non-trivial correlation. Indeed, the above formula must be modified, since \(a =2 \beta ^2 n\) for the fBm0. As discussed in Appendix 7, this leads to difficulties in the method for determining the PDF of \(V_m\). One should thus be careful in assessing the validity of the following results for the fBm0. They read

and we recall that \(\overline{y_m}=\frac{1}{2}\) for the fBm0. The negative value obtained for the first correlation (first line) reflects the chosen boundary conditions, namely the pinning \(B_0^{(\eta )}(y=0)=0\) and a free boundary condition at \(y=1\), which allows lower values of the minimum near the right edge. The vanishing of the second line follows from the discussion in footnote 9.

4 Other Ensembles

4.1 General Considerations

Once the moments for the Jacobi ensemble are known, one can obtain moments in a few other ensembles. Define the generic measure

with, for Jacobi, \(\mu _A(y) = \mu _J(y) := y^a (1-y)^b \theta (0<y<1)\). The main other ensembles differ only by the choice of the weight function \(\mu _A(y)\).

(i) Laguerre ensemble. Define \(y_i=z_i/b\) and take the limit \(b \rightarrow +\infty \). Then

$$\begin{aligned} \lim _{b \rightarrow +\infty } \mathcal{P}_J(\mathbf{y}) d \mathbf{y} = \mathcal{P}_L(\mathbf{z}) d \mathbf{z}, \quad \mu _L(z) = z^a e^{-z} \theta (z) \end{aligned}$$(152)

where \(\mathcal{Z}^L_n= \lim _{b \rightarrow +\infty } b^{n(a+1) + \kappa n(n-1)} \mathcal{Z}^J_n = \prod _{j=1}^n \frac{\Gamma (1+a+ (j-1) \kappa ) \Gamma (1+j \kappa )}{\Gamma (1+\kappa )}\). Hence the moments in the Laguerre ensemble are obtained as:

$$\begin{aligned}<z^k>_L = \lim _{b \rightarrow \infty } b^k <y^k>_J \end{aligned}$$(153)

However, in our statistical mechanics model, for instance the LCG random potential with an external background, we need to define z slightly differently, namely \(z=\frac{b}{\beta } y = {\bar{b}} y\). This ensures the duality invariance of the problem, and the Laguerre weight can then be interpreted as a bona fide external background potential:

$$\begin{aligned} V_0(z) = - {\bar{a}} \ln z + z \end{aligned}$$(154)

which confines the particle near the edge \(z=0\) (for \({\bar{a}}>0\)).

(ii) Gaussian–Hermite ensemble. Define \(y_i=\frac{1}{2} + (z_i/\sqrt{8 a})\) and take the limit \(a=b \rightarrow +\infty \). Then

$$\begin{aligned} \lim _{a=b \rightarrow +\infty } \mathcal{P}_J(\mathbf{y}) d \mathbf{y} = \mathcal{P}_G(\mathbf{z}) d \mathbf{z}, \quad \mu _G(z) = e^{-z^2/2} \end{aligned}$$(155)

where \(\mathcal{Z}^G_n= \lim _{a=b \rightarrow +\infty } 4^{an} (8a)^{n/2 + \kappa n(n-1)/2} \mathcal{Z}^J_n = (2 \pi )^{n/2} \prod _{j=1}^n \frac{\Gamma (1+ j \kappa )}{\Gamma (1+\kappa )}\) is the Mehta integral. Similarly, below we will introduce a factor of \(\beta \) in the definition; see the next section.

(iii) Inverse-Jacobi weights. Define \(y_i=1/z_i\). Then

$$\begin{aligned} \mathcal{P}_J(\mathbf{y}) d \mathbf{y} = \mathcal{P}_I(\mathbf{z}) d \mathbf{z}, \quad \mu _I(z) = z^{-2-a-b-2(n-1) \kappa } (z-1)^b \theta (z-1) \end{aligned}$$

where \(\mathcal{Z}^I_n= \mathcal{Z}^J_n\).
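For a single particle (\(n=1\), where \(\kappa \) drops out since there is no pair interaction) the Jacobi weight is a Beta density with \(<y^k>_J=(a+1)_k/(a+b+2)_k\), and the Laguerre weight \(z^a e^{-z}\) gives \(<z^k>_L=(a+1)_k\). The minimal sketch below, restricted to this \(n=1\) case and to integer a, b (so that exact fractions can be used), checks the limit (153) numerically. The function names are illustrative only.

```python
from fractions import Fraction

def jacobi_moment_n1(a, b, k):
    """<y^k> for one particle with weight y^a (1-y)^b on (0, 1): (a+1)_k / (a+b+2)_k."""
    out = Fraction(1)
    for j in range(k):
        out *= Fraction(a + 1 + j, a + b + 2 + j)
    return out

def laguerre_moment_n1(a, k):
    """<z^k> for one particle with weight z^a e^{-z} on (0, inf): (a+1)_k."""
    out = 1
    for j in range(k):
        out *= a + 1 + j
    return out

def rescaled_jacobi_moment(a, b, k):
    """b^k <y^k>_J, which should tend to <z^k>_L as b -> infinity, cf. Eq. (153)."""
    return b ** k * jacobi_moment_n1(a, b, k)

a, k = 2, 3
for b in (10, 1000, 100000):
    print(b, float(rescaled_jacobi_moment(a, b, k)), laguerre_moment_n1(a, k))
```

The approach to the Laguerre value is of order \(1/b\), since \(b^k/\prod _{j=0}^{k-1}(a+b+2+j) = 1 - O(1/b)\).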

There is a second set of models, for which the correspondence is less direct. We will follow the arguments of [39] to surmise a relation between moments. As in that work, one starts with the simple identity, for \(k \in \mathbb {Z}\), \(a \in \mathbb {R}\)

valid whenever the last integral converges. That leads to the multiple-integral version

for any Laurent polynomial f. From this one conjectures interesting relations between quantities in the circular and Jacobi ensembles; see (1.15–1.17) in [39] as well as Proposition 13.1.4 in Chap. 13 of [30]. Further elaboration of these relations leads us to the following conjectures for the moments:

(i) Circular ensemble with weight. Consider the circular ensemble with weight, defined by the joint probability density

$$\begin{aligned} \frac{1}{\mathcal{Z}^C_n} \prod _{i=1}^n \frac{d\theta _i}{2 \pi } |1 + e^{i \theta _i}|^{2 \mu } \prod _{1 \le i < j \le n} |e^{i \theta _i} - e^{i \theta _j}|^{2 \kappa } \end{aligned}$$(157)

for the variables \(\theta _i \in [-\pi ,\pi )\). Then we conjecture that

$$\begin{aligned}< \cos (k \theta )>_{\text {circular}} = (-1)^k < y^k >_{\kappa ,a,b,n} |_{a=-\mu -1-\kappa (n-1),b =2 \mu } \end{aligned}$$(158)

(ii) Cauchy-\(\beta \) ensemble. Following (1.19) in [39] and using the stereographic projection from the circle to the real axis, \(e^{i \theta }=(i - z)/(i+z)\), we obtain the Cauchy-\(\beta \) ensemble, whose weight is supported on the whole real axis \(z \in \mathbb {R}\)

$$\begin{aligned} \mu _C(z) = \frac{1}{(1+z^2)^\rho }, \quad \rho = 1 + \mu + (n-1) \kappa \end{aligned}$$(159)

For this ensemble, the conjecture then becomes

$$\begin{aligned}< Re[ (\frac{i - z}{i+z})^k ]>_{\text {Cauchy}} = (-1)^k < y^k >_{\kappa ,a,b,n} |_{a=-\rho ,b =2 \rho -2 - 2 (n-1) \kappa } \end{aligned}$$(160)

which, as we checked, is obeyed for \(\kappa =0\), in which case one has \(< y^k >_{J,\kappa =0}= (1-\rho )_k/(\rho )_k\). Note that an interesting integrable generalization of the Cauchy ensemble was proposed in [57].
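The \(\kappa =0\) check of (158) and (160) can be reproduced with a few lines of quadrature. The sketch below treats a single particle (\(n=1\), so \(\kappa \) plays no role) and compares both sides numerically; the function names are hypothetical. For the Cauchy side it substitutes \(z=\tan \varphi \), under which \((i-z)/(i+z)=e^{2i\varphi }\) and the real line maps to \((-\pi /2,\pi /2)\).

```python
import math

def poch(x, k):
    """Rising factorial (x)_k = x (x + 1) ... (x + k - 1)."""
    out = 1.0
    for j in range(k):
        out *= x + j
    return out

def jacobi_rhs(a, b, k):
    """(-1)^k <y^k> for one particle with weight y^a (1-y)^b: (-1)^k (a+1)_k / (a+b+2)_k."""
    return (-1) ** k * poch(a + 1, k) / poch(a + b + 2, k)

def circular_lhs(mu, k, n_grid=20000):
    """<cos(k theta)> with weight |1 + e^{i theta}|^{2 mu} on [-pi, pi), midpoint rule."""
    h = 2 * math.pi / n_grid
    num = den = 0.0
    for j in range(n_grid):
        theta = -math.pi + (j + 0.5) * h
        w = (2.0 + 2.0 * math.cos(theta)) ** mu  # |1 + e^{i theta}|^2 = 2 + 2 cos(theta)
        num += w * math.cos(k * theta)
        den += w
    return num / den

def cauchy_lhs(rho, k, n_grid=20000):
    """<Re[((i - z)/(i + z))^k]> with weight (1 + z^2)^(-rho) on the real line.

    With z = tan(phi), dz = (1 + z^2) dphi, so the weight in phi is (1 + z^2)^(1 - rho).
    """
    h = math.pi / n_grid
    num = den = 0.0
    for j in range(n_grid):
        z = math.tan(-math.pi / 2 + (j + 0.5) * h)
        w = (1.0 + z * z) ** (1.0 - rho)
        num += w * (((1j - z) / (1j + z)) ** k).real
        den += w
    return num / den

mu = 1.5
rho = 1 + mu  # Eq. (159) with n = 1
for k in range(1, 5):
    print(k,
          circular_lhs(mu, k), jacobi_rhs(-mu - 1, 2 * mu, k),   # Eq. (158)
          cauchy_lhs(rho, k), jacobi_rhs(-rho, 2 * rho - 2, k))  # Eq. (160)
```

At \(\kappa =0\) both right-hand sides reduce to \((-1)^k(-\mu )_k/(\mu +1)_k\), so the two conjectures are consistent with each other in this case.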

Let us now study the following two examples in more detail.

4.2 Moments for the Laguerre Ensemble

4.2.1 General Formula

From (33–35), performing the limit (153), we obtain the general positive moments of the Laguerre ensemble as (see footnote 10)

where the sum is over all partitions of k, and

i.e. a single factor has disappeared. It turns out that these sums are polynomials, although this is not easy to see from this expression.

Let us give the first two moments:

higher moments are more complicated polynomials. Formulas for negative moments can also be obtained from (33–36) by performing the same limit.

4.2.2 Random Statistical Mechanics Model Associated to Laguerre Ensemble

In the corresponding disordered model, i.e. the LCG random potential in the presence of the background confining potential (154), we find

Freezing of these manifestly duality-invariant expressions leads to

In the absence of disorder the absolute minimum is at \(z_m^0={\bar{a}}\); comparing with the above, the random potential thus tends to push the minimum towards larger positive z (i.e. to unbind the particle). One finds a few higher moments

whose associated cumulants have simpler expressions
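For completeness, the two facts used above about the Laguerre background can be checked directly, using \(a=\beta {\bar{a}}\), \(b=\beta {\bar{b}}\) and \(z={\bar{b}} y\) as stated earlier. The Jacobi weight becomes the Boltzmann weight of \(V_0\) in (154), and \(V_0\) has its unique minimum at \(z={\bar{a}}\) (for \({\bar{a}}>0\)):

$$\begin{aligned} y^{a}(1-y)^{b}&= {\bar{b}}^{-\beta {\bar{a}}}\, z^{\beta {\bar{a}}} \left( 1-\frac{z}{{\bar{b}}}\right) ^{\beta {\bar{b}}}, \qquad \left( 1-\frac{z}{{\bar{b}}}\right) ^{\beta {\bar{b}}} \xrightarrow [{\bar{b}} \rightarrow +\infty ]{} e^{-\beta z}, \qquad \text {so} \quad y^a(1-y)^b \propto z^{\beta {\bar{a}}} e^{-\beta z} = e^{-\beta V_0(z)} ,\\ V_0'(z)&= 1-\frac{{\bar{a}}}{z}=0 \ \Rightarrow \ z_m^0={\bar{a}}, \qquad V_0''(z_m^0)=\frac{{\bar{a}}}{(z_m^0)^2}=\frac{1}{{\bar{a}}}>0 . \end{aligned}$$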

4.3 Gaussian–Hermite Ensemble

Let us now turn to the Gaussian ensemble. This model is of great interest as its disordered statistical mechanics is associated with the solution of the one-dimensional decaying Burgers equation with a random initial condition and viscosity \(\sim 1/\beta \). The velocity is the gradient of a potential, and the initial condition is chosen to correspond to a log-correlated random potential. The one-point (in space) statistics of the velocity at any later time is then exactly related to the statistical model of the Gaussian ensemble, as we showed in [49]. The freezing transition at \(\beta =1\) corresponds to a transition in the Burgers dynamics from a Gaussian phase to a shock-dominated phase. For more details on the correspondence between the two problems, we refer the reader to [49], where the model is introduced and analyzed; we use the same conventions as in that work. Defining the new variable z:

in terms of the Jacobi variable \(y \in [0,1]\), we obtain the moments of the Gaussian ensemble by taking \(b=a \rightarrow +\infty \) in the general formula (33–34). This limit is not straightforward. First, computing \(z^k\) from (171) requires adding the contributions of the moments \(<y^p>\) of all degrees \(p \le k\). Second, in each such moment there is no obvious term-by-term simplification in the large \(a=b\) limit. As one sees from (33–34), each term \(A_\lambda \) is superficially of order one, hence many cancellations must occur in the sum for the end result to be of order \(1/a^{k/2}\) at large a. The calculation is therefore handled using Mathematica, which allows us to obtain moments to high order.
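The cancellation mechanism just described can already be seen for a single particle (\(n=1\)), where \(<y^p>\) is a Beta moment. The sketch below is only an illustration under that assumption: it uses the rescaling \(y=\frac{1}{2}+z/\sqrt{8a}\) of (155), without the factor of \(\beta \) that enters (171), and exact rational arithmetic in place of Mathematica. The function names are hypothetical. Each binomial term is of order one, while their sum \(<(y-\frac{1}{2})^k>\) is of order \(a^{-k/2}\), so that \(<z^k>\) tends to the Gaussian value \((k-1)!!\).

```python
from fractions import Fraction
from math import comb

def beta_raw_moment(a, p):
    """<y^p> for one particle with symmetric weight y^a (1-y)^a on (0, 1)."""
    out = Fraction(1)
    for j in range(p):
        out *= Fraction(a + 1 + j, 2 * a + 2 + j)
    return out

def scaled_gaussian_moment(a, k):
    """<z^k> with y = 1/2 + z / sqrt(8a), for even k and integer a.

    (y - 1/2)^k is expanded binomially, so all raw moments <y^p>, p <= k,
    contribute; the O(1) terms cancel down to O(a^{-k/2}).
    """
    assert k % 2 == 0, "odd moments vanish by the y -> 1 - y symmetry"
    central = sum(comb(k, p) * beta_raw_moment(a, p) * Fraction(-1, 2) ** (k - p)
                  for p in range(k + 1))
    return (8 * a) ** (k // 2) * central  # (8a)^{k/2} is an integer for even k

for a in (10, 1000, 100000):
    print(a, [float(scaled_gaussian_moment(a, k)) for k in (2, 4, 6)])
```

For k = 2 the exact result is \(2a/(2a+3)\), and for k = 4 it is \(12a^2/((2a+3)(2a+5))\), which approach 1 and 3 respectively.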

Setting \(n=0\), we then obtain the first non-trivial cumulants of \(\overline{p_\beta (z)}\). Note that the weight factor is now \(e^{- \beta z^2/2}\); hence the disordered model corresponds to a particle in an LCGP in the presence of a quadratic confining background potential \(V_0(z) = z^2/2\) at inverse temperature \(\beta \). We obtain

and we list here the next three, i.e. \(\overline{<z^k>_\beta }^c\) for \(k=6,8,10\)

These expressions are identical to those obtained in [49] by a much more painstaking method. They are manifestly duality-invariant and freeze at \(\beta =1\), from which one can read off the moments of the position of the minimum \(z_m\) (which we do not write here in detail). Since, as discussed there, the Burgers velocity v in the inviscid limit equals the position of the minimum, \(v \equiv z_m\), in the Gaussian ensemble problem, this leads to non-trivial predictions for the moments of the PDF of these two quantities, some of which were checked numerically there.

5 Discussion and Conclusions

5.1 Numerical Verification

We now compare our predictions for the position \(x_m\) of the maximum of the GUE-CP with the results of direct numerical simulations of GUE polynomials for matrices of growing size N, performed by Nick Simm, whom we also thank for the detailed analysis of the data.

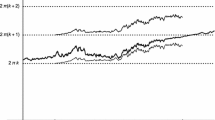

Histogram of values \(x_{m}\) for the position of the maximum of the characteristic polynomial for size \(N=3000\) GUE matrices with 250,000 realizations. We use the numerical method described in Section 3 of [21]. The curve fitting the histogram (red) differs from the semi-circular density (green) by at most 0.099.

In Fig. 1 we show the histogram of the full PDF of the position \(x_m\). For comparison we also plot the semicircle density of eigenvalues, which, as discussed above, has the same first negative moment. The data suggest that, although the distribution of the maximum is clearly not given by the semicircle law, the edge behaviors of the two distributions are numerically close.

Variance of the position of the maximum of the characteristic polynomial for 20 equally spaced data points corresponding to sizes from \(N=150\) up to \(N=3000\) GUE matrices with 250,000 realizations. The x axis has been chosen as \(1/[10 (\ln N)^3]\). The blue point is the prediction (123).

Inverse moment of the position of the maximum of the characteristic polynomial, same samples and x axis scale as in Fig. 2. The prediction is a divergence of the moment as \(N \rightarrow +\infty \).

Next, in Figs. 2 and 3 we plot the second moment and the kurtosis and compare them with Prediction 1. While a detailed analysis of finite-size effects is left for the future, we plot the data against the finite-size scale \(1/[10 (\ln N)^3]\), which we found appropriate.
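One simple way to read an \(N\rightarrow \infty \) estimate off such plots is sketched below. It assumes (our assumption, not a description of how the data were actually analyzed) that an observable is approximately linear in the scaling variable \(x_N=1/[10(\ln N)^3]\) over the available range, so that a least-squares fit extrapolated to \(x_N=0\) gives the limiting value. The sizes are the 20 equally spaced values \(N=150,300,\dots ,3000\) quoted in the captions; the input values are synthetic placeholders, not simulation results, and the function names are hypothetical.

```python
import math

def scaling_variable(N):
    """Finite-size abscissa x_N = 1 / [10 (ln N)^3] used in the figures."""
    return 1.0 / (10.0 * math.log(N) ** 3)

def extrapolate(Ns, values):
    """Ordinary least-squares fit values ~ c0 + c1 * x_N; returns (c0, c1).

    c0 is the extrapolated N -> infinity value (x_N -> 0).
    """
    xs = [scaling_variable(N) for N in Ns]
    m = len(xs)
    x_mean = sum(xs) / m
    v_mean = sum(values) / m
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxv = sum((x - x_mean) * (v - v_mean) for x, v in zip(xs, values))
    c1 = sxv / sxx
    return v_mean - c1 * x_mean, c1

# 20 equally spaced sizes from N = 150 to N = 3000
Ns = [150 * (j + 1) for j in range(20)]

# Synthetic placeholder values (NOT simulation data), generated exactly on a line
synthetic = [0.25 + 3.0 * scaling_variable(N) for N in Ns]
print(extrapolate(Ns, synthetic))
```

Because \(x_N\) varies only between about \(2\times 10^{-4}\) and \(8\times 10^{-4}\) over this range, the intercept is sensitive to the assumed functional form; this is one reason why larger N would help.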

We see a rather good agreement for the extrapolated values of the second moment, and still reasonable agreement for the kurtosis. In Fig. 4, we also plot the first inverse moment, which shows rather good convergence to the predicted value 2. The second inverse moment, predicted to diverge, is also shown in Fig. 5.

In conclusion, the agreement with the predictions is reasonable and, for some observables, excellent. We hope that increasing both the number of realizations and the value of N would lead to further improvement, but such a programme is computationally challenging and is left for future research.

5.2 Conclusions