Abstract

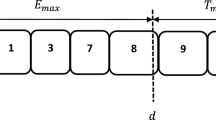

We address a single machine scheduling problem with a new optimization criterion and unequal release dates. This new criterion results from a practical situation in the domain of book digitization. Given a set of job-independent delivery dates, the goal is to maximize the cumulative number of jobs processed before each delivery date. We establish the complexity of the general problem. In addition, we discuss some polynomial cases and provide a pseudopolynomial time algorithm for the two-delivery-dates problem based on dynamic programming and some dominance properties. Experimental results are also reported.

Acknowledgments

This study was supported by FUI project Dem@tFactory, financed by DGCIS, Conseil général de Seine-et-Marne, Conseil général de Seine-Saint-Denis, Ville de Paris, Pôle de compétitivité Cap Digital.

Appendices

Appendix 1 The dynamic programming algorithm

In Algorithm 3, a \(4\)-tuple \((k_1, t_2, k_2, v)\) is represented as a pair of a \(3\)-tuple and a payoff: \((\langle k_1, t_2, k_2 \rangle , v)\), to easily handle similar \(4\)-tuples. As for 4-tuples, two pairs \((e, v)\) and \((e^{\prime }, v^{\prime })\) are similar iff \(e = e^{\prime }\).

Moreover, we defined in Sect. 3.2 variables \(\beta _1\) and \(\beta _2\):

- \(\beta _1\) is the earliest possible completion time of \(J_j\) if it is reinserted in \(S_2\) while \(S_2\) is empty: \(\beta _1 = \max (b_j, k_1) + p_j\). Notice that \(\beta _1 = \max (b_j, k_1) + p_j = \max (b_j, k_1, k_2) + p_j\), since \(k_2 = 0\).

- \(\beta _2\) is the earliest possible completion time of \(J_j\) if it is reinserted in \(S_2\) while \(S_2\) is not empty: \(\beta _2 = \max (r_j, k_2) + p_j\). Notice that \(\beta _2 = \max (r_j, k_2) + p_j = \max (b_j, k_2) + p_j\), since \(k_2 > D_1\), and \(\max (b_j, k_2) + p_j = \max (b_j, k_2, k_1) + p_j\), since \(k_1 < k_2\).

Thus, in Algorithm 3, we use variable \(\beta = \max (b_j, k_1, k_2) + p_j\) instead of \(\beta _1\) and \(\beta _2\).
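The equivalence of \(\beta\) with \(\beta _1\) and \(\beta _2\) can be checked numerically. The Python sketch below is only an illustration: the function name and the numeric values are hypothetical, and since Sect. 3.2 is not reproduced here, the definition \(b_j = \max (r_j, D_1 - p_j + 1)\) used in it is an assumption rather than the paper's stated definition.

```python
def earliest_completion_in_s2(b_j: int, p_j: int, k1: int, k2: int) -> int:
    """Unified variable beta = max(b_j, k1, k2) + p_j."""
    return max(b_j, k1, k2) + p_j


# Illustrative data (hypothetical values).
D1 = 10
r_j, p_j = 3, 4
# ASSUMPTION: b_j is the earliest start that makes J_j complete after D1.
b_j = max(r_j, D1 - p_j + 1)

# Case 1: S_2 is empty, so k2 = 0 and beta must equal beta_1.
k1 = 5
beta_1 = max(b_j, k1) + p_j
beta_empty = earliest_completion_in_s2(b_j, p_j, k1, 0)

# Case 2: S_2 is not empty, so k2 > D1 and k1 < k2; beta must equal beta_2.
k2 = 12
beta_2 = max(r_j, k2) + p_j
beta_nonempty = earliest_completion_in_s2(b_j, p_j, k1, k2)
```

With these values, both cases give the same result through the single formula, which is why one variable suffices.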

Theorem 4

Algorithm 3 returns the payoff of an optimal schedule.

Proof

Recall that jobs are numbered by nondecreasing release dates: \(J_1, \ldots , J_N\). Moreover, recall that at each step \(j\), three functions \(g_1\), \(g_2\), \(g_3\) were defined in Sect. 3.2, taking as argument a \(4\)-tuple. We define the corresponding functions \(q_1\), \(q_2\), \(q_3\), such that, for \(i \in \{1, 2, 3\}\): \(g_i( k_1, t_2, k_2, v, j) = (k^{\prime }_1, t^{\prime }_2, k^{\prime }_2, v^{\prime }) \Leftrightarrow q_i(\langle k_1, t_2, k_2 \rangle , v, j) = (\langle k^{\prime }_1, t^{\prime }_2, k^{\prime }_2 \rangle , v^{\prime }) \). Finally, let \(\mathcal{Q }_0 = \{(\langle 0, D_2, 0 \rangle , 0)\}\) and, for any \(j \in \{1,\ldots ,N\}\), let \(\mathcal{Q }_j = \cup _{(e,v) \in \mathcal{Q }_{j-1}} ( q_1(e,v,j) \cup q_2(e,v,j) \cup q_3(e,v,j) )\).

Algorithm 3 constructs exactly the sets \(\mathcal{Q }_j\), \(j \in \{1,\ldots ,N\}\), except for the similar pairs: for each subset of similar pairs, only one of the pairs with the maximal value of \(v\) is kept. Indeed, line 3 of Algorithm 3 clearly adds \(\{q_3(e,v,j): (e,v) \in \mathcal{Q }_{j-1}\}\) to \(\mathcal{Q }_j\), lines 4–8 add \(\{q_1(e,v,j): (e,v) \in \mathcal{Q }_{j-1}\}\) to \(\mathcal{Q }_j\) and lines 9–13 add \(\{ q_2(e,v,j): (e,v) \in \mathcal{Q }_{j-1}\}\) to \(\mathcal{Q }_j\); all these additions are performed while avoiding similar pairs. We show next that \(\mathcal{Q }_N\), when constructed without avoiding similar pairs, contains some pair corresponding to an optimal schedule. Since the removal of similar pairs from \(\mathcal{Q }_j\), \(j = 1, \ldots , N\), keeps a pair of maximal payoff for each \(3\)-tuple, it clearly does not prevent at least one pair corresponding to an optimal schedule from belonging to \(\mathcal{Q }_N\); this will prove that Algorithm 3 returns an optimal payoff.
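The layer-by-layer construction with avoidance of similar pairs can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a transcription of Algorithm 3: the names `add_pair` and `build_layers` are hypothetical, the transition functions are passed in as parameters because \(q_1\), \(q_2\), \(q_3\) are defined in Sect. 3.2, and returning `None` for an infeasible transition is a convention introduced here.

```python
from typing import Callable, Dict, Optional, Sequence, Tuple

State = Tuple[int, int, int]   # the 3-tuple <k1, t2, k2>
Pair = Tuple[State, int]       # (3-tuple, payoff)
Transition = Callable[[State, int, int], Optional[Pair]]


def add_pair(layer: Dict[State, int], e: State, v: int) -> None:
    """Insert (e, v); among similar pairs keep only a maximal payoff."""
    if e not in layer or v > layer[e]:
        layer[e] = v


def build_layers(n_jobs: int, transitions: Sequence[Transition],
                 initial: Pair) -> Dict[State, int]:
    """Build the last layer from the initial pair, one job at a time."""
    layer: Dict[State, int] = {initial[0]: initial[1]}
    for j in range(1, n_jobs + 1):
        nxt: Dict[State, int] = {}
        for e, v in layer.items():
            for q in transitions:
                pair = q(e, v, j)
                if pair is not None:      # None marks an infeasible move
                    add_pair(nxt, pair[0], pair[1])
        layer = nxt
    return layer


# Toy transitions (hypothetical, NOT the paper's q1, q2, q3).
def skip(e: State, v: int, j: int) -> Optional[Pair]:
    return (e, v)

def good(e: State, v: int, j: int) -> Optional[Pair]:
    return ((e[0] + 1, e[1], e[2]), v + 2) if e[0] < 2 else None

def weak(e: State, v: int, j: int) -> Optional[Pair]:
    # Reaches the same 3-tuple as `good` with a smaller payoff: dominated.
    return ((e[0] + 1, e[1], e[2]), v + 1) if e[0] < 2 else None


final = build_layers(3, [skip, good, weak], ((0, 20, 0), 0))
best = max(final.values())
```

Storing each layer as a dictionary keyed by the \(3\)-tuple keeps at most one pair per \(3\)-tuple, which is the property used in the complexity analysis.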

For any ERD-schedule \(S\) where \(S_2\) is a block, we denote by \(f(S)\) the pair \((e,v)\) corresponding to \(S\)’s \(3\)-tuple and payoff respectively.

Let \(S^*\) be an optimal ERD-schedule where \(S^*_2\) is a block. Let us denote by \(\{J_{i_1},\ldots ,J_{i_l}\}\), \(i_1 < i_2 < \cdots < i_l\), the set of jobs of \(S^*\) that complete before \(D_2\). For \(k = 1, \ldots , l\), let \(S^{i_k}\) be the schedule that satisfies all the following conditions, with minimum \(k_1\) and \(k_2\) values (among all the schedules satisfying the same conditions):

1. \(S^{i_k}\) schedules all the jobs \(J_{i_1},\ldots ,J_{i_k}\) before \(D_2\), and all the other jobs after \(D_2\)

2. if \(C_{i_q}(S^*) \le D_1\), then \(C_{i_q}(S^{i_k}) \le D_1\), \(\forall q \in \{1, \ldots , k\}\)

3. if \(D_1 < C_{i_q}(S^*) \le D_2\), then \(D_1 < C_{i_q}(S^{i_k}) \le D_2\), \(\forall q \in \{1, \ldots , k\}\)

4. \(S^{i_k}\) is an ERD-schedule where \(S^{i_k}_2\) is a block

To conclude the proof, we show by induction that, for every \(k \in \{1, \ldots , l\}\), \(f(S^{i_k}) \in \mathcal{Q }_{i_k}\), which implies \(f(S^{i_l}) \in \mathcal{Q }_{i_{l}} \subseteq \mathcal{Q }_{N}\), which indeed proves the theorem, since \(v(S^{i_l}) = v(S^*)\).

First step of the induction. The only pair of set \(\mathcal{Q }_0\) corresponds to a schedule with no jobs before \(D_2\). If \(i_1 > 1\), for all \(i \in \{1,\ldots ,i_1 - 1\}\): \((\langle 0, D_2, 0 \rangle , 0) \in \{ q_3(e,v,i): (e,v) \in \mathcal{Q }_{i-1}\} \subseteq \mathcal{Q }_i\), by definition of \(\mathcal{Q }_i\). Then, there are two cases.

If \(C_{i_1}(S^*) \le D_1\), then, in \(S^{i_1}\), \(J_{i_1}\) completes at its earliest possible completion time \(r_{i_1}+p_{i_1}\), and all the other jobs are executed after \(D_2\). Therefore, \(f(S^{i_1}) = (\langle r_{i_1}+p_{i_1}, D_2, 0 \rangle ,2) = q_1(\langle 0, D_2, 0 \rangle , 0, i_1) \in \{q_1(e,v, i_1): (e,v) \in \mathcal{Q }_{i_1-1}\} \subseteq \mathcal{Q }_{i_1}\).

Otherwise, if \(D_1 < C_{i_1}(S^*) \le D_2\), then, in \(S^{i_1}\), job \(J_{i_1}\) starts at the earliest date that lets it complete in \(]D_1, D_2]\), namely \(\max (r_{i_1},b_{i_1})\). Therefore, \(f(S^{i_1}) = (\langle 0,\max (r_{i_1}, b_{i_1}), \max (r_{i_1}, b_{i_1}) +p_{i_1}\rangle ,1) = q_2(\langle 0, D_2, 0 \rangle , 0, i_1) \in \{ q_2(e,v, i_1): (e,v) \in \mathcal{Q }_{i_1-1}\} \subseteq \mathcal{Q }_{i_1}\).

General step of the induction. Assume now that \(f(S^{i_{j-1}}) \in \mathcal{Q }_{i_{j-1}}\). There are two cases.

If \(C_{i_j}(S^*) \le D_1\), then, in \(S^{i_j}\), job \(J_{i_j}\) must start after both \(k_1\) and \(r_{i_j}\), to satisfy condition 4 and to maintain feasibility. So, the earliest possible starting time of job \(J_{i_j}\) is \(\max (r_{i_j},k_1)\). Moreover, \(S^{i_{j}}_2\) must start not earlier than both the starting time of \(S^{i_{j-1}}_2\) (since \(k_2(S^{i_{j-1}})\) is minimal) and the completion time of \(J_{i_j}\) in \(S^{i_j}\) (to avoid overlaps). Hence, if \(S^{i_{j-1}}_2\) starts before the completion time of \(J_{i_j}\) in \(S^{i_j}\) (i.e., \(\max (r_{i_j},k_1(S^{i_{j-1}})) + p_{i_j} > t_2(S^{i_{j-1}})\)), then \(S^{i_{j}}_2\) must start exactly at the completion time of \(J_{i_j}\) in \(S^{i_j}\). Otherwise, \(S^{i_{j}}_2\) must start at \(t_2(S^{i_{j-1}})\). Hence, \(q_1\) adds \(f(S^{i_{j}})\) to \(\mathcal{Q }_{i_j}\).

Otherwise, if \(D_1 < C_{i_j}(S^*) \le D_2\), then the earliest possible starting time of \(J_{i_j}\) in \(S^{i_j}\) is clearly \(\max (b_{i_j},k_1)\) if \(S^{i_j}_2\) is empty; otherwise, it is \(\max (r_{i_j},k_2)\). Function \(q_2\) places the job precisely at that date and, in order for \(S^{i_j}_2\) to remain a block, it right-shifts all its jobs so that \(S^{i_j}_2\) contains no idle time. Hence, \(f(S^{i_j}) \in \mathcal{Q }_{i_j}\). \(\square \)

Proposition 4

Algorithm 3 computes the optimal payoff of an instance in pseudopolynomial time (\(O(N(D_1 (D_2)^2) + N\log N)\)).

Proof

First, observe that, by lines 8 and 13, no \(\mathcal{Q }_j\) can contain two pairs \((e,v)\) and \((e^{\prime },v^{\prime })\) with \(e = e^{\prime }\). So the number of elements in each \(\mathcal{Q }_j\) is bounded by the number of possible \(3\)-tuples. Clearly, \(k_1\) can only range from \(1\) to \(D_1\) and \(k_2\) from \(D_1 + 1\) to \(D_2\). Let \(b=\min _{i=1,\ldots ,N}b_i\); then \(t_2\) can only range from \(b\) to \(D_2 - 1\). Overall, the number of states in each \(\mathcal{Q }_j\) is bounded by \(X = D_1 \times (D_2 - D_1) \times (D_2 - b)\).

In each iteration of the for loop of lines 2–13, we first copy \(\mathcal{Q }_{j-1}\) into \(\mathcal{Q }_j\), which costs \(O(X)\). Then, in the foreach loop of lines 4–8, we try to reinsert job \(J_j\) into \(S_1\) for each \(3\)-tuple of \(\mathcal{Q }_{j-1}\), which costs \(O(X)\) overall to create the states \(e\) on line 6; by storing \(\mathcal{Q }_j\) as an array or a hash table, updating \(\mathcal{Q }_j\) on line 8 also costs \(O(X)\) overall. The same complexity clearly applies to the foreach loop of lines 9–13. So the complexity of one execution of lines 3–13 is \(O(X) = O(D_1 (D_2)^2)\). The for loop of lines 2–13 is executed \(N\) times. Finally, sorting the jobs in nondecreasing order of release dates can be achieved in \(O(N\log N)\). Overall, the complexity of Algorithm 3 is \(O(N(D_1(D_2)^2) + N\log N)\). \(\square \)
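As a quick check of the last bound, assuming nonnegative integer data so that \(0 \le b\) and \(D_1 \le D_2\), each of the last two factors of \(X\) is at most \(D_2\):

\[ X = D_1 \times (D_2 - D_1) \times (D_2 - b) \le D_1 \times D_2 \times D_2 = D_1 (D_2)^2 . \]

For instance, with the purely illustrative values \(D_1 = 10\), \(D_2 = 20\) and \(b = 0\), we get \(X = 10 \times 10 \times 20 = 2000 \le 10 \times 20^2 = 4000\).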

Appendix 2 Correctness of Algorithm 2

Proposition 3

\(Algo2(0, S)\) yields a feasible schedule \(S^{\prime }\) whose payoff is greater than or equal to \(v(S)\), and in which \(N_1^M\) jobs complete no later than \(D_1\).

Proof

We need to prove the following assertions:

1. The algorithm always terminates.

2. The returned schedule is feasible.

3. In the returned schedule, exactly \(N_1^M\) jobs complete no later than \(D_1\).

4. The returned schedule has a payoff greater than or equal to the payoff of the initial schedule.

Assertion 1: The algorithm always terminates.

When \(Algo2 (0, S^{\prime })\) is first called, Part 1 (lines 4–8) and/or Part 2 (lines 9–15) are executed. If we are in Part 1, the algorithm terminates, as there are no other calls to function \(Algo2\). In Part 2, the algorithm terminates, unless there is a call to \(Algo2(0, S^{\prime })\) (line 14) or \(Algo2(1, S^{\prime })\) (line 15). \(Algo2(0, S^{\prime })\) will cause the execution of Part 1 (since \(n_2 \in \{0, 1\}\)), which will terminate. Therefore we only need to prove that \(Algo2(1, S^{\prime })\) terminates, and more generally that \(Algo2(k, S^{\prime })\) terminates, for \(k > 0\).

Let us first consider the evolution of \({\overline{n}_1}, {n_2}, n_3\) on lines 20–29 and 31–38. At line 22, \(n_3\) increases by \(n_2\), and \(n_2\) becomes equal to \(0\); at line 24, \({\overline{n}_1}\) decreases by \(k-1\), and on lines 26–27 it decreases by \(1\); at line 28, \({\overline{n}_1}\) becomes equal to \(0\); at line 29, \(n_3\) becomes equal to \(0\). As for lines 31–38, we have: at line 34, \(n_3\) increases by \(k+1\), and \(n_2\) decreases by \(k+1\); at line 35, \({\overline{n}_1}\) decreases by \(k-1\); at line 37, \({\overline{n}_1}\) decreases by \(1\); at line 38, \(n_3\) decreases by \(k+1\).

Let us consider all the calls of Algo2 to show that they cannot be executed indefinitely. When \(Algo2(0, S^{\prime })\) is called at line 41, the algorithm stops, since \(n_2 \in \{0, 1\}\). Hence, we only need to examine the calls of \(Algo2(1, S^{\prime })\) on lines 15 and 40, and the call of \(Algo2(k+1, S^{\prime })\) on line 18. The instruction of line 15 is executed at most once, that is at the first call of \(Algo2(0, S^{\prime })\), since the following calls of \(Algo2(0, S^{\prime })\) are executed only if \(n_2 \in \{0, 1\}\). As for the instruction of line 40, it can be executed only while \({\overline{n}_1} > 0\) and \(n_2 \ge 2\), which is a limited number of times, since \({\overline{n}_1}\) and \(n_2\) strictly decrease on lines 20–29 and 31–38. Finally, the instruction \(Algo2(k+1, S^{\prime })\) of line 18 cannot be executed indefinitely, since at some point we will have \(k = {\overline{n}_1}\) or \(k + 1 = n_2\).

Assertion 2: The returned schedule is feasible.

We need to show that each performed operation (LS or RS) is feasible.

Let us examine each part of the pseudocode. We will refer to the different cases considered for the definitions of the \(RS\) and \(LS\) operations earlier in this section.

Part 1.

Line 5: the \(RS(J_i, 1, 3)\) operations are feasible (cf. Case 1). After executing this line, there are no more \(\overline{S}_1^M\)-jobs in \(S^{\prime }_1\), and therefore \(c = 0\).

Line 6: \(RS(J_{\text{ s}}, 2, 3)\) is feasible (cf. Case 1). After executing this line, if there is a straddling job \(J_{\text{ s}}\), \(J_{\text{ s}}\) is an \({S}_1^M\)-job.

Line 7: if there is a straddling job \(J_{\text{ s}}\), the operation \(LS(J_{\text{ s}}, 2, 1)\) is first performed (since \(J_{\text{ s}}\) is an \({S}_1^M\)-job), and then we perform \(LS(J_M, 2, 1)\) for each of the other \({S}_1^M\)-jobs \(J_M\) in \(S^{\prime }_2\). As \(c = 0\) and \(J_{\text{ s}}\) is an \({S}_1^M\)-job, the total sum of the idle times between \(c\) and \(C_{\text{ s}} - p_{\text{ s}}\) is at least \(C_{\text{ s}} - D_1\). Therefore, \(LS(J_{\text{ s}}, 2, 1)\) is feasible (cf. Case 4.(b)). Hence, when \(LS(J_M, 2, 1)\) is performed for each of the non-straddling \({S}_1^M\)-jobs \(J_M\) in \(S^{\prime }_2\), there is no straddling job. Moreover, since \(c = 0\), and all of these jobs are \({S}_1^M\)-jobs, their total processing time is at most the total sum of the idle times in \([c, D_1]\). Therefore, the \(LS(J_M, 2, 1)\) operations are feasible (cf. Case 4.(a)).

Line 8: \(LS(J_M, 3, 1)\) is performed for every \(S_1^M\)-job in \(S^{\prime }_3\). As the reinserted jobs are all \(S_1^M\)-jobs, and since there is no straddling job, and \(c = 0\), the total sum of the idle times in \([c, D_1]\) is at least equal to the total processing time of those jobs. Therefore, the \(LS(J_M, 3, 1)\) operations are feasible (cf. Case 3).

Part 2.

Line 11: \(RS(J_{\text{ s}}, 2, 3)\) is feasible (cf. Case 1). After this operation there is no straddling job. Moreover, \(c\) is unchanged and \(c \le t_{\text{ s}}\).

Line 12: since \(J_i\) is an \(S_1^M\)-job, \(r_i > t_{\text{ s}}\) implies \(p_i \le D_1 - t_{\text{ s}}\). Therefore, if the condition of line 10 is true, \(p_i \le D_1 - t_{\text{ s}}\). Hence, since \(c \le t_{\text{ s}}\), the total amount of idle times in \([c, D_1]\) is greater than \(p_i\). Therefore, since there is no straddling job, \(LS(J_i, 2, 1)\) is feasible (cf. Case 4.(a)).

Part 3.

Lines 20–21: notice that if flag is true, there are exactly \(k+1\) \(S_1^M\)-jobs in \(S^{\prime }_2\) and thus there exists a unique \(E_{k+1}\), which contains all the \(S_1^M\)-jobs in \(S^{\prime }_2\), including \(J_{\text{ s}}\).

Line 22: the \(RS(J_M, 2, 3)\) operations are feasible (cf. Case 1). If flag is true, there is no straddling job after these operations. If flag is false, it is possible that there is a straddling job \(J_{\text{ s}}\) after these operations: in this case, \(J_{\text{ s}}\) is an \(\overline{S}_1^M\)-job.

Line 23: \(RS(J_{\text{ s}}, 2, 3)\) is feasible (cf. Case 1). This operation is never performed if flag is true. After line 23 there is no straddling job.

Lines 24–27: there are two cases, depending on the value of flag.

- If flag is false, we perform for all the jobs \(J_j\) of \(G_k\): \(RS(J_j, 1, 2)\) (lines 24 and 27). As flag is false, at least \(k+1\) non-straddling jobs were moved to \(S^{\prime }_3\) at line 22. Let us consider \(E_{k+1}\) a set of \(k+1\) non-straddling jobs that were moved to \(S^{\prime }_3\) at line 22. So, after lines 22 and 23, the total sum of the idle times in \(S^{\prime }_2\) is at least equal to \(\sum _{J_i \in E_{k+1}} p_i\). Moreover, by induction hypothesis, \(\sum _{J_j \in G_k} p_j < \sum _{J_i \in E_{k+1}} p_i\). Therefore, the total sum of the idle times in \(S^{\prime }_2\) is at least equal to \(\sum _{J_j \in G_k} p_j\). We deduce (cf. Case 2) that \(RS(J_j, 1, 2)\) is feasible for all the jobs \(J_j\) of \(G_k\).

- If flag is true, we perform \(RS(J_j, 1, 2)\) (line 24) only for the jobs of \(G_{k-1}\), while for the unique job \(J_k\) of \(G_k \backslash G_{k-1}\) we perform \(RS(J_k, 1, 3)\) (line 26). As flag is true, exactly \(k+1\) jobs were moved to \(S^{\prime }_3\) at line 22, one of them being \(J_{\text{ s}}\). Therefore, exactly \(k\) non-straddling jobs were moved to \(S^{\prime }_3\) at line 22. Let \(E_k\) be the set of those jobs. Moreover, the instruction of line 23 is not performed, since \(RS(J_{\text{ s}}, 2, 3)\) is already performed at line 22. So, after lines 22 and 23, the total sum of the idle times in \(S^{\prime }_2\) is at least equal to \(\sum _{J_i \in E_{k}} p_i\). By induction hypothesis, \(\sum _{J_j \in G_{k-1}} p_j < \sum _{J_i \in E_{k}} p_i\). Therefore, the total sum of the idle times in \(S^{\prime }_2\) is at least equal to \(\sum _{J_j \in G_{k-1}} p_j\). We deduce (cf. Case 2) that \(RS(J_j, 1, 2)\) is feasible for all the jobs \(J_j\) of \(G_{k-1}\). Finally, the operation \(RS(J_k, 1, 3)\) at line 26 is feasible (cf. Case 1).

Line 28: the \(RS(J_i, 1, 3)\) operations are feasible (cf. Case 1). After these operations, there are no more \(\overline{S}_1^M\)-jobs in \(S^{\prime }_1\), therefore \(c = 0\).

Line 29: \(LS(J_M, 3, 1)\) is performed for every \(S_1^M\)-job in \(S^{\prime }_3\). As the reinserted jobs are all \(S_1^M\)-jobs, and since there is no straddling job, and \(c = 0\), the total sum of the idle times in \([c, D_1]\) is at least equal to the total processing time of those jobs. Therefore, the \(LS(J_M, 3, 1)\) operations are feasible (cf. Case 3).

Part 4.

Recall that the following condition is observed: \(\exists \) \( E_{k+1}\), \(\sum _{J_j \in G_k} p_j \ge \sum _{J_i \in E_{k+1}} p_i\).

Lines 31–33: if the condition of line 31 is true, then we have that for all \(J_i \in E_{k+1}, r_i \le t_{\text{ s}}\) and \(p_i > D_1 - t_{\text{ s}}\), because of the instructions of lines 10–12. Indeed, none of the operations performed in the algorithm transforms a schedule without a straddling job into a schedule with a straddling job, as can be seen in the definitions of the left-shift and right-shift operators. Therefore, any job \(J_i\) of \( E_{k+1}\) can be rescheduled to start at time \(t_{\text{ s}}\), by right-shifting \(J_{\text{ s}}\). After this exchange, \(J_i\) is the actual straddling job.

Line 34: the \(RS(J_i, 2, 3)\) operations are feasible (cf. Case 1). After executing these operations there is no straddling job.

Line 35: since at least \(k\) non-straddling jobs were moved to \(S^{\prime }_3\) at line 34, the total sum of the idle times in \(S^{\prime }_2\) is at least equal to \(\sum _{J_i \in E_{k}} p_i\), with \(E_k\) a set of \(k\) non-straddling jobs moved to \(S^{\prime }_3\) at line 34. By induction hypothesis, \(\sum _{J_j \in G_{k-1}} p_j < \sum _{J_i \in E_{k}} p_i\). Therefore, performing \(RS(J_j, 1, 2)\) on every job \(J_j\) of \(G_{k-1}\) is feasible (cf. Case 2).

Line 37: \(RS(J_k, 1, 3)\) is feasible (cf. Case 1).

Line 38: at lines 35–37, all the jobs of \(G_k\) were removed from \(S^{\prime }_1\). Therefore, the total amount of idle times in \([c, D_1]\) is at least \(\sum _{J_j \in G_{k}} p_j\). As \(\sum _{J_j \in G_k} p_j \ge \sum _{J_i \in E_{k+1}} p_i\), the total amount of idle times in \([c, D_1]\) is at least \(\sum _{J_i \in E_{k+1}} p_i\). For this reason, and since there is no straddling job, we deduce that performing \(LS(J_i, 3, 1)\) on every job \(J_i\) of \(E_{k+1}\) is feasible (cf. Case 3).

Assertion 3: In the returned schedule, exactly \(N_1^M\) jobs complete no later than \(D_1\).

As the terminal condition \({\overline{n}_1} = n_2 + n_3\) implies that exactly \(N_1^M\) jobs complete no later than \(D_1\), it is sufficient to prove that whenever the algorithm terminates, the terminal condition is true.

In Part 1, at line 5, \({\overline{n}_1}\) becomes equal to \(0\), at line 7, \(n_2\) becomes equal to \(0\), and at line 8, \(n_3\) becomes equal to \(0\). Therefore, the terminal condition is true.

In Part 2, the algorithm stops only if the condition \({\overline{n}_1} < n_2 + n_3\) (line 13) is not satisfied, which implies that the terminal condition is true, as we cannot have \({\overline{n}_1} > n_2 + n_3\); otherwise, there would be a contradiction on \(N_1^M\) being the maximal number of jobs that can complete no later than \(D_1\).

If Part 3 terminates (lines 20–29), we have that \({\overline{n}_1} = n_2 = n_3 = 0\), as shown in the proof of Assertion 1. Therefore the terminal condition is true.

Finally, Part 4 terminates only if the condition \({\overline{n}_1} < n_2 + n_3\) (line 39) is not satisfied, which, as said above, implies that the terminal condition is true.

Assertion 4: The returned schedule has a payoff greater than or equal to the payoff of the initial schedule.

In order to prove this assertion, we show that the execution of any aforementioned part does not decrease the payoff. Let \(\overline{n}_1^b\) (resp. \(n_2^b\), \(n_3^b\)) be the value of \(\overline{n}_1\) (resp. \(n_2\), \(n_3\)) at the beginning of the sequence of instructions of a given part. Let us consider each part.

- lines 5–8: at line 5, \(\overline{n}_1^b\) operations \(RS(J_i, 1, 3)\) are performed, inducing a payoff variation of \(-2 \overline{n}_1^b\); if \(RS(J_{\text{ s}}, 2, 3)\) is performed at line 6, it induces a payoff variation of \(-1\); the \(n_2^b\) \(LS(J_M, 2, 1)\) operations of line 7 induce a payoff variation of \(n_2^b\); and the \(n_3^b\) \(LS(J_M, 3, 1)\) operations of line 8 induce a payoff variation of \(2 n_3^b\). Thus, the total payoff variation is at least \(n_2^b + 2 n_3^b -2 \overline{n}_1^b -1\). If \(\overline{n}_1^b = 0\), the payoff variation is at least \(n_2^b + 2 n_3^b -1 \ge 0\), as \(0 = \overline{n}_1^b < n_2^b + n_3^b\). Otherwise (i.e., \(\overline{n}_1^b > 0\)), if \(n_2^b = 0\), then \(\overline{n}_1^b < n_2^b + n_3^b = n_3^b\) and therefore \(n_2^b + 2 n_3^b -2 \overline{n}_1^b -1 \ge 0\); else (i.e., \(n_2^b = 1\)), \(\overline{n}_1^b < n_3^b + 1\). Thus, \(2 n_3^b \ge 2 \overline{n}_1^b\). Therefore, \(n_2^b + 2 n_3^b -2 \overline{n}_1^b -1 \ge 0\).

- lines 11–12: the \(RS(J_{\text{ s}}, 2, 3)\) operation at line 11 induces a payoff variation of \(-1\), while the \(LS(J_i, 2, 1)\) operation at line 12 induces a payoff variation of \(1\). Therefore, the total payoff variation is \(0\).

- lines 20–29: at line 22, we perform \(n_2^b\) times the operation \(RS(J_M, 2, 3)\) (payoff variation: \(-n_2^b\)). If flag is true, then there is no straddling job after the operation of line 22. In this case, at line 24, we perform \(k-1\) times the operation \(RS(J_j, 1, 2)\) (payoff variation: \(-k+1\)), and at line 26, the operation \(RS(J_k, 1, 3)\) (payoff variation: \(-2\)). Otherwise, if flag is false, the operation \(RS(J_{\text{ s}}, 2, 3)\) at line 23 can possibly be performed (payoff variation: \(-1\)); and \(k\) \(RS(J_j, 1, 2)\) operations (payoff variation: \(-k\)) are performed at lines 24 and 27. Finally, at line 28 we perform \(\overline{n}_1^b-k\) times the operation \(RS(J_i, 1, 3)\) (payoff variation: \(-2(\overline{n}_1^b - k)\)); and at line 29 we perform \((n_2^b + n_3^b)\) times \(LS(J_M, 3, 1)\) (payoff variation: \(2(n_2^b + n_3^b)\)). Therefore, the total payoff variation is at least \(2 n_3^b + n_2^b +k -2 \overline{n}_1^b -1\). If \(\overline{n}_1^b = k\), then the payoff variation is at least \(2 n_3^b + n_2^b - \overline{n}_1^b -1 \ge 0\), as \(\overline{n}_1^b < n_2^b + n_3^b\). If \(n_2^b = k + 1\), then the payoff variation is at least \(2(n_3^b + n_2^b - \overline{n}_1^b -1) \ge 0\), as \(\overline{n}_1^b < n_2^b + n_3^b\).

- lines 31–38: the exchange performed in lines 31–33 does not change the payoff; at line 34, we perform \(k+1\) times the operation \(RS(J_i, 2, 3)\) (payoff variation: \(-k-1\)); at line 35, we perform \((k-1)\) times the operation \(RS(J_j, 1, 2)\) (payoff variation: \(-k + 1\)); at line 37, we perform \(RS(J_k, 1, 3)\) (payoff variation: \(-2\)); and at line 38, \((k + 1)\) times the operation \(LS(J_i, 3, 1)\) (payoff variation: \(2(k + 1)\)). Therefore, the total payoff variation is \(0\). \(\square \)
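The payoff bookkeeping used for Assertion 4 can be replayed mechanically. The Python sketch below is an illustration only: the function names are hypothetical, and the per-part payoffs (2 for a job in \(S_1\), 1 in \(S_2\), 0 in \(S_3\)) are inferred from the payoff variations quoted above rather than taken from a stated definition.

```python
# Payoff of one job depending on the part of the schedule it belongs to
# (inferred from the variations -2, -1, +1, +2 quoted in the proof).
ZONE_PAYOFF = {1: 2, 2: 1, 3: 0}


def move(src: int, dst: int) -> int:
    """Payoff variation of one RS/LS operation moving a job from S_src to S_dst."""
    return ZONE_PAYOFF[dst] - ZONE_PAYOFF[src]


def part4_variation(k: int) -> int:
    """Total payoff variation of lines 34-38."""
    return ((k + 1) * move(2, 3)      # line 34: k+1 times RS(J_i, 2, 3)
            + (k - 1) * move(1, 2)    # line 35: k-1 times RS(J_j, 1, 2)
            + move(1, 3)              # line 37: RS(J_k, 1, 3)
            + (k + 1) * move(3, 1))   # line 38: k+1 times LS(J_i, 3, 1)


def part3_variation(k: int, n1: int, n2: int, n3: int, flag: bool) -> int:
    """Lower bound on the payoff variation of lines 20-29."""
    total = n2 * move(2, 3)                          # line 22
    if flag:
        total += (k - 1) * move(1, 2) + move(1, 3)   # lines 24 and 26
    else:
        # Worst case: the straddling job is also moved at line 23.
        total += move(2, 3) + k * move(1, 2)         # lines 23, 24 and 27
    total += (n1 - k) * move(1, 3)                   # line 28
    total += (n2 + n3) * move(3, 1)                  # line 29
    return total


def part3_closed_form(k: int, n1: int, n2: int, n3: int) -> int:
    """Closed form stated in the proof: 2*n3 + n2 + k - 2*n1 - 1."""
    return 2 * n3 + n2 + k - 2 * n1 - 1
```

Under these assumptions, `part4_variation(k)` evaluates to 0 for every `k`, and both values of `flag` in `part3_variation` agree with the closed form of the proof.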

Cite this article

Seddik, Y., Gonzales, C. & Kedad-Sidhoum, S. Single machine scheduling with delivery dates and cumulative payoffs. J Sched 16, 313–329 (2013). https://doi.org/10.1007/s10951-012-0302-0

DOI: https://doi.org/10.1007/s10951-012-0302-0