Abstract

We present a statistical mechanical model for critical currents which is successful in describing both the in-plane and out-of-plane magnetic field angle dependence of J c . This model is constructed using the principle of maximum entropy, that is, by maximizing the information entropy of a distribution, subject to constraints. We show the same approach gives commonly assumed forms for J c (B) and J c (T). An expression for two or more variables, e.g. J c (B,T), therefore, follows the laws of probability for a joint distribution. This gives a useful way to generate predictions for J c (B,T) for the DC critical current in wires, cables or coils.

Similar content being viewed by others

1 Introduction

In science, we create models to describe reality. Examples are Newton’s laws, Maxwell’s equations, and in superconductivity, the critical state model and the Ginzburg–Landau equations. In using these models we are often confronted with the problem that we lack the detailed information required to calculate the properties of a system. In superconductors, for example, we do not know the physical details of the pinning landscape. This landscape consists of defects of different location, size, shape, and chemistry. To this physical environment, we add vortices interacting with the pinning landscape and each other. The details of these interactions throughout the material are unknown to us. In summary, we do not have all the information required to calculate the measured properties.

A way around this problem is to employ information theory which provides a formalism for how we should model a system given limited information [1–5]. Here, we use a well-known variational method of information theory—maximum entropy inference—to create a model. This method, sometimes known as MaxEnt, is originally due to Jaynes [1, 2] and has been successfully used to model complex phenomena in numerous branches of science and engineering [4–7].

2 Method

We describe a measured property of a system using the distribution which maximizes the information entropy, subject to the known constraints expressed as expectation values. The expression for the Shannon information entropy is, in continuous form, S I =−∫f(x)ln(f(x)) dx. This is maximized using constraints of the form 〈g(x)〉=∫f(x)g(x) dx=const. This is proved to give the least biased distribution among all possible distributions [1–7]. We emphasize this is not the equilibrium thermodynamic entropy of our superconductor sample. It is the entropy of a distribution for an imagined ensemble of samples uniformly covering the domain of the variable we are modeling.

Commonly S I is thought of as a measure of the multiplicity of microstates or a measure of the “missing information” required to specify the microstate. The method guarantees that if we include all the constraints operating in the experiment, then the distribution predicted through maximizing S I is overwhelmingly the most likely to be observed experimentally. As we cannot be sure of the constraints a priori, we assume simple constraints then proceed to fit the derived distribution to our experimental data. If this distribution accurately describes the data then we have found a valid model. If the distribution does not fit our data, then other constraints exist or we have used the wrong constraints. Therefore, even a failure of the procedure is valuable—a failure means we should reassess our constraints and the discrepancy between data and model can help us to uncover new physics [3].

3 Results

3.1 Field Angle Dependence of J c

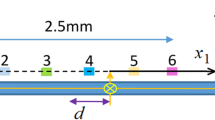

For a planar sample lying in the xy plane, with current direction \(\vec{J} = J_{x}\), we define θ as the angle of the applied magnetic field in the yz plane, and ϕ the angle in the xy plane. Using ψ∈{θ,ϕ} for either angle, we maximize \(S = - \int_{0}^{\pi } f(\psi)\ln(f(\psi))\,d\psi\) where J c (ψ)=J 0 f(ψ), with ∫f(ψ) dψ=1, using simple constraints. That is, we make no attempt to predict the magnitude of the critical current, only the angle dependence. If there are no constraints on the Shannon entropy we obtain a uniform distribution,

To connect this model with a physical picture we could consider f(ψ) as the distribution of the probability that a vortex chosen at random, with the field at an angle ψ, is pinned. The missing information being maximized is the information of which vortices are pinned and which are not pinned across an ensemble of systems covering all angles. The reality of this picture however, is not necessary to our procedure and it is not part of the method; we do not have to know any of the physical details of our microstates to apply the method—in fact the object of the method is the opposite, to adopt maximum ignorance of the microscopic details.

Equation (1a) is incomplete as a model as it is well known that peaks occur in J c (ψ) due to interactions with correlated defects. We consider the case that these correlated defects align with the crystal axes and therefore the Cartesian axes. It is therefore the constraints on the distributions in the Cartesian variables, which are the relevant information. As θ=tan−1(y/z) and ϕ=tan−1(x/y), we construct a distribution f(ψ) by first constructing f(y/z) or f(x/y) and then transforming from the Cartesian to the angular variable. That is, f Ψ (ψ)=f Y/Z (h(ψ))| dh(ψ)/dψ|, with h(ψ)=tanψ=y/z etc. We try simple constraints or equivalently, entropy maximizing distributions for f(y/z) and f(x/y). We choose a Gaussian distribution which has the constraints of a finite mean and variance, and a (truncated) Lorentzian which has only the constraint of a finite variance [8]. These are the simplest symmetric choices for the constraints on f(y/z) or f(x/y) with support −∞<0<∞. We then obtain for J c (ψ), for a peak centered at ψ=π/2

where the σ,γ are scale parameters deriving from the Cartesian distributions. We call Eq. (1b) the angular-Gaussian function, and Eq. (1c) the angular-Lorentzian function. These equations were first derived in [9, 10]. For a physical interpretation of these equations, based on a model of the pinned vortex as a random walk which interacts with correlated pinning; see [10].

The use of the same equations for the variable Lorentz force configuration seems perplexing at first. However, unless the experimental conditions can change the constraints for p(x/y) the equations must be the same in both yz and xy planes. It is difficult to see how this change of constraints could occur. In Fig. 1, we show four datasets for J c (θ) and J c (ϕ). Figure 1a is J c (θ) for Bi-2223 wire, and Fig. 1b is J c (ϕ) for a YBCO film. The data is fitted with a combination of Eqs. (1a) and (1c)—the peaks have the angular-Lorentzian shape. It is clear we have the same shape in both cases. In Figs. 1c and 1d, we show J c (θ) and J c (ϕ) data for samples with much more complex defect structures. To fit this data requires a combination of multiple angular-Gaussian functions reflecting the multiple distinct defect populations affecting pinning. The fitting parameters are listed in Table 1. The absolute J 0 values are arbitrary. For angular data it is typical to require mixture distributions—it is unlikely the broadening of a single peak will explain J c over all angles. Further evidence showing how these equations fit a wide variety of experimental data is given in [9–11]. Our ability to describe a wide range of data shows we have the correct constraints and the correct maximum entropy distributions.

3.2 Field and Temperature Dependence of J c

J c (B) is measured from zero external field to the irreversibility field, B irr, where the critical current goes to zero. We define B=B self-field+B ext, J c (B)=J 0 f(B) with ∫f(B) dB=1. We then maximize \(S = - \int_{0}^{\mathrm{Birr}} f(B)\*\ln(f(B))\,dB\) subject to constraints.

The most common constraints for a distribution on a finite domain are that there exist expectation values for the geometric means, 〈lnB〉 and 〈ln(B irr−B)〉. If we maximize the Shannon entropy using these constraints, we obtain a beta distribution, and we immediately have

where \(\operatorname{Beta}(\alpha,\beta)\) is the beta function, α,β>0 [4]. We note that multiplying Eq. (2) by B we form a normalized plot, (sometimes called a Kramer plot), which is the equivalent of flux pinning force v. field for \(\vec{B} \bot\vec{J}\). This is also a beta distribution with α→α+1. This new equation, F p ∝B α(1−(B/B irr))β−1, has been known for the past 40 years to fit experimental data for superconductors [15–17]. This includes accurate fitting of data for LTS and HTS samples. Because we have good correspondence with the data, we know we have found the correct constraints.

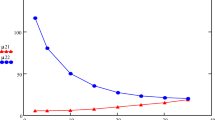

One circumstance which is known to occur [16, 17] in which Eq. (2) fails in the form, F p ∝B α(1−(B/B irr))β−1 is when the data is better fitted with two or more components, i.e., a mixture distribution, F p =∑ i w i F i . This suggests the sample has physical populations of pinning defects which are sufficiently different in their properties that they are resolved as distinct statistical populations. In Fig. 2, we show two datasets, the first showing the typical flux pinning force curve of the Kramer form, the second showing a sample with the “peak effect”—a second maximum in critical current, which can be modeled using a two component mixture distribution of beta distributions.

The physical interpretation of the logarithmic constraints for a finite domain is unclear. We refer the reader to Jaynes [18] who showed how the Beta distribution emerges in the context of Bayesian statistics if the correct ignorance prior is assumed. The physical issue is that it is unclear how our probabilities relate to B, whereas in the angular case it was clear how the probabilities related to lengths. This is a common situation—we often get power law distributions in multiscale systems, e.g. financial and biological systems, but the exact physical origin of these power laws is elusive. The power law E–I characteristic of superconductors is itself an example of this effect.

We now turn to the temperature dependence, J c (T), which is of particular relevance for HTS samples. Again our data falls on a finite domain 0<T<T c . We again assume logarithmic constraints and therefore directly obtain

For temperature dependent data in superconductors, the form J c ∼(1−(T/T c ))p is commonly employed in experimental analysis [19, 20]. Thus it seems we have γ=1 in most, if not all, observed data. Again it may be that we have mixture distributions for samples with complex defect structures.

3.3 J c Expressions for Two or More Variables

If we wish to construct a function of two or more variables, then this must be done using the rules of probability for joint distributions. For example, to construct J c (T,B)=J 0 f(T,B), we are asking for the probability that both T and B are “true.” We therefore use Bayes’ rule in either inversion

where f(T|B) is the conditional probability for T, given B. The marginal probability f(T) is given by f(T)=∫f(T,B) dB. For example, the conditional probability may be of the form f(T|B)∝(1−(T/T c (B)))α(B). The field dependent parameters T c (B) and α(B) need to be found from data fitting and will themselves be maximum entropy forms, in this case beta distributions.

In many texts on superconductors expressions for joint variables are formed from simple products of expressions for single variables [15]. This is well known to lead to errors, and the information theoretic formalism we have adopted shows how to avoid these problems.

4 Conclusions

Using maximum entropy inference is a new approach to understanding the electromagnetic properties of superconductors. On first encounter, it seems we are “getting something for nothing,” which is often the illusion with maximum entropy models. The maximum entropy method exploits the fact that, by some extremely large factor, there are more states of our ensemble of systems which produce the behavior described by the maximum entropy expressions than states which produce any other behavior. This formalism can be extended to any measured property of the system, including critical currents, irreversibility fields and AC losses. It also applies to measurements in the flux flow regime, and is scalable in the sense of applying to wires, cables or coils. The fundamental criterion is only that we have a reproducible experiment. By taking into account our ignorance of microscopic details, maximum entropy inference constructs models which fundamentally only depend on logic as expressed through the laws of probability. They are thus in a sense more fundamental than our “models of reality”—critical state models, Ginzburg–Landau equations, or the Bardeen–Cooper–Schreiffer theory, and hence apply without modification to any Type II superconductor.

References

Jaynes, E.T.: Phys. Rev. 106, 620 (1957)

Jaynes, E.T.: Phys. Rev. 108, 171 (1957)

Jaynes, E.T.: In: Levine, R.D., Tribus, M. (eds.) The Maximum Entropy Formalism, pp. 15–118. MIT Press, Cambridge (1978)

Kapur, J.N.: Maximum-Entropy Models in Science and Engineering, New Age, 2nd revised edn. (2009)

Cover, T.M., Thomas, J.A.: Elements of Information Theory, 2nd edn. Wiley Series in Telecommunications and Signal Processing (2006)

Banavar, J.R., Maritan, A., Volkov, I.: J. Phys. Condens. Matter 22, 063101 (2010)

Caticha, A.: Lectures on probability, entropy, and statistical physics. Max-Ent 2008 Sao Paulo, Brazil. arXiv:0808.0012

Carazza, B.: J. Phys. A, Math. Gen. 9, 1069 (1976)

Long, N.J., Strickland, N., Talantsev, E.: IEEE Trans. Appl. Supercond. 17, 3684 (2007)

Long, N.J.: Supercond. Sci. Technol. 21, 025007 (2008)

Wimbush, S.C., Long, N.J.: New J. Phys. 14, 083017 (2012)

Clem, J.R., Weigand, M., Durrell, J.H., Campbell, A.M.: Supercond. Sci. Technol. 24, 062002 (2011)

Harrington, S.A., et al.: Nanotechnology 21, 095604 (2010)

Durrell, J.H., et al.: Phys. Rev. Lett. 90, 247006 (2003)

Matsushita, T.: Flux Pinning in Superconductors. Springer, Berlin (2006)

Campbell, A.M., Evetts, J.E.: Critical Currents in Superconductors. Taylor & Francis, London (1972)

Varanasi, C.V., Barnes, P.N.: In: Bhattacharya, R., Paranthaman, M.P. (eds.) High Temperature Superconductors. Wiley–VCH, Weinheim (2010)

Jaynes, E.T.: Probability Theory, the Logic of Science. Cambridge University Press, Cambridge (2003)

Jung, J., Yan, H., Darhmaoui, H., Abdelhadi, M., Boyce, B., Lemberger, T.: Supercond. Sci. Technol. 12, 1086–1089 (1999)

Albrecht, J., Djupmyr, M., Bruck, S.: J. Phys. Condens. Matter 19, 216211 (2007)

Acknowledgements

The author acknowledges discussions with S.C. Wimbush and other members of the superconductivity team at IRL. Thanks to N.W. Ashcroft, Cornell University, for comments on an earlier version of this paper.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Long, N.J. A Statistical Mechanical Model of Critical Currents in Superconductors. J Supercond Nov Magn 26, 763–767 (2013). https://doi.org/10.1007/s10948-012-2063-6

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10948-012-2063-6