Abstract

Locally adaptive differential frames (gauge frames) are a well-known effective tool in image analysis, used in differential invariants and PDE-flows. However, at complex structures such as crossings or junctions, these frames are not well defined. Therefore, we generalize the notion of gauge frames on images to gauge frames on data representations \(U:\mathbb {R}^{d} \rtimes S^{d-1} \rightarrow \mathbb {R}\) defined on the extended space of positions and orientations, which we relate to data on the roto-translation group SE(d), \(d=2,3\). This allows to define multiple frames per position, one per orientation. We compute these frames via exponential curve fits in the extended data representations in SE(d). These curve fits minimize first- or second-order variational problems which are solved by spectral decomposition of, respectively, a structure tensor or Hessian of data on SE(d). We include these gauge frames in differential invariants and crossing-preserving PDE-flows acting on extended data representation U and we show their advantage compared to the standard left-invariant frame on SE(d). Applications include crossing-preserving filtering and improved segmentations of the vascular tree in retinal images, and new 3D extensions of coherence-enhancing diffusion via invertible orientation scores.

Similar content being viewed by others

1 Introduction

Many existing image analysis techniques rely on differential frames that are locally adapted to image data. This includes methods based on differential invariants [33, 39, 50, 60], partial differential equations [38, 60, 71], and non-linear and morphological scale spaces [12, 13, 72], used in various image processing tasks such as tracking and line detection [6], corner detection and edge focussing [8, 39], segmentation [66], active contours [15, 16], DTI data processing [45, 46], feature-based clustering, etc. These local coordinate frames (also known as ‘gauge frames’ according to [10, 33, 39]) provide differential frames directly adapted to the local image structure via a structure tensor or a Hessian of the image. Typically the structure tensor (based on first-order Gaussian derivatives) is used for adapting to edge-like structures, while the Hessian (based on second-order Gaussian derivatives) is used for adapting to line-like structures. The primary benefit of the gauge frames is that they allow to include adaptation for anisotropy and curvature in a rotation and translation invariant way. See Fig. 1, where we have depicted local adaptive frames based on eigenvector decomposition of the image Hessian at some given scale, of the MR image in the background.

Left locally adaptive frames (gauge frames) in the image domain computed as the eigenvectors of the Hessian of the image at each location. Right such gauge frames can be used for adaptive anisotropic diffusion and geometric reasoning. However, at complex structures such as blob-structures/crossings, the gauge frames are ill defined causing fluctuations

It is sometimes problematic that such locally adapted differential frames are directly placed in the image domain \(\mathbb {R}^{d}\) \((d=2,3)\), as at the vicinity of complex structures, e.g., crossings, textures, bifurcations, one typically requires multiple local spatial coordinate frames. To this end, one effective alternative is to extend the image domain to the joint space of positions and orientations \(\mathbb {R}^{d} \rtimes S^{d-1}\). The advantage is that it allows to disentangle oriented structures involved in crossings, and to include curvature, cf. Fig. 2. Such extended domain techniques rely on various kinds of lifting, such as coherent state transforms (also known as invertible orientation scores) [2, 6, 27, 34], continuous wavelet transforms [6, 23, 27, 63], orientation lifts [11, 73], or orientation channel representations [32]. In case one has to deal with more complex diffusion-weighted MRI techniques, the data in extended position orientation domain can be obtained after a modeling procedure as in [1, 65, 67, 68]. In this article we will not discuss in detail on how such a new image representation or lift \(U:\mathbb {R}^{d} \rtimes S^{d-1} \rightarrow \mathbb {R}\) is to be constructed from gray-scale image \(f:\mathbb {R}^{d} \rightarrow \mathbb {R}\), and we assume it to be a sufficiently smooth given input. Here \(U({\mathbf{x }},{\mathbf{n }})\) is to be considered as a probability density of finding a local oriented structure (i.e., an elongated structure) at position \({\mathbf{x }} \in \mathbb {R}^{d}\) with orientation \({\mathbf{n }} \in S^{d-1}\).

We aim for adaptive anisotropic diffusion of images which takes into account curvature. At areas with low orientation confidence (in blue) isotropic diffusion is required, whereas at areas with high orientation confidence (in red) anisotropic diffusion with curvature adaptation is required. Application of locally adaptive frames in the image domain suffers from interference (3rd column), whereas application of locally adaptive frames in the domain \(\mathbb {R}^{d} \rtimes S^{d-1}\) allows for adaptation along all the elongated structures (4th column) (Color figure online)

When processing data in the extended position orientation domain, it is often necessary to equip the domain with a structure that links the data across different orientation channels, in such a way that a notion of alignment between local orientations is taken into account. This is achieved by relating data on positions and orientations to data on the roto-translation group \(SE(d)=\mathbb {R}^{d} \rtimes SO(d)\). This idea resulted in contextual image analysis methods [4, 17, 20, 26, 27, 30, 53, 63, 64, 70, 73] and appears in models of low-level visual perception and their relation with the functional architecture of the visual cortex [5, 11, 19, 49, 57–59]. Following the conventions in [30], we denote functions on the coupled space of positions and orientations by \(U:\mathbb {R}^d\rtimes S^{d-1}\rightarrow \mathbb {R}\). Then, its extension \(\tilde{U}:SE(d)\rightarrow \mathbb {R}\) is given by

for all \({\mathbf{x }}\in \mathbb {R}^d\) and all rotations \({\mathbf{R }}\in SO(d)\), and given reference axis \({\mathbf{a }} \in S^{d-1}\). Throughout this article \({\mathbf{a }}\) is chosen as follows:

Then, we can identify the joint space of positions and orientations \(\mathbb {R}^d\rtimes S^{d-1}\) by

where this quotient structure is due to (1), and where \(SO(d-1)\) is identified with all rotations on \(\mathbb {R}^d\) that map reference axis \({\mathbf{a }}\) onto itself. Note that in Eq. (1) the tilde indicates we consider data on the group instead of data on the quotient. If \(d=2\), the tildes can be ignored as \(\mathbb {R}^2\rtimes S^1 =SE(2)\). However, for \(d\ge 3\) this distinction is crucial and necessary details on (3) will follow in the beginning of Sect. 6.

In this article, our quest is to find locally optimal differential frames in SE(d) relying on similar Hessian- and/or structure-tensor type of techniques for gauge frames on images, recall Fig. 1. Then, the frames can be used to construct crossing-preserving differential invariants and adaptive diffusions of data in SE(d). In order to find these optimal frames, our main tool is the theory of curve fits. Early works on curve fits have been presented [37, 54] where the notion of curvature consistency is applied to inferring local curve orientations, based on neighborhood co-circularity continuation criteria. This approach was extended to 2D texture flow inference in [7], by lifting images in position and orientation domain and inferring multiple Cartan frames at each point. Our work is embedded in a Lie group framework where we consider the notion of exponential curve fits via formal variational methods. Exponential curves in the SE(d)-curved geometry are the equivalents of straight Footnote 1 lines in the Euclidean geometry. If \(d=2\), the spatial projection of these exponential curves are osculating circles, which are used for constructing the curvature consistency in [54], for defining the tensor voting fields in [52], and for local modeling association fields in [19]. If \(d=3\), the spatial projection of exponential curves are spirals with constant curvature and torsion. Based on co-helicity principles, similar spirals have been used in neuroimaging applications [61] or for modeling heart fibers [62]. In these works curve fits are obtained via efficient discrete optimization techniques, which are beyond the scope of this article.

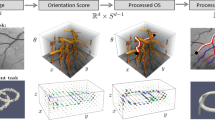

In Fig. 3, we present an example for \(d=2\) of the overall pipeline of including locally adaptive frames in a suitable diffusion operators \(\varPhi \) acting in the lifted domain \(\mathbb {R}^{2}\rtimes S^1\). For \(d>2\) the same pipeline applies. Here, an exponential curve fit \(\gamma ^{{\mathbf{c }}^*}_{g}(t)\) (in blue, with spatial projection in red) at a group element \(g\in SE(d)\) is characterized by \((g, {\mathbf{c }}^{*}(g))\), i.e., a starting point g and an tangent vector \({\mathbf{c }}^{*}(g)\) that should be aligned with the structures of interest. In essence, this paper explains in detail how to compute \({\mathbf{c }}^{*}(g)\) as this will be the principal direction the differential frame will be aligned with, and then gives appropriate conditions for fixing the remaining directions in the frame.

The overall pipeline of image processing \(f \mapsto \varUpsilon f\) via left-invariant operators \(\varPhi \). In this pipeline we construct an invertible orientation score \(W_\psi f\) (Sect. 7.1), we fit an exponential curve (Sect. 5,6), we obtain the gauge frame (Sect. 3 and Appendix 1), we construct a non-linear diffusion, and finally we apply reconstruction (Sect. 7.1). The main focus of this paper is curve fitting, where we compute per element \(g=(x,y,\theta )\) an exponential curve fit \(\gamma ^{{\mathbf{c }}^*}_{g}(t)\) (in blue, with spatial projection in red) with tangent \(\dot{\gamma }_g^{{\mathbf{c }}^{*}}(0)={\mathbf{c }}^{*}(g)=(c^1,c^2,c^3)^T\) at g. Based on this fit we construct for each g, a local frame \(\{\left. \mathcal {B}_{1}\right| _{g}, \left. \mathcal {B}_{2}\right| _{g},\left. \mathcal {B}_{3}\right| _{g}\}\) which are used in our operators \(\varPhi \) on the lift (here \(\varPhi \) is a non-linear diffusion operator) (Color figure online)

The main contribution of this article is to provide a general theory for finding locally adaptive frames in the roto-translation group SE(d), for \(d=2,3\). Some preliminary work on exponential curve fits of the second order on SE(2) has been presented in [34, 35, 63]. In this paper we formalize these previous methods (Theorems 2 and 3) and we extend them to first-order exponential curve fits (Theorem 1). Furthermore, we generalize both approaches to the case \(d=3\) (Theorems 4, 5, 6, 7, and 8). All theorems contain new results except for Theorems 2 and 3. The key ingredient is to consider the fits as formal variational curve optimization problems with exact solutions derived by spectral decomposition of structure tensors and Hessians of the data \(\tilde{U}\) on SE(d). In the SE(3)-case we show that in order to obtain torsion-free exponential curve fits with well-posed projection on \(\mathbb {R}^{3}\rtimes S^{2}\), one must resign to a twofold optimization algorithm. To show the potential of considering these locally adaptive frames, we employ them in medical image analysis applications, in improved differential invariants and improved crossing-preserving diffusions. Here, we provide for the first time coherence-enhancing diffusions via 3D invertible orientation scores [42, 43], extending previous methods [34, 35, 63] to the 3D Euclidean motion group.

1.1 Structure of the Article

We start the body of this article reviewing preliminary differential geometry tools in Sect. 2. Then, in Sect. 3 we describe how a given exponential curve fit induces the locally adaptive frame. In Sect. 4 we provide an introduction by reformulating the standard gauge frames construction in images in a group-theoretical setting. This gives a roadmap towards SE(2)-extensions explained in Sect. 5, where we deal with exponential curve fits of the first order in Sect. 5.2 computed via a structure tensor, and exponential curves fits of second order in Sect. 5.3 computed via the Hessian of the data \(\tilde{U}\). In the latter case we have two options for the curve optimization problem: one solved by the symmetric sum, and one by the symmetric product of the non-symmetric Hessian. The curve fits in SE(2) in Sect. 5 are extended to curve fits in SE(3) in Sect. 6. It starts with preliminaries on the quotient (3) and then it follows the same structure as the previous section. Here we present the twofold algorithm for computing the torsion-free exponential curve fits.

In Sect. 7 we consider experiments regarding medical imaging applications and feasibility studies. We first recall the theory of invertible orientation scores needed for the applications. In the SE(2)-case we present crossing-preserving multi-scale vessel enhancing filters in retinal imaging, and in the SE(3)-case we include a proof of concept of crossing-preserving (coherence-enhancing diffusion) steered by gauge frames via invertible 3D orientation scores.

Finally, there are 5 appendices. Appendix 1 supplements Sect. 3 by explaining the construction of the frame for \(d=2,3\). Appendix 2 describes the geometry of neighboring exponential curves needed for formulating the variational problems. Appendix 3 complements the twofold approach in Sect. 6. Appendix 4 provides the definition of the Hessian used in the paper. Finally, Table 1 in Appendix 5 contains a list of symbols, their explanations and references to the equation in which they are defined. We advise the reader to keep track of this table. Especially, in the more technical sections: Sects. 5 and 6.

2 Differential Geometrical Tools

Relating our data to data on the Euclidean motion group, via Eq. (1), allows us to use tools from Lie group theory and differential geometry. In this section we explain these tools that are important for our notion of an exponential curve fit to smooth data \(\tilde{U}:SE(d) \rightarrow \mathbb {R}\). Often, we consider the case \(d=2\) for basic illustration. Later on, in Sect. 6, we consider the case \(d=3\) and extra technicalities on the quotient structure will enter.

2.1 The Roto-Translation Group

The data \(\tilde{U}:SE(d) \rightarrow \mathbb {R}\) is defined on the group SE(d) of rotations and translations acting on \(\mathbb {R}^{d}\). As the concatenation of two rigid body motions is again a rigid body motion, the group SE(d) is equipped with the following group product:

where we recognize the semi-direct product structure \(SE(d)=\mathbb {R}^{d} \rtimes SO(d)\), of the translation group \(\mathbb {R}^{d}\) with rotation group \(SO(d)=\{\mathbf{R } \in \mathbb {R}^{d\times d}\,|\, \mathbf{R }^{T}=\mathbf{R }^{-1},\det {\mathbf {R}}=1\}\). The groups SE(d) and SO(d) have dimension

Note that \(n_2=3\), \(n_3=6\). One may represent elements g from SE(d) by the following matrix representation

We will often avoid this embedding into the set of invertible \((d+1) \times (d+1)\) matrices, in order to focus on the geometry rather than the algebra.

2.2 Left-Invariant Operators

In image analysis applications operators \(\tilde{U} \mapsto \tilde{\varPhi }(\tilde{U})\) need to be left-invariant and not right-invariant [24, 34]. Left-invariant operators \(\tilde{\varPhi }\) in the extended domain correspond to rotation and translation invariant operators \(\varUpsilon \) in the image domain, which is a desirable property. On the other hand, right-invariance boils down to isotropic operators in the image domain which is an undesirable restriction. By definition \(\tilde{\varPhi }\) is left-invariant and not right-invariant if it commutes with the left-regular representation \(\mathcal {L}\) (and not with the right-regular representation \(\mathcal {R}\)). Representations \(\mathcal {L},\mathcal {R}\) are given by

for all \(h,g \in SE(d)\). So operator \(\tilde{\varPhi }\) must satisfy \(\tilde{\varPhi } \circ \mathcal {L}_{g}= \mathcal {L}_{g} \circ \tilde{\varPhi }\) and \(\tilde{\varPhi } \circ \mathcal {R}_{g}\ne \mathcal {R}_{g} \circ \tilde{\varPhi }\) for all \(g \in SE(d)\).

2.3 Left-Invariant Vector Fields and Dual Frame

A special case of left-invariant operators are left-invariant derivatives. More precisely (see Remark 1 below), we need to consider left-invariant vector fields \(g \mapsto \mathcal {A}_{g}\), as the left-invariant derivative \(\mathcal {A}_{g}\) depends on the location g where it is attached. Intuitively, the left-invariant vector fields \(\{\mathcal {A}_{i}\}_{i=1}^{n_i}\) provide a local moving frame of reference in the tangent bundle T(SE(d)), which comes in naturally when including alignment of local orientations in the image processing of \(\tilde{U}\).

Formally, the left-invariant vector fields are obtained by taking a basis  in the tangent space at the unity element

in the tangent space at the unity element  , and then one uses the push-forward \((L_{g})_*\) of the left multiplication

, and then one uses the push-forward \((L_{g})_*\) of the left multiplication

to obtain the corresponding tangent vectors in the tangent space \(T_{g}(SE(d))\). Thus, one associates to each \(A_{i}\) a left-invariant field \(\mathcal {A}_{i}\) given by

where we consider each \(\mathcal {A}_{i}\) as a differential operator on smooth locally defined functions \(\tilde{\phi }\) given by

An explicit way to construct and compute the differential operators \(\left. \mathcal {A}_{i}\right| _{g}\) from  is via

is via

where \(A \mapsto e^A=\sum \nolimits _{k=0}^{\infty } \frac{A^k}{k!}\) denotes the matrix exponential from Lie algebra  to Lie group SE(d). The differential operators \(\{\mathcal {A}_{i}\}_{i=1}^{n_d}\) induce a corresponding dual frame \(\{\omega ^{i}\}_{i=1}^{n_d}\), which is a basis for the co-tangent bundle \(T^{*}(SE(d))\). This dual frame is given by

to Lie group SE(d). The differential operators \(\{\mathcal {A}_{i}\}_{i=1}^{n_d}\) induce a corresponding dual frame \(\{\omega ^{i}\}_{i=1}^{n_d}\), which is a basis for the co-tangent bundle \(T^{*}(SE(d))\). This dual frame is given by

where \(\delta ^{i}_{j}\) denotes the Kronecker delta. Then the derivative of a differentiable function \(\tilde{\phi }: SE(d) \rightarrow \mathbb {R}\) is expressed as follows:

Remark 1

In differential geometry, there exist two equivalent viewpoints [3, Ch. 2] on tangent vectors \(\mathcal {A}_{g} \in T_{g}(SE(d))\): either one considers them as tangents to locally defined curves; or one considers them as differential operators on locally defined functions. The connection between these viewpoints is as follows. We identify a tangent vector \(\dot{\tilde{\gamma }}(t) \in T_{\tilde{\gamma }(t)}(SE(d))\) with the differential operator \((\dot{\tilde{\gamma }}(t))(\tilde{\phi }) := \frac{d}{dt} \tilde{\phi }(\tilde{\gamma }(t))\) for all locally defined, differentiable, real-valued functions \(\tilde{\phi }\).

Next we express tangent vectors explicitly in the left-invariant moving frame of reference, by taking a directional derivative:

with \(\dot{\tilde{\gamma }}(t)= \sum \limits _{i=1}^{n_d} \dot{\tilde{\gamma }}^{i}(t)\left. \mathcal {A}_{i}\right| _{\tilde{\gamma }(t)}\), and with \(\tilde{\phi }\) smooth and defined on an open set around \(\tilde{\gamma }(t)\). Eq. (13) will play a crucial role in Sect. 5 (exponential curve fits for \(d=2\)) and Sect. 6 (exponential curve fits for \(d=3\)).

Example 1

For \(d=2\) we take  ,

,  ,

,  . Then we have the left-invariant vector fields

. Then we have the left-invariant vector fields

The dual frame is given by

For explicit formulas for left-invariant vector fields in SE(3) see [18, 30].

2.4 Exponential Curves in SE(d)

Let \((\mathbf{c }^{(1)},\mathbf{c }^{(2)})^T \in \mathbb {R}^{d+r_d}=\mathbb {R}^{n_d}\) be a given column vector, where \(\mathbf{c }^{(1)}=(c^1,\ldots ,c^{d}) \in \mathbb {R}^d\) denotes the spatial part and \(\mathbf{c }^{(2)}=(c^{d+1},\ldots , c^{n_d}) \in \mathbb {R}^{r_d}\) denotes the rotational part. The unique exponential curve passing through \(g \in SE(d)\) with initial velocity \(\mathbf{c }(g)=\sum \nolimits _{i=1}^{n_d} c^{i}\left. \mathcal {A}_{i}\right| _{g}\) equals

with  denoting a basis of

denoting a basis of  . In fact such exponential curves satisfy

. In fact such exponential curves satisfy

and thereby have constant velocity in the moving frame of reference, i.e., \(\dot{\tilde{\gamma }}^{i}=c^{i}\) in Eq. (13). A way to compute the exponentials is via matrix exponentials and (6).

Example 2

If \(d=2\) we have exponential curves:

which are circular spirals with

for the case \(c^{3}\ne 0\), and all \(t\ge 0\) and straight lines with

for the case \(c^{3}=0\), where \(g_0=(x_0,y_0,\theta _{0}) \in SE(2)\). See the left panel in Fig. 4.

Left horizontal exponential curve \(\tilde{\gamma }^{\mathbf{c }}_g\) in SE(2) with \(\mathbf{c }=(1,0,1)\). Its projection on the ground plane reflects co-circularity, and the curve can be obtained by a lift (30) from its spatial projection. Right the distribution \(\Delta \) of horizontal tangent vector fields as a sub-bundle in the tangent bundle T(SE(2))

Example 3

For \(d=3\), the formulae for exponential curves in SE(3) are given in for example [18, 30]. Their spatial part are circular spirals with torsion \(\varvec{\tau }(t)=\frac{|\mathbf{c }^{(1)} \cdot \mathbf{c }^{(2)}|}{\Vert \mathbf{c }^{(1)}\Vert }\, \varvec{\kappa }(t)\) and curvature

Note that their magnitudes are constant:

2.5 Left-Invariant Metric Tensor on SE(d)

We use the following (left-invariant) metric tensor:

where \(\dot{\tilde{\gamma }}=\sum _{i=1}^{n_d}\dot{\tilde{\gamma }}^{i} \left. \mathcal {A}_{i}\right| _{\tilde{\gamma }}\), and with stiffness parameter \(\mu \) along any smooth curve \(\tilde{\gamma }\) in SE(d). Now, for the special case of exponential curves, one has \(\dot{\tilde{\gamma }}^{i}= c^{i}\) is constant. The metric allows us to normalize the speed along the curves by imposing a normalization constraint

We will use this constraint in the fitting procedure in order to ensure that our exponential curves (17) are parameterized by Riemannian arclength t.

2.6 Convolution and Haar Measure on SE(d)

In general a convolution of data \(\tilde{U}:SE(d)\rightarrow \mathbb {R}\) with kernel \(\tilde{K}:SE(d)\rightarrow \mathbb {R}\) is given by

for all \(h=(\mathbf{x }',\mathbf{R }') \in SE(d)\), where Haar measure \(\overline{\mu }\) is the direct product of the usual Lebesgue measure on \(\mathbb {R}^d\) with the Haar measure on SO(d).

2.7 Gaussian Smoothing and Gradient on SE(d)

We define the regularized data

where \(\mathbf{s }=(s_p,s_o)\) are the spatial and angular scales, respectively, of the separable Gaussian smoothing kernel defined by

This smoothing kernel is a product of the heat kernel \(G_{s_p}^{\mathbb {R}^d}(\mathbf{x })=\frac{e^{-\frac{\Vert \mathbf{x }\Vert ^2}{4s_p}}}{(4\pi s_p)^{d/2}}\) on \(\mathbb {R}^{d}\) centered at \(\mathbf{0 }\) with spatial scale \(s_p>0\), and a heat kernel \(G_{s_o}^{S^{d-1}}(\mathbf{R }\mathbf{a })\) on \(S^{d-1}\) centered around \(\mathbf{a } \in S^{d-1}\) with angular scale \(s_o>0\).

By definition the gradient \(\nabla \tilde{U}\) of image data \(\tilde{U}:SE(d) \rightarrow \mathbb {R}\) is the Riesz representation vector of the derivative \(\mathrm{d}\tilde{U}\):

relying on \(\mathbf{M }_{\mu }\) as defined in (24). Here, following standard conventions in differential geometry,  denotes the inverse of the linear map associated to the metric tensor (23). Then, the Gaussian gradient is defined by

denotes the inverse of the linear map associated to the metric tensor (23). Then, the Gaussian gradient is defined by

2.8 Horizontal Exponential Curves in SE(d)

Typically, in the distribution \(\tilde{U}\) (e.g., if \(\tilde{U}\) is an orientation score of a gray-scale image) the mass is concentrated around so-called horizontal exponential curves in SE(d) (see Fig. 3). Next we explain this notion of horizontal exponential curves.

A curve \(t \mapsto (x(t),y(t)) \in \mathbb {R}^2\) can be lifted to a curve \(t \mapsto \tilde{\gamma }(t)=(x(t),y(t),\theta (t))\) in SE(2) via

Generalizing to \(d\ge 2\), one can lift a curve \(t \mapsto \mathbf{x }(t) \in \mathbb {R}^d\) towards a curve \(t \mapsto \gamma (t)=(\mathbf{x }(t),\mathbf{n }(t))\) in \(\mathbb {R}^{d}\rtimes S^{d-1}\) by setting

A curve \(t \mapsto \mathbf{x }(t)\) can be lifted towards a family of lifted curves \(t \mapsto \tilde{\gamma }(t)=(\mathbf{x }(t),\mathbf{R }_{\mathbf{n }(t)})\) into the roto-translation group SE(d) by setting \(\mathbf{R }_{\mathbf{n }(t)} \in SO(d)\) such that it maps reference axis \(\mathbf{a }\) onto \(\mathbf{n }(t)\):

Here we use \(\mathbf{R }_{\mathbf{n }}\) to denote any rotation that maps reference axis \(\mathbf{a }\) onto \(\mathbf{n }\). Clearly, the choice of rotation is not unique for \(d>2\), e.g., if \(d=3\) then \(\mathbf{R }_{\mathbf{n }} \mathbf{R }_{\mathbf{a },\alpha }\mathbf{a }=\mathbf{a }\) regardless the value of \(\alpha \), where \(\mathbf{R }_{\mathbf{a },\alpha }\) denotes the counterclockwise 3D rotation about axis \(\mathbf {a}\) by angle \(\alpha \).

Next we study the implication of restriction (31) on the tangent bundle of SE(d).

-

For \(d=2\), we have restriction \(\dot{\mathbf{x }}(t)=(\dot{x}(t), \dot{y}(t))=\Vert \dot{\mathbf{x }}(t)\Vert (\cos \theta (t),\sin \theta (t))\), i.e.,

$$\begin{aligned} \dot{\tilde{\gamma }} \in \left. \Delta \right| _{\tilde{\gamma }}, \ \text {with }\Delta= & {} \text {span}\{\cos \theta \partial _x+\sin \theta \partial _y, \partial _{\theta }\}\nonumber \\= & {} \text {span}\{\mathcal {A}_1,\mathcal {A}_3\}, \end{aligned}$$(32)where \(\Delta \) denotes the so-called horizontal part of tangent bundle T(SE(2)). See Fig. 4.

-

For \(d=3\), we impose the constraint:

$$\begin{aligned} \dot{\tilde{\gamma }}(t) \in \varDelta _{\tilde{\gamma }(t)}, \ \text {with }\varDelta := \text {span}\{\mathcal {A}_{3},\mathcal {A}_{4}, \mathcal {A}_{5}\}, \end{aligned}$$(33)where \(\mathcal {A}_3= \mathbf{n } \cdot \nabla _{\mathbb {R}^3}\), since then spatial transport is always along \(\mathbf{n }\) which is required for for (31).

Curves \(\tilde{\gamma }(t)\) satisfying the constraint (32) for \(d=2\), and (33) for \(d=3\) are called horizontal curves. Note that \(\text {dim}(\varDelta )=d\).

Next we study how the restriction applies to the particular case of exponential curves on SE(d).

-

For \(d=2\) horizontal exponential curves are obtained from (18), (19), (20) by setting \(c^{2}=0\).

-

For \(d=3\), we use a different reference axis \(\mathbf{a }\), and horizontal exponential curves are obtained from (16) by setting \(c^1=c^2=c^6=0\).

If exponential curves are not horizontal, then we indicate how much the local tangent of the exponential curve points outside the spatial part of \(\Delta \), by a ‘deviation from horizontality angle’ \(\chi \), which is given by

Example 4

In case \(d=2\) we have \(n_2=3\), \(\mathbf{a }=(1,0)^T\). The horizontal part of the tangent bundle \(\Delta \) is given by (32), and horizontal exponential curves are obtained from (18) by setting \(c^2=0\). For exponential curves in general, we have deviation from horizontality angle

An exponential curve in SE(2) is horizontal if and only if \(\chi =0\). See Fig. 4, where in the left we have depicted a horizontal exponential curve and where in the right we have visualized distribution \(\Delta \).

Example 5

In case \(d=3\), we have \(n_3=6\), \(\mathbf{a }=(0,0,1)^T\). The horizontal part of the tangent bundle is given by (33), and horizontal exponential curves are characterized by \(c^{3}, c^{4}, c^{5}\) whereas \(c^{1}=c^{2}=c^6=0\). By Eq. (22) these curves have zero torsion \(|\tau |=0\) and constant curvature \(\frac{\sqrt{(c^4)^2+(c^{5})^2}}{c^3}\) and thus they are planar circles. For exponential curves in general, we have deviation from horizontality angle

An exponential curve in SE(3) is horizontal if and only if \(\chi =0\) and \(c^6=0\).

Locally adaptive frame \(\{\left. \mathcal {B}_{1}\right| _{g}, \left. \mathcal {B}_{2}\right| _{g}, \left. \mathcal {B}_{3}\right| _{g}\}\) (in blue) in \(T_{g}(SE(2))\) (with g placed at the origin) is obtained from frame \(\{\left. \mathcal {A}_{1}\right| _{g}, \left. \mathcal {A}_{2}\right| _{g}, \left. \mathcal {A}_{3}\right| _{g}\}\) (in red) and \(\mathbf{c }(g)\), via normalization and two subsequent rotations \(\mathbf{R }^{\mathbf{c }}=\mathbf{R }_{2}\mathbf{R }_{1}\), see Eq. (36), revealing deviation from horizontality \(\chi \), and spherical angle \(\nu \) in Eq. (37). Vector field \(\mathcal {A}_{1}\) takes a spatial derivative in direction \(\mathbf{n }\), whereas \(\mathcal {B}_{1}\) takes a derivative along the tangent \(\mathbf{c }\) of the local exponential curve fit (Color figure online)

3 From Exponential Curve Fits to Gauge Frames on SE(d)

In Sects. 5 and 6 we will discuss techniques to find an exponential curve \(\tilde{\gamma }^{\mathbf{c }}_g(t)\) that fits the data \(\tilde{U}:SE(d)\rightarrow \mathbb {R}\) locally. Let \(\mathbf{c }(g)=(\tilde{\gamma }^{\mathbf{c }}_g)'(0)\) be its tangent vector at g.

In this section we assume that the tangent vector \(\mathbf{c }(g)=(\mathbf{c }^{(1)}(g),\mathbf{c }^{(2)}(g))^T \in R^{d+r_d}=\mathbb {R}^{n_d}\) is given. From this vector we will construct a locally adaptive frame \( \{\left. \mathcal {B}_{1}\right| _g,\ldots ,\left. \mathcal {B}_{n_d}\right| _{g}\}\), orthonormal w.r.t.  -metric in such a way that

-metric in such a way that

-

1.

the main spatial generator (\(\mathcal {A}_{1}\) for \(d=2\) and \(\mathcal {A}_{d}\) for \(d>2\)) is mapped onto \(\left. \mathcal {B}_{1}\right| _{g}= \sum \nolimits _{i=1}^{n_d}c^{i}(g) \left. \mathcal {A}_{i}\right| _{g}\),

-

2.

the spatial generators \(\{\left. \mathcal {B}_{i}\right| _{g}\}^d_{i=2}\) are obtained from the other left-invariant spatial generators \(\{\left. \mathcal {A}_{i}\right| _g\}^d_{i=1}\) by a planar rotation of \(\mathbf{a }\) onto \(\frac{{\mathbf{c }}^{(1)}}{\Vert {\mathbf{c }}^{(1)}\Vert }\) by angle \(\chi \). In particular, if \(\chi =0\), the other spatial generators do not change their direction. This allows us to still distinguish spatial generators and angular generators in our adapted frame.

Next we provide for each \(g \in SE(d)\) the explicit construction of a rotation matrix \(\mathbf{R }^{\mathbf{c }(g)}\) and a scaling by \(\mathbf{M }_{\mu ^{-1}}\) on \(T_g(SE(d))\), which maps frame \(\{\left. \mathcal {A}_{1}\right| _g,\ldots ,\left. \mathcal {A}_{n_d}\right| _{g}\}\) onto \(\{\left. \mathcal {B}_{1}\right| _g,\ldots ,\left. \mathcal {B}_{n_d}\right| _{g}\}\).

The construction for \(d>2\) is technical and provided in Theorem A in Appendix 1. However, the whole construction of the rotation matrix \(\mathbf{R }^{\mathbf{c }}\) via a concatenation of two subsequent rotations is similar to the case \(d=2\) that we will explain next.

Consider \(d=2\) where the frames \(\{\mathcal {A}_{1},\mathcal {A}_{2},\mathcal {A}_{3}\}\) and \(\{\mathcal {B}_{1},\mathcal {B}_{2},\mathcal {B}_{3}\}\) are depicted in Fig. 5 The explicit relation between the normalized gauge frame and the left-invariant vector field frame is given by

with \(\underline{\mathcal {A}}:=(\mathcal {A}_1,\mathcal {A}_{2},\mathcal {A}_{3})^T\), \(\underline{\mathcal {B}}:=(\mathcal {B}_1,\mathcal {B}_{2},\mathcal {B}_{3})^T\), and with rotation matrix

where the rotation angles are the deviation from horizontality angle \(\chi \) and the spherical angle

Recall that \(\chi \) is given by (35). The multiplication \(\mathbf{M }_{\mu }^{-1}\underline{\mathcal {A}}\) ensures that each of the vector fields in the locally adaptive frame is normalized w.r.t. the  -metric, recall (23).

-metric, recall (23).

Remark 2

When imposing isotropy (w.r.t. the metric  ) in the plane orthogonal to \(\mathcal {B}_{1}\), there is a unique choice \(\mathbf{R }^{\mathbf{c }}\) mapping \((1,0,0)^T\) onto \((\mu c^{1},\mu c^{2},c^{3})^T\) such that it keeps the other spatial generator in the spatial subspace of \(T_g(SE(2))\) (and with \(\chi =0 \Leftrightarrow \mathcal {B}_{2}=\mu ^{-1}\mathcal {A}_{2}\)). This choice is given by (37).

) in the plane orthogonal to \(\mathcal {B}_{1}\), there is a unique choice \(\mathbf{R }^{\mathbf{c }}\) mapping \((1,0,0)^T\) onto \((\mu c^{1},\mu c^{2},c^{3})^T\) such that it keeps the other spatial generator in the spatial subspace of \(T_g(SE(2))\) (and with \(\chi =0 \Leftrightarrow \mathcal {B}_{2}=\mu ^{-1}\mathcal {A}_{2}\)). This choice is given by (37).

The generalization to the d-dimensional case of the construction of a locally adaptive frame \(\{\mathcal {B}_{i}\}_{i=1}^{n_d}\) from \(\{\mathcal {A}_{i}\}_{i=1}^{n_d}\) and the tangent vector \(\mathbf{c }\) of a given exponential curve fit \(\tilde{\gamma }^{\mathbf{c }}_g(\cdot )\) to data \(\tilde{U}:SE(d)\rightarrow \mathbb {R}\) is explained in Theorem 7 in Appendix 1.

4 Exponential Curve Fits in \(\mathbb {R}^{d}\)

In this section we reformulate the classical construction of a locally adaptive frame to image f at location \(\mathbf{x } \in \mathbb {R}^d\), in a group-theoretical way. This reformulation seems technical at first sight, but helps in understanding the formulation of projected exponential curve fits in the higher dimensional Lie group SE(d).

4.1 Exponential Curve Fits in \(\mathbb {R}^{d}\) of the First Order

We will take the structure tensor approach [9, 48], which will be shown to yield first-order exponential curve fits.

The Gaussian gradient

with Gaussian kernel

is used in the definition of the structure matrix:

with \(s=\frac{1}{2}\sigma ^2_{s}\), and \(\rho =\frac{1}{2}\sigma ^2_{\rho }\) the scale of regularization typically yielding a non-degenerate and positive definite matrix. In the remainder we use short notation \(\mathbf {S}^{s,\rho }:=\mathbf {S}^{s,\rho }(f)\). The structure matrix appears in solving the following optimization problem where for all \(\mathbf{x } \in \mathbb {R}^{d}\) we aim to find optimal tangent vector

In this optimization problem we find the tangent \(\mathbf{c }^{*}(\mathbf{x })\) which minimizes a (Gaussian) weighted average of the squared directional derivative \(|\nabla ^{s}f(\mathbf{x }') \cdot \mathbf{c }|^2\) in the neighborhood of \(\mathbf{x }\). The second identity in (41), which directly follows from the definition of the structure matrix, allows us to solve optimization problem (41) via the Euler–Lagrange equation

since the minimizer is found as the eigenvector \(\mathbf{c }^{*}(\mathbf{x })\) with the smallest eigenvalue \(\lambda _1\).

Now let us put Eq. (41) in group-theoretical form by reformulating it as an exponential curve fitting problem. This is helpful in our subsequent generalizations to SE(d). On \(\mathbb {R}^{d}\) exponential curves are straight lines:

and on \(T(\mathbb {R}^{d})\) we impose the standard flat metric tensor  . In (41) we replace the directional derivative by a time derivative (at \(t=0\)) when moving over an exponential curve:

. In (41) we replace the directional derivative by a time derivative (at \(t=0\)) when moving over an exponential curve:

where

Because in (41) we average over directional derivatives in the neighborhood of \(\mathbf{x }\) we now average the time derivatives over a family of neighboring exponential curves \(\gamma _{\mathbf{x }',\mathbf{x }}^{\mathbf{c }}(t)\), which are defined to start at neighboring positions \(\mathbf{x }'\) but having the same spatial velocity as \(\gamma _{\mathbf{x }}^{\mathbf{c }}(t)\). In \(\mathbb {R}^{d}\) the distinction between \(\gamma _{\mathbf{x }',\mathbf{x }}^{\mathbf{c }}(t)\) and \(\gamma _{\mathbf{x }'}^{\mathbf{c }}(t)\) is not important but it will be in the SE(d)-case.

Definition 1

Let \(\mathbf{c }^{*}(\mathbf{x }) \in T_{\mathbf{x }}(\mathbb {R}^{d})\) be the minimizer in (44). We say \(\gamma _{\mathbf{x }}(t)= \mathbf{x }+\exp _{\mathbb {R}^{d}}({t \mathbf{c }^{*}(\mathbf{x }))}\) is the first-order exponential curve fit to image data \(f: \mathbb {R}^{d} \rightarrow \mathbb {R}\) at location \(\mathbf{x }\).

4.2 Exponential Curve Fits in \(\mathbb {R}^{d}\) of the Second Order

For second-order exponential curve fits we need the Hessian matrix defined by

with \(G_s\) the Gaussian kernel given in Eq. (39). From now on we use short notation \(\mathbf{H }^{s}:=\mathbf{H }^{s}(f)\). When using the Hessian matrix for curve fitting we aim to solve

In this optimization problem we find the tangent \(\mathbf{c }^{*}(\mathbf{x })\) which minimizes the second-order directional derivative of (Gaussian) regularized data \(G_{s} * f\). When all Hessian eigenvalues have the same sign we can solve the optimization problem (47) via the Euler–Lagrange equation

and the minimizer is found as the eigenvector \(\mathbf{c }^{*}(\mathbf{x })\) with the smallest eigenvalue \(\lambda _1\).

Family of neighboring exponential curves, given a fixed point \(g \in SE(2)\) and a fixed tangent vector \(\mathbf{c }=\mathbf{c }(g) \in T_{g}(SE(2))\). Left our choice of family of exponential curves \(\gamma _{h,g}^{\mathbf{c }}\) for neighboring \(h \in SE(2)\). Right exponential curves \(\gamma ^{\mathbf{c }}_h\) with \(\mathbf{c }=\mathbf{c }(g)\) are not suited for local averaging in our curve fits. The red curves start from g (indicated with a dot), the blue curves from \(h\ne g\) (Color figure online)

Now, we can again put Eq. (47) in group-theoretical form by reformulating it as an exponential curve fitting problem. This is helpful in our subsequent generalizations to SE(d). We again rely on exponential curves as defined in (43). In (47) we replace the second-order directional derivative by a second-order time derivative (at \(t=0\)) when moving over an exponential curve:

Remark 3

In general the eigenvalues of Hessian matrix \(\mathbf{H }^s\) do not have the same sign. In this case we still take \(\mathbf {c}^*(\mathbf {x})\) as the eigenvector with smallest absolute eigenvalue (representing minimal absolute principal curvature), though this no longer solves (47).

Definition 2

Let \(\mathbf{c }^{*}(\mathbf{x }) \in T_{\mathbf{x }}(\mathbb {R}^{d})\) be the minimizer in (49). We say \(\gamma _{\mathbf{x }}(t)= \mathbf{x }+\exp _{\mathbb {R}^{d}}({t \mathbf{c }^{*}(\mathbf{x }))}\) is the second-order exponential curve fit to image data \(f: \mathbb {R}^{d} \rightarrow \mathbb {R}\) at location \(\mathbf{x }\).

Remark 4

In order to connect optimization problem (49) with the first-order optimization (44), we note that (49) can also be written as an optimization over a family of curves \(\gamma _{\mathbf{x }',\mathbf{x }}^{\mathbf{c }}\) defined in (45):

because of linearity of the second-order time derivative.

5 Exponential Curve Fits in SE(2)

As mentioned in the introduction, we distinguish between two approaches: a first-order optimization approach based on a structure tensor on SE(2), and a second-order optimization approach based on the Hessian on SE(2). The first-order approach is new, while the second-order approach formalizes the results in [28, 34]. They also serve as an introduction to the new, more technical, SE(3)-extensions in Sect. 6.

All curve optimization problems are based on the idea that a curve (or a family of curves) fits the data well if a certain quantity is preserved along the curve. This preserved quantity is the data \(\tilde{U}(\tilde{\gamma }(t))\) for the first-order optimization, and the time derivative \(\frac{d}{dt}\tilde{U}(\tilde{\gamma }(t))\) or the gradient \(\nabla \tilde{U}(\tilde{\gamma }(t))\) for the second-order optimization. After introducing a family of curves similar to the ones used in Sect. 4 we will, for all three cases, first pose an optimization problem, and then give its solution in a subsequent theorem.

In this section we rely on group-theoretical tools explained in Sect. 2 (only the case \(d = 2\)), listed in panels (a) and (b) in our table of notations presented in Appendix 5. Furthermore, we introduce notations listed in the first part of panel (c) in our table of notations in Appendix 5.

5.1 Neighboring Exponential Curves in SE(2)

Akin to (45) we fix reference point \(g \in SE(2)\) and velocity components \(\mathbf{c }=\mathbf{c }(g) \in \mathbb {R}^3\), and we shall rely on a family \(\{\tilde{\gamma }_{h,g}^{\mathbf{c }}\}\) of neighboring exponential curves around \(\tilde{\gamma }^{\mathbf{c }}_g\). As we will show in subsequent Lemma 1 neighboring curve \(\tilde{\gamma }^{\mathbf{c }}_{h,g}\) departs from h and has the same spatial and rotational velocity as the curve \(\tilde{\gamma }^{\mathbf{c }}_g\) departing from g. This geometric idea is visualized in Fig. 6, where it is intuitively explained why one needs the initial velocity vector \(\tilde{\mathbf{R }}_{h^{-1}g}\mathbf{c }\), instead of \(\mathbf{c }\) in the following definition for the exponential curve departing from a neighboring point h close to g.

Definition 3

Let \(g \in SE(2)\) and \(\mathbf{c }=\mathbf{c }(g) \in \mathbb {R}^{3}\) be given. Then we define the family \(\{\tilde{\gamma }_{h,g}^{\mathbf{c }}\}\) of neighboring exponential curves

with rotation matrix \(\tilde{\mathbf{R }}_{h^{-1}g} \in SO(3)\) defined by

for all \(g=(\mathbf{x },\mathbf{R }) \in SE(2)\) and all \(h=(\mathbf{x }',\mathbf{R }') \in SE(2)\), with \(\mathbf{R },\mathbf{R }' \in SO(2)\) a counterclockwise rotation by, respectively, angle \(\theta \) and \(\theta '\).

Lemma 1

Exponential curve \(\tilde{\gamma }_{h,g}^{\mathbf{c }}\) departing from \(h\in SE(2)\) given by (51) has the same spatial and angular velocity as exponential curve \(\tilde{\gamma }^{\mathbf{c }}_g\) departing from \(g \in SE(2)\).

On the Lie algebra level, we have that the initial velocity component vectors of the curves \(\tilde{\gamma }^{\mathbf{c }}_g\) and \(\tilde{\gamma }_{h,g}^{\mathbf{c }}\) relate via \(\mathbf{c } \mapsto \tilde{\mathbf{R }}_{h^{-1}g} \mathbf{c }\).

On the Lie group level, we have that the curves themselves \(\tilde{\gamma }^{\mathbf{c }}_g(\cdot )=(\mathbf{x }_{g}(\cdot ),\mathbf{R }_{g}(\cdot ))\), \(\tilde{\gamma }_{h,g}^{\mathbf{c }}(\cdot )= (\mathbf{x }_{h}(\cdot ),\mathbf{R }_{h}(\cdot ))\) relate via

Proof

The proof follows from the proof of a more general theorem on the SE(3) case which follows later (in Lemma 3).

\(\square \)

Remark 5

Eq. (53) is the extension of Eq. (45) on \(\mathbb {R}^{2}\) to the SE(2) group.

Additional geometric background is given in Appendix 2.

5.2 Exponential Curve Fits in SE(2) of the First Order

For first-order exponential curve fits we solve an optimization problem similar to (44) given by

with \(\tilde{V}=\tilde{G}_{\mathbf{s }}*\tilde{U}\), \(g=(\mathbf{x },\mathbf{R })\), \(h=(\mathbf{x }',\mathbf{R }')\) and \(\mathrm{d}\overline{\mu }(h)=\mathrm{d}\mathbf{x }' \mathrm{d}\mu _{SO(2)}(\mathbf{R }')=\mathrm{d}\mathbf{x }' \mathrm{d}\theta '\). Here we first regularize the data with spatial and angular scale \(\mathbf{s }=(s_{p},s_o)\) and then average over a family of curves where we use spatial and angular scale \(\varvec{\rho }=(\rho _{p},\rho _o)\). Here \(s_{p},\rho _p>0\) are isotropic scales on \(\mathbb {R}^{2}\) and \(s_{o}, \rho _o>0\) are scales on \(S^{1}\) of separable Gaussian kernels, recall (27). Recall also (24) for the definition of the norm \(\Vert \cdot \Vert _{\mu }\). When solving this optimization problem the following structure matrix appears

In the remainder we use short notation \(\mathbf{S }^{\mathbf{s },\varvec{\rho }}:=\mathbf{S }^{\mathbf{s },\varvec{\rho }}(\tilde{U})\). We assume that \(\tilde{U}\), \(\varvec{\rho }, \mathbf{s }\), and g are chosen such that \(\mathbf{S }^{\mathbf{s },\varvec{\rho }}(g)\) is a non-degenerate matrix. The optimization problem is solved in the next theorem.

Theorem 1

(First-Order Fit via Structure Tensor) The normalized eigenvector \(\mathbf{M }_{\mu }\mathbf{c }^{*}(g)\) with smallest eigenvalue of the rescaled structure matrix \(\mathbf{M }_{\mu }\mathbf{S }^{\mathbf{s },\varvec{\rho }}(g)\mathbf{M }_{\mu }\) provides the solution \(\mathbf{c }^{*}(g)\) to optimization problem (54).

Proof

We will apply four steps. In the first step we write the time derivative as a directional derivative, in the second step we express the directional derivative in the gradient. In the third step we put the integrand in matrix-vector form. In the final step we express our optimization functional in the structure tensor and solve the Euler–Lagrange equations.

For the first step we use (51) and the fundamental property (17) of exponential curves such that via application of (13):

where we use short notation \(\tilde{\mathbf {R}}_{h^{-1}g} \mathbf{c } = \sum _{i=1}^{3} (\tilde{\mathbf {R}}_{h^{-1}g} \mathbf{c })^i \mathcal {A}_i |_h\).

In the second step we use the definition of the gradient (28) and the metric tensor (23) to rewrite this expression to

Then, in the third step we write this in vector-matrix form and we obtain via (28)

where we used the fact that \(\mathbf{M }_{\mu ^{2}}\) and \(\tilde{\mathbf {R}}_{h^{-1}g}^{T}\) commute.

Finally, we use the structure tensor definition (55) to rewrite the convex optimization functional in (54) as

while the boundary condition \(\Vert \mathbf{c }\Vert _{\mu }=1\) can be written as

The Euler–Lagrange equation reads \(\nabla \mathcal {E}(\mathbf{c }^{*})=\lambda _{1}\nabla \varphi (\mathbf{c }^{*})\), with \(\lambda _{1}\) the smallest eigenvalue of \(\mathbf{M }_{\mu }\mathbf{S }^{\mathbf{s },\varvec{\rho }}(g)\mathbf{M }_{\mu }\) and we have

from which the result follows. \(\square \)

The next remark explains the frequent presence of the \(\mathbf{M }_{\mu }\) matrices in (69).

Remark 6

The diagonal \(\mathbf{M }_{\mu }\) matrices enter the functional due to the gradient definition (28), and they enter the boundary condition via \(\Vert \mathbf{c }\Vert _{\mu }^2=\mathbf{c }^T \mathbf{M }_{\mu ^2} \mathbf{c }=1\). In both cases they come from the metric tensor (23). Parameter \(\mu \) which controls the stiffness of the exponential curves has physical dimension \([\text {Length}]^{-1}\). As a result, the normalized eigenvector \(\mathbf {M}_{\mu } \mathbf{c }^{*}(g)\) is, in contrast to \(\mathbf{c }^{*}(g)\), dimensionless.

5.3 Exponential Curve Fits in SE(2) of the Second Order

We now discuss the second-order optimization approach based on the Hessian matrix. At each \(g\in SE(2)\) we define a \(3\times 3\) non-symmetric Hessian matrix

and where i denotes the row index and j denotes the column index, and with \(\tilde{G}_{\mathbf{s }}\) a Gaussian kernel with isotropic spatial part as described in Eq. (27). In the remainder we write \(\mathbf{H }^{\mathbf{s }} :=\mathbf{H }^{\mathbf{s }}(\tilde{U})\).

Remark 7

As the left-invariant vector fields are non-commutative, there are many ways to define the Hessian matrix on SE(2), since the ordering of the left-invariant derivatives matters. From a differential geometrical point of view our choice (62) is correct, as we motivate in Appendix 4.

For second-order exponential curve fits we consider 2 different optimization problems. In the first case we minimize the second-order derivative along the exponential curve:

In the second case we minimize the norm of the first-order derivative of the gradient of the neighboring family of exponential curves:

with again \(\tilde{V}=\tilde{G}_{\mathbf{s }}*\tilde{U}\).

Remark 8

Optimization problem (63) can also be written as an optimization problem over the neighboring family of curves, as it is equivalent to problem:

In the next two theorems we solve these optimization problems.

Theorem 2

(Second-Order Fit via Symmetric Sum Hessian) Let \(g \in SE(2)\) be such that the eigenvalues of the rescaled symmetrized Hessian

have the same sign. Then the normalized eigenvector \(\mathbf{M }_{\mu }\mathbf{c }^{*}(g)\) with smallest eigenvalue of the rescaled symmetrized Hessian matrix provides the solution \(\mathbf{c }^{*}(g)\) of optimization problem (63).

Proof

Similar to the proof of Theorem 1 we first write the time derivative as a directional derivative using Eq. (13). Since now we have a second-order derivative this step is applied twice:

Then we write the result in matrix-vector form and split the matrix in a symmetric and anti-symmetric part

where only the symmetric part remains. Finally, the optimization functional in (63) (which is convex if the eigenvalues have the same sign) can be written as

Again we have the boundary condition \(\varphi (\mathbf{c })=\mathbf{c }^T \mathbf{M }_{\mu ^2} \mathbf{c }-1=0\). The result follows using the Euler–Lagrange formalism \(\nabla \mathcal {E}(\mathbf{c }^{*})=\lambda _{1}\nabla \varphi (\mathbf{c }^{*})\):

which boils down to finding the eigenvector with minimal absolute eigenvalue \(|\lambda _{1}|\) which gives our result. \(\square \)

Theorem 3

(Second-Order Fit via Symmetric Product Hessian) Let \(\rho _p,\rho _o, s_p,s_o>0\). The normalized eigenvector \(\mathbf{M }_{\mu }\mathbf{c }^{*}(g)\) with smallest eigenvalue of matrix

provides the solution \(\mathbf{c }^{*}(g)\) of optimization problem (64).

Proof

First we use the definition of the gradient (28) and then we again rewrite the time derivative as a directional derivative:

for \(\tilde{\mathbf{c }} = \tilde{\mathbf{R }}_{h^{-1} g} \mathbf{c }\), recall (52), and where \(\mu _i=\mu \) for \(i=1,2\) and \(\mu _i=1\) for \(i=3\). Here we use \(\tilde{\gamma }_{h,g}^{\mathbf{c }}(0)=h\), and the formula for left-invariant vector fields (10). Now insertion of (71) into the metric tensor  (23) yields

(23) yields

Finally, the convex optimization functional in (64) can be written as

Again we have the boundary condition \(\varphi (\mathbf{c })=\mathbf{c }^T \mathbf{M }_{\mu ^2} \mathbf{c }-1=0\) and the result follows by application of the Euler–Lagrange formalism: \(\nabla \mathcal {E}(\mathbf{c }^{*})=\lambda _{1}\nabla \varphi (\mathbf{c }^{*})\). \(\square \)

6 Exponential Curve Fits in SE(3)

In this section we generalize the exponential curve fit theory from the preceding chapter on SE(2) to SE(3). Because our data on the group SE(3) was obtained from data on the quotient \(\mathbb {R}^{3}\rtimes S^{2}\), we will also discuss projections of exponential curve fits on the quotient.

We start in Sect. 6.1 with some preliminaries on the quotient structure (3). Here we will also introduce the concept of projected exponential curve fits. Subsequently, in Sect. 6.2, we provide basic theory on how to obtain the appropriate family of neighboring exponential curves. More details can be found in Appendix 2. In Sect. 6.3 we formulate exponential curve fits of the first order as a variational problem. For that we define the structure tensor on SE(3), which we use to solve the variational problem in Theorems 4 and 5. Then we present the twofold algorithm for achieving torsion-free exponential curve fits. In Sect. 6.4 we formulate exponential curve fits of the second order as a variational problem. Then we define the Hessian tensor on SE(3), which we use to solve the variational problem in Theorem 6. Again torsion-free exponential curve fits are accomplished via a twofold algorithm.

Throughout this section we will rely on the differential geometrical tools of Sect. 2, listed in panels (a) and (b) in in Table 1 in Appendix 5. We also generalize concepts on exponential curve fits introduced in the previous section to the case \(d=3\) (requiring additional notation). They are listed in panel (c) in the table in Appendix 5.

6.1 Preliminaries on the Quotient \(\mathbb {R}^{3} \rtimes S^2\)

Now let us set \(d=3\), and let us assume input U is given and let us first concentrate on its domain. This domain equals the joint space \(\mathbb {R}^{3} \rtimes S^{2}\) of positions and orientations of dimension 5, which we identified with a 5-dimensional group quotient of SE(3), where SE(3) is of dimension 6 (recall (3)). For including a notion of alignment it is crucial to include the non-commutative relation in (4) between rotations and translation, and not to consider the space of positions and orientations as a flat Cartesian product. Therefore, we model the joint space of positions and orientations as the Lie group quotient (3), where

for reference axis \(\mathbf{a }=\mathbf e _{z}=(0,0,1)^T\). Within this quotient structure two rigid body motions \(g=(\mathbf{x },\mathbf{R }), g'=(\mathbf{x }',\mathbf{R }') \in SE(3)\) are equivalent if

Furthermore, one has the action \(\odot \) of \(g=(\mathbf{x },\mathbf{R }) \in SE(3)\) onto \((\mathbf y ,\mathbf{n }) \in \mathbb {R}^{3} \times S^{2}\), which is defined by

As a result we have

Thereby, a single element in \(\mathbb {R}^{3} \rtimes S^{2}\) can be considered as the equivalence class of all rigid body motions that map reference position and orientation \((\mathbf{0 },\mathbf{a })\) onto \((\mathbf{x },\mathbf{n })\). Similar to the common identification of \(S^{2}\equiv SO(3)/SO(2)\), we denote elements of the Lie group quotient \(\mathbb {R}^{3}\rtimes S^{2}\) by \((\mathbf{x },\mathbf{n })\).

6.1.1 Legal Operators

Let us recall from Sect. 3 that exponential curve fits induce gauge frames. Note that both the induced gauge frame \(\{\mathcal {B}_1,\ldots , \mathcal {B}_{6}\}\) and the non-adaptive frame \(\{\mathcal {A}_{1},\ldots ,\mathcal {A}_{6}\}\) are defined on the Lie group SE(3), and cannot be defined on the quotient. Nevertheless, combinations of them can be well defined on \(\mathbb {R}^{3}\rtimes S^2\) (e.g., \(\Delta _{\mathbb {R}^{3}}=\mathcal {A}_{1}^{2}+\mathcal {A}_{2}^{2}+\mathcal {A}_{3}^{2}\) is well defined on the quotient). This brings us to the definition of so-called legal operators, as shown in [29, Thm. 1]. In short, the operator \(\tilde{U} \mapsto \tilde{\varPhi }(\tilde{U})\) is legal (left-invariant and well defined on the quotient) if and only if

recall (7), where

with the \(\mathbf{R }_\mathbf{e _{z},\alpha }\) the counterclockwise rotation about \(\mathbf e _{z}\). Such legal operators relate one-to-one to operators \(\varPhi : \mathbb {L}_{2}(\mathbb {R}^{3} \rtimes S^{2}) \rightarrow \mathbb {L}_{2}(\mathbb {R}^{3} \rtimes S^{2})\) via

relying consequently on (1).

6.1.2 Projected Exponential Curve Fits

Action (74) allows us to map a curve \(\tilde{\gamma }(\cdot )=(\mathbf{x }(\cdot ),\mathbf{R }(\cdot ))\) in SE(3) onto a curve \((\mathbf{x }(\cdot ),\mathbf{n }(\cdot ))\) on \(\mathbb {R}^{3} \rtimes S^2\) via

This can be done with exponential curve fits \(\tilde{\gamma }^{\mathbf{c }=\mathbf{c }^{*}(g)}_{g}(t)\) to define projected exponential curve fits.

Definition 4

We define for \(g=(\mathbf{x },\mathbf{R }_{\mathbf{n }})\) the projected exponential curve fit

Lemma 2

The projected exponential curve fit is well defined on the quotient, i.e., the right-hand side of (78) is independent of the choice of \(\mathbf{R }_{\mathbf{n }}\) s.t. \(\mathbf{R }_{\mathbf{n }} \mathbf e _z = \mathbf{n }\), if the optimal tangent found in our fitting procedure satisfies:

and for all \(g\in SE(3)\), with

Illustration of the family of curves \(\tilde{\gamma }_{h,g}^{\mathbf{c }}\) in SE(3). Left The (spatially projected) exp-curve \(t \mapsto P_{\mathbb {R}^{3}}\tilde{\gamma }^{\mathbf{c }}_{g}(t)\), with \(g=(\mathbf{x },\mathbf{R }_{\mathbf{n }})\) in red. The frames indicate the rotation part \(P_{SO(3)}\tilde{\gamma }^{\mathbf{c }}_{g}(t)\), which for clarity we depicted only at two time instances t. Middle neighboring exp-curve \(t \mapsto \tilde{\gamma }^{\mathbf{c }}_{g,h}(t)\) with \(h=(\mathbf{x }',\mathbf{R }_{\mathbf{n }})\), \(\mathbf{x }\ne \mathbf{x }'\) in blue, i.e., neighboring exp-curve departing with same orientation and different position. Right exp-curve \(t \mapsto \tilde{\gamma }^{\mathbf{c }}_{g,h}(t)\) with \(h=(\mathbf{x },\mathbf{R }_{\mathbf{n }'})\), \(\mathbf{n }'\ne \mathbf{n }\), i.e., the neighboring exp-curve departing with same position and different orientation (Color figure online)

Proof

For well-posed projected exponential curve fits we need the right-hand side of (78) to be independent of \(\mathbf{R }_{\mathbf{n }}\) s.t. \(\mathbf{R }_{\mathbf{n }} \mathbf e _z = \mathbf{n }\), i.e., it should be invariant under \(g \rightarrow g h_{\alpha }\). Therefore, we have the following constraint on the fitted curves:

Then the constraint on the optimal tangent (79) follows from fundamental identity

which holdsFootnote 2 for all \(h_{\alpha }\). We apply this identity (82) to the right-hand side of (81) and use the definition of \(\odot \) defined in (74) yielding:

Finally our constraint (79) follows from \(\mathbf Z _{\alpha }^T=\mathbf Z _{\alpha }^{-1}\). \(\square \)

6.2 Neighboring Exponential Curves in SE(3)

Here we generalize the concept of family of neighboring exponential curves (45) in the \(\mathbb {R}^d\)-case, and Definition 3 in the SE(2)-case, to the SE(3)-case.

Definition 5

Given a fixed reference point \(g \in SE(3)\) and velocity component \(\mathbf{c }=\mathbf{c }(g)=(\mathbf{c }^{(1)}(g),\mathbf{c }^{(2)}(g)) \in \mathbb {R}^6\), we define the family \(\{\tilde{\gamma }_{h,g}^{\mathbf{c }}(\cdot )\}\) of neighboring exponential curves by

with rotation matrix \(\tilde{\mathbf{R }}_{h^{-1}g} \in SO(6)\) defined by

for all \(g=(\mathbf{x },\mathbf{R }), h=(\mathbf{x }',\mathbf{R }') \in SE(3)\).

The next lemma motivates our specific choice of neighboring exponential curves. The geometric idea is visualized in Fig. 7 and is in accordance with Fig. 6 on the SE(2)-case.

Lemma 3

Exponential curve \(\tilde{\gamma }_{h,g}^{\mathbf{c }}\) departing from \(h=(\mathbf{x }',\mathbf{R }')\in SE(3)\) given by (84) has the same spatial and rotational velocity as exponential curve \(\tilde{\gamma }^{\mathbf{c }}_g\) departing from \(g=(\mathbf{x },\mathbf{R }) \in SE(3)\).

On the Lie algebra level, we have that the initial velocity component vectors of the curves \(\tilde{\gamma }^{\mathbf{c }}_g\) and \(\tilde{\gamma }_{h,g}^{\mathbf{c }}\) relate via \(\mathbf{c } \mapsto \tilde{\mathbf{R }}_{h^{-1}g} \mathbf{c }\).

On the Lie group level, we have that the curves themselves \(\tilde{\gamma }^{\mathbf{c }}_g(\cdot )=(\mathbf{x }_{g}(\cdot ),\mathbf{R }_{g}(\cdot ))\), \(\tilde{\gamma }_{h,g}^{\mathbf{c }}(\cdot )= (\mathbf{x }_{h}(\cdot ),\mathbf{R }_{h}(\cdot ))\) relate via

Proof

See Appendix 2. \(\square \)

Remark 9

Lemma 3 extends Lemma 1 to the SE(3) case. When projecting the curves \(\tilde{\gamma }_{g}^{\mathbf{c }}\) and \(\tilde{\gamma }_{h,g}^{\mathbf{c }}\) into the quotient, one has that curves \(\tilde{\gamma }_{g}^{\mathbf{c }} \odot (\mathbf{0 },\mathbf{a })\) and \(\tilde{\gamma }_{h,g}^{\mathbf{c }} \odot (\mathbf{0 },\mathbf{a })\) in \(\mathbb {R}^{3}\rtimes S^2\) carry the same spatial and angular velocity.

Remark 10

In order to construct the family of neighboring exponential curves in SE(3), one applies the transformation \(\mathbf{c } \mapsto \tilde{\mathbf{R }}_{h^{-1}g} \mathbf{c }\) in the Lie algebra. Such a transformation preserves the left-invariant metric:

for all \(h \in SE(3)\) and all \(t \in \mathbb {R}\). For further differential geometrical details see Appendix 2.

6.3 Exponential Curve Fits in SE(3) of the First Order

Now let us generalize the first-order exponential curve fits of Theorem 1 to the setting of \(\mathbb {R}^{3}\rtimes S^{2}\). Here we first consider the following optimization problem on SE(3) (generalizing (44)):

Recall that \(\Vert \cdot \Vert _{\mu }\) was defined in (24), \(\tilde{V}\) in (26), and \(\mu \) in (25). The reason for including the condition \(c^6=0\) will become clear after defining the structure matrix.

6.3.1 The Structure Tensor on SE(3)

We define structure matrices \(\mathbf {S}^{\mathbf{s },\varvec{\rho }}\) of \(\tilde{U}\) by

where we use matrix \(\tilde{\mathbf{R }}_{h^{-1}g}\) defined in Eq. (85). Again we use short notation \(\mathbf {S}^{\mathbf{s },\varvec{\rho }} :=\mathbf {S}^{\mathbf{s },\varvec{\rho }} (\tilde{U})\).

Remark 11

By construction (1) and (10) we have

so the null space of our structure matrix includes

Remark 12

We assume that \(\mathbf{s }=(s_{p}, s_{o})\) and function \(\tilde{U}\) are chosen in such a way that the null space of the structure matrix is precisely equal to \(\mathcal {N}\) (and not larger).

Due to the assumption in Remark 12, we need to impose the condition

in our exponential curve optimization to avoid non-uniqueness of solutions. To clarify this, we note that the optimization functional in (88) can be rewritten as

as we will show in the next theorem where we solve the optimization problem for first-order exponential curve fits. Indeed, for uniqueness we need (91) as otherwise we would have \(\mathcal {E}(\mathbf{c }+\mathbf{M }_{\mu ^{-2}}\mathbf{c }_0)=\mathcal {E}(\mathbf{c })\) for all \(\mathbf{c }_0 \in \mathcal {N}\).

Theorem 4

(First-Order Fit via Structure Tensor) The normalized eigenvector \(\mathbf{M }_{\mu }\mathbf{c }^{*}(g)\) with smallest non-zero eigenvalue of the rescaled structure matrix \(\mathbf{M }_{\mu }\mathbf{S }^{\mathbf{s },\varvec{\rho }}(g)\mathbf{M }_{\mu }\) provides the solution \(\mathbf{c }^{*}(g)\) to optimization problem (88).

Proof

All steps (except for the final step of this proof, where the additional constraint \(c^6=0\) enters the problem) are analogous to the proof of the first-order method in the SE(2) case: the proof of Theorem 1. We will now shortly repeat these first steps. First we rewrite the time derivative as a directional derivative which is then rewritten to the gradient

We then put this result in matrix-vector form:

This again yields the following optimization functional

So, just as in the SE(2)-case we have the following Euler–Lagrange equations:

Again the second equality in (95) follows from the first by multiplication by \(\mathbf{M }_{\mu }^{-1}\).

Finally, the constraint \(c^{6}=0\) is included in our optimization problem (88) to excluded the null space (90) from the optimization; therefore, we take the eigenvector with the smallest non-zero eigenvalue providing us the final result. \(\square \)

6.3.2 Projected Exponential Curve Fits in \(\mathbb {R}^{3}\rtimes S^{2}\)

In reducing the problem to \(\mathbb {R}^{3} \rtimes S^{2}\) we first note that

with \(\mathbf Z _{\alpha }\) defined in Eq. (80), and where we recall \(h_{\alpha }=(\mathbf{0 },\mathbf{R }_\mathbf{e _{z},\alpha })\).

In the following theorem we summarize the well-posedness of our projected curve fits on data \(U:\mathbb {R}^{3}\rtimes S^2 \rightarrow \mathbb {R}\) and use the quotient structure to simplify the structure tensor.

Theorem 5

(First-Order Fit and Quotient Structure) Let \(g=(\mathbf{x },\mathbf{R }_{\mathbf{n }})\) and \(h=(\mathbf{x }',\mathbf{R }_{\mathbf{n }'})\) where \(\mathbf{R }_{\mathbf{n }}\) and \(\mathbf{R }_{\mathbf{n }'}\) denote any rotation which maps \(\mathbf e _z\) onto \(\mathbf{n }\) and \(\mathbf{n }'\), respectively. Then, the structure tensor defined by (89) can be expressed as

The normalized eigenvector \(\mathbf{M }_{\mu }\mathbf{c }^{*}(\mathbf{x },\mathbf{R }_{\mathbf{n }})\) with smallest non-zero eigenvalue of the rescaled structure matrix \(\mathbf{M }_{\mu }\mathbf{S }^{\mathbf{s },\varvec{\rho }}(g)\mathbf{M }_{\mu }\) provides the solution of (88) and defines a projected curve fit in \(\mathbb {R}^{3}\rtimes S^{2}\):

which is independent of the choice of \(\mathbf{R }_{\mathbf{n }'}\) and \(\mathbf{R }_{\mathbf{n }}\).

Volume rendering of a 3D test-image. The real part of the orientation score (cf. Sect. 7.1) provides us a density U on \(\mathbb {R}^{3}\rtimes S^2\). Left spatial parts of exponential curves (in black) aligned with spatial generator \(\left. \mathcal {A}_{3}\right| _{({\mathbf{x }},\mathbf{R }_{{\mathbf{n }}_{max}({\mathbf{x }})})}\), where \({\mathbf{n }}_\mathrm{max}({\mathbf{x }})= \underset{{\mathbf{n }}\in S^{2}}{\text {argmax}} |U({\mathbf{x }},{\mathbf{n }})|\). Right spatial parts of our exponential curve fits Eq. (104) computed via the algorithm in Sect. 6.3.3, which better follow the curvilinear structures

Proof

The proof consists of two parts. First we prove that (97) follows from the structure tensor defined in (89). Then we use Lemma 2 to prove that our projected exponential curve fit (98) is well defined. For both we use Theorem 4 as our venture point.

For the first part of the proof we note that the integrand in the structure tensor definition Eq. (89) is invariant under \(h \mapsto h h_{\alpha }=h (\mathbf{0 },\mathbf{R }_\mathbf{e _{z},\alpha })\) on the integration variable. To show this we first note that, \(\mathbf Z _{\alpha }\) defined in (80), satisfies \(\mathbf Z _{\alpha }(\mathbf Z _{\alpha })^T=I\). Furthermore, we have

and \(\tilde{G}_{\varvec{\rho }}(h h_{\alpha })=\tilde{G}_{\varvec{\rho }}(h)\). Therefore, integration over third Euler-angle \(\alpha \) is no longer needed in the definition of the structure tensor (89) as it just produces a constant \(2\pi \) factor.

For the second part we apply Lemma 2 and thereby it remains to be shown that condition \(\mathbf{c }^{*}(g h_{\alpha })= \mathbf Z _{\alpha }^T \mathbf{c }^{*}(g)\) is satisfied. This directly follows from (96):

which shows our condition. \(\square \)

6.3.3 Torsion-Free Exponential Curve Fits of the First Order via a Twofold Approach

Theorem 4 provides us exponential curve fits that possibly carry torsion. From Eq. (22) we deduce that the torsion norm of such an exponential curve fit is given by \(|\tau |=\frac{1}{\Vert \mathbf{c }^{(1)}\Vert } (c^{1}c^{4}+c^{2}c^5 +c^{3}c^6)|\kappa |\). Together with the fact that we exclude the null space \(\mathcal {N}\) from our optimization domain by including constraint \(c^6=0\), this results in insisting on zero torsion along horizontal exponential curves where \(c^{1}=c^{2}=0\). Along other exponential curves torsion appears if \(c^{1}c^{4}+c^{2}c^5\ne 0\).

Now the problem is that insisting, a priori, on zero torsion for horizontal curves while allowing non-zero torsion for other curves is undesirable. On top of this, torsion is a higher order less-stable feature than curvature. Therefore, we would like to exclude it altogether from our exponential curve fits presented in Theorems 4 and 5, by a different theory and algorithm. The results of the algorithm show that even if structures do have torsion, the local exponential curve fits do not need to carry torsion in order to achieve good results in the local frame adaptation, see, e.g., Fig. 8.

The constraint of zero torsion forces us to split our exponential curve fit into a twofold algorithm:

- Step 1 :

-

Estimate at \(g \in SE(3)\) the spatial velocity part \(\mathbf{c }^{(1)}(g)\) from the spatial structure tensor.

- Step 2 :

-

Move to a different location \(g_{new} \in SE(3)\) where a horizontal exponential curve fit makes sense and then estimate the angular velocity \(\mathbf{c }^{(2)}\) from the rotation part of the structure tensor over there.

This forced splitting is a consequence of the next lemma.

Lemma 4

Consider the class of exponential curves with non-zero spatial velocity \(\mathbf{c }^{(1)}\ne \mathbf{0 }\) such that their spatial projections do not have torsion. Within this class the constraint \(c^{6}=0\) does not impose constraints on curvature if and only if the exponential curve is horizontal.

Proof

For a horizontal curve \(\tilde{\gamma }^{\mathbf{c }}_{g}(t)\) we have \(\chi =0 \Leftrightarrow c^{1}=c^{2}=0\) and indeed \(|\tau | = \frac{{\mathbf{c }}^{(1)} \cdot {\mathbf{c }}^{(2)} |\kappa |}{\Vert {\mathbf{c }}^{(1)}\Vert }=c^{6}|\kappa |=0\) and we see that constraints \(c^{6}=0\) and \(|\tau |=0\) reduce to only one constraint. The curvature magnitude stays constant along the exponential curve and the curvature vector at \(t=0\), recall Eq. (21), is in this case given by

which can be any vector orthogonal to spatial velocity \(\mathbf{c }^{(1)}=(0,0,c^{3})^T\). Now let us check whether the condition is necessary. Suppose \(t \mapsto \tilde{\gamma }_{g}^{\mathbf{c }}(t)\) is not horizontal, and suppose it is torsion free with \(c^6=0\). Then we have \(c^{1}c^{4}+c^{2}c^{5}=0\), as a result the initial curvature

is both orthogonal to vector \(\mathbf{c }^{(1)}=(c^1,c^2,c^3)^T\) and orthogonal to \((-c^2,c^1,0)^T\), and thereby constrained to a one dimensional subspace. \(\square \)

From these observations we draw the following conclusion for our exponential curve fit algorithms.

Conclusion In order to allow for all possible curvatures in our torsion-free exponential curve fits, we must relocate the exponential curve optimization at \(g \in SE(3)\) in \(\tilde{U}:SE(3) \rightarrow \mathbb {R}\) to a position \(g_{new} \in SE(3)\) where a horizontal exponential curve can be expected. Subsequently, we can use Lemma 3 to transport the horizontal and torsion-free curve through \(g_{new}\), back to a torsion-free exponential curve through g.

This conclusion is the central idea behind our following twofold algorithm for exponential curve fits.

6.3.4 Algorithm Twofold Approach

The algorithm follows the subsequent steps:

Step 1a Initialization. Compute structure tensor \(\mathbf {S}^{\mathbf{s },\varvec{\rho }}(g)\) from input image \(U:\mathbb {R}^{3} \times S^{2} \rightarrow \mathbb {R}^{+}\) via Eq. (97).

Step 1b Find the optimal spatial velocity:

for \(g=(\mathbf{x },\mathbf{R }_{\mathbf{n }}\)), which boils down to finding the eigenvector with minimal eigenvalue of the \(3\times 3\) spatial sub-matrix of the structure tensor (89).

Step 2a Given \(\mathbf{c }^{(1)}(g)\) we aim for an auxiliary set of coefficients, where we also take into account rotational velocity. To achieve this in a stable way we move to a different location in the group:

and apply the transport of Lemma 3 afterwards. At \(g_{new}\), we enforce horizontality, see Remark 13 below, and we consider the auxiliary optimization problem

Here zero deviation from horizontality (34) and zero torsion (22) is equivalent to the imposed constraint:

Step 2b The auxiliary coefficients \(\mathbf{c }_{new}(g_{new})=(0,0\), \(c^{3}(g_{new}), c^{4}(g_{new}),c^{5}(g_{new}),0)^{T}\) of a torsion-free, horizontal exponential curve fit \(\tilde{\gamma }_{g_{new}}^{\mathbf{c }_{new}}\) through \(g_{new}\). Now we apply transport (via Lemma 3) of this horizontal exponential curve fit towards the corresponding exponential curve through g:

This gives the final, torsion-free, exponential curve fit \(t \mapsto \tilde{\gamma }_{g}^{\mathbf{c }^{*}(g)}(t)\) in SE(3), yielding the final output projected curve fit

with \(g=(\mathbf{x },\mathbf{R }_{\mathbf{n }})\), recall Eq. (74).

Remark 13

In step 2a of our algorithm we jump to a new location \(g_{new}=(\mathbf{x },\mathbf{R }_{\mathbf{n }_{new}})\) with possibly different orientation \(\mathbf{n }_{new}\) such that spatial tangent vector

points in the same direction as \(\mathbf{n }_{new} \in S^2\), recall Eq. (31), from which it follows that \(\mathbf{n }_{new}\) is indeed given by (101). If \(\mathbf{c }^{(1)}=(c^{1},c^{2},c^{3})^T=\mathbf{a }=(0,0,1)^T\) then \(\mathbf{n }_{new}=\mathbf{n }\).

Lemma 5

The preceding algorithm is well defined on the quotient \(\mathbb {R}^{3}\rtimes S^{2}=SE(3)/(\{{\mathbf {0}}\}\times SO(2))\).

Proof

To show that the preceeding algorithm is well defined on the quotient, we need to show that the final result (104) is independent on both the choice of of \(\mathbf{R }_{\mathbf{n }} \in SO(3)\) s.t. \(\mathbf{R }_{\mathbf{n }}\mathbf e _{z}=\mathbf{n }\) and the choice of \(\mathbf{R }_{\mathbf{n }_\mathrm{new}} \in SO(3)\) s.t. \(\mathbf{R }_{\mathbf{n }_\mathrm{new}}\mathbf e _{z}=\mathbf{n }_{new}\).

First, we show independence on the choice of \(\mathbf{R }_{\mathbf{n }}\). We apply Lemma 2 and thereby it remains to be shown that condition \(\mathbf{c }^{*}_\mathrm{final}(g h_{\alpha })= \mathbf Z _{\alpha }^T \mathbf{c }^{*}_\mathrm{final}(g)\) is satisfied. This follows directly from Eq. (103) if as long as \(\mathbf{n }_{new}\) found in Step 2a is independent of the choice of \(\mathbf{R }_{\mathbf{n }}\). This property indeed follows from \(\mathbf{c }^{(1)}(g h_{\alpha }) = \mathbf{R }_\mathbf{e _{z},\alpha }^T \mathbf{c }^{(1)}(g)\) which can be proven analogously to (99). Then we have

So we conclude that (104) is indeed independent on the choice of \(\mathbf{R }_{\mathbf{n }}\).

Finally, Eq. (104) is independent of the choice of \(\mathbf{R }_{\mathbf{n }_\mathrm{new}}\). This follows from \(\mathbf{c }_\mathrm{new}(g h_{\alpha }) = \mathbf Z _{\alpha }^T \mathbf{c }_\mathrm{new}(g)\) in Step 2a. Then \(\mathbf{c }^{*}_\mathrm{final}\) in Eq. (103) is independent of the choice of \(\mathbf{R }_{\mathbf{n }_\mathrm{new}}\) because \(\mathbf Z _{\alpha }^{T}\) in \(\mathbf{c }_\mathrm{new} \mapsto \mathbf Z _{\alpha }^{T} \mathbf{c }_\mathrm{new}\) is canceled by \(\mathbf{R }_\mathrm{new} \mapsto \mathbf{R }_\mathrm{new} \mathbf{R }_\mathbf{e _{z},\alpha }\) in Eq. (103). \(\square \)

In Fig. 8 we provide an example of spatially projected exponential curve fits in SE(3) via the twofold approach. Here we see that the resulting gauge frames better follow the curvilinear structures of the data (in comparison to the normal left-invariant frame).

6.4 Exponential Curve Fits in SE(3) of the Second Order

In this section we will generalize Theorem 2 to the case \(d=3\), where again we include the restriction to torsion-free exponential curves.

6.4.1 The Hessian on SE(3)

For second-order curve fits we consider the following optimization problem:

with \(\tilde{V}=\tilde{G}_{\mathbf{s }}*\tilde{U}\). Before solving this optimization problem in Theorem 6, we first define the \(6\times 6\) non-symmetric Hessian matrix by

and where \(i=1,\ldots ,6\) denotes the row index, and \(j=1,\ldots , 6\) denotes the column index. Again we write \(\mathbf{H }^{\mathbf{s }}:=\mathbf{H }^{\mathbf{s }} (\tilde{U})\).

Theorem 6

(Second-Order Fit via Symmetric Sum Hessian) Let \(g \in SE(3)\) be such that the symmetrized Hessian matrix \(\frac{1}{2} \mathbf{M }_{\mu }^{-1}( \mathbf{H }^{\mathbf{s }}(g)+ (\mathbf{H }^{\mathbf{s }}(g))^T) \mathbf{M }_{\mu }^{-1}\) has eigenvalues with the same sign. Then the normalized eigenvector \(\mathbf{M }_{\mu }\mathbf{c }^{*}(g)\) with smallest absolute non-zero eigenvalue of the symmetrized Hessian matrix provides the solution \(\mathbf{c }^{*}(g)\) of optimization problem (106).

Proof

Similar to the proof of Theorem 2 (only now with summations from 1 to 5). Again we include our additional constraint \(c^6=0\) by taking the smallest non-zero eigenvalue. \(\square \)

Remark 14

The restriction to \(g \in SE(3)\) such that the eigenvalues of the symmetrized Hessian carry the same sign is necessary for a unique solution of the optimization. Note that in case of our first-order approach via the positive definite structure tensor, no such cumbersome constraints arise. In case \(g \in SE(3)\) is such that the eigenvalues of the symmetrized Hessian have different sign there are 2 options:

-

1.

Move towards a neighboring point where the Hessian eigenvalues have the same sign and apply transport (Lemma 3, Fig. 7) of the exponential curve fit at the neighboring point.

-

2.

Take \(\mathbf{c }^{*}(g)\) still as the eigenvector with smallest absolute eigenvalue (representing minimal absolute principal curvature), though this no longer solves (106).

In black the spatially projected part of exponential curve fits \(t \mapsto \gamma ^{\mathbf{c }}_{g}(t)\) of the second kind (fitted to the real part of the 3D invertible orientation score, for details see Fig. 10) of the 3D image visualized via volume rendering. Left output of the twofold approach outlined in Sect. 6.4.2. Right output of the twofold approach outlined in Appendix 3 with \(s_p=\frac{1}{2}\), \(s_o=\frac{1}{2} (0.4)^2\), \(\mu =10\)

6.4.2 Torsion-Free Exponential Curve Fits of the Second Order via a Twofold Algorithm

In order to obtain torsion-free exponential curve fits of the second order via our twofold algorithm, we follow the same algorithm as in Subsection 6.3.3, but now with the Hessian field \(\mathbf{H }^{\mathbf{s }}\) (107) instead of the structure tensor field.

Step 1a Initialization. Compute Hessian \(\mathbf {H}^{\mathbf{s }}(g)\) from input image \(U:\mathbb {R}^{3} \times S^{2} \rightarrow \mathbb {R}^{+}\) via Eq. (107).

Step 1b Find the optimal spatial velocity by (100) where we replace \(\mathbf{M }_{\mu ^2} \mathbf {S}^{\mathbf{s },\varvec{\rho }}(g) \mathbf{M }_{\mu ^2} \) by \(\mathbf {H}^{\mathbf{s }}(g)\).

Step 2a We again fit a horizontal curve at \(g_{new}\) given by (101). The procedure is done via (102) where we again replace \(\mathbf{M }_{\mu ^2} \mathbf {S}^{\mathbf{s },\varvec{\rho }}(g) \mathbf{M }_{\mu ^2} \) by \(\mathbf {H}^{\mathbf{s }}(g)\).

Step 2b Remains unchanged. We again apply Eq. (103) and Eq. (104).

There are some serious computational technicalities in the efficient computation of the entries of the Hessian for discrete input data, but this is outside the scope of this article and will be pursued in future work.

Remark 15

In Appendix 3 we propose another twofold second-order exponential curve fit method. Here one solves a variational problem for exponential curve fits where exponentials are factorized over, respectively, spatial and angular part. Empirically, this approach performs good (see, e.g., Fig. 9).

7 Image Analysis Applications