Abstract

Serious gaming claims to provide an interactive and motivational approach to learning; hence, it is being increasingly used in various disciplines, including dentistry. GRAPHIC (Games Research Applied to Public Health with Innovative Collaboration)-II, a serious game for dental public health, was used by dental undergraduates at King’s College London, in the 2013–2014 academic year. The aim was to explore the use of GRAPHIC and student perspectives on the game. Students were divided into two groups with 79 students in each group, based on timetabling schedules to use the game as part of their learning. The average number of submission attempts by students in group 1 and group 2 used to complete the game was 18.3 (SD = 14.45) and 8.9 (SD = 16.80), respectively (p < 0.001). Logged data also showed that more students in group 2 completed the game with only one attempt (n = 23), compared with group 1 (n = 4). Amongst these students there were four different patterns of satisfactory answers which constituted their chance to ‘win’ and a range of times. Across the two groups, a number of students completed the game with a high number (>30) of attempts (n = 18). These findings suggest that whilst some students may have completed the game using a collaborative approach, others may have used a random approach to complete the game. These two strategies are considered to hinder students from achieving learning outcomes within the game, and may be related to the limitations of the game in managing the role of failure. Feedback from students towards the game was positive overall with further development suggested. In summary, GRAPHIC contributed to dental public health education, and the logging system of gaming activities can be considered as a helpful feature of serious games, to permit academic staff to identify and assist students in achieving learning outcomes, and inform future game refinement.


1 Introduction

Serious gaming is gaining momentum in an educational context as an interactive and motivational approach to learning (Jabbar and Felicia 2015; Ke et al. 2016; Boyle et al. 2016). Because of these advantages, and in comparison to traditional educational delivery methods, serious games are being increasingly used for training in governmental, military, health, and educational arenas (Zyda 2005; Boyle et al. 2016).

Serious games have been used for educating and training healthcare students and professionals, and there is evidence that they have positive effects on knowledge or skill improvement (Blakely et al. 2009; Akl et al. 2010; Graafland et al. 2012; Akl et al. 2013; Wang et al. 2016). In addition, serious games can provide opportunities for healthcare students to practise in safe environments (Blakely et al. 2009). Furthermore, Kron et al. (2010) and Lynch-Sauer et al. (2011) found that medical and nursing students, respectively, had positive attitudes towards computer games. Although serious games have not been widely used in dental education, they have been used in several subjects. For example, there are serious games for pre-clinical subjects such as dentine bonding (Amer et al. 2011) and alginate mixing (Hannig et al. 2013), and positive impacts on learners were found for these dental serious games.

Dental public health is a field of dentistry, defined as “the science and art of preventing oral disease, promoting oral health and the quality of life through the organized efforts and informed choices of the society, organisations, public and private, communities and individuals” (Gallagher 2005). Therefore, it was considered important and helpful to apply serious gaming to dental public health education, where students can gain relevant experiences in online learning situations. The aims of this study were to investigate the use of a serious game for dental public health education by analysing a key feature of the game, the log function of the gaming activities of play-learners (dental students), and to explore student perspectives towards the game.

The paper will firstly describe the public health game GRAPHIC. The methods of this study will then be presented, followed by findings, discussion, and finally a summary of this study.

2 GRAPHIC-II

An intervention using the first iteration of the GRAPHIC (Games Research Applied to Public Health with Innovative Collaboration) Game or GRAPHIC-I was reported by O’Neill et al. (2012) as having potential for dental public health education following its first pilot iteration with undergraduate dental students. The learning outcome of GRAPHIC was to enhance dental student understanding of how to design health promotion programmes for a given population—in this case primary school children (O’Neill et al. 2012). In other words, by completing the game, students should be able to:

1. identify the oral health needs and demands of a given population;

2. recommend a series of possible actions in support of improving oral health;

3. agree on a plan of action; and

4. outline a monitoring and evaluation strategy.

To complete the game, students were firstly required to consider information on a virtual town, which constituted a learning scenario for the game. A variety of health promotion interventions were provided as possible options (Fig. 1), including ‘Restricting sugars intake’, ‘School lunchbox policy’, and ‘Healthy schools programme’. From these choices, students needed to use an evidence-based approach, by (a) reading recommended literature relating to each intervention, (b) considering if the evidence was strong enough, and then (c) evaluating whether an intervention was relevant to the learning scenario or not. After that, students were required to submit the five best options for health promotion interventions provided in the game.

Evaluation data and feedback from staff and students on GRAPHIC-I revealed that there was considerable room to improve the gaming interface and its functionality, in particular through easier navigation and selection of health promotion initiatives (Sipiyaruk 2013). GRAPHIC-II was devised as a new, improved online serious game for the next cohort of dental students. It was developed as a stand-alone web-based game and, in this iteration, students had to achieve a score of 100% through a reasoned, evidence-based approach. Although the rules to complete the game were similar to the previous version, there was an improved design:

1. The user interface was improved both visually, with a colourful graphical display, and functionally, to increase the speed and ease of selecting possible interventions, thus supporting learner progress through the game. This involved adding ‘GAME PROGRESS’ and ‘ACTION PLAN’ boxes (Fig. 2).

2. Instead of a virtual community, this version used information from an African country as a learning scenario.

3. An analytics function was incorporated into the game engine of the new web-based version to log all gaming activity, including submitted answers. This function could therefore be used to reveal strategies employed by students to complete the game.

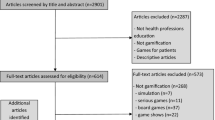

3 Methods

GRAPHIC-II was made available as an in-course requirement for one academic year (2013–14) of dental undergraduate students’ dental public health course at King’s College London Dental Institute. The learning outcomes were the same as in GRAPHIC-I; students were required to provide a set of the best five options to promote dental health in a given population scenario. The game system was first tested technically by the development team and by academic dental public health staff at KCL. Afterwards, the scientific content of the game and scoring system were reviewed and validated by the academic team.

To complete the game, students had to obtain a score of 100% and were permitted unlimited attempts to submit an answer. Rather than learners interacting with the game as a group, it was played by individuals as part of course requirements; thus providing the opportunity to measure individual activity through analytics. Students were first able to access the game on-campus where they were given staff support, and subsequently use and complete the game off-campus. Students were divided into two groups and used the game on successive weeks. They were required to firstly perform the game in class to build familiarity, and were then permitted to complete the game in their own time. Afterwards, students were required to complete a reflective assignment relating to their chosen health promotion initiatives.

The analytical data were automatically collected by the logging system of the game; this included when and for how long each student logged into the game, how many times each student submitted their answers, and what answers they submitted. The anonymised data were exported in an Excel file for analysis. Descriptive analysis was performed to summarise and describe the basic features of the data, and an independent-samples t test was used to compare the two groups. Additionally, students were encouraged to provide feedback, and this was analysed thematically using a framework analysis (Spencer et al. 2013).

Ethical approval for this project was granted by the Biomedical Sciences, Dentistry, Medicine and Natural & Mathematical Sciences Research Ethics Subcommittee (BDM RESC), King’s College London (KCL) College Research Ethics Committees (CREC), application number BDM/13/14-117 on 15th July 2014. This set of anonymised data were analysed using SPSS Version 22.

4 Results

In total, 163 dental students from King’s College London were assigned to complete GRAPHIC-II. Five students were not available to complete the game on time and were excluded from the analysis; thus, 79 students played GRAPHIC-II each week. The data from these 158 students are reported in this study, including the number of submission attempts, grouping of answers (list of health promotion interventions), and the time taken by students to achieve the required score of 100%.

Amongst the 158 students who completed the game, the average number of attempts to achieve a score of 100% was 13.6 (range 1–126, SD = 16.31). The average for group 1 students was 18.3 (range 1–57, SD = 14.45), whilst that for group 2 was 8.9 (range 1–126, SD = 16.80) (p < 0.001), as presented in Table 1. There were 23 students from group 2 (and only 4 students from group 1) who achieved 100% with the first attempt.
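The group comparison can be checked from the reported summary statistics alone. The following is a minimal sketch, not the SPSS procedure used in the study: the function names are illustrative, the pooled-variance form of the independent-samples t test is assumed, and the p-value uses a normal approximation to the t distribution, which is reasonable only because the degrees of freedom are large.

```python
import math


def pooled_t_from_summary(mean1, sd1, n1, mean2, sd2, n2):
    """Independent-samples t statistic (pooled variance) from summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / standard_error, df


def two_sided_p_normal_approx(t):
    """Two-sided p-value via a normal approximation (assumption: large df)."""
    return math.erfc(abs(t) / math.sqrt(2))


# Means, SDs and group sizes as reported for group 1 and group 2.
t_stat, df = pooled_t_from_summary(18.3, 14.45, 79, 8.9, 16.80, 79)
p_value = two_sided_p_normal_approx(t_stat)
print(f"t({df}) = {t_stat:.2f}, approximate p = {p_value:.5f}")
```

Under these assumptions the t statistic is about 3.77 on 156 degrees of freedom, which is consistent with the reported p < 0.001.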

Regarding the best five options, students had to choose from the provided list of health promotion programmes; 11 patterns were used overall by this year group. Amongst the class, there were 27 students who completed the game by using only one attempt to submit their answers; these one-attempt submission students submitted four different patterns of answers. The speed of submission from logging onto the game varied widely from 3 min to 2 days (Median = 7 min, Mode = 7 min, Mean = 132.3 min, SD = 647.09), with evidence that students took varying times to submit one accurate response.

Exploring the behaviour of students who submitted their answers with a high number of attempts, 18 students took more than 30 attempts to complete the game, in varying patterns of time. The three highest numbers of submission attempts were 126, 74, and 57, as presented in Table 2. In terms of submission rate (attempts/minute), the three highest rates amongst these students were 2.74, 2.47, and 2.11 attempts/minute (Table 3).
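The submission rate described above is simply the number of attempts divided by the minutes spent in the game. The sketch below shows how such a rate could be derived from per-student log summaries and used to flag students for follow-up. The record structure, field names, and the rate threshold of 1.0 attempts/minute are assumptions made for illustration; only the cut-off of more than 30 attempts comes from the analysis, and the example records are invented rather than taken from the study data.

```python
from dataclasses import dataclass


@dataclass
class StudentLog:
    """Hypothetical per-student summary derived from the game log."""
    student_id: str
    attempts: int           # number of answer submissions
    minutes_played: float   # minutes from login to the 100% submission


def submission_rate(log):
    """Attempts per minute; None when no playing time was recorded."""
    if log.minutes_played <= 0:
        return None
    return log.attempts / log.minutes_played


def flag_for_review(logs, max_attempts=30, max_rate=1.0):
    """Return IDs of students whose pattern suggests trial-and-error play."""
    flagged = []
    for log in logs:
        rate = submission_rate(log)
        too_many = log.attempts > max_attempts
        too_fast = rate is not None and rate > max_rate
        if too_many or too_fast:
            flagged.append(log.student_id)
    return flagged


# Invented example records, not data from the study.
logs = [
    StudentLog("s01", attempts=1, minutes_played=7.0),
    StudentLog("s02", attempts=40, minutes_played=90.0),
    StudentLog("s03", attempts=22, minutes_played=10.0),
]
print(flag_for_review(logs))  # ['s02', 's03']
```

A flag of this kind indicates only that a student may benefit from support; it does not by itself show that learning outcomes were missed.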

Student feedback was largely positive, focusing on the navigation of the game, which was considered user friendly and visually intuitive. Examples of this type of feedback were:

… it’s nice and colourful and eye-catching.

Easy to use, interactive and a truly worth-while task …

Further suggested developments included receiving feedback informing the learners why a set of answers they selected was incorrect and having a more relevant learning scenario to their current UK context. As learners commented:

… perhaps giving feedback on the reasons as to why the incorrect options are regarded as being incorrect.

Using a more relevant example …

5 Discussion

5.1 Log Data as Indirect Observation

The data in this study were collected automatically by the log engine of the game. This data collection technique can be considered as a strength of serious games. Smith et al. (2015) claim that this technique represents an indirect observation, where the process of data collection does not distract players or learners, compared with direct observation. In addition, this technique allows a researcher to collect data remotely, as seen in GRAPHIC-II. The analysis of log data can be considered as stealth assessment, allowing the researcher to assess how users interact with the game tasks (Snow et al. 2015). According to GRAPHIC-II, the log data included student identifiers, when and how long they logged into the game, how many times they submitted their answers, and what answers they submitted.

5.2 Game Completion with Low Submissions

From the log data of GRAPHIC-II, group 2 students used significantly fewer submission attempts than group 1 (p < 0.001). This suggests that the students in group 2 performed better in completing the game and achieving the score of 100% than their group 1 counterparts. Given the game design, however, completing the game using a one-attempt submission was not expected. These students are intellectually able and thus some were able to achieve the correct result quickly at their first attempt, whilst others may have taken care over their response. It is possible that the group 1 students discussed their learning with peers, which could explain why more students in group 2 submitted five correct answers at the first attempt. The issue of collusion has been noted as relevant for online assessment, especially when students do not all have to complete tasks at the same time (Rowe 2004), which is common with formative assessments. However, the fact that students used 11 different ways to achieve the final score of 100% meant there was not just one right answer; this reflects reality, where different groupings of evidence-based health promotion initiatives may be used to promote oral health, and it reduces the need for collusion. Furthermore, students were permitted to complete the game at their leisure and some chose to do so; however, an important issue to explore is that students may not gain the requisite learning through the game if they complete it at the first attempt and do so quickly.

It can be suggested that the level of difficulty of the game was suitable for most students; however, incorporating more learning scenarios with a higher level of difficulty for students who complete the assignment quickly may be a helpful way of improving learning. It would also be advantageous if game activities were scheduled so that all students perform and complete the game at the same time; however, timetabling challenges mean that not all students are always available on the same day, and it is difficult to provide access to sufficient computers if all students in the class play the game at the same time. In future, as the personal computing capabilities of students increase, it should become easier to run large classes.

Encouraging autonomous and self-directed learning is considered beneficial (Grow 1991). Feedback or clues can also be considered as a solution, so that students can improve their knowledge using feedback in the game, rather than relying on other students. Greater autonomy also seems appropriate for dental students, who will ultimately be working in their own practice when they have finished their degree in dentistry. Therefore, it would be ideal if students could realise the benefits of the game and complete it autonomously. Furthermore, pre- and post-knowledge tests can be considered as supporting tools. A pre-knowledge test can identify the knowledge gaps of students, who then need to pay attention to completing the game in order to achieve a higher score in a post-knowledge test.

5.3 Game Completion with High Submissions

Another student strategy to be examined is making random submissions of answers until the game is successfully completed, possibly without reading the provided learning materials, i.e. following the game outcomes, in order to submit the appropriate list of health promotion programmes to achieve the score of 100%. As a rule, the students would need to read the evidence-based learning materials provided in GRAPHIC to select the best five answers for submission. This requires a period of time to consider which choices should be selected for the submission. However, according to our data, some students appeared to submit their answers randomly, as they spent less than a minute considering and/or submitting answers. Although these students completed the game with a great number of submission attempts, they might not have achieved the game outcomes because they missed the process of thinking. To prevent this issue, the game should include a new function limiting the number of submission attempts to an appropriate number, thus placing less emphasis on simply obtaining the right answer and encouraging students to take more time to reach their final answer. This feature would also enhance the challenge of the game, as not all students would be able to achieve a score of 100%.
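A cap on submission attempts, as proposed above, could be sketched as follows. This is an illustration under stated assumptions rather than a description of the GRAPHIC game engine: the class name, its methods, and the cap of three attempts are all hypothetical, and an appropriate cap would need to be chosen by the academic team.

```python
class AttemptLimiter:
    """Hypothetical guard that caps answer submissions per student."""

    def __init__(self, max_attempts):
        if max_attempts < 1:
            raise ValueError("max_attempts must be at least 1")
        self.max_attempts = max_attempts
        self._used = {}  # student_id -> submissions recorded so far

    def remaining(self, student_id):
        """Number of submissions the student may still make."""
        return self.max_attempts - self._used.get(student_id, 0)

    def record_submission(self, student_id):
        """Count one submission; return False if the cap was already reached."""
        if self.remaining(student_id) <= 0:
            return False
        self._used[student_id] = self._used.get(student_id, 0) + 1
        return True


limiter = AttemptLimiter(max_attempts=3)  # cap chosen only for illustration
results = [limiter.record_submission("s01") for _ in range(4)]
print(results, limiter.remaining("s01"))  # [True, True, True, False] 0
```

Showing the remaining count to the student before each submission would make the cost of a guess visible, which is the behaviour change the limit is intended to encourage.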

5.4 The Role of Failure

To understand why the students who had these two unexpected strategies might not achieve learning outcomes, compared to other students who have learnt from the game, it is necessary to explain the role of ‘failure’. This is an underpinning game theory, suggesting that ‘failure’ in games is not generally considered as ‘failure’, because players can consider their failure in order to re-evaluate or adjust their strategies to achieve goals of games (Gee 2008; Klopfer et al. 2009). This can be considered as a learning process when using a serious game. In other words, within GRAPHIC, students firstly may not be able to get the score of 100%; from feedback, they need to re-evaluate their submitted set of health promotion interventions and reconsider other options when submitting an answer in order to get a better score until completion of the game.

5.5 Benefits and Limitations of the Log Data Analytics

The log activity function of GRAPHIC-II can be considered a valuable feature of serious games. Learning analytics can be applied to identify students who are stuck with their learning (Serrano-Laguna et al. 2014). In this study, this feature identified strategies or gaming behaviour (e.g. random answers) that students used to complete the game, which might hinder some from achieving the desired learning outcomes. It can then help identify students who would benefit from support. However, within GRAPHIC-II, there is a limitation of merely using the analytics of the log data which must be recognised. Some students may have fully understood the underpinning theoretical framework they had been taught before encountering GRAPHIC. Therefore, they could more easily obtain correct answers at the first attempt and thus achieve successful learning outcomes. Consequently, further evaluation to check students’ understanding (e.g. an interview, a reflective assignment, or pre- and post-knowledge tests) is required to confirm whether students have achieved the learning outcomes. For example, as mentioned in the Methods section, this cohort of students needed to complete a reflective assignment after game completion; this would examine whether or not they understood the reasons for the answers they selected.

5.6 Further Development of GRAPHIC

The user feedback suggests that GRAPHIC-II was much improved from GRAPHIC-I, especially in relation to game navigation. This can be regarded as a major improvement, as problematic navigation can distract students from their learning. In addition, the new interface in GRAPHIC seemed to be more engaging for students. However, further developments were suggested, such as feedback to users on their answers, greater relevance of the learning scenario to their context, and access to additional learning scenarios with higher levels of difficulty. These would enhance the quality of learning within GRAPHIC.

6 Conclusion

GRAPHIC-II offers a gaming environment where students can practise critical thinking and decision-making skills regarding health promotion in a safe environment. In GRAPHIC-II, users were required to consider the provided learning scenario, evaluate the given list of health promotion programmes, and subsequently decide what the appropriate answers were. In addition, the activity logging system of serious games can be a valuable feature in supporting academic staff when evaluating whether students acquire critical thinking and decision-making skills in dental public health. This feature can be considered as a stealth assessment, which allows academic staff to observe how students complete the game without interrupting their activities. By using this technique, academic staff can identify students who may be stuck with a learning process in order to help them achieve the desired learning outcomes. However, multiple techniques, including pre- and post-knowledge tests or a reflective assignment, are required to confirm whether the learning outcomes are achieved through the game. Overall, GRAPHIC-II provided a greater contribution to dental public health education, compared with GRAPHIC-I; however, further improvements are required to enhance its quality.

References

Akl, E. A., Pretorius, R. W., Sackett, K., Erdley, W. S., Bhoopathi, P. S., Alfarah, Z., et al. (2010). The effect of educational games on medical students’ learning outcomes: A systematic review: BEME Guide No 14. Medical Teacher, 32(1), 16–27.

Akl, E. A., Kairouz, V. F., Sackett, K. M., Erdley, W. S., Mustafa, R. A., Fiander, M., Gabriel, C., & Schünemann, H. (2013). Educational games for health professionals. Cochrane Database of Systematic Reviews. doi:10.1002/14651858.CD006411.pub4.

Amer, R. S., Denehy, G. E., Cobb, D. S., Dawson, D. V., Cunningham-Ford, M. A., & Bergeron, C. (2011). Development and evaluation of an interactive dental video game to teach dentin bonding. Journal of Dental Education, 75(6), 823–831.

Blakely, G., Skirton, H., Cooper, S., Allum, P., & Nelmes, P. (2009). Educational gaming in the health sciences: Systematic review. Journal of Advanced Nursing, 65(2), 259–269.

Boyle, E. A., Hainey, T., Connolly, T. M., Gray, G., Earp, J., & Ott, M. (2016). An update to the systematic literature review of empirical evidence of the impacts and outcomes of computer games and serious games. Computers & Education, 94, 178–192.

Gallagher, J. E. (2005). Wanless: A public health knight. Securing good health for the whole population. Community Dental Health, 22(2), 66–70.

Gee, J. P. (2008). Learning and games. In K. Salen (Ed.), The ecology of games: Connecting youth, games, and learning (pp. 21–40). Cambridge: MIT Press.

Graafland, M., Schraagen, J. M., & Schijven, M. P. (2012). Systematic review of serious games for medical education and surgical skills training. British Journal of Surgery, 99(10), 1322–1330.

Grow, G. O. (1991). Teaching learners to be self-directed. Adult Education Quarterly, 41(3), 125–149.

Hannig, A., Lemos, M., Spreckelsen, C., Ohnesorge-Radtke, U., & Rafai, N. (2013). Skills-o-mat: Computer supported interactive motion-and game-based training in mixing alginate in dental education. Journal of Educational Computing Research, 48(3), 315–343.

Jabbar, A. I. A., & Felicia, P. (2015). Gameplay engagement and learning in game-based learning: A systematic review. Review of Educational Research, 85(4), 740–779.

Ke, F., Xie, K., & Xie, Y. (2016). Game-based learning engagement: A theory-and data-driven exploration. British Journal of Educational Technology, 47(6), 1183–1201.

Klopfer, E., Osterweil, S., & Salen, K. (2009). Moving Learning Games Forward. Cambridge: The Education Arcade, Massachusetts Institute of Technology.

Kron, F. W., Gjerde, C. L., Sen, A., & Fetters, M. D. (2010). Medical student attitudes toward video games and related new media technologies in medical education. BMC Medical Education, 10, 50.

Lynch-Sauer, J., VandenBosch, T. M., Kron, F., Gjerde, C. L., Arato, N., & Sen, A. (2011). Nursing students’ attitudes toward video games and related new media technologies. Journal of Nursing Education, 50(9), 513–523.

O’Neill, E., Reynolds, P. A., Hatzipanagos, S., & Gallagher, J.E. (2012). GRAPHIC (Games Research Applied to Public Health with Innovative Collaboration)—Designing a serious game pilot for dental public health. Bulletin du Groupement International pour la Recherche Scientifique en Stomatologie et Odontologie, 51(3), e30-e31. http://www.girso.eu/journal/index.php/girso/article/view/255/262 Accessed July 28, 2014.

Rowe, N. C. (2004). Cheating in Online Student Assessment: Beyond Plagiarism. Online Journal of Distance Learning Administration, 7(2). http://www.westga.edu/~distance/ojdla/summer72/rowe72.html Accessed July 30, 2014.

Serrano-Laguna, Á., Torrente, J., Moreno-Ger, P., & Fernández-Manjón, B. (2014). Application of learning analytics in educational videogames. Entertainment Computing, 5(4), 313–322.

Sipiyaruk, K. (2013). Serious games for dental public health education: Experiences and opinions towards serious games. MRes Dissertation, King’s College London.

Smith, S. P., Blackmore, K., & Nesbitt, K. (2015). A meta-analysis of data collection in serious games research. In C. S. Loh, Y. Sheng, & D. Ifenthaler (Eds.), Serious games analytics: Methodologies for performance measurement, assessment, and improvement (pp. 31–55). New York: Springer.

Snow, E. L., Allen, L. K., & McNamara, D. S. (2015). The dynamical analysis of log data within educational games. In C. S. Loh, Y. Sheng, & D. Ifenthaler (Eds.), Serious games analytics: Methodologies for performance measurement, assessment, and improvement (pp. 81–100). New York: Springer.

Spencer, L., Ritchie, J., Ormston, R., O’Connor, W., & Barnard, M. (2013). Analysis: Principles and processes. In J. Ritchie, J. Lewis, C. M. Nicholls, & R. Ormston (Eds.), Qualitative research practice: A guide for social science students and researchers (2nd ed., pp. 269–293). London: Sage.

Wang, R., DeMaria, S., Jr., Goldberg, A., & Katz, D. (2016). A systematic review of serious games in training: Health care professionals. Simulation in Healthcare, 11(1), 41–51.

Zyda, M. (2005). From visual simulation to virtual reality to games. Computer, 38(9), 25–32.

Acknowledgements

The authors would like to sincerely thank Professor Margaret Whitehead for permission to use the diagram of wider determinants of health for GRAPHIC, Dr Eunan O’Neill for his contribution to the development of the original GRAPHIC concept, and Tier 2 Consulting Limited for the technical development of the game.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Sipiyaruk, K., Gallagher, J.E., Hatzipanagos, S. et al. Acquiring Critical Thinking and Decision-Making Skills: An Evaluation of a Serious Game Used by Undergraduate Dental Students in Dental Public Health. Tech Know Learn 22, 209–218 (2017). https://doi.org/10.1007/s10758-016-9296-6


DOI: https://doi.org/10.1007/s10758-016-9296-6