Abstract

We present a computer-aided programming approach to concurrency. The approach allows programmers to program assuming a friendly, non-preemptive scheduler, and our synthesis procedure inserts synchronization to ensure that the final program works even with a preemptive scheduler. The correctness specification is implicit, inferred from the non-preemptive behavior. Let us consider sequences of calls that the program makes to an external interface. The specification requires that any such sequence produced under a preemptive scheduler should be included in the set of sequences produced under a non-preemptive scheduler. We guarantee that our synthesis does not introduce deadlocks and that the synchronization inserted is optimal w.r.t. a given objective function. The solution is based on a finitary abstraction, an algorithm for bounded language inclusion modulo an independence relation, and generation of a set of global constraints over synchronization placements. Each model of the global constraints set corresponds to a correctness-ensuring synchronization placement. The placement that is optimal w.r.t. the given objective function is chosen as the synchronization solution. We apply the approach to device-driver programming, where the driver threads call the software interface of the device and the API provided by the operating system. Our experiments demonstrate that our synthesis method is precise and efficient. The implicit specification helped us find one concurrency bug previously missed when model-checking using an explicit, user-provided specification. We implemented objective functions for coarse-grained and fine-grained locking and observed that different synchronization placements are produced for our experiments, favoring a minimal number of synchronization operations or maximum concurrency, respectively.

1 Introduction

Programming for a concurrent shared-memory system, such as most common computing devices today, is notoriously difficult and error-prone. Program synthesis for concurrency aims to mitigate this complexity by synthesizing synchronization code automatically [5, 6, 9, 15]. However, specifying the programmer’s intent may be a challenge in itself. Declarative mechanisms, such as assertions, suffer from the drawback that it is difficult to ensure that the specification is complete and fully captures the programmer’s intent.

We propose a solution where the specification is implicit. We observe that a core difficulty in concurrent programming originates from the fact that the scheduler can preempt the execution of a thread at any time. We therefore give the developer the option to program assuming a friendly, non-preemptive, scheduler. Our tool automatically synthesizes synchronization code to ensure that every behavior of the program under preemptive scheduling is included in the set of behaviors produced under non-preemptive scheduling. Thus, we use the non-preemptive semantics as an implicit correctness specification.

The non-preemptive scheduling model (also known as cooperative scheduling [26]) can simplify the development of concurrent software, including operating system (OS) kernels, network servers, database systems, etc. [21, 22]. In the non-preemptive model, a thread can only be descheduled by voluntarily yielding control, e.g., by invoking a blocking operation. Synchronization primitives may be used for communication between threads, e.g., a producer thread may use a semaphore to notify the consumer about availability of data. However, one does not need to worry about protecting accesses to shared state: a series of memory accesses executes atomically as long as the scheduled thread does not yield.
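The atomicity guarantee of the non-preemptive model can be illustrated with a minimal sketch that uses Python generators as cooperative threads. The names `worker` and `run_cooperatively` and the round-robin policy are assumptions made for illustration only; they are not part of the approach described here.

```python
def worker(shared, rounds):
    """A cooperative thread: it gives up control only at an explicit yield."""
    for _ in range(rounds):
        value = shared["counter"]       # read ...
        shared["counter"] = value + 1   # ... and write, with no yield in between
        yield                           # voluntary context switch


def run_cooperatively(threads):
    """Round-robin scheduler that switches threads only when a thread yields."""
    pending = list(threads)
    while pending:
        thread = pending.pop(0)
        try:
            next(thread)            # run the thread up to its next yield
            pending.append(thread)  # it yielded, so it is scheduled again later
        except StopIteration:
            pass                    # the thread finished


shared = {"counter": 0}
run_cooperatively([worker(shared, 3), worker(shared, 3)])
print(shared["counter"])
```

Because no switch can occur between the read and the write, no increment is lost, even though the code contains no locks.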

A user evaluation by Sadowski and Yi [22] demonstrated that this model makes it easier for programmers to reason about and identify defects in concurrent code. There exist alternative implicit correctness specifications for concurrent programs. For example, for functional programs one can specify the final output of the sequential execution as the correct output. The synthesizer must then generate a concurrent program that is guaranteed to produce the same output as the sequential version [3]. This approach does not allow any form of thread coordination, e.g., threads cannot be arranged in a producer–consumer fashion. In addition, it is not applicable to reactive systems, such as device drivers, where threads are not required to terminate.

Another implicit specification technique is based on placing atomic sections in the source code of the program [14]. In the synthesized program the computation performed by an atomic section must appear atomic with respect to the rest of the program. Specifications based on atomic sections and specifications based on the non-preemptive scheduling model, used by our tool, can be easily expressed in terms of each other. For example, one can simulate atomic sections by placing \(\mathsf {yield}\) statements before and after each atomic section, as well as around every instruction that does not belong to any atomic section.

We believe that, at least for systems code, specifications based on the non-preemptive scheduling model are easier to write and are less error-prone than atomic sections. Atomic sections are subject to syntactic constraints. Each section is marked by a pair of matching opening and closing statements, which in practice means that the section must start and end within the same program block. In contrast, a \(\mathsf {yield}\) can be placed anywhere in the program.

Moreover, atomic sections restrict the use of thread synchronization primitives such as semaphores. An atomic section either executes in its entirety or not at all. In the former case, all wait conditions along the execution path through the atomic section must be simultaneously satisfied before the atomic section starts executing. In practice, to avoid deadlocks, one can only place a blocking instruction at the start of an atomic section. Combined with syntactic constraints discussed above, this restricts the use of thread coordination with atomic sections—a severe limitation for systems code where thread coordination is common. In contrast, synchronization primitives can be used freely under non-preemptive scheduling. Internally, they are modeled using \(\mathsf {yield}\)s: for instance, a semaphore acquisition instruction is modeled by a \(\mathsf {yield}\) followed by an \(\mathsf {assume}\) statement that proceeds when the semaphore becomes available.

Lastly, our specification defaults to the safe choice of assuming everything needs to be atomic unless a \(\mathsf {yield}\) statement is placed by the programmer. In contrast, code that uses atomic sections can be preempted at any point unless protected by an explicit atomic section.

In defining behavioral equivalence between preemptive and non-preemptive executions, we focus on externally observable program behaviors: two program executions are observationally equivalent if they generate the same sequences of calls to interfaces of interest. This approach facilitates modular synthesis where a module’s behavior is characterized in terms of its interaction with other modules. Given a multi-threaded program \(\mathscr {C}\) and a synthesized program \(\mathscr {C}'\) obtained by adding synchronization to \(\mathscr {C}\), we say that \(\mathscr {C}'\) is preemption-safe w.r.t. \(\mathscr {C}\) if for each execution of \(\mathscr {C}'\) under a preemptive scheduler, there is an observationally equivalent non-preemptive execution of \(\mathscr {C}\). Our synthesis goal is to automatically generate a preemption-safe version of the input program.

We rely on abstraction to achieve efficient synthesis of multi-threaded programs. We propose a simple, data-oblivious abstraction inspired by an analysis of synchronization patterns in OS code, which tend to be independent of data values. The abstraction tracks types of accesses (read or write) to each memory location while ignoring their values. In addition, the abstraction tracks branching choices. Calls to an external interface are modeled as writes to a special memory location, with independent interfaces modeled as separate locations. To the best of our knowledge, our proposed abstraction is yet to be explored in the verification and synthesis literature. The abstract program is denoted as \(\mathscr {C}_{\textit{abs}}\).

Two abstract program executions are observationally equivalent if they are equal modulo the classical independence relation I on memory accesses. This means that every sequence \(\omega \) of observable actions is equivalent to a set of sequences of observable actions that are derived from \(\omega \) by repeatedly commuting independent actions. Independent actions are accesses to different locations, and accesses to the same location iff they are both read accesses. Using this notion of equivalence, the notion of preemption-safety is extended to abstract programs.
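The independence relation and the induced equivalence can be sketched as follows. This is a minimal sketch, not the bounded language inclusion algorithm: it assumes that an action is a pair `(kind, location)` with `kind` in `{"read", "write"}`. It relies on the standard characterization that two words are equal modulo I exactly when they contain the same actions and order every pair of dependent occurrences in the same way. The function names are hypothetical.

```python
from collections import Counter
from itertools import combinations


def independent(a, b):
    """Different locations, or two reads of the same location."""
    (kind_a, loc_a), (kind_b, loc_b) = a, b
    return loc_a != loc_b or (kind_a == "read" and kind_b == "read")


def dependent_order(word):
    """Ordered pairs (earlier, later) of dependent action occurrences."""
    seen = Counter()
    occurrences = []
    for action in word:
        occurrences.append((action, seen[action]))  # k-th occurrence of action
        seen[action] += 1
    return {
        (x, y)
        for x, y in combinations(occurrences, 2)    # x occurs before y
        if not independent(x[0], y[0])
    }


def equivalent(u, v):
    """True iff v can be obtained from u by commuting independent actions."""
    return Counter(u) == Counter(v) and dependent_order(u) == dependent_order(v)


# Accesses to different locations commute ...
print(equivalent([("read", "open"), ("write", "dev")],
                 [("write", "dev"), ("read", "open")]))
# ... but a write and a read of the same location do not.
print(equivalent([("write", "open"), ("read", "open")],
                 [("read", "open"), ("write", "open")]))
```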

Under abstraction, we model each thread as a nondeterministic finite automaton (NFA) over a finite alphabet, with each symbol corresponding to a read or a write to a particular variable. This enables us to construct NFAs \(\mathsf {NP}_{\textit{abs}}\), representing the abstraction of the original program \(\mathscr {C}\) under non-preemptive scheduling, and \(\mathsf {P}_{\textit{abs}}\), representing the abstraction of the synthesized program \(\mathscr {C}'\) under preemptive scheduling. We show that preemption-safety of \(\mathscr {C}'\) w.r.t. \(\mathscr {C}\) is implied by preemption-safety of the abstract synthesized program \(\mathscr {C}_{\textit{abs}}'\) w.r.t. the abstract original program \(\mathscr {C}_{\textit{abs}}\), which, in turn, is implied by language inclusion modulo I of NFAs \(\mathsf {P}_{\textit{abs}}\) and \(\mathsf {NP}_{\textit{abs}}\). While the problem of language inclusion modulo an independence relation is undecidable [2], we show that the antichain-based algorithm for standard language inclusion [11] can be adapted to decide a bounded version of language inclusion modulo an independence relation.

Our synthesis works in a counterexample-guided inductive synthesis (CEGIS) loop that accumulates a set of global constraints. The loop starts with a counterexample obtained from the language inclusion check. A counterexample is a sequence of locations in \(\mathscr {C}_{\textit{abs}}\) whose execution produces an observation sequence that is valid under the preemptive semantics, but not under the non-preemptive semantics. From the counterexample we infer mutual exclusion (mutex) constraints, which, when enforced in the language inclusion check, prevent the same counterexample from being returned again. We accumulate the mutex constraints from all counterexamples iteratively generated by the language inclusion check. Once the language inclusion check succeeds, we construct a set of global constraints using the accumulated mutex constraints and constraints for enforcing deadlock-freedom. This approach is the key difference from our previous work [4], where a greedy approach is employed that immediately places a lock to eliminate a bug. The greedy approach may result in a suboptimal lock placement with unnecessarily overlapping or nested locks.

The global approach allows us to use an objective function f to find an optimal lock placement w.r.t. f once all mutex constraints have been identified. Examples of objective functions include minimizing the number of \(\mathsf {lock}\) statements (leading to coarse-grained locking) and maximizing concurrency (leading to fine-grained locking). We encode such an objective function, together with the global constraints, into a weighted maximum satisfiability (MaxSAT) problem, which is then solved using an off-the-shelf solver.
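To make the role of the objective function concrete, the following sketch solves a tiny weighted MaxSAT instance by brute force. It is an illustration of the general idea only, not the encoding used by our tool. The Boolean variables `A`, `B` and `AB` are hypothetical candidate lock regions: `A` and `B` each rule out one conflict, and the larger region `AB` rules out both. Hard clauses state that each conflict must be covered; soft clauses carry the weights of the objective.

```python
from itertools import product


def weighted_maxsat(variables, hard, soft):
    """Return (model, score) maximizing the weight of satisfied soft clauses
    among all models of the hard clauses. A clause is a list of
    (variable, polarity) literals; soft clauses are (weight, clause) pairs."""
    def holds(clause, model):
        return any(model[var] == polarity for var, polarity in clause)

    best_model, best_score = None, -1
    for values in product([False, True], repeat=len(variables)):
        model = dict(zip(variables, values))
        if not all(holds(clause, model) for clause in hard):
            continue
        score = sum(weight for weight, clause in soft if holds(clause, model))
        if score > best_score:
            best_model, best_score = model, score
    return best_model, best_score


variables = ["A", "B", "AB"]
hard = [[("A", True), ("AB", True)],   # first conflict is covered
        [("B", True), ("AB", True)]]   # second conflict is covered

# Coarse-grained objective: every unused lock region is rewarded equally.
coarse = [(1, [("A", False)]), (1, [("B", False)]), (1, [("AB", False)])]
# Fine-grained objective: avoiding the large region is rewarded most.
fine = [(1, [("A", False)]), (1, [("B", False)]), (3, [("AB", False)])]

print(weighted_maxsat(variables, hard, coarse)[0])
print(weighted_maxsat(variables, hard, fine)[0])
```

In this toy instance the coarse-grained weights select the single large region, whereas the fine-grained weights select the two small regions. A real instance would be handed to an off-the-shelf solver instead of being enumerated.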

Since the synthesized lock placement is guaranteed not to introduce deadlocks, our solution follows good programming practices with respect to locks: no double locking, no double unlocking, and no locks left locked at the end of the execution.

We implemented our synthesis procedure in a new prototype tool called Liss (Language Inclusion-based Synchronization Synthesis) and evaluated it on a series of device driver benchmarks, including an Ethernet driver for Linux and the synchronization skeleton of a USB-to-serial controller driver, as well as an in-memory key-value store server. First, Liss was able to detect and eliminate all but two known concurrency bugs in our examples; these included one bug that we previously missed when synthesizing from explicit specifications [6], due to a missing assertion. Second, our abstraction proved highly efficient: Liss runs an order of magnitude faster on the more complicated examples than our previous synthesis tool based on the CBMC model checker. Third, our coarse abstraction proved surprisingly precise for systems code: across all our benchmarks, we only encountered three program locations where manual abstraction refinement was needed to avoid the generation of unnecessary synchronization. Fourth, our tool finds a deadlock-free lock placement for both a fine-grained and a coarse-grained objective function. Overall, our evaluation strongly supports the use of the implicit specification approach based on non-preemptive scheduling semantics as well as the use of the data-oblivious abstraction to achieve practical synthesis for real-world systems code. With the two objective functions we implemented, Liss produces an optimal lock placement w.r.t. the objective.

Contributions First, we propose a new specification-free approach to synchronization synthesis. Given a program written assuming a friendly, non-preemptive scheduler, we automatically generate a preemption-safe version of the program without introducing deadlocks. Second, we introduce a novel abstraction scheme and use it to reduce preemption-safety to language inclusion modulo an independence relation. Third, we present the first language inclusion-based synchronization synthesis procedure and tool for concurrent programs. Our synthesis procedure includes a new algorithm for a bounded version of our inherently undecidable language inclusion problem. Fourth, we synthesize an optimal lock placement w.r.t. an objective function. Finally, we evaluate our synthesis procedure on several examples. To the best of our knowledge, Liss is the first synthesis tool capable of handling realistic (albeit simplified) device driver code, while previous tools were evaluated on small fragments of driver code or on manually extracted synchronization skeletons.

2 Related work

This work is an extension of our work that appeared in CAV 2015 [4]. We included a proof for Theorem 3 that shows that language inclusion is undecidable for our particular construction of automata and independence relation. Further, we introduced a set of global mutex constraints that replaces the greedy approach of our previous work and enables optimal lock placement according to an objective function.

Synthesis of synchronization is an active research area [3, 5, 6, 8, 12, 15, 17, 23, 24]. Closest to our work is a recent paper by Bloem et al. [3], which uses implicit specifications for synchronization synthesis. While their specification is given by sequential behaviors, ours is given by non-preemptive behaviors. This makes our approach applicable to scenarios where threads need to communicate explicitly. Further, correctness in Bloem et al. [3] is determined by comparing values at the end of the execution. In contrast, we compare sequences of events, which serves as a more suitable specification for infinitely-looping reactive systems. Further, Khoshnood et al. developed ConcBugAssist [18], similar to our earlier paper [15], that employs a greedy loop to fix assertion violations in concurrent programs.

Our previous work [5, 6, 15] develops the trace-based synthesis algorithm. The input is a program with assertions in the code, which represent an explicit correctness specification. The algorithm proceeds in a loop where in each iteration a faulty trace is obtained using an external model checker. A trace is faulty if it violates the specification. The trace is subsequently generalized to a partial order [5, 6] or a formula over happens-before relations [15], both representing a set of faulty traces. A formula over happens-before relations is basically a disjunction of partial orders. In our earlier work [5, 6] the partial order is used to synthesize atomic sections and inner-thread reorderings of independent statements. In our later work [15] the happens-before formula is used to obtain locks, wait-signal statements, and barriers. The quality of the synthesized code heavily depends on how well the generalization step works. Intuitively, the more faulty traces are removed in one synthesis step, the more general the solution is and the closer it is to the solution a human would have implemented.

The drawback of assertions as a specification is that it is hard to determine if a given set of assertions represents a complete specification. The current work does not rely on an external model-checker or an explicit specification. Here we are solving language inclusion, a computationally harder problem than reachability. However, due to our abstraction, our tool performs significantly better than tools from our previous work [5, 6], which are based on a mature model checker (CBMC [10]). Our abstraction is reminiscent of previously used abstractions that track reads and writes to individual locations (e.g., [1, 25]). However, our abstraction is novel as it additionally tracks some control-flow information (specifically, the branches taken), giving us higher precision with almost negligible computational cost. For the trace generalization and synthesis we use the technique from our previous work [15] to infer locks. Due to our choice of specification, no other synchronization primitives are needed.

In Vechev et al. [24] the authors rely on assertions for synchronization synthesis and include iterative abstraction refinement in their framework. This is an interesting extension to pursue for our abstraction. In other related work, CFix [17] can detect and fix concurrency bugs by identifying simple bug patterns in the code.

The concepts of linearizability and serializability are very similar to our implicit specification. Linearizability [16] describes the illusion that every method of an object takes effect instantaneously at some point between the method call and return. A set of transactions is serializable [13, 20] if they produce the same result, whether scheduled in parallel or in sequential order.

There has been a body of work on using a non-preemptive (cooperative) scheduler as an implicit specification. The notion of cooperability was introduced by Yi and Flanagan [26]. They require the user to annotate the program with yield statements to indicate thread interference. Their system then verifies that the yield specification is complete, meaning that every trace is cooperable. A preemptive trace is cooperable if it is equivalent to a trace under the cooperative scheduler.

3 Illustrative example

Figure 2 contains our running example, a part of a device driver. A driver interfaces the operating system with the hardware device (as illustrated in Fig. 1) and may be used by different threads of the operating system in parallel. An operating system thread wishing to use the device must first call the open_dev procedure and finally the close_dev procedure to indicate it no longer needs the device. The driver keeps track of the number of threads that interact with the device. The first thread to call open_dev will cause the driver to power up the device; the last thread to call close_dev will cause the driver to power down the device. The interactions between the driver and the device are represented as procedure calls in lines \(\ell _2\) and \(\ell _8\). From the device’s perspective, the power-on and power-off signals alternate. In general, we must assume that it is not safe to send the power-on signal twice in a row to the device. If executed with the non-preemptive scheduler, the code in Fig. 2 will produce a sequence of a power-on signal followed by a power-off signal followed by a power-on signal and so on.

Consider the case where the procedure open_dev is called in parallel by two operating system threads that want to initiate usage of the device. Without additional synchronization, there could be two calls to power_up in a row when executing under a preemptive scheduler. Consider two threads (\(\mathtt {T}1\) and \(\mathtt {T}2\)) running the open_dev procedure. The corresponding trace is \(\mathtt {T}1.\ell _1;\ \mathtt {T}2.\ell _1;\ \mathtt {T}1.\ell _2;\ \mathtt {T}2.\ell _2;\ \mathtt {T}2.\ell _3;\ \mathtt {T}2.\ell _4;\ \mathtt {T}1.\ell _3;\ \mathtt {T}1.\ell _4\). This sequence is not observationally equivalent to any sequence that can be produced when executing with a non-preemptive scheduler.
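The problem can be reproduced by exhaustively exploring all schedules of two threads. Fig. 2 is not reproduced in this text, so the four-location body of open_dev used below (\(\ell _1\): test whether open is 0, \(\ell _2\): power_up, \(\ell _3\): increment open, \(\ell _4\): yield) is an assumption reconstructed from the traces discussed here. The simulator itself is a hypothetical sketch, not part of our tool.

```python
def open_dev_step(pc, shared):
    """Execute location pc; return (next_pc, observable, yielded)."""
    if pc == 1:                      # l1: if (open == 0)
        return (2 if shared["open"] == 0 else 3), None, False
    if pc == 2:                      # l2: power_up()
        return 3, "power_up", False
    if pc == 3:                      # l3: open := open + 1
        shared["open"] += 1
        return 4, None, False
    if pc == 4:                      # l4: yield, end of the procedure
        return None, None, True
    raise ValueError(pc)


def observations(preemptive, n_threads=2):
    """All observable call sequences of n_threads threads running open_dev."""
    results = set()

    def explore(open_count, pcs, running, trace):
        if all(pc is None for pc in pcs):
            results.add(trace)
            return
        for tid in range(n_threads):
            if pcs[tid] is None:
                continue
            # Non-preemptive: the running thread keeps the processor
            # until it yields or finishes.
            if not preemptive and running is not None and running != tid:
                continue
            shared = {"open": open_count}
            next_pc, obs, yielded = open_dev_step(pcs[tid], shared)
            new_pcs = pcs[:tid] + (next_pc,) + pcs[tid + 1:]
            new_trace = trace + ((obs,) if obs else ())
            still_running = None if (yielded or next_pc is None) else tid
            explore(shared["open"], new_pcs, still_running, new_trace)

    explore(0, (1,) * n_threads, None, ())
    return results


# Observation sequences that only the preemptive scheduler can produce.
print(observations(preemptive=True) - observations(preemptive=False))
```

The only sequence reported is the one with two consecutive power_up calls, which is exactly the violation of preemption-safety described above.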

Figure 3 contains the abstracted versions of the two procedures, open_dev_abs and close_dev_abs. For instance, the instruction \(\mathtt {open} := \mathtt {open} + 1\) is abstracted to the two instructions labeled \(\ell _{3a}\) and \(\ell _{3b}\). The calls to the device (power_up and power_down) are abstracted as writes to a hypothetical \(\mathtt {dev}\) variable. This expresses the fact that interactions with the device are never independent. The abstraction is coarse, but still captures the problem. Consider two threads (\(\mathtt {T}1\) and \(\mathtt {T}2\)) running the open_dev_abs procedure. The following trace is possible under a preemptive scheduler, but not under a non-preemptive scheduler: \(\mathtt {T}1.\ell _{1a};\ \mathtt {T}1.\ell _{1b};\ \mathtt {T}2.\ell _{1a};\ \mathtt {T}2.\ell _{1b};\ \mathtt {T}1.\ell _2;\ \mathtt {T}2.\ell _2;\ \mathtt {T}2.\ell _{3a};\ \mathtt {T}2.\ell _{3b};\ \mathtt {T}2.\ell _4;\ \mathtt {T}1.\ell _{3a};\ \mathtt {T}1.\ell _{3b};\ \mathtt {T}1.\ell _4\). Moreover, the trace cannot be transformed by swapping independent events into any trace possible under a non-preemptive scheduler. This is because instructions \(\ell _{3b}:\mathsf {write}(\mathtt {open})\) and \(\ell _{1a}:\mathsf {read}(\mathtt {open})\) are not independent. Further, \(\ell _2:\mathsf {write}(\mathtt {dev})\) is not independent of itself. Hence, the abstract trace exhibits the problem of two successive calls to power_up when executing with a preemptive scheduler. Our synthesis procedure finds this problem, and stores it as a mutex constraint: \(\mathrm {mtx}([\ell _{1a}{:}\ell _{3b}],[\ell _2{:}\ell _{3b}])\). Intuitively, this constraint expresses the fact that if one thread is executing any instruction between \(\ell _{1a}\) and \(\ell _{3b}\), no other thread may execute \(\ell _2\) or \(\ell _{3b}\).

While this constraint ensures two parallel calls to open_dev behave correctly, two parallel calls to close_dev may result in the device receiving two power_down signals. This is represented by the concrete trace \(\mathtt {T}1.\ell _5;\ \mathtt {T}1.\ell _6;\ \mathtt {T}2.\ell _5;\ \mathtt {T}2.\ell _6;\ \mathtt {T}2.\ell _7;\ \mathtt {T}2.\ell _8;\ \mathtt {T}2.\ell _9;\ \mathtt {T}1.\ell _7;\ \mathtt {T}1.\ell _8;\ \mathtt {T}1.\ell _9\). The corresponding abstract trace is \(\mathtt {T}1.\ell _{5a};\ \mathtt {T}1.\ell _{5b};\ \mathtt {T}1.\ell _{6a};\ \mathtt {T}1.\ell _{6b};\ \mathtt {T}2.\ell _{5a};\ \mathtt {T}2.\ell _{5b};\ \mathtt {T}2.\ell _{6a};\ \mathtt {T}2.\ell _{6b};\ \mathtt {T}2.\ell _{7a};\ \mathtt {T}2.\ell _{7b};\ \mathtt {T}2.\ell _8;\ \mathtt {T}2.\ell _9;\ \mathtt {T}1.\ell _{7a};\ \mathtt {T}1.\ell _{7b};\ \mathtt {T}1.\ell _8;\ \mathtt {T}1.\ell _9\). This trace is not possible under a non-preemptive scheduler and cannot be transformed to a trace possible under a non-preemptive scheduler. This results in a second mutex constraint \(\mathrm {mtx}([\ell _{5a}{:}\ell _{8}],[\ell _{6b}{:}\ell _{8}])\). With both mutex constraints the program is correct. Our lock placement procedure then encodes these constraints in SMT and the models of the SMT formula are all the correct lock placements. In Fig. 4 we show open_dev and close_dev with the inserted locks.
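The effect of such a lock can be checked on a small model. Fig. 4 is not reproduced in this text, so the placement below (a single lock acquired before \(\ell _1\) and released after \(\ell _3\) in open_dev) is an assumption that is consistent with the first mutex constraint, not necessarily the exact output of the tool. The location names and the explorer are hypothetical.

```python
def step(pc, open_count, owner, tid, with_lock):
    """Run one location; return (next_pc, open_count, owner, observable),
    or None if the thread is blocked on the lock."""
    if pc == "lock":
        if owner is not None:
            return None                      # lock is held by another thread
        return "l1", open_count, tid, None
    if pc == "l1":                           # if (open == 0)
        return ("l2" if open_count == 0 else "l3"), open_count, owner, None
    if pc == "l2":                           # power_up()
        return "l3", open_count, owner, "power_up"
    if pc == "l3":                           # open := open + 1
        return ("unlock" if with_lock else "l4"), open_count + 1, owner, None
    if pc == "unlock":
        return "l4", open_count, None, None
    if pc == "l4":                           # yield, end of the procedure
        return "done", open_count, owner, None
    raise ValueError(pc)


def preemptive_observations(with_lock, n_threads=2):
    """Observable call sequences over all preemptive schedules of open_dev."""
    results = set()

    def explore(pcs, open_count, owner, trace):
        if all(pc == "done" for pc in pcs):
            results.add(trace)
            return
        for tid, pc in enumerate(pcs):
            if pc == "done":
                continue
            outcome = step(pc, open_count, owner, tid, with_lock)
            if outcome is None:              # blocked: try another thread
                continue
            next_pc, new_open, new_owner, obs = outcome
            new_pcs = pcs[:tid] + (next_pc,) + pcs[tid + 1:]
            explore(new_pcs, new_open, new_owner,
                    trace + ((obs,) if obs else ()))

    start = "lock" if with_lock else "l1"
    explore((start,) * n_threads, 0, None, ())
    return results


print(preemptive_observations(with_lock=False))
print(preemptive_observations(with_lock=True))
```

With the lock in place, the sequence containing two consecutive power_up calls no longer occurs under preemptive scheduling.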

4 Formal framework and problem statement

We present the syntax and semantics of a concrete concurrent while language \({\mathscr {W}}\). For our solution strategy to be efficient we require an abstraction and we also introduce the syntax and semantics of the abstract concurrent while language \({{\mathscr {W}}}_{\textit{abs}}\). While \({\mathscr {W}}\) (and our tool) permits non-recursive function call and return statements, we skip these constructs in the formalization below. We conclude the section by formalizing our notion of correctness for concrete concurrent programs.

4.1 Concrete concurrent programs

In our work, we assume a read or a write to a single shared variable executes atomically and further assume a sequentially consistent memory model.

4.1.1 Syntax of \({\mathscr {W}}\) (Fig. 5)

A concurrent program is a finite collection of threads \(\langle \mathtt {T}1, \ldots , \mathtt {T}n \rangle \) where each thread is a statement written in the syntax of \({\mathscr {W}}\). Variables in \({\mathscr {W}}\) can be categorized into

- shared variables \( ShVar _i\),
- thread-local variables \( LoVar _i\),
- lock variables \( LkVar _i\),
- condition variables \( CondVar _i\) for wait-signal statements, and
- guard variables \( GrdVar _i\) for assumptions.

The \( LkVar _i, CondVar _i\) and \( GrdVar _i\) variables are also shared between all threads. All variables range over integers with the exception of guard variables that range over Booleans (\(\mathtt {true},\mathtt {false}\)). Each statement is labeled with a unique location identifier \( \ell \); we denote by \(\mathsf {stmt}({\ell })\) the statement labeled by \(\ell \).

The language \({\mathscr {W}}\) includes standard sequential constructs, such as assignments, loops, conditionals, and \(\mathsf {goto}\) statements. Additional statements control the interaction between threads, such as lock, wait-notify, and \(\mathsf {yield}\) statements. In \({\mathscr {W}}\), we only permit expressions that read from at most one shared variable and assignments that either read from or write to exactly one shared variable. The language also includes \(\mathsf {assume}\), \(\mathsf {assume\_not}\) statements that operate on guard variables and become relevant later for our abstraction. The \(\mathsf {yield}\) statement is in a sense an annotation as it has no effect on the actual program running under a preemptive scheduler. We still present it here because it has a semantic meaning under the non-preemptive scheduler.

Language \({\mathscr {W}}\) has two statements that allow communication with an external system: \(\mathsf {input}( ch )\) reads from and \(\mathsf {output}( ch , ShExp )\) writes to a communication channel \( ch \). The channel is an interface between the program and an external system. The external system cannot observe the internal state of the program and only observes the information flow on the channel. In practice, we use the channels to model device registers. A device register is a special memory address; reads from and writes to it are visible to the device. This is used to exchange information with a device. In our presentation, we assume all channels communicate with the same external system.

4.1.2 Semantics of \({\mathscr {W}}\)

We first define the semantics of a single thread in \({\mathscr {W}}\), and then extend the definition to concurrent non-preemptive and preemptive semantics.

4.1.2.1 Single-thread semantics (Fig. 6)

Let us fix a thread identifier \( tid \). We use \( tid \) interchangeably with the program it represents. A state of a single thread is given by \(\langle \mathscr {V}, \ell \rangle \) where \(\mathscr {V}\) is a valuation of all program variables, and \(\ell \) is a location identifier, indicating the statement in \( tid \) to be executed next. A thread is guaranteed not to read or write thread-local variables of other threads.

We define the flow graph \({\mathscr {G}}_{ tid }\) for thread \( tid \) in a manner similar to the control-flow graph of \( tid \). Every node of \({\mathscr {G}}_{ tid }\) represents a single statement (basic blocks are not merged) and the node is labeled with the location \(\ell \) of the statement. The flow graph \({\mathscr {G}}_{ tid }\) has a unique entry node and a unique exit node. These two may coincide if the thread has no statements. The entry node is the first labeled statement in \( tid \); we denote its location identifier by \(\mathsf{first}_{ tid }\). The exit node is a special node corresponding to a hypothetical statement \(\mathsf{last}_{ tid }:\, \mathsf {skip}\) placed at the end of \( tid \).

We define successors of locations of \( tid \) using \({\mathscr {G}}_{ tid }\). The location \(\mathsf{last}_{ tid }\) has no successors. We define \(\mathsf{succ}(\ell ) = \ell '\) if node \(\ell : stmt \) in \({\mathscr {G}}_{ tid }\) has exactly one outgoing edge to node \(\ell ': stmt '\). Nodes representing conditionals and loops have two outgoing edges. We define \(\mathsf{succ}_1(\ell ) = \ell _1\) and \(\mathsf{succ}_2(\ell ) = \ell _2\) if node \(\ell : stmt \) in \({\mathscr {G}}_{ tid }\) has exactly two outgoing edges to nodes \(\ell _1: stmt _1\) and \(\ell _2: stmt _2\). Here \(\mathsf{succ}_1\) represents the \(\mathsf {then}\) or the \(\mathsf {loop}\) branch, whereas \(\mathsf{succ}_2\) represents the \(\mathsf {else}\) or the \(\mathsf {loopexit}\) branch.
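The successor functions can be sketched as a small data structure. The class name `FlowGraph`, the dictionary representation and the example graph are assumptions made for illustration. The example graph follows the shape of open_dev as described in the illustrative example, with a conditional at \(\ell _1\).

```python
class FlowGraph:
    """Flow graph of one thread: one node per statement, keyed by location."""

    def __init__(self, first, edges):
        self.first = first    # location of the entry node
        self.edges = edges    # location -> tuple of successor locations

    def succ(self, loc):
        """Successor of a node with exactly one outgoing edge."""
        (target,) = self.edges[loc]      # raises if there is not exactly one
        return target

    def succ1(self, loc):
        """The then (or loop) branch of a node with two outgoing edges."""
        then_branch, _ = self.edges[loc]
        return then_branch

    def succ2(self, loc):
        """The else (or loopexit) branch of a node with two outgoing edges."""
        _, else_branch = self.edges[loc]
        return else_branch


graph = FlowGraph(
    first="l1",
    edges={
        "l1": ("l2", "l3"),   # conditional: then branch, else branch
        "l2": ("l3",),
        "l3": ("l4",),
        "l4": ("last",),
        "last": (),           # the exit node has no successors
    },
)
print(graph.succ1("l1"), graph.succ2("l1"), graph.succ("l2"))
```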

We can now define the single-thread operational semantics. A single execution step \(\langle \mathscr {V}, \ell \rangle \xrightarrow {\alpha } \langle \mathscr {V}', \ell ' \rangle \) changes the program state from \(\langle \mathscr {V}, \ell \rangle \) to \(\langle \mathscr {V}', \ell '\rangle \), while optionally outputting an observable symbol \(\alpha \). The absence of a symbol is denoted using \(\epsilon \). In the following, \(e\) represents an expression and \(e[v / \mathscr {V}[v]]\) evaluates an expression by replacing all variables v with their values in \(\mathscr {V}\). We use \(\mathscr {V}[v:=k]\) to denote that variable v is set to k and all other variables in \(\mathscr {V}\) remain unchanged.

In Fig. 6, we present the rules for single execution steps. Each step is atomic: no interference can occur while the expressions in the premise are being evaluated. The only rules with an observable output are:

1. Havoc: Statement \(\ell : ShVar :=\mathsf {havoc}\) assigns shared variable \( ShVar \) a non-deterministic value (say k) and outputs the observable \(( tid , \mathsf {havoc}, k, ShVar )\).

2. Input, Output: \(\ell : ShVar :=\mathsf {input}( ch )\) and \(\ell : \mathsf {output}( ch , ShExp )\) read and write values to the channel \( ch \), and output \(( tid , \mathsf {in}, k, ch )\) and \(( tid , \mathsf {out}, k, ch )\), where k is the value read or written, respectively.

Intuitively, the observables record the sequence of non-deterministic guesses, as well as the input/output interaction with the tagged channels. The semantics of the synchronization statements shown in Fig. 6 is standard. Locks are not counting (i.e., not reentrant), and double locking or unlocking is not allowed. There are no rules for \(\mathsf {goto}\) and the sequence statement because they are already taken care of by the flow graph.
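The observable-producing steps can be sketched as a single step function. Fig. 6 is not reproduced in this text, so this is a minimal sketch based only on the two rules described above. The tuple encoding of statements, the `choose` callback that supplies non-deterministic values, and the use of `None` for \(\epsilon \) are assumptions made for illustration.

```python
def step(tid, stmt, valuation, choose):
    """Execute one statement; return (new_valuation, observable).
    The observable is None when the step emits no symbol (epsilon)."""
    kind = stmt[0]
    if kind == "havoc":                  # ("havoc", shared_var)
        _, var = stmt
        k = choose()                     # non-deterministic value
        return {**valuation, var: k}, (tid, "havoc", k, var)
    if kind == "input":                  # ("input", shared_var, channel)
        _, var, ch = stmt
        k = choose()                     # value supplied by the environment
        return {**valuation, var: k}, (tid, "in", k, ch)
    if kind == "output":                 # ("output", channel, expression)
        _, ch, expr = stmt
        k = expr(valuation)              # value written to the channel
        return valuation, (tid, "out", k, ch)
    if kind == "assign":                 # ("assign", var, expression)
        _, var, expr = stmt
        return {**valuation, var: expr(valuation)}, None
    raise ValueError(kind)


valuation = {"x": 0}
valuation, obs1 = step("T1", ("havoc", "x"), valuation, choose=lambda: 7)
valuation, obs2 = step("T1", ("assign", "x", lambda v: v["x"] + 1), valuation, choose=lambda: 0)
valuation, obs3 = step("T1", ("output", "ch", lambda v: v["x"]), valuation, choose=lambda: 0)
print(obs1, obs2, obs3)
```

Only the havoc and the output step contribute to the observation sequence; the assignment is silent.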

4.1.3 Concurrent semantics

A state of a concurrent program is given by \(\langle \mathscr {V}, \textit{ctid}, (\ell _1, \ldots , \ell _n) \rangle \) where \(\mathscr {V}\) is a valuation of all program variables, \(\textit{ctid}\) is the thread identifier of the currently executing thread and \(\ell _1, \ldots , \ell _n\) are the locations of the statements to be executed next in threads \(\mathtt {T}_1\) to \(\mathtt {T}_n\), respectively. There are two additional states: \(\langle \mathtt {terminated}\rangle \) indicates the program has finished and \(\langle \mathtt {failed}\rangle \) indicates an assumption failed. Initially, all integer program variables and \(\textit{ctid}\) equal 0, all guard variables equal \(\mathtt {false}\) and for each \(i \in [1,n]: \ell _i = \mathtt {first}_i\). We introduce a non-preemptive and a preemptive semantics. The former is used as a specification of allowed executions, whereas the latter models concurrent sequentially consistent executions of the program.

4.1.3.1 Non-preemptive semantics (Fig. 7) The non-preemptive semantics ensures that a single thread from the program keeps executing using the single-thread semantics (Rule Seq) until one of the following occurs: (a) the thread finishes execution (Rule Thread_end) or (b) it encounters a \(\mathsf {yield}\), \(\mathsf {lock}\), \(\mathsf {wait}\) or \(\mathsf {wait\_not}\) statement (Rule Nswitch). In these cases, a context-switch is possible; however, the new thread must not be blocked. We consider a thread blocked if its current instruction is to acquire an unavailable lock or to wait for a condition that is not signaled, or if the thread has reached the \(\mathsf{last}\) location. Note the difference between \(\mathsf {wait}\)/\(\mathsf {wait\_not}\) and \(\mathsf {assume}\)/\(\mathsf {assume\_not}\). The former allow for a context-switch while the latter transition to the \(\langle \mathtt {failed}\rangle \) state if the assumption is not fulfilled (Rule Assume/Assume_not). A special rule exists for termination (Rule Terminate), which requires that all threads have finished execution and that all locks are unlocked.

4.1.3.2 Preemptive semantics (Figs. 7, 8) The preemptive semantics of a program is obtained from the non-preemptive semantics by relaxing the condition on context-switches, and allowing context-switches at all program points. In particular, the preemptive semantics consists of the rules of the non-preemptive semantics and the single rule Pswitch in Fig. 8.
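The difference between the two schedulers can be illustrated with a small enumeration of observable behaviors. The following is a minimal sketch and not the semantics of Figs. 7 and 8: the statement encoding, the function `observable_behaviors`, and the toy program are assumptions made for illustration. The toy program contains no \(\mathsf {yield}\), \(\mathsf {lock}\) or \(\mathsf {wait}\) statements, so under the non-preemptive scheduler a context-switch happens only when a thread finishes.

```python
# Hypothetical toy program: T1 assigns x and then outputs it, T2 overwrites x.
T1 = [("assign", "x", 1), ("output", "ch", "x")]
T2 = [("assign", "x", 2)]


def observable_behaviors(threads, preemptive):
    """Enumerate the observation sequences of all terminating executions."""
    behaviors = set()

    def explore(pcs, store, trace, current):
        if all(pc == len(prog) for pc, prog in zip(pcs, threads)):
            behaviors.add(tuple(trace))
            return
        for tid, prog in enumerate(threads):
            if pcs[tid] == len(prog):
                continue  # thread tid has finished
            running = current is not None and pcs[current] < len(threads[current])
            # Non-preemptive: no switch while the current thread can still run.
            if not preemptive and running and tid != current:
                continue
            kind, target, source = prog[pcs[tid]]
            new_store, new_trace = dict(store), list(trace)
            if kind == "assign":
                new_store[target] = source
            else:  # output(target, source) emits the observable (tid, out, k, ch)
                new_trace.append((tid, "out", new_store[source], target))
            new_pcs = pcs[:tid] + (pcs[tid] + 1,) + pcs[tid + 1:]
            explore(new_pcs, new_store, new_trace, tid)

    explore((0,) * len(threads), {"x": 0}, [], None)
    return behaviors


np_behaviors = observable_behaviors([T1, T2], preemptive=False)
p_behaviors = observable_behaviors([T1, T2], preemptive=True)
```

In this toy program the preemptive scheduler can interleave the write of T2 between the two statements of T1, which yields an output value that no non-preemptive execution produces.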

4.2 Abstract concurrent programs

The state of the concrete semantics contains unbounded integer variables, which may result in an infinite state space. We therefore introduce a simple, data-oblivious abstraction \({{\mathscr {W}}}_{\textit{abs}}\) for concurrent programs written in \({\mathscr {W}}\) communicating with an external system. The abstraction tracks types of accesses (read or write) to each memory location while abstracting away their values. Inputs/outputs to a channel are modeled as writes to a special memory location (\(\mathtt{dev}\)). Even inputs are modeled as writes because in our applications we cannot assume that reads from the external interface are free of side-effects in the component on the other side of the interface. Havocs become ordinary writes to the variable they are assigned to. Every branch is taken non-deterministically and tracked. Given \(\mathscr {C}\) written in \({\mathscr {W}}\), we denote by \(\mathscr {C}_{\textit{abs}}\) the corresponding abstract program written in \({{\mathscr {W}}}_{\textit{abs}}\).

4.2.1 Abstract syntax (Fig. 9)

In the figure, \( var \) denotes all shared program variables and the \(\mathtt{dev}\) variable. The syntax of all synchronization primitives and the assumptions over guard variables remains unchanged. The purpose of the guard variables is to improve the precision of our otherwise coarse abstraction. Currently, they are inferred manually, but can presumably be inferred automatically using an iterative abstraction-refinement loop. In our current benchmarks, guard variables needed to be introduced in only three scenarios.

4.2.2 Abstraction function (Fig. 10)

A thread in \({\mathscr {W}}\) can be translated to \({{\mathscr {W}}}_{\textit{abs}}\) using the abstraction function shown in Fig. 10. The abstraction replaces all global variable accesses with \(\mathsf {read}( var )\) and \(\mathsf {write}( var )\) and replaces branching conditions with nondeterminism (\(*\)). All synchronization primitives remain unaffected by the abstraction. The abstraction may result in duplicate labels \(\ell \), which are replaced by fresh labels. \(\mathsf {goto}\) statements are reordered accordingly. Our abstraction records branching choices (branch tagging). If one were to remove branch tagging, the abstraction would be unsound. The justification and intuition for this can be found further below in Theorem 1. For example, in our running example in Fig. 2, the abstraction of \(\ell _1\) results in two abstract labels \(\ell _{1a}\) and \(\ell _{1b}\) in Fig. 3.

4.2.3 Abstract semantics

As before, we first define the semantics of \({{\mathscr {W}}}_{\textit{abs}}\) for a single-thread.

4.2.3.1 Single-thread semantics (Fig. 11)

The abstract state of a single thread \( tid \) is given simply by \(\langle \mathscr {V}_o,\ell \rangle \) where \(\mathscr {V}_o\) is a valuation of all lock, condition and guard variables and \(\ell \) is the location of the statement in \( tid \) to be executed next. We define the flow graph and successors for locations in the abstract program \( tid \) in the same way as before. An abstract observable symbol is of the form \(( tid , \theta ,\ell )\), where \(\theta \in \{(\mathsf {read}, ShVar ),(\mathsf {write}, ShVar ),\mathsf {then},\mathsf {else},\mathsf {loop},\mathsf {exitloop}\}\). The symbol \(\theta \) records the type of access to variables along with the variable name \(((\mathsf {read}, v),(\mathsf {write}, v))\) and records non-deterministic branching choices \(\{\mathsf {then},\mathsf {else},\mathsf {loop},\mathsf {exitloop}\}\). Fig. 11 presents the rules for statements unique to \({{\mathscr {W}}}_{\textit{abs}}\); the rules for statements common to \({{\mathscr {W}}}_{\textit{abs}}\) and \({\mathscr {W}}\) are the same.

4.2.3.2 Concurrent semantics

A state of an abstract concurrent program is either \(\langle \mathtt {terminated}\rangle , \langle \mathtt {failed}\rangle \), or is given by \(\langle \mathscr {V}_o, \textit{ctid}, (\ell _1, \ldots , \ell _n) \rangle \) where \(\mathscr {V}_o\) is a valuation of all lock, condition and guard variables, \(\textit{ctid}\) is the current thread identifier and \(\ell _1, \ldots , \ell _n\) are the locations of the statements to be executed next in threads \(\mathtt {T}_1\) to \(\mathtt {T}_n\), respectively. The non-preemptive and preemptive semantics of a concurrent program written in \({{\mathscr {W}}}_{\textit{abs}}\) are defined in the same way as that of a concurrent program written in \({\mathscr {W}}\).

4.3 Program correctness and problem statement

Let \(\mathbb {W}, \mathbb {W}_{\textit{abs}}\) denote the set of all concurrent programs in \({\mathscr {W}}, {{\mathscr {W}}}_{\textit{abs}}\), respectively.

4.3.1 Executions

A non-preemptive/preemptive execution of a concurrent program \(\mathscr {C}\) in \(\mathbb {W}\) is an alternating sequence of program states and (possibly empty) observable symbols, \(S_0 \alpha _1 S_1 \ldots \alpha _k S_k\), such that (a) \(S_0\) is the initial state of \(\mathscr {C}\), (b) \(\forall j \in [0,k-1]\), according to the non-preemptive/preemptive semantics of \({\mathscr {W}}\), we have \(S_j \xrightarrow {\alpha _{j+1}}{S_{j+1}}\), and (c) \(S_k\) is the state \(\langle \mathtt {terminated}\rangle \). A non-preemptive/preemptive execution of a concurrent program \(\mathscr {C}_{\textit{abs}}\) in \(\mathbb {W}_{\textit{abs}}\) is defined in the same way, replacing the corresponding semantics of \({\mathscr {W}}\) with that of \({{\mathscr {W}}}_{\textit{abs}}\).

4.3.2 Observable behaviors

Let \(\pi \) be an execution of program \(\mathscr {C}\) in \(\mathbb {W}\), then we denote with \(\omega = \mathsf {obs}(\pi )\) the sequence of non-empty observable symbols in \(\pi \). We use \([\![ \mathscr {C} ]\!]^{\textit{NP}}\), resp. \([\![ \mathscr {C} ]\!]^{P}\), to denote the non-preemptive, resp. preemptive, observable behavior of \(\mathscr {C}\), that is all sequences \(\mathsf {obs}(\pi )\) of all executions \(\pi \) under the non-preemptive, resp. preemptive, scheduling. The non-preemptive/preemptive observable behavior of program \(\mathscr {C}_{\textit{abs}}\) in \(\mathbb {W}_{\textit{abs}}\), denoted \([\![ \mathscr {C}_{\textit{abs}} ]\!]^{\textit{NP}}\)/\([\![ \mathscr {C}_{\textit{abs}} ]\!]^{P}\), is defined similarly.

We specify correctness of concurrent programs in \(\mathbb {W}\) using two implicit criteria, presented below.

4.3.3 Preemption-safety

Observable behaviors \(\omega _1\) and \(\omega _2\) of a program \(\mathscr {C}\) in \(\mathbb {W}\) are equivalent if: (a) the subsequences of \(\omega _1\) and \(\omega _2\) containing only symbols of the form \(( tid , \mathsf {in}, k, t)\) and \(( tid , \mathsf {out}, k, t)\) are equal and (b) for each thread identifier \( tid \), the subsequences of \(\omega _1\) and \(\omega _2\) containing only symbols of the form \(( tid , \mathsf {havoc}, k, x)\) are equal. Intuitively, observable behaviors are equivalent if they have the same interaction with the interface, and the same non-deterministic choices in each thread. For sets \(\mathscr {O}_1\) and \(\mathscr {O}_2\) of observable behaviors, we write \(\mathscr {O}_1 \Subset \mathscr {O}_2\) to denote that each sequence in \(\mathscr {O}_1\) has an equivalent sequence in \(\mathscr {O}_2\).

Given concurrent programs \(\mathscr {C}\) and \(\mathscr {C}'\) in \(\mathbb {W}\) such that \(\mathscr {C}'\) is obtained by adding locks to \(\mathscr {C}\), \(\mathscr {C}'\) is preemption-safe w.r.t. \(\mathscr {C}\) if \([\![ \mathscr {C}' ]\!]^{P} \Subset [\![ \mathscr {C} ]\!]^{\textit{NP}}\).
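The equivalence of observable behaviors and the relation \(\Subset \) can be checked directly on finite sets of sequences. The following is a minimal sketch; the tuple encoding of observables as `(tid, kind, value, target)` and all function names are assumptions made for illustration.

```python
from collections import defaultdict

# An observable is encoded as (tid, kind, value, target) with kind in
# {"havoc", "in", "out"}; this encoding is an assumption of the sketch.


def io_projection(omega):
    """Condition (a): the subsequence of input/output symbols, over all threads."""
    return [s for s in omega if s[1] in ("in", "out")]


def havoc_projection(omega):
    """Condition (b): for each thread, the subsequence of its havoc symbols."""
    per_thread = defaultdict(list)
    for s in omega:
        if s[1] == "havoc":
            per_thread[s[0]].append(s)
    return dict(per_thread)


def equivalent(omega1, omega2):
    return (io_projection(omega1) == io_projection(omega2)
            and havoc_projection(omega1) == havoc_projection(omega2))


def subsumed(behaviors1, behaviors2):
    """Each sequence in behaviors1 has an equivalent sequence in behaviors2."""
    return all(any(equivalent(w1, w2) for w2 in behaviors2) for w1 in behaviors1)


w1 = [("T1", "havoc", 3, "x"), ("T2", "havoc", 5, "y"), ("T1", "out", 3, "ch")]
w2 = [("T2", "havoc", 5, "y"), ("T1", "havoc", 3, "x"), ("T1", "out", 3, "ch")]
w3 = [("T1", "havoc", 3, "x"), ("T2", "havoc", 5, "y"), ("T1", "out", 4, "ch")]
```

Here `w1` and `w2` differ only in the relative order of havocs from different threads and are therefore equivalent, whereas `w3` writes a different value to the channel.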

4.3.4 Deadlock-freedom

A state \(S\) of concurrent program \(\mathscr {C}\) in \(\mathbb {W}\) is a deadlock state under non-preemptive/preemptive semantics if

(a) The repeated application of the rules of the non-preemptive/preemptive semantics from the initial state \(S_0\) of \(\mathscr {C}\) can lead to \(S\),

(b) \(S \ne \langle \mathtt {terminated}\rangle \),

(c) \(S \ne \langle \mathtt {failed}\rangle \), and

(d) \(\lnot \exists S'\): \(\langle S \rangle \xrightarrow {\alpha } \langle S' \rangle \) according to the non-preemptive/preemptive semantics of \({\mathscr {W}}\).

Program \(\mathscr {C}\) in \(\mathbb {W}\) is deadlock-free under non-preemptive/preemptive semantics if no non-preemptive/preemptive execution of \(\mathscr {C}\) hits a deadlock state. In other words, every non-preemptive/preemptive execution of \(\mathscr {C}\) ends in state \(\langle \mathtt {terminated}\rangle \) or \(\langle \mathtt {failed}\rangle \). The \(\langle \mathtt {failed}\rangle \) state indicates an assumption did not hold, which we do not consider a deadlock. We say \(\mathscr {C}\) is deadlock-free if it is deadlock-free under both non-preemptive and preemptive semantics.
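Conditions (a) to (d) amount to a reachability search for stuck states. The following is a minimal sketch on a hypothetical two-thread program that acquires two locks in opposite order; the state encoding and the function names are assumptions, and the successor function models only lock and unlock statements with a context-switch allowed at every step.

```python
from collections import deque

TERMINATED, FAILED = "terminated", "failed"

# Hypothetical threads that acquire locks a and b in opposite order.
T1 = [("lock", "a"), ("lock", "b"), ("unlock", "b"), ("unlock", "a")]
T2 = [("lock", "b"), ("lock", "a"), ("unlock", "a"), ("unlock", "b")]
THREADS = (T1, T2)


def successors(state):
    """Successor states of (pcs, held), where held is a set of (lock, tid)."""
    pcs, held = state
    if all(pc == len(prog) for pc, prog in zip(pcs, THREADS)):
        return [TERMINATED] if not held else []
    result = []
    for tid, prog in enumerate(THREADS):
        pc = pcs[tid]
        if pc == len(prog):
            continue
        op, lock = prog[pc]
        if op == "lock":
            if any(name == lock for name, _ in held):
                continue  # blocked: the lock is unavailable
            new_held = held | {(lock, tid)}
        else:
            new_held = held - {(lock, tid)}
        result.append((pcs[:tid] + (pc + 1,) + pcs[tid + 1:], new_held))
    return result


def find_deadlocks(initial, successors):
    """Reachable states that are not terminated/failed and have no successor."""
    seen, queue, deadlocks = {initial}, deque([initial]), []
    while queue:
        state = queue.popleft()
        if state in (TERMINATED, FAILED):
            continue
        nxt = successors(state)
        if not nxt:
            deadlocks.append(state)
        for t in nxt:
            if t not in seen:
                seen.add(t)
                queue.append(t)
    return deadlocks


deadlocks = find_deadlocks(((0, 0), frozenset()), successors)
```

The only deadlock state found is the one in which each thread holds one lock and waits for the other.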

4.3.5 Problem statement

We are now ready to state our main problem, the optimal synchronization synthesis problem. We assume we are given a cost function f from a program \(\mathscr {C}'\) to the cost of the lock placement solution, formally \(f:\mathbb {W}\mapsto \mathbb {R}\). Then, given a concurrent program \(\mathscr {C}\) in \(\mathbb {W}\), the goal is to synthesize a new concurrent program \(\mathscr {C}'\) in \(\mathbb {W}\) such that:

(a) \(\mathscr {C}'\) is obtained by adding locks to \(\mathscr {C}\),

(b) \(\mathscr {C}'\) is preemption-safe w.r.t. \(\mathscr {C}\),

(c) \(\mathscr {C}'\) has no deadlocks not present in \(\mathscr {C}\), and,

(d) \(\mathscr {C}' = \underset{\mathscr {C}''\in \mathbb {W}\text { satisfying (a)-(c) above}}{\arg \,\min } \; f(\mathscr {C}'')\)
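Condition (d) can be read as a filtered minimization over candidate lock placements. The following is a minimal sketch over an explicitly enumerated candidate set, which is not how our synthesis procedure computes the optimum; the encoding of a placement as a set of `(thread, first, last)` lock regions, the two cost functions, and the toy safety predicate are all assumptions made for illustration.

```python
def optimal_placement(candidates, is_preemption_safe, adds_no_deadlock, cost):
    """Return a cheapest candidate satisfying (b) and (c), or None if none exists."""
    feasible = [c for c in candidates
                if is_preemption_safe(c) and adds_no_deadlock(c)]
    return min(feasible, key=cost) if feasible else None


def coarse_cost(placement):
    """Hypothetical coarse-grained objective: number of lock regions."""
    return len(placement)


def fine_cost(placement):
    """Hypothetical fine-grained objective: number of statements under a lock."""
    return sum(last - first + 1 for (_tid, first, last) in placement)


# Toy candidates: sets of (thread, first location, last location) regions.
c1 = frozenset({("T1", 1, 4)})
c2 = frozenset({("T1", 1, 5)})
c3 = frozenset({("T1", 1, 2), ("T1", 4, 4)})


def toy_safe(placement):
    """Assumed stand-in for the preemption-safety check: one region of T1
    must cover locations 1 through 4."""
    return any(t == "T1" and first <= 1 and last >= 4
               for (t, first, last) in placement)


best_fine = optimal_placement([c1, c2, c3], toy_safe, lambda p: True, fine_cost)
best_coarse = optimal_placement([c1, c2, c3], toy_safe, lambda p: True, coarse_cost)
```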

5 Solution overview

Our solution framework (Fig. 12) consists of the following main components. We briefly describe each component below and then present them in more detail in subsequent sections.

5.1 Reduction of preemption-safety to language inclusion

To ensure tractability of checking preemption-safety, we build the abstract program \(\mathscr {C}_{\textit{abs}}\) from \(\mathscr {C}\) using the abstraction function described in Sect. 4.2. Under abstraction, we model each thread as a nondeterministic finite automaton (NFA) over a finite alphabet consisting of abstract observable symbols. This enables us to construct NFAs \(\mathsf {NP}_{\textit{abs}}\) and \({\mathsf {P}}'_{\textit{abs}}\) accepting the languages \([\![ \mathscr {C}_{\textit{abs}} ]\!]^{\textit{NP}}\) and \([\![ \mathscr {C}_{\textit{abs}}' ]\!]^{P}\), respectively. We proceed to check if all words of \({\mathsf {P}}'_{\textit{abs}}\) are included in \(\mathsf {NP}_{\textit{abs}}\) modulo an independence relation I that respects the equivalence of observables. We describe the reduction of preemption-safety to language inclusion and our language inclusion check procedure in Sect. 6.

5.2 Inference of mutex constraints from generalized counterexamples

If \({\mathsf {P}}'_{\textit{abs}}\) and \(\mathsf {NP}_{\textit{abs}}\) do not satisfy language inclusion modulo I, then we obtain a counterexample \(\textit{cex}\). A counterexample is an observation sequence that is in \([\![ \mathscr {C}_{\textit{abs}}' ]\!]^{P}\) but has no equivalent sequence in \([\![ \mathscr {C}_{\textit{abs}} ]\!]^{\textit{NP}}\). We analyze \(\textit{cex}\) to infer constraints on \({\mathscr {L}}({\mathsf {P}}'_{\textit{abs}})\) for eliminating \(\textit{cex}\). We use \(\textit{nhood}(\textit{cex})\) to denote the set of all permutations of the symbols in \(\textit{cex}\) that are accepted by \({\mathsf {P}}'_{\textit{abs}}\). Our counterexample analysis examines the set \(\textit{nhood}(\textit{cex})\) to obtain an hbformula \(\phi \) (a Boolean combination of happens-before ordering constraints between events) representing all counterexamples in \(\textit{nhood}(\textit{cex})\). Thus \(\textit{cex}\) is generalized into a larger set of counterexamples represented as \(\phi \). From \(\phi \), we infer possible mutual exclusion (mutex) constraints on \({\mathscr {L}}({\mathsf {P}}'_{\textit{abs}})\) that can eliminate all counterexamples satisfying \(\phi \). We describe the procedure for finding constraints from \(\textit{cex}\) in Sect. 7.1.

5.3 Automaton modification for enforcing mutex constraints

Once we have the mutex constraints inferred from a generalized counterexample, we enforce them in \({\mathsf {P}}'_{\textit{abs}}\), effectively removing transitions from the automaton that violate the mutex constraint. This completes our loop and we repeat the language inclusion check of \({\mathsf {P}}'_{\textit{abs}}\) and \(\mathsf {NP}_{\textit{abs}}\). If another counterexample is found, our loop continues; if the language inclusion check succeeds, we proceed to the lock placement. This differs from the greedy approach employed in our previous work [4] that modifies \(\mathscr {C}_{\textit{abs}}'\) and then constructs a new automaton \({\mathsf {P}}'_{\textit{abs}}\) from \(\mathscr {C}_{\textit{abs}}'\) before restarting the language inclusion. The greedy approach inserts locks into \(\mathscr {C}_{\textit{abs}}'\) that are never removed in a future iteration. This can lead to inefficient lock placement. For example, a larger lock may be placed that completely surrounds an earlier placed lock.

5.4 Computation of an f-optimal lock placement

Once \({\mathsf {P}}'_{\textit{abs}}\) and \(\mathsf {NP}_{\textit{abs}}\) satisfy language inclusion modulo I, we formulate global constraints over lock placements for ensuring correctness. These global constraints include all mutex constraints inferred over all iterations and constraints for enforcing deadlock-freedom. Any model of the global constraints corresponds to a lock placement that ensures program correctness. We describe the formulation of these global constraints in Sect. 8.

Given a cost function f, we compute a lock placement that satisfies the global constraints and is optimal w.r.t. f. We then synthesize the final output \(\mathscr {C}'\) by inserting the computed lock placement in \(\mathscr {C}\). We present various objective functions and describe the computation of their respective optimal solutions in Sect. 9.

6 Checking preemption-safety

6.1 Reduction of preemption-safety to language inclusion

6.1.1 Soundness of the abstraction

Formally, two observable behaviors \(\omega _1=\alpha _0\ldots \alpha _k\) and \(\omega _2=\beta _0\ldots \beta _k\) of an abstract program \(\mathscr {C}_{\textit{abs}}\) in \(\mathbb {W}_{\textit{abs}}\) are equivalent if:

(A1) For each thread \( tid \), the subsequences of \(\alpha _0\ldots \alpha _k\) and \(\beta _0\ldots \beta _k\) containing only symbols of the form \(( tid ,a,\ell )\), for all a, are equal,

(A2) For each variable var, the subsequences of \(\alpha _0\ldots \alpha _k\) and \(\beta _0\ldots \beta _k\) containing only write symbols (of the form \(( tid , (\mathsf {write}, var), \ell )\)) are equal, and

(A3) For each variable var, the multisets of symbols of the form \(( tid , (\mathsf {read}, var), \ell )\) between any two write symbols, as well as before the first write symbol and after the last write symbol are identical.

Using this notion of equivalence, the notion of preemption-safety is extended to abstract programs: Given abstract concurrent programs \(\mathscr {C}_{abs}\) and \(\mathscr {C}'_{abs}\) in \(\mathbb {W}_{abs}\) such that \(\mathscr {C}'_{abs}\) is obtained by adding locks to \(\mathscr {C}_{abs}, \mathscr {C}'_{abs}\) is preemption-safe w.r.t. \(\mathscr {C}_{abs}\) if \([\![ \mathscr {C}'_{abs} ]\!]^{P} \Subset _{abs} [\![ \mathscr {C}_{abs} ]\!]^{\textit{NP}}\).
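Conditions (A1) to (A3) translate into three projections on observation sequences. The following is a minimal sketch; the encoding of an abstract observable as `(tid, theta, loc)`, with `theta` either a pair such as `("read", "x")` or a branch tag string, and all function names are assumptions made for illustration.

```python
from collections import Counter


def thread_projection(omega, tid):
    """(A1): the subsequence of symbols of one thread."""
    return [s for s in omega if s[0] == tid]


def write_sequence(omega, var):
    """(A2): the subsequence of writes to one variable."""
    return [s for s in omega if s[1] == ("write", var)]


def read_blocks(omega, var):
    """(A3): the multiset of reads of var in each segment delimited by writes to var."""
    blocks = [Counter()]
    for s in omega:
        if s[1] == ("write", var):
            blocks.append(Counter())
        elif s[1] == ("read", var):
            blocks[-1][s] += 1
    return blocks


def abs_equivalent(omega1, omega2):
    symbols = list(omega1) + list(omega2)
    tids = {s[0] for s in symbols}
    variables = {s[1][1] for s in symbols if isinstance(s[1], tuple)}
    return (all(thread_projection(omega1, t) == thread_projection(omega2, t) for t in tids)
            and all(write_sequence(omega1, v) == write_sequence(omega2, v) for v in variables)
            and all(read_blocks(omega1, v) == read_blocks(omega2, v) for v in variables))


u1 = [("T1", ("read", "x"), "l1"), ("T2", ("read", "x"), "l7"), ("T1", ("write", "x"), "l2")]
u2 = [("T2", ("read", "x"), "l7"), ("T1", ("read", "x"), "l1"), ("T1", ("write", "x"), "l2")]
u3 = [("T1", ("read", "x"), "l1"), ("T1", ("write", "x"), "l2"), ("T2", ("read", "x"), "l7")]
```

Swapping the two reads of `x` (`u1` versus `u2`) preserves equivalence, while moving the read of T2 past the write (`u3`) violates (A3).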

For the abstraction to be sound we require only that whenever preemption-safety does not hold for a program \(\mathscr {C}\), then there must be a trace in its abstraction \(\mathscr {C}_{\textit{abs}}\) feasible under preemptive, but not under non-preemptive semantics.

To illustrate this we use the program in Fig. 13, which is not preemption-safe. To see this consider the observation \((\mathtt {T1},\mathsf {out}, 10, \mathtt{ch})\) that cannot occur in the non-preemptive semantics because x is always 0 at \(\ell _4\). Note that \(\ell _3\) is unreachable because the variable y is initialized to 0 and never assigned. With the preemptive semantics the output can be observed if thread \(\mathtt {T}2\) interrupts thread \(\mathtt {T}1\) between lines \(\ell _1\) and \(\ell _4\). An example trace would be \(\ell _1;\ \ell _6;\ \ell _2;\ \ell _4;\ \ell _5\).

If we consider the abstract semantics, we notice that under the non-preemptive abstract semantics \(\ell _3\) is reachable because the abstraction makes the branching condition in \(\ell _2\) non-deterministic. However, since our abstraction is sound there must still be an observation sequence that is observable under the abstract preemptive semantics, but not under the abstract non-preemptive semantics. This observation sequence is \((\mathtt {T}1,(\mathsf {write}, \mathtt{x}), \ell _1),(\mathtt {T}2,(\mathsf {write}, \mathtt{x}), \ell _6),(\mathtt {T}1,(\mathsf {read}, \mathtt{y}), \ell _2),(\mathtt {T}1,\mathsf {else}, \ell _2),(\mathtt {T}1,(\mathsf {read}, \mathtt{x}), \ell _4),(\mathtt {T}1,\mathsf {then}, \ell _4),(\mathtt {T}1,(\mathsf {write}, \mathtt{dev}), \ell _5)\). The branch tagging records that the else branch is taken in \(\ell _2\). The non-preemptive semantics cannot produce this observation sequence because it must also take the \(\mathsf {else}\) branch in \(\ell _2\) and can therefore not reach the \(\mathsf {yield}\) statement and context-switch. As a side note, it is also not possible to transform this observation sequence into an equivalent one under the non-preemptive semantics because of the write to \(\mathtt x\) at \(\ell _6\) and the accesses to \(\mathtt x\) in \(\ell _1\) and \(\ell _4\).

This example illustrates why branch tagging is crucial to soundness of the abstraction. If we assume a hypothetical abstract semantics without branch tagging we would get the following preemptive observation sequence: \((\mathtt {T}1,(\mathsf {write}, \mathtt{x}), \ell _1),(\mathtt {T}2,(\mathsf {write}, \mathtt{x}), \ell _6),(\mathtt {T}1,(\mathsf {read}, \mathtt{y}), \ell _2),(\mathtt {T}1,(\mathsf {read}, \mathtt{x}), \ell _4),(\mathtt {T}1,(\mathsf {write}, \mathtt{dev}), \ell _5)\). This sequence would also be a valid observation sequence under the non-preemptive semantics, because it could take the \(\mathsf {then}\) branch in \(\ell _2\) and reach the \(\mathsf {yield}\) statement and context-switch.

Theorem 1

(soundness) Given concurrent program \(\mathscr {C}\) and a synthesized program \(\mathscr {C}'\) obtained by adding locks to \(\mathscr {C}, [\![ \mathscr {C}'_{abs} ]\!]^{P} \Subset _{abs} [\![ \mathscr {C}_{abs} ]\!]^{\textit{NP}}\implies [\![ \mathscr {C}' ]\!]^{P} \Subset [\![ \mathscr {C} ]\!]^{\textit{NP}}\).

Proof

It is easier to prove the contrapositive: \([\![ \mathscr {C}' ]\!]^{P} \not\Subset [\![ \mathscr {C} ]\!]^{\textit{NP}} \implies [\![ \mathscr {C}'_{abs} ]\!]^{P} \not\Subset _{abs} [\![ \mathscr {C}_{abs} ]\!]^{\textit{NP}}\).

\([\![ \mathscr {C}' ]\!]^{P} \not\Subset [\![ \mathscr {C} ]\!]^{\textit{NP}}\) means that there is an observation sequence \(\omega '\) of \([\![ \mathscr {C}' ]\!]^{P}\) with no equivalent observation sequence in \([\![ \mathscr {C} ]\!]^{\textit{NP}}\). We now show that the abstract sequence \(\omega '_{abs}\) in \([\![ \mathscr {C}'_{abs} ]\!]^{P}\) corresponding to the sequence \(\omega '\) has no equivalent sequence in \([\![ \mathscr {C}_{abs} ]\!]^{\textit{NP}}\).

Towards contradiction we assume there is such an equivalent sequence \(\omega _{abs}\) in \([\![ \mathscr {C}_{abs} ]\!]^{\textit{NP}}\). We show that if \(\omega _{abs}\) indeed existed it would correspond to a concrete sequence \(\omega \) that is equivalent to \(\omega '\), thereby contradicting our assumption.

By (A1) \(\omega _{abs}\) would have the same control flow as \(\omega '_{abs}\) because of the branch tagging. By (A2) and (A3) \(\omega _{abs}\) would have the same data-flow, meaning all reads from global variables are reading the values written by the same writes as in \(\omega '_{abs}\). Since all interactions with the environment are abstracted to \(\mathsf {write}(\mathtt {dev})\) the order of interactions must be the same between \(\omega _{abs}\) and \(\omega '_{abs}\). This means that, assuming all inputs and havocs return the same values, in the execution \(\omega \) corresponding to \(\omega _{abs}\) all variable valuations are identical to those in \(\omega '\). Therefore, \(\omega \) is feasible and its interaction with the environment is identical to that of \(\omega '\). Identical interaction with the environment is how equivalence between \(\omega \) and \(\omega '\) is defined. This concludes our proof. \(\square \)

6.1.2 Language inclusion modulo an independence relation

We define the problem of language inclusion modulo an independence relation. Let I be an irreflexive, symmetric binary relation over an alphabet \({\varSigma }\). We refer to I as the independence relation and to elements of I as independent symbol pairs. We define a symmetric binary relation \(\approx _I\) over words in \({\varSigma }^*\): for all words \(\sigma , \sigma ' \in {\varSigma }^*\) and \((\alpha , \beta ) \in I\), \((\sigma \cdot \alpha \beta \cdot \sigma ', \sigma \cdot \beta \alpha \cdot \sigma ') \in \, \approx _I\). Let \(\approx ^t_I\) denote the reflexive transitive closure of \(\approx _I\) (see Footnote 2). Given a language \(\mathcal{L}\) over \({\varSigma }\), the closure of \(\mathcal{L}\) w.r.t. I, denoted \(\mathrm {Clo}_I(\mathcal{L})\), is the set \(\{\sigma \in {\varSigma }^* {:}\ \exists \sigma ' \in \mathcal L \text { with } (\sigma ,\sigma ') \in \, \approx ^t_I\}\). Thus, \(\mathrm {Clo}_I(\mathcal{L})\) consists of all words that can be obtained from some word in \(\mathcal{L}\) by repeatedly commuting adjacent independent symbol pairs from I.

Definition 1

(Language inclusion modulo an independence relation) Given NFAs A, B over a common alphabet \({\varSigma }\) and an independence relation I over \({\varSigma }\), the language inclusion problem modulo I is: \({\mathscr {L}}(\text{ A }) \subseteq \mathrm {Clo}_I({\mathscr {L}}(\text{ B }))\)?
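For finite languages, the closure and the inclusion check of Definition 1 can be computed by brute force. The following is a minimal sketch that works on explicit finite sets of words rather than on NFAs, so it is not the inclusion procedure of Sect. 6.2; the function names and the example alphabet are assumptions made for illustration.

```python
from collections import deque


def closure_of_word(word, independent):
    """All words reachable from `word` by commuting adjacent independent symbols."""
    start = tuple(word)
    seen, queue = {start}, deque([start])
    while queue:
        w = queue.popleft()
        for i in range(len(w) - 1):
            if independent(w[i], w[i + 1]):
                swapped = w[:i] + (w[i + 1], w[i]) + w[i + 2:]
                if swapped not in seen:
                    seen.add(swapped)
                    queue.append(swapped)
    return seen


def included_modulo(lang_a, lang_b, independent):
    """Check that lang_a is contained in Clo_I(lang_b) for finite languages."""
    closure = set()
    for w in lang_b:
        closure |= closure_of_word(w, independent)
    return all(tuple(w) in closure for w in lang_a)


# Example: alphabet {a, b, c} where only a and b are independent.
I = {("a", "b"), ("b", "a")}


def independent(x, y):
    return (x, y) in I
```

With this relation, the word `bac` lies in the closure of `{abc}` because `a` and `b` commute, while `acb` does not because `b` and `c` are dependent.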

6.1.3 Data independence relation

We define the data independence relation \({I_D}\) over our observable symbols. Two symbols \(\alpha =( tid _\alpha ,a_\alpha ,\ell _\alpha )\) and \(\beta =( tid _\beta ,a_\beta ,\ell _\beta )\) are independent, \((\alpha ,\beta )\in {I_D}\), iff (I0) \( tid _\alpha \ne tid _\beta \) and one of the following holds:

(I1) \(a_\alpha \) or \(a_\beta \) is in \(\{\mathsf {then},\mathsf {else},\mathsf {loop},\mathsf {exitloop}\}\),

(I2) \(a_\alpha \) and \(a_\beta \) are both \((\mathsf {read}, var )\), or

(I3) \(a_\alpha \) is in \(\{(\mathsf {write}, var _\alpha ),\ (\mathsf {read}, var _\alpha )\}\) and \(a_\beta \) is in \(\{(\mathsf {write}, var _\beta ),\ (\mathsf {read}, var _\beta )\}\) and \( var _\alpha \ne var _\beta \)
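The relation \({I_D}\) can be written as a small predicate on pairs of symbols. The following is a minimal sketch; the tuple encoding `(tid, theta, loc)` and the spelling of the branch tags follow the abstract observable symbols of Sect. 4.2.3 and are otherwise assumptions made for illustration.

```python
BRANCH_TAGS = {"then", "else", "loop", "exitloop"}


def data_independent(alpha, beta):
    """Return True iff (alpha, beta) is in I_D."""
    tid_a, a, _loc_a = alpha
    tid_b, b, _loc_b = beta
    if tid_a == tid_b:                          # (I0) fails: same thread
        return False
    if a in BRANCH_TAGS or b in BRANCH_TAGS:    # (I1): a branch tag is involved
        return True
    (kind_a, var_a), (kind_b, var_b) = a, b
    if kind_a == "read" and kind_b == "read":   # (I2): two reads
        return True
    return var_a != var_b                       # (I3): different variables


read_x_t1 = ("T1", ("read", "x"), "l4")
read_x_t2 = ("T2", ("read", "x"), "l7")
write_x_t2 = ("T2", ("write", "x"), "l6")
write_y_t2 = ("T2", ("write", "y"), "l8")
else_t1 = ("T1", "else", "l2")
```

Two reads of the same variable by different threads commute, as do accesses to different variables and any branch tag with a symbol of another thread; a read and a write of the same variable do not.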

6.1.4 Checking preemption-safety

Under abstraction, we model each thread as a nondeterministic finite automaton (NFA) over a finite alphabet consisting of abstract observable symbols. This enables us to construct NFAs \(\mathsf {NP}_{\textit{abs}}\) and \({\mathsf {P}}'_{\textit{abs}}\) accepting the languages \([\![ \mathscr {C}_{\textit{abs}} ]\!]^{\textit{NP}}\) and \([\![ \mathscr {C}_{\textit{abs}}' ]\!]^{P}\), respectively. \(\mathscr {C}_{\textit{abs}}\) is the abstract program corresponding to the input program \(\mathscr {C}\) and \(\mathscr {C}_{\textit{abs}}'\) is the program corresponding to the result of the synthesis \(\mathscr {C}'\). It turns out that preemption-safety of \(\mathscr {C}'\) w.r.t. \(\mathscr {C}\) is implied by preemption-safety of \(\mathscr {C}_{\textit{abs}}'\) w.r.t. \(\mathscr {C}_{\textit{abs}}\), which, in turn, is implied by language inclusion modulo \({I_D}\) of NFAs \({\mathsf {P}}'_{\textit{abs}}\) and \(\mathsf {NP}_{\textit{abs}}\). NFAs \({\mathsf {P}}'_{\textit{abs}}\) and \(\mathsf {NP}_{\textit{abs}}\) satisfy language inclusion modulo \({I_D}\) if any word accepted by \({\mathsf {P}}'_{\textit{abs}}\) is equivalent to some word obtainable by repeatedly commuting adjacent independent symbol pairs in a word accepted by \(\mathsf {NP}_{\textit{abs}}\).

Proposition 1

Given concurrent programs \(\mathscr {C}\) and \(\mathscr {C}', [\![ \mathscr {C}'_{abs} ]\!]^{P} \Subset _{abs} [\![ \mathscr {C}_{abs} ]\!]^{\textit{NP}}\) iff \({\mathscr {L}}({\mathsf {P}}'_{\textit{abs}}) \subseteq \mathrm {Clo}_{{I_D}}({\mathscr {L}}(\mathsf {NP}_{\textit{abs}}))\).

Proof

By construction \({\mathsf {P}}'_{\textit{abs}}\), resp. \(\mathsf {NP}_{\textit{abs}}\), accept exactly the observation sequences that \(\mathscr {C}'_{abs}\), resp. \(\mathscr {C}_{abs}\), may produce under the preemptive, resp. non-preemptive, semantics (denoted by \([\![ \mathscr {C}'_{abs} ]\!]^{P}\), resp. \([\![ \mathscr {C}_{abs} ]\!]^{\textit{NP}}\)). It remains to show that two observation sequences \(\omega _1=\alpha _0\ldots \alpha _k\) and \(\omega _2=\beta _0\ldots \beta _k\) are equivalent iff \(\omega _1\in \mathrm {Clo}_{{I_D}}(\{\omega _2\})\).

We first show that \(\omega _1\in \mathrm {Clo}_{{I_D}}(\{\omega _2\})\) implies \(\omega _1\) is equivalent to \(\omega _2\). The proof proceeds by induction on the number of swaps: The base case is that no symbols are swapped and is trivially true. The inductive case assumes that \(\omega '\) is equivalent to \(\omega _2\) and we need to show that after one single swap operation in \(\omega '\), resulting in \(\omega ''\), \(\omega ''\) is equivalent to \(\omega '\) and therefore by transitivity also equivalent to \(\omega _2\). Rule (A1) holds because \({I_D}\) does not allow symbols of the same thread to be swapped (I0). To prove (A2) we use the fact that writes to the same variable cannot be swapped (I2), (I3). To prove (A3) we use the fact that reads and writes to the same variable are not independent (I2), (I3).

It remains to show that \(\omega _1\) is equivalent to \(\omega _2\) implies \(\omega _1 \in \mathrm {Clo}_{{I_D}}(\{\omega _2\})\). Clearly \(\omega _1\) and \(\omega _2\) consist of the same multiset of symbols (A1). Therefore it is possible to transform \(\omega _2\) into \(\omega _1\) by swapping adjacent symbols. It remains to show that all swaps involve independent symbols. By (A1) the order of events in each thread does not change, therefore condition (I0) is always fulfilled. Branch tags can swap with every other symbol (I1) and accesses to different variables can swap with each other (I3). For each variable \( ShVar \), (A2) ensures that writes are in the same order and (A3) allows reads in between to be reordered. These swaps are allowed by (I2). No other swaps can occur. \(\square \)

6.2 Checking language inclusion

We first focus on the problem of language inclusion modulo an independence relation (Definition 1). This question corresponds to preemption-safety (Theorem 1, Proposition 1) and its solution drives our synchronization synthesis.

Theorem 2

For NFAs A, B over alphabet \({\varSigma }\) and a symmetric, irreflexive independence relation \(I\subseteq {\varSigma }\times {\varSigma }\), the problem \({\mathscr {L}}(A)\subseteq \mathrm {Clo}_I({\mathscr {L}}(B))\) is undecidable [2].

We now show that this general undecidability result extends to our specific NFAs and independence relation \({I_D}\).

Theorem 3

For NFAs \({\mathsf {P}}'_{\textit{abs}}\) and \(\mathsf {NP}_{\textit{abs}}\) constructed from \(\mathscr {C}_{\textit{abs}}\), the problem \({\mathscr {L}}({\mathsf {P}}'_{\textit{abs}})\subseteq \mathrm {Clo}_{{I_D}}({\mathscr {L}}(\mathsf {NP}_{\textit{abs}}))\) is undecidable.

Proof

Our proof is by reduction from the language inclusion modulo an independence relation problem (Definition 1). Theorem 3 follows from the undecidability of this problem (Theorem 2).

Assume we are given NFAs \(A=(Q_A,{\varSigma },{\varDelta }_A,Q_{\iota ,A},F_A)\) and \(B=(Q_B,{\varSigma },{\varDelta }_B,Q_{\iota ,B},F_B)\) and an independence relation \(I\subseteq {\varSigma }\times {\varSigma }\). Without loss of generality we assume \(A\) and \(B\) to be deterministic, complete, and free of \(\epsilon \)-transitions, meaning from every state there is exactly one transition for each symbol. We show that we can construct a program \(\mathscr {C}_{\textit{abs}}\) that is preemption-safe iff \({\mathscr {L}}(A)\subseteq \mathrm {Clo}_I({\mathscr {L}}(B))\).

For our reduction we construct a program \(\mathscr {C}_{\textit{abs}}\) that simulates \(A\) or \(B\) if run with a preemptive scheduler and simulates only \(B\) if run with a non-preemptive scheduler. Note that \({\mathscr {L}}(A)\cup {\mathscr {L}}(B)\subseteq \mathrm {Clo}_I({\mathscr {L}}(B))\) iff \({\mathscr {L}}(A)\subseteq \mathrm {Clo}_I({\mathscr {L}}(B))\). For every symbol \(\alpha \in {\varSigma }\) our simulator produces a sequence \(\omega _\alpha \) of abstract observable symbols. We say two such sequences \(\omega _\alpha \) and \(\omega _\beta \) commute if \(\omega _\alpha \cdot \omega _\beta \approx ^t_{I_D}\omega _\beta \cdot \omega _\alpha \), i.e., if \(\omega _\beta \cdot \omega _\alpha \) can be obtained from \(\omega _\alpha \cdot \omega _\beta \) by repeatedly swapping adjacent symbol pairs in \({I_D}\).

We will show that (a) \(\mathscr {C}_{\textit{abs}}\) simulates \(A\) or \(B\) if run with a preemptive scheduler and simulates only \(B\) if run with a non-preemptive scheduler, and (b) sequences \(\omega _\alpha \) and \(\omega _\beta \) commute iff \((\alpha ,\beta )\in I\).

The simulator is shown in Fig. 14. States and symbols of \(A\) and \(B\) are mapped to natural numbers and represented as bitvectors to enable simulation using the language \({{\mathscr {W}}}_{\textit{abs}}\). In particular we use Boolean guard variables from \({{\mathscr {W}}}_{\textit{abs}}\) to represent the bitvectors. We use \(\mathtt {true}\) to represent 1 and \(\mathtt {false}\) to represent 0. As the state space and the alphabet are finite we know the number of bits needed a priori. We use n, m, and p for the number of bits needed to represent \(Q_A, Q_B\), and \({\varSigma }\), respectively. The transition functions \({\varDelta }_A\) and \({\varDelta }_B\) likewise work on the individual bits. We represent bitvector x of length n as \(x^1\ldots x^n\).

Thread \(\mathtt {T}1\) simulates both automata A and B simultaneously. We assume the initial states of \(A\) and \(B\) are mapped to the number 0. In each iteration of the loop in thread \(\mathtt {T}1\) a symbol \(\alpha \in {\varSigma }\) is chosen non-deterministically and applied to both automata (we discuss this step in the next paragraph). Whether thread \(\mathtt {T}1\) simulates \(A\) or \(B\) is decided only in the end: depending on the value of \(\mathtt {simA}\) we assert that a final state of \(A\) or \(B\) was reached. The value of \(\mathtt {simA}\) is assigned in thread \(\mathtt {T}2\) and can only be \(\mathtt {true}\) if \(\mathtt {T}2\) is preempted between locations \(\ell _{12}\) and \(\ell _{13}\). With the non-preemptive scheduler the variable \(\mathtt {simA}\) will always be \(\mathtt {false}\) because thread \(\mathtt {T}2\) cannot be preempted. The simulator can only reach the \(\langle \mathtt {terminated}\rangle \) state if all assumptions hold as otherwise it would end in the \(\langle \mathtt {failed}\rangle \) state. The guard \(\mathtt {final}\) will only be assigned \(\mathtt {true}\) in \(\ell _{10}\) if either \(\mathtt {simA}\) is \(\mathtt {false}\) and a final state of \(B\) has been reached or if \(\mathtt {simA}\) is \(\mathtt {true}\) and a final state of \(A\) has been reached. Therefore the valid non-preemptive executions can only simulate \(B\). In the preemptive setting the simulator can simulate either \(A\) or \(B\) because \(\mathtt {simA}\) can be either \(\mathtt {true}\) or \(\mathtt {false}\). Note that the statement in location \(\ell _{10}\) executes atomically and the value of \(\mathtt {simA}\) cannot change during its evaluation. This means that \({\mathsf {P}}'_{\textit{abs}}\) simulates \({\mathscr {L}}(A)\cup {\mathscr {L}}(B)\) and \(\mathsf {NP}_{\textit{abs}}\) simulates \({\mathscr {L}}(B)\).

We use \(\tau \) to store the symbol used by the transition function. The choice of the next symbol needs to be non-deterministic to enable simulation of \(A\) and \(B\), and there is no havoc statement in \({{\mathscr {W}}}_{\textit{abs}}\). We therefore use the fact that the next thread to execute is chosen non-deterministically at a preemption point. We define a thread \(\mathtt {T}_\alpha \) for every \(\alpha \in {\varSigma }\) that assigns to \(\tau \) the number \(\alpha \) maps to. Threads \(\mathtt {T}_\alpha \) can only run if the condition variable ch-sym is set to 1 by the \(\mathsf {notify}\) statement in \(\ell _{2}\). The statement in \(\ell _{3}\) is a preemption point for the non-preemptive semantics. Then, exactly one thread \(\mathtt {T}_\alpha \) can proceed because the statement in \(\ell _{15}\) atomically resets ch-sym to 0. After setting \(\tau \) and outputting the representation of \(\alpha \), thread \(\mathtt {T}_\alpha \) notifies thread \(\mathtt {T}1\) using condition variable ch-sym-compl. Another symbol can only be produced in the next loop iteration of \(\mathtt {T}1\).

To produce an observable sequence faithful to I for each symbol in \({\varSigma }\) we define a homomorphism h that maps symbols from \({\varSigma }\) to sequences of observables. Assuming the symbol \(\alpha \in {\varSigma }\) is chosen, we produce the following observables:

-

Loop tag To output \(\alpha \) the thread \(\mathtt {T}_\alpha \) has to perform one loop iteration. This implicitly produces a loop tag \((\mathtt {T}_\alpha , \mathsf {loop}, \ell _{14})\).

-

Conflict variables For each pair \((\alpha , \alpha _i) \notin I\), we define a conflict variable \(v_{\{\alpha , \alpha _i\}}\). Note that \(v_{\{\alpha , \alpha _i\}}=v_{\{\alpha _i, \alpha \}}\) and two writes to \(v_{\{\alpha , \alpha _i\}}\) do not commute under \({I_D}\). For each such \(\alpha _i\), we produce a tag \((\mathtt {T}_\alpha , (\mathsf {write},v_{\{\alpha , \alpha _i\}}), \ell _{oi})\). Therefore, if two symbols \(\alpha _1\) and \(\alpha _2\) are dependent, the observation sequences produced for each of them will contain a write to \(v_{\{\alpha _1, \alpha _2\}}\).

Formally, the homomorphism h is given by \(h(\alpha ) = (\mathtt {T}_\alpha , \mathsf {loop}, \ell _{14});(\mathtt {T}_\alpha , (\mathsf {write},v_{\{\alpha , \alpha _1\}}),\ell _{o1}); \cdots ; (\mathtt {T}_\alpha , (\mathsf {write},v_{\{\alpha , \alpha _k\}}), \ell _{ok})\). For a sequence \(\sigma =\alpha _1\ldots \alpha _n\) we define \(h(\sigma )=h(\alpha _1)\ldots h(\alpha _n)\).

We show that \((\alpha _1,\alpha _2)\in I\) iff \(h(\alpha _1)\) and \(h(\alpha _2)\) commute. The loop tags are independent iff \(\alpha _1\ne \alpha _2\). If \(\alpha _1=\alpha _2\) then \((\alpha _1,\alpha _2)\notin I\) and \(h(\alpha _1)\) and \(h(\alpha _2)\) do not commute due to the loop tags. Assuming \((\alpha _1,\alpha _2)\in I\) then \(h(\alpha _1)\) and \(h(\alpha _2)\) commute because they have no common conflict variable they write to. On the other hand, if \((\alpha _1,\alpha _2)\notin I\), then both \(h(\alpha _1)\) and \(h(\alpha _2)\) will contain \((\mathtt {T}_{\alpha _{\{1,2\}}}, (\mathsf {write},v_{\{\alpha _1, \alpha _2\}}), \ell _{oi})\) and therefore cannot commute. We extend this result to sequences and have that \({h(\sigma ')\approx ^t_{I_D}h(\sigma )}\) iff \({\sigma ' \approx ^t_I \sigma }\).
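The correspondence between \(I\) and commuting tag sequences can be checked mechanically on small alphabets. The following is a minimal sketch, not the construction of Fig. 14: the tag encoding, the helper names `build_h`, `tags_independent`, and `sequences_independent`, and the simplified stand-in for \({I_D}\) (two tags are dependent if they belong to the same thread or write to the same conflict variable) are all assumptions made for illustration. Location labels \(\ell _{oi}\) are omitted.

```python
from itertools import product

def build_h(sigma, indep):
    """Map each symbol to a tag sequence: a loop tag, then one write per conflict."""
    def independent(a, b):
        return (a, b) in indep or (b, a) in indep

    h = {}
    for a in sigma:
        tags = [("T_" + a, "loop", None)]
        for b in sigma:
            if not independent(a, b):
                # Conflict variable v_{a,b}; a frozenset makes v_{a,b} == v_{b,a}.
                tags.append(("T_" + a, "write", frozenset((a, b))))
        h[a] = tags
    return h

def tags_independent(t1, t2):
    """Assumed stand-in for I_D: same thread or same written variable means dependent."""
    thread1, kind1, var1 = t1
    thread2, kind2, var2 = t2
    if thread1 == thread2:
        return False
    if kind1 == "write" and kind2 == "write" and var1 == var2:
        return False
    return True

def sequences_independent(u, v):
    """True iff every tag of u is independent of every tag of v."""
    return all(tags_independent(x, y) for x, y in product(u, v))

sigma = ["a", "b", "c"]
indep = {("a", "b")}          # only a and b are independent
h = build_h(sigma, indep)
```

With this encoding, `sequences_independent(h[x], h[y])` holds exactly for the pairs in `indep` (in either order), which mirrors the claim of the paragraph above under the stated assumptions.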

This concludes our reduction. It remains to show that \(\mathscr {C}_{\textit{abs}}\) is preemption-safe iff \({\mathscr {L}}(A)\subseteq \mathrm {Clo}_I({\mathscr {L}}(B))\). By Proposition 1 it suffices to show that \({\mathscr {L}}(A)\subseteq \mathrm {Clo}_I({\mathscr {L}}(B))\) iff \({\mathscr {L}}({\mathsf {P}}'_{\textit{abs}})\subseteq \mathrm {Clo}_{{I_D}}({\mathscr {L}}(\mathsf {NP}_{\textit{abs}}))\).

-

1.

We assume that \({\mathscr {L}}(A)\subseteq \mathrm {Clo}_I({\mathscr {L}}(B))\). Then, for every word \(\sigma \in {\mathscr {L}}(A)\) we have that \(\sigma \in \mathrm {Clo}_I({\mathscr {L}}(B))\). By construction \(h(\sigma )\in {\mathscr {L}}({\mathsf {P}}'_{\textit{abs}})\). It remains to show that \(h(\sigma )\in \mathrm {Clo}_{{I_D}}({\mathscr {L}}(\mathsf {NP}_{\textit{abs}}))\). By \(\sigma \in \mathrm {Clo}_I({\mathscr {L}}(B))\) we know there exists a word \(\sigma '\in {\mathscr {L}}(B)\), such that \(\sigma '\approx _I^t \sigma \). Therefore also \(h(\sigma ')\approx ^t_{I_D}h(\sigma )\) and by construction \(h(\sigma ')\in {\mathscr {L}}(\mathsf {NP}_{\textit{abs}})\).

-

2.

We assume that \({\mathscr {L}}(A)\nsubseteq \mathrm {Clo}_I({\mathscr {L}}(B))\). Then, there exists a word \(\sigma \in {\mathscr {L}}(A)\) such that \(\sigma \notin \mathrm {Clo}_I({\mathscr {L}}(B))\). By construction \(h(\sigma )\in {\mathscr {L}}({\mathsf {P}}'_{\textit{abs}})\). Let us assume towards contradiction that \(h(\sigma ) \in \mathrm {Clo}_{{I_D}}({\mathscr {L}}(\mathsf {NP}_{\textit{abs}}))\). Then there exists a word \(\omega \) in \({\mathscr {L}}(\mathsf {NP}_{\textit{abs}})\) such that \(\omega \approx ^t_{I_D}h(\sigma )\). By construction, this implies there exists some \(\sigma '\in {\mathscr {L}}(B)\) such that \(\omega = h(\sigma ')\) and \(h(\sigma ') \approx ^t_{I_D}h(\sigma )\). Thus, there exists \(\sigma '\in {\mathscr {L}}(B)\) such that \(\sigma ' \approx ^t_I \sigma \). This implies \(\sigma \in \mathrm {Clo}_I({\mathscr {L}}(B))\), which is a contradiction. \(\square \)

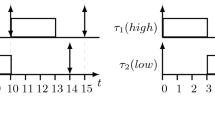

Fortunately, a bounded version of the language inclusion modulo I problem is decidable. Recall the relation \(\approx _I\) over \({\varSigma }^*\) from Sect. 6.1. We define a symmetric binary relation \(\approx _I^i\) over \({\varSigma }^*\): \((\sigma , \sigma ') \in \, \approx _I^i\) iff \(\exists (\alpha ,\beta ) \in I\): \((\sigma , \sigma ') \in \, \approx _I, \sigma [i] = \sigma '[i+1] = \alpha \) and \(\sigma [i+1] = \sigma '[i] = \beta \). Thus \(\approx ^i_I\) relates all pairs of words that can be obtained from each other by commuting the symbols at positions i and \(i+1\). We next define a symmetric binary relation \(\asymp \) over \({\varSigma }^*\): \((\sigma ,\sigma ') \in \, \asymp \) iff \(\exists \sigma _1,\ldots ,\sigma _t\): \((\sigma ,\sigma _1) \in \, \approx _I^{i_1},\ldots , (\sigma _{t},\sigma ') \in \, \approx _I^{i_{t+1}}\) and \(i_1< \ldots < i_{t+1}\). The relation \(\asymp \) intuitively relates words obtained from each other by making a single forward pass commuting multiple pairs of adjacent symbols. We recursively define \(\asymp ^k\) as follows: \(\asymp ^0\) is the identity relation id. For \(k>0\) we define \(\asymp ^k = \asymp \circ \asymp ^{k-1}\), the composition of \(\asymp \) with \(\asymp ^{k-1}\). Given a language \(\mathcal{L}\) over \({\varSigma }\), we use \(\mathrm {Clo}_{k,I}(\mathcal{L})\) to denote the set \(\{\sigma \in {\varSigma }^*: \exists \sigma ' \in \mathcal{L} \text { with } (\sigma ,\sigma ') \in \, \asymp ^{k}\}\). In other words, \(\mathrm {Clo}_{k,I}(\mathcal{L})\) consists of all words which can be generated from \(\mathcal{L}\) using a finite-state transducer that remembers at most k symbols of its input words in its states. By definition we have \(\mathrm {Clo}_{0,I}(\mathcal{L}) = \mathcal{L}\).

Example 1

We assume the language \(\mathcal{L}=\{a,b\}^*\), where \((a,b)\in I\).

-

\(\textit{aaab} \asymp _I^1 \textit{aaba}\) because one can swap the letters at positions 3 and 4.

-

\(\textit{aaab} \not \asymp _I^1 \textit{abaa}\) because a single pass can swap the letters at positions 3 and 4, but it cannot afterwards swap the letters at positions 2 and 3.

-

However, \(\textit{aaab} \asymp _I^2 \textit{abaa}\), as two passes suffice to do the two swaps.

-

\(\textit{baaa} \asymp _I^1 \textit{aaba}\) because in a single pass one can swap 1 and 2 and then 2 and 3.
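The four cases of Example 1 can be reproduced with a small enumeration of single forward passes. This is a minimal sketch under stated assumptions: the function names `one_pass` and `k_passes` are hypothetical, words are Python strings, positions are 0-based in the code (1-based in the text), and a pass is also allowed to perform no swap at all, so that the reachable sets grow monotonically with k.

```python
def one_pass(word, indep):
    """Words reachable from `word` by swaps at strictly increasing positions."""
    def independent(a, b):
        return (a, b) in indep or (b, a) in indep

    reached = {word}            # assumption: a pass may perform zero swaps
    seen = {(word, 0)}
    stack = [(word, 0)]         # (current word, smallest position still allowed)
    while stack:
        w, start = stack.pop()
        for i in range(start, len(w) - 1):
            if independent(w[i], w[i + 1]):
                swapped = w[:i] + w[i + 1] + w[i] + w[i + 2:]
                reached.add(swapped)
                # Later swaps in the same pass must use a larger position.
                if (swapped, i + 1) not in seen:
                    seen.add((swapped, i + 1))
                    stack.append((swapped, i + 1))
    return reached

def k_passes(word, indep, k):
    """Words reachable from `word` using at most k forward passes."""
    current = {word}
    for _ in range(k):
        current = set().union(*(one_pass(w, indep) for w in current))
    return current

I = {("a", "b")}
print("aaba" in k_passes("aaab", I, 1))   # one swap at positions 3 and 4
print("abaa" in k_passes("aaab", I, 1))   # would need a second pass
print("abaa" in k_passes("aaab", I, 2))
print("aaba" in k_passes("baaa", I, 1))   # swaps at positions 1-2, then 2-3
```

The enumeration is exponential in the worst case and is meant only to make the definition of \(\asymp ^k\) concrete on short words; it is not the algorithm used for the bounded inclusion check.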

Definition 2

(Bounded language inclusion modulo an independence relation) Given NFAs \(A, B\) over \({\varSigma }, I\subseteq {\varSigma }\times {\varSigma }\) and a constant \(k\ge 0\), the k-bounded language inclusion problem modulo I is: \({\mathscr {L}}(A)\subseteq \mathrm {Clo}_{k,I}({\mathscr {L}}(B))\)?

Theorem 4

For NFAs \(A, B\) over \({\varSigma }, I\subseteq {\varSigma }\times {\varSigma }\) and a constant \(k\ge 0\), the problem \({\mathscr {L}}(A) \subseteq \mathrm {Clo}_{k,I}({\mathscr {L}}(B))\) is decidable.

We present an algorithm to check k-bounded language inclusion modulo I, based on the antichain algorithm for standard language inclusion [11].

6.3 Antichain algorithm for language inclusion

Given a partial order \((X, \sqsubseteq )\), an antichain over X is a set of elements of X that are incomparable w.r.t. \(\sqsubseteq \). In order to check \({\mathscr {L}}(A)\subseteq {\mathscr {L}}(B)\) for NFAs \(A = (Q_A,{\varSigma },{\varDelta }_A,Q_{\iota ,A},F_A)\) and \(B = (Q_B,{\varSigma },{\varDelta }_B,Q_{\iota ,B},F_B)\), the antichain algorithm proceeds by exploring \(A\) and \(B\) in lockstep. Without loss of generality we assume that \(A\) and \(B\) do not have \(\epsilon \)-transitions. While \(A\) is explored nondeterministically, \(B\) is determinized on the fly for exploration. The algorithm maintains an antichain, consisting of tuples of the form \((s_A, S_B)\), where \(s_A\in Q_A\) and \(S_B\subseteq Q_B\). The ordering relation \(\sqsubseteq \) is given by \((s_A, S_B) \sqsubseteq (s'_A, S'_B)\) iff \(s_A= s'_A\) and \(S_B\subseteq S'_B\). The algorithm also maintains a frontier set of tuples yet to be explored.

Given state \(s_A\in Q_A\) and a symbol \(\alpha \in {\varSigma }\), let \(\mathsf{succ}_\alpha (s_A)\) denote \(\{s_A' \in Q_A: (s_A,\alpha ,s_A') \in {\varDelta }_A\}\). Given set of states \(S_B\subseteq Q_B\), let \(\mathsf{succ}_\alpha (S_B)\) denote \(\{s_B'\in Q_B: \exists s_B\in S_B:\ (s_B,\alpha ,s_B')\in {\varDelta }_B\}\). Given tuple \((s_A, S_B)\) in the frontier set, let \(\mathsf{succ}_\alpha (s_A, S_B)\) denote \(\{(s'_A,S'_B): s'_A\in \mathsf{succ}_\alpha (s_A), S'_B= \mathsf{succ}_\alpha (S_B)\}\).
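The successor operators and the ordering \(\sqsubseteq \) can be put together into a compact inclusion check. The sketch below follows the general shape of antichain-based inclusion checking for plain language inclusion (no independence relation); the NFA encoding as a triple `(delta, initial, final)` with `delta` a set of `(state, symbol, state)` triples, and the names `succ_state`, `succ_set`, and `included`, are assumptions made for illustration.

```python
def succ_state(delta, s, a):
    """succ_a(s_A): successors of a single state under symbol a."""
    return {t for (p, x, t) in delta if p == s and x == a}

def succ_set(delta, states, a):
    """succ_a(S_B): successors of a set of states under symbol a."""
    return frozenset(t for (p, x, t) in delta if p in states and x == a)

def included(nfa_a, nfa_b, alphabet):
    """Return True iff L(A) is a subset of L(B); both NFAs are epsilon-free."""
    delta_a, init_a, final_a = nfa_a
    delta_b, init_b, final_b = nfa_b
    frontier = [(s, frozenset(init_b)) for s in init_a]
    antichain = []                      # pairwise incomparable explored tuples
    while frontier:
        s_a, set_b = frontier.pop()
        # A accepts here but no tracked B state does: a counterexample word exists.
        if s_a in final_a and not (set_b & final_b):
            return False
        # Skip tuples subsumed by an explored tuple with the same s_A and a smaller S_B.
        if any(s == s_a and sb <= set_b for (s, sb) in antichain):
            continue
        # Drop explored tuples that the new tuple subsumes, then record it.
        antichain = [(s, sb) for (s, sb) in antichain
                     if not (s == s_a and set_b <= sb)]
        antichain.append((s_a, set_b))
        for a in alphabet:
            next_b = succ_set(delta_b, set_b, a)
            for next_a in succ_state(delta_a, s_a, a):
                frontier.append((next_a, next_b))
    return True

# A accepts {ab}; B accepts {ab, ba}.
A = ({(0, "a", 1), (1, "b", 2)}, {0}, {2})
B = ({(0, "a", 1), (1, "b", 2), (0, "b", 3), (3, "a", 2)}, {0}, {2})
print(included(A, B, "ab"))
print(included(B, A, "ab"))
```

Pruning is sound because a tuple with a smaller \(S_B\) is harder to satisfy: any counterexample reachable from \((s_A, S'_B)\) with \(S_B\subseteq S'_B\) is also a counterexample from \((s_A, S_B)\).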