Abstract

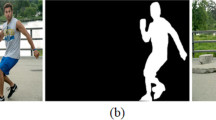

Photo quality enhancement by photographic evaluation is a challenging problem in photography. This paper presents a new photo quality enhancement algorithm that recomposes a photograph by relocating its subject. Most related research focuses on altering image size or cropping, whereas the proposed algorithm extends existing approaches by relocating the foreground object so that it satisfies one of the composition rules. The contribution of this paper is to overcome a limitation of existing algorithms, which cannot place the main object according to the photographer's intentions. The presented algorithm consists of three parts: image matting, region filling, and image recomposition. Image matting extracts the foreground object from the background image. A region filling algorithm then fills the region left behind by the extracted foreground object naturally, without seams or artifacts. Finally, the foreground object is relocated to a location with a better composition score. Experimental results demonstrate the effectiveness of the presented algorithm. The presented method can be embedded in commercial cameras, so the authors expect that it can be applied in the near future.
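The relocation step can be illustrated with a minimal sketch. It assumes that the composition rule is the rule of thirds and that the foreground is already available as a binary mask; the abstract does not say which rule or composition score the authors use, so `power_points`, `relocation_offset`, and `shift_mask` are hypothetical names chosen for illustration. Matting and region filling are not implemented here.

```python
from typing import List, Tuple

Mask = List[List[int]]  # 1 = foreground pixel, 0 = background


def centroid(mask: Mask) -> Tuple[float, float]:
    """Return the (x, y) centroid of the foreground pixels in a binary mask."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        raise ValueError("mask contains no foreground pixels")
    return sum_x / count, sum_y / count


def power_points(width: int, height: int) -> List[Tuple[float, float]]:
    """Four rule-of-thirds intersections (assumed composition rule)."""
    return [(width * i / 3, height * j / 3) for i in (1, 2) for j in (1, 2)]


def relocation_offset(mask: Mask) -> Tuple[int, int]:
    """Integer (dx, dy) that moves the subject centroid to the nearest power point."""
    height, width = len(mask), len(mask[0])
    cx, cy = centroid(mask)
    tx, ty = min(
        power_points(width, height),
        key=lambda p: (p[0] - cx) ** 2 + (p[1] - cy) ** 2,
    )
    return round(tx - cx), round(ty - cy)


def shift_mask(mask: Mask, dx: int, dy: int) -> Mask:
    """Translate the foreground by (dx, dy); pixels leaving the frame are dropped."""
    height, width = len(mask), len(mask[0])
    moved = [[0] * width for _ in range(height)]
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            nx, ny = x + dx, y + dy
            if value and 0 <= nx < width and 0 <= ny < height:
                moved[ny][nx] = 1
    return moved
```

In a full pipeline, the same offset would be applied to the matted foreground and its alpha matte, and the result composited over the background after region filling has closed the hole left by the subject.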

Acknowledgments

This research was supported by the Daegu University Research Grant, 2015.

Cite this article

Jeong, K., Cho, HJ. Photo quality enhancement by relocating subjects. Cluster Comput 19, 939–948 (2016). https://doi.org/10.1007/s10586-016-0547-z

DOI: https://doi.org/10.1007/s10586-016-0547-z