Abstract

This study examines how codes of conduct, monitoring, and penalties for dishonest reporting affect reporting honesty in an online labor market setting. Prior research supports the efficacy of codes of conduct in promoting ethical behavior in a variety of contexts. However, the effects of such codes and other methods have not been examined in online labor markets, an increasingly utilized resource that differs from previously examined settings in several key regards (e.g., transient workforce, lack of an established culture). Leveraging social norm activation theory, we predict and find experimental evidence that while codes of conduct and monitoring without economic penalties are ineffective in online settings, monitoring with economic penalties activates social norms for honesty and promotes honest reporting in an online setting. Further, we find that imposing penalties most effectively promotes honest reporting in workers who rate high in Machiavellianism, a trait that is highly correlated with dishonest reporting. In fact, while in the absence of penalties we observe significantly more dishonest reporting from workers who rate high versus low in Machiavellianism, this difference is eliminated in the presence of penalties. Implications of these findings for companies, researchers, online labor market administrators, and educators are discussed.

Introduction

Businesses and researchers are increasingly utilizing online labor markets (e.g., Amazon.com’s Mechanical Turk, CrowdFlower) for various tasks due to the speed and cost benefits these markets offer (Ipeirotis 2010; Worstall 2013). Yet, several idiosyncratic features of online markets create concerns about unethical worker behavior. Most notably, relative to more traditional business settings, online labor markets utilize a more transient, anonymous workforce that is paid significantly lower wages (Horton and Chilton 2010). With respect to wages paid, critics have referred to online labor markets as digital sweatshops (Zittrain 2009) and even online feudalism (Glazer 2011; Mims 2015). In addition, online workers generally have weaker social ties to their employers, potentially face greater distractions, and often work in uncontrolled settings (Farrell et al. 2017).

Collectively, these characteristics of online labor markets provide online workers with motivation, opportunity, and rationalization to act dishonestly for personal gain at their employer’s expense, thereby increasing the probability of dishonest behavior (Cressey 1973). For example, Folbre (2013) speculates that online workers may write reviews for products or services they have never used. Consistent with this concern, Farrell et al. (2017) and several other studies on honesty in online markets (Suri et al. 2011; Goodman et al. 2012) find that a significant percentage of online workers report dishonestly.

These findings are troubling not only because an increasing number of companies and researchers are either beginning to utilize or are expanding their use of online markets, but also because they are starting to use online markets for more complex tasks. Specifically, while online labor markets generally have been used for low-skilled tasks, companies and academic researchers are starting to use online markets for demanding, high-skilled tasks (Ipeirotis 2010; Brasel et al. 2016). Farrell et al. (2017) indicate that the potential for unethical behavior in online labor markets is magnified for more demanding tasks, making it even more critical to understand how best to deter it. However, despite the clear significance of this issue, little to no prior research examines how best to promote ethical behavior in online settings. We address this gap in the literature by examining the efficacy of the following methods of promoting honesty in online labor markets: (1) codes of conduct (hereafter “Codes”), (2) monitoring without penalties, and (3) monitoring with explicit penalties.Footnote 1

To develop hypotheses, we consider the likely effects of the above methods through the lens of social norm activation theory (Bicchieri 2006). This theory is particularly conducive to studies of ethical decision making because it emphasizes “…the ability of contextual cues to alter behavior by forming common expectations of social norm behavior” (Blay et al. 2016, 4). Thus, we consider the efficacy of the examined methods based on their likely ability to activate social norms for honest reporting within online markets. While Codes have been found to activate norms by communicating employer expectations of employee behavior, such activation largely depends on the presence of a pre-established organizational culture (Bicchieri 2006; Stevens 2008; Blay et al. 2016).Footnote 2 Online labor markets, however, generally lack such cultures due to one-time, short-term contracting with transient, anonymous workers. Thus, we do not expect Codes alone to activate social norms for honesty or to effectively reduce dishonest reporting in online settings.

Nevertheless, imposing penalties, either reputational or economic, can activate social norms and mitigate unethical behavior (Antle 1984; Bicchieri 2006; Rosman et al. 2012). Whether penalties activate social norms, however, likely depends on the nature of the penalties imposed. In online markets, reputational costs often are insignificant due to one-time contracting and worker anonymity (Farrell et al. 2017). Thus, we do not expect monitoring with only implied reputational penalties (i.e., without explicit economic penalties) to activate social norms for honesty or to reduce dishonest reporting. Conversely, economic penalties likely are significant to online workers, as obtaining monetary payment likely is their primary motivation for performing a given task. Therefore, we expect monitoring with explicit economic penalties to activate social norms for honesty and, ultimately, reduce dishonest reporting in an online labor market setting.

It is important to note, however, that whether penalties activate social norms depends not only on the nature of the penalty imposed but also on personality traits, particularly sensitivity to social norms (Bicchieri 2006). Specifically, while workers who “naturally” are sensitive to social norms are unlikely to act unethically even in the absence of penalties for unethical behavior, workers who are less sensitive to social norms are less likely to act ethically unless they believe they will incur personal costs for acting unethically (Bicchieri 2006; Blay et al. 2016). Thus, economic penalties have a greater “opportunity” to reduce dishonest reporting in workers who are insensitive to social norms. As such, we predict that monitoring with penalties will more effectively promote honest reporting in workers who are relatively insensitive to social norms. Consistent with this prediction, Blay et al. (2016) note, “For some it is sufficient to believe that other people expect them to conform to the norm, whereas some need to believe that others are prepared to punish them for nonconformance.”

Beyond the predictions above, we also propose that a potentially useful proxy for individuals’ sensitivity to social norms is their level of Machiavellianism (Christie and Geis 1970; Verbeke et al. 1996; Rayburn and Rayburn 1996; Bass et al. 1999).Footnote 3 In particular, we propose that individuals who rate high in Machiavellianism (henceforth “high machs”) are less sensitive to social norms and are more likely affected by penalties for dishonest reporting than individuals who are low in Machiavellianism (“low machs”). Thus, at an operational level, we predict that monitoring with penalties will reduce unethical behavior in online settings to a greater extent among high versus low machs.

To test our predictions, we conducted a 2 × 3 × 2 between-participants experiment within an online labor market (Mechanical Turk) using a modified version of a task used in numerous previous studies that examine reporting honesty (e.g., Gino et al. 2009). In this task, participants’ total compensation depends, in part, on their self-reported level of performance. The experiment manipulates the presence of a Code at two levels (present/absent) and monitoring at three levels (none/monitoring without explicit penalty/monitoring with explicit penalty). The other independent variable is a median split on the personality trait of Machiavellianism measured with a well-established scale (Christie and Geis 1970). Consistent with prior studies (e.g., Gino et al. 2009), honesty is inferred from differences across conditions in mean self-reported performance, as we assume that other variables relevant to performance are randomly distributed across conditions. The experiment also includes several unsolvable questions that provide a direct measure of honesty, i.e., participants who indicate that they correctly answered an unsolvable question are assumed to have reported dishonestly.

Results indicate that Codes have no impact on dishonest reporting in online settings, consistent with prior findings that Codes do not activate social norms when established cultures are absent. Likewise, informing participants that their work will be monitored without economically penalizing them for misreporting does not deter dishonest reporting. However, informing participants that their work will be monitored and that they will incur economic penalties for misreporting activates a social norm for honesty and reduces dishonest reporting. Further, the effect of penalties on reporting honesty appears to depend on workers’ sensitivity to social norms as imposing penalties reduces dishonest reporting to a greater extent for high versus low machs. In fact, while we observe significantly greater dishonest reporting for high versus low machs in the absence of penalties, this difference is eliminated in the presence of penalties.

This study extends social norm activation theory (Bicchieri 2006; Blay et al. 2016) in several key regards. First, we answer Bicchieri’s (2006) call for research on understanding social norm activation in unique social settings, as online labor markets involve remote, anonymous workers who rarely interact in the performance of their duties. Given these features, there was reason to doubt whether methods that previously have been found to activate social norms in traditional work settings (e.g., Codes, monitoring without penalty—Stevens 2008; Rosman et al. 2012; Davidson and Stevens 2013) would activate norms for ethical behavior in online settings. Consistent with such doubt, we find that Codes and monitoring without penalties are ineffective in online settings. However, we find that imposing economic penalties can activate social norms for honesty and promote honest reporting in online market settings.

Second, this study extends social norm activation theory by answering Blay et al.’s (2016) call for research examining how organizational and dispositional factors separately and interactively activate social norms. Specifically, we find that imposing penalties reduces dishonest reporting to a greater extent within individuals who, absent penalties, are unlikely to be sensitive to social norms, i.e., high machs. In this sense, our study confirms the key role of social norm sensitivity in moderating the effects of various methods of activating social norms in online settings, and it also demonstrates that individuals’ Machiavellianism ratings (Christie and Geis 1970) can function as a useful proxy for their social norm sensitivity.

In addition to extending social norm activation theory, the findings discussed above also contribute to the nascent literature on online labor markets. In particular, whereas previous studies primarily focus on whether online workers are dishonest (Suri et al. 2011; Goodman et al. 2012; Farrell et al. 2017), this is the first study, to our knowledge, to examine how best to promote ethical behavior in light of the idiosyncratic features of online labor markets.

Last, this study contributes to the literature on Machiavellianism. We demonstrate that the personality trait’s effect on unethical behavior (e.g., Verbeke et al. 1996; Rayburn and Rayburn 1996; Bass et al. 1999) is robust to dishonesty in the online labor market setting and that the efficacy of methods designed to reduce dishonest reporting depends on online workers’ level of Machiavellianism. Perhaps most importantly, we identify mechanisms to mitigate, or potentially eliminate, the deleterious effects of Machiavellianism on human behavior. Thus, we address, within an online market setting, a call for research on how individual and situational factors interactively affect ethical decision making (O’Fallon and Butterfield 2005, 399).

Hypothesis Development

In this section, we use social norm activation theory to develop hypotheses regarding the effects of various methods of deterring dishonest reporting in online labor markets and how such effects likely depend on workers’ sensitivity to social norms as proxied by their level of Machiavellianism. Before doing so, we review the literature on honesty in online labor markets and introduce our theoretical framework.

Honesty in Online Labor Markets

There are significant concerns about the honesty of workers in online labor markets due to the potential for significant agency issues, both adverse selection and moral hazard, and a general lack of controls to address these issues (Farrell et al. 2017). Adverse selection is a significant concern primarily because workers self-select into these markets and often work for extremely low wages (Akerlof 1970). In fact, 90% of jobs on MTurk pay less than $0.10 (Ipeirotis 2010), and workers’ hourly reservation wage has been estimated at $1.38 (Horton and Chilton 2010). As such, workers willing to accept such low pay may also have low thresholds for dishonest reporting particularly when it can increase pay, consistent with Dobson’s (2013) characterization of online workers as desperate. Further, there are several features of online labor markets that raise moral hazard concerns, including one-time contracting with an anonymous, transient workforce and remote work environments making monitoring more challenging (Farrell et al. 2017). Collectively, these features provide online workers with motivation, opportunity, and rationalization to act dishonestly for personal gain, increasing the probability of dishonest behavior (Cressey 1973).

A few recent studies examine worker honesty in online labor markets. Consistent with the above concerns, there is evidence that online workers cheat on low-payoff, simple tasks, with dishonesty increasing with the size of the payoff (Goodman et al. 2012). Likewise, Suri et al. (2011) infer that online workers dishonestly report privately observed die rolls to increase their payoff. Farrell et al. (2017) extend these studies by comparing the honesty of online workers with that of students on more demanding tasks that are more characteristic of business and research settings. They find that online workers are no less honest than student populations, even in light of the significant agency issues discussed above. However, Farrell et al. (2017) still report a significant amount of dishonest reporting among online workers. Thus, it is important to examine methods of promoting honest reporting in online labor markets, particularly since the efficacy of such methods likely is affected by unique features of these markets according to social norm activation theory (Bicchieri 2006).

Social Norm Activation Theory

Social norms are shared beliefs about appropriate behavior within social settings (Cialdini and Trost 1998).Footnote 4 Further, individuals use cues within their environment to interpret a given social setting and determine appropriate behavior (Cialdini and Trost 1998). Bicchieri’s (2006) model of social norm activation provides a theoretical framework for predicting when social norm activation is likely to occur and thus the likelihood of an individual conforming to the norm. Specifically, Bicchieri’s (2006) model indicates that an individual is most likely to conform to a social norm when the following conditions are met: (1) the individual believes that a social norm exists and applies to the current situation (contingency condition); (2) the individual believes that a significant subset of other individuals conform to the norm in similar situations (empirical expectations condition); and (3) the individual believes that a significant number of other individuals expect conformance to the norm in the given circumstance (normative expectations condition). If all three conditions are met, individuals will have a conditional preference to follow the norm. It is important to note, however, that the likelihood of satisfying all three conditions varies by individual, resulting in differential norm sensitivity across individuals (Bicchieri 2006; Blay et al. 2016).

Based on these conditions, the Bicchieri (2006) model provides a framework for predicting how cues/information in a given social setting affects the likelihood of individuals following a given social norm. As summarized in Blay et al. (2016) Table 1, any cue or information that (1) makes the norm more salient increases the chances of satisfying the contingency condition, (2) enhances the belief that others conform to the norm increases the chances of satisfying the empirical expectations condition, and (3) enhances the belief that conformance is expected and/or that nonconformance will be sanctioned increases the chances of satisfying the normative expectations condition. In the remainder of this section, we analyze codes of conduct and monitoring (with and without explicit economic penalties) in relation to this framework to predict the likelihood of these methods reducing dishonest reporting in online labor markets.

Codes of Conduct in Online Labor Markets

Codes of conduct are concise, formalized summaries of various entities’ social norms (Lapinski and Rimal 2005), which have the potential to activate social norms (Craft 2013).Footnote 5 Craft (2013) identifies five studies between 2004 and 2011 that examine the effects of Codes on ethical judgment and finds that only three studies documented a significant effect, indicating that efficacy likely depends on organizational and individual factors (Blay et al. 2016).

Codes can convey to online workers that a social norm for ethical behavior (e.g., honest reporting) exists and applies to the situation (i.e., contingency condition). However, in isolation, Codes likely neither convince online workers that a significant subset of other online workers conform to the norm (i.e., the empirical expectations condition) nor convince online workers that employers in the online labor market expect conformance and/or will sanction nonconformance (i.e., the normative expectations condition). As such, social norm activation theory suggests that Codes in isolation will not effectively reduce dishonest reporting in an online market setting, as Codes are unlikely to satisfy empirical or normative expectations.

Furthermore, prior studies emphasize the importance of establishing an ethical organizational culture for Codes to be effective (Jenkins et al. 2008; Stevens 2008; Dellaportas 2006). Within Bicchieri’s (2006) model, a strong ethical culture likely increases both empirical and normative expectations. That is, a strong ethical culture demonstrates that others conform to a given social norm such as reporting honesty and that conformance is expected and nonconformance will be sanctioned. However, online labor markets typically lack a preexisting culture or training with a particular employer.Footnote 6 Instead, in most circumstances, transient, anonymous workers engage in one-time, short-term contracts with unknown employers. As such, given the lack of a preexisting culture and the reasons discussed above, we expect that in isolation, Codes will be ineffective in deterring unethical behavior in online labor markets.

Nonetheless, it is possible that one feature of Codes, certification, could be incorporated in online labor market settings to increase the likelihood of Codes satisfying both empirical and normative expectations, resulting in decreased dishonest reporting. Specifically, Davidson and Stevens (2013) predict and find that codes are more effective when individuals certify that they will comply with the code, potentially due to increased empirical and normative expectations. Essentially, individuals who certify could be more likely to believe that others will conform since they also were asked to certify, and conformance is expected by the employer since certification was requested. Code certification is easily included in online market settings (and is incorporated in our experiment). While Davidson and Stevens (2013) demonstrate that codes have a greater impact on behavior when participants certify the code, their findings relate more to fairness than the ethical behavior we examine in this study, i.e., reporting honesty. It is unclear whether the positive effects of code certifications extend to reporting honesty, particularly when it is not readily apparent how dishonest reporting would directly disadvantage others, i.e., would be unfair to other workers. This leads to the following null hypothesis:

Hypothesis 1 (null):

Online labor market participants who are presented with and attest to a code of conduct will engage in the same degree of dishonest reporting as participants who are not.

Monitoring in Online Labor Markets

Prior research suggests that monitoring agents can mitigate unethical behavior such as dishonest reporting by imposing economic and/or reputational costs on agents (Antle 1984; Rosman et al. 2012). From a pure economic theory perspective, agents are motivated by self-interest and will behave unethically if it increases their economic payout. Thus, honest reporting could be achieved by monitoring actions and penalizing dishonest reporting (Mazar and Ariely 2006). From a reputational perspective, agents often either repeatedly or continuously contract with a single employer. Thus, dishonest reporting can impair their reputation and jeopardize their relationship with the employer. For example, Rosman et al. (2012) conduct an experiment and find that participants who are monitored engage in less earnings management than those participants who are not monitored. Likewise, Covey et al. (1989) find that monitoring decreases dishonesty in an educational setting.

In the online labor market setting, however, reputational costs often are insignificant due to one-time contracting and worker anonymity (Farrell et al. 2017). That is, even though employers can bar particular anonymous worker IDs from future contracting, online workers likely have little concern over the reputational effects of being monitored because they are anonymous, will not be confronted directly by their employer, and have thousands of other employment opportunities available. Thus, in the absence of explicit penalties, monitoring only partially addresses the normative expectations of social norm activation theory. Specifically, monitoring implies that honest reporting is expected, but it does not signal that dishonest reporting will be sanctioned. In fact, in our experimental setting, workers who are not randomly assigned to the “monitoring with penalties” condition likely will not perceive economic costs of dishonesty, as they are explicitly informed that there are no penalties.Footnote 7

Monitoring without penalties also does little to increase the belief that other online workers will report honestly (empirical expectations). This is especially the case since online labor markets are anonymous. According to Bicchieri’s (2006) model, anonymity reduces the likelihood that a social norm is activated, as it reduces the chances of the empirical and normative expectation conditions being satisfied. In our setting, anonymity reduces the risk of dishonest reporting being associated with an individual, thereby limiting beliefs that others will report honestly or that dishonesty will be punished. For these reasons, we do not expect monitoring with only implied reputational penalties (i.e., without explicit economic penalties) to activate social norms for honesty or to reduce dishonest reporting.

However, we expect monitoring to deter dishonest reporting when there are explicit economic penalties. Specifically, when there are economic penalties, the chances of the normative expectations condition being satisfied are high because individuals are explicitly informed that sanctions are imposed. In fact, Bicchieri’s (2006) model indicates that a penalty for nonconformance is one of the strongest methods for enhancing normative expectations (Blay et al. 2016). Also, with knowledge of these sanctions, online workers are more likely to believe that other online workers will report honestly to avoid the economic penalties (i.e., empirical expectations condition). In sum, consistent with social norm activation theory (Bicchieri 2006) and the pure economic theory of deterring dishonest behavior (Mazar and Ariely 2006; Gino et al. 2009), we expect monitoring to be more effective when economic penalties are imposed and made salient to workers. As such, we present the following hypotheses:

Hypothesis 2a (null):

Online labor market participants who are informed that their responses may be monitored but not subject to penalties for non-compliance will engage in the same degree of dishonest reporting as participants who are not monitored.

Hypothesis 2b:

Online labor market participants who are informed that their responses may be monitored and subject to monetary penalties for non-compliance will engage in less dishonest reporting than participants who are not monitored.

Hypothesis 2c:

Online labor market participants who are informed that their responses may be monitored and subject to monetary penalties for non-compliance will engage in less dishonest reporting than participants who are informed that their responses may be monitored but not subject to monetary penalties for non-compliance.

Personality Differences

A key feature of Bicchieri’s model (2006) is differential sensitivity to social norm activation, whereby individuals differ in their likelihood of meeting the three criteria necessary for activation due to their individual characteristics (see Stevens 2002; Hobson et al. 2011, for empirical evidence of differential sensitivity). Thus, the impact of monitoring with penalties likely depends on workers’ innate sensitivity to social norms (Bicchieri 2006). Specifically, since workers who “naturally” are sensitive to social norms are relatively unlikely to act unethically regardless of the presence of penalties for unethical behavior, we expect penalties to reduce unethical behavior to a greater extent for workers who are relatively insensitive to social norms. For some people, it may be sufficient to believe that other people expect them to conform to the norm, whereas some individuals may need to believe that other people are willing to punish them for nonconformance (Bicchieri 2006; Blay et al. 2016).

One potentially useful proxy for an individual’s social norm sensitivity is their level of Machiavellianism (Christie and Geis 1970; Verbeke et al. 1996; Rayburn and Rayburn 1996; Bass et al. 1999). In particular, Machiavellianism has been shown to be predictive of unethical behavior (Triki et al. 2015; Hartmann and Maas 2010; Murphy 2012; Majors 2015). Generally, those who score higher on the Machiavellianism scale (high machs) are more self-centered, individualistic, and are less likely to engage in ethical behavior compared to those who score lower on the Machiavellianism scale (low machs) (Kish-Gephart et al. 2010; Chen and Tang 2013; Triki et al. 2015). For example, high machs are more likely to use questionable tactics during interviews (Lopes and Fletcher 2004; Hogue et al. 2013) and to have a favorable attitude toward cheating (Bloodgood et al. 2010), including plagiarism (Quah et al. 2012). High machs are also less likely to report or question unethical behavior (Bass et al. 1999; Pope 2005; Dalton and Radtke 2013) or support corporate ethics (Shafer and Simmons 2008).Footnote 8 These findings suggest that high machs are less sensitive to social norms than low machs. Thus, consistent with social norm activation theory (Bicchieri 2006; Blay et al. 2016), we predict that high machs generally will report less honestly than low machs but that monitoring with penalties will reduce unethical behavior in online settings to a greater extent for high versus low machs. Thus, we predict both main and interactive effects of Machiavellianism.

Hypothesis 3a:

Online labor market participants who rate low in Machiavellianism will display significantly lower rates of dishonest reporting than online labor market participants who rate high in Machiavellianism.

Hypothesis 3b:

Monitoring with explicit penalties will deter dishonest reporting behavior to a greater extent among online labor market participants who rate high in Machiavellianism relative to online labor market participants who rate low in Machiavellianism.

Experimental Design

To test our hypotheses, we conduct a 2 × 3 × 2 between-participants experiment within an online labor market. In the experimental task, participants complete arithmetic-based puzzles and self-report their performance on the task for compensation.
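The factorial structure can be made concrete with a short sketch. The following is an illustration only, not the authors' materials: it enumerates the six cells formed by the two manipulated factors and applies a median split to a set of scale scores to form the third, measured factor. The level labels, the example scores, and the rule that scores strictly above the median count as "high" are all assumptions, since the text does not state how ties at the median are handled.

```python
from itertools import product
from statistics import median

# Level labels are hypothetical names for the conditions described in the text.
CODE_LEVELS = ("no_code", "code")
MONITORING_LEVELS = ("none", "monitoring_no_penalty", "monitoring_with_penalty")

# The two manipulated factors yield 2 x 3 = 6 assignment cells.
MANIPULATED_CELLS = list(product(CODE_LEVELS, MONITORING_LEVELS))


def median_split(scores):
    """Label each score 'high' if strictly above the sample median, else 'low'.

    Treating ties as 'low' is an assumption made for this sketch.
    """
    cutoff = median(scores)
    return ["high" if s > cutoff else "low" for s in scores]


# Hypothetical scale scores, for illustration only.
example_scores = [72, 85, 90, 101, 110, 96]
example_groups = median_split(example_scores)
```

Crossing the six manipulated cells with the two Machiavellianism groups gives the twelve cells of the 2 × 3 × 2 design. Because the third factor is measured rather than assigned, cell sizes on that dimension need not be equal.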

Participants

We recruited participants (n = 180) from Amazon’s Mechanical Turk, an online service that allows individuals (i.e., “workers”) to complete various human intelligence tasks for compensation. Based on self-reports, participants (38.9% female) were on average 33.5 years old and educated (58.9% have an associate degree or higher). The majority of participants currently were employed (81.1%), with 41.7% indicating an average salary between $20,000 and $50,000 per year. Several prior studies examine the characteristics of Mechanical Turk workers and find that these workers are more diverse and representative of the US population than undergraduate students (Paolacci et al. 2010; Horton et al. 2011; Buhrmester et al. 2011; Brink and Lee 2015; Brink and White 2015). We chose Mechanical Turk as it is heavily utilized and offers significant cost and speed benefits. In addition, Mechanical Turk contains all of the features of online labor markets that are most pertinent to our theoretical development (i.e., lack of an established culture, anonymous and transient workers, one-time short-term contracting).

Experimental Task

The experimental task is a series of arithmetic-based puzzles utilized in several prior psychology studies (e.g., Mazar and Ariely 2006; Gino et al. 2009). Participants are presented with a 3 × 4 matrix of numbers and are asked to find two numbers within the given twelve numbers that sum to 10. All provided numbers are stated in hundredths; an example of two numbers within a matrix that sum to 10 is 3.71 and 6.29. Within our adaptation of this task, participants potentially can view up to 20 matrices and are informed that they have 4 min to find as many pairs as possible. Prior to beginning, participants are informed that they will be paid $0.50 for each pair of numbers summing to 10 that they identify within the given 4-min period and that their compensation will be based on their self-reported number of correctly identified pairs. It is important to note that the results of prior research indicate that it will be exceedingly difficult to complete 20 matrices within a 4-min period. Also, it is important to note that of the 20 matrices participants potentially could view, only 15 contained a pair of numbers that sum to 10, i.e., five of the matrices are unsolvable.
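As a rough illustration of the puzzle and the payment rule, the sketch below searches a 3 × 4 matrix for a pair of entries summing to 10 and computes pay from a self-reported count at the stated $0.50 per pair. Apart from the example pair 3.71 and 6.29, every matrix entry is invented for this sketch, and the function names are hypothetical; nothing here is drawn from the authors' actual stimuli.

```python
from decimal import Decimal
from itertools import combinations

TARGET = Decimal("10")
PAY_PER_PAIR = Decimal("0.50")  # per-pair rate stated in the text


def find_pair(matrix):
    """Return the first pair of entries summing to TARGET, or None if unsolvable.

    Entries are parsed as Decimal so that hundredths add exactly.
    """
    cells = [Decimal(value) for row in matrix for value in row]
    for a, b in combinations(cells, 2):
        if a + b == TARGET:
            return (a, b)
    return None


def payout(self_reported_pairs):
    """Compensation depends only on the self-reported count, not on verified pairs."""
    return PAY_PER_PAIR * self_reported_pairs


# Hypothetical matrices; only 3.71 and 6.29 come from the text.
SOLVABLE = [
    ["3.71", "1.82", "4.67", "2.91"],
    ["5.06", "4.81", "6.29", "3.05"],
    ["5.82", "1.69", "4.28", "6.36"],
]
UNSOLVABLE = [
    ["3.71", "1.82", "4.67", "2.91"],
    ["5.06", "4.81", "6.19", "3.05"],
    ["5.82", "1.69", "4.28", "6.36"],
]
```

Under these assumptions, a participant who honestly found a pair in all 15 solvable matrices would earn `payout(15)`, that is, $7.50, so any self-report above 15 cannot reflect actual performance.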

The allotted 4-min period begins when participants are presented with the first matrix. Participants advance from each matrix to the next by clicking either the “Found It” button, indicating that they found the pair of numbers within the given matrix that sums to 10, or the “Skip (Can Not Find It)” button if they cannot find such a pair. Participants are not asked to indicate which two numbers they found; therefore, selecting the “Found It” button does not necessarily indicate that the participant actually found two numbers summing to 10, thus permitting dishonest reporting. After completing the task, participants are asked to report the total number of pairs of numbers summing to 10 that they identified during the 4-min period. They then complete a series of questions, including manipulation checks and supplemental questions that measure their ethical beliefs about misreporting. The experiment concludes by asking participants to complete the Machiavellianism scale (Christie and Geis 1970) and demographic questions.Footnote 9

Independent Variables

The experiment manipulates three independent variables. First, the experiment manipulates codes of conduct between participants at two levels, treatment (hereafter Code) and control (hereafter No Code). In the Code condition, prior to beginning, participants are presented with an adaptation of a code of conduct utilized in a major US university business school that emphasizes integrity, responsibility, and respect (see Appendix 1). Integrity is described in direct relation to honesty. Responsibility is described as professional and ethical conduct, which should preclude misreporting performance (although this was not formally stated in the code). Respect is described as one’s treatment of others, which should preclude misreporting for personal gain at one’s employer’s expense (although this was not formally stated in the code). After participants are presented with the code of conduct, they are asked to indicate their agreement by certifying the following statement: “I have read, understand, and agree to the above statement.” This certification strengthens the manipulation and thereby biases against support for our null hypothesis (cf. Davidson and Stevens 2013). In the No Code condition, participants are not presented with, and are not asked to attest to, any codes of conduct.

Second, the experiment manipulates Monitoring between participants at three levels: Monitoring—No Penalty, Monitoring—Penalty, and No Monitoring. In both Monitoring conditions, participants read the following statement, “Your performance in this task may be monitored. We will audit a randomly selected small percentage of responses.” In the Monitoring—No Penalty condition, they also read the following statement, “Your payment will NOT be adversely affected if your response is chosen for audit and you do not report honestly.” In the Monitoring—Penalty condition, they also read the following statement, “Your payment will be adversely affected if your response is chosen for audit and you do not report honestly.”Footnote 10 In the No Monitoring condition, participants are not informed that responses will be monitored.

Our final independent variable is participants’ level of Machiavellianism, measured as the average of the 20-question MACH IV scale (Christie and Geis 1970; see Appendix 2). We classified participants as either High Mach or Low Mach based on a median split.
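The scoring and classification step can be sketched as follows. This is an illustration only: the scores are made up, the function names are hypothetical, and the sketch assumes that any reverse-keyed MACH IV items have already been recoded. Scores equal to the median are labeled High Mach, matching the rule given in the Results section (Low Mach if below the median, High Mach otherwise).

```python
from statistics import mean, median


def mach_score(item_responses):
    """Average the 20 item responses (assumed already reverse-coded)."""
    return mean(item_responses)


def median_split(scores):
    """Label each score Low Mach if below the sample median, else High Mach."""
    cutoff = median(scores)
    return ["Low Mach" if s < cutoff else "High Mach" for s in scores]


# Hypothetical averaged scores for five participants.
scores = [2.10, 2.35, 2.78, 3.05, 3.40]
labels = median_split(scores)
print(labels)
```

A median split discards information about the distance of each score from the cutoff, so a participant just above the median is treated the same as one far above it.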

Dependent Variables

We examine two dependent variables, Found Its and Unsolvables. We measure Found Its as participants’ self-reports of the number of identified pairs of numbers that sum to 10. Although it is possible that subjects could make honest addition errors, given random assignment and consistent with prior research (e.g., Mazar and Ariely 2006; Gino et al. 2009), we assume that in the absence of dishonest reporting, the mean number of correctly identified pairs of numbers that sum to 10 will not significantly differ between experimental conditions. Thus, consistent with prior research, we attribute significant increases in Found Its across experimental conditions to dishonest reporting. As a supplement to this indirect measure and to rule out the possibility of unsuccessful random assignment of ability, we created a direct measure, Unsolvables, defined as the number of times a participant clicked the “Found It” button for one of the five matrices that did not include a pair of numbers that sum to 10.Footnote 11
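As a sketch of how the direct measure could be computed from a click log, the snippet below counts “Found It” clicks on the unsolvable matrices. The matrix positions (3, 6, 8, 10, and 14) are taken from the Notes; the log format and the function name are assumptions made for illustration.

```python
# Positions of the unsolvable matrices, as listed in the Notes.
UNSOLVABLE = frozenset({3, 6, 8, 10, 14})


def count_unsolvables(found_it_clicks):
    """Count distinct unsolvable matrices on which "Found It" was clicked.

    found_it_clicks: iterable of matrix positions (1-20) where the
    participant clicked "Found It" (hypothetical log format).
    """
    return len(UNSOLVABLE.intersection(found_it_clicks))


# Hypothetical participant who clicked "Found It" on seven matrices.
clicks = [1, 2, 3, 5, 6, 9, 14]
print(count_unsolvables(clicks))
```

Unlike Found Its, this measure does not rely on the assumption that ability is balanced across conditions, because no honest participant can find a pair in these matrices.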

Results

Manipulation Checks

After participants completed the task, they next completed a series of manipulation check questions. When asked if they were presented with a code of conduct, 98.9% of the participants in the Code condition correctly identified the statement as being true. In addition, 96.7% of the participants in the Monitoring—Penalty condition correctly identified that before they began the task, they were informed that their performance in the task may be monitored, that the experimenter will audit a randomly selected small percentage of responses, and that payment will be adversely affected if their response is chosen for audit and they did not report honestly.Footnote 12

Participants were also asked to indicate their agreement with two statements on a scale from 1 “Strongly Disagree” to 7 “Strongly Agree”. The first question asked participants their agreement with the following:

I felt that the "requester" would check to see if I misreported the number of correct solutions I reported finding compared to the number of matrices in which I selected “Found It”.

Participants in the monitoring conditions were more likely to agree with this statement (M = 5.79) than participants who were not monitored (M = 5.23) (t(178) = 2.30, one-tailed p = 0.012). Additionally, as expected, agreement with this statement did not differ between participants in the monitoring with penalty condition (M = 5.85) and participants in the monitoring without penalty condition (M = 5.73) (t(117) = 0.48, two-tailed p = 0.633).

The second question asked participants their agreement with the following:

I felt that if the “requester” discovered that I misreported the number of correct solutions I reported finding compared to the number of matrices in which I selected “Found It”, I would earn a lower compensation for this task.

Participants in the penalty condition were more likely to agree with this statement (M = 5.72) than participants in the other conditions (M = 4.39) (t(178) = 4.42, one-tailed p < 0.001).

Tests of Hypotheses

Hypothesis 1 is a null hypothesis predicting that online labor market participants who are presented with, and attest to, a code of conduct will engage in the same degree of unethical behavior as participants who are not. To examine this hypothesis, Tables 1 and 2, Panel B, present ANOVAs for the two dependent measures, each showing an insignificant main effect of Code (C) (two-tailed p = 0.538 and 0.783, respectively). Therefore, consistent with our expectations in H1, results indicate that having individuals read and attest to a code of conduct does not reduce the level of dishonest reporting in an online market setting. Likewise, results of a pairwise comparison presented in Table 1, Panel C, indicate that there is no significant difference in the number of Found Its reported by participants who were provided with and attested to the code (M = 7.43) versus participants who were not (M = 7.29, t(178) = 0.16, two-tailed p = 0.871). Similarly, as presented in Table 2, Panel C, there is no significant difference in the number of Unsolvables reported by participants who were provided with the code (M = 1.27) versus participants who were not (M = 1.31, t(178) = 0.16, two-tailed p = 0.871). Thus, consistent with our theoretical development, having participants read and attest to a code of conduct, in isolation, appears to be an ineffective method of deterring dishonest reporting in an online labor market setting.

Hypothesis 2a also is a null hypothesis predicting that online labor market participants who are informed that their responses may be monitored but not subject to penalties will engage in the same degree of unethical behavior as participants who are not monitored. Tables 1 and 2, Panel B, show a significant effect of Monitoring on Found Its and a marginally significant effect on Unsolvables (two-tailed p = 0.007 and 0.059, respectively). Recall that monitoring has three levels: (1) No Monitoring, (2) Monitoring—No Penalty, and (3) Monitoring—Penalty. Therefore, the appropriate way to examine the second hypothesis is to perform pairwise comparisons between the No Monitoring and the Monitoring—No Penalty treatment conditions. Tables 1 and 2, Panel C, show that results, consistent with our hypothesis, fail to reject the null. Specifically, there is no significant difference in the number of Found Its reported by participants who were informed that their responses would be monitored but not be subject to monetary penalties for dishonest reporting (M = 8.56) versus participants who were not informed that their responses would be monitored (and thus who were not informed of any penalties) (M = 8.00, t(118) = 0.49, two-tailed p = 0.628). Similarly, there is no significant difference in the number of Unsolvables reported by participants who were informed that their responses would be monitored but not subject to penalties (M = 1.42) versus participants who were not informed that their responses would be monitored (M = 1.61, t(118) = 0.51, two-tailed p = 0.612). Thus, consistent with our theoretical development, informing participants that their responses will be monitored for accuracy, without penalties for dishonesty, also appears to be an ineffective method of discouraging dishonest reporting in an online labor market setting.

Hypothesis 2b predicts that online labor market participants who are informed that their responses may be monitored and subject to monetary penalties for non-compliance engage in less unethical behavior than participants who are not monitored. Tables 1 and 2, Panel C, show that results are consistent with our hypothesis. Specifically, there is a significant difference in the number of Found Its reported by participants who were informed that their responses would be monitored and subject to monetary penalties for dishonest reporting (M = 5.53) versus participants who were not informed that their responses would be monitored (and thus who were not informed of any penalties) (M = 8.00, t(119) = 2.49, one-tailed p = 0.007). Similarly, there is a significant difference in the number of Unsolvables reported by participants who were informed that their responses would be monitored and subject to penalties (M = 0.83) versus participants who were not informed that their responses would be monitored (M = 1.61, t(119) = 2.51, one-tailed p = 0.007).

Hypothesis 2c predicts that online labor market participants who are informed that their responses may be monitored during a task and subject to monetary penalties for non-compliance engage in less unethical behavior than participants who are informed that their responses will be monitored but not subject to monetary penalties for non-compliance. Tables 1 and 2, Panel C, show that results are consistent with our hypothesis. Specifically, there is a significant difference in the number of Found Its reported by participants who were informed that their responses would be monitored and subject to monetary penalties for dishonest reporting (M = 5.53) versus participants who were informed that their responses would be monitored but not be subject to monetary penalties for dishonest reporting (M = 8.56, t(117) = 2.90, one-tailed p = 0.003). Similarly, there is a significant difference in the number of Unsolvables reported by participants who were informed that their responses would be monitored and subject to penalties (M = 0.83) versus participants who were informed that their responses would be monitored but not subject to monetary penalties for dishonest reporting (M = 1.42, t(117) = 1.86, one-tailed p = 0.033).Footnote 13 Thus, consistent with our theoretical development, informing participants that their responses will be monitored for honesty, with monetary penalties for dishonesty, appears to be an effective method of discouraging dishonest reporting in an online labor market setting.
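The degrees of freedom reported for the three pairwise comparisons (118, 119, and 117) imply the size of each Monitoring condition. The sketch below recovers those sizes under two assumptions that the text does not state explicitly: each comparison is a pooled-variance two-sample t-test, so its degrees of freedom equal n1 + n2 − 2, and no responses are missing. The function name is hypothetical.

```python
def sizes_from_pairwise_df(df_ab, df_ac, df_bc):
    """Recover three group sizes from the df of their pairwise t-tests.

    Assumes pooled-variance tests, where df = n1 + n2 - 2.
    """
    s_ab, s_ac, s_bc = df_ab + 2, df_ac + 2, df_bc + 2  # pairwise sample sizes
    a = (s_ab + s_ac - s_bc) // 2
    b = (s_ab + s_bc - s_ac) // 2
    c = (s_ac + s_bc - s_ab) // 2
    return a, b, c


# a = No Monitoring, b = Monitoring-No Penalty, c = Monitoring-Penalty
no_monitoring, no_penalty, penalty = sizes_from_pairwise_df(
    df_ab=118,  # No Monitoring vs. Monitoring-No Penalty (H2a)
    df_ac=119,  # No Monitoring vs. Monitoring-Penalty (H2b)
    df_bc=117,  # Monitoring-No Penalty vs. Monitoring-Penalty (H2c)
)
print(no_monitoring, no_penalty, penalty)
```

Under these assumptions the three sizes sum to the reported sample of 180, which serves as a consistency check on the reported degrees of freedom.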

Machiavellianism

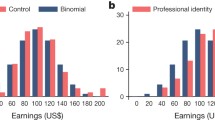

The Machiavellianism scores in our sample range from 1.40 to 4.25 with a mean of 2.71 (SD = 0.54) and a median of 2.78, both slightly below the scale midpoint of 3, indicating that our MTurk sample tended to somewhat disagree with the statements characteristic of a Machiavellian personality. The 25th, 50th, and 75th percentiles of the Machiavellianism scores were 2.35, 2.78, and 3.05, respectively. See Fig. 1 for a graphical representation. We assessed the reliability of the MACH IV scale using Cronbach’s alpha (α = 0.848), which indicated acceptable scale reliability. Results of a one-way ANOVA confirm that Machiavellianism did not differ significantly between experimental conditions. We classified participants as Low Mach if their average MACH IV score was below the median and as High Mach otherwise.Footnote 14
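For readers unfamiliar with the reliability statistic, the following is a minimal sketch of Cronbach’s alpha using the standard formula α = k/(k − 1) × (1 − Σ item variances / variance of total scores). The response data are made up and use three items rather than twenty; the function name is hypothetical, and the sketch does not reproduce the reported value of 0.848.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of participants' item responses.

    rows: one list per participant, one entry per scale item.
    """
    k = len(rows[0])  # number of items
    item_variances = [variance(column) for column in zip(*rows)]
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical responses: four participants, three items.
responses = [
    [2, 3, 3],
    [4, 4, 5],
    [1, 2, 2],
    [5, 4, 4],
]
alpha = cronbach_alpha(responses)
print(round(alpha, 3))
```

Alpha approaches 1 as the items move together across participants, which is why it is read as a measure of internal consistency.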

Consistent with H3a, results indicate that Low Mach participants display significantly lower rates of dishonest reporting than High Mach participants. As presented in Tables 1 and 2, Panel B, there is a significant main effect of the MACH IV variable for both dependent measures (two-tailed p = 0.002 and 0.004, respectively). Specifically, the number of Found Its reported is significantly lower among Low Mach participants (M = 6.11) than High Mach participants (M = 8.61, t(178) = 2.88, one-tailed p = 0.003). Similarly, the number of Unsolvables reported is significantly lower among Low Mach participants (M = 0.91) than High Mach participants (M = 1.67, t(178) = 2.83, one-tailed p = 0.003).

Hypothesis 3b predicts that monitoring with explicit penalties will be more effective in deterring unethical behavior (relative to participants who are not monitored, or monitored without penalties) for online labor market participants who rate high in Machiavellianism relative to online labor market participants who rate low in Machiavellianism. Because the pattern of predicted results is an ordinal interaction, conventional ANOVA terms may not be appropriate to analyze the interaction. Following Buckless and Ravenscroft (1990), we utilized contrast analysis to test for the significance of the interaction, since the predicted pattern of results is nonsymmetrical. Using the contrast weights (2, 2, 1, −1.5, −1.5, −2) presented in Fig. 2, Panel A, the overall pattern of results is consistent with H3b using both our dependent measures (t(174) = 3.78, one-tailed p < 0.001 and t(174) = 3.41, one-tailed p < 0.001, respectively).Footnote 15
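A planned contrast of this kind can be sketched as follows, using the standard statistic t = Σ wᵢMᵢ / √(MSE × Σ wᵢ²/nᵢ) with N − k degrees of freedom. Only the contrast weights come from the text. The cell means, cell sizes, and mean squared error below are hypothetical, the assignment of weights to cells is shown only in Fig. 2, Panel A, so the cell order here is an assumption, and the function names are illustrative.

```python
from math import sqrt

WEIGHTS = (2, 2, 1, -1.5, -1.5, -2)  # contrast weights reported in the text


def contrast_t(means, ns, mse, weights=WEIGHTS):
    """t statistic for a planned contrast across independent cells."""
    if abs(sum(weights)) > 1e-9:
        raise ValueError("contrast weights must sum to zero")
    estimate = sum(w * m for w, m in zip(weights, means))
    std_error = sqrt(mse * sum(w * w / n for w, n in zip(weights, ns)))
    return estimate / std_error


def contrast_df(total_n, n_cells):
    """Error degrees of freedom for the contrast: N minus number of cells."""
    return total_n - n_cells


# Hypothetical inputs, for illustration only (not the study's data).
means = [9.0, 9.0, 8.0, 6.0, 6.0, 5.0]
ns = [30] * 6
mse = 36.0

t_value = contrast_t(means, ns, mse)
print(round(t_value, 2), contrast_df(180, 6))
```

With 180 participants in six Machiavellianism by Monitoring cells, the error degrees of freedom are 174, which matches the degrees of freedom reported for the contrast.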

Fig. 2 (a) Predicted pattern of results with Buckless and Ravenscroft (1990) contrast codes; (b) results using “Found Its” as the dependent measure; (c) results using “Unsolvables” as the dependent measure

Additional pairwise comparisons and descriptive statistics reported in Table 1 indicate that the nature of the interaction is consistent with H3b. In particular, High Mach participants reported significantly fewer Found Its when penalties were imposed (M = 5.81) compared to both when monitoring was present but penalties were absent (M = 11.00, t(55) = 3.18, one-tailed p = 0.001) and when neither monitoring nor penalties were present (M = 9.36, t(62) = 2.33, one-tailed p = 0.012). As expected, there is no significant difference between the No Monitoring and Monitoring—No Penalty conditions for High Mach participants (t(57) = 0.96, two-tailed p = 0.342). However, the number of Found Its reported by Low Mach participants when penalties were imposed (M = 5.24) does not differ significantly from either when monitoring was present but penalties were absent (M = 6.64, t(60) = 1.12, one-tailed p = 0.135) or when neither monitoring nor penalties were present (M = 6.39, t(55) = 0.98, one-tailed p = 0.167).Footnote 16 This may be because Low Mach participants are less likely to engage in unethical behavior, regardless of control mechanisms. Consistent with Bicchieri’s (2006) model, participants with high social norm sensitivity (e.g., Low Machs) have high normative expectations, and the threat of punishment for nonconformance is not necessary as a control mechanism.

Examining this pattern of results further, results indicate that in the absence of monitoring for dishonest reporting, Low Mach participants display significantly lower rates of dishonest reporting than High Mach participants. Specifically, in No Monitoring, the number of Found Its reported is significantly lower among Low Mach participants (M = 6.39) than High Mach participants (M = 9.36, t(59) = 1.944, one-tailed p = 0.029). Similarly, in No Monitoring, the number of Unsolvables reported is marginally significantly lower among Low Mach participants (M = 1.25) than High Mach participants (M = 1.91, t(59) = 1.34, one-tailed p = 0.093).

Likewise, under monitoring for dishonest reporting without penalties, Low Mach participants display significantly lower rates of dishonest reporting than High Mach participants. Specifically, in Monitoring—No Penalty, the number of Found Its reported is significantly lower among Low Mach participants (M = 6.64) than High Mach participants (M = 11.00, t(57) = 2.68, one-tailed p = 0.005). Further, in Monitoring—No Penalty, the number of Unsolvables reported is significantly lower among Low Mach participants (M = 0.88) than High Mach participants (M = 2.12, t(57) = 2.44, one-tailed p = 0.009).

Most interestingly, though, results indicate that when there is monitoring for dishonest reporting in the presence of penalties, High Mach participants display rates of dishonest reporting that are statistically similar to those of Low Mach participants. Specifically, in Monitoring—Penalty, the number of Found Its reported is not significantly different between Low Mach participants (M = 5.24) and High Mach participants (M = 5.81, t(58) = 0.46, two-tailed p = 0.648). Similarly, in Monitoring—Penalty, the number of Unsolvables reported is not significantly different between Low Mach participants (M = 0.62) and High Mach participants (M = 1.03, t(58) = 1.13, two-tailed p = 0.264). It therefore appears that High Mach participants were consistently more likely to engage in dishonest self-serving behavior than Low Mach participants were, unless there were potential penalties for dishonest reporting. That is, while High Mach participants report more dishonestly than Low Mach participants in the absence of penalties for dishonest reporting, imposing such penalties effectively eliminates this difference. Overall, we find that of the three examined methods, only imposing penalties for dishonest reporting increases reporting honesty in an online labor market setting, and it is more effective for individuals who are most likely to behave dishonestly, i.e., High Machs.

Supplemental Analysis—Social Norm Sensitivity

As discussed in Section II, we propose that individuals’ level of Machiavellianism can function as a useful proxy for their sensitivity to social norms. This is important because Bicchieri’s (2006) model indicates that ethical behavior depends on individuals’ sensitivity to social norms and that the effects of monitoring with penalties likely depend on individuals’ sensitivity to social norms. To examine these assertions, we analyzed and compared participants’ Machiavellianism ratings and their sensitivity to the social norm of honest reporting, as measured by participants’ responses to the following two questions (using a Likert scale with end points 1 = “Strongly Disagree” and 7 = “Strongly Agree”):

I believe it was unethical to indicate that I “Found It” on the matrices without actually finding two numbers that summed to 10.

I believe it was unethical to indicate that I identified more correct solutions than I actually did in order to earn higher compensation for this task.

Results of this analysis support the notion that individuals’ level of Machiavellianism functions as a useful proxy for their sensitivity to social norms. Specifically, Low Mach participants indicated that it is more unethical to indicate “Found It” without actually finding two numbers that summed to 10 (M = 6.37) than did High Mach participants (M = 5.78) (t(174) = 2.58, two-tailed p = 0.011). Similarly, Low Mach participants indicated that it is more unethical to indicate more correct solutions than actually achieved (M = 6.44) than did High Mach participants (M = 5.92) (t(178) = 2.38, two-tailed p = 0.018).

Further supporting Bicchieri’s (2006) model, the presence of an economic penalty activated the social norm for those participants with low social norm sensitivity (High Machs). In particular, High Mach participants reported that it is more unethical to indicate “Found It” without actually finding two numbers that summed to 10 in the presence of economic penalties (M = 6.45) than in the absence of penalties (M = 5.52) (t(54) = 2.85, two-tailed p = 0.006). Similarly, High Mach participants reported that it is more unethical to indicate more correct solutions than actually achieved in the presence of economic penalties (M = 6.42) than in the absence of penalties (M = 5.67) (t(51) = 2.21, two-tailed p = 0.032). This shows that for the High Mach participants, the introduction of potential economic penalties enhances the normative expectation for honesty.

Next, we conducted additional analyses to determine whether our independent variables affected participants’ sensitivity to the social norm of honest reporting. The pattern of results is similar to that reported for our primary dependent measures. Specifically, participants who were monitored with economic penalties indicated that it is more unethical to indicate “Found It” without actually finding two numbers that summed to 10 (M = 6.60) than did either participants who were not monitored (M = 5.70) (t(84) = 3.68, two-tailed p < 0.001) or participants who were monitored but without penalties (M = 5.92) (t(79) = 3.68, two-tailed p = 0.009). Similarly, participants who were subject to monitoring with explicit monetary penalties indicated that it is more unethical to indicate more correct solutions than actually achieved (M = 6.62) than did either participants who were not monitored (M = 5.92) (t(84) = 2.96, two-tailed p = 0.004) or participants who were monitored but without penalties (M = 6.02) (t(79) = 2.42, two-tailed p = 0.018). There are not, however, significant differences in participants’ responses to either question when participants were not monitored, compared to when participants were monitored but without penalties (all p values > 0.50).

Last, consistent with Bicchieri’s (2006) model, participants’ responses to the two questions above are significantly negatively correlated with the number of Found Its reported (r = −0.221, two-tailed p = 0.003 and r = −0.206, two-tailed p = 0.006, respectively) and the number of Unsolvables reported (r = −0.224, two-tailed p < 0.001 and r = −0.244, two-tailed p = 0.001, respectively). Results of this analysis indicate that monitoring with penalties for misreporting activates the social norm for honesty, which reduces dishonest reporting. Consistent with this assertion, results of Sobel tests for mediation indicate that participants’ beliefs about how unethical it is to indicate “Found It” without actually having found the correct pair of numbers partially mediate the effect of monitoring with penalties on reporting of “Found Its” (z = 2.23, p = 0.026). Further, results of Sobel tests for mediation indicate that participants’ beliefs about how unethical it is to indicate more correct solutions than actually achieved partially mediate the effect of monitoring with penalties on the number of Unsolvables reported (z = 2.16, p = 0.031).Footnote 17
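The Sobel statistic can be sketched with the standard first-order formula z = ab / √(b²·SEₐ² + a²·SE_b²), where a is the path from the treatment to the mediator and b is the path from the mediator to the outcome. The path coefficients and standard errors are not reported in the text, so the values below are hypothetical, and whether this exact variant of the test was used is an assumption. The second function converts a z statistic to a two-tailed p value and, applied to the reported z values, agrees with the reported p values.

```python
from math import erfc, sqrt


def sobel_z(a, se_a, b, se_b):
    """First-order Sobel z for the indirect effect a * b."""
    return (a * b) / sqrt(b * b * se_a * se_a + a * a * se_b * se_b)


def two_tailed_p(z):
    """Two-tailed p value for a standard normal test statistic."""
    return erfc(abs(z) / sqrt(2))


# Hypothetical path estimates, for illustration only.
z_example = sobel_z(a=0.5, se_a=0.1, b=0.4, se_b=0.1)
print(round(z_example, 2))

# Reported z statistics from the text.
print(round(two_tailed_p(2.23), 3))
print(round(two_tailed_p(2.16), 3))
```

The complementary error function from the standard library gives the normal tail probability directly, so no external statistics package is needed for this check.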

Supplemental Analysis—Age and Gender

Participants were asked to answer a number of other supplemental questions to explore what other factors our independent variables may impact and/or what factors may impact the dependent measures. Correlation analysis identified four additional variables that are significantly correlated with the dependent measures. The first two are age and gender. There are significant negative correlations between a person’s age and the number of Found Its (r = −0.176, two-tailed p = 0.018) and the number of Unsolvables (r = −0.153, two-tailed p = 0.040). This suggests that younger participants were more likely to engage in dishonest reporting, which is consistent with prior literature (e.g., Kohlberg 1969; Kelley et al. 1990; Trevino 1992) but could also reflect differences in mathematical ability across age levels in online labor markets. In addition, females were significantly less likely to report unethically than males, for both the number of Found Its (r = −0.229, two-tailed p = 0.002) and the number of Unsolvables (r = −0.164, two-tailed p = 0.027), consistent with prior literature (e.g., Bernardi and Bean 2008; Christensen et al. 2016). When both age and gender are input into the ANOVA models discussed above, they remain significant; however, the statistical conclusions drawn in hypothesis testing remain unchanged.
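As an illustration of the correlation analysis, the sketch below computes a Pearson correlation and converts it to a t statistic with n − 2 degrees of freedom using t = r√(n − 2) / √(1 − r²). The age and Found Its values are made up, the function names are hypothetical, and applying the conversion with n = 180 assumes that no responses are missing.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic (df = n - 2) for testing whether a correlation is zero."""
    return r * sqrt(n - 2) / sqrt(1 - r * r)


# Hypothetical ages and Found Its counts for six participants.
ages = [22, 27, 31, 38, 45, 52]
found_its = [11, 9, 8, 8, 6, 5]
print(round(pearson_r(ages, found_its), 3))

# Reported age correlation with Found Its, assuming n = 180.
print(round(r_to_t(-0.176, 180), 2))
```

Because the t statistic grows with √(n − 2), a modest correlation can be statistically significant in a sample of this size, so significance here should not be read as a strong association.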

Conclusion

Leveraging social norm activation theory (Bicchieri 2006; Blay et al. 2016), this study examines the efficacy of various methods of deterring unethical behavior in online labor markets. Results indicate that having participants read and attest to a code of conduct and informing participants that their responses will be monitored for accuracy (without any penalties for dishonesty) generally are ineffective in activating norms for honesty and in reducing dishonest reporting. However, informing participants that their responses will be monitored and that penalties will be imposed for dishonest reporting activates norms for honesty and increases honest reporting. Additionally, we demonstrate that such effects depend on individuals’ sensitivity to social norms, as monitoring with penalties is differentially effective based on online workers’ level of Machiavellianism. In fact, while we observe significantly greater dishonest reporting for High versus Low Mach participants in the absence of penalties, this difference is eliminated in the presence of penalties.

This study has implications for both theory and practice. Regarding theory, this study answers Bicchieri’s (2006) call for research on understanding social norm activation in unique social settings, as online labor markets involve remote, anonymous workers who rarely interact in the performance of their duties. Further, this study answers Blay et al.’s (2016) call for research examining how organizational and dispositional factors separately and interactively activate social norms. Specifically, our study confirms the key role of social norm sensitivity in moderating the effects of various methods of activating social norms in online settings, and it demonstrates that individuals’ Machiavellianism ratings (Christie and Geis 1970) can function as a useful proxy for their social norm sensitivity.

Regarding practice, our study has implications for employers in online labor markets, online labor market administrators, and educators. Specifically, when contracting for work that has the potential for reporting dishonesty, employers in online labor markets should consider explicitly informing workers that their work will be monitored and subject to penalties for unethical behavior. Online labor market administrators may also want to consider providing (and perhaps mandating) ethical training for their workers, as creating a strong ethical culture would be quite difficult, if not impossible, due to the idiosyncratic features of online labor markets. Doing so, perhaps in conjunction with a code of conduct, might effectively reduce unethical behavior, thereby easing the burden on employers to monitor and penalize workers. However, future research should examine the efficacy of such actions. Finally, results of our study potentially have implications for online education. For example, many online courses have exams and other assignments with a high potential for unethical student behavior. As in online labor markets, codes of conduct may not be particularly effective in online courses unless the educational institution has a strong ethical culture. Otherwise, instructors may need to develop more sophisticated ways to monitor students and be willing to impose penalties for any identified ethical violations.

This study has several limitations beyond those normally associated with experimental research. First, we examine an online market that does not have an established culture of ethical behavior (which is not to say that the examined market has an established culture of unethical behavior). Thus, our finding that codes of conduct have limited effectiveness may not generalize to online labor markets that have an established culture of ethical behavior and/or provide ethical training to their workers. Second, before beginning the task, we do not inform participants of the amount of the penalty or the percentage of responses that will be audited (beyond saying it will be a small percentage). Thus, it is important to note that our study indicates that non-specified penalties can effectively reduce dishonest reporting. However, the efficacy of penalties could be diminished if online workers consider potential penalties to be immaterial or the audit probability to be extremely low. Future research should examine whether specifying the size of penalties affects the results reported in this study, and if so, how large penalties need to be to reduce dishonest reporting. Third, it is possible that participants were not impacted by honor codes and monitoring without penalties because the participants felt that dishonest behavior does not harm an explicit party. However, this explanation does not detract from the generalizability of our results to the online worker environment, in which the relationship between workers and employers is anonymous. Fourth, we only examine one form of monitoring (i.e., a random audit), and results may not generalize to other forms of monitoring in online labor markets. Finally, we only examine one ethical decision (i.e., reporting honesty), and results may not generalize to other ethical decisions in online labor markets. Despite these limitations, we provide robust evidence on the efficacy of three common methods of deterring unethical behavior in online labor markets.

Notes

In the literature, codes of conduct may be referred to as code of ethics, corporate ethical code, honor code (in education) or other terms. For expositional simplicity, we use the term Code throughout this manuscript.

The recent Wells Fargo scandal, where bank employees allegedly opened bank and credit/debit card accounts for customers without their knowledge or consent, provides an excellent example of how Codes must be paired with a strong ethical culture. These actions stand in stark contrast to the bank’s stated value and Code of Ethics. One of the company’s five shared values in Vision and Values of Wells Fargo is “We value what’s right for our customers in everything we do” and in their Code of Ethics and Business Conduct, they talk about holding themselves to the highest ethical standards (as cited by Verschoor 2016, 20). These Code violations were likely due to the culture created from extremely aggressive sales goals (Verschoor 2016).

We thank an anonymous reviewer for suggesting that Machiavellianism proxies for social norm sensitivity.

Codes have existed for decades and are growing in popularity (Kapstein 2004; Erwin 2011). Codes originated in the aftermath of the political scandals (e.g., Watergate) of the 1970s (Stevens 2008). Another major escalation of the use of Codes occurred in the late 1990s and early 2000s in the aftermath of the accounting scandals (e.g., Enron, WorldCom, Tyco). In fact, Sect. 406 of the Sarbanes–Oxley Act of 2002 requires the disclosure of adoption of a corporate code of ethics (or to provide a rationale for not doing so). However, Codes are not only used where mandated but can be an effective instrument in creating an ethical organizational culture for a wide variety of organizations, including nonpublic companies, non-profits, education, and government agencies (cf. Rezaee et al. 2001).

Mechanical Turk workers do have to agree to a worker agreement. We reviewed the agreement (https://www.mturk.com/mturk/conditionsofuse), and it does not directly discuss ethical behavior or culture. Because it primarily addresses legal matters, its intent does not appear to be to communicate the values or culture of Amazon or its online labor market to workers, as a Code does.

We made this design choice to isolate the effects of imposing penalties because without this statement, participants may have assumed there would be penalties, since their performance was being monitored. However, we expect such assumptions to be rarely made by workers as online labor markets, such as MTurk, encourage employers to be extremely detailed in laying out the terms of the employment contract, including anything that could adversely affect the workers’ pay. Nevertheless, future research examining the effectiveness of monitoring without mention of the lack or presence of penalties would be beneficial.

See Wakefield (2008) for an examination of the factors associated with Machiavellianism within the accounting profession.

In addition to the measures described, this study also captured the participants’ authoritarian personalities using a scale developed by Rigby (1984). However, the scale reliability level was not appropriate for further statistical analysis.

We randomly audited 10% of responses within the two monitoring conditions and planned to impose a penalty of 50% of total pay for misreporting for those in the penalty condition. However, of the 12 participants selected for audit, we did not identify any dishonest reporting using this measure.

Participants were presented all twenty matrices in the same order. Matrices 3, 6, 8, 10, and 14 are unsolvable.

We used the same question for Monitoring—No Penalty, and only 36% of participants indicated the statement was false, raising concern about manipulation failure. However, responses to another question give us confidence that this question was simply poorly worded: the participants who failed were likely more focused on the first part of the question, about their performance being monitored, than on the second part, about there being a penalty. Specifically, participants in this condition were significantly less likely to believe their compensation would be penalized for misreporting than participants in Monitoring—Penalty, using the Likert scale question discussed below (4.08 vs. 5.72, respectively; t(117) = 4.71, one-tailed p < 0.001). Most importantly, a belief that there would be both monitoring and penalties (i.e., what the “failures’” responses to this question indicate) biases us against finding results, as it would strengthen the Monitoring—No Penalty manipulation, making us more likely to reject H2a (null) and less likely to find support for H3b.

As also presented in the ANOVAs in Tables 1 and 2, Panel B, there is no significant interaction between the presence or absence of a code and our three levels of monitoring and penalties (two-tailed p = 0.597 and 0.638, respectively). This suggests that the presence of a code does not moderate the effect of penalties on decreasing unethical behavior.

Robustness checks were performed to ensure that the median split of participants into High/Low Mach groups was appropriate and did not alter the results discussed in the paper. Separating the participants into High/Low Mach groups based on a mean split (2.71) or the midpoint of the MACH IV scale (3), or using the MACH IV score as a continuous variable, did not affect the significance of the results presented in the body of the paper.

This contrast coding tests the three main assumptions embedded within the hypothesis. (1) Dishonesty is higher for High Mach relative to Low Mach (evidenced by the High Mach contrast weights being uniformly higher than the Low Mach contrast weights). (2) Monitoring—No Penalty will be ineffective, relative to No Monitoring, for both levels of Mach (evidenced by the contrast weights on No Monitoring and Monitoring—No Penalty being equal). (3) Finally, Monitoring—Penalty will be more effective for High Mach (evidenced by the reduction in dishonesty between Monitoring—Penalty and the other two cells being greater in High Mach than Low Mach). For robustness, we tested three additional sets of contrast weights that model these assumptions (+3, +3, +1, −2.5, −2.5, −2; +1.75, +1.75, +0.5, −2.5, −2.5, −2; and +2.5, +2.5, +0.5, −1.5, −1.5, −2.5) and obtained similar results (all p < 0.001).

The pattern of results is consistent when using Unsolvables as the primary dependent measure, with one exception: the number of Unsolvables reported by Low Mach participants in the penalty condition (M = 0.62) is statistically different from the number reported by Low Mach participants in the no-monitoring condition (M = 1.25; t(55) = 1.727, one-tailed p = 0.045).

For both tests for partial mediation, we combined the no monitoring and monitoring without penalties conditions into a single “no penalties” condition.

References

Akerlof, G. (1970). The market for “lemons”: Quality uncertainty and the market mechanism. The Quarterly Journal of Economics, 84(3), 488–500.

Antle, R. (1984). Auditor independence. Journal of Accounting Research, 22, 1–20.

Bass, K., Barnett, T., & Brown, G. (1999). Individual difference variables, ethical judgments, and ethical behavioral intentions. Business Ethics Quarterly, 9(2), 183–205.

Bernardi, R. A., & Bean, D. F. (2008). Establishing a standardized sample for accounting students’ DIT scores: A meta-analysis. Research on Professional Responsibility and Ethics in Accounting, 12, 1–21.

Bicchieri, C. (2006). The grammar of society: The nature and dynamics of social norms. New York, NY: Cambridge University Press.

Blay, A. D., Gooden, E. S., Mellon, M. J., & Stevens, D. E. (2016). The usefulness of social norm theory in empirical business ethics research: A review and suggestions for future research. Journal of Business Ethics. doi:10.1007/s10551-016-3286-4.

Bloodgood, J. M., Turnley, W. H., & Mudrack, P. E. (2010). Ethics instruction and the perceived acceptability of cheating. Journal of Business Ethics, 95(1), 23–37.

Brasel, K., Doxey, M. M., Grenier, J. H., & Reffett, A. (2016). Risk disclosure preceding negative outcomes: The effects of reporting critical audit matters on judgments of auditor liability. The Accounting Review, 91(5), 1345–1362.

Brink, W., & Lee, L. (2015). The effect of tax preparation software on tax compliance: A research note. Behavioral Research in Accounting, 27(1), 121–135.

Brink, W., & White, R. (2015). The effects of a shared interest and regret salience on tax evasion. The Journal of the American Taxation Association, 37(2), 109–135.

Buckless, F. A., & Ravenscroft, S. P. (1990). Contrast coding: A refinement of ANOVA in behavioral analysis. The Accounting Review, 65(4), 933–945.

Buhrmester, M., Kwang, T., & Gosling, S. (2011). Amazon’s Mechanical Turk: A new source of inexpensive, yet high-quality, data? Perspectives on Psychological Science, 6(1), 3–5.

Chen, Y., & Tang, T. L. (2013). The bright and dark sides of religiosity among university students: Do gender, college major, and income matter? Journal of Business Ethics, 115(3), 531–553.

Christensen, A. L., Cote, J., & Latham, C. K. (2016). Insights regarding the applicability of the defining issues test to advance ethics research with accounting students: A meta-analytic review. Journal of Business Ethics, 133, 141–163.

Christie, R., & Geis, F. L. (1970). Studies in Machiavellianism. New York, NY: Academic Press.

Cialdini, R., & Trost, M. (1998). Social influence: Social norms, conformity, and compliance. In D. T. Gilbert, S. T. Fiske, & G. Lindzey (Eds.), The handbook of social psychology (Vol. 2, pp. 151–192). Boston: Oxford University Press.

Covey, M. K., Saladin, S., & Killen, P. J. (1989). Self-monitoring, surveillance, and incentive effects on cheating. The Journal of Social Psychology, 129(5), 673–679.

Craft, J. (2013). A review of the empirical ethical decision-making literature: 2004–2011. Journal of Business Ethics, 117(2), 221–259.

Cressey, D. R. (1973). Other people’s money. Montclair: Patterson Smith.

Dalton, D., & Radtke, R. R. (2013). The joint effects of Machiavellianism and ethical environment on whistle-blowing. Journal of Business Ethics, 117(1), 153–172.

Davidson, B. I., & Stevens, D. E. (2013). Can a code of ethics improve manager behavior and investor confidence? An experimental study. The Accounting Review, 88(1), 51–74.

Dellaportas, S. (2006). Making a difference with a discrete course on accounting ethics. Journal of Business Ethics, 65(4), 391–404.

Dobson, J. (2013). Mechanical Turk: Amazon’s new underclass. The Huffington Post 19 February. Web. 25 March 2014. http://www.huffingtonpost.com/julian-dobson/mechanical-turk-amazons-underclass_b_2687431.html.

Erwin, P. (2011). Corporate codes of conduct: The effects of code content and quality on ethical performance. Journal of Business Ethics, 99, 535–548.

Farrell, A., Grenier, J., & Leiby, J. (2017). Scoundrels or stars? Theory and evidence on the quality of workers in online labor markets. The Accounting Review, 92(1), 93–114.

Folbre, N. (2013). The unregulated work of Mechanical Turk. The New York Times 18 March. Web. 25 March 2014.

Gino, F., Ayal, S., & Ariely, D. (2009). Contagion and differentiation in unethical behavior. Psychological Science, 20(3), 393–397.

Glazer, E. (2011). Serfing the web: Sites let people farm out their chores. The Wall Street Journal 28 November. Web. 26 March 2014.

Goodman, J., Cryder, C., & Cheema, A. (2012). Data collection in a flat world: The strengths and weaknesses of Mechanical Turk samples. Journal of Behavioral Decision Making, 26(3), 213–224.

Hartmann, F. G. H., & Maas, V. S. (2010). Why business unit controllers create budget slack: Involvement in management, social pressure, and Machiavellianism. Behavioral Research in Accounting, 22(2), 27–49.

Hobson, J., Mellon, M., & Stevens, D. (2011). Determinants of moral judgments regarding budgetary slack: An experimental examination of pay scheme and personal values. Behavioral Research in Accounting, 23(1), 87–107.

Hogue, M., Levashina, J., & Hang, H. (2013). Will I fake it? The interplay of gender, Machiavellianism, and self-monitoring on strategies for honesty in job interviews. Journal of Business Ethics, 117(2), 399–411.

Horton, J., & Chilton, L. (2010). The labor economics of paid crowdsourcing. In Proceedings of the 11th ACM conference on electronic commerce. New York, NY: ACM.

Horton, J., Rand, D., & Zeckhauser, R. (2011). The online laboratory: Conducting experiments in real labor markets. Experimental Economics, 14(3), 399–425.

Ipeirotis, P. (2010). Analyzing the Amazon Mechanical Turk marketplace. XRDS, 17(2), 16–21.

Jenkins, J., Deis, D., Bedard, J., & Curtis, M. (2008). Accounting firm culture and governance: A research synthesis. Behavioral Research in Accounting, 20(1), 45–74.

Kapstein, M. (2004). Business codes of multinational firms: What do they say? Journal of Business Ethics, 50, 13–31.

Kelley, S. W., Ferrell, O. C., & Skinner, S. J. (1990). Ethical behavior among marketing researchers: An assessment of selective demographic characteristics. Journal of Business Ethics, 9(8), 681–688.

Kish-Gephart, J., Harrison, D. A., & Treviño, L. K. (2010). Bad apples, bad cases, and bad barrels: Meta-analytic evidence about sources of unethical decisions at work. Journal of Applied Psychology, 95(1), 1–31.

Kohlberg, L. (1969). Stage and sequence: The cognitive developmental approach to socialization. In D. Goslin (Ed.), Handbook of socialization theory and research (pp. 347–480). Chicago, IL: Rand McNally.

Lapinski, M. K., & Rimal, R. (2005). An explication of social norms. Communication Theory, 15(2), 127–147.

Lopes, J., & Fletcher, C. (2004). Fairness of impression management in employment interviews: A cross-country study of the role of equity and Machiavellianism. Social Behavior and Personality: An International Journal, 32(8), 747–767.

Majors, T. (2015). The interaction of communicating measurement uncertainty and the dark triad on managers’ reporting decisions. The Accounting Review, 91(3), 973–992.

Mazar, N., & Ariely, D. (2006). Dishonesty in everyday life and its policy implications. Journal of Public Policy and Marketing, 25(1), 117–126.

Mims, C. (2015). How everyone gets the sharing economy wrong. Wall Street Journal 24 May. Web. 19 June 2015. http://www.wsj.com/articles/how-everyone-gets-the-sharing-economywrong-1432495921.

Murphy, P. R. (2012). Attitude, Machiavellianism and the rationalization of misreporting. Accounting, Organizations and Society, 37(4), 242–259.

O’Fallon, M., & Butterfield, K. (2005). A review of the empirical ethical decision-making literature. Journal of Business Ethics, 59(4), 375–413.

Paolacci, G., Chandler, J., & Ipeirotis, P. (2010). Running experiments on Amazon Mechanical Turk. Judgment and Decision Making, 5(5), 411–419.

Pope, K. R. (2005). Measuring the ethical propensities of accounting students: Mach IV versus DIT. Journal of Academic Ethics, 3(2–4), 89–111.

Quah, C. H., Stewart, N., & Lee, J. W. C. (2012). Attitudes of business students’ toward plagiarism. Journal of Academic Ethics, 10(3), 185–199.

Rayburn, J. M., & Rayburn, L. G. (1996). Relationship between Machiavellianism and Type A personality and ethical-orientation. Journal of Business Ethics, 15(11), 1209–1219.

Rezaee, Z., Elmore, R., & Szendi, J. (2001). Ethical behaviors in higher education institutions: The role of the code of conduct. Journal of Business Ethics, 30, 171–183.

Rigby, K. (1984). Acceptance of authority and directiveness as indicators of authoritarianism: A new framework. Journal of Social Psychology, 122(2), 171.

Rosman, A. J., Biggs, S. F., & Hoskin, R. E. (2012). The effects of tacit knowledge on earnings management behavior in the presence and absence of monitoring at different levels of firm performance. Behavioral Research in Accounting, 24(1), 109–130.

Shafer, W. E., & Simmons, R. S. (2008). Social responsibility, Machiavellianism and tax avoidance. Accounting, Auditing and Accountability Journal, 21(5), 695.

Stevens, D. (2002). The effects of reputation and ethics on budgetary slack. Journal of Management Accounting Research, 14, 153–171.

Stevens, B. (2008). Corporate ethical codes: Effective instruments for influencing behavior. Journal of Business Ethics, 78, 601–609.