Abstract

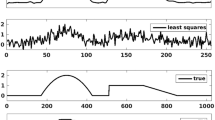

The solution, \(\varvec{x}\), of the linear system of equations \(A\varvec{x}\approx \varvec{b}\) arising from the discretization of an ill-posed integral equation \(g(s)=\int H(s,t) f(t) \,dt\) with a square integrable kernel \(H(s,t)\) is considered. The Tikhonov regularized solution \(\varvec{x}(\lambda )\), which approximates the Galerkin coefficients of \(f(t)\), is found as the minimizer of \(J(\varvec{x})= \Vert A \varvec{x} -\varvec{b}\Vert _2^2 + \lambda ^2 \Vert L \varvec{x}\Vert _2^2\), where \(\varvec{b}\) is given by the Galerkin coefficients of \(g(s)\). The solution \(\varvec{x}(\lambda )\) depends on the regularization parameter \(\lambda \), which trades off data fidelity against the smoothing norm determined by \(L\), here assumed to be diagonal and invertible. For square integrable kernels, the Galerkin method provides the relationship between the singular value expansion of the continuous kernel and the singular value decomposition of the discrete system matrix. We prove that the kernel remains square integrable under left and right multiplication by bounded functions, so the relationship also extends to appropriately weighted kernels. The resulting approximation of the integral equation permits examination of the properties of the regularized solution \(\varvec{x}(\lambda )\) independent of the sample size of the data. We prove that consistently downsampling both the system matrix and the data provides a small-scale system that preserves the dominant terms of the right singular subspace of the system; this small system can then be used to estimate the regularization parameter for the original system. When \(g(s)\) is directly measured via its Galerkin coefficients, the regularization parameter is preserved across resolutions. For measurements of \(g(s)\), a scaling argument is required to move across resolutions of the systems when the regularization parameter is found using an estimation technique that depends on knowledge of the variance in the data.
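
Because \(L\) is assumed diagonal and invertible, the substitution \(\varvec{y}=L\varvec{x}\) reduces the minimization of \(J(\varvec{x})\) to standard form with matrix \(AL^{-1}\), and the SVD of that matrix gives \(\varvec{x}(\lambda)\) through the filter factors \(\sigma_i^2/(\sigma_i^2+\lambda^2)\). The following is a minimal NumPy sketch under that assumption, not the authors' implementation; the function name `tikhonov_diag` and the argument `ell` (the diagonal entries of \(L\)) are hypothetical.

```python
import numpy as np


def tikhonov_diag(A, b, ell, lam):
    """Minimize ||A x - b||_2^2 + lam^2 ||L x||_2^2 for L = diag(ell), all ell != 0.

    With y = L x the problem becomes standard-form Tikhonov for A L^{-1},
    solved here by the SVD with filter factors s^2 / (s^2 + lam^2).
    """
    ell = np.asarray(ell, dtype=float)
    A_std = A / ell  # A @ diag(1/ell): column j divided by ell[j]
    U, s, Vt = np.linalg.svd(A_std, full_matrices=False)
    beta = U.T @ b
    # y(lam) = sum_i [s_i^2/(s_i^2+lam^2)] * (u_i^T b / s_i) * v_i
    y = Vt.T @ (s * beta / (s**2 + lam**2))
    return y / ell  # undo the substitution: x = L^{-1} y
```

Once the SVD of \(AL^{-1}\) is available, \(\varvec{x}(\lambda)\) can be re-evaluated cheaply for many values of \(\lambda\), which is what SVD-based parameter-estimation methods exploit.
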
Numerical results illustrate the theory and demonstrate the practicality of the approach for regularization parameter estimation using generalized cross validation, unbiased predictive risk estimation, and the discrepancy principle, applied both to the system of equations and to the regularized system of equations.
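
The three estimators named above have standard SVD-based forms for standard-form Tikhonov problems (\(L=I\); for diagonal invertible \(L\) they can be applied to \(AL^{-1}\)). With filter factors \(f_i=\sigma_i^2/(\sigma_i^2+\lambda^2)\), GCV minimizes \(\Vert \varvec{r}(\lambda)\Vert_2^2/(m-\sum_i f_i)^2\), UPRE minimizes \(\Vert \varvec{r}(\lambda)\Vert_2^2+2\sigma^2\sum_i f_i-m\sigma^2\), and the discrepancy principle solves \(\Vert \varvec{r}(\lambda)\Vert_2^2=m\sigma^2\), where \(\sigma^2\) is the known variance of white noise in \(\varvec{b}\). The sketch below uses a grid search for GCV and UPRE and bisection in \(\log\lambda\) for the discrepancy principle; the function names, the search range \([10^{-8},10^{2}]\), and the white-noise assumption are illustrative choices, not taken from the paper.

```python
import numpy as np


def spectral_terms(s, beta, b_norm2, lam):
    """Residual norm ||b - A x(lam)||^2 and trace of the influence matrix (L = I)."""
    f = s**2 / (s**2 + lam**2)  # Tikhonov filter factors
    # filtered residual plus the part of b lying outside range(A)
    res2 = np.sum(((1.0 - f) * beta) ** 2) + (b_norm2 - np.sum(beta**2))
    return res2, np.sum(f)


def choose_lambda(A, b, sigma2=None, method="gcv", lams=None, iters=100):
    """Estimate lam by GCV, UPRE or the discrepancy principle ("dp").

    sigma2 is the assumed known (white) noise variance, required for "upre" and "dp".
    """
    if method in ("upre", "dp") and sigma2 is None:
        raise ValueError("UPRE and the discrepancy principle need sigma2")
    m = A.shape[0]
    U, s, _ = np.linalg.svd(A, full_matrices=False)
    beta = U.T @ b
    b_norm2 = float(b @ b)
    if lams is None:
        lams = np.logspace(-8, 2, 400)  # illustrative search range
    if method == "dp":
        # ||r(lam)||^2 increases with lam, so bisect on t = log(lam)
        def g(t):
            return spectral_terms(s, beta, b_norm2, np.exp(t))[0] - m * sigma2

        lo, hi = np.log(lams[0]), np.log(lams[-1])
        if g(lo) > 0 or g(hi) < 0:
            raise ValueError("no discrepancy root in the search range")
        for _ in range(iters):
            mid = 0.5 * (lo + hi)
            if g(mid) > 0:
                hi = mid
            else:
                lo = mid
        return float(np.exp(0.5 * (lo + hi)))
    vals = []
    for lam in lams:
        res2, tr = spectral_terms(s, beta, b_norm2, lam)
        if method == "gcv":
            # denominator can vanish as lam -> 0 when A is square and nonsingular
            vals.append(res2 / (m - tr) ** 2)
        elif method == "upre":
            vals.append(res2 + 2.0 * sigma2 * tr - m * sigma2)
        else:
            raise ValueError(f"unknown method {method!r}")
    return float(lams[int(np.argmin(vals))])
```

Only \(\Vert\varvec{r}(\lambda)\Vert_2^2\) and the trace of the influence matrix are needed, so each evaluation costs \(O(n)\) once the SVD is known; on a downsampled system the SVD itself is also inexpensive.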

Notes

If the kernel integral is calculated exactly over the given interval, it is still possible to obtain \({A^{(n)}}\) from \({A^{(N)}}\) by summing the relevant entries of \({A^{(N)}}\), but the scaling factor is the inverse of that in (4.5).
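
As an illustration of obtaining a coarse matrix by summing blocks of a fine one, the sketch below assumes orthonormal piecewise-constant (box) basis functions \(\sqrt{N}\chi_j\) on a uniform grid of \([0,1]\), with \(N=rn\), and exactly evaluated Galerkin integrals. Each coarse box function is then \(r^{-1/2}\) times the sum of the \(r\) fine box functions it contains, so \(A^{(n)}=P^TA^{(N)}P\) and \(\varvec{b}^{(n)}=P^T\varvec{b}^{(N)}\); that is, each entry of \(A^{(n)}\) is \(1/r\) times the sum over an \(r\times r\) block of \(A^{(N)}\). This normalization is an assumption of the sketch and need not match the scaling in (4.5); the helper names and the kernel \(H(s,t)=st\) are hypothetical, the kernel being chosen because its Galerkin entries are known in closed form.

```python
import numpy as np


def coarsening_operator(N, n):
    """P (N x n, orthonormal columns): coarse box i = r^{-1/2} * sum of its r fine boxes."""
    if N % n:
        raise ValueError("N must be an integer multiple of n")
    r = N // n
    return np.kron(np.eye(n), np.ones((r, 1)) / np.sqrt(r))


def coarsen(A_fine, b_fine, n):
    """Return A^(n) = P^T A^(N) P and b^(n) = P^T b^(N)."""
    P = coarsening_operator(A_fine.shape[0], n)
    # entry (i, k) of P^T A P is (1/r) times the sum of the r x r block (i, k) of A_fine
    return P.T @ A_fine @ P, P.T @ b_fine


def exact_galerkin_st(N):
    """Exact Galerkin matrix for the hypothetical kernel H(s,t) = s*t on [0,1]."""
    c = (np.arange(N) + 0.5) / N  # cell midpoints
    # integral of s*sqrt(N) over cell j is c_j / sqrt(N), so A_jl = c_j c_l / N
    return np.outer(c, c) / N
```

Under these assumptions, coarsening the exact \(16\times 16\) matrix for \(H(s,t)=st\) to \(n=4\) reproduces the exact \(4\times 4\) Galerkin matrix, and the same \(P\) maps the Galerkin coefficients of \(g(s)=s\) to their coarse counterparts.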

The scaling can also be verified directly for each of the regularization functions MDP, ADP, and UPRE.

We show this result to demonstrate that a simple check for the convergence of \((\varDelta ^{(n)})^2\) might be misleading, since an analytic expression for the matrix elements is not always known; more commonly, the matrix elements are calculated using numerical quadrature.

Acknowledgements

Rosemary Renaut acknowledges the support of AFOSR Grant 025717: “Development and Analysis of Non-Classical Numerical Approximation Methods”, and NSF Grant DMS 1216559: “Novel Numerical Approximation Techniques for Non-Standard Sampling Regimes”. All authors appreciate the many comments of the reviewers, which helped improve the exposition of the paper.

Additional information

Communicated by Lothar Reichel.

About this article

Renaut, R.A., Horst, M., Wang, Y. et al. Efficient estimation of regularization parameters via downsampling and the singular value expansion. BIT Numer. Math. 57, 499–529 (2017). https://doi.org/10.1007/s10543-016-0637-6

Keywords

- Singular value expansion

- Singular value decomposition

- Ill-posed inverse problem

- Tikhonov regularization

- Regularization parameter estimation