Abstract

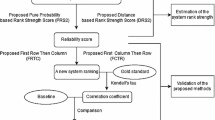

Analyzing retrieval model performance using retrievability (the findability of documents) has recently emerged as an important evaluation approach for recall-oriented retrieval applications. Most work in this domain either analyzes retrieval model bias or proposes retrieval strategies that increase document retrievability. However, little is known about the relationship between retrievability and other information retrieval effectiveness measures such as precision, recall, and MAP. In this study, we analyze that relationship. Our experiments on the TREC Chemical Retrieval Track dataset reveal that these two independent goals of information retrieval, maximizing the retrievability of documents and maximizing the effectiveness of retrieval models, are closely related. This correlation offers an attractive alternative for evaluating, ranking, or optimizing the effectiveness of retrieval models on a given corpus without requiring ground truth (relevance judgments).

Notes

To generate query sets, we first order all queries in \(Q\) by their simplified query clarity score (SCS) [16]. We then extract three query subsets from \(Q\): the first from the low SCS range (low-quality queries), the second from the middle SCS range (medium-quality queries), and the third from the high SCS range (high-quality queries).
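
The ordering and three-way split described in this note can be sketched as follows. This is a minimal illustration, not the authors' implementation. It assumes the commonly used definition of SCS, \(\sum_{w \in q} P(w|q)\,\log_2 \frac{P(w|q)}{P(w|C)}\), with maximum-likelihood estimates for both probabilities. It also assumes that the three ranges are equal-sized thirds of the ranked list, since the note does not state the range boundaries. The function names `scs` and `split_by_scs` and the inputs `coll_tf` and `coll_tokens` are hypothetical.

```python
import math
from collections import Counter


def scs(query_terms, coll_tf, coll_tokens):
    """Simplified clarity score of one query (assumed definition).

    query_terms: list of terms in the query
    coll_tf:     dict mapping term -> frequency in the collection
    coll_tokens: total number of tokens in the collection
    """
    counts = Counter(query_terms)
    qlen = len(query_terms)
    score = 0.0
    for term, qtf in counts.items():
        p_query = qtf / qlen                       # P(w | q)
        p_coll = coll_tf.get(term, 0) / coll_tokens  # P(w | C)
        if p_coll == 0:
            continue  # assumption: ignore terms absent from the collection
        score += p_query * math.log2(p_query / p_coll)
    return score


def split_by_scs(queries, coll_tf, coll_tokens):
    """Order queries by ascending SCS and cut the ranking into thirds.

    Returns (low, medium, high) lists of queries. Equal-sized thirds
    are an assumption made for this sketch.
    """
    ranked = sorted(queries, key=lambda q: scs(q, coll_tf, coll_tokens))
    n = len(ranked)
    first_cut, second_cut = n // 3, 2 * n // 3
    return ranked[:first_cut], ranked[first_cut:second_cut], ranked[second_cut:]


if __name__ == "__main__":
    # Toy collection statistics, invented purely for illustration.
    coll_tf = {"the": 500, "of": 300, "process": 170,
               "acid": 20, "polymer": 8, "benzene": 2}
    queries = [["benzene", "polymer"], ["the", "of"], ["acid", "process"]]
    low, medium, high = split_by_scs(queries, coll_tf, coll_tokens=1000)
    print("low:", low)
    print("medium:", medium)
    print("high:", high)
```

Queries made of frequent collection terms receive low scores and queries made of rare terms receive high scores, which is why the score is used here as a proxy for query quality.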

The complete log of the genetic programming runs and the optimized retrieval models for up to 100 generations is available at http://www.ifs.tuwien.ac.at/~bashir/Relationhip_Retrievability_Effectiveness.htm.

References

Amitay E, Carmel D, Lempel R, Soffer A (2004) Scaling IR-system evaluation using term relevance sets. In: SIGIR ’04: proceedings of the 27th annual international ACM SIGIR conference on research and development in information retrieval, pp 10–17

Aslam JA, Savell R (2003) On the effectiveness of evaluating retrieval systems in the absence of relevance judgments. In: SIGIR ’03: proceedings of the 26th international ACM SIGIR conference on research and development in information retrieval, pp 361–362

Azzopardi L, Bache R (2010) On the relationship between effectiveness and accessibility. In: SIGIR ’10: proceeding of the 33rd annual international ACM SIGIR conference on research and development in information retrieval. Geneva, Switzerland, pp 889–890

Azzopardi L, Owens C (2009) Search engine predilection towards news media providers. In: SIGIR ’09: proceedings of the 32nd annual international ACM SIGIR conference on research and development in information retrieval. Boston, MA, USA, pp 774–775

Azzopardi L, Vinay V (2008) Retrievability: an evaluation measure for higher order information access tasks. In: CIKM ’08: proceeding of the 17th ACM conference on information and knowledge management. Napa Valley, CA, USA, pp 561–570

Baccini A, Déjean S, Lafage L, Mothe J (2012) How many performance measures to evaluate information retrieval systems? Knowl Inf Syst 30:693–713

Bache R, Azzopardi L (2010) Improving access to large patent corpora. In: Transactions on large-scale data- and knowledge-centered systems II, vol 2. Springer, pp 103–121

Bashir S, Rauber A (2009a) Analyzing document retrievability in patent retrieval settings. In: DEXA ’09: proceedings of the 20th international conference on database and expert systems applications. Linz, Austria. Springer, pp 753–760

Bashir S, Rauber A (2009b) Improving retrievability of patents with cluster-based pseudo-relevance feedback documents selection. In: CIKM ’09: proceedings of the 18th ACM conference on information and knowledge management, November 2–6. Hong Kong, China, pp 1863–1866

Bashir S, Rauber A (2010a) Improving retrievability and recall by automatic corpus partitioning. In: Transactions on large-scale data- and knowledge-centered systems II, vol 2. Springer, pp 122–140

Bashir S, Rauber A (2010b) Improving retrievability of patents in prior-art search. In: ECIR ’10: 32nd European conference on information retrieval research, March 28–31. Milton Keynes, UK. Springer, pp 457–470

Bashir S, Rauber A (2011) On the relationship between query characteristics and IR functions retrieval bias. J Am Soc Inf Sci Technol 62(8):1512–1532

Callan J, Connell M (2001) Query-based sampling of text databases. ACM Trans Inf Syst (TOIS) J 19(2):97–130

Cao G, Nie J-Y, Gao J, Robertson S (2008) Selecting good expansion terms for pseudo-relevance feedback. In: SIGIR ’08: proceedings of the 31st annual international ACM SIGIR conference on research and development in information retrieval. ACM, New York, NY, USA, pp 243–250

Chen H (1995) Machine learning for information retrieval: neural networks, symbolic learning, and genetic algorithms. J Am Soc Inf Sci Technol 46(3):194–216

Cronen-Townsend S, Zhou Y, Croft WB (2002) Predicting query performance. In: SIGIR ’02: proceedings of the 25th annual international ACM SIGIR conference on research and development in information retrieval, August 11–15. Tampere, Finland, pp 299–306

Cummins R, O’Riordan C (2005) Evolving general term-weighting schemes for information retrieval: tests on larger collections. Artif Intell Rev 24(3–4):277–299

Cummins R, O’Riordan C (2009) Learning in a pairwise term-term proximity framework for information retrieval. In: SIGIR ’09: proceedings of the 32nd annual international ACM SIGIR conference on research and development in information retrieval. ACM, New York, NY, USA, pp 251–258

Diaz-Aviles E, Nejdl W, Schmidt-Thieme L (2009) Swarming to rank for information retrieval. In: GECCO ’09: proceedings of the 11th annual conference on genetic and evolutionary computation. ACM, New York, NY, USA, pp 9–16

Fan W, Fox EA, Pathak P, Wu H (2004) The effects of fitness functions on genetic programming-based ranking discovery for web search: research articles. J Am Soc Inf Sci Technol 55(7):628–636

Fujii A, Iwayama M, Kando N (2007) Introduction to the special issue on patent processing. Inf Process Manag J 43(5):1149–1153

Gastwirth JL (1972) The estimation of the Lorenz curve and Gini index. Rev Econ Stat 54(3):306–316

Hauff C, Hiemstra D, de Jong F, Azzopardi L (2009) Relying on topic subsets for system ranking estimation. In: CIKM ’09: proceeding of the 18th ACM conference on information and knowledge management, pp 1859–1862

He B, Ounis I (2006) Query performance prediction. Inf Syst J 31(7):585–594

Itoh H (2004) Patent retrieval experiments at Ricoh. In: Proceedings of NTCIR ’04: NTCIR-4 workshop meeting

Kamps J (2005) Web-centric language models. In: CIKM’05: proceeding of the 14th ACM conference on information and knowledge management. ACM

Koza JR (1992) A genetic approach to the truck backer upper problem and the inter-twined spiral problem. In: Proceedings of IJCNN international joint conference on neural networks, vol IV. IEEE Press, pp 310–318

Kraaij W, Westerveld T (2000) TNO/UT at TREC-9: how different are web documents? In: Proceedings of TREC-9, the 9th text retrieval conference

Lawrence S, Giles CL (1999) Accessibility of information on the web. Nature 400:107–109

Losada DE, Azzopardi L (2008) An analysis on document length retrieval trends in language modeling smoothing. Inf Retr J 11(2):109–138

Lupu M, Huang J, Zhu J, Tait J (2009) TREC-CHEM: large scale chemical information retrieval evaluation at TREC. ACM SIGIR Forum 43(2):63–70

Mase H, Matsubayashi T, Ogawa Y, Iwayama M, Oshio T (2005) Proposal of two-stage patent retrieval method considering the claim structure. ACM Trans Asian Lang Inf Process (TALIP) 4(2):190–206

Mowshowitz A, Kawaguchi A (2002) Bias on the web. Commun ACM 45(9):56–60

Nuray R, Can F (2006) Automatic ranking of information retrieval systems using data fusion. Inf Process Manag J 42(3):595–614

Cordon O, Herrera-Viedma E (2003) A review on the application of evolutionary computation to information retrieval. Int J Approx Reason 34(2–3):241–264

Robertson SE, Walker S (1994) Some simple effective approximations to the 2-poisson model for probabilistic weighted retrieval. In: SIGIR ’94: proceedings of the 17th annual international ACM SIGIR conference on research and development in information retrieval. Dublin, Ireland, pp 232–241

Shinmori A, Okumura M, Marukawa Y, Iwayama M (2003) Patent claim processing for readability: structure analysis and term explanation. In: Proceedings of the ACL-2003 workshop on patent corpus processing, vol 20, pp 56–65

Singhal A (1997) AT&T at TREC-6. In: The 6th text retrieval conference (TREC-6), pp 227–232

Singhal A, Buckley C, Mitra M (1996) Pivoted document length normalization. In: SIGIR ’96: proceedings of the 19th annual international ACM SIGIR conference on research and development in information retrieval. ACM, pp 21–29

Soboroff I, Nicholas C, Cahan P (2001) Ranking retrieval systems without relevance judgments. In: SIGIR ’01: proceedings of the 24th annual international ACM SIGIR conference on research and development in information retrieval, pp 66–73

Spoerri A (2007) Using the structure of overlap between search results to rank retrieval systems without relevance judgments. Inf Process Manag J 43(4):1059–1070

Tao T, Zhai C (2007) An exploration of proximity measures in information retrieval. In: SIGIR ’07: proceedings of the 30th annual international ACM SIGIR conference on research and development in information retrieval. ACM, New York, NY, USA, pp 295–302

Vaughan L, Thelwall M (2004) Search engine coverage bias: evidence and possible causes. Inf Process Manag J 40(4):693–707

Verberne S, van Halteren H, Theijssen D, Raaijmakers S, Boves L (2011) Learning to rank for why-question answering. Inf Retr 14:107–132

Vrajitoru D (1998) Crossover improvement for the genetic algorithm in information retrieval. Inf Process Manag J 34(4):405–415

Lauw WH, Lim E-P, Wang K (2006) Bias and controversy: beyond the statistical deviation. In: Proceedings of the 12th ACM SIGKDD international conference on knowledge discovery and data mining. Philadelphia, PA, USA, pp 625–630

Wu S, Crestani F (2003) Methods for ranking information retrieval systems without relevance judgments. In: SAC ’03: proceedings of the 2003 ACM symposium on applied computing, pp 811–816

Zhai C (2002) Risk minimization and language modeling in text retrieval. PhD Thesis, Carnegie Mellon University

Zhao J, Yun Y (2009) A proximity language model for information retrieval. In: SIGIR ’09: proceedings of the 32nd annual international ACM SIGIR conference on research and development in information retrieval. ACM, New York, NY, USA, pp 291–298

Zhao Y, Scholer F, Tsegay Y (2008) Effective pre-retrieval query performance prediction using similarity and variability evidence. In: ECIR’08: proceedings of the 30th European conference on advances in information retrieval. Glasgow, UK, pp 52–64

About this article

Cite this article

Bashir, S., Rauber, A. Automatic ranking of retrieval models using retrievability measure. Knowl Inf Syst 41, 189–221 (2014). https://doi.org/10.1007/s10115-014-0759-6
