Abstract

The perceptron algorithm is a simple iterative procedure for finding a point in a convex cone \(F\subseteq \mathbb {R}^m\). At each iteration, the algorithm only involves a query to a separation oracle for \(F\) and a simple update on a trial solution. The perceptron algorithm is guaranteed to find a point in \(F\) after \(\mathcal O(1/\tau _F^2)\) iterations, where \(\tau _F\) is the width of the cone \(F\). We propose a version of the perceptron algorithm that includes a periodic rescaling of the ambient space. In contrast to the classical version, our rescaled version finds a point in \(F\) in \(\mathcal O(m^5 \log (1/\tau _F))\) perceptron updates. This result is inspired by and strengthens the previous work on randomized rescaling of the perceptron algorithm by Dunagan and Vempala (Math Program 114:101–114, 2006) and by Belloni et al. (Math Oper Res 34:621–641, 2009). In particular, our algorithm and its complexity analysis are simpler and shorter. Furthermore, our algorithm does not require randomization or deep separation oracles.
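As a point of reference for the classical procedure described above, the following is a minimal sketch and not the rescaled algorithm proposed in the paper. It assumes the standard update \(y \leftarrow y + a\), where \(a\) is a unit vector returned by the separation oracle. The names `perceptron`, `make_oracle`, and `rows`, and the choice of \(F\) as a finite intersection of open half-spaces, are illustrative assumptions that do not come from the text.

```python
import math


def perceptron(oracle, m, max_iter=10_000):
    """Classical perceptron sketch (assumed update rule: y <- y + a).

    oracle(y) must return None when y lies in the cone F, and otherwise a
    unit vector a with <a, y> <= 0 and <a, x> > 0 for every x in F.
    Returns (y, number_of_updates), or (None, max_iter) on failure.
    """
    y = [0.0] * m
    for k in range(max_iter):
        a = oracle(y)
        if a is None:
            return y, k
        # Perceptron update: move the trial solution along the violated normal.
        y = [yi + ai for yi, ai in zip(y, a)]
    return None, max_iter


def make_oracle(rows):
    """Hypothetical oracle for F = {y : <a, y> > 0 for every row a}."""
    unit = []
    for a in rows:
        norm = math.sqrt(sum(c * c for c in a))
        unit.append([c / norm for c in a])

    def oracle(y):
        for a in unit:
            if sum(ai * yi for ai, yi in zip(a, y)) <= 0:
                return a  # normalized violated constraint
        return None  # y is in F

    return oracle


# Illustrative data only: three half-spaces in the plane.
rows = [[1.0, 0.2], [0.1, 1.0], [1.0, 1.0]]
y, updates = perceptron(make_oracle(rows), m=2)
```

The sketch touches the cone only through `oracle`, which mirrors the statement that each iteration needs one oracle query and one simple update. The periodic rescaling of the ambient space is deliberately omitted.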

References

Agmon, S.: The relaxation method for linear inequalities. Can. J. Math. 6(3), 382–392 (1954)

Amaldi, E., Belotti, P., Hauser, R.: A randomized algorithm for the maxFS problem. In: IPCO, pp. 249–264 (2005)

Amaldi, E., Hauser, R.: Boundedness theorems for the relaxation method. Math. Oper. Res. 30(4), 939–955 (2005)

Ball, K.: An Elementary Introduction to Modern Convex Geometry. Flavors of Geometry, vol. 31, pp. 1–58. Cambridge University Press, Cambridge (1997)

Bauschke, H.H., Borwein, J.M.: Legendre functions and the method of random Bregman projections. J. Convex Anal. 4, 27–67 (1997)

Bauschke, H.H., Borwein, J.M., Lewis, A.: The method of cyclic projections for closed convex sets in Hilbert space. Contemp. Math. 204, 1–38 (1997)

Belloni, A., Freund, R., Vempala, S.: An efficient rescaled perceptron algorithm for conic systems. Math. Oper. Res. 34(3), 621–641 (2009)

Betke, U.: Relaxation, new combinatorial and polynomial algorithms for the linear feasibility problem. Discrete Comput. Geom. 32, 317–338 (2004)

Block, H.D.: The perceptron: a model for brain functioning. Rev. Mod. Phys. 34, 123–135 (1962)

Blum, A., Frieze, A., Kannan, R., Vempala, S.: A polynomial-time algorithm for learning noisy linear threshold functions. Algorithmica 22(1–2), 35–52 (1998)

Chubanov, S.: A strongly polynomial algorithm for linear systems having a binary solution. Math. Program. 134, 533–570 (2012)

Dunagan, J., Vempala, S.: A simple polynomial-time rescaling algorithm for solving linear programs. Math. Program. 114(1), 101–114 (2006)

Fleming, W.: Functions of Several Variables. Springer, New York (1977)

Freund, Y., Schapire, R.: Large margin classification using the perceptron algorithm. Mach. Learn. 37, 277–296 (1999)

Gilpin, A., Peña, J., Sandholm, T.: First-order algorithm with \({\cal {O}}({\ln }(1/\epsilon ))\) convergence for \(\epsilon \)-equilibrium in two-person zero-sum games. Math. Program. 133, 279–298 (2012)

Goffin, J.: The relaxation method for solving systems of linear inequalities. Math. Oper. Res. 5, 388–414 (1980)

Goffin, J.: On the non-polynomiality of the relaxation method for systems of linear inequalities. Math. Program. 22, 93–103 (1982)

Huber, G.: Gamma function derivation of \(n\)-sphere volumes. Am. Math. Mon. 89, 301–302 (1982)

Motzkin, T.S., Schoenberg, I.J.: The relaxation method for linear inequalities. Can. J. Math. 6(3), 393–404 (1954)

Novikoff, A.B.J.: On convergence proofs on perceptrons. In: Proceedings of the Symposium on the Mathematical Theory of Automata, vol. XII, pp. 615–622 (1962)

O’Donoghue, B., Candès, E.J.: Adaptive restart for accelerated gradient schemes. Found. Comput. Math. (2013) doi:10.1007/s10208-013-9150-3

Rosenblatt, F.: The perceptron: a probabilistic model for information storage and organization in the brain. Cornell Aeronaut. Lab. Psychol. Rev. 65(6), 386–408 (1958)

Shalev-Shwartz, S., Singer, Y., Srebro, N., Cotter, A.: Pegasos: primal estimated sub-gradient solver for SVM. Math. Program. 127, 3–30 (2011)

Soheili, N., Peña, J.: A smooth perceptron algorithm. SIAM J. Optim. 22(2), 728–737 (2012)

Appendix: Proof of (7) and (8)

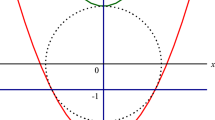

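Let \(v \in \text {int}(\mathbb {B}^{m-1})\) be given. The volumes \({\left| \left| \left| (\Psi \circ \Phi )'(v) \right| \right| \right| }\) and \({\left| \left| \left| \Phi '(v) \right| \right| \right| }\) are \(\sqrt{\det \left( (\Psi \circ \Phi )'(v)^\mathrm{T}(\Psi \circ \Phi )'(v)\right) }\) and \(\sqrt{\det \left( \Phi '(v)^\mathrm{T}\Phi '(v)\right) }\) respectively. Thus (7) and (8) are equivalent to

\[
\det \left( (\Psi \circ \Phi )'(v)^\mathrm{T}(\Psi \circ \Phi )'(v)\right) = \frac{\alpha ^2}{\left( 1-\Vert v\Vert ^2\right) \left( \alpha ^2+(1-\alpha ^2)\Vert v\Vert ^2\right) ^m} \tag{15}
\]

and

\[
\det \left( \Phi '(v)^\mathrm{T}\Phi '(v)\right) = \frac{1}{1-\Vert v\Vert ^2}. \tag{16}
\]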

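We first prove (15). To simplify notation, put \(t^2 := \alpha ^2+\left( 1-\alpha ^2\right) \Vert v\Vert ^2\). Computing the partial derivatives of \((\Psi \circ \Phi )(v) = \frac{\left( v, \alpha \sqrt{1-\Vert v\Vert ^2}\right) }{\sqrt{\alpha ^2 + (1-\alpha ^2)\Vert v\Vert ^2}}\) we get

\[
(\Psi \circ \Phi )'(v) = \frac{1}{t}\begin{bmatrix} I_{m-1} - \dfrac{1-\alpha ^2}{t^2}\, vv^\mathrm{T} \\[2mm] -\dfrac{\alpha }{\sqrt{1-\Vert v\Vert ^2}}\left( 1 + \dfrac{(1-\alpha ^2)(1-\Vert v\Vert ^2)}{t^2}\right) v^\mathrm{T} \end{bmatrix},
\]

where \(I_{m-1}\) is the \((m-1)\times (m-1)\) identity matrix. Hence,

\[
\begin{aligned}
(\Psi \circ \Phi )'(v)^\mathrm{T}(\Psi \circ \Phi )'(v)
&= \frac{1}{t^2}\left[ \left( I_{m-1} - \frac{1-\alpha ^2}{t^2}\, vv^\mathrm{T}\right) ^2 + \frac{\alpha ^2}{1-\Vert v\Vert ^2}\left( 1 + \frac{(1-\alpha ^2)(1-\Vert v\Vert ^2)}{t^2}\right) ^2 vv^\mathrm{T}\right] \\
&= \frac{1}{t^2}\left[ I_{m-1} - \frac{2(1-\alpha ^2)}{t^2}\, vv^\mathrm{T} + \frac{(1-\alpha ^2)^2\Vert v\Vert ^2}{t^4}\, vv^\mathrm{T} + \frac{\alpha ^2}{1-\Vert v\Vert ^2}\left( 1 + \frac{(1-\alpha ^2)(1-\Vert v\Vert ^2)}{t^2}\right) ^2 vv^\mathrm{T}\right] \\
&= \frac{1}{t^2}\left[ I_{m-1} + \left( -\frac{2(1-\alpha ^2)}{t^2} + \frac{(1-\alpha ^2)^2\Vert v\Vert ^2}{t^4} + \frac{\alpha ^2\left( t^2 + (1-\alpha ^2)(1-\Vert v\Vert ^2)\right) ^2}{t^4\left( 1-\Vert v\Vert ^2\right) }\right) vv^\mathrm{T}\right] \\
&= \frac{1}{t^2}\left[ I_{m-1} + \left( -\frac{2(1-\alpha ^2)}{t^2} + \frac{(1-\alpha ^2)^2\Vert v\Vert ^2}{t^4} + \frac{\alpha ^2}{t^4\left( 1-\Vert v\Vert ^2\right) }\right) vv^\mathrm{T}\right] \\
&= \frac{1}{t^2}\left( I_{m-1} + \beta \, vv^\mathrm{T}\right) ,
\end{aligned}
\]

where

\[
\beta := -\frac{2(1-\alpha ^2)}{t^2} + \frac{(1-\alpha ^2)^2\Vert v\Vert ^2}{t^4} + \frac{\alpha ^2}{t^4\left( 1-\Vert v\Vert ^2\right) }.
\]

The fourth step above follows from \(t^2 = 1-(1-\alpha ^2)(1-\Vert v\Vert ^2) = \alpha ^2 + (1-\alpha ^2)\Vert v\Vert ^2\).

Hence, using \(\det \left( I_{m-1} + \beta \, vv^\mathrm{T}\right) = 1 + \beta \Vert v\Vert ^2\), we have

\[
\det \left( (\Psi \circ \Phi )'(v)^\mathrm{T}(\Psi \circ \Phi )'(v)\right) = \frac{\det \left( I_{m-1} + \beta \, vv^\mathrm{T}\right) }{t^{2(m-1)}} = \frac{1 + \beta \Vert v\Vert ^2}{t^{2(m-1)}} = \frac{\alpha ^2}{\left( 1-\Vert v\Vert ^2\right) t^{2m}}.
\]

The last step follows because

\[
\begin{aligned}
1 + \beta \Vert v\Vert ^2
&= \left( 1 - \frac{(1-\alpha ^2)\Vert v\Vert ^2}{t^2}\right) ^2 + \frac{\alpha ^2\Vert v\Vert ^2}{t^4\left( 1-\Vert v\Vert ^2\right) } \\
&= \frac{\alpha ^4}{t^4} + \frac{\alpha ^2\Vert v\Vert ^2}{t^4\left( 1-\Vert v\Vert ^2\right) } \\
&= \frac{\alpha ^2\left( \alpha ^2(1-\Vert v\Vert ^2) + \Vert v\Vert ^2\right) }{t^4\left( 1-\Vert v\Vert ^2\right) } = \frac{\alpha ^2}{t^2\left( 1-\Vert v\Vert ^2\right) }.
\end{aligned}
\]

Since \(t^{2m} = \left( \alpha ^2+(1-\alpha ^2)\Vert v\Vert ^2\right) ^m\), this is precisely (15).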

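The proof of (16) is similar but easier. Computing partial derivatives of \(\Phi (v) = (v,\sqrt{1-\Vert v\Vert ^2})\) we get

\[
\Phi '(v) = \begin{bmatrix} I_{m-1} \\[1mm] -\dfrac{1}{\sqrt{1-\Vert v\Vert ^2}}\, v^\mathrm{T} \end{bmatrix}.
\]

Hence

\[
\Phi '(v)^\mathrm{T}\Phi '(v) = I_{m-1} + \frac{1}{1-\Vert v\Vert ^2}\, vv^\mathrm{T}.
\]

Therefore,

\[
\det \left( \Phi '(v)^\mathrm{T}\Phi '(v)\right) = 1 + \frac{\Vert v\Vert ^2}{1-\Vert v\Vert ^2} = \frac{1}{1-\Vert v\Vert ^2}.
\]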
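The two determinant identities can be sanity-checked numerically. The sketch below assumes the closed forms \(\alpha ^2/\left( (1-\Vert v\Vert ^2)\, t^{2m}\right) \) for \(\Psi \circ \Phi \) and \(1/(1-\Vert v\Vert ^2)\) for \(\Phi \), with \(t^2 = \alpha ^2+(1-\alpha ^2)\Vert v\Vert ^2\), and compares them with Gram determinants of Jacobians approximated by central differences. The test point `v`, the value of `alpha`, the step `h`, and all function names are illustrative choices and do not come from the paper.

```python
import math


def phi(v):
    """Phi(v) = (v, sqrt(1 - ||v||^2))."""
    return v + [math.sqrt(1.0 - sum(x * x for x in v))]


def psi_phi(v, alpha):
    """(Psi o Phi)(v) = (v, alpha * sqrt(1 - ||v||^2)) / t."""
    n2 = sum(x * x for x in v)
    t = math.sqrt(alpha ** 2 + (1.0 - alpha ** 2) * n2)
    return [x / t for x in v] + [alpha * math.sqrt(1.0 - n2) / t]


def jacobian_columns(f, v, h=1e-6):
    """Columns of the Jacobian of f at v, via central differences."""
    cols = []
    for j in range(len(v)):
        vp, vm = v[:], v[:]
        vp[j] += h
        vm[j] -= h
        cols.append([(a - b) / (2.0 * h) for a, b in zip(f(vp), f(vm))])
    return cols


def gram_det(cols):
    """det(J^T J) by Gaussian elimination on the Gram matrix."""
    n = len(cols)
    g = [[sum(a * b for a, b in zip(cols[i], cols[j])) for j in range(n)]
         for i in range(n)]
    det = 1.0
    for i in range(n):
        det *= g[i][i]  # Gram matrix is positive definite here
        for r in range(i + 1, n):
            factor = g[r][i] / g[i][i]
            for c in range(i, n):
                g[r][c] -= factor * g[i][c]
    return det


# Illustrative point in the open unit ball of R^{m-1} with m = 4.
v, alpha = [0.3, -0.2, 0.4], 0.5
m = len(v) + 1
n2 = sum(x * x for x in v)
t2 = alpha ** 2 + (1.0 - alpha ** 2) * n2

det_psi_phi = gram_det(jacobian_columns(lambda u: psi_phi(u, alpha), v))
det_phi = gram_det(jacobian_columns(phi, v))
closed_psi_phi = alpha ** 2 / ((1.0 - n2) * t2 ** m)
closed_phi = 1.0 / (1.0 - n2)
```

Agreement of `det_psi_phi` with `closed_psi_phi` and of `det_phi` with `closed_phi` up to finite-difference error is only a spot check at one point. It does not replace the proof.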

Cite this article

Peña, J., Soheili, N. A deterministic rescaled perceptron algorithm. Math. Program. 155, 497–510 (2016). https://doi.org/10.1007/s10107-015-0860-y
