Abstract

Various approaches have been proposed to denote treatment outcome, such as the effect size of the pre-to-posttest change, percentage improvement, statistically reliable change, and clinically significant change. The aim of the study is to compare these approaches and evaluate their aptitude to differentiate among child and adolescent mental healthcare providers regarding their treatment outcome. Outcomes were compared according to continuous and categorical outcome indicators using real-life data of seven mental healthcare providers, three using the Child Behavior Checklist and four using the Strengths and Difficulties Questionnaire as primary outcome measure. Within each dataset, consistent differences were found between providers, and the various methods led to comparable rankings of providers. Statistical considerations designate continuous outcomes as the optimal choice. Change scores have more statistical power and allow for a ranking of providers at first glance. Expressing providers’ performance in proportions of recovered, changed, unchanged, or deteriorated patients has supplementary value, as it denotes outcome in a manner more easily interpreted and appreciated by clinicians, managerial staff, and, last but not least, by patients or their parents.

Introduction

Recently, Dutch mental healthcare has embarked on an endeavor to implement two treatment-supportive measures countrywide: routine outcome monitoring (ROM) and benchmarking. ROM is the process of routinely monitoring and evaluating the progress made by individual patients in mental healthcare (ROM [8]; ROMCKAP http://www.romckap.org). Each patient is assessed at the onset of treatment and assessments are repeated periodically to track changes over time. Thus, standardized information about the treatment effect at regular intervals is obtained, and feedback regarding progress is provided to the therapist and the patient and/or parents. There is ample evidence that ROM improves treatment outcome, especially through the process of providing feedback [5, 24], albeit to a modest extent [6, 22]. Internationally, implementation of ROM initiatives in child and adolescent psychiatry is on the rise [13, 20].

Aggregated ROM data, collected in mental health centers across the country, allow for comparison of providers’ performance (benchmarking), aimed at learning what works best for whom in everyday clinical practice. In The Netherlands, a foundation (Stichting Benchmark GGZ or SBG http://www.sbggz.nl), was established in 2011 for benchmarking mental healthcare providers. SBG is an independent foundation, governed conjointly by the Dutch association of mental healthcare providers (GGZ-Nederland; http://www.ggznederland.nl), the association for healthcare insurers (Zorgverzekeraars Nederland; http://www.zn.nl), and the Dutch Patient Advocate Organisation (Landelijk Platform GGZ; http://www.lpggz.nl). The goal of SBG is to aggregate ROM-data, compare the outcome of groups of patients and provide results to insurers, managers, therapists, and, eventually, to consumers: the patients or their parents. If certain conditions are met, aggregated data allow for comparing treatment outcomes of healthcare providers (external benchmarking). Also, aggregated data may reveal differences in outcome between locations or departments or treatment methods within providers or can be used for comparing the caseloads of therapists (internal benchmarking). Transparency regarding outcomes and a valid feedback system for discussing outcomes, may improve the quality of care [4, 13, 20].

Healthcare insurers seek reliable and valid performance indicators, and their attention has shifted from process to outcome indicators [29]. A valid comparison requires unbiased data, and potential confounding of results should be counteracted by case-mix adjusted outcomes [14]. In The Netherlands, access of insurers to aggregated outcome data is restricted to the level of comparing providers; they use this information to monitor quality and may, in the near future, use it in their contracting negotiations. In the long run, patients will also benefit from transparency on the effectiveness of mental healthcare providers, as quality of care is a relevant factor in choosing a provider.

Use of treatment outcome as the main performance indicator of quality of care requires a simple, straightforward, and valid method to denote outcome. Various methods have been proposed to delineate treatment outcome and improvement over time [23]. In treatment outcome research, the effect size of the standardized pre-to-posttest change (ES or Cohen’s d) [7] is often used to express the magnitude of the treatment effect. It is, however, difficult to translate a given effect size into clinical terms and appreciate its clinical implication. Therefore, such scores alone may not be sufficient to communicate to a broad audience what is accomplished by mental healthcare. To express the effectiveness of a course of psychotherapy for an individual patient, Jacobson and colleagues have proposed two concepts: clinical significance and statistically reliable change [17]. Jacobson and colleagues introduced their method to evaluate the effectiveness of treatment beyond a statistical comparison at group level (comparing pre–post group means) and to convey information regarding the progress of individual patients. They proposed to use the term “clinical significance” for the change that is attained when a client transgresses a threshold score and has a postscore falling within the range of the “normal” population. However, whether a patient has moved during therapy from the dysfunctional to the healthy population is in itself not sufficient to denote meaningful change. A patient with a pretest score very close to the threshold score may experience a very minor change, but still transgress the cutoff value, and would thus be classified as meaningfully changed. Therefore, Jacobson and colleagues [17, 18] introduced an additional requirement: the Reliable Change Index (RCI). It is defined as the difference between the pre- and postscore, divided by the standard error of the difference between the two scores. If the absolute value of the RCI exceeds 1.96, the change is statistically significant (p < 0.05). The two proposed criteria, clinical significance and statistical significance, can be taken together and utilized to categorize patients into four categories: recovered, merely improved, unchanged, and deteriorated [18].
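As a compact restatement of the reliable change computation in the formulation of Jacobson and Truax [18], the index can be written as follows. The symbol names are ours: $s$ denotes the standard deviation of the reference (e.g., pretest) scores and $r_{xx}$ the reliability of the instrument.

```latex
\[
\mathrm{RC} = \frac{x_{\text{post}} - x_{\text{pre}}}{S_{\text{diff}}},
\qquad
S_{\text{diff}} = \sqrt{2\,S_E^{2}},
\qquad
S_E = s\,\sqrt{1 - r_{xx}}
\]
% A change is regarded as reliable at the 5 % level when |RC| > 1.96.
```

Because $S_{\text{diff}}$ depends only on $s$ and $r_{xx}$, the same threshold can be expressed as a minimum raw change of $1.96 \times S_{\text{diff}}$ score points.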

Over the years, several refinements of the traditional Jacobson–Truax (JT) method have been suggested [3, 36]. Alternative methods are recommended to correct for regression to the mean, which the JT method does not. McGlinchey, Atkins, and Jacobson [26] compared them: They concluded that four of the five investigated methods yielded similar results in patient’s outcome classification with a mean agreement of 93.7 %. Because all indexes performed similarly, they recommended the original JT method as it is easy to compute and cutoff estimates are available for a number of widely used instruments. Moreover, the JT method takes a moderate position between the more extreme alternatives. If the JT method is used with measures for which large normative samples are available, then standard cutoff and RCI values can be applied uniformly by researchers rather than requiring sample- and study-specific recalculations. This means that a standard for clinically significant change can be set and applied regardless of the research or clinical setting in which the study is conducted [3].

A recent addition to the expanding literature on outcome indicators is the method proposed by Hiller, Schindler, and Lambert [15]: the percentage improvement (PI). PI is defined as the percentage reduction in symptom score within each patient. PI has a long tradition of use in pharmacological research, where it is used to establish responder status (e.g., by requiring a 50 % reduction in score on the outcome measure or PI50). Hiller et al. [15] proposed to calculate PI as the pre-to-posttest change proportionally to the difference between the prescore and the mean score of the functional population (pre minus post/pre minus functional). The method offers two ways of usage: Just expressing the percentage of change within a patient, or categorizing patients above or below a cutoff of ≥50 % reduction of symptoms in “responders” and “non-responders”. For responder status, Hiller et al. [15] proposed an additional criterion of 25 % reduction on the entire range of the scale, which corresponds to the RCI requirement of the JT method. As this is a substantial modification of the original percentage improvement score, we will refer to this indicator as PImod. Hiller et al. [15] compared PImod with RCI for 395 adult depressive outpatients who were treated with cognitive behavior therapy. The RCI was significantly more conservative than PImod in classifying patients as responders.

An important asset of PImod is that it corrects for baseline severity. According to the law of initial value [35] the pretreatment severity is positively associated with the degree of improvement: patients with high initial scores tend to achieve a larger pre–post change than those with low scores and, consequently, the RCI is more easily transgressed. In contrast, with PImod, the higher the baseline score of an individual, the more improvement in terms of score reduction is needed to meet the responder criterion of ≥50 % reduction. For benchmarking, this is advantageous. If PImod appears insensitive to pretreatment level, no case-mix correction for pretest severity [14] is needed, which would simplify comparison of treatment providers. The other advantage Hiller et al. [15] point out for PImod is that no reliability and standard deviations have to be determined, as is the case for the JT method.

Three issues regarding conceptual and methodological aspects of outcome measurement need to be addressed; two pertain to measurement scale properties, and one regards statistical power considerations in relation to continuous vs. categorical data. Every textbook on statistics will point out that subtracting pre- and posttest scores requires an interval scale (the distance between 9 and 7 is equal to the distance between 6 and 4). Raw scores on self-report scales seldom meet this criterion, as scores on these instruments tend to be peaked and skewed to the right. Consequently, the magnitude of a two-point change on the high end of the scale does not correspond to the magnitude of a two-point change on its low end. Normalizing scores deals with skewness and kurtosis in the raw data and, from a measurement scale perspective, the difference in T-scores (∆T) is superior to ES. Thus, for benchmarking, raw scores are transformed into normalized T-scores [21] with a pretest mean of M = 50 and SD = 10.
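The normalizing transformation can be illustrated with a minimal sketch. It assumes a rank-based (percentile) conversion in which the sample itself serves as the reference distribution; in practice the conversion would rest on normative data, and the function name and example scores below are hypothetical.

```python
from statistics import NormalDist


def normalized_t_scores(raw_scores):
    """Convert raw scores to normalized T-scores (mean 50, SD 10).

    Assumption: the reference distribution is the sample itself and
    ties receive their mid-rank percentile.
    """
    n = len(raw_scores)
    t_scores = []
    for score in raw_scores:
        below = sum(1 for s in raw_scores if s < score)
        ties = sum(1 for s in raw_scores if s == score)
        # Mid-rank percentile always lies strictly between 0 and 1.
        percentile = (below + 0.5 * ties) / n
        z = NormalDist().inv_cdf(percentile)
        t_scores.append(50 + 10 * z)
    return t_scores


# Hypothetical right-skewed raw scores.
raw = [2, 3, 3, 5, 8, 13, 21]
t = normalized_t_scores(raw)
```

The median raw score maps onto T = 50, and the long right tail is pulled in, so that equal T-score differences correspond to equal distances on the normal curve.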

Another issue pertaining to properties of measurement scales is mentioned by Russel [30] and concerns the shortcomings of PImod as an indicator of change. A percentage improvement score requires a ratio measurement scale with a meaningful 0-score (such as distance in miles or degrees Kelvin). Again, self-report questionnaires yield scores that do not meet this requirement. Use of the cutoff value between the functional and the dysfunctional population as a proxy for a 0-score, the solution chosen for PImod, invokes new problems, as it makes improvement in excess of 100 % possible and likely. Thus, percentage change based upon an interval or ordinal measurement scale is methodologically flawed.

Transforming continuous data into categories diminishes their informative value and has negative consequences for the statistical power to detect differences. Fedorov, Mannino, and Zhang [9] show that categorizing treatment outcome in two categories of equal size amounts to a loss of power of 36.3 % (1 − 2/π). To compensate for this loss of power, a 1.571 times larger sample size is required. Some examples of power calculation can illustrate this point. Sufficient statistical power (e.g., 1 − β = 0.80) to demonstrate a large difference (e.g., M = 50 vs. M = 42, SD = 10; d = 0.80) between the means of two groups with a t test at p < 0.05 (two-tailed) requires at least N = 26 observations in each group; a medium size difference (e.g., M = 50 vs. M = 45, SD = 10; d = 0.50) requires N = 64 in each group [12]. In comparison, a chi-square test requires N = 32 to demonstrate a large difference in proportion (e.g., 75/25 vs. 50/50; w = 0.50) and N = 88 to demonstrate a medium size difference in proportion (e.g., 65/35 vs. 50/50; w = 0.30) [10]. The loss of information, going from continuous data to a dichotomous categorization, needs to be offset with more participants to maintain the same statistical power to demonstrate differences in performance between providers. Loss of statistical power could lead to the faulty conclusion that there are no differences between providers (type II error), an undesirable side effect of categorization when benchmarking mental healthcare. One way to increase statistical power and counter type II error would be to raise the number of possible outcome categories from a dichotomy to three or more. It should be noted that the negative influence of diminished statistical power is dependent on the size of the number of observations to begin with.
If one starts out with a large dataset, which grants substantial statistical power, some decrease of power can be sustained, and the probability of a type II error would not be raised to unacceptable heights. However, with smaller datasets, e.g., when we would compare the treatment effect of individual therapists, statistical power definitely becomes an issue and categorized outcome indicators may no longer be an alternative for average pre-to-post change. Thus, caution should be applied when comparing small data sets, e.g., the caseload of individual therapists, as true differences in effectiveness may get obscured when using categorical outcomes.
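The figures in this paragraph can be approximated with a short sketch. It assumes the usual normal approximation rather than the exact noncentral t and chi-square distributions that published power tables are based on; the function names are ours. Under this approximation the chi-square sample sizes (taken here as total N for a test with one degree of freedom) come out at the values quoted above, whereas the t test sample sizes come out one observation per group lower than the exact values of 26 and 64.

```python
from math import ceil, pi
from statistics import NormalDist


def _z_sum(alpha=0.05, power=0.80):
    """Sum of the two-tailed critical z and the z belonging to the power."""
    nd = NormalDist()
    return nd.inv_cdf(1 - alpha / 2) + nd.inv_cdf(power)


def n_per_group_means(d, alpha=0.05, power=0.80):
    """Approximate n per group to detect a standardized mean difference d."""
    return ceil(2 * (_z_sum(alpha, power) / d) ** 2)


def n_total_chi_square_df1(w, alpha=0.05, power=0.80):
    """Approximate total N to detect effect size w with a 1-df chi-square test."""
    return ceil((_z_sum(alpha, power) / w) ** 2)


# Loss of power when a normally distributed outcome is split at the median,
# and the sample size multiplier needed to compensate for it.
power_loss_dichotomy = 1 - 2 / pi
sample_size_inflation = pi / 2

n_large_means = n_per_group_means(0.80)
n_medium_means = n_per_group_means(0.50)
n_large_props = n_total_chi_square_df1(0.50)
n_medium_props = n_total_chi_square_df1(0.30)
```

The ratio 2/π is the relative efficiency of a median split, which is where both the 36.3 % loss and the factor 1.571 originate.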

Finally, a relevant consideration for the choice between a continuous vs. a categorical outcome is the issue whether psychopathology should be regarded as a discrete (sick vs. healthy) or a continuous phenomenon. Markon, Chmielewski, and Miller [25] provide a comprehensive discussion of this topic and demonstrate superior reliability and validity of continuous over discrete measures in a meta-analysis of studies assessing psychopathology. They report a 15 % increase in reliability and a 37 % increase in validity when continuous measures of psychopathology are used instead of discrete measures. They argue that these increases in reliability and validity may be based on the more fitting conceptualization of (developmental) psychopathology as a dimensional rather than as a categorical phenomenon [16, 34]. If psychopathology is better viewed as dimensional, then treatment outcome can also be considered best on a continuous scale. When a continuous outcome variable is discretized, information on variation within outcome categories is lost and patients with highly similar outcomes may fall on either side of the cutoff, ending up in different categories. However, in support of categorization, Markon et al. [25] also mention the discrepancy between optimizing reliability and validity vs. optimizing clinical usefulness, which might be dependent on the goal of measurement (idiosyncratic or nomothetic). When the goal is to denote clinical outcome in individual cases to, for instance, decide on the further course of treatment, optimized idiosyncratic assessment is key. Finally, patients/parents may be better informed by discrete information (e.g., % of recovered patients), which may be taken as representing an approximation of their chance of recovery.

The above brings forth the following research questions: How do the various continuous and categorical approaches to denote treatment outcome compare in practice, when applied to real-life data? What is their concordance? Which method reveals the largest differences in outcome between providers? How do various indicators compare in ranking treatment providers? Which approach is most useful or informative for benchmarking purposes, i.e., which method is best to establish differential effectiveness of Dutch mental healthcare providers treating children and adolescents?

Method

Participants

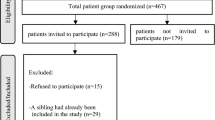

To ensure sufficient statistical power to detect differences between treatment providers, we selected from the SBG-database only providers that had collected outcome data from at least 200 patients in the period from January 2012 to December 2013. We randomly selected four providers using the Child Behavior Checklist (CBCL) [1] and four using the Strengths and Difficulties Questionnaire (SDQ) [11]. From the first group, one center declined participation. All patients were treated for an Axis I or II diagnosis according to the Diagnostic and Statistical Manual of Mental Disorders, DSM–IV [2]. All data were anonymized before being submitted to SBG. According to Dutch law, no informed consent is required for data collected during therapy to support the clinical process. Nevertheless, patients were informed about ROM procedures and anonymous use of their data for research purposes, as is part of the general policy at Dutch mental healthcare providers. Response rates for ROM differed among providers, as implementation of ROM in child and adolescent mental healthcare is an ongoing process, with response rates growing annually by about 10 %. For the year 2013, the response rates lay between 20 and 30 %.

The duration of psychiatric treatment for children and adolescents may vary from months to several years. To standardize the pre-to-posttest interval, SBG utilizes the Dutch reimbursement system. For administrative purposes, long treatments are segmented into units of one year called Diagnosis Treatment Combinations (DTCs). To get a more homogeneous dataset, in the present study, only outcomes of initial DTCs were analyzed (outcome of the first year of treatment as opposed to prolonged treatment or follow-up). Thus, the maximum pre-to-posttest interval was 1 year.

Table 1 presents data on gender, age, and pre-to-posttest interval of the included patients of the seven providers. In the CBCL dataset, it appeared that provider three treated more boys [χ²(2) = 6.25, p = 0.04], the providers differed in age of their clients [F(2) = 347.1, p < 0.001, η² = 0.30, 2 > 1 > 3] and in pre-to-posttest interval [F(2) = 238.2, p < 0.001, η² = 0.23, 3 > 2 > 1]. In the SDQ dataset provider C and D treated more boys [χ²(3) = 45.2, p < 0.001], patients in providers A and C were younger than in providers B and D [F(3) = 16.5, p < 0.001, η² = 0.04] and the providers differed in duration of the pre-to-posttest interval [F(3) = 154.0, p < 0.001, η² = 0.28, C > D > A > B].

Measurement instruments

For ROM in The Netherlands, the two most commonly used self-report questionnaires are the CBCL [1] and the SDQ [11]. The CBCL assesses the competence level of the child by assessing behavioral and emotional problems and abilities [1, 33]. The CBCL questionnaire contains 113 items, which are scored on a three-point scale (0 = absent, 1 = occurs sometimes, 2 = occurs often). We used the total score of parents’ reports.

The SDQ is a 25-item questionnaire with good psychometric properties [11, 27, 32]. The SDQ measures five constructs: emotional symptoms, conduct problems, hyperactivity-inattention symptoms, peer problems, and pro-social behavior, rated on a three-point scale from 0 = not true to 2 = certainly true. For the present study, we used the sum score of the 20 items regarding difficulties (leaving pro-social behavior items out) from the parent version, as this selection of items is comparable to what is measured with the total score of the CBCL. Both questionnaires correlate highly with one another [12].

Outcome indicators

ES and ΔT

Cohen’s d effect size (ES) is calculated on raw scores and defined as the pre-to-post difference divided by the standard deviation of pretest scores. To make outcomes on various measurement instruments comparable and make raw scores with a skewed frequency distribution suitable for subtraction, scores are standardized into normalized T-scores (mean = 50, SD = 10; [19]). Delta-T (ΔT) is the pre-to-post difference of normalized T-scores. As T-scores are standardized with SD = 10, the size of ΔT is approximately 10 times Cohen’s d. For the CBCL and the SDQ, we based the T-score conversion on normative data of clinical samples. (In case of the CBCL our approach deviates from the usual T-score conversion in the user manual, which is based on ratings of a normative sample of “healthy” children.)
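The relation between the two continuous indicators can be made concrete with a minimal sketch. The function names and the five example scores are hypothetical, and for brevity both indicators are computed on the same T-scores, whereas ES is defined above on raw scores.

```python
from statistics import mean, stdev


def cohens_d_pre_post(pre, post):
    """Pre-to-post difference divided by the SD of the pretest scores."""
    return (mean(pre) - mean(post)) / stdev(pre)


def delta_t(pre_t, post_t):
    """Mean pre-to-post difference in normalized T-scores."""
    return mean(pre_t) - mean(post_t)


# Hypothetical T-scores of five patients who each improve by 4 points.
pre = [35.0, 45.0, 50.0, 55.0, 65.0]
post = [score - 4.0 for score in pre]

es = cohens_d_pre_post(pre, post)
dt = delta_t(pre, post)
```

By construction ΔT equals d multiplied by the pretest SD, so ΔT is about 10 times d whenever the pretest SD of the T-scores is close to 10.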

PImod

The percentage improvement (PImod) method as applied by Hiller et al. [15] defines a responder as a patient who reports at least a 50 % reduction of symptoms from pretest toward the cutoff halfway between the mean scores of the functional and the dysfunctional population, as well as a 25 % reduction on the entire range. For instance, with a baseline score of T = 55 on the SDQ (T cutoff = 42.5; T range is 20–90), a posttest score ≤46 is required to meet the criterion for responder status (a nine-point change implies 72 % reduction toward the cutoff value and 26 % reduction on the entire scale).
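The worked example can be reproduced with a small sketch. Two points are assumptions on our part: the reduction "on the entire range" is read as the change relative to the distance between the pretest score and the scale minimum (which reproduces the 26 % in the example), and patients starting at or below the cutoff are treated as not classifiable. The function name is hypothetical.

```python
def pi_mod(pre, post, cutoff=42.5, scale_min=20.0):
    """Return (% reduction toward cutoff, % reduction on range, responder)."""
    if pre <= cutoff:
        # Assumption: PI is undefined when there is no distance to the cutoff.
        raise ValueError("pretest score must lie above the cutoff")
    change = pre - post
    pct_toward_cutoff = 100 * change / (pre - cutoff)
    pct_of_range = 100 * change / (pre - scale_min)
    responder = pct_toward_cutoff >= 50 and pct_of_range >= 25
    return pct_toward_cutoff, pct_of_range, responder


toward, on_range, is_responder = pi_mod(55, 46)
just_short = pi_mod(55, 47)
overshoot = pi_mod(55, 40)
```

With a posttest score of 47, the 50 % criterion is met but the 25 % criterion is not, so the second criterion is the binding one in this example. A posttest score of 40 yields an improvement of 120 %, which illustrates how values above 100 % arise once the cutoff is used as a proxy for zero.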

JTRCI, JTCS, and JTRCI&CS

The Jacobson–Truax (JT) method yields three performance indicators. Reliable change requires a pre–post change beyond a cutoff value based on the reliability and pretest variance of the instrument. The indicator for comparing the proportions of reliably changed patients is called the Reliable Change Index (JTRCI). Clinical Significance (JTCS) requires a pre-to-posttest score transition by which a cutoff value is crossed, denoting the passage from the dysfunctional to the functional population. The outcome indicator JTCS is based on the proportion of patients meeting the required passage. Combining both criteria yields a categorization (JTRCI&CS) with theoretically five levels (recovered, improved, unchanged, deteriorated, and relapsed). In the present study, four levels were used, as patients rarely meet the criteria for the category “relapsed” (<2.9 % in our datasets started “healthy” and concluded their first year of treatment with a score in the pathological range). Therefore, we collapsed deteriorated and relapsed patients into a single category.

Categorization is based on T-values. For JTRCI, we chose a value of ΔΤ = 5.0, which corresponds to half a standard deviation and is commonly considered the minimal clinically important difference (Jaeschke, 1989; Norman, 2003; Sloan, 2005). Use of the formulas provided by Jacobson and Truax [18], with an instrument reliability of Cronbach’s α = 0.95, would result in RCI90 = 5.22. For the JTCS, we used a value of T = 42.5, which is based on the third option for calculation of the cutoff value described by Jacobson and Truax [18], i.e., halfway between the means of the functional and the dysfunctional population. (The average of the dysfunctional population is by definition 50; the average for the functional population (T = 35.0) was derived from a translation of raw mean scores from the manuals of the CBCL and the SDQ to T-scores. This resulted in T-scores of 37 and 33 for the CBCL and the SDQ, respectively. Using these reference values, the mean of the functional population was estimated as T = 35.0).
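The thresholds and the resulting four-level categorization can be sketched as follows. Several details are assumptions on our part rather than specifications from the text: a z-value of 1.65 is used for the 90 % level (this reproduces RCI90 = 5.22; the more precise 1.645 gives 5.20), thresholds are treated as inclusive, and patients who cross the cutoff without reliable change are counted as unchanged. The function names are hypothetical.

```python
from math import sqrt


def reliable_change_threshold(sd=10.0, reliability=0.95, z=1.65):
    """Minimum change in score points that counts as reliable."""
    se_measurement = sd * sqrt(1 - reliability)
    s_diff = sqrt(2) * se_measurement
    return z * s_diff


def classify_jt(pre, post, rci=5.0, cutoff=42.5):
    """Assign one of four outcome categories based on T-scores.

    Lower scores mean fewer problems, so improvement is pre minus post.
    Relapsed patients are merged with the deteriorated category.
    """
    change = pre - post
    if change >= rci:
        crossed = pre > cutoff and post <= cutoff
        return "recovered" if crossed else "improved"
    if change <= -rci:
        return "deteriorated"
    return "unchanged"


rci90 = reliable_change_threshold()
cutoff = (50.0 + 35.0) / 2  # halfway between dysfunctional and functional means
examples = {
    (55, 40): classify_jt(55, 40),
    (65, 58): classify_jt(65, 58),
    (50, 48): classify_jt(50, 48),
    (50, 57): classify_jt(50, 57),
}
```

The chosen value of ΔT = 5.0 lies just below the computed RCI90, so the half-SD rule is slightly more lenient than the formula-based threshold.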

Table 2 gives an overview of the indicators, summarizes how each indicator is calculated and briefly describes the advantages and disadvantages of each indicator.

Statistical analysis

All analyses were performed for the CBCL dataset and the SDQ dataset separately. Raw scores were standardized into normalized T-scores. Concordance among the different outcome indicators was assessed with correlational analyses (Pearson’s PMCC, Kendall’s Tau, and Spearman’s Rho correlation coefficients). Also, the prognostic value of pretest severity for outcome (change score or category) was assessed with correlational analysis. Pre- and posttest T-scores of providers were compared with repeated measures ANOVA with time as the within-subjects factor and provider as the between-subjects factor. To investigate which providers differed significantly among each other, we conducted pairwise comparisons with a Bonferroni correction for multiple comparisons. Differences in proportions of patients according to the various categorical approaches were assessed with Chi square tests. Providers were placed in rank order from best to worst results and the ranking according to the various outcome indicators was compared.
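For the categorical indicators, the comparison of proportions between providers rests on the Pearson chi-square statistic. A minimal sketch of that statistic for a provider by outcome table is given below; the counts are hypothetical and are not taken from the study data, and the p value would still have to be obtained from a chi-square distribution with the returned degrees of freedom.

```python
def chi_square_statistic(table):
    """Pearson chi-square statistic and degrees of freedom for a 2-D table.

    Rows are providers, columns are outcome categories.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / total
            statistic += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, df


# Hypothetical counts: two providers, responders vs. non-responders.
counts = [
    [30, 10],
    [20, 20],
]
statistic, df = chi_square_statistic(counts)
```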

Results

Comparison of outcome indicators

For both the CBCL data and the SDQ data, the association between ES and ∆T amounted to r = 0.99, p < 0.001. Although transforming raw scores into normalized T-scores is important from a theoretical perspective, in practice it has little effect on the relative position of outcome scores within each dataset.

The pattern of associations among indicators based on T-scores is presented in Table 3. Findings are highly similar for both datasets. Pre-test severity is substantially associated with ∆T (r = 0.30 and r = 0.41 for the CBCL and SDQ, respectively) and JTRCI (r = 0.19 and r = 0.23). Compared to ∆T, PImod is relatively insensitive to pretest level. When looking at associations among performance indicators, the strongest concordance is found between PImod and the combined JTRCI&CS index (Spearman’s Rho = 0.76 and 0.77 for the CBCL and SDQ, respectively), followed by the concordance of ∆T and JTRCI&CS (Kendall’s Tau = 0.75 and 0.77). JTCS appears less concordant with ΔT and PImod.

The CBCL outcomes

Table 4 shows CBCL-based results of 1,641 patients, differentiating providers according to the various outcome indicators along with a ranking of providers.

A 2 (time) × 3 (provider) repeated measures ANOVA revealed a statistically significant effect of time, F(1, 1638) = 469.0, p < 0.001, η² = 0.28, indicating a pre–post difference, irrespective of provider. Overall the pre-to-posttest difference amounts to d = 0.44, an effect of medium size. There is also a main effect of provider, F(2, 1638) = 4.3, p < 0.01, η² = 0.005, indicating differences between providers, irrespective of time. Finally, there was a significant interaction effect (time × provider) indicating a difference in effectiveness between providers, F(3, 1638) = 16.2, p < 0.001, η² = 0.02. Pairwise comparisons (Bonferroni corrected) indicated that providers 2 and 3 had different outcomes. Also with the indicators PImod, ∆T, JTRCI and JTCS significant differences were found in outcome between providers. Overall, the ranking is consistent: 1–2–3. JTRCI&CS yields a similar pattern of outcome ranking in all categories. In plain English, these results indicate that a year of treatment from provider 1 was beneficial to 55.3 % of the patients, vs. 40.9 % of the patients of provider 3. Moreover, the percentage of deteriorated patients of provider 3 was twice the percentage of provider 1 (11.7 vs. 5.6 %). Figure 1 shows the proportion of patients, according to the JTRCI&CS 4-level categorization in a stacked-bar graph, based on normalized T-scores. Evidently, provider 3 has fewer recovered and more unchanged patients as compared to the other two providers.

The SDQ outcomes

Table 5 displays the results from the dataset containing pre-to-posttest scores of 1,207 patients. Overall, the results mimic the findings in the CBCL dataset, with the various methods yielding similar results and all indicators showing significant differences among the providers.

Analysis of variance for a 2 (time) × 4 (provider) design indicated a significant effect of time, F(1, 1203) = 356.5, p < 0.001, η² = 0.23, a significant effect of provider, F(1, 1203) = 21.2, p < 0.001, η² = 0.05, and a significant interaction effect, F(3, 1203) = 19.1, p < 0.001, η² = 0.05, meaning that the change over time was different among the providers. Pairwise comparisons revealed that A and B outperformed C and D. The rank order is the same with the various approaches, but varies somewhat among the four categories of the JTRCI&CS approach (with providers C and D trading places). For the total sample, almost half the patients improve or recover after 1 year of service delivery; about 10 % deteriorate (similar numbers are found in the CBCL sample). With provider A 59.4 % of the patients benefit and 7.0 % deteriorate; with provider D only 35.0 % benefit and 16.7 % deteriorate. Figure 2 presents the proportions of patients in the four outcome categories graphically for each provider.

Discussion

The results revealed consistent differences in outcome between providers and, by and large, the various methods converged. Each index showed a similar assignment of patients to outcome categories, as evidenced by the concordance among indicators, especially between ΔT, PImod, and JTRCI&CS. Also, a similar ranking of providers is found by the various methods.

Hiller et al. [15] proposed the PImod method as an alternative to the RCI, as it takes differences in pretreatment severity into account. The present findings support this contention partially, as PImod was less strongly associated with pretest level than ΔT was. In addition, Hiller et al. claimed that the RCI index leads to a more conservative categorization of patients as compared to the PI method. Comparing the performance of RCI and PImod in this study does not support their claim, as the percentages of patients reaching RCI (about 48 %) exceeded those for PImod (about 32 %). Methodologically, percentage change based upon an interval or ordinal measurement scale is flawed and the solution chosen by Hiller et al. of using the cutoff between the functional and the dysfunctional population as a proxy for a 0-point invokes new problems, as it makes improvement in excess of 100 % likely for many patients (14 and 23 % for the CBCL and the SDQ, respectively). Given these drawbacks, we recommend against the use of PImod.

An advantage of the categorical approach is that it provides a more easily interpretable presentation of the comparison of provider effectiveness than differences in pre-to-posttest change do. Moreover, the categorical approach denotes differences in clinically meaningful terms (e.g., provider 1 outperforms provider 3 in the proportion of patients that benefitted from treatment, i.e., improvement or recovery: 55.3 vs. 40.9 %). As the categorical outcomes (PImod and JT) and the continuous outcomes (ΔT or Cohen’s d) largely converged, one could conclude that the categorical approach is a good alternative for continuous outcomes.

As was pointed out earlier in the introduction, transforming continuous data into categories diminishes their informative value, may not be fitting for an inherently continuous phenomenon [25], and has negative consequences for the statistical power to detect differences. Fedorov, Mannino, and Zhang [9] demonstrated that with a “trichotomous” categorization the loss of power would still be substantial (19 %). Statistical power increases gradually with the use of more categories. Conversely, the ease of interpretation diminishes with the increase of outcome categories. The JTRCI&CS approach applied to the SDQ data nicely illustrates this point: it can be problematic to compare four providers on four outcome categories and ranking is not straightforward. With JTRCI&CS, ranking providers depends on the focus: Do we consider primarily treatment successes, i.e., the proportion of recovered or improved patients? Or do we focus on failures, i.e., unchanged or deteriorated patients? Alternatively, it could be argued that the most extreme classifications are key to ranking providers. This cannot be decided beforehand and makes the interpretation of a multi-categorical outcome indicator more subjective than comparing them on average pre-to-posttest change. Hence, using more than two categories may successfully preserve statistical power, but it also diminishes the plainness of the results.

Strengths

The present study used real-life data, containing the treatment outcomes of young patients submitted to the database of SBG. Six different outcome indicators were investigated and compared to denote treatment outcome on two frequently used outcome measures, the CBCL and the SDQ. Both questionnaires seemed equally suitable to assess pre-to-post differences and compare outcome indicators. This observation corresponds to other research comparing the CBCL and SDQ, which ascertained that both questionnaires correlate highly with one another [12].

Limitations

The aim of the present study was to compare various indicators on their ability to differentiate between mental healthcare providers regarding their average treatment outcome. As such, the present study compares methods, not mental healthcare providers. Data per provider were far from complete (leaving ample room for selection bias), treatment duration was limited to 1 year, and no correction was applied for potential confounders. Furthermore, variations in outcome measurement (e.g., questionnaire use or parental respondent) or timing of assessments may have differential effects on outcome and were not controlled for in this study. Hence, the present report should not be regarded as a definitive report on the comparison of mental healthcare providers or on the effectiveness of Dutch mental healthcare for children and adolescents, but merely as an investigation of various methods of comparing treatment outcomes.

Secondly, the present study lacks a criterion or ‘gold standard’ that can be used to test the validity of each outcome indicator. Indicators are compared solely among each other and a similar rank order is more easily obtained with a limited set of 3 or 4 providers, as compared to countrywide benchmarking (currently 175 providers submit their outcome data to SBG). In future studies, we may assess and compare the predictive value of each indicator for an external criterion, such as functioning at long-term follow-up, readmission, or continued care.

In sum, we conclude that outcome is best operationalized as a continuous variable: preferably the pre-to-post difference on normalized T-scores (ΔT), as this outcome indicator is not unduly influenced by pretest variance in the sample. Continuous outcome is superior to categorical outcome as change scores have more statistical power and allow for a ranking of providers at first glance. This performance indicator can be supplemented with categorical outcome information, preferably the JTRCI&CS method, which presents outcome information in a manner which is more informative to clinicians and patients or their parents.
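How a continuous indicator (ΔT) and a four-category Jacobson and Truax style classification could be reported side by side is sketched below. This is a minimal illustration, not the procedure used in this study: the function names are hypothetical, and the reliability of 0.90, the T-score SD of 10, and the clinical cutoff of T = 60 are assumed values. Higher T-scores are taken to indicate more problems.

```python
import math
from collections import Counter


def reliable_change_threshold(sd, reliability, z=1.96):
    """Smallest pre-to-post difference counted as reliable change.

    Follows the usual Jacobson-Truax logic: z * sd * sqrt(2) * sqrt(1 - r).
    """
    return z * sd * math.sqrt(2) * math.sqrt(1 - reliability)


def jt_classify(pre, post, threshold, cutoff):
    """Classify one patient; higher scores are assumed to mean more problems."""
    change = pre - post  # positive values indicate improvement
    if change <= -threshold:
        return "deteriorated"
    if change >= threshold:
        # Recovery requires reliable improvement AND crossing the cutoff
        # from the clinical into the functional range.
        if pre >= cutoff and post < cutoff:
            return "recovered"
        return "improved"
    return "unchanged"


def summarise_provider(scores, threshold, cutoff):
    """Return mean delta-T and the proportion of patients per category."""
    mean_delta_t = sum(pre - post for pre, post in scores) / len(scores)
    counts = Counter(jt_classify(pre, post, threshold, cutoff) for pre, post in scores)
    proportions = {k: counts.get(k, 0) / len(scores)
                   for k in ("recovered", "improved", "unchanged", "deteriorated")}
    return mean_delta_t, proportions


if __name__ == "__main__":
    # Hypothetical (pretest, posttest) T-scores for four patients.
    patients = [(70, 55), (70, 61), (65, 62), (60, 72)]
    thr = reliable_change_threshold(sd=10, reliability=0.90)  # assumed values
    print(summarise_provider(patients, thr, cutoff=60))
```

The mean ΔT gives a single number per provider that can be ranked directly, whereas the four proportions convey what share of patients recovered, improved, remained unchanged, or deteriorated.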

References

1. Achenbach TM (1991) Manual for the child behavior checklist 4–18 and 1991 profiles. Department of Psychiatry, University of Vermont, Burlington

2. American Psychiatric Association (1994) Diagnostic and statistical manual of mental disorders IV. Author, Washington, DC

3. Bauer S, Lambert MJ, Nielsen SL (2004) Clinical significance methods: a comparison of statistical techniques. J Pers Assess 82:60–70

4. Berwick DM, Nolan TW, Whittington J (2008) The triple aim: care, health, and cost. Health Aff 27:759–769

5. Bickman L, Kelley SD, Breda C, de Andrade AR, Riemer M (2011) Effects of routine feedback to clinicians on mental health outcomes of youths: results of a randomized trial. Psychiatr Serv 62:1423–1429

6. Carlier IV, Meuldijk D, van Vliet IM, van Fenema E, van der Wee NJ, Zitman FG (2012) Routine outcome monitoring and feedback on physical or mental health status: evidence and theory. J Eval Clin Pract 18:104–110

7. Cohen J (1988) Statistical power analysis for the behavioral sciences. Lawrence Erlbaum Associates, Hillsdale

8. de Beurs E, den Hollander-Gijsman ME, van Rood YR, van der Wee NJ, Giltay EJ, van Noorden MS, van der Lem R, van Fenema E, Zitman FG (2011) Routine outcome monitoring in the Netherlands: practical experiences with a web-based strategy for the assessment of treatment outcome in clinical practice. Clin Psychol Psychother 18:1–12

9. Fedorov V, Mannino F, Zhang R (2009) Consequences of dichotomization. Pharm Stat 8:50–61

10. Fleiss JL (1973) Statistical methods for rates and proportions. Wiley, New York

11. Goodman R (2001) Psychometric properties of the strengths and difficulties questionnaire. J Am Acad Child Adolesc Psychiatr 40:1337–1345

12. Goodman R, Scott S (1999) Comparing the strengths and difficulties questionnaire and the child behavior checklist: is small beautiful? J Abnorm Child Psychol 27:17–24

13. Hall CL, Moldavsky M, Baldwin L, Marriot M, Newell K, Taylor J, Hollis C (2013) The use of routine outcome measures in two child and adolescent mental health services: a completed audit cycle. BMC Psychiatr 13:270

14. Hermann RC, Rollins CK, Chan JA (2007) Risk-adjusting outcomes of mental health and substance-related care: a review of the literature. Harv Rev Psychiatr 15:52–69

15. Hiller W, Schindler AC, Lambert MJ (2012) Defining response and remission in psychotherapy research: a comparison of the RCI and the method of percent improvement. Psychother Res 22:1–11

16. Hudziak JJ, Achenbach TM, Althoff RR, Pine DS (2007) A dimensional approach to developmental psychopathology. Int J Methods Psychiatr Res 16:S16–S23

17. Jacobson NS, Follette WC, Revenstorf D (1984) Psychotherapy outcome research: methods for reporting variability and evaluating clinical significance. Behav Ther 15:336–352

18. Jacobson NS, Truax P (1991) Clinical significance: a statistical approach to defining meaningful change in psychotherapy research. J Consult Clin Psychol 59:12–19

19. Jaeschke R, Singer J, Guyatt GH (1989) Measurement of health status: ascertaining the minimal clinically important difference. Control Clin Trials 10(4):407–415

20. Kelley SD, Bickman L (2009) Beyond routine outcome monitoring: measurement feedback systems (MFS) in child and adolescent clinical practice. Cur Opin Psychiatr 22:363–368

21. Klugh HE (2006) Normalized T Scores. In: Kotz S, Read CB, Balakrishnan N, Vidakovic B (eds) Encyclopedia of statistical sciences, 2nd edn. Wiley, New York

22. Knaup C, Koesters M, Schoefer D, Becker T, Puschner B (2009) Effect of feedback of treatment outcome in specialist mental healthcare: meta-analysis. Br J Psychiatry 195:15–22

23. Kraemer HC, Morgan GA, Leech NL, Gliner JA, Vaske JJ, Harmon RJ (2003) Measures of clinical significance. J Am Ac Child Adolesc Psychiatr 42:1524–1529

24. Lambert M (2007) Presidential address: what we have learned from a decade of research aimed at improving psychotherapy outcome in routine care. Psych Res 17:1–14

25. Markon KE, Chmielewski M, Miller CJ (2011) The reliability and validity of discrete and continuous measures of psychopathology: a quantitative review. Psych Bul 137:856–879

26. McGlinchey JB, Atkins DC, Jacobson NS (2002) Clinical significance methods: which one to use and how useful are they? Behav Ther 33:529–550

27. Muris P, Meesters C, van den Berg F (2003) The strengths and difficulties questionnaire (SDQ). Eur Child Adolesc Psychiatr 12:1–8

28. Norman GR, Sloan JA, Wyrwich KW (2003) Interpretation of changes in health-related quality of life: the remarkable universality of half a standard deviation. Med Care 41:582–592

29. Porter ME, Teisberg EO (2006) Redefining healthcare: creating value-based competition on results. Harvard Business Press, Cambridge

30. Russel M (2000) Summarizing change in test scores: shortcomings of three common methods. Practical Assessment, Research & Evaluation 7

31. Sloan JA, Cella D, Hays RD (2005) Clinical significance of patient-reported questionnaire data: another step toward consensus. J Clin Epidem 58:1217–1219

32. van Widenfelt B, Goedhart A, Treffers P, Goodman R (2003) Dutch version of the strengths and difficulties questionnaire (SDQ). Eur Child Adolesc Psychiatr 12:281–289

33. Verhulst FC, Van der Ende J, Koot HM (1996) Handleiding voor de CBCL/4-18 [Dutch manual for the CBCL/4-18]. Department of Child and Adolescent Psychiatry, Erasmus University, Sophia Children’s Hospital, Rotterdam

34. Walton K, Ormel J, Krueger R (2011) The dimensional nature of externalizing behaviors in adolescence: evidence from a direct comparison of categorical, dimensional, and hybrid models. J Abnorm Child Psychol 39:553–561

35. Wilder J (1965) Pitfalls in the methodology of the law of initial value. Am J Psychother 19:577–584

36. Wise EA (2004) Methods for analyzing psychotherapy outcomes: a review of clinical significance, reliable change, and recommendations for future directions. J Pers Assess 82:50–59

Acknowledgments

The authors are grateful to the following mental health providers for allowing us to use their outcome data: De Viersprong, Mentaal Beter, Mutsaersstichting, OCNR, Praktijk Buitenpost, Yorneo, and Yulius.

Conflict of interest

No conflicts declared.

Cite this article

de Beurs, E., Barendregt, M., Rogmans, B. et al. Denoting treatment outcome in child and adolescent psychiatry: a comparison of continuous and categorical outcomes. Eur Child Adolesc Psychiatry 24, 553–563 (2015). https://doi.org/10.1007/s00787-014-0609-9

DOI: https://doi.org/10.1007/s00787-014-0609-9